Towards Stable Prediction across Unknown Environments

Peng Cui, Tsinghua University

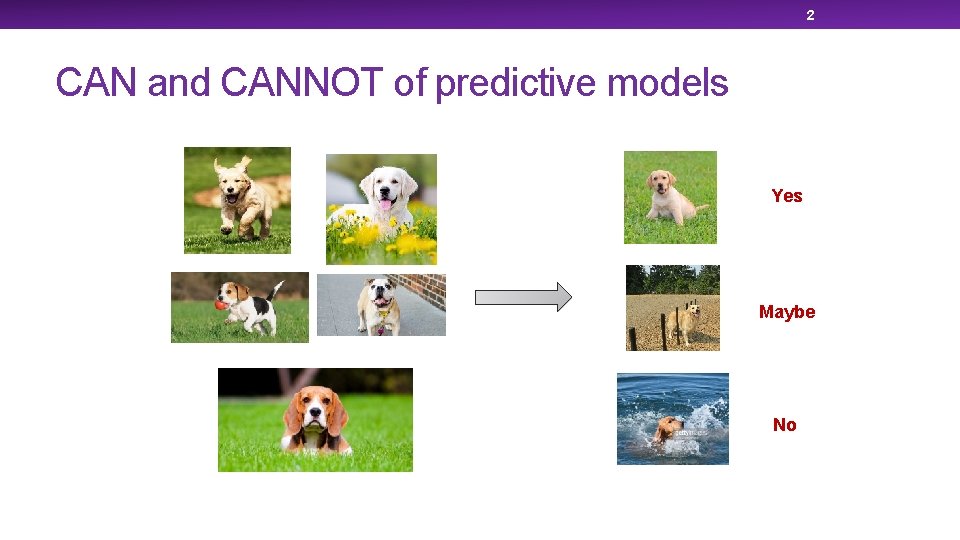

2 CAN and CANNOT of predictive models
Figure labels: Yes, Maybe, No

3 CAN and CANNOT of predictive models
• Cancer survival rate prediction
Features:
• Body status
• Income
• Treatments
• Medications
Training data: City Hospital
Testing data:
• City Hospital: higher income, higher survival rate.
• University Hospital: survival rate is not so correlated with income.

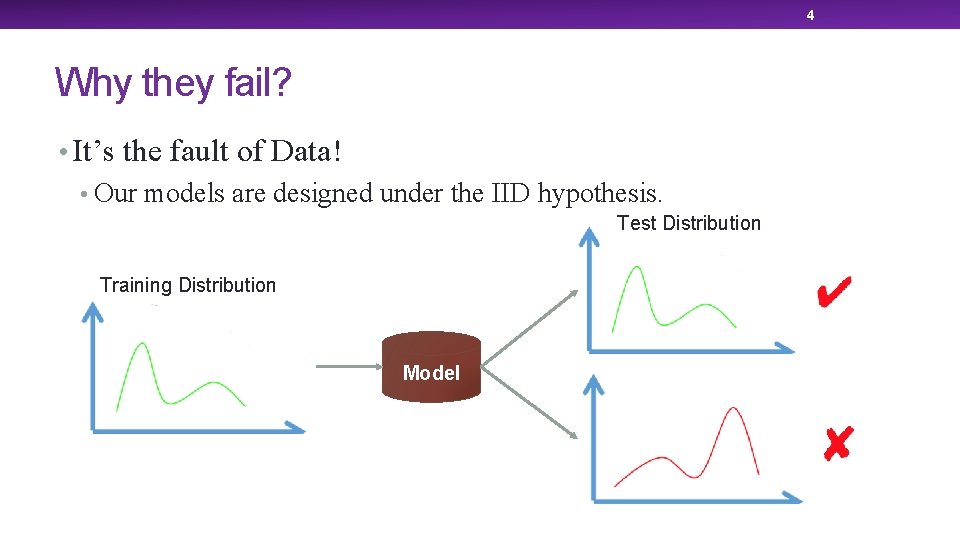

4 Why do they fail?
• It’s the fault of the data!
• Our models are designed under the IID hypothesis.
Figure labels: Training Distribution, Model, Test Distribution

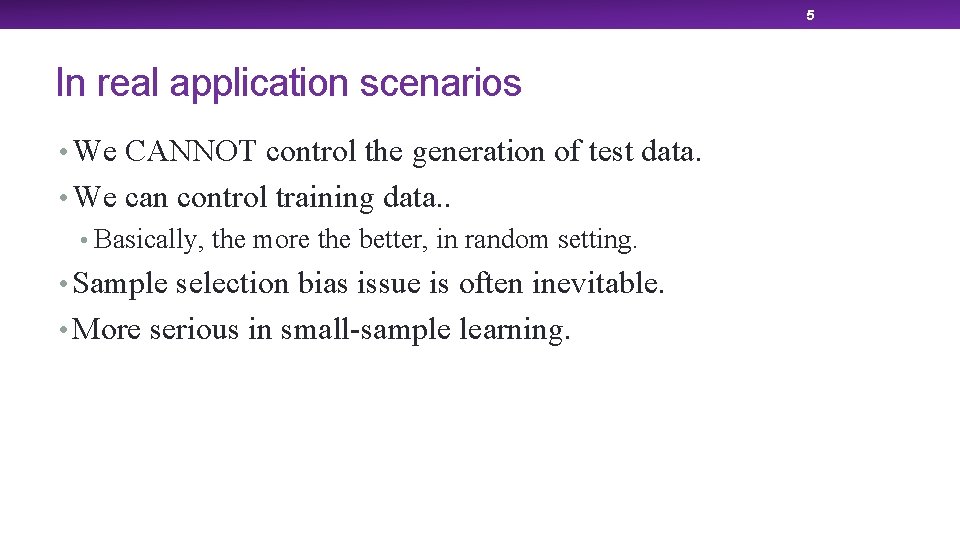

5 In real application scenarios
• We CANNOT control the generation of test data.
• We can control training data.
• Basically, the more data the better, under random sampling.
• Sample selection bias is often inevitable.
• It is more serious in small-sample learning.

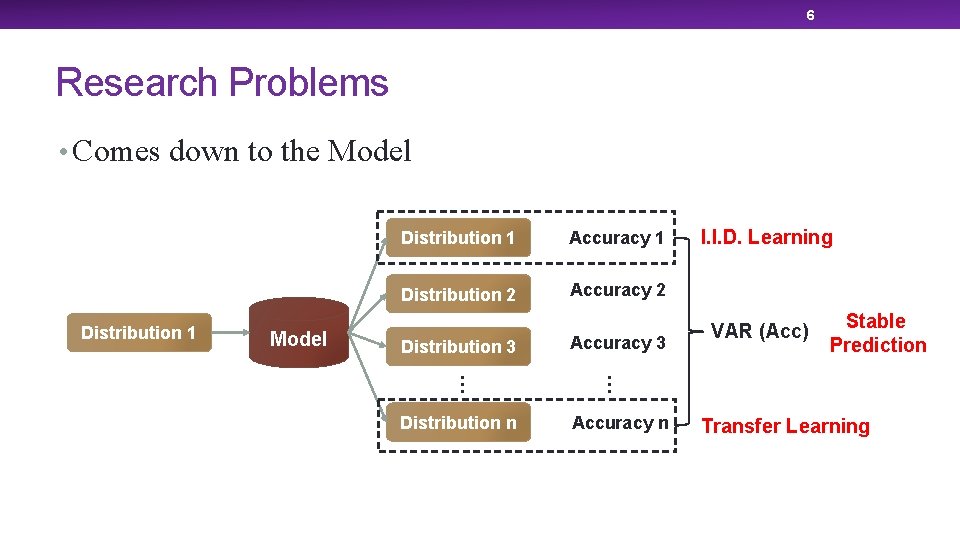

6 Research Problems
• Comes down to the
Figure labels: Model; Distribution 1, Distribution 2, Distribution 3, …, Distribution n; Accuracy 1, Accuracy 2, Accuracy 3, …, Accuracy n; VAR(Acc); I.I.D. Learning; Transfer Learning; Stable Prediction
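The VAR(Acc) label suggests reading stable prediction as keeping the variance of accuracy small across test distributions. The sketch below is one way to compute that summary. The accuracy values and the helper name `stability_summary` are hypothetical, chosen only for illustration.

```python
from statistics import mean, pvariance


def stability_summary(accuracies):
    """Return (average accuracy, variance of accuracy) across test distributions."""
    return mean(accuracies), pvariance(accuracies)


# Hypothetical accuracies of two models on the same four test distributions.
model_a = [0.90, 0.60, 0.85, 0.55]  # accurate on some distributions, poor on others
model_b = [0.74, 0.72, 0.73, 0.71]  # similar accuracy everywhere

avg_a, var_a = stability_summary(model_a)
avg_b, var_b = stability_summary(model_b)

print(f"model A: mean={avg_a:.3f}, var={var_a:.5f}")
print(f"model B: mean={avg_b:.3f}, var={var_b:.5f}")
```

Both hypothetical models have the same average accuracy, so only the variance term separates them. That is the quantity the slide associates with stable prediction.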

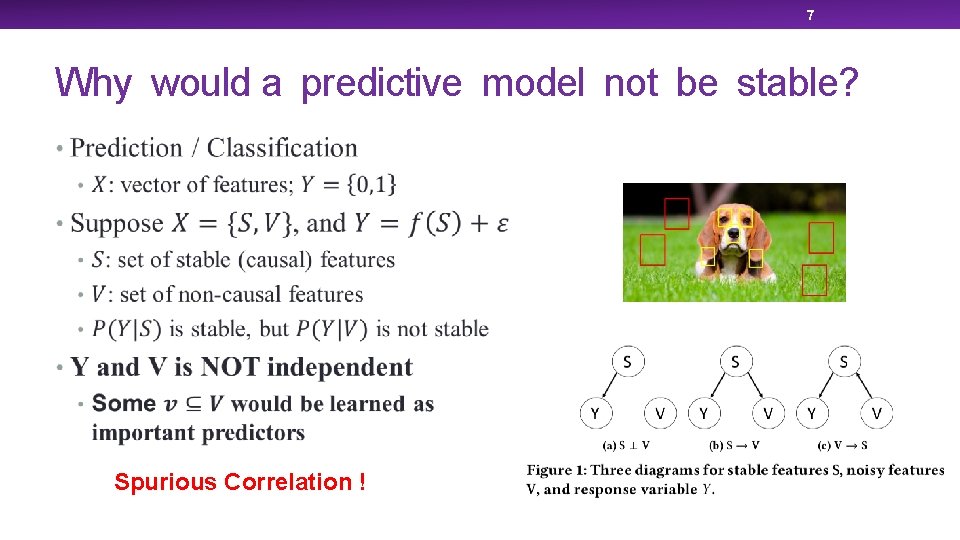

7 Why would a predictive model not be stable?
Spurious correlation!

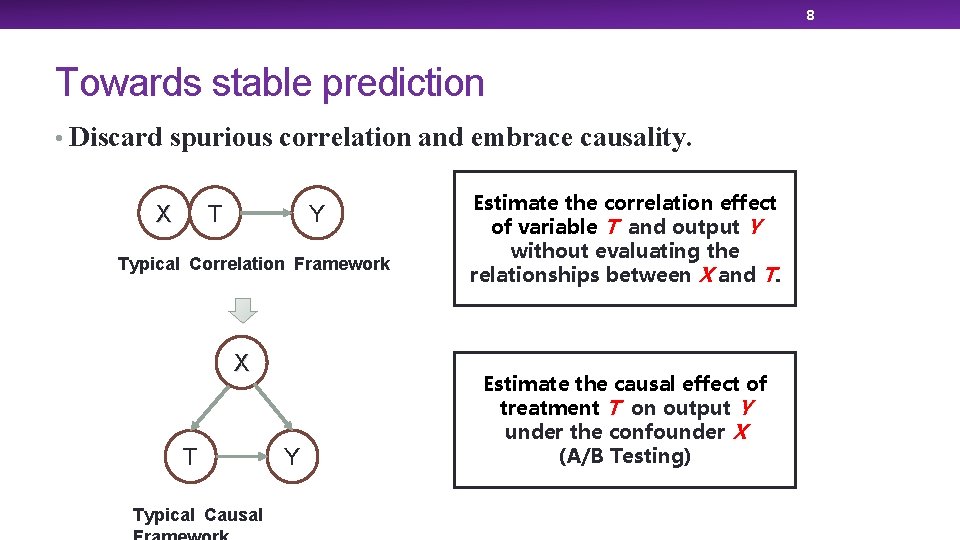

8 Towards stable prediction
• Discard spurious correlation and embrace causality.
Typical correlation framework (X, T, Y): estimate the correlation effect of variable T and output Y without evaluating the relationships between X and T.
Typical causal framework (X, T, Y): estimate the causal effect of treatment T on output Y under the confounder X (A/B testing).
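A small constructed example may help separate the two frameworks. In the hypothetical data below, the outcome Y depends only on the confounder X, and T is merely more common when X = 1. Ignoring X yields a large apparent effect of T, while comparing within each value of X yields none. The records and function names are assumptions made for this sketch.

```python
# Each record is (x, t, y): confounder, treatment indicator, outcome.
# By construction y equals x, so t has no causal effect on y.
records = ([(1, 1, 1)] * 80 + [(1, 0, 1)] * 20 +
           [(0, 1, 0)] * 20 + [(0, 0, 0)] * 80)


def mean_y(rows):
    """Average outcome over a list of records."""
    return sum(y for _, _, y in rows) / len(rows)


def naive_difference(rows):
    """Correlation-style estimate: compare treated and control, ignoring x."""
    treated = [r for r in rows if r[1] == 1]
    control = [r for r in rows if r[1] == 0]
    return mean_y(treated) - mean_y(control)


def stratified_difference(rows):
    """Causal-style estimate: compare within each value of x, then average
    the per-stratum differences weighted by stratum size."""
    total = 0.0
    for x in sorted({r[0] for r in rows}):
        stratum = [r for r in rows if r[0] == x]
        total += naive_difference(stratum) * len(stratum) / len(rows)
    return total


naive = naive_difference(records)
adjusted = stratified_difference(records)
print(f"ignoring X: {naive:.2f}, adjusting for X: {adjusted:.2f}")
```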

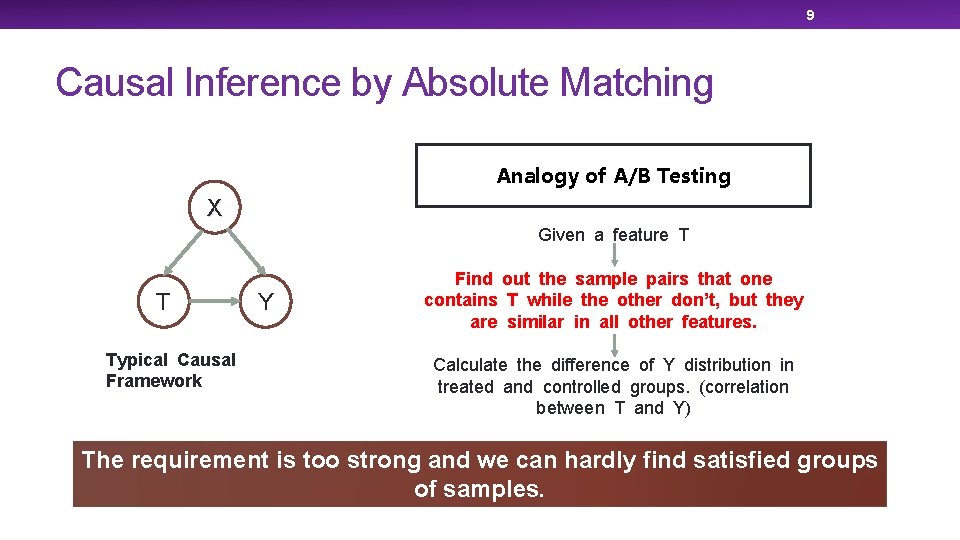

9 Causal Inference by Absolute Matching
Analogy of A/B testing (typical causal framework with X, T, Y)
• Given a feature T
• Find the sample pairs in which one sample contains T and the other does not, but which are similar in all other features.
• Calculate the difference of the Y distribution between the treated and control groups (the correlation between T and Y).
• The requirement is too strong: we can hardly find groups of samples that satisfy it.
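The matching procedure can be sketched as follows, assuming discrete features so that "similar in all other features" can be taken as exact equality. The sample data and the function name `exact_matching_effect` are hypothetical. Averaging the per-group differences without weights is a simplification.

```python
from collections import defaultdict


def exact_matching_effect(samples):
    """samples: list of (x, t, y), where x is a tuple of all other features.

    Returns (estimated effect, number of samples that found a match).
    Only groups containing both treated and control samples contribute.
    """
    groups = defaultdict(lambda: {0: [], 1: []})
    for x, t, y in samples:
        groups[x][t].append(y)

    diffs, matched = [], 0
    for arms in groups.values():
        if arms[0] and arms[1]:
            treated_mean = sum(arms[1]) / len(arms[1])
            control_mean = sum(arms[0]) / len(arms[0])
            diffs.append(treated_mean - control_mean)
            matched += len(arms[0]) + len(arms[1])

    effect = sum(diffs) / len(diffs) if diffs else None
    return effect, matched


# Hypothetical samples: two feature profiles have both arms, two do not.
samples = [
    ((0, 0), 1, 3.0), ((0, 0), 0, 1.0),
    ((1, 0), 1, 4.0), ((1, 0), 0, 2.0),
    ((1, 1), 1, 5.0),                    # no control sample with x == (1, 1)
    ((0, 1), 0, 0.0),                    # no treated sample with x == (0, 1)
]

effect, matched = exact_matching_effect(samples)
print(f"effect={effect}, matched {matched} of {len(samples)} samples")
```

Even in this tiny example a third of the samples go unused, which illustrates why the slide calls the requirement too strong. With more features, exact agreement becomes rarer still.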

10 Causal Inference by Confounder Balancing
Analogy of A/B testing (typical causal framework with X, T, Y)
• Given a feature T
• Assign different weights to samples so that the samples with T and the samples without T have similar distributions in X.
• Calculate the difference of the Y distribution between the treated and control groups (the correlation between T and Y).
• Too many parameters: for N samples and K features, we need to learn K*N weights. Not learning-friendly.
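To make the idea of balancing weights concrete, here is a minimal sketch for a single discrete confounder. It does not learn the weights by optimization as the slide implies. Instead it uses a closed-form stratum reweighting as a stand-in, which is an assumption of this example. The data are hypothetical and constructed so that y = 2*x + t, giving a true effect of T equal to 1.0.

```python
from collections import Counter


def balancing_weights(samples):
    """Weight each control sample so that the control group's distribution
    of x matches the treated group's. samples: list of (x, t, y).
    Treated samples keep weight 1.0."""
    treated_x = Counter(x for x, t, _ in samples if t == 1)
    control_x = Counter(x for x, t, _ in samples if t == 0)
    n_t, n_c = sum(treated_x.values()), sum(control_x.values())
    weights = []
    for x, t, _ in samples:
        if t == 1:
            weights.append(1.0)
        else:
            weights.append((treated_x[x] / n_t) / (control_x[x] / n_c))
    return weights


def weighted_effect(samples, weights):
    """Difference of weighted mean outcomes between treated and control."""
    def wmean(arm):
        num = sum(w * y for (_, t, y), w in zip(samples, weights) if t == arm)
        den = sum(w for (_, t, _), w in zip(samples, weights) if t == arm)
        return num / den
    return wmean(1) - wmean(0)


# Hypothetical data with y = 2*x + t; t is more common when x == 1.
samples = ([(1, 1, 3.0)] * 80 + [(1, 0, 2.0)] * 20 +
           [(0, 1, 1.0)] * 20 + [(0, 0, 0.0)] * 80)

naive = weighted_effect(samples, [1.0] * len(samples))
balanced = weighted_effect(samples, balancing_weights(samples))
print(f"unweighted: {naive:.2f}, balanced: {balanced:.2f}")
```

These weights are specific to the chosen treatment feature T. Repeating the procedure for each of K features gives K weight vectors of length N, which matches the K*N parameter count on the slide.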

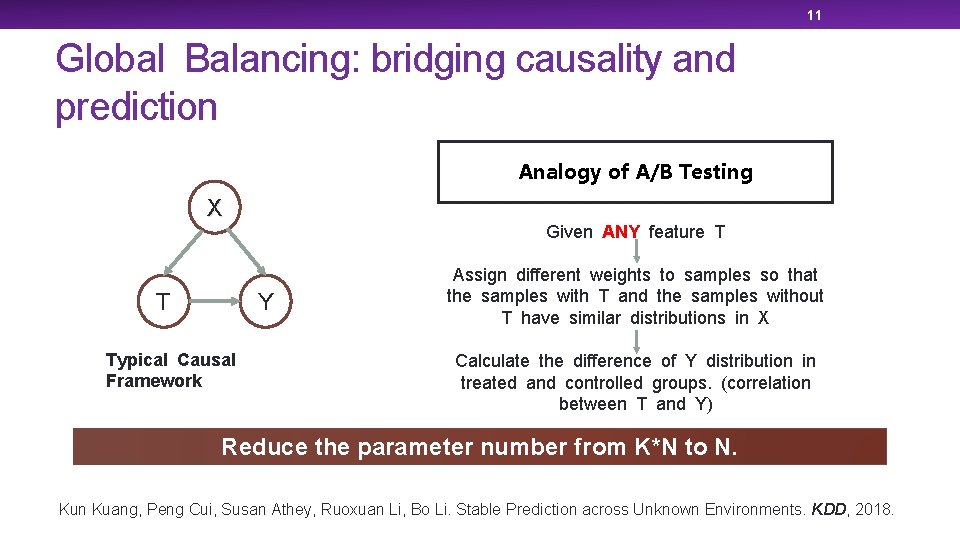

11 Global Balancing: bridging causality and prediction
Analogy of A/B testing (typical causal framework with X, T, Y)
• Given ANY feature T
• Assign different weights to samples so that the samples with T and the samples without T have similar distributions in X.
• Calculate the difference of the Y distribution between the treated and control groups (the correlation between T and Y).
• Reduce the number of parameters from K*N to N.
Kun Kuang, Peng Cui, Susan Athey, Ruoxuan Li, Bo Li. Stable Prediction across Unknown Environments. KDD, 2018.
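The sketch below illustrates what a single weight vector of length N has to achieve. Each feature in turn is treated as T, and the weighted means of the remaining features are compared between the two arms. The squared-difference form of the loss, the binary features, and the toy data are assumptions made for this example. The cited paper learns the weights by optimization, whereas here two fixed weight vectors are simply evaluated.

```python
def global_balancing_loss(X, w):
    """Sum, over every feature j taken as the treatment, of the squared
    differences between weighted means of the other features in the
    treated (X[i][j] == 1) and control (X[i][j] == 0) arms.

    Assumes binary features and that both arms have positive total weight.
    """
    n, p = len(X), len(X[0])
    loss = 0.0
    for j in range(p):
        w_treated = sum(w[i] for i in range(n) if X[i][j] == 1)
        w_control = sum(w[i] for i in range(n) if X[i][j] == 0)
        for k in range(p):
            if k == j:
                continue
            m_treated = sum(w[i] * X[i][k] for i in range(n) if X[i][j] == 1) / w_treated
            m_control = sum(w[i] * X[i][k] for i in range(n) if X[i][j] == 0) / w_control
            loss += (m_treated - m_control) ** 2
    return loss


# Hypothetical biased sample: the two binary features usually agree.
X = [(1, 1)] * 4 + [(0, 0)] * 4 + [(1, 0)] + [(0, 1)]

uniform = [1.0] * len(X)
# One weight per sample: up-weight the rare (1, 0) and (0, 1) rows.
reweighted = [1.0] * 8 + [4.0, 4.0]

loss_uniform = global_balancing_loss(X, uniform)
loss_reweighted = global_balancing_loss(X, reweighted)
print(f"uniform: {loss_uniform:.2f}, reweighted: {loss_reweighted:.2f}")
```

The same N weights balance the sample no matter which feature plays the role of T, which is where the reduction from K*N to N parameters comes from.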

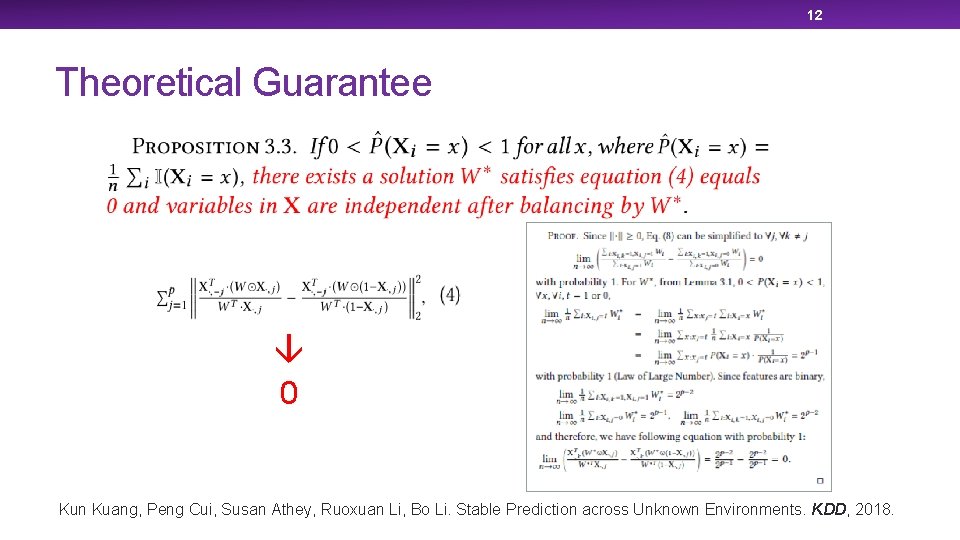

12 Theoretical Guarantee
Kun Kuang, Peng Cui, Susan Athey, Ruoxuan Li, Bo Li. Stable Prediction across Unknown Environments. KDD, 2018.

13 Causal Regularizer
Equation annotations:
• Set feature j as the treatment variable
• All features excluding treatment j
• Sample weights
• Indicator of treatment status
Zheyan Shen, Peng Cui, Kun Kuang, Bo Li. Causally Regularized Learning on Data with Agnostic Bias. ACM MM, 2018.
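The equation itself did not survive in this transcript. The following is a sketch of a regularizer consistent with the annotations, in notation assumed here to follow the cited paper: $X_{-j}$ denotes all features excluding treatment $j$, $W$ the sample weights, $I_j$ the indicator of treatment status for feature $j$, $p$ the number of features, and $\odot$ the element-wise product. Treat the exact form as an assumption rather than a transcription of the slide.

```latex
\sum_{j=1}^{p} \left\|
  \frac{X_{-j}^{\top} \, (W \odot I_j)}{W^{\top} I_j}
  - \frac{X_{-j}^{\top} \, \bigl(W \odot (1 - I_j)\bigr)}{W^{\top} (1 - I_j)}
\right\|_2^2
```

Each term compares the weighted mean of the remaining features between the samples with feature $j$ and the samples without it. The regularizer is zero when one weight vector balances every choice of treatment feature.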

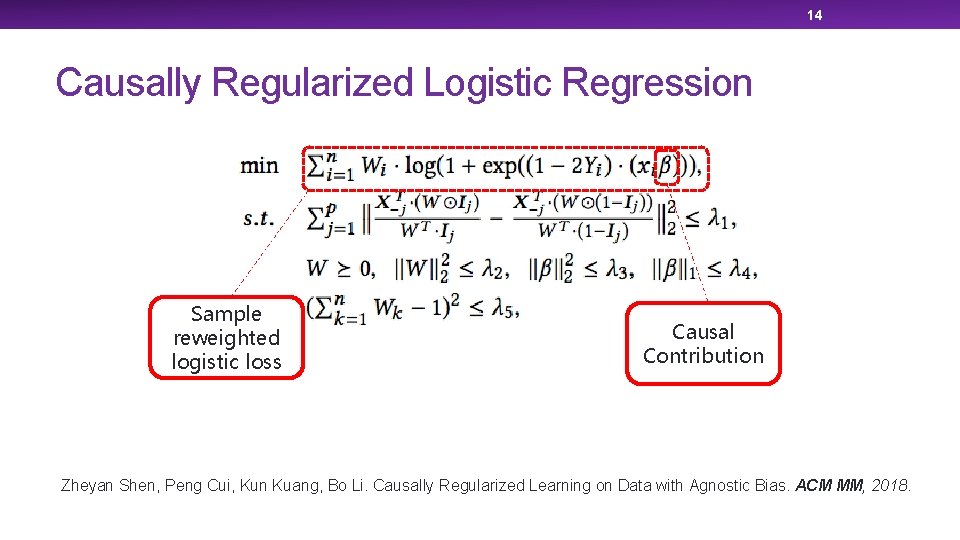

14 Causally Regularized Logistic Regression
Equation annotations:
• Sample reweighted logistic loss
• Causal contribution
Zheyan Shen, Peng Cui, Kun Kuang, Bo Li. Causally Regularized Learning on Data with Agnostic Bias. ACM MM, 2018.
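A minimal sketch of the sample reweighted logistic loss named on this slide, assuming labels in {0, 1}, a linear score x·β, and the form Σ w_i · log(1 + exp((1 − 2·y_i) · x_i·β)). The balancing term and any other constraints of the full objective are not shown. The data, weights, and coefficients are hypothetical.

```python
import math


def weighted_logistic_loss(X, y, w, beta):
    """Sum over samples of w_i * log(1 + exp((1 - 2*y_i) * <x_i, beta>)).

    X: list of feature rows, y: labels in {0, 1},
    w: non-negative sample weights, beta: coefficient vector.
    """
    total = 0.0
    for xi, yi, wi in zip(X, y, w):
        score = sum(a * b for a, b in zip(xi, beta))
        total += wi * math.log1p(math.exp((1 - 2 * yi) * score))
    return total


# Hypothetical data: two samples, two features, unequal sample weights.
X = [[1.0, 0.0], [0.0, 1.0]]
y = [1, 0]
w = [0.5, 1.5]

loss_zero = weighted_logistic_loss(X, y, w, [0.0, 0.0])  # uninformative coefficients
loss_fit = weighted_logistic_loss(X, y, w, [2.0, -2.0])  # coefficients that fit both labels
print(f"beta=0: {loss_zero:.4f}, fitted beta: {loss_fit:.4f}")
```

With all coefficients at zero every sample contributes w_i · log 2, so the loss equals the total weight times log 2. Samples with larger weights therefore pull harder on the fitted coefficients.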

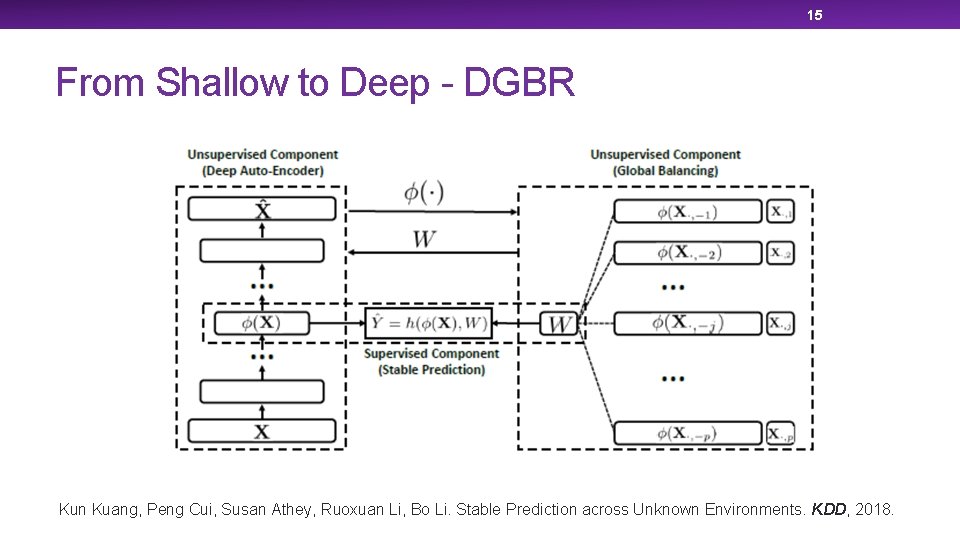

15 From Shallow to Deep - DGBR (Deep Global Balancing Regression)
Kun Kuang, Peng Cui, Susan Athey, Ruoxuan Li, Bo Li. Stable Prediction across Unknown Environments. KDD, 2018.

16 Experiment 1 – non-i.i.d. image classification
• Source: YFCC100M
• Type: high-resolution and multi-tag
• Scale: 10 categories, each with nearly 1000 images
• Method: select 5 context tags that frequently co-occur with the major tag (category label)
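The tag-selection step can be sketched as a simple co-occurrence count. The slide does not say how "frequently co-occur" was measured, so ranking by raw count is an assumption, as are the toy tag sets and the function name `top_context_tags`.

```python
from collections import Counter


def top_context_tags(images, major_tag, k=5):
    """Return the k tags that most often appear on images carrying major_tag.

    images: iterable of tag collections, one per image.
    """
    counts = Counter()
    for tags in images:
        if major_tag in tags:
            counts.update(t for t in set(tags) if t != major_tag)
    return [tag for tag, _ in counts.most_common(k)]


# Hypothetical tag sets for five images.
images = [
    {"dog", "grass", "ball"},
    {"dog", "grass"},
    {"dog", "grass", "beach"},
    {"dog", "ball"},
    {"cat", "sofa"},
]

context = top_context_tags(images, "dog", k=2)
print(context)
```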

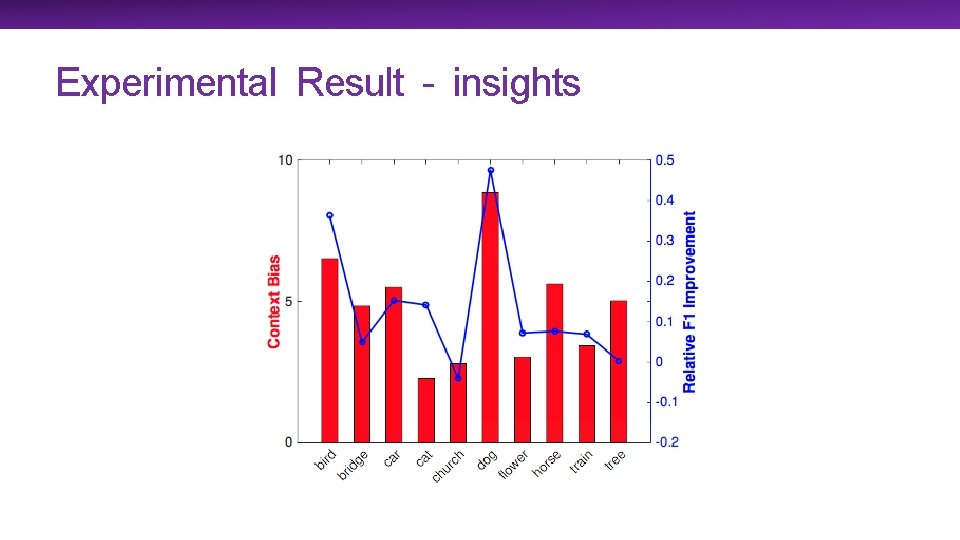

17 Experimental Result - insights

18 Experimental Result – Stability
Traditional regression models are very sensitive to the non-i.i.d. setting, whereas our model performs stably.

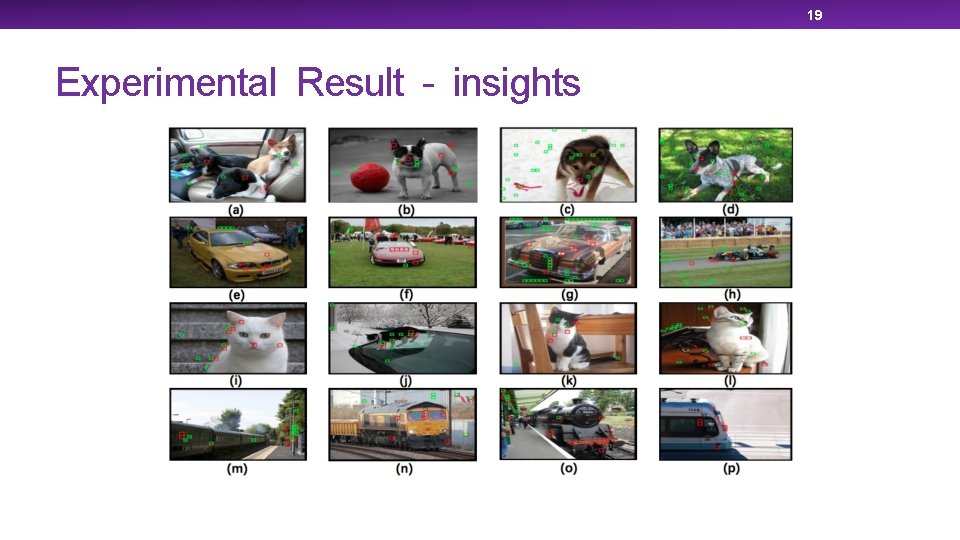

19 Experimental Result - insights

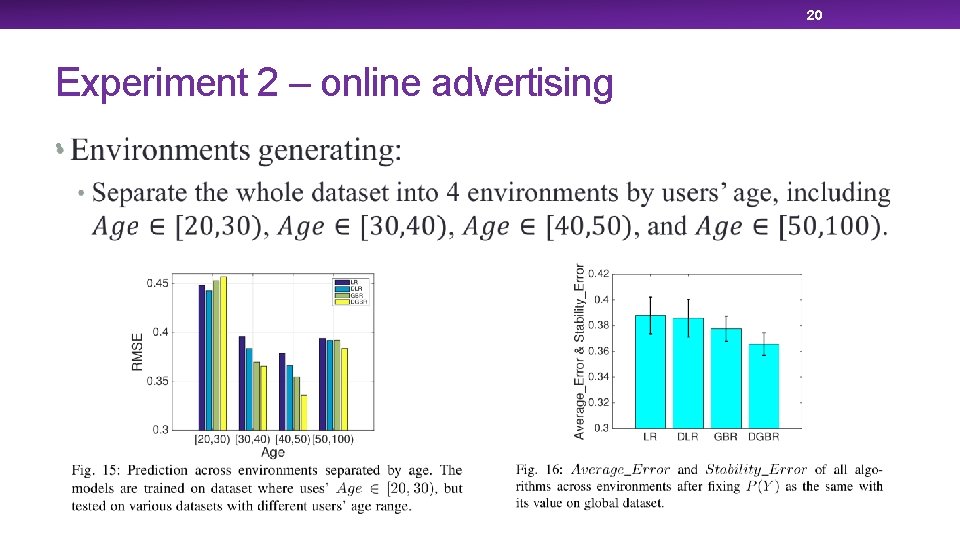

20 Experiment 2 – online advertising

21 Conclusions
• Predictive modeling is not only about accuracy.
• Stability is critical for us to trust a predictive model.
• Causality has been demonstrated to be useful in stable prediction.
• How to marry causality with predictive modeling effectively and efficiently is still an open problem.

22 Acknowledgement
Kun Kuang, Zheyan Shen, Susan Athey, Bo Li, Shiqiang Yang
Tsinghua U, Stanford U

23 References
• Kun Kuang, Peng Cui, Susan Athey, Ruoxuan Li, Bo Li. Stable Prediction across Unknown Environments. KDD, 2018.
• Zheyan Shen, Peng Cui, Kun Kuang, Bo Li. Causally Regularized Learning on Data with Agnostic Bias. ACM Multimedia, 2018.
• Kun Kuang, Peng Cui, Bo Li, Shiqiang Yang. Estimating Treatment Effect in the Wild via Differentiated Confounder Balancing. KDD, 2017.
• Kun Kuang, Peng Cui, Bo Li, Shiqiang Yang. Treatment Effect Estimation with Data-Driven Variable Decomposition. AAAI, 2017.

24 Thanks!
Peng Cui
cuip@tsinghua.edu.cn
http://pengcui.thumedialab.com
