
Special Topics in Computer Science: Advanced Topics in Information Retrieval
Chapter 2: Modeling

Alexander Gelbukh, www.Gelbukh.com

Previous chapter

- User information need
  - Vague
  - Semantic, not formal
- Document relevance
  - Order, not retrieve
- Huge amount of information
  - Efficiency concerns
  - Tradeoffs
- Art more than science

Modeling

- Still science: computation is formal
- No good methods exist to work with (vague) semantics
- Thus, simplify to get a (formal) model
- Develop (precise) math over this (simple) model
- Why math, if the model is not precise (simplified)?
  - phenomenon ≈ model = step 1 = step 2 = … = result
  - Only the modeling step (phenomenon → model) is approximate (?); every mathematical step after it is exact (!)

Modeling

- Substitute a complex real phenomenon with a simple model that you can measure and manipulate formally
- Keep only the properties that are important (for this application)
- Do this with text:

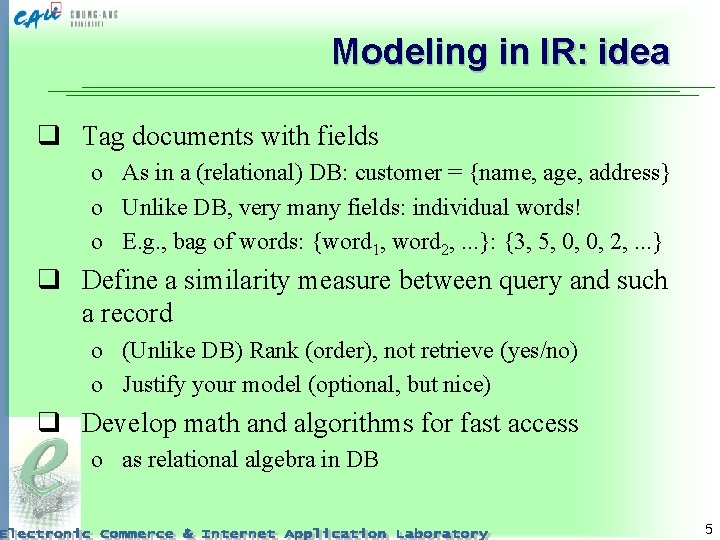

Modeling in IR: idea

- Tag documents with fields
  - As in a (relational) DB: customer = {name, age, address}
  - Unlike a DB, there are very many fields: individual words!
  - E.g., bag of words: {word1, word2, …} → {3, 5, 0, 0, 2, …}
- Define a similarity measure between the query and such a record
  - (Unlike a DB) rank (order), not retrieve (yes/no)
  - Justify your model (optional, but nice)
- Develop math and algorithms for fast access
  - as relational algebra does in a DB
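A minimal Python sketch of this idea, under stated assumptions: a naive whitespace tokenizer and a toy similarity that sums the document's counts of the query terms (both hypothetical, chosen only for illustration). Each document becomes a bag of words, and all documents are ordered by score rather than filtered yes/no.

```python
from collections import Counter

def bag_of_words(text: str) -> Counter:
    """Map a document to {term: count}, ignoring word order."""
    # Hypothetical tokenizer: lowercase and split on whitespace (no stemming, no stopwords).
    return Counter(text.lower().split())

def overlap_score(query: str, doc: str) -> int:
    """Toy similarity: sum of the document's counts for each distinct query term."""
    counts = bag_of_words(doc)
    return sum(counts[term] for term in set(query.lower().split()))

docs = [
    "the cat sat on the mat",
    "the dog chased the cat and the cat ran",
    "stock markets fell today",
]
query = "cat"
# Rank (order) every document by score instead of a yes/no retrieval.
ranked = sorted(range(len(docs)), key=lambda j: overlap_score(query, docs[j]), reverse=True)
print(ranked)  # [1, 0, 2]
```

Document 1 mentions "cat" twice, so it ranks first; document 2 is still listed, just last, which is the "rank, not retrieve" difference from a DB query.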

Taxonomy of IR systems

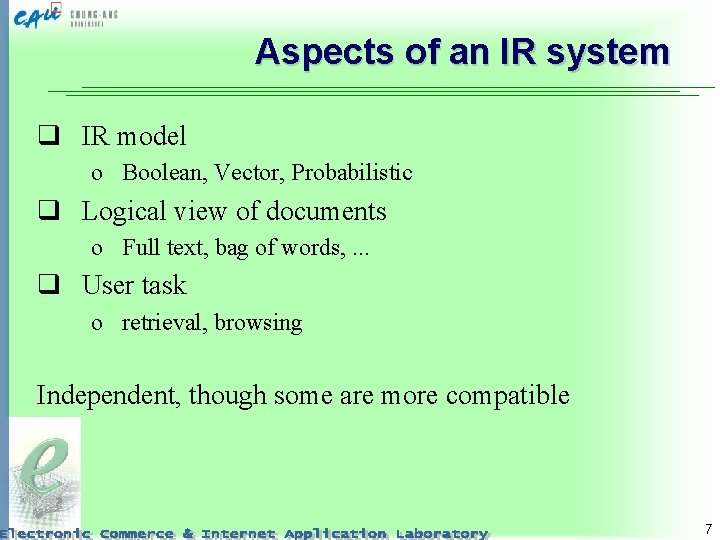

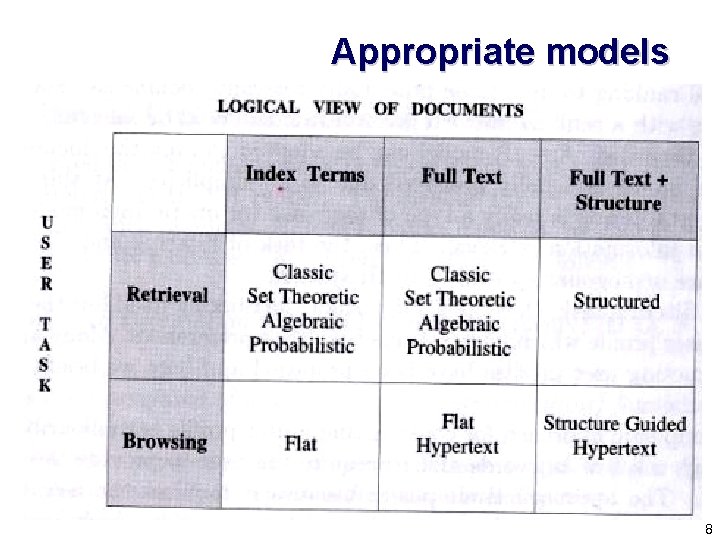

Aspects of an IR system

- IR model
  - Boolean, vector, probabilistic
- Logical view of documents
  - Full text, bag of words, …
- User task
  - Retrieval, browsing

These aspects are independent, though some combinations are more compatible than others.

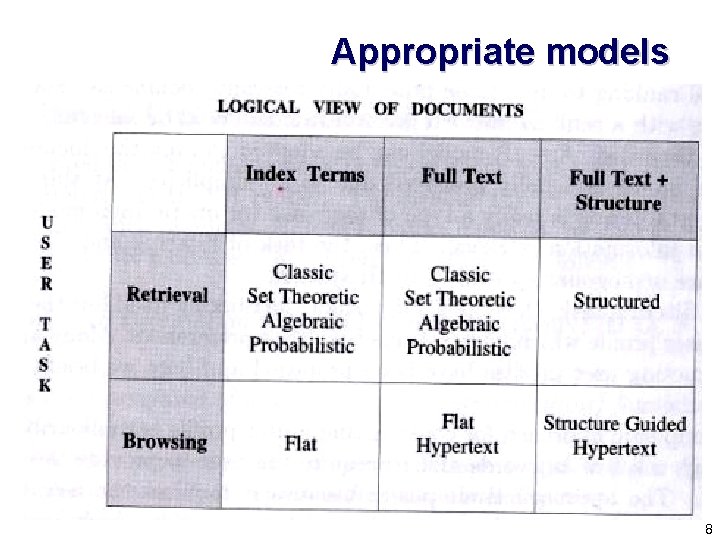

Appropriate models

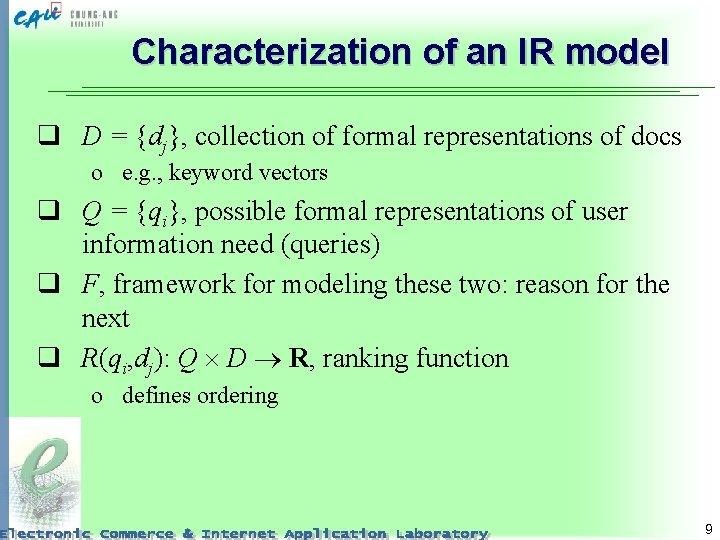

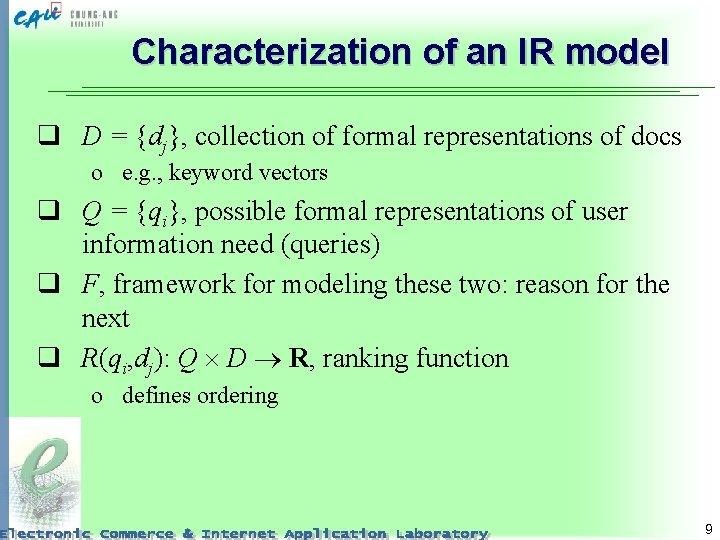

Characterization of an IR model

- $D = \{d_j\}$: collection of formal representations of documents
  - e.g., keyword vectors
- $Q = \{q_i\}$: possible formal representations of the user information need (queries)
- $F$: framework for modeling these two representations; it provides the rationale for the next component
- $R(q_i, d_j): Q \times D \to \mathbb{R}$: ranking function
  - defines an ordering of the documents for each query
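The four components can be sketched as a generic ranking routine in Python. This is an illustration, not a definition from the slides: the type variables `Q` and `D` stand for whatever formal representations a model chooses, and `shared_keywords` is a hypothetical choice of R that counts common keywords.

```python
from typing import Callable, Sequence, TypeVar

Q = TypeVar("Q")  # formal representation of a query q_i
D = TypeVar("D")  # formal representation of a document d_j

def rank(query: Q, collection: Sequence[D], R: Callable[[Q, D], float]) -> list[int]:
    """Order document indices by decreasing R(q_i, d_j); ties keep collection order."""
    return sorted(range(len(collection)), key=lambda j: R(query, collection[j]), reverse=True)

# Hypothetical instance of the framework: queries and documents are keyword sets,
# and R counts the keywords they share.
def shared_keywords(q: set[str], d: set[str]) -> float:
    return float(len(q & d))

docs = [{"cat", "mat"}, {"dog"}, {"cat", "dog", "mat"}]
print(rank({"cat", "mat"}, docs, shared_keywords))  # [0, 2, 1]
```

Swapping in a different R (Boolean match, cosine, probability of relevance) changes the model while the ranking step stays the same.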

Specific IR models

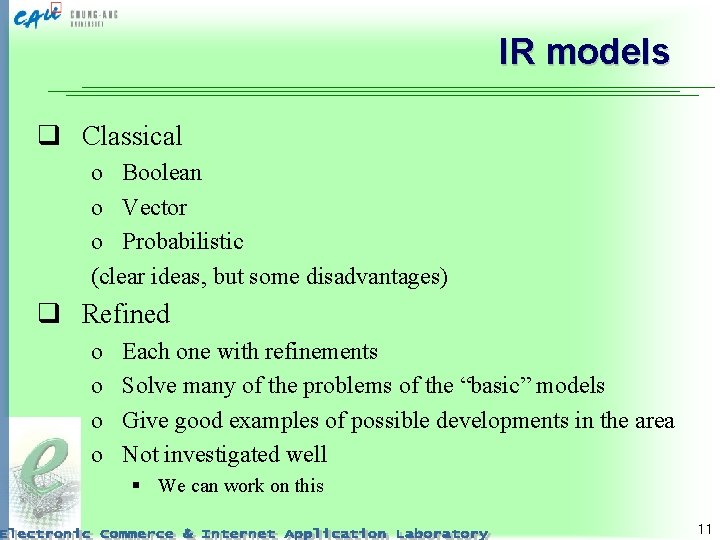

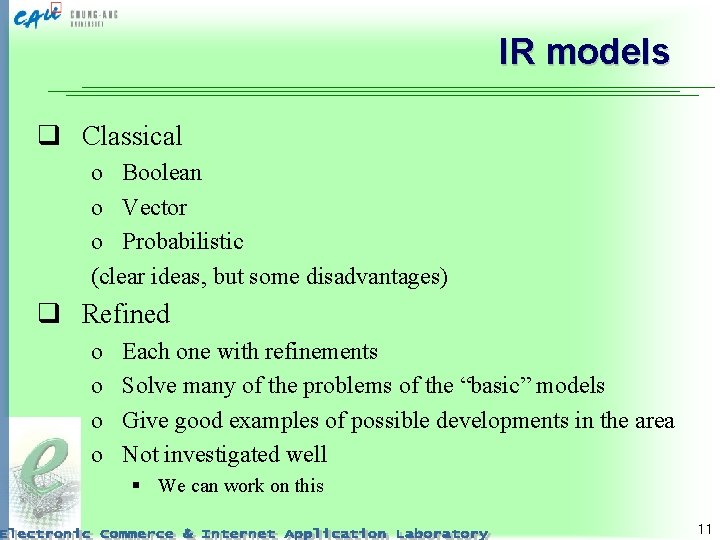

IR models

- Classical
  - Boolean
  - Vector
  - Probabilistic
  - (clear ideas, but some disadvantages)
- Refined
  - Each one with refinements
  - Solve many of the problems of the "basic" models
  - Give good examples of possible developments in the area
  - Not investigated well
    - We can work on this

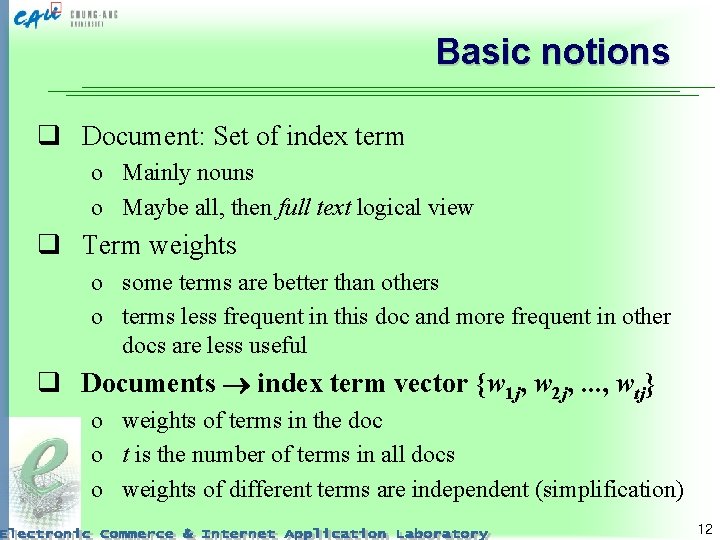

Basic notions

- Document: set of index terms
  - Mainly nouns
  - Maybe all words; then we have the full-text logical view
- Term weights
  - Some terms are better than others
  - Terms less frequent in this doc and more frequent in other docs are less useful
- Document $d_j$ as an index-term vector $(w_{1j}, w_{2j}, \dots, w_{tj})$
  - Weights of the terms in the doc
  - $t$ is the number of distinct terms in all docs
  - Weights of different terms are independent (simplification)

Boolean model

- Weights $\in \{0, 1\}$
  - Doc: set of words
- Query: Boolean expression
  - $R(q_i, d_j) \in \{0, 1\}$
- Good:
  - Clear semantics, neat formalism, simple
- Bad:
  - No ranking (closer to data retrieval than to information retrieval); retrieves too many or too few documents
  - Difficult to translate the user information need into a query
- No term weighting

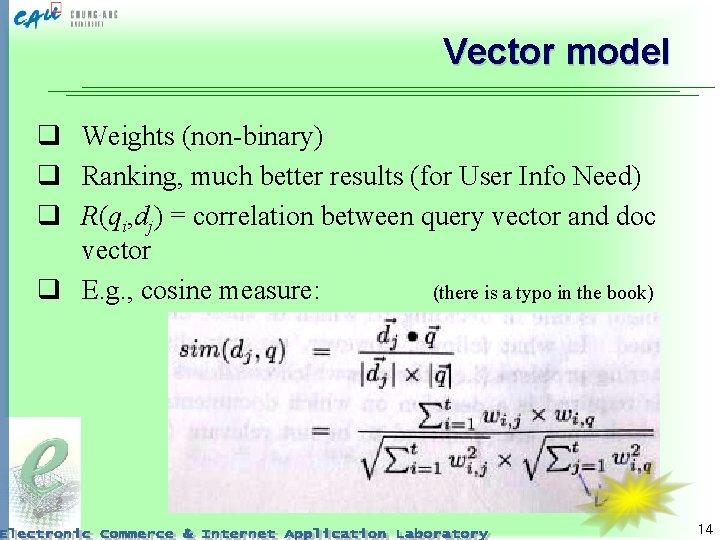

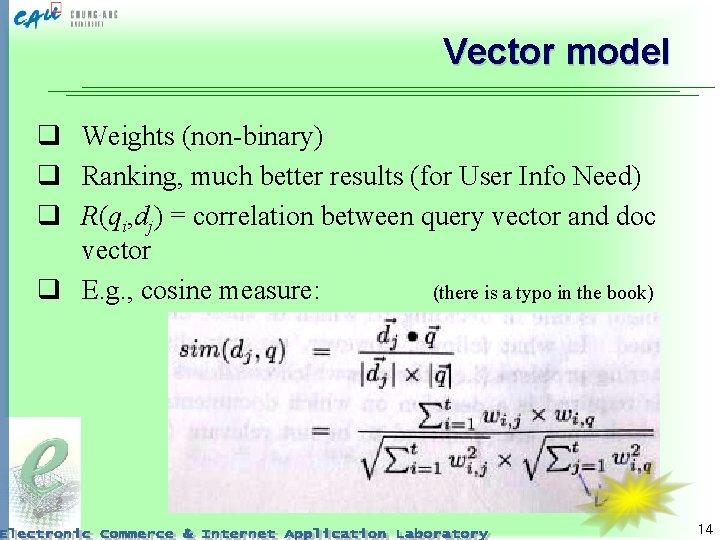
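A minimal Python sketch of Boolean retrieval, assuming queries are written as nested tuples such as `("AND", "mouse", ("NOT", "cat"))` (a hypothetical encoding chosen for this example). R returns only True/False, so the result is an unordered set of matches with no ranking.

```python
from typing import Union

# Hypothetical query encoding: a term string, or a tuple (op, *operands)
# with op in {"AND", "OR", "NOT"}.
Query = Union[str, tuple]

def matches(query: Query, doc_terms: set[str]) -> bool:
    """R(q, d) in {0, 1}: does the document's set of words satisfy the expression?"""
    if isinstance(query, str):
        return query in doc_terms
    op, *args = query
    if op == "AND":
        return all(matches(a, doc_terms) for a in args)
    if op == "OR":
        return any(matches(a, doc_terms) for a in args)
    if op == "NOT":
        (arg,) = args  # NOT takes exactly one operand
        return not matches(arg, doc_terms)
    raise ValueError(f"unknown operator: {op}")

docs = [{"mouse", "keyboard"}, {"mouse", "cat"}, {"cat", "dog"}]
q = ("AND", "mouse", ("NOT", "cat"))
print([j for j, d in enumerate(docs) if matches(q, d)])  # [0]
```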

Vector model

- Weights (non-binary)
- Ranking; much better results (for the user information need)
- $R(q_i, d_j)$ = correlation between the query vector and the document vector
- E.g., the cosine measure (there is a typo in the book):

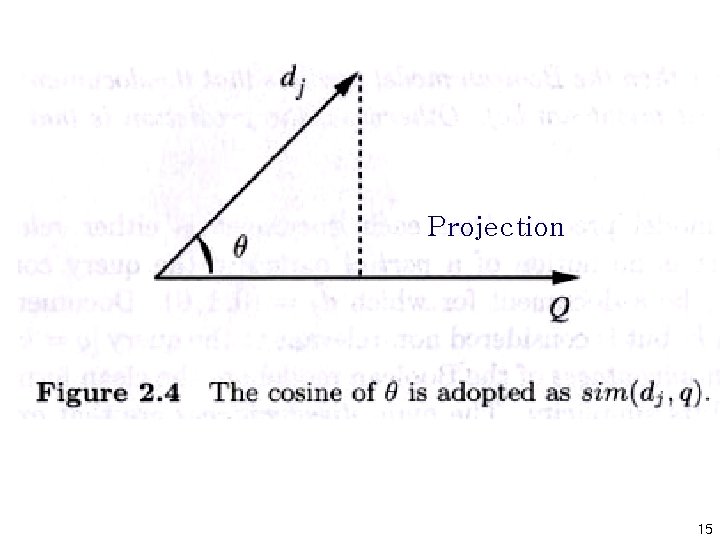

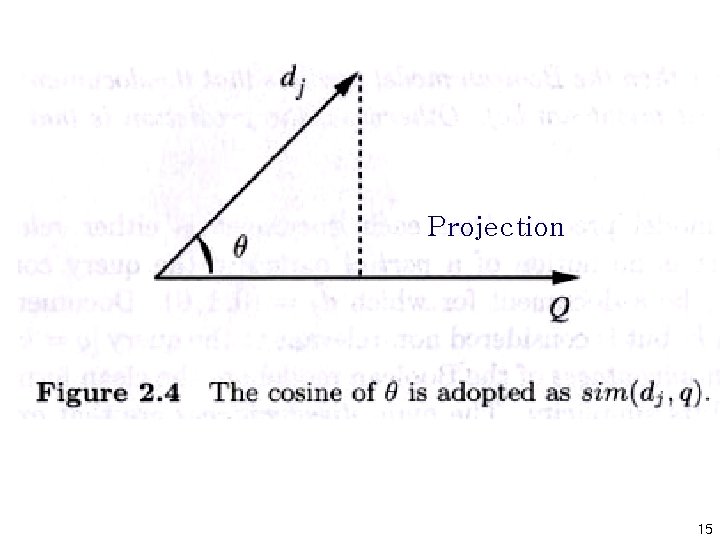
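The standard form of the cosine measure, with $w_{ij}$ the weight of term $k_i$ in document $d_j$, $w_{iq}$ its weight in the query, and $t$ the number of index terms:

$$
\mathrm{sim}(d_j, q) = \frac{\vec{d}_j \cdot \vec{q}}{|\vec{d}_j|\,|\vec{q}|} = \frac{\sum_{i=1}^{t} w_{ij}\, w_{iq}}{\sqrt{\sum_{i=1}^{t} w_{ij}^2}\;\sqrt{\sum_{i=1}^{t} w_{iq}^2}}
$$

A direct Python transcription follows; the example weights are invented, and returning 0 for an all-zero vector is an assumed convention.

```python
import math

def cosine(d: list[float], q: list[float]) -> float:
    """Cosine of the angle between document vector d and query vector q."""
    dot = sum(wd * wq for wd, wq in zip(d, q))
    norm_d = math.sqrt(sum(w * w for w in d))
    norm_q = math.sqrt(sum(w * w for w in q))
    if norm_d == 0 or norm_q == 0:
        return 0.0  # assumed convention: an empty vector matches nothing
    return dot / (norm_d * norm_q)

# Weights over t = 3 index terms; the query uses only the first two terms.
docs = [[1.0, 1.0, 0.0], [2.0, 0.0, 1.0], [0.0, 0.0, 3.0]]
q = [1.0, 1.0, 0.0]
print([round(cosine(d, q), 3) for d in docs])  # [1.0, 0.632, 0.0]
```

The second document shares only one term with the query yet still gets a nonzero score, which is the partial matching that Boolean retrieval lacks.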

Projection

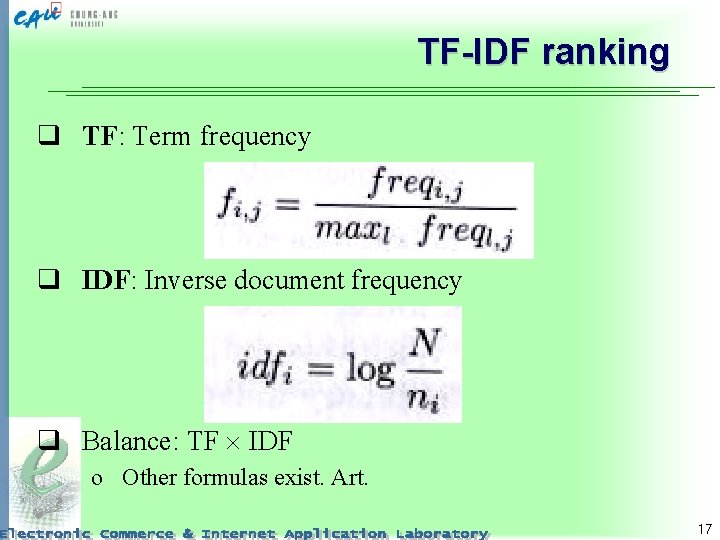

Weights

- How are the weights $w_{ij}$ obtained? Many variants. One way: TF-IDF balance
- TF: term frequency
  - How well is the term related to the doc?
  - If it appears many times in the doc, it is important
  - Proportional to the number of times it appears
- IDF: inverse document frequency
  - How important is the term for distinguishing documents?
  - If it appears in many docs, it is not important
  - Inversely proportional to the number of docs in which it appears
- The two criteria are contradictory. How to balance them?

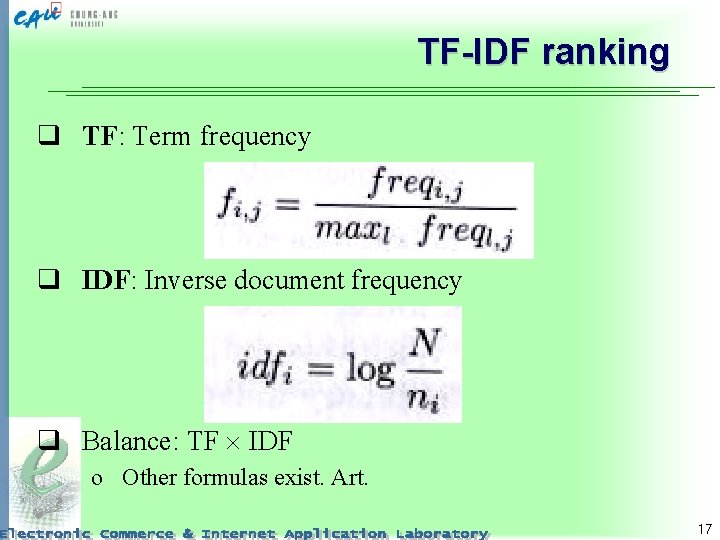
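One common way to make these two notions precise is shown below; this particular variant (tf normalized by the most frequent term in the document, logarithmic idf) is an assumption, since the slides leave the formulas open. Here $\mathrm{freq}_{ij}$ is the number of occurrences of term $k_i$ in document $d_j$, $N$ is the number of documents, and $n_i$ is the number of documents containing $k_i$.

$$
\mathrm{tf}_{ij} = \frac{\mathrm{freq}_{ij}}{\max_{l} \mathrm{freq}_{lj}}, \qquad
\mathrm{idf}_i = \log \frac{N}{n_i}
$$

For example, with $N = 1000$ and natural logarithms, a term occurring in 10 documents gets $\mathrm{idf} = \ln 100 \approx 4.61$, while a term occurring in every document gets $\ln 1 = 0$ and thus cannot distinguish documents at all.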

TF-IDF ranking

- TF: term frequency
- IDF: inverse document frequency
- Balance: TF × IDF
  - Other formulas exist. Art.
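A small Python sketch of TF × IDF weighting. It assumes one common variant (tf divided by the count of the most frequent term in the same document, idf = log(N / n_i) with the natural logarithm); as the slide says, other formulas exist. The function name `tfidf_weights` is hypothetical.

```python
import math
from collections import Counter

def tfidf_weights(docs: list[list[str]]) -> list[dict[str, float]]:
    """Return w_ij = tf_ij * idf_i for every term of every document (one common variant)."""
    N = len(docs)
    counts = [Counter(doc) for doc in docs]
    # n_i: number of documents in which term k_i appears (each doc counted once)
    n = Counter(term for c in counts for term in c)
    weights = []
    for c in counts:
        max_freq = max(c.values(), default=1)  # frequency of the most frequent term in d_j
        weights.append({term: (f / max_freq) * math.log(N / n[term]) for term, f in c.items()})
    return weights

docs = ["cat cat dog".split(), "dog bird".split(), "dog fish fish".split()]
w = tfidf_weights(docs)
print(round(w[0]["cat"], 3))  # 1.099  (frequent here, rare elsewhere)
print(w[0]["dog"])            # 0.0    (appears in every document)
```

A term that occurs in every document gets weight 0 regardless of how often it occurs, which is the IDF side of the balance.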

Advantages of vector model

One of the best-known strategies:

- Improves quality (term weighting)
- Allows approximate matching (partial matching)
- Gives ranking by similarity (cosine formula)
- Simple, fast

But:

- Does not consider term dependencies
  - considering them in a bad way hurts quality
  - no good way is known
- No logical expressions (e.g., negation: "mouse & NOT cat")

Probabilistic model

- Assumptions:
  - a set of "relevant" docs
  - probabilities of docs to be relevant
  - after a Bayesian calculation: probabilities of terms to be important for defining the relevant docs
- Initial idea: interact with the user
  - Generate an initial set
  - Ask the user to mark some of them as relevant or not
  - Estimate the probabilities of keywords. Repeat
- Can be done without the user
  - Just recalculate the probabilities, assuming the user would accept the predicted ranking (the top-ranked docs are taken as relevant)

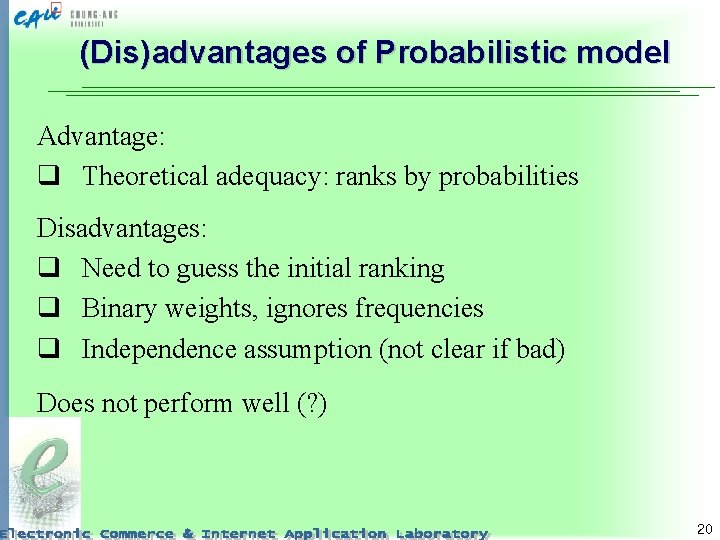
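A Python sketch of the "without the user" loop for a binary-weight probabilistic model. It assumes the usual textbook binary independence formulation: a document's score is the sum, over the query terms it contains, of $\log\frac{P(k_i \mid R)}{1-P(k_i \mid R)} + \log\frac{1-P(k_i \mid \bar R)}{P(k_i \mid \bar R)}$; the initial guesses are $P(k_i \mid R) = 0.5$ and $P(k_i \mid \bar R) = n_i/N$; re-estimation uses $P(k_i \mid R) = (V_i + 0.5)/(V + 1)$ and $P(k_i \mid \bar R) = (n_i - V_i + 0.5)/(N - V + 1)$, where $V$ is the number of top-ranked documents taken as relevant and $V_i$ is how many of them contain $k_i$. The parameters `rounds` and `top_r` and the clamping of probabilities are hypothetical choices.

```python
import math

def bim_rank(query: set[str], docs: list[set[str]], rounds: int = 2, top_r: int = 2) -> list[int]:
    """Rank docs with binary weights, re-estimating term probabilities by assuming
    the top_r documents of the previous ranking are relevant."""
    N = len(docs)
    n = {k: sum(k in d for d in docs) for k in query}   # n_i
    p_rel = {k: 0.5 for k in query}                      # P(k_i | R), initial guess
    p_non = {k: n[k] / N for k in query}                 # P(k_i | not R), initial guess

    def logit(p: float) -> float:
        p = min(max(p, 1e-6), 1 - 1e-6)  # hypothetical clamp to keep the logs finite
        return math.log(p / (1 - p))

    def score(d: set[str]) -> float:
        # binary weights: only query terms present in d contribute
        return sum(logit(p_rel[k]) - logit(p_non[k]) for k in query if k in d)

    ranking = sorted(range(N), key=lambda j: score(docs[j]), reverse=True)
    for _ in range(rounds):
        V = ranking[:top_r]  # pretend the user accepted the top-ranked docs
        for k in query:
            v_i = sum(k in docs[j] for j in V)
            p_rel[k] = (v_i + 0.5) / (len(V) + 1)
            p_non[k] = (n[k] - v_i + 0.5) / (N - len(V) + 1)
        ranking = sorted(range(N), key=lambda j: score(docs[j]), reverse=True)
    return ranking

query = {"bayes", "retrieval"}
docs = [
    {"bayes", "retrieval", "ranking"},
    {"retrieval", "index"},
    {"bayes", "theorem"},
    {"web", "search"},
    {"cooking", "recipes"},
    {"football"},
]
print(bim_rank(query, docs))  # [0, 1, 2, 3, 4, 5]
```

On this toy collection, re-estimation raises $P(\text{retrieval} \mid R)$ from 0.5 to about 0.83, because both documents assumed relevant contain that term, so the "retrieval" document moves clearly ahead of the "bayes" one (they were tied initially).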

(Dis)advantages of probabilistic model

Advantage:

- Theoretical adequacy: ranks by probabilities

Disadvantages:

- Need to guess the initial ranking
- Binary weights; ignores frequencies
- Independence assumption (not clear whether it is bad)

Does not perform well (?)

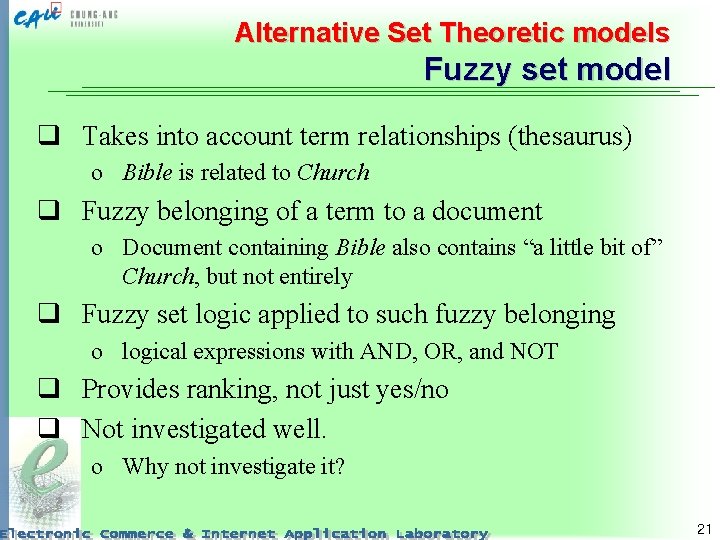

Alternative set-theoretic models: fuzzy set model

- Takes into account term relationships (thesaurus)
  - Bible is related to Church
- Fuzzy belonging of a term to a document
  - A document containing "Bible" also contains "a little bit of" "Church", but not entirely
- Fuzzy set logic applied to such fuzzy belonging
  - Logical expressions with AND, OR, and NOT
- Provides ranking, not just yes/no
- Not investigated well
  - Why not investigate it?

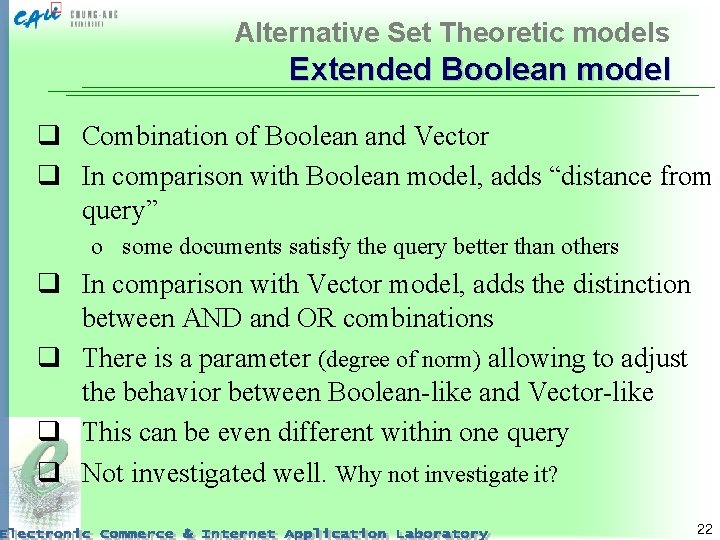
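A Python sketch of fuzzy membership, assuming the term-correlation formulation commonly used for this model: $c_{il} = n_{il} / (n_i + n_l - n_{il})$, where $n_{il}$ counts documents containing both terms, and $\mu_{ij} = 1 - \prod_{k_l \in d_j} (1 - c_{il})$ is the degree to which document $d_j$ belongs to the fuzzy set of term $k_i$. The min/max/complement connectives are one standard choice among several, and the tiny collection is invented.

```python
def correlation(ki: str, kl: str, docs: list[set[str]]) -> float:
    """c_il = n_il / (n_i + n_l - n_il), where n_il counts docs containing both terms."""
    n_i = sum(ki in d for d in docs)
    n_l = sum(kl in d for d in docs)
    n_il = sum(ki in d and kl in d for d in docs)
    denom = n_i + n_l - n_il
    return n_il / denom if denom else 0.0

def membership(ki: str, doc: set[str], docs: list[set[str]]) -> float:
    """mu_ij = 1 - prod over k_l in d_j of (1 - c_il): how much d_j 'contains' k_i."""
    prod = 1.0
    for kl in doc:
        prod *= 1.0 - correlation(ki, kl, docs)
    return 1.0 - prod

# Fuzzy connectives: min / max / complement (one standard choice)
def f_and(*xs: float) -> float:
    return min(xs)

def f_or(*xs: float) -> float:
    return max(xs)

def f_not(x: float) -> float:
    return 1.0 - x

docs = [{"bible", "church"}, {"bible", "church", "choir"}, {"bible"}, {"football"}]
d = {"bible"}
print(round(membership("church", d, docs), 3))  # 0.667: "a little bit of" Church
score = f_and(membership("church", d, docs), f_not(membership("football", d, docs)))
print(round(score, 3))  # 0.667 for the query "church AND NOT football"
```

A term the document actually contains gets membership 1 (its correlation with itself is 1), so exact matches still score highest, and graded scores give a ranking instead of yes/no.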

Alternative set-theoretic models: extended Boolean model

- Combination of the Boolean and vector models
- In comparison with the Boolean model, adds "distance from the query"
  - some documents satisfy the query better than others
- In comparison with the vector model, adds the distinction between AND and OR combinations
- A parameter (the degree of the norm) adjusts the behavior between Boolean-like and vector-like
- This parameter can even differ within one query
- Not investigated well. Why not investigate it?

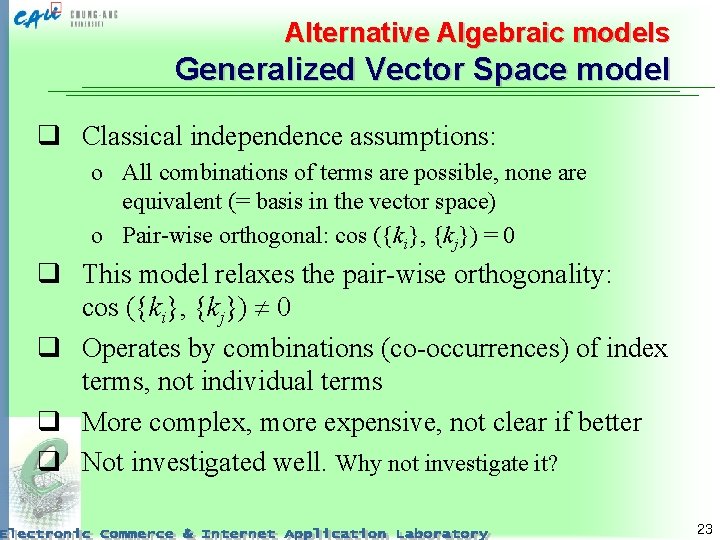
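A Python sketch of the p-norm formulation of the extended Boolean model, assuming term weights $x_i$ already normalized to [0, 1] and a query of $m$ terms; $p$ plays the role of the degree of the norm:

$$
\mathrm{sim}(q_{or}, d) = \left(\frac{x_1^p + \dots + x_m^p}{m}\right)^{1/p}, \qquad
\mathrm{sim}(q_{and}, d) = 1 - \left(\frac{(1-x_1)^p + \dots + (1-x_m)^p}{m}\right)^{1/p}
$$

```python
def p_or(xs: list[float], p: float) -> float:
    """p-norm OR: distance from the worst point (0, ..., 0), normalized."""
    return (sum(x ** p for x in xs) / len(xs)) ** (1 / p)

def p_and(xs: list[float], p: float) -> float:
    """p-norm AND: 1 minus the normalized distance from the ideal point (1, ..., 1)."""
    return 1 - (sum((1 - x) ** p for x in xs) / len(xs)) ** (1 / p)

x = [1.0, 0.0]  # document has term k1 (weight 1) but not k2
for p in (1, 2, 10):
    print(p, round(p_or(x, p), 3), round(p_and(x, p), 3))
# 1 0.5 0.5
# 2 0.707 0.293
# 10 0.933 0.067
```

With p = 1, AND and OR give the same score (a vector-like average); as p grows, OR approaches max and AND approaches min (Boolean-like). Because each operator can carry its own p, one query can mix both behaviors.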

Alternative algebraic models: generalized vector space model

- Classical independence assumptions:
  - All combinations of terms are possible; none are equivalent (the terms form a basis of the vector space)
  - Pairwise orthogonal: $\cos(\vec{k}_i, \vec{k}_j) = 0$ for $i \neq j$
- This model relaxes pairwise orthogonality: $\cos(\vec{k}_i, \vec{k}_j) \neq 0$
- Operates on combinations (co-occurrences) of index terms, not on individual terms
- More complex, more expensive; not clear whether it is better
- Not investigated well. Why not investigate it?
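A short derivation (not from the slides) showing what orthogonality buys and what relaxing it changes. Write the document and the query as combinations of the term vectors $\vec{k}_i$:

$$
\vec{d}_j = \sum_{i=1}^{t} w_{ij}\,\vec{k}_i, \qquad
\vec{q} = \sum_{l=1}^{t} w_{lq}\,\vec{k}_l, \qquad
\vec{d}_j \cdot \vec{q} = \sum_{i=1}^{t} \sum_{l=1}^{t} w_{ij}\, w_{lq}\, (\vec{k}_i \cdot \vec{k}_l)
$$

If the $\vec{k}_i$ are orthonormal ($\vec{k}_i \cdot \vec{k}_l = 1$ for $i = l$ and 0 otherwise), the double sum collapses to $\sum_{i} w_{ij} w_{iq}$, the numerator of the usual cosine. When $\vec{k}_i \cdot \vec{k}_l \neq 0$, a query term can also contribute through a different but related document term. How the model actually derives the $\vec{k}_i$ from term co-occurrences is not shown here.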

Alternative algebraic models: latent semantic indexing model

- Index by larger units, "concepts": sets of terms used together
- Retrieve a document that shares concepts with a relevant one (even if it does not contain the query terms)
- Group index terms together (map them into a lower-dimensional space), so some terms become equivalent
  - Not exactly, but this is the idea
  - Eliminates unimportant details
  - Depends on a parameter (which details are unimportant?)
- Not investigated well. Why not investigate it?
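A Python sketch of the dimension-reduction idea using a truncated singular value decomposition computed with NumPy's `numpy.linalg.svd`. The toy term-document matrix, the choice k = 2, and the helper names `lsi` and `fold_in` are assumptions for illustration; k is the "which details are unimportant" parameter.

```python
import numpy as np

# Toy term-document matrix (rows: terms, columns: documents); counts are invented.
terms = ["car", "automobile", "engine", "flower", "petal"]
A = np.array([
    [1, 0, 0],  # car
    [0, 1, 0],  # automobile
    [1, 1, 0],  # engine
    [0, 0, 1],  # flower
    [0, 0, 1],  # petal
], dtype=float)

def lsi(A: np.ndarray, k: int):
    """Keep the k largest singular values: A ~ U_k S_k V_k^T."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)  # s is sorted in decreasing order
    return U[:, :k], s[:k], Vt[:k, :].T  # row j of the last array = doc j in concept space

def fold_in(q: np.ndarray, U_k: np.ndarray, s_k: np.ndarray) -> np.ndarray:
    """Map a term-space query into concept space: S_k^{-1} U_k^T q."""
    return (U_k.T @ q) / s_k

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

U_k, s_k, docs_k = lsi(A, k=2)
q_k = fold_in(np.array([1, 0, 0, 0, 0], dtype=float), U_k, s_k)  # query: "car"
scores = [round(cosine(q_k, d), 3) for d in docs_k]
print(scores)
```

The query "car" scores as high on document 1 (automobile, engine) as on document 0, although document 1 never uses the word: with k = 2 the component separating "car" from "automobile" is dropped, so the two terms behave as equivalent. The flower document scores about 0.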

Alternative algebraic models: neural network model

- NNs are good at matching
- Iteratively uses the found documents as auxiliary queries
  - Spreading activation
  - Terms → docs → terms → docs → …
- Like a built-in thesaurus
- First round gives the same result as the vector model
- No evidence on whether it is good
- Not investigated well. Why not investigate it?
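A simplified Python sketch of spreading activation over a term-document network. It is not the full neural network model: the normalization and the `decay` factor damping later rounds are assumptions. In round 1, query terms activate documents and each document's activation equals its cosine with the query; documents then feed activation back to their terms, which act as auxiliary queries in the next round.

```python
import math

def spread(query: dict[str, float], docs: list[dict[str, float]],
           rounds: int = 2, decay: float = 0.5) -> list[float]:
    """Accumulated document activations after `rounds` of term -> doc -> term spreading."""
    q_norm = math.sqrt(sum(w * w for w in query.values()))
    d_norms = [math.sqrt(sum(w * w for w in d.values())) for d in docs]
    term_act = {t: w / q_norm for t, w in query.items()}  # round 1: only query terms active
    doc_act = [0.0] * len(docs)
    for r in range(rounds):
        # terms -> docs (in round 1 this is exactly the cosine with the query)
        new = [sum(term_act.get(t, 0.0) * w for t, w in d.items()) / n
               for d, n in zip(docs, d_norms)]
        doc_act = [a + (decay ** r) * b for a, b in zip(doc_act, new)]
        # docs -> terms: activated documents become auxiliary queries
        term_act = {}
        for d, n, a in zip(docs, d_norms, new):
            for t, w in d.items():
                term_act[t] = term_act.get(t, 0.0) + a * w / n
    return doc_act

docs = [{"bible": 1.0, "church": 1.0}, {"church": 1.0, "choir": 1.0}, {"football": 1.0}]
print([round(x, 3) for x in spread({"bible": 1.0}, docs, rounds=1)])  # [0.707, 0.0, 0.0]
print([round(x, 3) for x in spread({"bible": 1.0}, docs, rounds=2)])  # [1.061, 0.177, 0.0]
```

With two rounds, the "church choir" document receives some activation for the query "bible" through the shared term "church" (the built-in thesaurus effect), while the unrelated document stays at 0.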

Models for browsing

- Flat browsing: string
  - Just like a list on paper
  - No context cues provided
- Structure-guided: tree
  - Hierarchy
  - Like the directory tree on a computer
- Hypertext (Internet!): directed graph
  - No limitations of sequential writing
  - Modeled by a directed graph: links from unit A to unit B
    - Units: docs, chapters, etc.
  - A map (with the traversed path) can be helpful

Research issues

- How do people judge relevance?
  - Ranking strategies
- How to combine different sources of evidence?
- What interfaces can help users understand and formulate their information need?
  - User interfaces: an open issue
- Meta-search engines: combine results from different Web search engines
  - Their results barely intersect
  - How to combine the rankings?

Conclusions

- Modeling is needed for formal operations
- The Boolean model is the simplest
- The vector model is the best combination of quality and simplicity
  - TF-IDF term weighting
  - This (or similar) weighting is used in all further models
- Many interesting and not well-investigated variations
  - Possible future work

Thank you! Till March 22, 6 pm