Special Topics in Computer Science Advanced Topics in

- Slides: 48

Special Topics in Computer Science: Advanced Topics in Information Retrieval

Lecture 11: Natural Language Processing and IR. Semantics and Semantically-Rich Representations

Alexander Gelbukh, www.Gelbukh.com

Previous Lecture: Conclusions

- Syntactic structure is one of the intermediate representations of a text used in its processing
- It helps text understanding
  - and thus reasoning, question answering, ...
- It directly helps POS tagging
  - Resolves part-of-speech lexical ambiguity
  - But not WSD-type ambiguities
- A big science in itself, with 50 (2000?) years of history

Previous Lecture: Research topics

- Faster algorithms
  - E.g., parallel
- Handling linguistic phenomena not handled by current approaches
- Ambiguity resolution!
  - Statistical methods
  - A lot can be done

Contents

- Semantic representations
  - Semantic networks
  - Conceptual graphs
- Simpler representations
  - Head-modifier pairs
- Tasks beyond IR
  - Question answering
  - Summarization
  - Information extraction
  - Cross-language IR

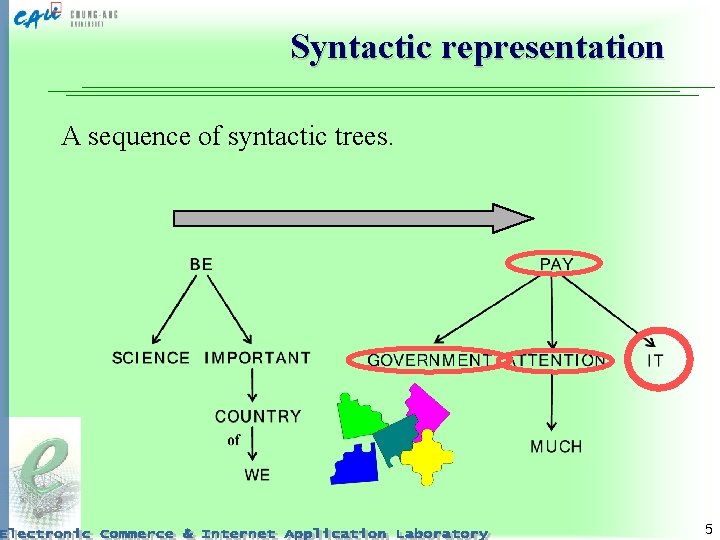

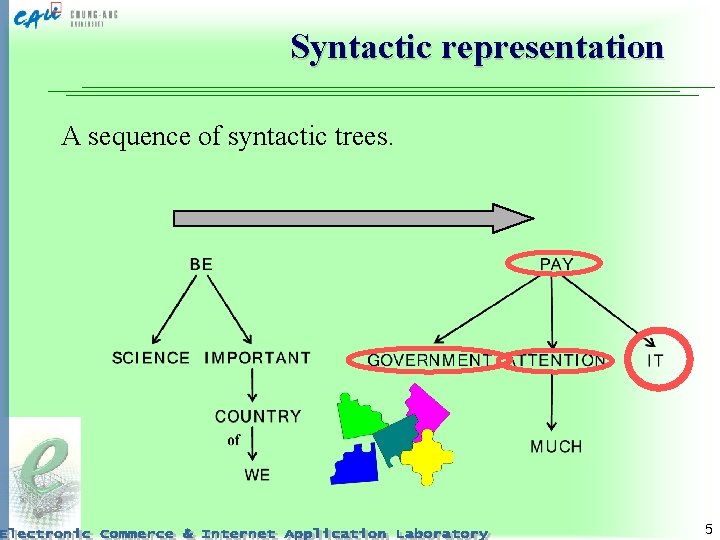

Syntactic representation: a sequence of syntactic trees.

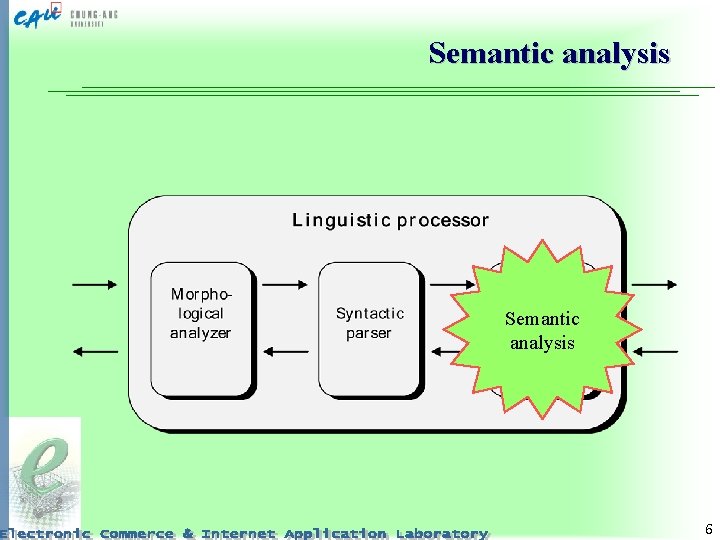

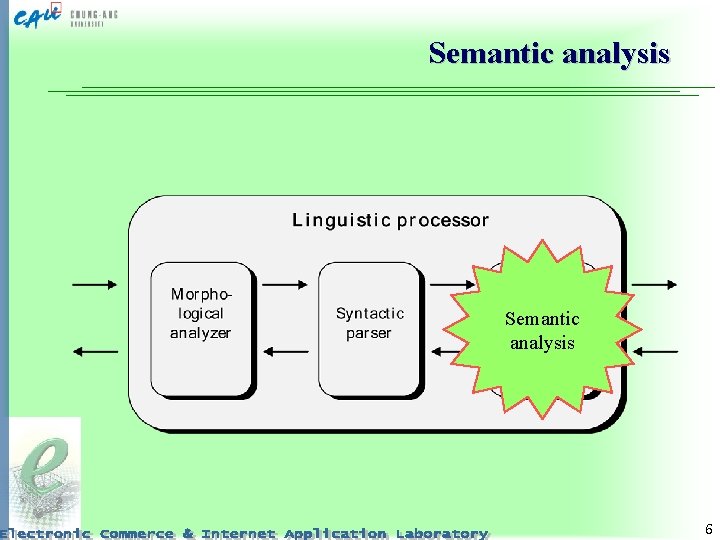

Semantic analysis

Semantic representation: complex structure of the whole text

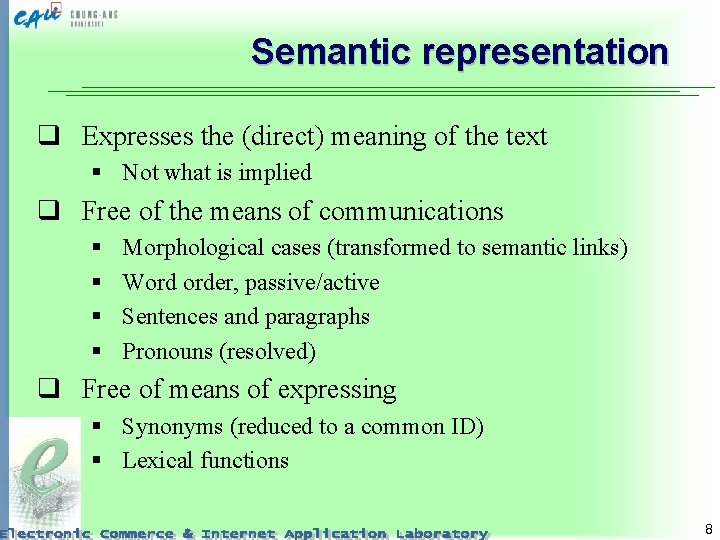

Semantic representation

- Expresses the (direct) meaning of the text
  - Not what is implied
- Free of the means of communication:
  - Morphological cases (transformed into semantic links)
  - Word order, passive/active voice
  - Sentences and paragraphs
  - Pronouns (resolved)
- Free of the means of expression:
  - Synonyms (reduced to a common ID)
  - Lexical functions

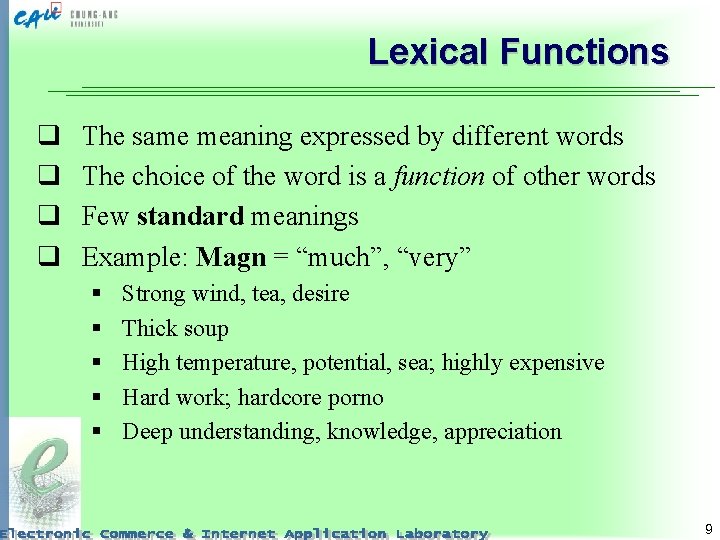

Lexical Functions

- The same meaning is expressed by different words
- The choice of the word is a function of other words
- There are few standard meanings
- Example: Magn = “much”, “very”
  - strong wind, tea, desire
  - thick soup
  - high temperature, potential, sea; highly expensive
  - hard work; hardcore porno
  - deep understanding, knowledge, appreciation

... Lexical Functions

- “give”
  - pay attention
  - provide help
  - adjudge a prize
  - yield the word
  - confer a degree
  - deliver a lecture
- “get”
  - attract attention
  - obtain help
  - receive a degree
  - attend a lecture

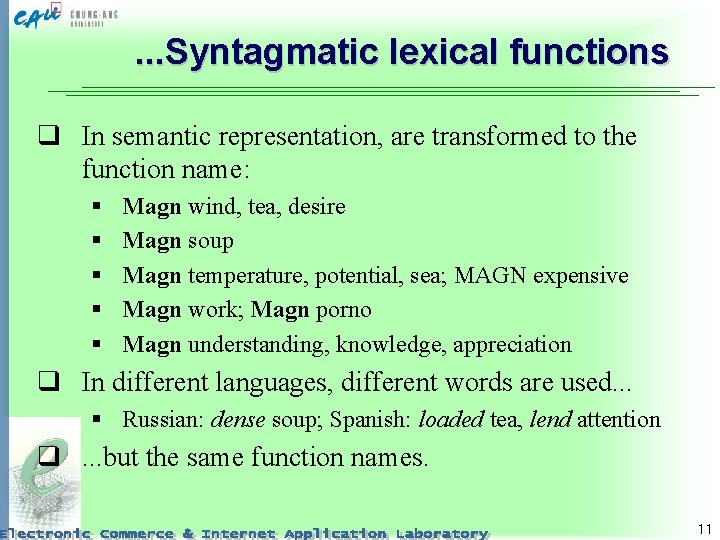

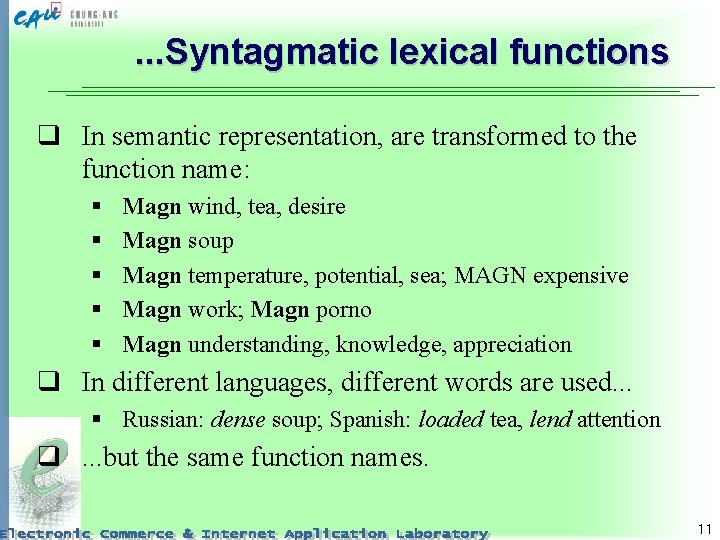

... Syntagmatic lexical functions

- In the semantic representation, they are replaced by the function name:
  - Magn wind, tea, desire
  - Magn soup
  - Magn temperature, potential, sea; MAGN expensive
  - Magn work; Magn porno
  - Magn understanding, knowledge, appreciation
- In different languages, different words are used...
  - Russian: dense soup; Spanish: loaded tea, lend attention
- ... but the same function names.
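Replacing collocates by function names can be sketched as a table lookup. The lexicon below is a hypothetical toy (`MAGN`, `to_semantic`, and `realize` are illustrative names, not part of any real system), and its Russian and Spanish entries are the English glosses used on the slide, not actual Russian or Spanish words.

```python
from typing import Optional, Tuple

# Hypothetical Magn lexicon: keyword -> collocate expressing "much"/"very".
# Non-English entries are English glosses, as on the slide.
MAGN = {
    "en": {"wind": "strong", "tea": "strong", "desire": "strong",
           "soup": "thick", "temperature": "high", "work": "hard",
           "understanding": "deep", "knowledge": "deep"},
    "ru": {"soup": "dense"},
    "es": {"tea": "loaded"},
}

def to_semantic(phrase: str, lang: str = "en") -> Optional[Tuple[str, str]]:
    """Replace 'collocate keyword' by ('Magn', keyword) if the lexicon licenses it."""
    words = phrase.lower().split()
    if len(words) != 2:
        return None
    collocate, keyword = words
    if MAGN.get(lang, {}).get(keyword) == collocate:
        return ("Magn", keyword)
    return None

def realize(sem: Tuple[str, str], lang: str) -> str:
    """Generate the language-specific collocation for ('Magn', keyword)."""
    func, keyword = sem
    if func != "Magn":
        raise ValueError(f"unsupported lexical function: {func}")
    return f"{MAGN[lang][keyword]} {keyword}"
```

For example, `realize(to_semantic("thick soup"), "ru")` gives `"dense soup"`: the English collocate is dropped during analysis, and only the function name and the keyword reach the target language.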

Example: Translation

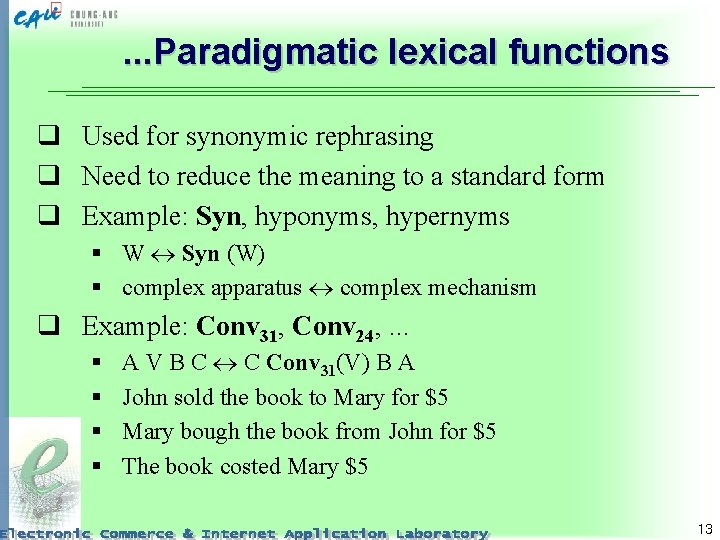

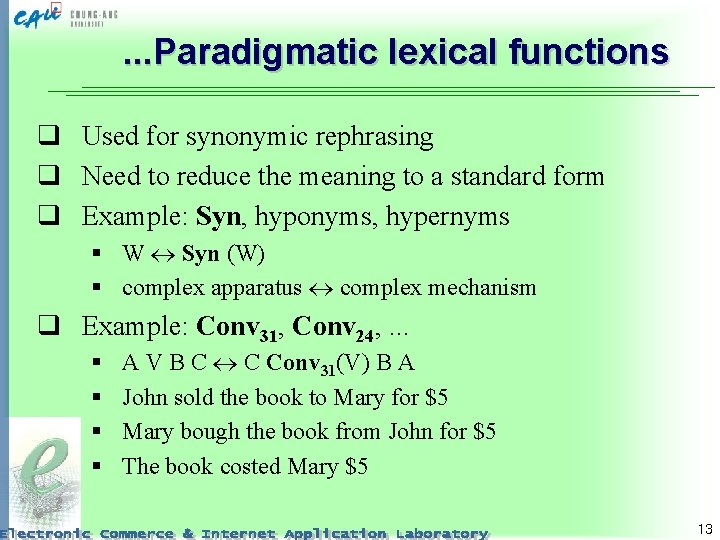

... Paradigmatic lexical functions

- Used for synonymic rephrasing
- Need to reduce the meaning to a standard form
- Example: Syn, hyponyms, hypernyms
  - W → Syn(W)
  - complex apparatus → complex mechanism
- Example: Conv31, Conv24, ...
  - A V B C → C Conv31(V) B A
  - John sold the book to Mary for $5
  - Mary bought the book from John for $5
  - The book cost Mary $5
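Conv31 can be sketched as a permutation of the numbered actants plus a lexical substitution. The `Situation` type and the `CONV` table are hypothetical; a real system would take conversives from a lexicon of lexical functions.

```python
from typing import NamedTuple, Tuple

class Situation(NamedTuple):
    predicate: str
    actants: Tuple[str, ...]  # 1st, 2nd, 3rd, ... semantic actant, in order

# Hypothetical lexicon of conversives: (predicate, "ij") -> Conv_ij(predicate)
CONV = {("sell", "31"): "buy", ("buy", "31"): "sell"}

def conv(s: Situation, ij: str) -> Situation:
    """Apply Conv_ij: swap the i-th and j-th actants and rename the predicate."""
    i, j = int(ij[0]) - 1, int(ij[1]) - 1
    args = list(s.actants)
    args[i], args[j] = args[j], args[i]
    return Situation(CONV[(s.predicate, ij)], tuple(args))

sold = Situation("sell", ("John", "book", "Mary", "$5"))  # John sold the book to Mary for $5
bought = conv(sold, "31")                                 # Mary bought the book from John for $5
```

Applying Conv31 twice returns the original situation, so both sentences can be reduced to one standard form (for instance, always `sell`).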

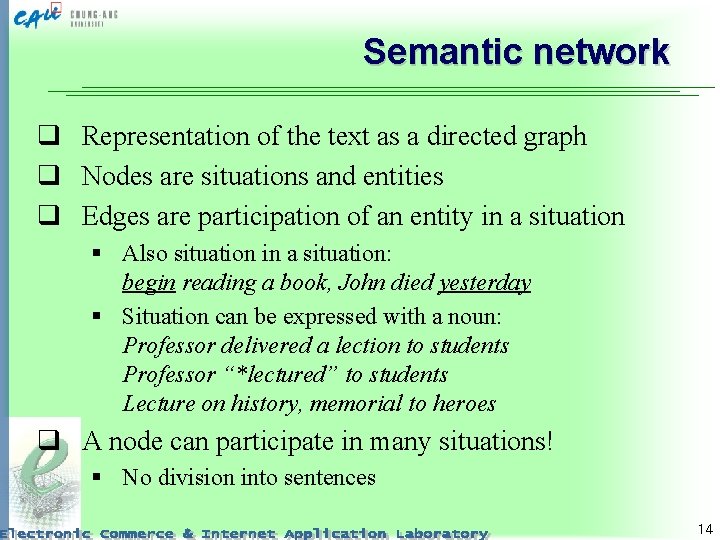

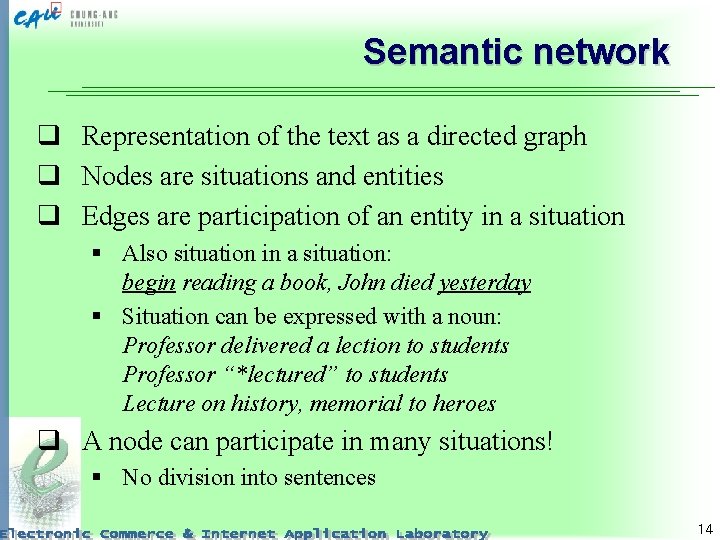

Semantic network

- Represents the text as a directed graph
- Nodes are situations and entities
- Edges represent the participation of an entity in a situation
  - A situation can also participate in another situation: begin reading a book, John died yesterday
  - A situation can be expressed with a noun: Professor delivered a lecture to students; Professor “*lectured” to students; lecture on history, memorial to heroes
- A node can participate in many situations!
  - No division into sentences

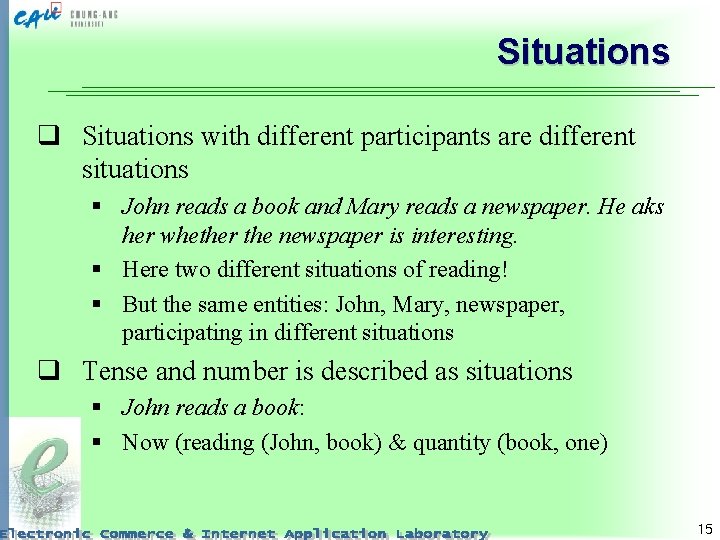

Situations

- Situations with different participants are different situations
  - John reads a book and Mary reads a newspaper. He asks her whether the newspaper is interesting.
  - Here there are two different situations of reading!
  - But the same entities (John, Mary, newspaper) participate in different situations
- Tense and number are described as situations
  - John reads a book:
  - Now(reading(John, book)) & quantity(book, one)

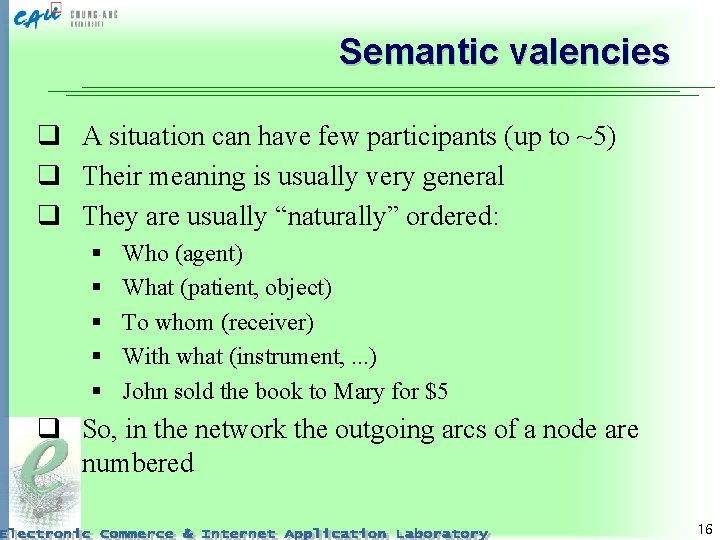

Semantic valencies

- A situation can have a few participants (up to ~5)
- Their meaning is usually very general
- They are usually “naturally” ordered:
  - Who (agent)
  - What (patient, object)
  - To whom (receiver)
  - With what (instrument, ...)
  - John sold the book to Mary for $5
- So, in the network, the outgoing arcs of a node are numbered
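A minimal data structure for such a network, assuming entities are plain string IDs and every situation gets a fresh node ID (`SemanticNetwork`, `situation`, and the `read#1`-style IDs are hypothetical). It encodes the example from the Situations slide: two distinct reading situations, an asking situation that embeds another situation, and tense as a `Now` situation, with each node's outgoing arcs numbered by valency.

```python
from itertools import count
from typing import List, Tuple

class SemanticNetwork:
    """Directed graph: situation nodes point to participants via numbered arcs."""

    def __init__(self) -> None:
        self._ids = count(1)
        self.arcs: List[Tuple[str, int, str]] = []  # (situation, valency number, participant)

    def situation(self, predicate: str, *participants: str) -> str:
        """Create a NEW situation node; arcs are numbered in valency order."""
        node = f"{predicate}#{next(self._ids)}"
        for number, participant in enumerate(participants, start=1):
            self.arcs.append((node, number, participant))
        return node

    def situations_of(self, node: str) -> List[str]:
        """All situations in which a node participates, in creation order."""
        return [s for s, _, p in self.arcs if p == node]

net = SemanticNetwork()
r1 = net.situation("read", "John", "book")
r2 = net.situation("read", "Mary", "newspaper")
q = net.situation("interesting", "newspaper")
asking = net.situation("ask", "John", "Mary", q)  # a situation inside a situation
now = net.situation("Now", r1)                    # tense encoded as a situation
```

The two `read` calls yield two different nodes even though the predicate is the same, while `newspaper` is a single node shared by two situations, matching the point that the network has no division into sentences.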

Semantic representation: complex structure of the whole text (figure: a semantic network with nodes Now, Give, ATTENTION, GOVERNMENT, IMPORTANT, SCIENCE, COUNTRY, Quantity, WE, and Possess, linked by arcs numbered 1 and 2)

Reasoning and common-sense info

- One can reason on the network
  - If John sold a book, he does not have it
- For this, additional knowledge is needed!
- A huge amount of knowledge is needed to reason
  - A 9-year-old child knows some 10,000 simple facts
  - Probably some of them can be inferred, but not (yet) automatically
  - There were attempts to compile such knowledge manually
  - There is a hope to compile it automatically...
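The slide's inference can be sketched as forward chaining over predicate tuples. The single `sell_rule` is a hypothetical, hand-written stand-in for the huge body of common-sense knowledge the slide refers to; `infer` simply applies all rules until no new facts appear.

```python
from typing import Callable, Iterable, Set, Tuple

Fact = Tuple[str, ...]                   # (predicate, arg1, arg2, ...)
Rule = Callable[[Fact], Iterable[Fact]]

def sell_rule(fact: Fact) -> Iterable[Fact]:
    """Hypothetical rule: after sell(A, B, C, ...), C has B and A no longer does."""
    if fact[0] == "sell" and len(fact) >= 4:
        _, seller, thing, buyer = fact[:4]
        yield ("possess", buyer, thing)
        yield ("not_possess", seller, thing)

def infer(facts: Iterable[Fact], rules: Iterable[Rule]) -> Set[Fact]:
    """Forward chaining: apply every rule to every known fact until nothing new appears."""
    known = set(facts)
    rules = list(rules)
    while True:
        new = {g for f in known for r in rules for g in r(f)} - known
        if not new:
            return known
        known |= new
```

Usage: `infer({("sell", "John", "book", "Mary", "$5")}, [sell_rule])` adds `("not_possess", "John", "book")`, which is the slide's "he does not have it".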

Semantic representation... and common-sense knowledge

Computer representation

- Logical predicates
- Arcs are arguments
- In AI, allows reasoning
- In IR, can allow comparison even without reasoning
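A small illustration of the last point: if each text is stored as a set of predicates, two texts can be compared structurally without any inference. The predicates are written by hand here, and Jaccard overlap is an assumed choice of measure, not one prescribed by the lecture.

```python
import re
from typing import Set, Tuple

Predicate = Tuple[str, ...]

def bag_of_words(text: str) -> Set[str]:
    return set(re.findall(r"\w+", text.lower()))

def overlap(a: set, b: set) -> float:
    """Jaccard overlap of two sets (0.0 for two empty sets)."""
    return len(a & b) / len(a | b) if a | b else 0.0

# Predicates written by hand; in practice they come from semantic analysis.
query_text, query_preds = "John loves Mary", {("love", "John", "Mary")}
doc_text, doc_preds = "Mary loves John", {("love", "Mary", "John")}
```

Here the bags of words are identical (overlap 1.0), while the predicate sets do not overlap at all (0.0): only the predicate representation sees that the two texts describe different situations.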

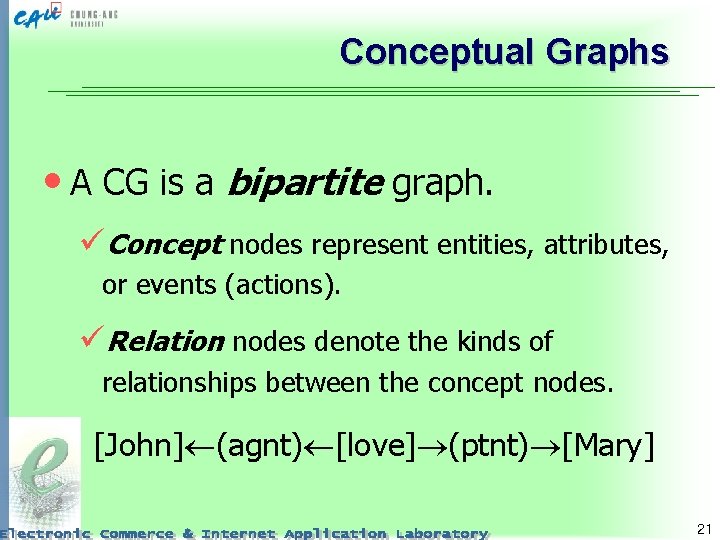

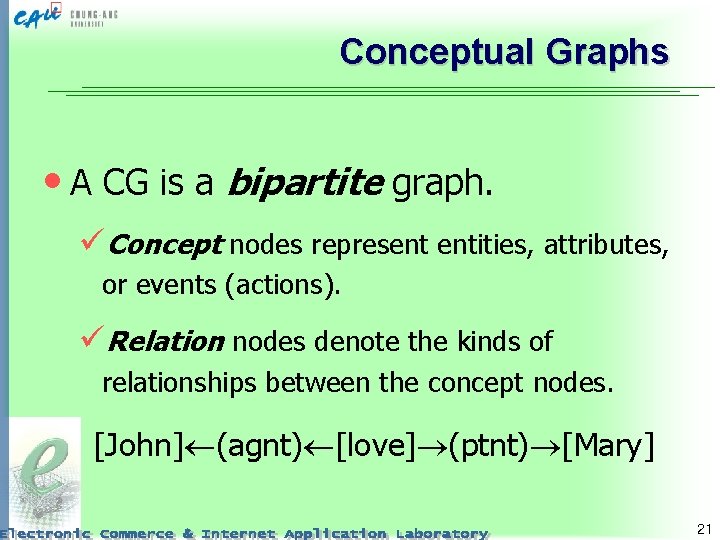

Conceptual Graphs

- A CG is a bipartite graph.
  - Concept nodes represent entities, attributes, or events (actions).
  - Relation nodes denote the kinds of relationships between the concept nodes.
- [John] ← (agnt) ← [love] → (ptnt) → [Mary]
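A minimal representation of a CG, restricted to binary relations: each stored relation tuple stands for one relation node with one incoming and one outgoing arc, so relations can only connect concepts and the graph stays bipartite. The class and method names are hypothetical, not taken from any CG toolkit.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

@dataclass
class ConceptualGraph:
    """Bipartite graph: concept nodes, and relation nodes that link two concepts."""
    concepts: Dict[str, str] = field(default_factory=dict)               # concept id -> label
    relations: List[Tuple[str, str, str]] = field(default_factory=list)  # (type, from id, to id)

    def concept(self, cid: str, label: str) -> str:
        self.concepts[cid] = label
        return cid

    def relate(self, rtype: str, src: str, dst: str) -> None:
        # A relation node may only connect concept nodes (bipartiteness).
        if src not in self.concepts or dst not in self.concepts:
            raise KeyError("relations must link existing concept nodes")
        self.relations.append((rtype, src, dst))

    def triples(self) -> Set[Tuple[str, str, str]]:
        return {(self.concepts[s], r, self.concepts[d]) for r, s, d in self.relations}

g = ConceptualGraph()
love, john, mary = g.concept("c1", "love"), g.concept("c2", "John"), g.concept("c3", "Mary")
g.relate("agnt", love, john)  # [John] <- (agnt) <- [love]
g.relate("ptnt", love, mary)  # [love] -> (ptnt) -> [Mary]
```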

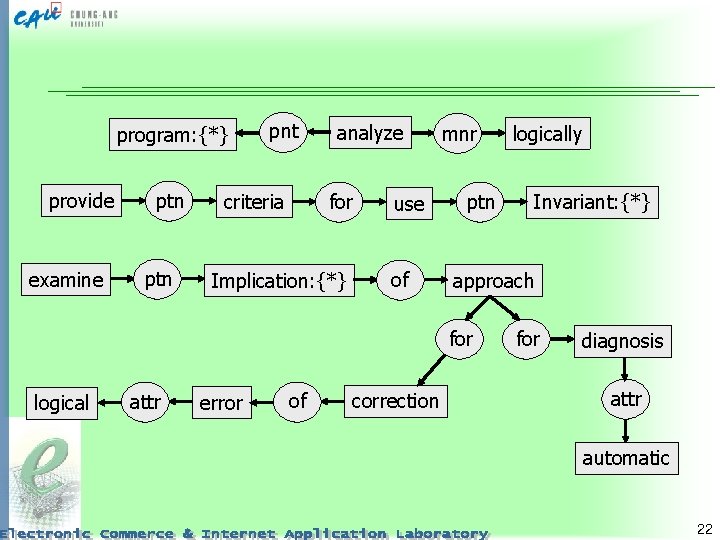

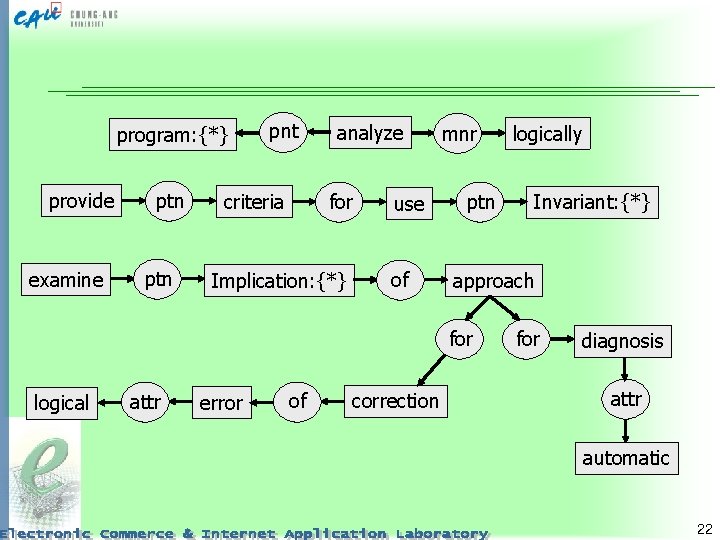

(Figure: example conceptual graphs, with concepts such as program, provide, examine, criteria, analyze, implication, use, error, correction, invariant, approach, diagnosis, automatic, and logically, linked by relations such as ptn, mnr, attr, for, and of.)

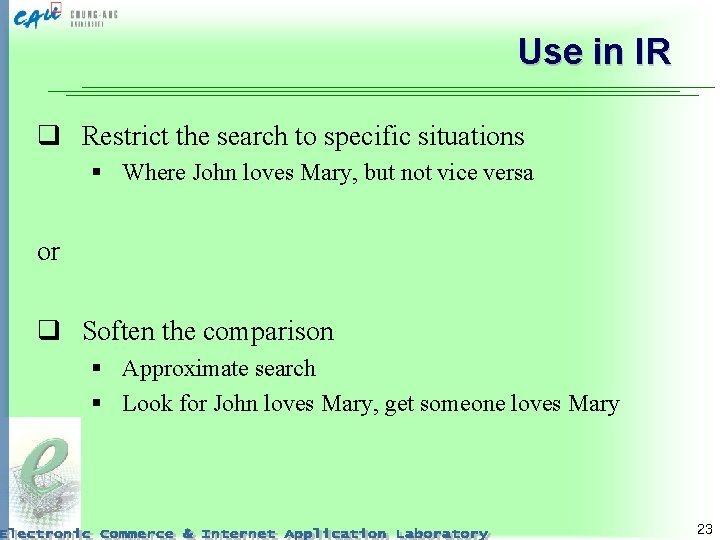

Use in IR

- Restrict the search to specific situations
  - Where John loves Mary, but not vice versa
- Or soften the comparison
  - Approximate search
  - Look for John loves Mary, get someone loves Mary
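A sketch of softened matching, assuming a toy is-a hierarchy (`IS_A`) and a fixed partial score of 0.5 for two different concepts that share an ancestor other than the root. Both are illustrative assumptions, as is representing a situation as a `(predicate, agent, patient)` tuple.

```python
from typing import List, Tuple

# Toy is-a hierarchy (hypothetical); "entity" is the root.
IS_A = {"John": "person", "Peter": "person", "Mary": "person", "person": "entity"}
PARTIAL = 0.5  # assumed score for different concepts with a common non-root ancestor

def ancestors(concept: str) -> List[str]:
    chain = []
    while concept in IS_A:
        concept = IS_A[concept]
        chain.append(concept)
    return chain

def concept_score(a: str, b: str) -> float:
    if a == b:
        return 1.0
    shared = set(ancestors(a)) & set(ancestors(b))
    return PARTIAL if shared - {"entity"} else 0.0

def situation_score(query: Tuple[str, ...], fact: Tuple[str, ...]) -> float:
    """Product of per-position concept scores; predicates must match exactly."""
    if query[0] != fact[0] or len(query) != len(fact):
        return 0.0
    score = 1.0
    for q, f in zip(query[1:], fact[1:]):
        score *= concept_score(q, f)
    return score
```

With a threshold of 1.0 this is the restricted search (Mary loves John scores 0.25 and is rejected); with a lower threshold, Peter loves Mary (0.5) comes back as an approximate match for John loves Mary.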

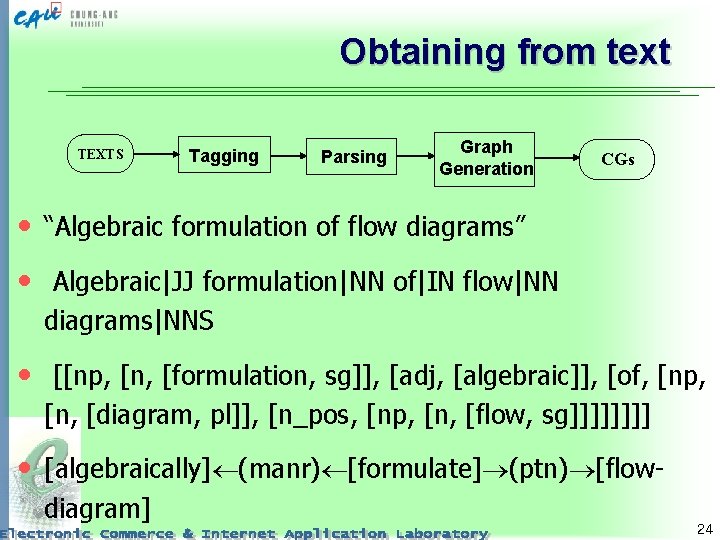

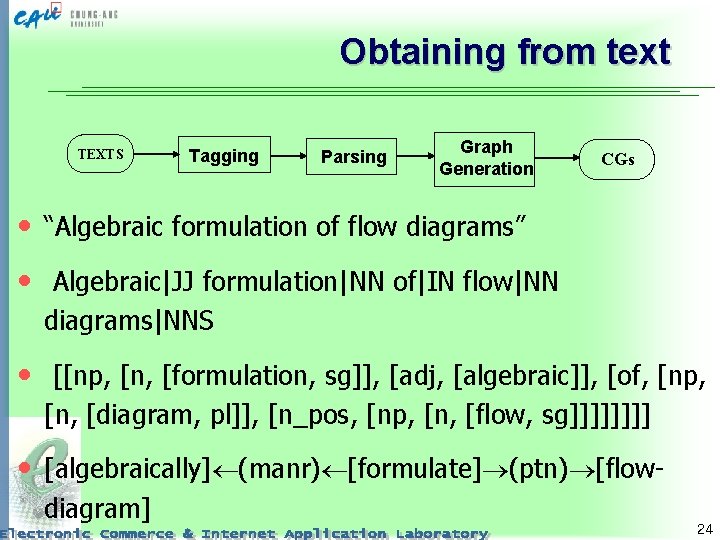

Obtaining from text

TEXTS → Tagging → Parsing → Graph Generation → CGs

- “Algebraic formulation of flow diagrams”
- Algebraic|JJ formulation|NN of|IN flow|NN diagrams|NNS
- [[np, [n, [formulation, sg]], [adj, [algebraic]], [of, [np, [n, [diagram, pl]], [n_pos, [np, [n, [flow, sg]]]]]]]]
- [algebraically] ← (manr) ← [formulate] → (ptn) → [flowdiagram]
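The graph-generation step for this one example can be sketched as transformation rules over a simplified noun phrase (head noun, adjectives, `of` complement) instead of the full parse term. The rules and the tables `NOMINALIZATION` and `ADVERB_OF` are hypothetical: a nominalized head becomes an action, its adjective becomes a manner (`manr`), and its `of` complement becomes the patient (`ptn`).

```python
from typing import Iterable, Optional, Sequence, Set, Tuple

# Hypothetical lexical resources used by the rules below.
NOMINALIZATION = {"formulation": "formulate"}
ADVERB_OF = {"algebraic": "algebraically"}

Triple = Tuple[str, str, str]  # (concept, relation, concept)

def compound(head: str, noun_modifiers: Sequence[str]) -> str:
    """Merge a noun-noun compound into one concept: ('diagram', ['flow']) -> 'flowdiagram'."""
    return "".join(list(noun_modifiers) + [head])

def np_to_cg(head: str, adjectives: Iterable[str] = (),
             of_np: Optional[Tuple[str, Sequence[str]]] = None) -> Set[Triple]:
    verb = NOMINALIZATION.get(head)
    centre = verb or head
    triples: Set[Triple] = set()
    for adj in adjectives:
        if verb:  # adjective on a nominalized verb -> manner adverb
            triples.add((centre, "manr", ADVERB_OF.get(adj, adj)))
        else:     # ordinary noun -> attribute
            triples.add((centre, "attr", adj))
    if of_np is not None:
        triples.add((centre, "ptn" if verb else "of", compound(*of_np)))
    return triples
```

Called as `np_to_cg("formulation", ["algebraic"], ("diagram", ["flow"]))`, it yields the two relations of the slide's final graph.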

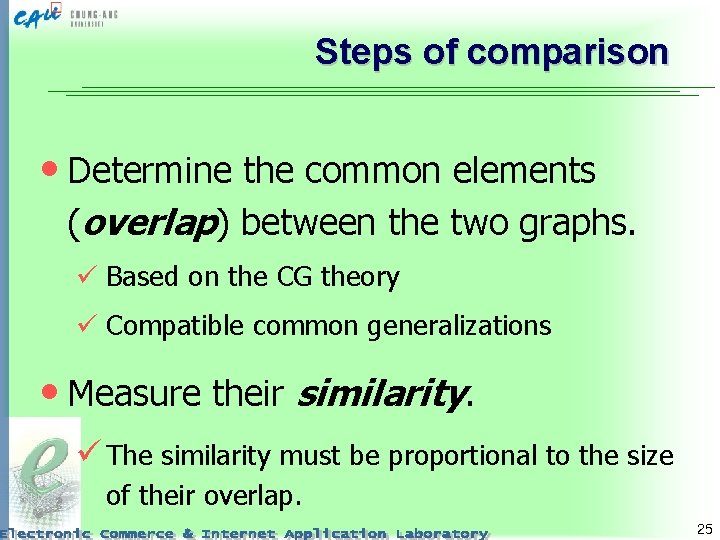

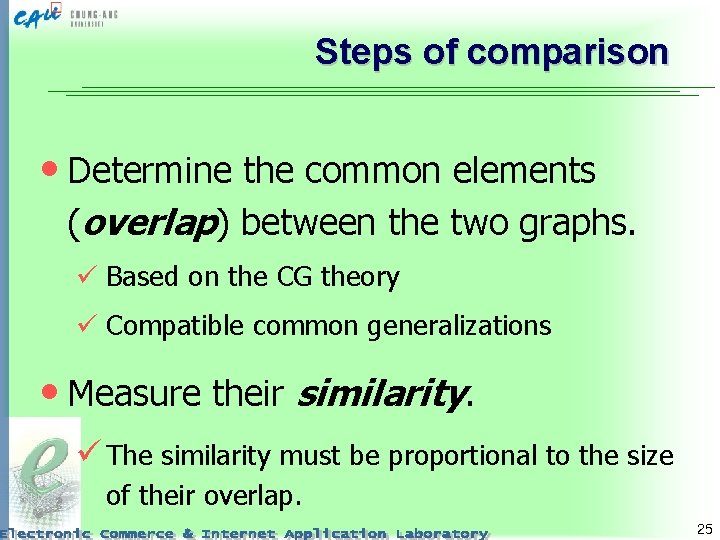

Steps of comparison

- Determine the common elements (overlap) between the two graphs.
  - Based on the CG theory
  - Compatible common generalizations
- Measure their similarity.
  - The similarity must be proportional to the size of their overlap.

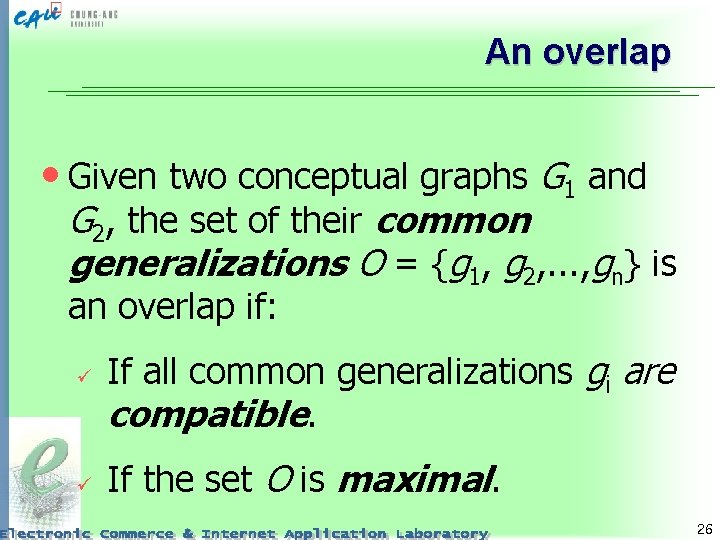

An overlap

- Given two conceptual graphs G1 and G2, the set of their common generalizations O = {g1, g2, ..., gn} is an overlap if:
  - all common generalizations gi are compatible, and
  - the set O is maximal.

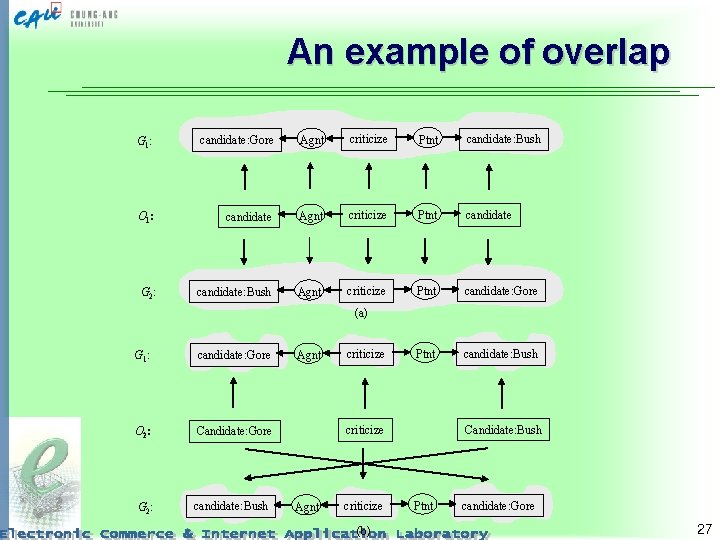

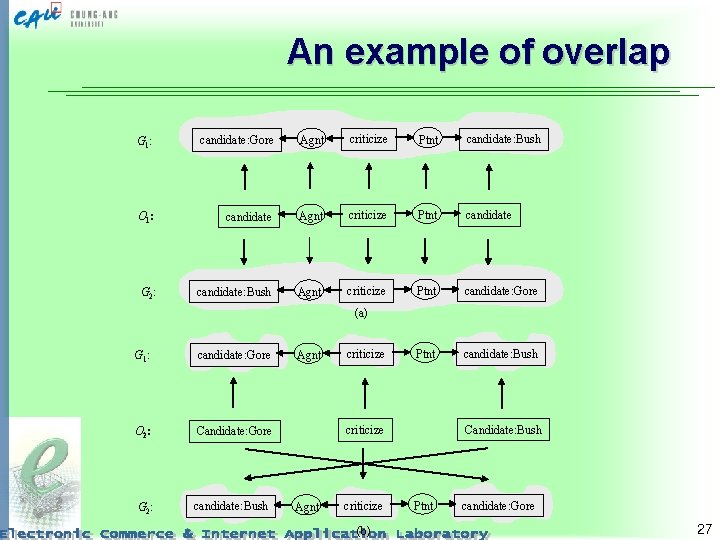

An example of overlap

- G1: [candidate: Gore] ← (agnt) ← [criticize] → (ptnt) → [candidate: Bush]
- G2: [candidate: Bush] ← (agnt) ← [criticize] → (ptnt) → [candidate: Gore]
- (a) Overlap O1: [candidate] ← (agnt) ← [criticize] → (ptnt) → [candidate]
- (b) Overlap O2: built on the specific concepts candidate: Gore and candidate: Bush, together with criticize
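A greedy, edge-by-edge sketch of finding common generalizations. It is a simplification of the definition on the previous slide: it does not enforce compatibility or maximality, and it uses exact type equality instead of a concept hierarchy. All names are hypothetical. On the Gore/Bush example it recovers the structural overlap O1.

```python
from typing import NamedTuple, Optional, Set, Tuple

class Concept(NamedTuple):
    kind: str                       # concept type, e.g. "candidate"
    referent: Optional[str] = None  # e.g. "Gore"; None means "some candidate"

Edge = Tuple[Concept, str, Concept]  # (source concept, relation, target concept)

def generalize(a: Concept, b: Concept) -> Optional[Concept]:
    """Most specific common generalization of two concepts (same type only)."""
    if a.kind != b.kind:
        return None  # a fuller version would climb a type hierarchy here
    return Concept(a.kind, a.referent if a.referent == b.referent else None)

def relational_overlap(g1: Set[Edge], g2: Set[Edge]) -> Set[Edge]:
    """Generalize every pair of edges that carry the same relation."""
    common = set()
    for s1, r1, t1 in g1:
        for s2, r2, t2 in g2:
            if r1 != r2:
                continue
            s, t = generalize(s1, s2), generalize(t1, t2)
            if s is not None and t is not None:
                common.add((s, r1, t))
    return common

criticize = Concept("criticize")
gore, bush = Concept("candidate", "Gore"), Concept("candidate", "Bush")
G1 = {(criticize, "agnt", gore), (criticize, "ptnt", bush)}  # Gore criticizes Bush
G2 = {(criticize, "agnt", bush), (criticize, "ptnt", gore)}  # Bush criticizes Gore
```

The referents Gore and Bush are lost because they differ in each aligned position, which is exactly why O1 contains only `[candidate]`.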

Similarity measure

- Conceptual similarity: indicates the amount of information contained in the common concepts of G1 and G2.
  - Do they mention similar concepts?
- Relational similarity: indicates how similar the contexts of the common concepts in both graphs are.
  - Do they mention similar things about the common concepts?

Conceptual similarity

- Analogous to the Dice coefficient.
- Considers different weights for the different kinds of concepts.
- Considers the level of generalization of the common concepts (of the overlap).

Relational Similarity

- Analogous to the Dice coefficient.
- Considers just the neighbors of the common concepts.
- Considers different weights for the different kinds of conceptual relations.

Similarity Measure

- Combines the conceptual and relational similarities.
- Multiplicative combination: the similarity is roughly proportional to each of the two components.
- Relational similarity has secondary importance: even if no common relations exist, the pieces of knowledge are still similar to some degree.
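The measures described on these slides can be sketched as follows. The exact formulas are assumptions based on the descriptions: conceptual similarity as a weighted Dice coefficient, Sc = 2·w(O) / (w(G1) + w(G2)), where w sums kind-dependent concept weights (We, Wa, Wv for entities, attributes, actions); relational similarity as Sr = 2·m(O) / (m_O(G1) + m_O(G2)), where m_O(Gi) counts only the relations of Gi adjacent to common concepts; and the combination S = Sc·(a + b·Sr) with a + b = 1, so that S stays positive when Sr = 0. The parameter names a and b follow the experiment slides. The generalization-level factor and the relation weights are omitted.

```python
from typing import Dict, Iterable, Tuple

Concept = Tuple[str, str]  # (label, kind), kind in {"entity", "attribute", "action"}

def weight_sum(concepts: Iterable[Concept], weight: Dict[str, float]) -> float:
    return sum(weight[kind] for _, kind in concepts)

def conceptual_similarity(overlap, g1, g2, weight: Dict[str, float]) -> float:
    """Weighted Dice: 2 * w(O) / (w(G1) + w(G2))."""
    total = weight_sum(g1, weight) + weight_sum(g2, weight)
    return 2 * weight_sum(overlap, weight) / total if total else 0.0

def relational_similarity(m_overlap: int, m_g1_near: int, m_g2_near: int) -> float:
    """Dice over relations, counting only relations next to common concepts."""
    total = m_g1_near + m_g2_near
    return 2 * m_overlap / total if total else 0.0

def similarity(sc: float, sr: float, a: float = 0.3, b: float = 0.7) -> float:
    """Multiplicative combination; with a > 0 the result is Sc * a even when Sr == 0."""
    return sc * (a + b * sr)

# Hypothetical case: an overlap that keeps all three concepts but none of the 2 + 2 relations.
WEIGHTS = {"entity": 10, "attribute": 10, "action": 1}  # We = Wa = 10, Wv = 1
concepts = [("Gore", "entity"), ("Bush", "entity"), ("criticize", "action")]
sc = conceptual_similarity(concepts, concepts, concepts, WEIGHTS)
sr = relational_similarity(0, 2, 2)
```

In this case Sc = 1.0 and Sr = 0.0, so the combined score is 0.3: the graphs remain similar to some degree even without any shared relation, as the slide requires.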

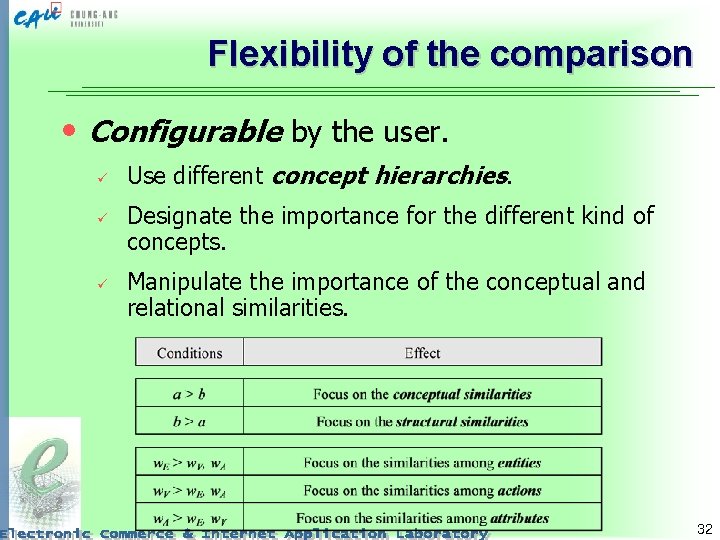

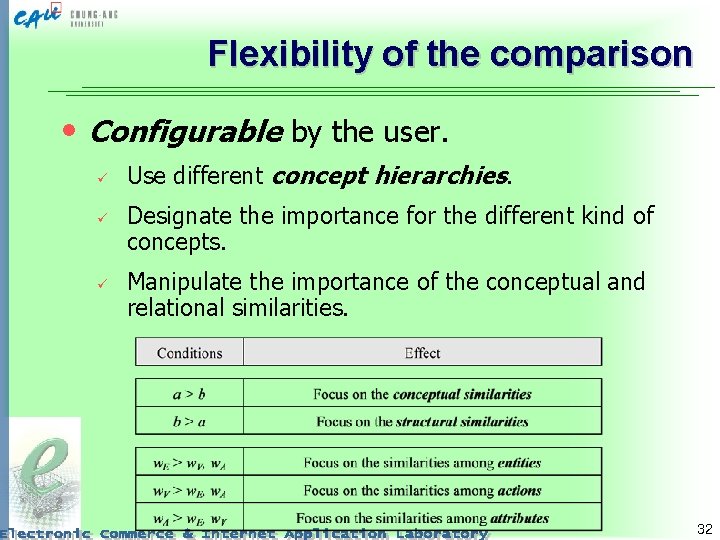

Flexibility of the comparison

- Configurable by the user:
  - Use different concept hierarchies.
  - Designate the importance of the different kinds of concepts.
  - Manipulate the importance of the conceptual and relational similarities.

Example of the flexibility: Gore criticizes Bush vs. Bush criticizes Gore

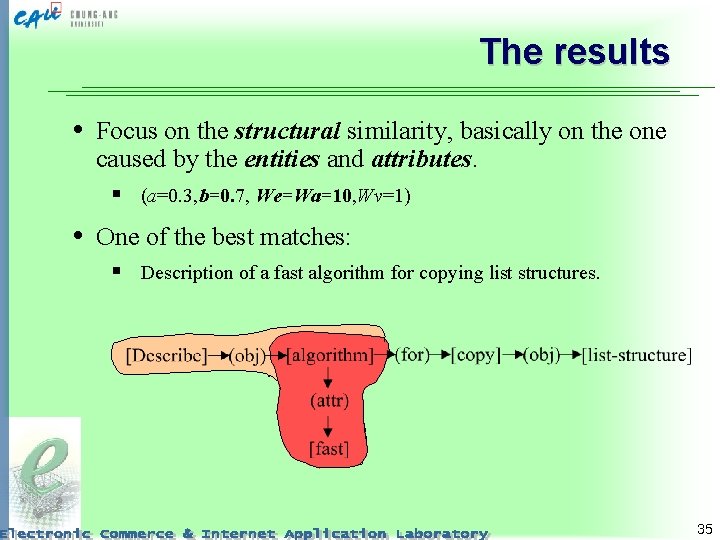

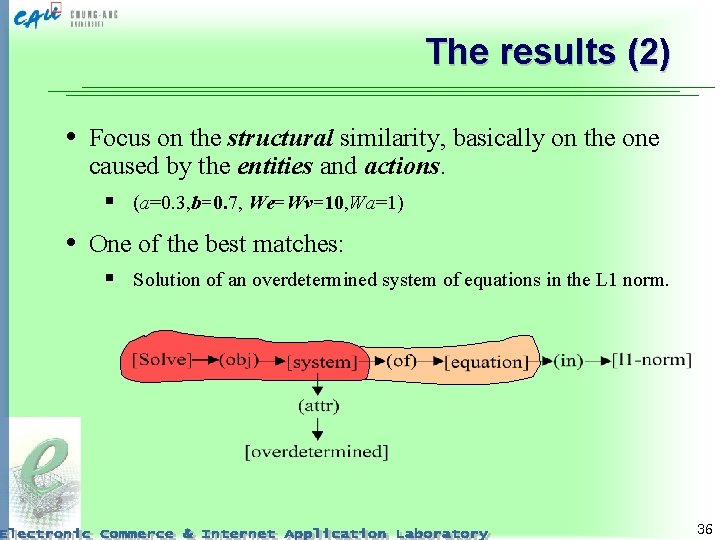

An Experiment

- We used the CACM-3204 collection (computer science articles).
  - We built the conceptual graphs from the document titles.
- Query: Description of a fast procedure for solving a system of linear equations.

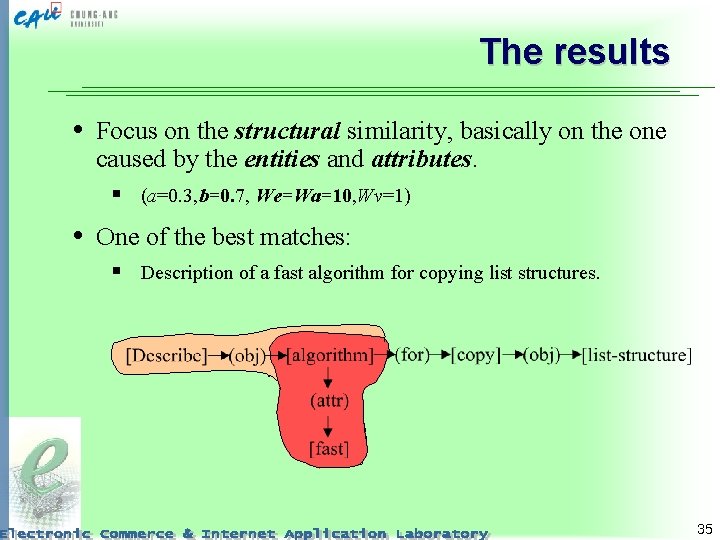

The results

- Focus on the structural similarity, mainly the similarity due to entities and attributes.
  - (a = 0.3, b = 0.7, We = Wa = 10, Wv = 1)
- One of the best matches:
  - Description of a fast algorithm for copying list structures.

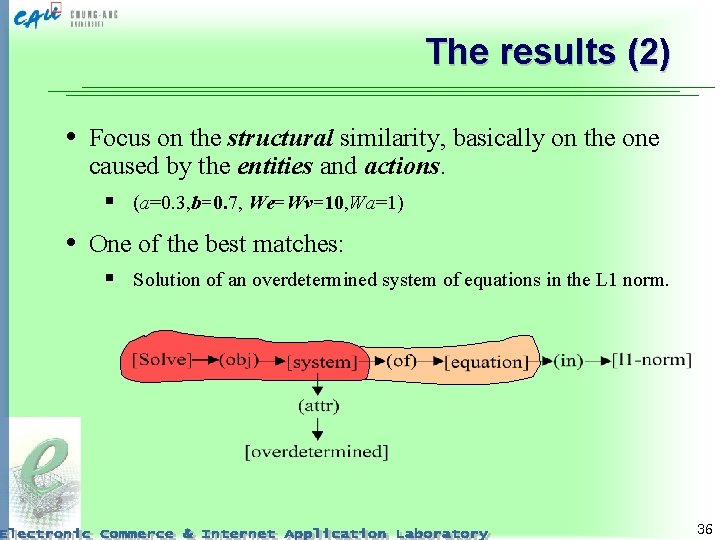

The results (2)

- Focus on the structural similarity, mainly the similarity due to entities and actions.
  - (a = 0.3, b = 0.7, We = Wv = 10, Wa = 1)
- One of the best matches:
  - Solution of an overdetermined system of equations in the L1 norm.

Advantages of CGs

- Well-known strategies for text comparison (Dice coefficient), with new characteristics derived from the CG structure.
- The similarity combines two sources: conceptual similarity and relational similarity.
- Appropriate for comparing small pieces of knowledge (where methods based on topical statistics do not work).
- Two interesting characteristics: it uses domain knowledge and allows direct influence by the user.
  - Analyze the similarity between two CGs from different points of view.
  - Select the best interpretation in accordance with the user's interests.

Simpler representations

- Head-modifier pairs
  - John sold Mary an interesting book for a very low price
  - John sold, sold Mary, sold book, sold for price, interesting book, low price
  - A paper in CICLing-2004
- Restrict the semantic representation to pairs of words only
- Shallow syntax
- Semantics improves this representation
  - Standard form: Mary bought → John sold, etc.
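A sketch of extracting head-modifier pairs from dependency arcs and comparing two pair sets with the Dice coefficient. The arcs are written by hand, and the relation labels (`subj`, `iobj`, `prep:for`, ...) and stop list are our own assumptions; this is not the method of the CICLing-2004 paper.

```python
from typing import Iterable, Set, Tuple

Arc = Tuple[str, str, str]  # (head, relation, modifier)
STOP = frozenset({"a", "an", "the", "very"})

def hm_pairs(arcs: Iterable[Arc]) -> Set[str]:
    """Turn dependency arcs into head-modifier pairs, written in surface order."""
    pairs = set()
    for head, rel, mod in arcs:
        if mod.lower() in STOP:
            continue
        if rel in ("subj", "amod"):    # modifier precedes the head
            pairs.add(f"{mod} {head}")
        elif rel.startswith("prep:"):  # keep the preposition: "sold for price"
            pairs.add(f"{head} {rel[5:]} {mod}")
        else:                          # objects follow the head
            pairs.add(f"{head} {mod}")
    return pairs

def dice(p: Set[str], q: Set[str]) -> float:
    return 2 * len(p & q) / (len(p) + len(q)) if p or q else 0.0

# Hand-written arcs for the slide's sentence (a dependency parser would produce them).
ARCS = [("sold", "subj", "John"), ("sold", "iobj", "Mary"), ("sold", "obj", "book"),
        ("sold", "prep:for", "price"), ("book", "amod", "interesting"),
        ("price", "amod", "low"), ("low", "advmod", "very"), ("book", "det", "an")]
```

`hm_pairs(ARCS)` yields exactly the six pairs listed on the slide; normalizing conversives (Mary bought → John sold) before extraction would let such pairs match across paraphrases.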

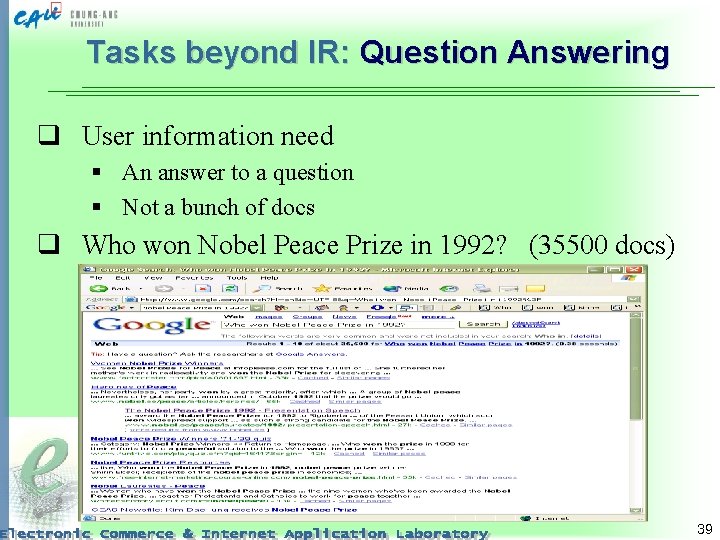

Tasks beyond IR: Question Answering

- User information need
  - An answer to a question
  - Not a bunch of docs
- Who won the Nobel Peace Prize in 1992? (35500 docs)

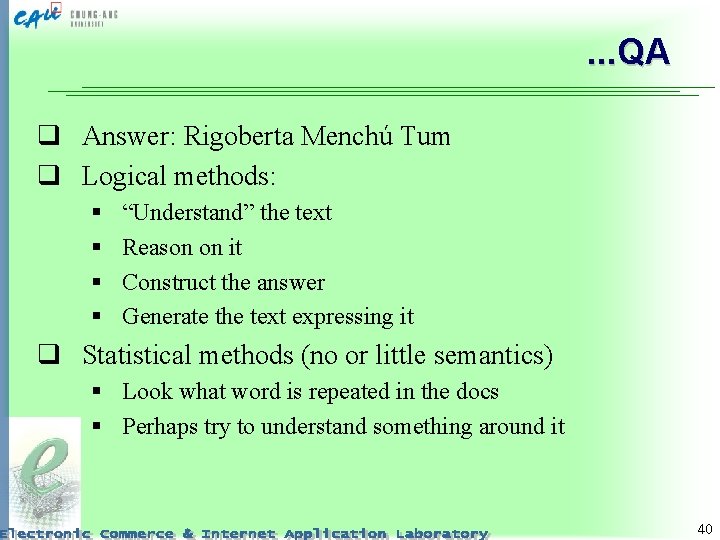

... QA

- Answer: Rigoberta Menchú Tum
- Logical methods:
  - “Understand” the text
  - Reason on it
  - Construct the answer
  - Generate the text expressing it
- Statistical methods (no or little semantics)
  - Look for the word that is repeated across the docs
  - Perhaps try to understand something around it
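The statistical approach can be sketched as redundancy counting: take candidate answers from the retrieved documents and return the one that appears in the most documents. Treating runs of capitalized words as candidates and discarding candidates that share a word with the question are simplifying assumptions; the three documents in the test are toy strings, not real search results.

```python
import re
from collections import Counter
from typing import Iterable, Optional

NAME = re.compile(r"[A-Z]\w+(?:\s+[A-Z]\w+)*")  # runs of capitalized words

def redundancy_answer(question: str, docs: Iterable[str]) -> Optional[str]:
    """Return the capitalized phrase, sharing no word with the question, found in most docs."""
    q_words = set(re.findall(r"\w+", question.lower()))
    counts: Counter = Counter()
    for doc in docs:
        candidates = set(NAME.findall(doc))  # count each candidate once per document
        counts.update(c for c in candidates
                      if not set(c.lower().split()) & q_words)
    return counts.most_common(1)[0][0] if counts else None
```

Sentence-initial words such as “The” or “In” are filtered out here only because they also occur in the question; a real system would need a better candidate filter, which is where “understanding something around it” comes in.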

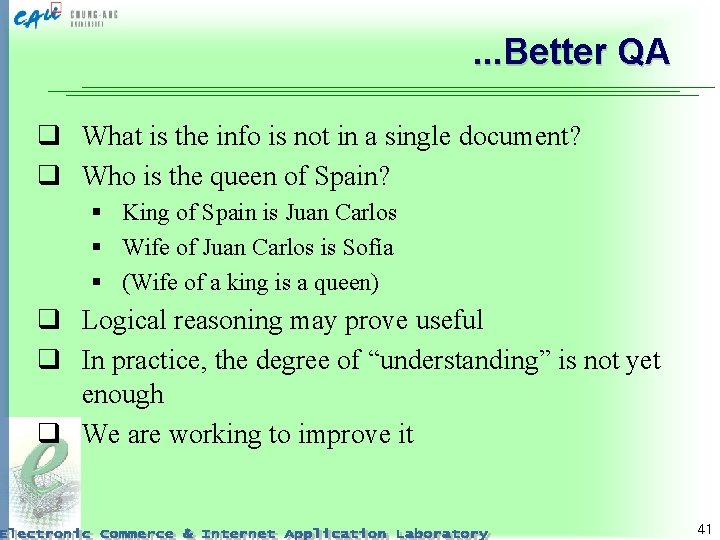

... Better QA

- What if the info is not in a single document?
- Who is the queen of Spain?
  - The King of Spain is Juan Carlos
  - The wife of Juan Carlos is Sofía
  - (The wife of a king is a queen)
- Logical reasoning may prove useful
- In practice, the degree of “understanding” is not yet enough
- We are working to improve it
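The queen-of-Spain example can be sketched as chaining two stored facts through the rule “the wife of a king is a queen”. The facts are those stated on the slide, each of which might come from a different document; the relation names and the `FACTS` table are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Facts as stated on the slide (possibly extracted from different documents).
FACTS: Dict[Tuple[str, str], str] = {
    ("king_of", "Spain"): "Juan Carlos",
    ("wife_of", "Juan Carlos"): "Sofía",
}

def lookup(relation: str, arg: Optional[str]) -> Optional[str]:
    return FACTS.get((relation, arg)) if arg is not None else None

def queen_of(country: str) -> Optional[str]:
    """Use a stored fact if present; otherwise chain: queen = wife_of(king_of(country))."""
    return lookup("queen_of", country) or lookup("wife_of", lookup("king_of", country))
```

No single fact answers the question, but `queen_of("Spain")` returns `"Sofía"` by combining both.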

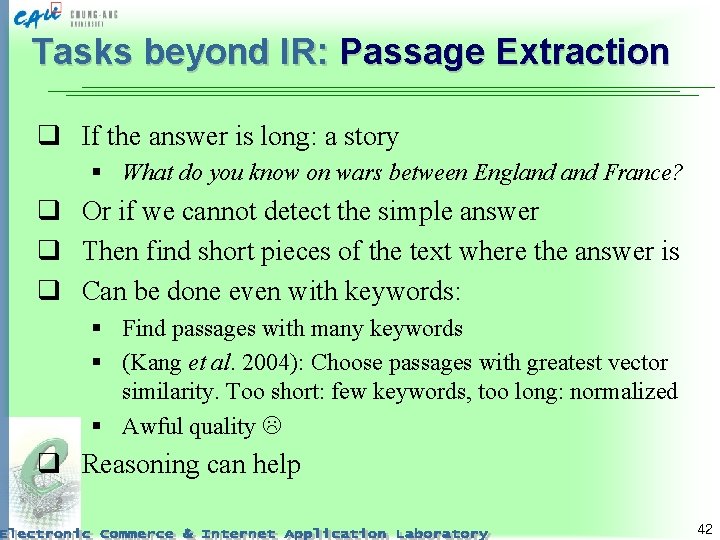

Tasks beyond IR: Passage Extraction

- If the answer is long: a story
  - What do you know about wars between England and France?
- Or if we cannot detect the simple answer
- Then find short pieces of the text where the answer is
- Can be done even with keywords:
  - Find passages with many keywords
  - (Kang et al. 2004): Choose the passages with the greatest vector similarity. Too short: few keywords; too long: penalized by normalization
  - Awful quality
- Reasoning can help
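A generic keyword-based sketch, not the algorithm of Kang et al.: every run of `window` consecutive sentences is scored by the cosine similarity between its raw term-frequency vector and the query's. Cosine normalization is one way to keep long passages from winning simply because they contain more words. The sentence splitter and the window size are assumptions.

```python
import math
import re
from collections import Counter
from typing import Tuple

def tf(text: str) -> Counter:
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def best_passage(query: str, text: str, window: int = 3) -> Tuple[str, float]:
    """Score every run of `window` consecutive sentences; return the best one and its score."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    q = tf(query)
    best, best_score = "", -1.0
    for i in range(max(1, len(sentences) - window + 1)):
        passage = " ".join(sentences[i:i + window])
        score = cosine(q, tf(passage))
        if score > best_score:
            best, best_score = passage, score
    return best, best_score
```

Because it only counts shared words, this scorer cannot tell a passage that answers the question from one that merely repeats its keywords, which is where reasoning could help.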

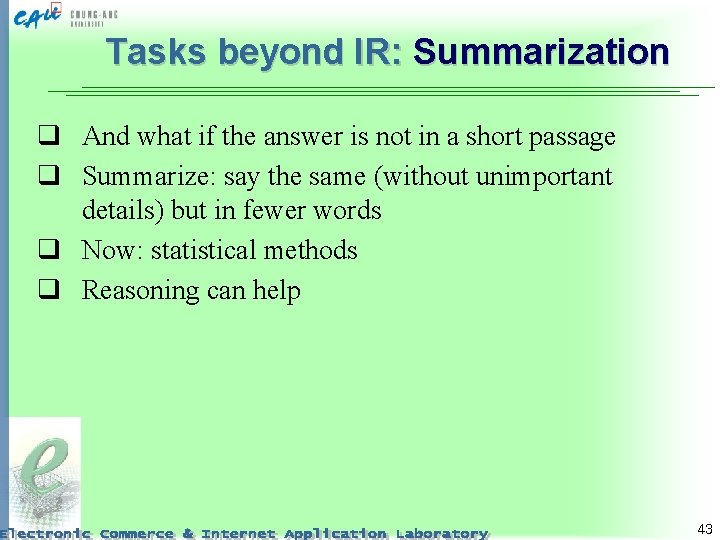

Tasks beyond IR: Summarization

- And what if the answer is not in a short passage?
- Summarize: say the same (without unimportant details) but in fewer words
- Now: statistical methods
- Reasoning can help
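As an example of the current statistical methods, here is a minimal frequency-based extractive summarizer: each sentence is scored by the average document frequency of its content words, and the top `n` sentences are kept in their original order. The stop list and the scoring formula are assumptions chosen for brevity.

```python
import re
from collections import Counter
from typing import List

STOP = frozenset({"a", "an", "and", "in", "of", "the", "is", "are"})

def content_words(sentence: str) -> List[str]:
    return [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOP]

def summarize(text: str, n: int = 1) -> str:
    """Frequency-based extract: keep the n sentences whose content words are most frequent."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(s: str) -> float:
        words = content_words(s)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:n]
    return " ".join(sentences[i] for i in sorted(top))  # keep the original order
```

Such an extract only selects sentences; it does not rephrase them or drop details inside a sentence, which a meaning-based summarizer could do.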

Tasks beyond IR: Information Extraction

- Question answering on a massive basis
- Fill a database with the answers
- Example: what company bought what company, and when?
- A database of three columns
- Now: (statistical) patterns
- Reasoning can help
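The company-acquisition example can be sketched with a single hand-written surface pattern that fills a three-column table in an in-memory SQLite database. The pattern, the table name `acquisition`, and the company names in the test are hypothetical; real systems use many (often learned) patterns.

```python
import re
import sqlite3
from typing import Iterable

NAME = r"[A-Z][\w&]*(?: [A-Z][\w&]*)*"  # a run of capitalized words
PATTERN = re.compile(
    rf"(?P<buyer>{NAME}) (?:bought|acquired) (?P<target>{NAME}) in (?P<year>\d{{4}})"
)

def extract(texts: Iterable[str]) -> sqlite3.Connection:
    """Fill a three-column table (buyer, target, year) from pattern matches."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE acquisition (buyer TEXT, target TEXT, year INTEGER)")
    for text in texts:
        for m in PATTERN.finditer(text):
            con.execute("INSERT INTO acquisition VALUES (?, ?, ?)",
                        (m["buyer"], m["target"], int(m["year"])))
    return con
```

A pattern like this misses paraphrases (“X was taken over by Y”), which is one place where a semantic representation with conversives would help.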

Cross-lingual IR

- Question in one language, answer in another language
- Or: question and summary of the answer in English, over a database in Chinese
- A kind of translation, but simpler
  - Thus it can be done more reliably
  - A transformation into a semantic network can greatly help
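A toy illustration of why a semantic representation helps here: if both the query and the document are mapped to language-independent concept IDs, they can be compared directly without translating either. The word-to-concept lookup in `LEXICON` is a hypothetical stand-in for full semantic analysis.

```python
from typing import Dict, Tuple

# Toy bilingual lexicon mapping words to language-independent concept IDs (hypothetical).
LEXICON: Dict[str, Dict[str, str]] = {
    "en": {"sold": "SELL", "bought": "BUY", "book": "BOOK", "John": "JOHN", "Mary": "MARY"},
    "es": {"vendió": "SELL", "compró": "BUY", "libro": "BOOK", "Juan": "JOHN", "María": "MARY"},
}

def to_concepts(words: Tuple[str, ...], lang: str) -> Tuple[str, ...]:
    """Map a (predicate, actant, ...) tuple of words to concept IDs; KeyError if unknown."""
    return tuple(LEXICON[lang][w] for w in words)

query = ("sold", "John", "book", "Mary")    # John sold the book to Mary
doc = ("vendió", "Juan", "libro", "María")  # Juan vendió el libro a María
```

Both tuples map to `("SELL", "JOHN", "BOOK", "MARY")`, so the match is found in concept space.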

Research topics

- Recognition of the semantic structure
  - Convert text to conceptual graphs
  - All kinds of disambiguation
- Shallow semantic representations
- Application of semantic representations to specific tasks
- Similarity measures on semantic representations
- Reasoning and IR

Conclusions

- Semantic representation gives meaning
- Language-specific constructions used only in the process of communication are removed
- A network of entities / situations and predicates
- Allows for translation and logical reasoning
- Can improve IR:
  - Compare the query with the doc by meaning, not words
  - Search for a specific situation
  - Search for an approximate situation
  - QA, summarization, IE
  - Cross-lingual IR

Thank you!

Till June 15? 6 pm

Thesis presentation? Oral test?