
Special Topics in Computer Science
Advanced Topics in Information Retrieval
Lecture 9: Natural Language Processing and IR. Tagging, WSD, and Anaphora Resolution
Alexander Gelbukh
www.Gelbukh.com

Previous Chapter: Conclusions
- Reducing synonyms can help IR
  - Better matching
  - Ontologies are used: WordNet
- Morphology is a variant of synonymy
  - Widely used in IR systems
- Precise analysis: dictionary-based analyzers
- Quick-and-dirty analysis: stemmers
  - Rule-based stemmers: Porter stemmer
  - Statistical stemmers

Previous Chapter: Research topics
- Construction and application of ontologies
- Building morphological dictionaries
- Treatment of unknown words with morphological analyzers
- Development of better stemmers
  - Statistical stemmers?

Contents
- Tagging: for each word, determine its POS (part of speech: noun, ...) and grammatical characteristics
- WSD (Word Sense Disambiguation): for each word, determine which homonym is used
- Anaphora resolution: for a pronoun (it, ...), determine what it refers to

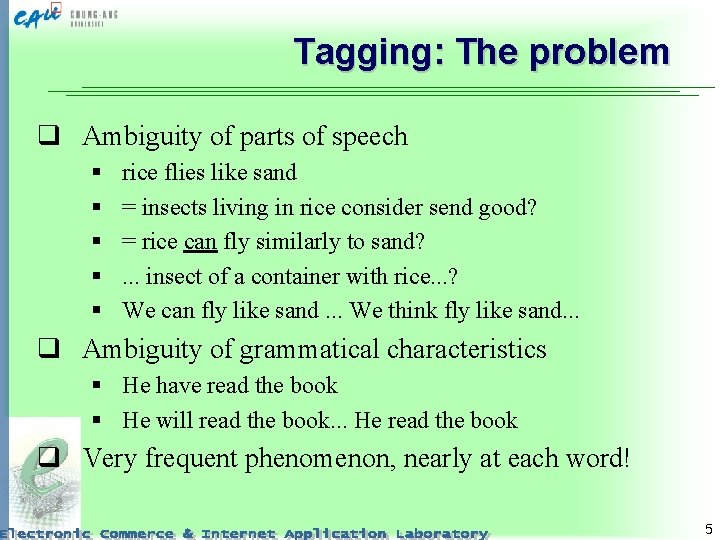

Tagging: The problem
- Ambiguity of parts of speech
  - rice flies like sand
    - = insects living in rice consider sand good?
    - = rice can fly similarly to sand?
    - ... insect of a container with rice...?
  - We can fly like sand... We think fly like sand...
- Ambiguity of grammatical characteristics
  - He has read the book
  - He will read the book
  - ... He read the book
- Very frequent phenomenon, at nearly every word!

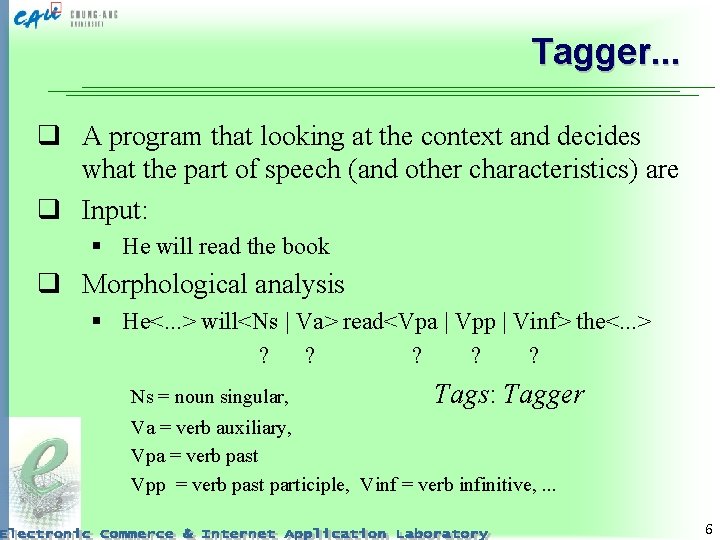

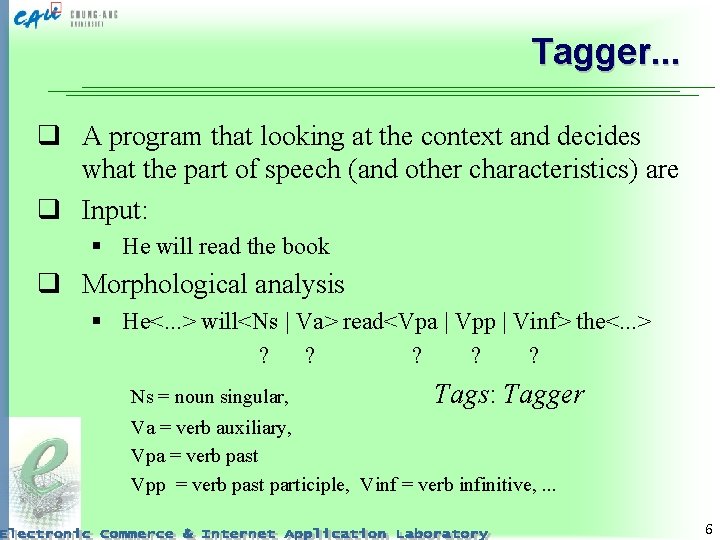

Tagger ...
- A program that looks at the context and decides what the part of speech (and other characteristics) of each word is
- Input:
  - He will read the book
- Morphological analysis:
  - He<...> will<Ns | Va> read<Vpa | Vpp | Vinf> the<...>
- Tagger: ???
- Tags: Ns = noun singular, Va = verb auxiliary, Vpa = verb past, Vpp = verb past participle, Vinf = verb infinitive, ...

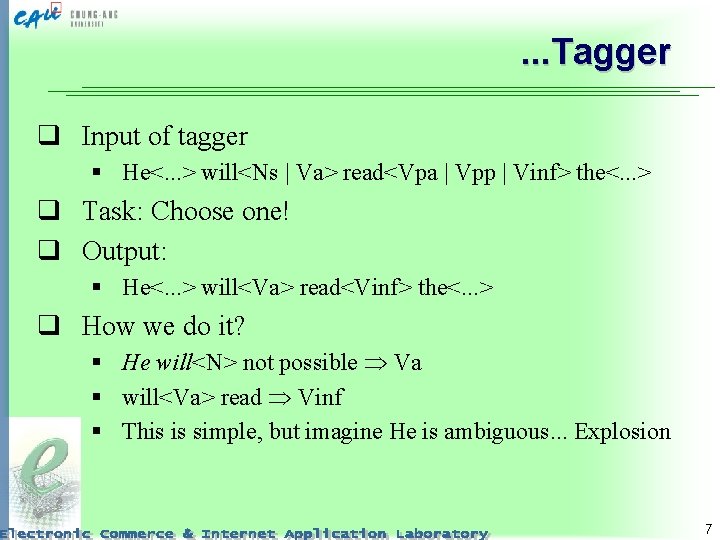

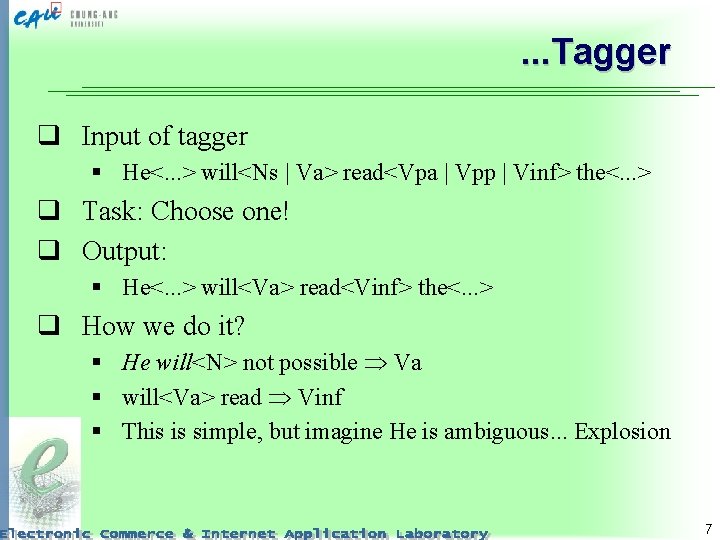

... Tagger
- Input of the tagger:
  - He<...> will<Ns | Va> read<Vpa | Vpp | Vinf> the<...>
- Task: choose one tag for each word!
- Output:
  - He<...> will<Va> read<Vinf> the<...>
- How do we do it?
  - He will<N> is not possible, so will is Va
  - will<Va> read: so read is Vinf
  - This is simple, but imagine that He is ambiguous too... Combinatorial explosion
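
The choice described on this slide can be sketched as a brute-force filter: enumerate every combination of candidate tags and keep those in which all adjacent tag pairs are allowed. This is only an illustration of why the number of combinations explodes, not the tagger used in the lecture. The tags `Pron` and `Det` stand in for the tags the slide elides as `<...>`, and the `allowed_pairs` set is a hypothetical, hand-written constraint list.

```python
from itertools import product

# Candidate tags per word, as produced by morphological analysis.
# "Pron" and "Det" are hypothetical stand-ins for the tags elided on the slide.
candidates = [
    ("He", ["Pron"]),
    ("will", ["Ns", "Va"]),
    ("read", ["Vpa", "Vpp", "Vinf"]),
    ("the", ["Det"]),
]

# Hypothetical set of tag pairs that may stand next to each other.
allowed_pairs = {("Pron", "Va"), ("Va", "Vinf"), ("Vinf", "Det")}


def count_combinations(candidates):
    """Number of tag sequences to check: the product of the ambiguity of each word."""
    total = 1
    for _, tags in candidates:
        total *= len(tags)
    return total


def consistent_taggings(candidates, allowed_pairs):
    """Enumerate all tag sequences and keep those whose adjacent pairs are all allowed."""
    tag_lists = [tags for _, tags in candidates]
    return [
        combo
        for combo in product(*tag_lists)
        if all(pair in allowed_pairs for pair in zip(combo, combo[1:]))
    ]


print(count_combinations(candidates))
print(consistent_taggings(candidates, allowed_pairs))
```

With one more ambiguous word the count is multiplied again, which is why exhaustive enumeration does not scale to real sentences.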

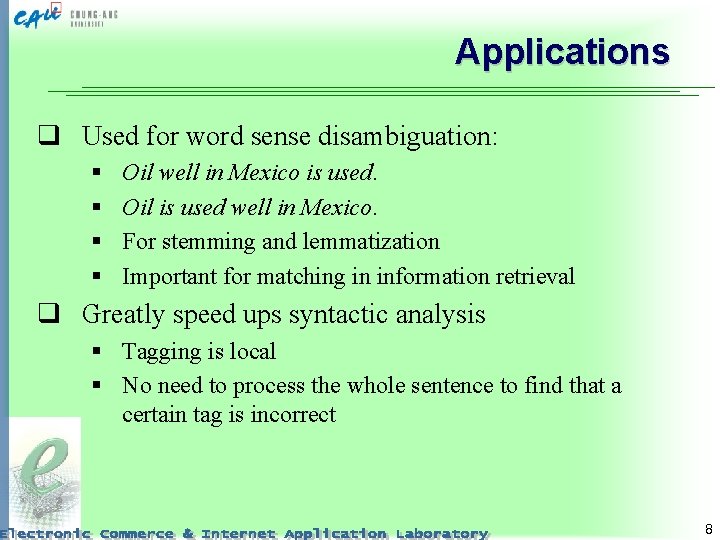

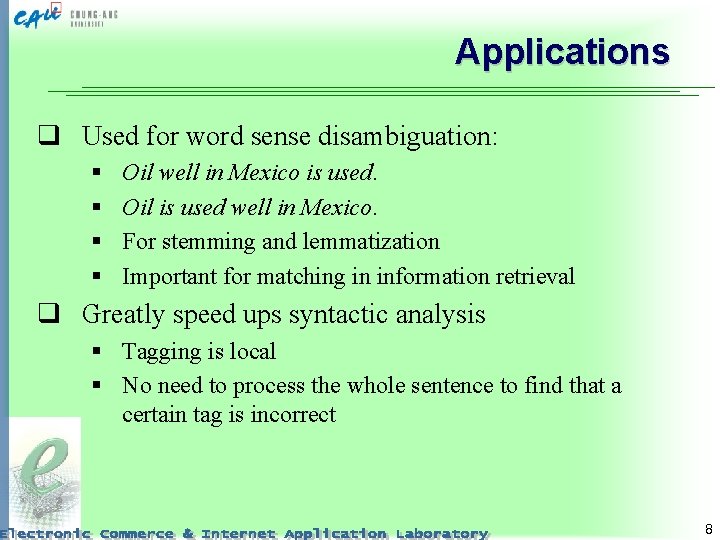

Applications
- Used for word sense disambiguation:
  - Oil well in Mexico is used.
  - Oil is used well in Mexico.
- For stemming and lemmatization
- Important for matching in information retrieval
- Greatly speeds up syntactic analysis
  - Tagging is local
  - No need to process the whole sentence to find that a certain tag is incorrect

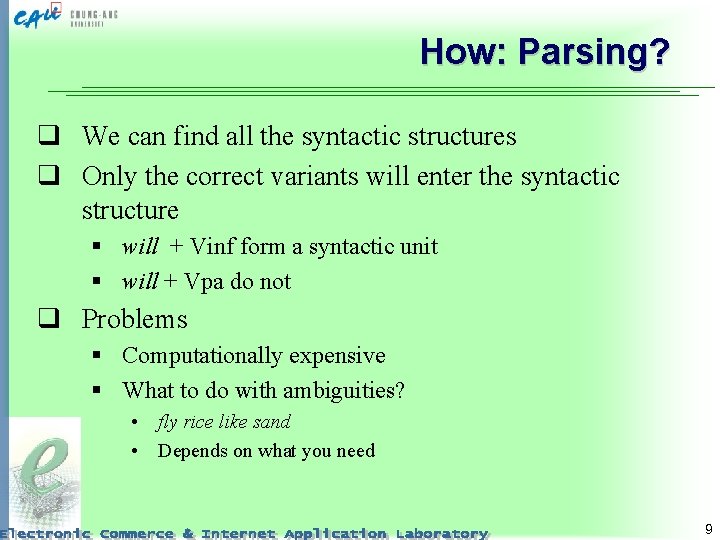

How: Parsing?
- We can find all the syntactic structures
- Only the correct variants will enter the syntactic structure
  - will + Vinf forms a syntactic unit
  - will + Vpa does not
- Problems
  - Computationally expensive
  - What to do with ambiguities?
    - fly rice like sand
    - Depends on what you need

Statistical tagger
- Example: TnT tagger
- Based on a Hidden Markov Model (HMM)
- Idea:
  - Some words are more probable after some other words
  - Find these probabilities
  - Guess the word if you know the nearby ones
- Problem:
  - Letter strings denote meanings
  - "x is more probable after y" holds for meanings, not for strings
  - So we have to guess what we cannot see: the meanings

Hidden Markov Model: Idea
- A system changes its state
  - What a person thinks
  - Random... but not completely (how?)
- In each state, it emits an output
  - What he says when he thinks something
  - Random... but somehow (?) depends on what he thinks
- We know the sequence of produced outputs
  - Text: we can see it!
- Guess what the underlying states were
  - Hidden: we cannot see them

Hidden Markov Model: Hypotheses
- A finite set of states: q_1 ... q_N (invisible)
  - POS and grammatical characteristics (language)
- A finite set of observations: v_1 ... v_M
  - Strings we see in the corpus (language)
- A random sequence of states x_i
  - POS in the text
- Probabilities of state transitions P(x_{i+1} | x_i)
  - Language rules and use
- Probabilities of observations P(v_k | x_i)
  - Words expressing the meanings: Vinf: ask, V3: asks

Hidden Markov Model: Problem
- The same observation can correspond to different meanings
  - Vinf: read, Vpp: read
- Looking at what we can see, guess what we cannot
  - This is why the model is called hidden
- Given a sequence of observations o_i
  - The text: a sequence of letter strings. Training set
- Guess the sequence of states x_i
  - The POS of each word
- Our hypotheses on x_i depend on each other
  - Highly combinatorial task
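
Guessing the most probable state sequence for a given observation sequence is commonly done with the Viterbi algorithm, which avoids enumerating all combinations. The sketch below assumes the model parameters are already known. All probability values, the `Pron` tag, and the extra words in the emission tables are invented for illustration and do not come from the lecture.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most probable sequence of hidden states for the observations."""
    # best[i][s] = (probability of the best path ending in state s at position i,
    #               previous state on that path)
    first = observations[0]
    best = [{s: (start_p.get(s, 0.0) * emit_p[s].get(first, 0.0), None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            column[s] = max(
                ((best[-1][p][0] * trans_p[p].get(s, 0.0) * emit_p[s].get(obs, 0.0), p)
                 for p in states),
                key=lambda item: item[0],
            )
        best.append(column)
    # Backtrack from the most probable final state.
    path = [max(states, key=lambda s: best[-1][s][0])]
    for column in reversed(best[1:]):
        path.append(column[path[-1]][1])
    path.reverse()
    return path


# Hypothetical toy parameters (assumptions, not estimated from any corpus).
states = ["Pron", "Ns", "Va", "Vpa", "Vinf"]
start_p = {"Pron": 0.8, "Ns": 0.2}
trans_p = {
    "Pron": {"Va": 0.6, "Vpa": 0.3, "Ns": 0.1},
    "Ns": {"Vpa": 0.5, "Va": 0.5},
    "Va": {"Vinf": 0.9, "Vpa": 0.1},
    "Vpa": {"Ns": 1.0},
    "Vinf": {"Ns": 1.0},
}
emit_p = {
    "Pron": {"He": 1.0},
    "Ns": {"will": 0.1, "book": 0.9},
    "Va": {"will": 0.5, "can": 0.5},
    "Vpa": {"read": 0.5, "asked": 0.5},
    "Vinf": {"read": 0.5, "ask": 0.5},
}

print(viterbi(["He", "will", "read"], states, start_p, trans_p, emit_p))
```

The work grows linearly with the sentence length and quadratically with the number of states, instead of exponentially with the sentence length.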

Hidden Markov Model: Solutions
- Need to find the parameters of the model:
  - P(x_{i+1} | x_i)
  - P(v_k | x_i)
- In the optimal way: to maximize the probability of generating this specific output
- Optimization methods from Operations Research are used
  - More details? Not so simple...
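
The slide refers to general optimization methods and gives no details. In the simpler special case where a hand-tagged training corpus is available (an assumption, the slide does not say so), the maximum-likelihood parameters are just relative frequencies. The sketch below shows that simpler case only. The tiny corpus and the `Pron` tag are invented for illustration.

```python
from collections import Counter, defaultdict


def estimate_hmm(tagged_sentences):
    """Estimate P(next tag | tag) and P(word | tag) as relative frequencies."""
    trans_counts = defaultdict(Counter)
    emit_counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        tags = [tag for _, tag in sentence]
        for word, tag in sentence:
            emit_counts[tag][word] += 1
        for prev_tag, next_tag in zip(tags, tags[1:]):
            trans_counts[prev_tag][next_tag] += 1

    def normalize(counts):
        # Divide each count by the total for its conditioning tag.
        return {
            key: {item: n / sum(counter.values()) for item, n in counter.items()}
            for key, counter in counts.items()
        }

    return normalize(trans_counts), normalize(emit_counts)


# Hypothetical hand-tagged corpus of two sentences.
corpus = [
    [("He", "Pron"), ("will", "Va"), ("read", "Vinf")],
    [("He", "Pron"), ("read", "Vpa")],
]
trans_p, emit_p = estimate_hmm(corpus)
print(trans_p["Pron"])
print(emit_p["Vinf"])
```

Without a tagged corpus the states are never observed, so simple counting is not possible and iterative optimization is needed instead.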

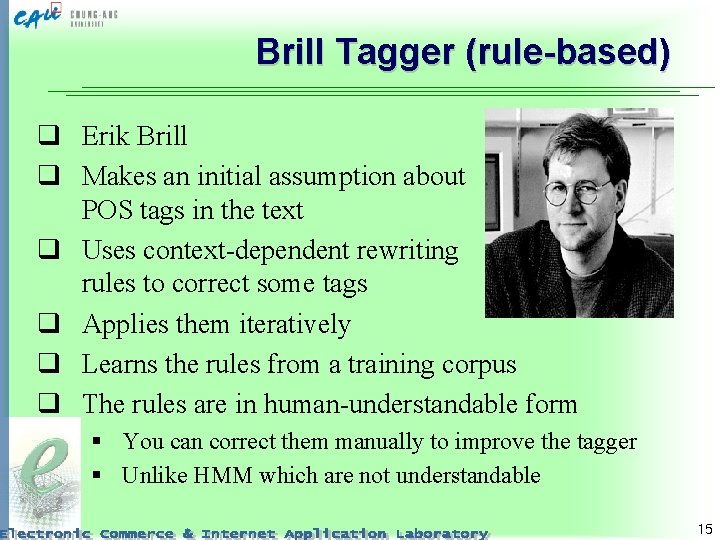

Brill Tagger (rule-based)
- Eric Brill
- Makes an initial assumption about the POS tags in the text
- Uses context-dependent rewriting rules to correct some tags
- Applies them iteratively
- Learns the rules from a training corpus
- The rules are in human-understandable form
  - You can correct them manually to improve the tagger
  - Unlike HMM parameters, which are not human-understandable
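
The two tagging phases named on this slide, an initial guess followed by context-dependent rewriting, can be sketched as follows. The rule is hand-written here, whereas the Brill tagger learns its rules from a training corpus. The rule format `(from_tag, to_tag, previous_tag)`, the lexicon, and the tags `Pron` and `Det` are assumptions made for this illustration.

```python
def initial_tags(words, most_frequent_tag, default="Ns"):
    """Initial assumption: give each word its most frequent tag, or a default."""
    return [most_frequent_tag.get(word, default) for word in words]


def apply_rules(tags, rules):
    """Apply each rule in order: rewrite from_tag to to_tag after previous_tag."""
    tags = list(tags)
    for from_tag, to_tag, previous_tag in rules:
        for i in range(1, len(tags)):
            if tags[i] == from_tag and tags[i - 1] == previous_tag:
                tags[i] = to_tag
    return tags


# Hypothetical lexicon of most frequent tags.
most_frequent_tag = {"He": "Pron", "will": "Va", "read": "Vpa", "the": "Det", "book": "Ns"}

# Hypothetical rule: a past-tense verb right after an auxiliary is really an infinitive.
rules = [("Vpa", "Vinf", "Va")]

words = ["He", "will", "read", "the", "book"]
guess = initial_tags(words, most_frequent_tag)
print(guess)
print(apply_rules(guess, rules))
```

Because each rule is a readable triple, it can be inspected and corrected by hand, which is the advantage the slide points out.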

Word Sense Disambiguation
- Query: international bank in Seoul
- Bank:
  - financial institution
  - river shore
  - place to store something...
- 한:
  - Korean
  - superior
  - 한상용...
- 원:
  - $
  - official...
- Hotel located on the beautiful bank of the Han river.
  - Relevant for the query?
- The POS is the same. A tagger will not distinguish them

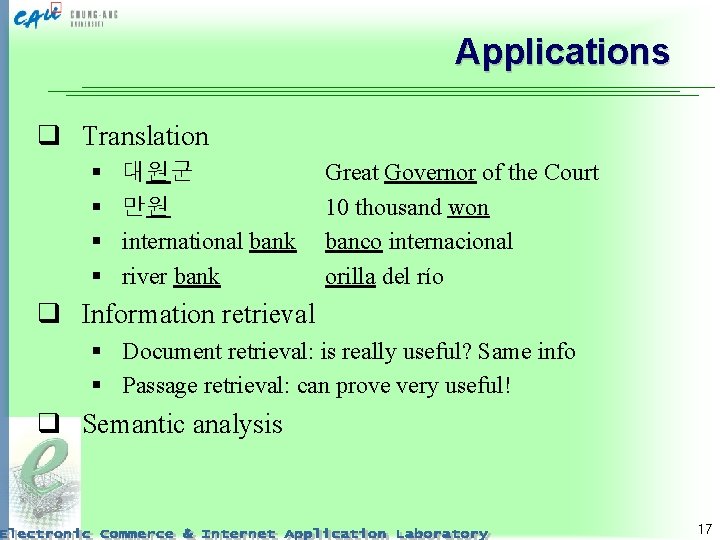

Applications
- Translation
  - international bank: banco internacional
  - river bank: orilla del río
  - 대원군: Great Governor of the Court
  - 만원: 10 thousand won
- Information retrieval
  - Document retrieval: is it really useful? Same info
  - Passage retrieval: can prove very useful!
- Semantic analysis

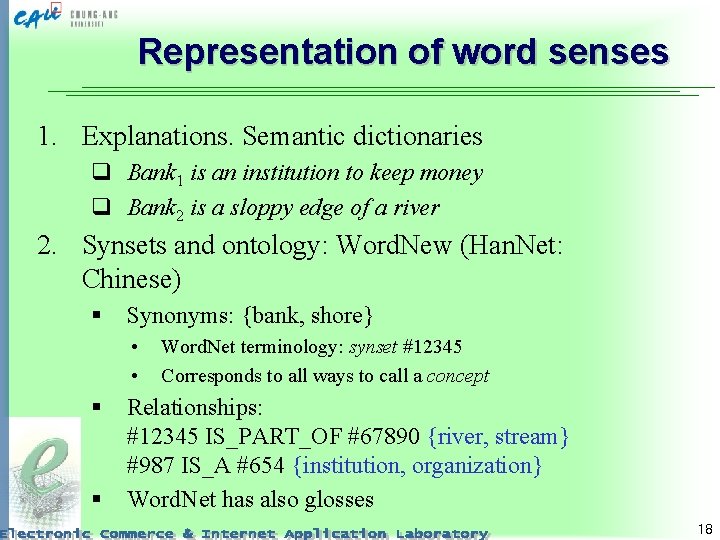

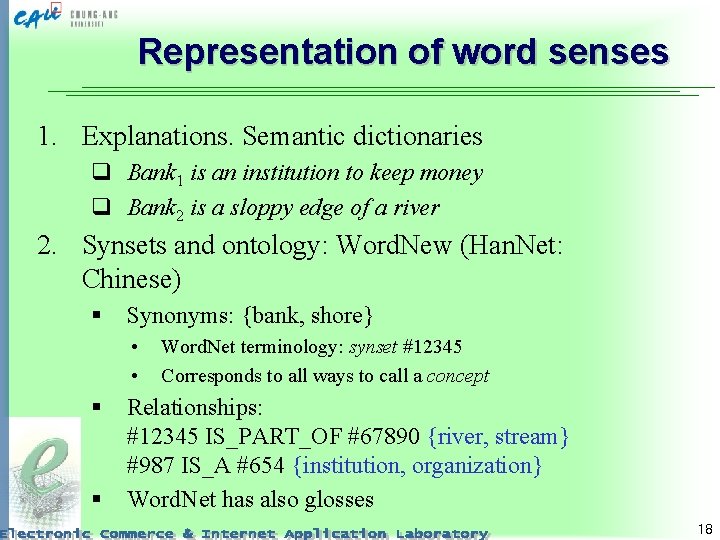

Representation of word senses
1. Explanations. Semantic dictionaries
   - Bank 1 is an institution to keep money
   - Bank 2 is a sloping edge of a river
2. Synsets and ontology: WordNet (HanNet: Chinese)
   - Synonyms: {bank, shore}
     - WordNet terminology: synset #12345
     - Corresponds to all ways to call a concept
   - Relationships: #12345 IS_PART_OF #67890 {river, stream}
   - #987 IS_A #654 {institution, organization}
   - WordNet also has glosses

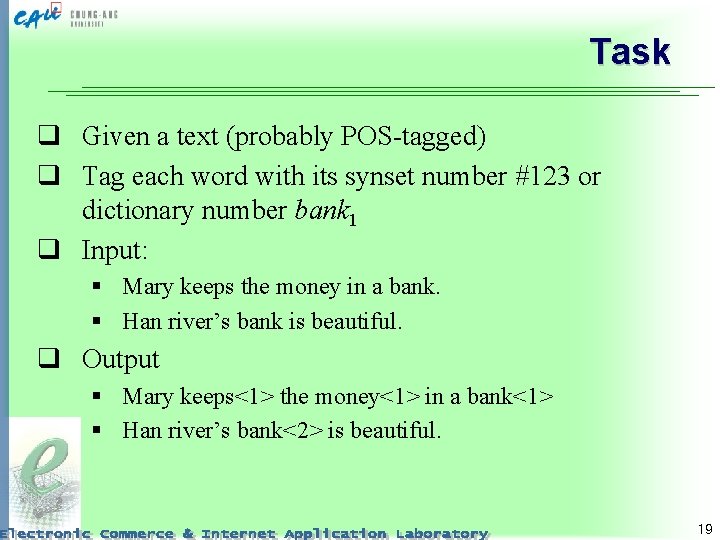

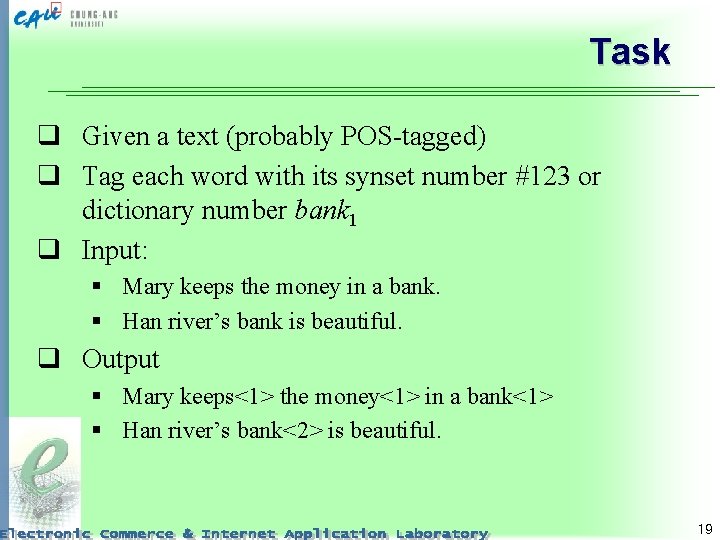

Task
- Given a text (probably POS-tagged)
- Tag each word with its synset number #123 or dictionary number bank 1
- Input:
  - Mary keeps the money in a bank.
  - Han river’s bank is beautiful.
- Output:
  - Mary keeps<1> the money<1> in a bank<1>
  - Han river’s bank<2> is beautiful.

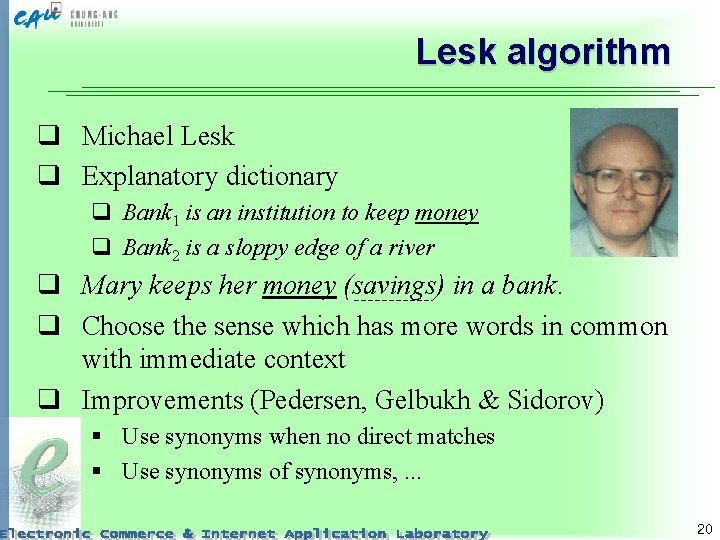

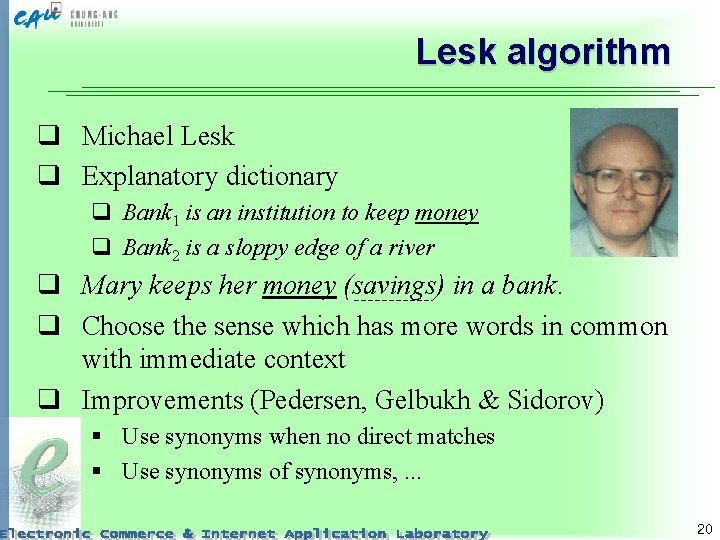

Lesk algorithm
- Michael Lesk
- Uses an explanatory dictionary
  - Bank 1 is an institution to keep money
  - Bank 2 is a sloping edge of a river
- Mary keeps her money (savings) in a bank.
- Choose the sense whose explanation has more words in common with the immediate context
- Improvements (Pedersen, Gelbukh & Sidorov)
  - Use synonyms when there are no direct matches
  - Use synonyms of synonyms, ...
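
The core of the idea, counting the words shared by each explanation and the context, can be sketched in a few lines. This is a simplified variant without the synonym-based improvements listed above. The stopword list and the tokenizer are assumptions made for this example.

```python
import re

# Hypothetical minimal stopword list; a real system would use a larger one.
STOPWORDS = {"a", "an", "the", "is", "to", "of", "in", "her", "his"}


def content_words(text):
    """Lowercase the text, split it into letter strings, drop stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def simplified_lesk(context, glosses):
    """Return the sense whose gloss shares the most words with the context, plus all overlaps."""
    context_words = content_words(context)
    overlaps = {
        sense: len(content_words(gloss) & context_words)
        for sense, gloss in glosses.items()
    }
    return max(overlaps, key=overlaps.get), overlaps


# Glosses follow the explanations given on the slide.
glosses = {
    "bank 1": "an institution to keep money",
    "bank 2": "a sloping edge of a river",
}

print(simplified_lesk("Mary keeps her money in a bank.", glosses))
print(simplified_lesk("Han river's bank is beautiful.", glosses))
```

Note that "keeps" in the context does not match "keep" in the gloss because the sketch does no stemming, so only "money" counts. This is one place where the morphological analysis of the previous chapter would help.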

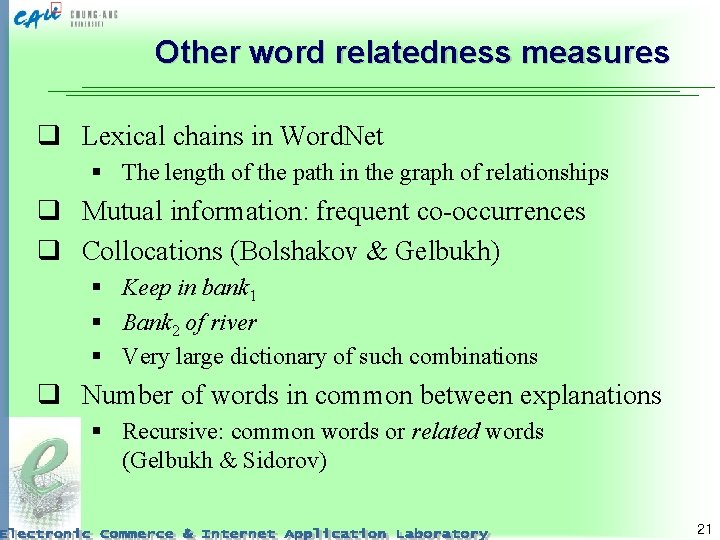

Other word relatedness measures
- Lexical chains in WordNet
  - The length of the path in the graph of relationships
- Mutual information: frequent co-occurrences
- Collocations (Bolshakov & Gelbukh)
  - Keep in bank 1
  - Bank 2 of river
  - Very large dictionary of such combinations
- Number of words in common between explanations
  - Recursive: common words or related words (Gelbukh & Sidorov)
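
The path-length measure can be illustrated with a breadth-first search over a small relationship graph. The graph fragment below is invented for this example and is not taken from WordNet. Treating all relationships as undirected edges of equal weight is also a simplifying assumption.

```python
from collections import deque

# Hypothetical fragment of a graph of relationships between senses and words.
edges = [
    ("bank 2", "shore"), ("shore", "river"), ("river", "stream"),
    ("bank 1", "institution"), ("institution", "organization"), ("bank 1", "money"),
]


def build_graph(edges):
    """Build an undirected adjacency map from a list of edges."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def path_length(graph, start, goal):
    """Length of the shortest path between two nodes, or None if they are not connected."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, distance = queue.popleft()
        for neighbour in graph.get(node, ()):
            if neighbour == goal:
                return distance + 1
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, distance + 1))
    return None


graph = build_graph(edges)
print(path_length(graph, "bank 2", "river"))
print(path_length(graph, "bank 1", "river"))
```

A shorter path means the two items are more closely related, so for a context containing "river" this toy graph favours bank 2.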

Other methods
- Hidden Markov Models
- Logical reasoning

Yarowsky’s Principles
- David Yarowsky
- One sense per text!
- One sense per collocation
  - I keep my money in the bank 1.
  - This is an international bank 1 with a great capital.
  - The bank 2 is located near Han river.
- 3 words vote for ‘institution’, one for ‘shore’
- Institution! The bank 1 is located near Han river.
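
The voting step can be read as a simple majority count: every context word that suggests a sense casts one vote, and the winning sense is then assigned to all occurrences of the word in the text. The sketch below follows that reading. Which context words vote for which sense is an assumption made here, since the slide only gives the totals.

```python
from collections import Counter

# Hypothetical evidence: context words and the sense of "bank" each one suggests.
votes = {
    "keep": "institution",
    "money": "institution",
    "international": "institution",
    "river": "shore",
}


def vote_sense(votes):
    """Return the sense that receives the most votes."""
    return Counter(votes.values()).most_common(1)[0][0]


def one_sense_per_text(occurrences, votes):
    """Assign the winning sense to every occurrence of the word in the text."""
    winner = vote_sense(votes)
    return {occurrence: winner for occurrence in occurrences}


occurrences = [
    "I keep my money in the bank.",
    "This is an international bank with a great capital.",
    "The bank is located near Han river.",
]
print(vote_sense(votes))
print(one_sense_per_text(occurrences, votes))
```

The third sentence alone would suggest the shore sense, but the majority from the whole text overrides it.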

Anaphora resolution
- Mainly pronouns.
- Also co-reference: when do two words refer to the same thing?
- John took cake from the table and ate it.
- John took cake from the table and washed it.
- Translation into Spanish: la ‘she’ for the table / lo ‘he’ for the cake
- Methods:
  - Dictionaries
  - Different sources of evidence
  - Logical reasoning
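
The dictionary-based method can be sketched for the two example sentences: a dictionary lists which nouns are plausible objects of each verb, and the pronoun is resolved to the candidate that the verb accepts. The dictionary contents and function names below are invented for this example.

```python
# Hypothetical dictionary: nouns that are plausible direct objects of each verb.
plausible_objects = {
    "ate": {"cake", "bread"},
    "washed": {"table", "dishes"},
}

# Spanish object pronouns for the two candidates, as given on the slide.
spanish_pronoun = {"cake": "lo", "table": "la"}


def resolve_it(verb, candidates):
    """Return the candidate antecedents that the dictionary accepts as objects of the verb."""
    allowed = plausible_objects.get(verb, set())
    return [candidate for candidate in candidates if candidate in allowed]


for verb in ("ate", "washed"):
    antecedents = resolve_it(verb, ["cake", "table"])
    print(verb, antecedents, [spanish_pronoun[a] for a in antecedents])
```

When the dictionary accepts several candidates or none, other sources of evidence are needed to decide.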

Applications
- Translation
- Information retrieval:
  - Can improve frequency counts (?)
  - Passage retrieval: can be very important

Mitkov’s knowledge-poor method
- Ruslan Mitkov
- Combined rule-based and statistical approach
- Uses simple information on POS and general word classes
- Combines different sources of evidence

Hidden Anaphora
- John bought a house. The kitchen is big.
  - = that house’s kitchen
- John was eating. The food was delicious.
  - = “that eating”’s food
- John was buried. The widow was mad with grief.
  - = “that burying”’s death’s widow
- Intersection of scenarios of the concepts (Gelbukh & Sidorov)
  - house has a kitchen
  - burying results from death & widow results from death
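
The intersection idea can be illustrated with sets: each concept has a scenario, meaning a set of concepts it typically involves, and a definite noun is linked to an earlier concept when their scenarios overlap. The scenario dictionary below is invented for this example, and the sketch does not claim to reproduce the actual method of Gelbukh & Sidorov.

```python
# Hypothetical scenario dictionary: concepts that each concept typically involves.
scenarios = {
    "house": {"house", "kitchen", "roof"},
    "kitchen": {"kitchen"},
    "eating": {"eating", "food"},
    "food": {"food"},
    "burying": {"burying", "death"},
    "widow": {"widow", "death"},
}


def find_hidden_antecedent(noun, previous_concepts):
    """Return the first earlier concept whose scenario intersects the noun's, with the shared part."""
    for concept in previous_concepts:
        shared = scenarios.get(noun, set()) & scenarios.get(concept, set())
        if shared:
            return concept, shared
    return None


print(find_hidden_antecedent("kitchen", ["John", "house"]))
print(find_hidden_antecedent("widow", ["John", "burying"]))
```

In the second call the link goes through the shared concept of death, which appears in neither sentence.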

Evaluation
- Senseval and TREC international competitions
  - Korean track available
- Human-annotated corpus
  - Very expensive
  - Inter-annotator agreement is often low!
  - A program cannot do what humans cannot do
- Apply the program and compare with the corpus
  - Accuracy
- Sometimes the program cannot tag a word
  - Precision, recall
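
When the program may leave some words untagged, precision and recall differ. A common convention is to compute precision over the words the program attempted and recall over all words in the annotated corpus. The sketch below follows that convention. Using `None` to mark an untagged word is an assumption made for this example.

```python
def evaluate(gold, predicted):
    """Return (precision, recall); predicted holds None where the program gave no tag."""
    attempted = [(g, p) for g, p in zip(gold, predicted) if p is not None]
    correct = sum(1 for g, p in attempted if g == p)
    precision = correct / len(attempted) if attempted else 0.0
    recall = correct / len(gold) if gold else 0.0
    return precision, recall


# Hypothetical sense numbers: annotated corpus versus program output.
gold = [1, 2, 1, 1]
predicted = [1, 2, None, 2]
precision, recall = evaluate(gold, predicted)
print(precision, recall)
```

If the program tags every word, the two values coincide and equal the accuracy.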

Research topics
- Too many to list
- New methods
- Lexical resources (dictionaries)
- = Computational linguistics

Conclusions
- Tagging, word sense disambiguation, and anaphora resolution are cases of disambiguation of meaning
- Useful in translation, information retrieval, and text understanding
- Dictionary-based methods
  - Good but expensive
- Statistical methods
  - Cheap and sometimes imperfect... but not always (if very large corpora are available)

Thank you! Till May 31? June 1? 6 pm