Similarity searching in image retrieval and annotation
Petra Budíková

Outline
§ Motivation
§ Image search applications
§ General image retrieval
  § Text-based approach
  § Content-based approach
  § Challenges and open problems
§ Multi-modal image retrieval
  § Comparative study of approaches
  § Our contributions
  § Current results, research directions
§ Automatic image annotation
  § Naive solution
  § Demo
  § Better solution (work in progress)

Motivation
§ Explosion of digital data
  § Size of the world's digital data production:
    § 5 billion GB (2003) vs. 1,800 billion GB (2011)
    § Data is growing by a factor of 10 every five years.
  § Diversity
    § Availability of technologies => multimedia data
§ Images
  § Personal images
    § Flickr: 4 billion (2009), 6 billion (2011)
    § Facebook: 60 billion (2010), 140 billion (2011)
  § Scientific data
  § Surveillance data
  § …

Motivation II
§ Applications of image searching
  § Collection browsing
  § Collection organization
  § Targeted search
  § Data annotation and categorization
  § Authentication
[Figure: example use cases labeled "Summer holiday 2011: 1552 photos", "What is this?", "Get maximum information about this: …", "Checking… OK", "General image collections", "Large-scale searching"; a flower photo with the descriptions "Photo from my trip to highlands", "Cornflower", "Iris in the botanical garden", "Unknown violet flower" and the tags purple • blue • color • plant • beauty • nature • garden • petals • hydrangea • weed]

General image retrieval
§ Basic approaches:
  § Attribute-based searching
  § Text-based searching
  § Content-based searching
§ Attribute-based searching
  § Size, type, category, price, location, …
  § Relational databases
§ Text-based searching
  § Image title, text of the surrounding web page, Flickr tags, …
  § Mature text retrieval technologies
§ Basic assumption: additional metadata are available
  § Human participation mostly needed
  § Not realistic for many applications

General image retrieval II
§ Content-based searching
  § Query by example
  § Similarity measure (distance function)
    § Optimal measure unknown
    § Subjective, context-dependent
    § Should reflect semantics as well as visual features
§ State-of-the-art image representations
  § Reflect low-level visual features
  § Global image descriptors: MPEG-7 color and shape descriptors
  § Local image descriptors: SIFT, SURF
§ Semantic gap problem
  § In general, it is very difficult to extract semantics
  § Possible only in specialized applications, e.g. face search
§ The more sophisticated the image representation, the more costly the evaluation of distances
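Query by example with a distance function can be sketched as a sequential k-nearest-neighbor scan. This is a minimal illustration, not MUFIN or MESSIF code: it assumes each image has already been reduced to a numeric feature vector, and it uses plain Euclidean (L2) distance as a stand-in for descriptor-specific distance functions. The function names are hypothetical.

```python
import heapq
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """L2 distance; an assumed stand-in for a descriptor-specific distance."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_query(query: Vector,
              database: Dict[str, Vector],
              k: int,
              dist: Callable[[Vector, Vector], float] = euclidean,
              ) -> List[Tuple[float, str]]:
    """Return the k objects closest to the query example, nearest first.

    Sequential scan: the distance to every object is evaluated, so the cost
    grows with both the database size and the cost of one dist() call.
    """
    return heapq.nsmallest(k, ((dist(query, vec), oid)
                               for oid, vec in database.items()))


if __name__ == "__main__":
    db = {"img1": [0.1, 0.9], "img2": [0.8, 0.2], "img3": [0.15, 0.85]}
    print(knn_query([0.1, 0.9], db, k=2))
```

Because every query touches every object, a more expensive descriptor directly multiplies the search cost; metric index structures are the usual way to avoid the full scan.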

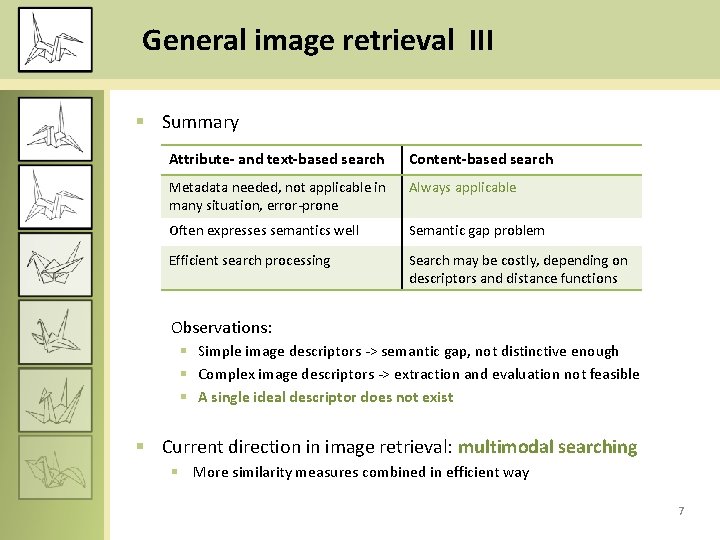

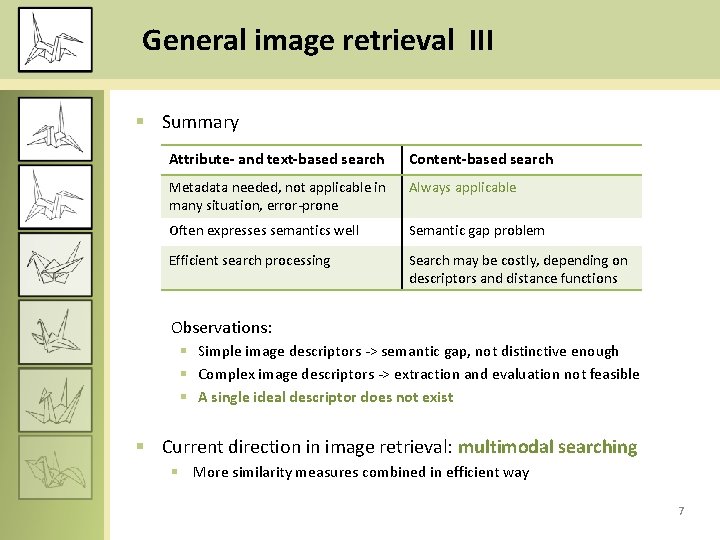

General image retrieval III
§ Summary

| Attribute- and text-based search | Content-based search |
|---|---|
| Metadata needed; not applicable in many situations; error-prone | Always applicable |
| Often expresses semantics well | Semantic gap problem |
| Efficient search processing | Search may be costly, depending on descriptors and distance functions |

Observations:
§ Simple image descriptors -> semantic gap, not distinctive enough
§ Complex image descriptors -> extraction and evaluation not feasible
§ A single ideal descriptor does not exist
§ Current direction in image retrieval: multi-modal searching
  § Several similarity measures combined in an efficient way

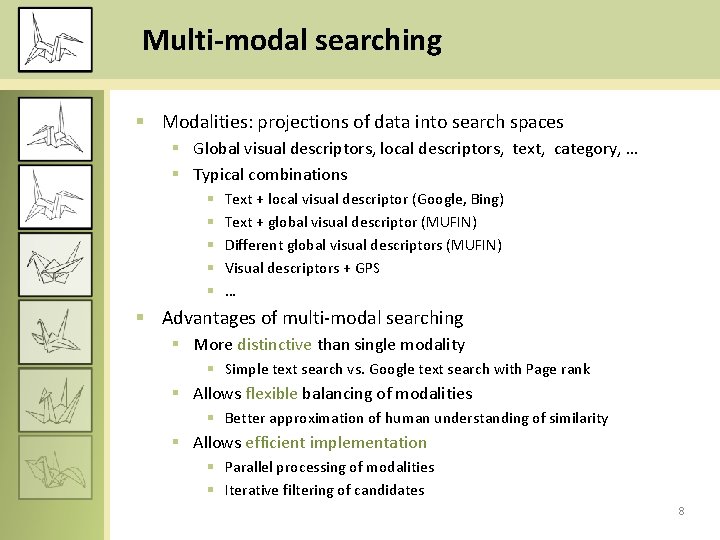

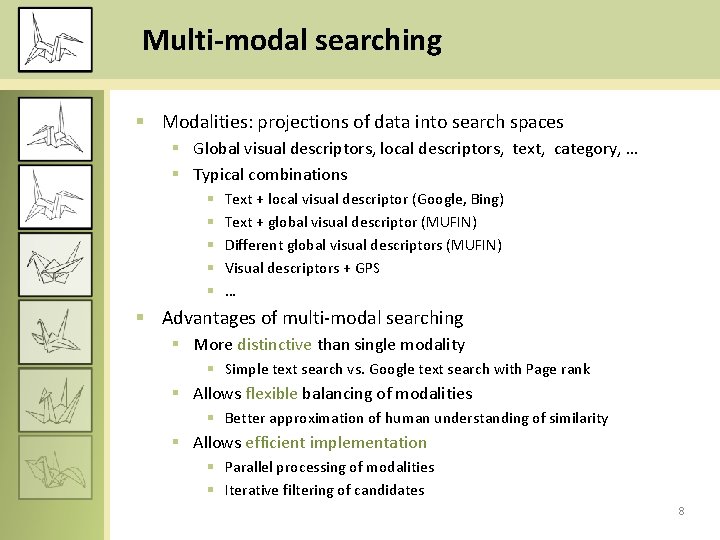

Multi-modal searching
§ Modalities: projections of data into search spaces
  § Global visual descriptors, local descriptors, text, category, …
§ Typical combinations
  § Text + local visual descriptor (Google, Bing)
  § Text + global visual descriptor (MUFIN)
  § Different global visual descriptors (MUFIN)
  § Visual descriptors + GPS
  § …
§ Advantages of multi-modal searching
  § More distinctive than a single modality
    § Simple text search vs. Google text search with PageRank
  § Allows flexible balancing of modalities
    § Better approximation of human understanding of similarity
  § Allows efficient implementation
    § Parallel processing of modalities
    § Iterative filtering of candidates
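Flexible balancing of modalities can be illustrated with a weighted sum of normalized per-modality distances. The sketch below rests on assumptions made for the example only: the modality names, the weights, L1 distance on visual vectors and Jaccard distance on keyword sets are illustrative choices, not MUFIN's actual configuration.

```python
from typing import Any, Callable, Dict, List, Sequence, Set, Tuple

Distance = Callable[[Any, Any], float]
# modality name -> (distance function, weight, maximum possible distance)
Modalities = Dict[str, Tuple[Distance, float, float]]


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """L1 distance between two visual feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def jaccard_distance(a: Set[str], b: Set[str]) -> float:
    """1 - |A ∩ B| / |A ∪ B| between two keyword sets."""
    union = a | b
    return 1.0 - len(a & b) / len(union) if union else 0.0


def combined_distance(query: Dict[str, Any], obj: Dict[str, Any],
                      modalities: Modalities) -> float:
    """Weighted sum of per-modality distances, each scaled to [0, 1].

    Dividing by the maximum distance keeps a modality with a large raw
    range from dominating; the weights then decide the balance.
    """
    return sum(weight * dist(query[name], obj[name]) / max_dist
               for name, (dist, weight, max_dist) in modalities.items())


def rank(query: Dict[str, Any], database: Dict[str, Dict[str, Any]],
         modalities: Modalities, k: int) -> List[Tuple[float, str]]:
    """Sequentially rank the whole database by the combined distance."""
    scored = sorted((combined_distance(query, obj, modalities), oid)
                    for oid, obj in database.items())
    return scored[:k]


if __name__ == "__main__":
    modalities = {
        "visual": (l1, 0.5, 2.0),  # L1 between normalized histograms is at most 2
        "text": (jaccard_distance, 0.5, 1.0),
    }
    q = {"visual": [0.7, 0.3], "text": {"flower", "violet"}}
    o = {"visual": [0.6, 0.4], "text": {"flower", "garden"}}
    print(combined_distance(q, o, modalities))
```

Shifting weight from `"text"` to `"visual"` changes the ranking without touching the distance functions, which is the kind of balancing the slide refers to.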

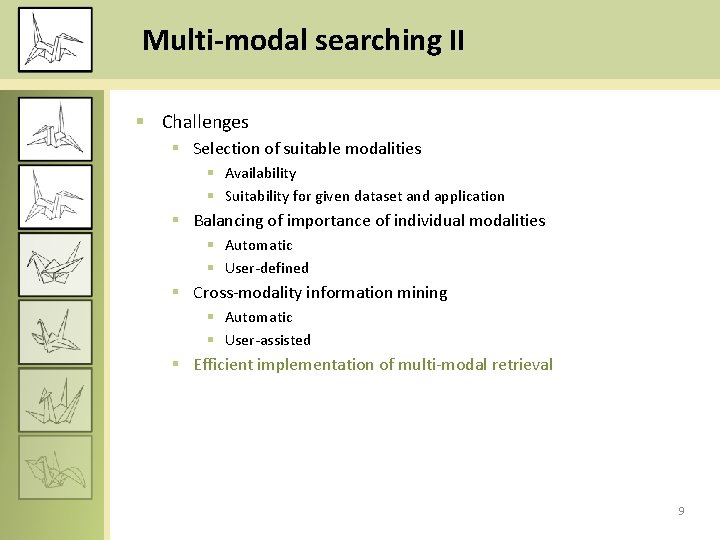

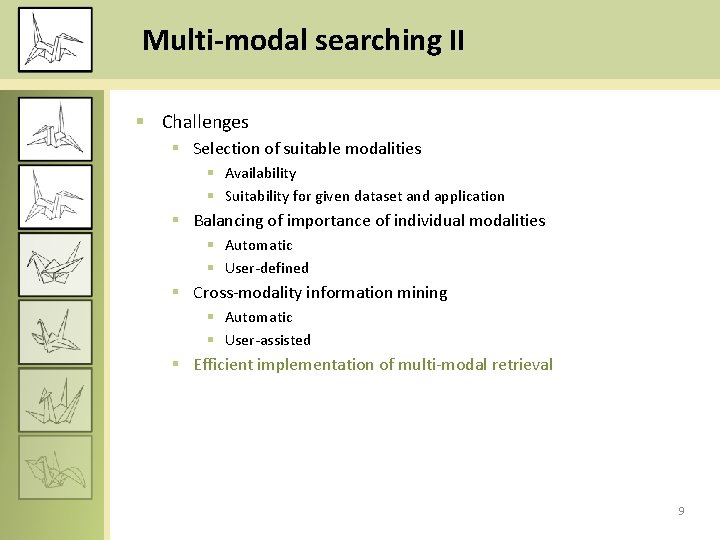

Multi-modal searching II
§ Challenges
  § Selection of suitable modalities
    § Availability
    § Suitability for the given dataset and application
  § Balancing the importance of individual modalities
    § Automatic
    § User-defined
  § Cross-modality information mining
    § Automatic
    § User-assisted
  § Efficient implementation of multi-modal retrieval

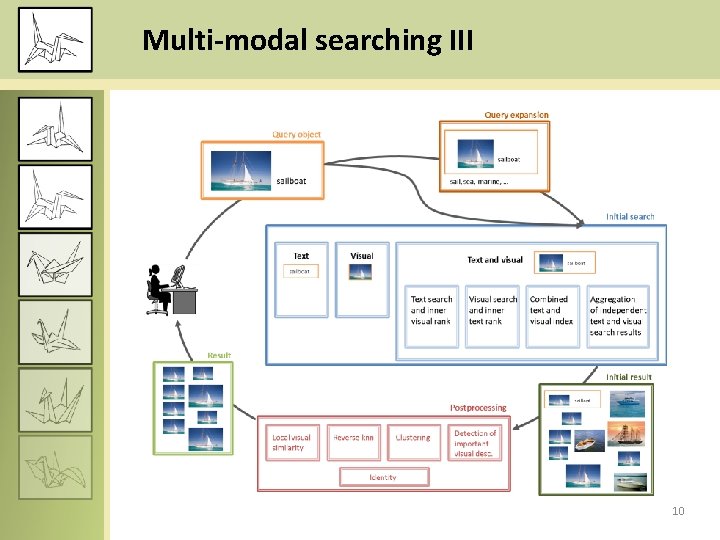

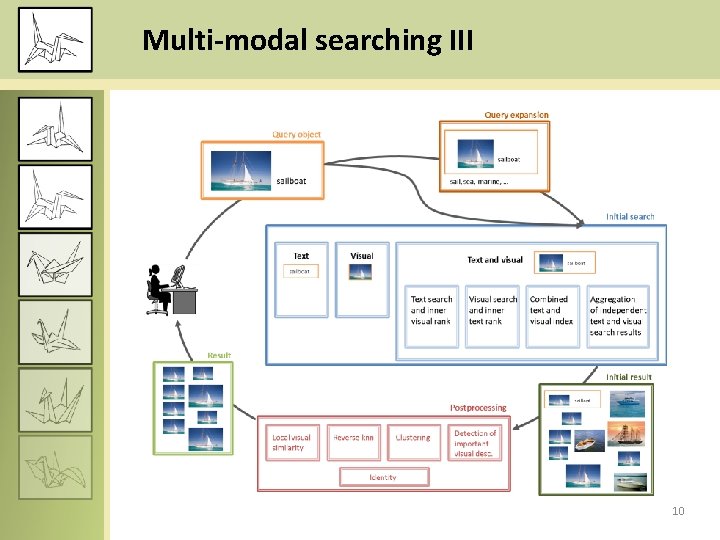

Multi-modal searching III

Multi-modal searching IV
§ Our focus
  § Let us assume two modalities: text and global visual features
    § Frequently used
    § Available in web search applications
  § Only two-phase searching is considered
    § Basic search over the whole database
    § Postprocessing of basic search results
§ Categorize possible solutions
§ Implement & evaluate
  § Large-scale data processing
§ Analyze results

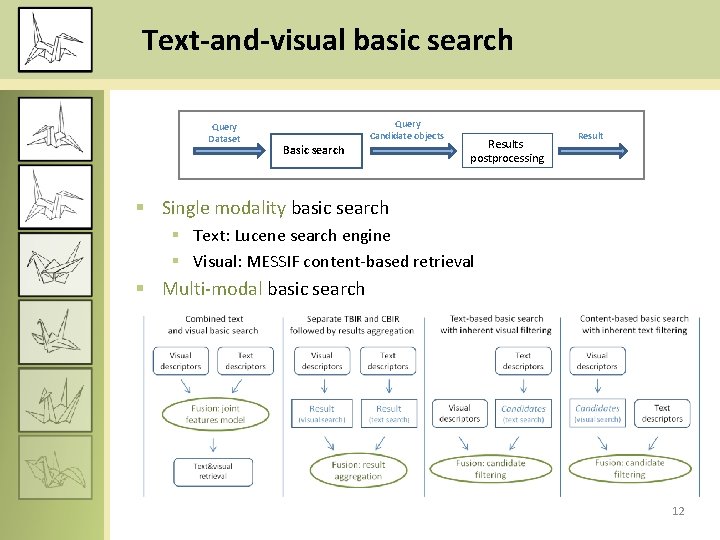

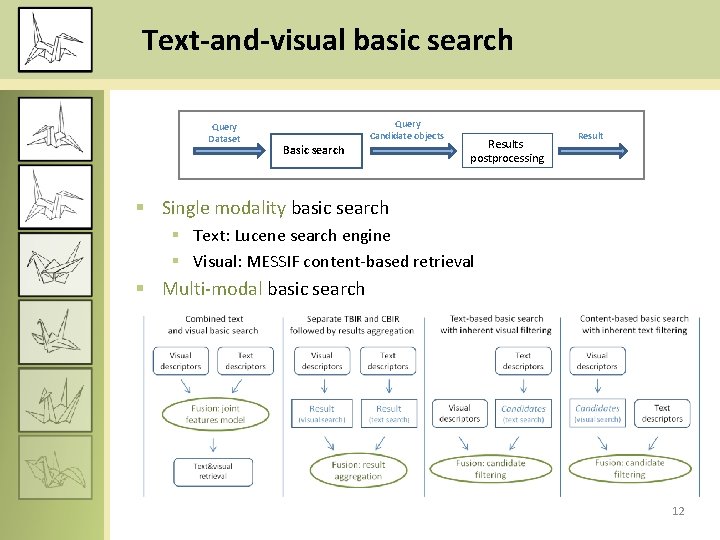

Text-and-visual basic search
[Diagram: Query + Dataset → Basic search → Candidate objects → Results postprocessing (with Query) → Result]
§ Single-modality basic search
  § Text: Lucene search engine
  § Visual: MESSIF content-based retrieval
§ Multi-modal basic search

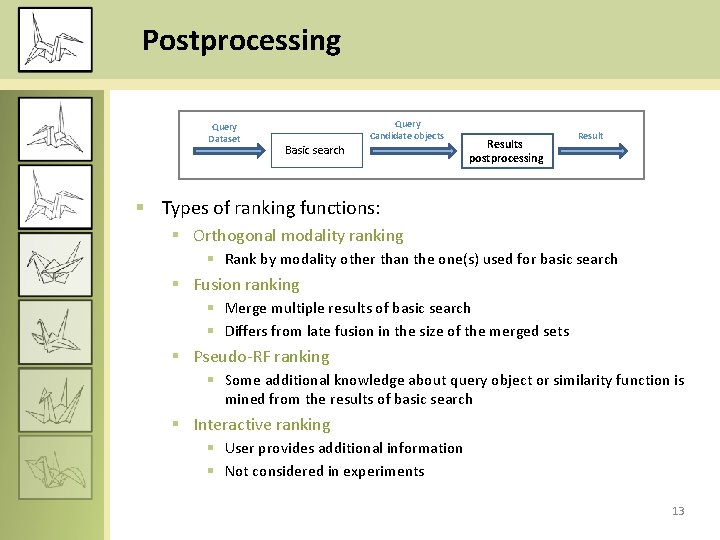

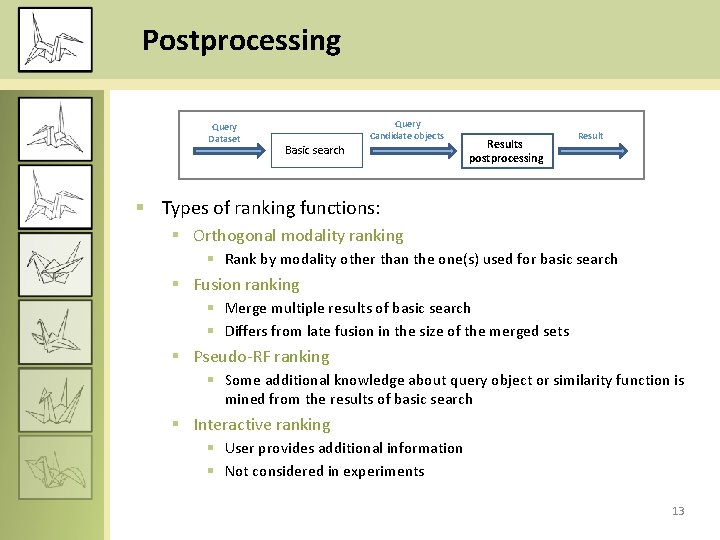

Postprocessing
[Diagram: Query + Dataset → Basic search → Candidate objects → Results postprocessing (with Query) → Result]
§ Types of ranking functions:
  § Orthogonal modality ranking
    § Rank by a modality other than the one(s) used for basic search
  § Fusion ranking
    § Merge multiple results of basic search
    § Differs from late fusion in the size of the merged sets
  § Pseudo-RF (pseudo-relevance feedback) ranking
    § Some additional knowledge about the query object or the similarity function is mined from the results of basic search
  § Interactive ranking
    § User provides additional information
    § Not considered in experiments
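Orthogonal modality ranking in its "text search + visual ranking" form can be sketched as follows. The keyword-overlap function is a hypothetical toy substitute for a Lucene query, and Euclidean distance on feature vectors stands in for the visual descriptors; only the two-phase structure (candidates from one modality, ordering by another) reflects the slide.

```python
import math
from typing import Dict, List, Sequence, Set


def text_basic_search(query_words: Set[str], keywords: Dict[str, Set[str]],
                      limit: int) -> List[str]:
    """Hypothetical stand-in for a text engine: rank by shared keywords."""
    hits = [(len(query_words & kws), oid) for oid, kws in keywords.items()]
    hits = [(score, oid) for score, oid in hits if score > 0]
    hits.sort(key=lambda hit: (-hit[0], hit[1]))  # more shared words first
    return [oid for _, oid in hits[:limit]]


def visual_rerank(candidates: List[str], query_vec: Sequence[float],
                  vectors: Dict[str, Sequence[float]], k: int) -> List[str]:
    """Orthogonal modality ranking: reorder text candidates by visual distance."""
    return sorted(candidates,
                  key=lambda oid: math.dist(query_vec, vectors[oid]))[:k]


if __name__ == "__main__":
    keywords = {"a": {"bird", "sky"}, "b": {"bird"},
                "c": {"bird", "tree"}, "d": {"car"}}
    vectors = {"a": [0.9, 0.1], "b": [0.2, 0.8],
               "c": [0.5, 0.5], "d": [0.2, 0.8]}
    candidates = text_basic_search({"bird"}, keywords, limit=3)
    print(visual_rerank(candidates, [0.25, 0.75], vectors, k=2))  # ['b', 'c']
```

Image `d` is visually identical to `b` but is never returned, because the text phase did not select it: postprocessing can only reorder the candidates it receives.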

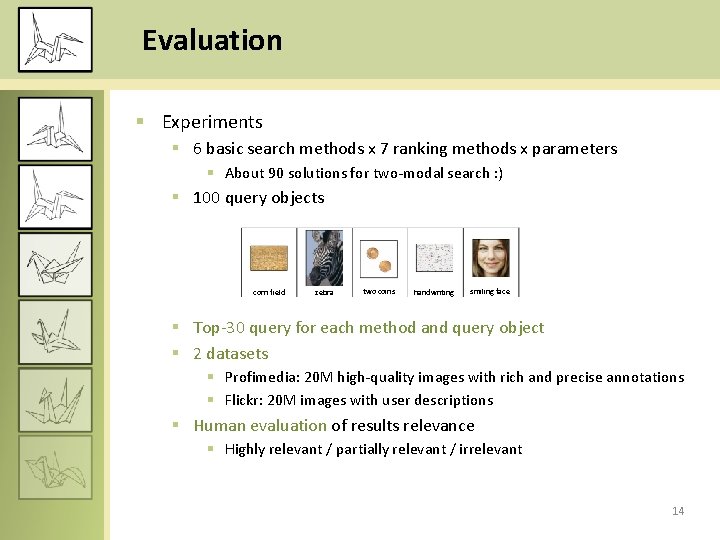

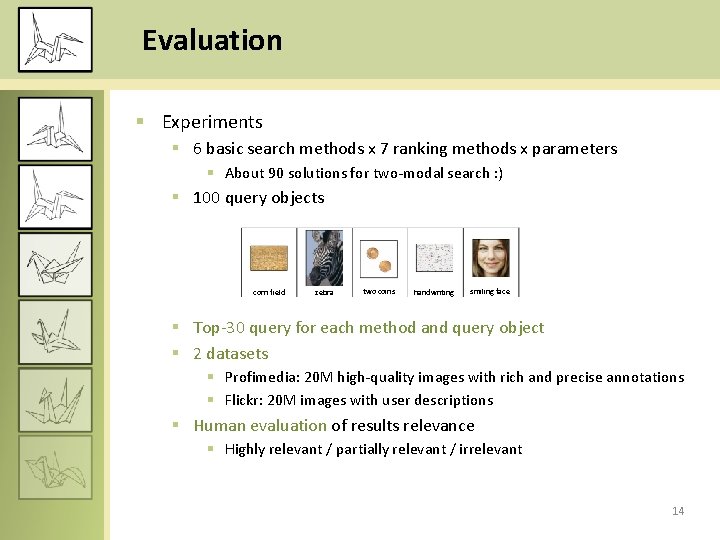

Evaluation
§ Experiments
  § 6 basic search methods × 7 ranking methods × parameter settings
    § About 90 solutions for two-modal search :)
  § 100 query objects (e.g. corn field, zebra, two coins, handwriting, smiling face)
  § Top-30 query for each method and query object
  § 2 datasets
    § Profimedia: 20M high-quality images with rich and precise annotations
    § Flickr: 20M images with user descriptions
§ Human evaluation of result relevance
  § Highly relevant / partially relevant / irrelevant
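Graded human judgments like these are commonly summarized with NDCG@k, the measure plotted in the "bird" results. A minimal sketch, assuming gains of 2, 1 and 0 for highly relevant, partially relevant and irrelevant results, and an ideal ranking built from all judged results for the query pooled across methods; both choices are assumptions, not the study's documented setup.

```python
import math
from typing import Sequence

# Assumed mapping from the three human labels to numeric gains.
GRADE = {"highly relevant": 2, "partially relevant": 1, "irrelevant": 0}


def dcg(grades: Sequence[int], k: int) -> float:
    """Discounted cumulative gain of the first k results (position 1 has discount 1)."""
    return sum(g / math.log2(i + 2) for i, g in enumerate(grades[:k]))


def ndcg(grades: Sequence[int], pooled_grades: Sequence[int], k: int) -> float:
    """DCG of one method's result list divided by the DCG of the ideal ordering.

    pooled_grades holds every judged grade for the query (e.g. from all
    compared methods); its best-first ordering serves as the ideal list.
    """
    ideal = dcg(sorted(pooled_grades, reverse=True), k)
    return dcg(grades, k) / ideal if ideal > 0 else 0.0


if __name__ == "__main__":
    labels = ["highly relevant", "irrelevant", "partially relevant"]
    grades = [GRADE[label] for label in labels]
    print(ndcg(grades, pooled_grades=[2, 1, 0, 2], k=3))
```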

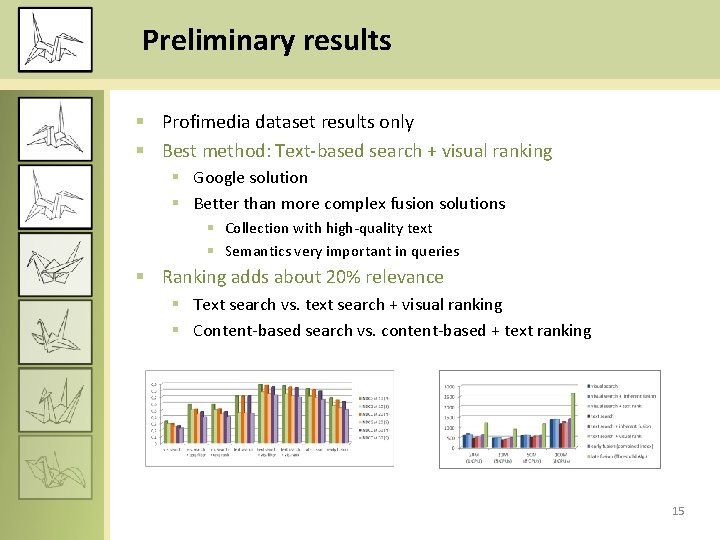

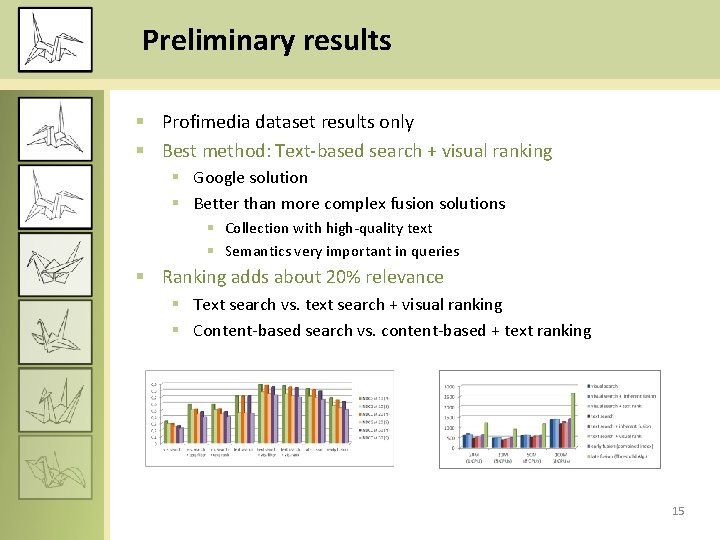

Preliminary results
§ Profimedia dataset results only
§ Best method: text-based search + visual ranking
  § Google's solution
  § Better than more complex fusion solutions
    § Collection with high-quality text
    § Semantics very important in queries
§ Ranking adds about 20% relevance
  § Text search vs. text search + visual ranking
  § Content-based search vs. content-based search + text ranking

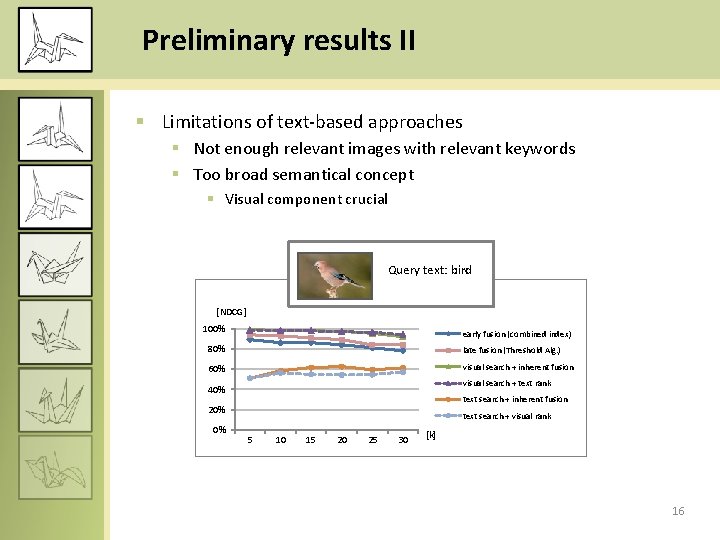

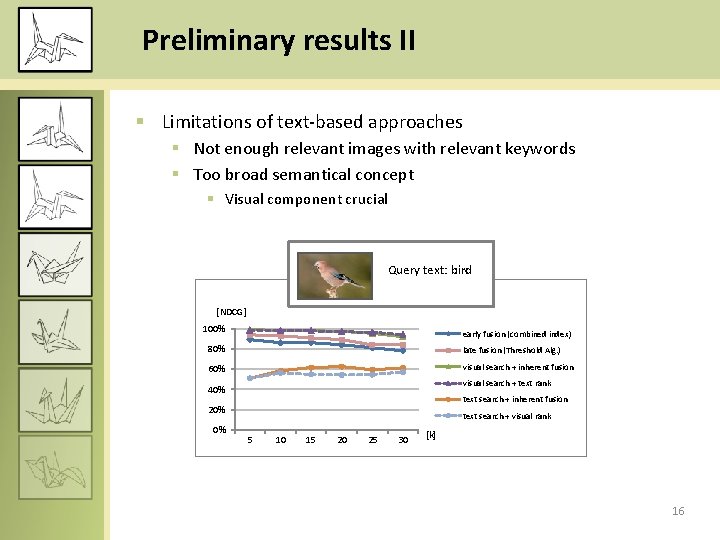

Preliminary results II
§ Limitations of text-based approaches
  § Not enough relevant images with relevant keywords
  § Too broad a semantic concept
  § Visual component crucial
[Figure: NDCG (0 to 100%) vs. k (5 to 30) for the query text "bird"; compared methods: early fusion (combined index), late fusion (Threshold Alg.), visual search + inherent fusion, visual search + text rank, text search + inherent fusion, text search + visual rank]
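Late fusion with Fagin's Threshold Algorithm, one of the compared methods, merges per-modality ranked lists and can stop before reading them completely. This is a simplified sketch, not the implementation used in the experiments: it assumes every modality scores every object with a similarity where higher is better, that any object's score can be looked up directly (random access), and a plain sum as the monotone aggregation function.

```python
import heapq
from typing import Callable, Dict, List, Sequence, Tuple

Ranked = List[Tuple[str, float]]  # (object id, similarity), best first


def threshold_algorithm(lists: Sequence[Ranked],
                        k: int,
                        agg: Callable[[Sequence[float]], float] = sum,
                        ) -> Tuple[List[Tuple[float, str]], int]:
    """Top-k late fusion with Fagin's Threshold Algorithm.

    Assumes every list ranks the same set of objects and that agg is
    monotone (never decreases when an input score increases).
    Returns the top-k (aggregated score, id) pairs, best first, and the
    number of sorted-access rounds that were needed.
    """
    # Random-access tables: one id -> similarity dict per modality.
    lookup: List[Dict[str, float]] = [dict(lst) for lst in lists]
    top: List[Tuple[float, str]] = []  # min-heap holding the best k so far
    seen = set()
    rounds = 0
    for depth in range(len(lists[0])):
        rounds = depth + 1
        frontier = []  # similarity read from each list at this depth
        for lst in lists:
            oid, sim = lst[depth]
            frontier.append(sim)
            if oid in seen:
                continue
            seen.add(oid)
            total = agg([table[oid] for table in lookup])
            if len(top) < k:
                heapq.heappush(top, (total, oid))
            elif total > top[0][0]:
                heapq.heapreplace(top, (total, oid))
        # No unseen object can score above agg(frontier), so stop once the
        # k-th best aggregated score reaches that threshold.
        if len(top) == k and top[0][0] >= agg(frontier):
            break
    return sorted(top, reverse=True), rounds


if __name__ == "__main__":
    text = [("a", 0.9), ("b", 0.8), ("c", 0.3), ("d", 0.1)]
    visual = [("b", 0.9), ("a", 0.7), ("d", 0.6), ("c", 0.2)]
    result, rounds = threshold_algorithm([text, visual], k=2)
    print([oid for _, oid in result], rounds)  # ['b', 'a'] 2
```

In the example, only two of the four positions in each list are read before the top-2 answer is certain.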

Multi-modal search – future work
§ Text-and-visual search
  § Complete analysis of results
  § Determine conditions that influence the usability of individual methods
    § Dataset properties
    § Query properties
  § Automatic recommendation of query processing
§ Multi-modal search in general
  § Combination of more than two modalities

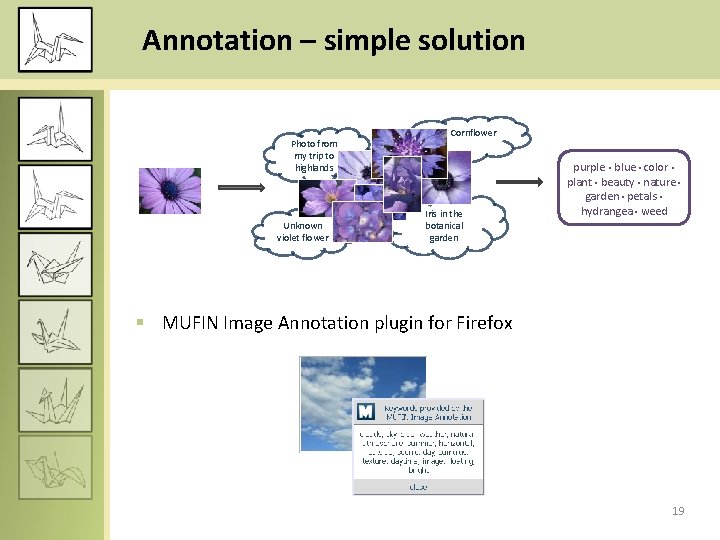

Annotation
§ Task
  § For a given image, retrieve relevant text information
    § Easier: relevant keywords
    § More difficult: relevant text (Wiki page, …)
§ Applications
  § Recommendation of tags in social networks
  § Classification
§ Method
  § Only the image is available, so searching by visual features is the only possibility
  § Exploit a dataset of images with textual information
  § Obtain a set of results: what can we do with them?
    § Simple solution: analyze the keywords related to the images in the similarity search result and return the most frequent ones
    § Advanced solution: analyze relationships between keywords

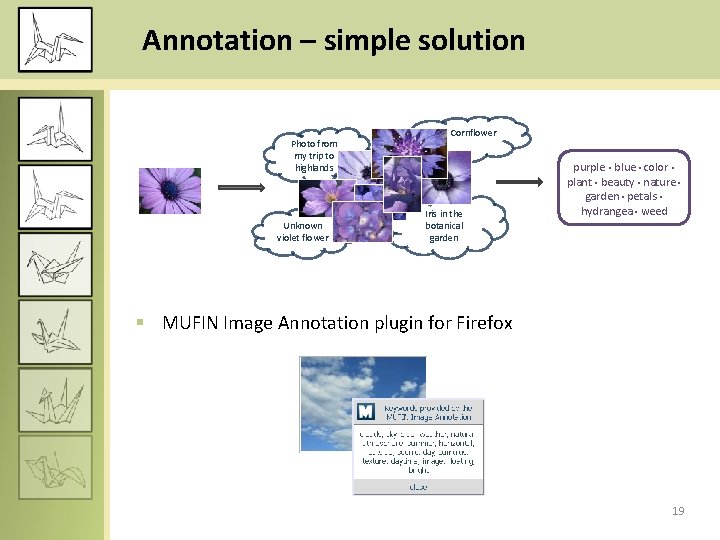

Annotation – simple solution
[Figure: example image descriptions "Photo from my trip to highlands", "Unknown violet flower", "Cornflower", "Iris in the botanical garden" and the suggested keywords purple • blue • color • plant • beauty • nature • garden • petals • hydrangea • weed]
§ MUFIN Image Annotation plugin for Firefox

Annotation – simple solution II
§ Limitations
  § Relevance of results found by content-based retrieval
    § Semantic gap
  § Quality of source data
    § Spelling mistakes, different languages, names, stopwords, …
  § Natural language features
    § Synonyms
    § Hypernyms, homonyms
    § Noun vs. verb
    § …
§ Possible solutions
  § Consistency checking over the results
  § Source text cleaning
  § Advanced text processing
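Source text cleaning can start with simple normalization before keywords are counted. A minimal sketch with a hypothetical, deliberately tiny stopword list: it lowercases, keeps letter-only tokens, drops stopwords and very short tokens, and removes duplicates. It does not handle synonyms, spelling mistakes or other languages, which the slide lists as separate problems.

```python
import re
from typing import List, Set

# Illustrative stopword list only; a real one would be much larger.
STOPWORDS: Set[str] = {"a", "an", "the", "in", "of", "from", "my", "to",
                       "and", "photo", "img"}

# Runs of letters only (word characters minus digits and underscore);
# Python's str patterns are Unicode-aware, so accented letters match too.
TOKEN = re.compile(r"[^\W\d_]+")


def clean_keywords(raw: str, stopwords: Set[str] = STOPWORDS,
                   min_len: int = 3) -> List[str]:
    """Turn a free-text image description into a deduplicated keyword list."""
    tokens = (t.lower() for t in TOKEN.findall(raw))
    kept = dict.fromkeys(t for t in tokens
                         if len(t) >= min_len and t not in stopwords)
    return list(kept)


if __name__ == "__main__":
    print(clean_keywords("Photo from my trip to highlands"))  # ['trip', 'highlands']
    print(clean_keywords("Iris in the botanical garden"))
```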

Annotation – advanced solution
§ Employ a knowledge base to learn about semantics
§ WordNet: lexical database of English
  § Nouns, verbs, adjectives and adverbs are grouped into sets of cognitive synonyms (synsets), each expressing a distinct concept.
  § Synsets are interlinked by means of conceptual-semantic and lexical relations.
§ Dataset preprocessing
  § Determine the correct synsets for keywords in the dataset
  § Analysis of keywords related to the same image
    § The correct synsets should be "near" in the WordNet relationship graph
§ Annotation process (work in progress)
  § Retrieve similar objects
  § Analyze relationships between synsets
    § Synsets found: beagle, dog, terrier -> there's a dog in the image
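The intuition that the correct synsets should be near each other in the relationship graph can be illustrated by looking for the most specific common hypernym. The graph below is a hypothetical toy hierarchy loosely modeled on WordNet (real WordNet has many more levels, and a synset may have several hypernyms), and the `min_support` threshold is an illustrative parameter, not part of the described method, which is still work in progress.

```python
from collections import Counter
from typing import Dict, List, Optional

# Hypothetical toy hypernym graph (child -> parent), not actual WordNet data.
HYPERNYM: Dict[str, str] = {
    "beagle": "dog", "terrier": "dog", "dog": "animal",
    "tabby": "cat", "cat": "animal", "animal": "entity",
    "violet": "flower", "iris": "flower", "flower": "plant", "plant": "entity",
}


def ancestors_or_self(synset: str) -> List[str]:
    """The synset followed by its hypernyms up to the root, most specific first."""
    chain = [synset]
    while chain[-1] in HYPERNYM:
        chain.append(HYPERNYM[chain[-1]])
    return chain


def common_concept(synsets: List[str],
                   min_support: float = 1.0) -> Optional[str]:
    """Most specific concept that subsumes at least min_support of the synsets."""
    support: Counter = Counter()
    depth: Dict[str, int] = {}  # distance from the root
    for synset in synsets:
        for d, concept in enumerate(reversed(ancestors_or_self(synset))):
            support[concept] += 1
            depth[concept] = d
    needed = min_support * len(synsets)
    candidates = [c for c in support if support[c] >= needed]
    return max(candidates, key=depth.__getitem__) if candidates else None


if __name__ == "__main__":
    print(common_concept(["beagle", "dog", "terrier"]))  # dog
    # With a lower threshold, one unrelated synset is tolerated as noise.
    print(common_concept(["beagle", "iris", "violet"], min_support=0.6))  # flower
```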

For more information, visit mufin.fi.muni.cz
§ http://mufin.fi.muni.cz/profimedia: collection browsing and targeted search in a 20M image collection
§ http://mufin.fi.muni.cz/annotation: info about annotation, demo, plugin download
