
- Slides: 36

Risk Analysis

Risk Management § References: § T. Lister, “Risk Management is Project Management for Adults”, IEEE Software, May/June 1997, pp. 20–22. § M. J. Carr, “Risk Management May Not Be for Everyone”, IEEE Software, May/June 1997, pp. 21–24. § B. Kitchenham and S. Linkman, “Estimates, Uncertainty and Risk”, IEEE Software, May/June 1997, pp. 21–24. § R. C. Williams, J. A. Walker and A. J. Dorofee, “Putting Risk Management into Practice”, IEEE Software, May/June 1997, pp. 75–82. § E. H. Conrow and P. S. Shishido, “Implementing Risk Management on Software Intensive Projects”, IEEE Software, May/June 1997, pp. 83–89.

Risk Management § Risk management is a critical aspect of successful software engineering, where the aim is to deliver software on time and within budget (with all the functionality you promised). § But how can you know what will go wrong before you start the project? § How does it affect your cost estimates? § How does it affect your delivery schedule? § How do you manage your exposure to risk?

Challenges § Completed projects have three states: on-time, late and early (an empty set). § A survey of 8,380 commercial software-intensive projects found that: § 53% of the projects were “challenged”. § Over budget § Behind schedule § Fewer features and functions than originally specified. § 31% of projects were cancelled.

Challenges § On average, these projects had cost increases of 189% and schedule slippage of 222% versus the original estimates at the time they were completed or cancelled! § Of the completed projects, an average of only 61% of the originally specified features were delivered!

What is Going Wrong? § Are these problems the result of poor management? Inexperience? Negligence? Stupidity? § It is the result of poor risk management and costing strategies. § In order to fix the problem, we have to know what risk is and how to manage it effectively.

Defining Risk § Risk is the probability of failing to achieve particular objectives: § Cost § Performance § Schedule § Risk is more – it is also the consequence of failing to achieve those objectives. § There are various techniques to estimate the probability of the risk occurring, but true risk values can almost never be mathematically computed.

Sources of Risk § There are numerous sources of risk within a project. Common risk groups and factors are discussed below. § Project level § Excessive, immature, unrealistic, or unstable requirements. § Lack of user involvement. § Underestimation of project complexity or dynamic nature. § Project attributes § Performance shortfalls (includes errors and quality). § Unrealistic cost or schedule (estimates and/or allocated amounts).

Sources of Risk § Management § Ineffective project management (multiple levels possible). § Engineering § Ineffective integration, assembly and test, quality control, speciality engineering, or systems engineering (multiple levels possible). § Unanticipated difficulties associated with the user interface.

Sources of Risk § Work environment § Immature or untried design, process, or technologies selected. § Inadequate work plans or configuration control. § Inappropriate methods or tool selection or inaccurate metrics. § Poor training.

Sources of Risk § Other § Inadequate or excessive documentation or review process. § Legal or contractual issues (such as litigation, malpractice, ownership, intellectual property). § Obsolescence (includes excessive schedule length). § Unanticipated difficulties with subcontracted items. § Unanticipated maintenance and/or support costs.

Sources of Risk § But wait, there’s more… § For moderate and high complexity software systems, there are a number of issues that can significantly increase costs and result in schedule slippage. § Performance-dominated requirements generation (by either buyer or seller or both) results in an early focus on performance without due consideration for: § Budget – typically inadequate for the desired performance level. § Design – performance demands push the design to near the feasible limit of achievable performance. This usually leads to cost blow-out. § Major decisions made too early – a lack of understanding of the relationship between cost, schedule and performance leads to increased costs and schedule slippage.

Sources of Risk § Excessive optimism in assessing the limits of performance achievable for a given budget and time frame is likely to result in cost and schedule over-run. § These are all risks that may result in the project being challenged. § US Defence work mandates that risk assessment take place and that it follow a directive (DoD Directive 5000.1) and a standard (EIA/IEEE J-STD-016). However, defence projects still run over time and budget, i.e., their risk assessment and management is deficient!

Common Deficiencies § There are four main risk management deficiencies. 1. Buyer and seller often have risk management processes that are weakly structured or ad hoc. § May exist on paper, but not followed. § No clear mechanism to mitigate risk. § The problem is that there is not a single set of guidelines as to how the risk management process is to be implemented.

Common Deficiencies 2. Risk assessment is often too subjective and not adequately documented. § A weak risk assessment methodology can introduce considerable doubt as to the accuracy and value of the results. § Mathematical operations on uncalibrated risk assessment scores are meaningless. § Lack of detail makes the nature of the risk incomprehensible. § Buyer and seller may use different risk assessment methodologies, which makes comparing results difficult or impossible.

Common Deficiencies 3. Risk assessment processes generally focus on the probability associated with a specific event without consideration for the consequences. § Recall that risk is a combination of an event’s probability and its consequences. § Therefore, there is a need to track both factors over time. 4. Risk assessment and risk handling plans are often unlinked, and risks are not tracked fully.

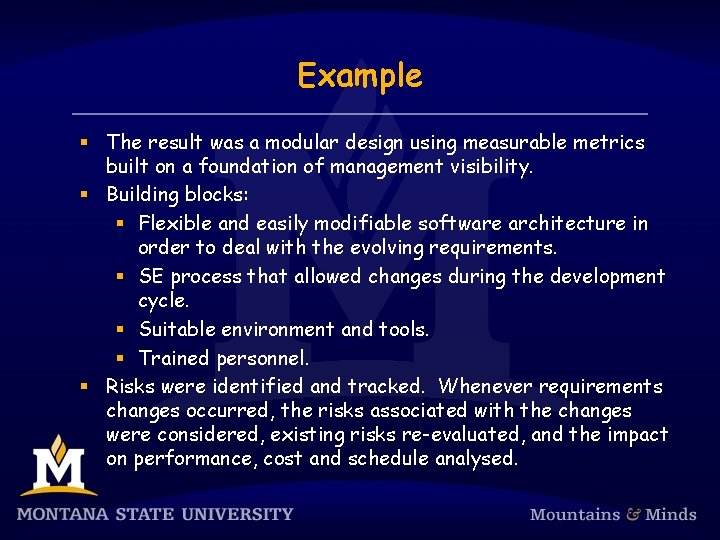

Example § TRW project – ≥ 1,000 LOC (Ada) Command Control System. § Key challenges: § Tight performance demands. § Other contractors developed external interfaces concurrently. § Significant user interaction. § Evolving requirements. § Fixed price contract. § A flexible architecture and design was needed. The project had to uncover potential risk issues early and develop a process that allowed changes to be made easily.

Example § The result was a modular design using measurable metrics built on a foundation of management visibility. § Building blocks: § Flexible and easily modifiable software architecture in order to deal with the evolving requirements. § SE process that allowed changes during the development cycle. § Suitable environment and tools. § Trained personnel. § Risks were identified and tracked. Whenever requirements changes occurred, the risks associated with the changes were considered, existing risks re-evaluated, and the impact on performance, cost and schedule analysed.

Risk Management Overview § Established a risk review board (RRB). § Led by project manager. § Met monthly. § Included representatives from each of the functional and support areas (software, hardware, test, quality assurance, configuration management, ...). § Process was iterative. § Documented feedback to both project management and the customer.

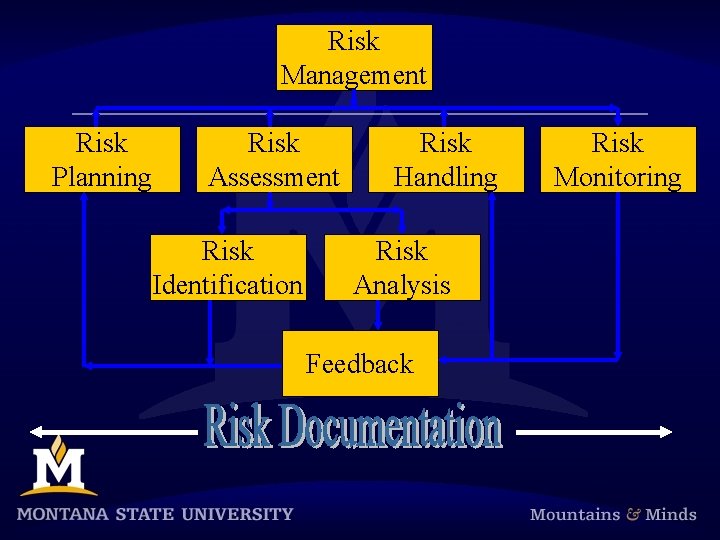

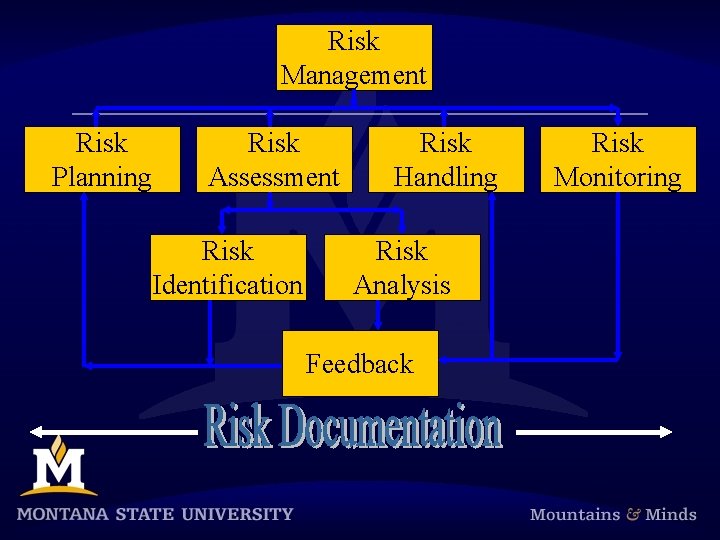

Risk Management § (Diagram labels: Risk Planning, Risk Assessment, Risk Identification, Risk Analysis, Risk Handling, Risk Monitoring, Feedback.)

Risk Management § All risks are documented. This includes a short description of the risk type (schedule, cost, technical); severity (low, moderate, high); risk mitigation plan; and status of the risk mitigation activity. § Metrics must be used to measure the effect of the risk and to identify if it is indeed occurring. § Everyone on the project is encouraged to identify risks during any management or technical meeting. § Do not shoot the messenger! § Once a risk is identified and a risk mitigation plan created, it is assigned to a responsible person. Risks are monitored and plans updated until the risk is reduced to an acceptable level. § Read the article by Conrow and Shishido to find out how specific risk issues were dealt with on the TRW project.
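The documentation described above can be pictured as one record per risk. The following is only a minimal sketch: the class and field names, the sample entries and the `open_risks` helper are all hypothetical and are not taken from the TRW project.

```python
from dataclasses import dataclass
from enum import Enum


class RiskType(Enum):
    SCHEDULE = "schedule"
    COST = "cost"
    TECHNICAL = "technical"


class Severity(Enum):
    LOW = 1
    MODERATE = 2
    HIGH = 3


@dataclass
class RiskRecord:
    """One documented risk (field names are illustrative assumptions)."""
    description: str
    risk_type: RiskType
    severity: Severity
    mitigation_plan: str
    mitigation_status: str
    owner: str  # the responsible person the risk is assigned to


def open_risks(register, acceptable=Severity.LOW):
    """Return the risks whose severity is still above the acceptable level."""
    return [r for r in register if r.severity.value > acceptable.value]


# Hypothetical sample entries, for illustration only.
register = [
    RiskRecord("External interface spec still changing", RiskType.SCHEDULE,
               Severity.HIGH, "Weekly interface review", "in progress", "A. Owner"),
    RiskRecord("Build server capacity", RiskType.COST,
               Severity.LOW, "Monitor usage", "watching", "B. Owner"),
]

still_open = open_risks(register)
```

Here `still_open` holds only the first record, because the second has already been reduced to the assumed acceptable level.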

Putting Risk Management Into Practice § It is all very well reading and talking about risk management, but what happens when you are responsible for it? § Where can you find a tried and tested risk management plan? § How do you put what you read into practice? § How do you identify the risks you are exposed to? § Which strategies work well? Which don’t? § Risk management must be a continuous activity throughout the project. § Everyone must be involved in risk management and mitigation if it is to work. § The customer and the supplier must continuously and independently manage their own lists of risks. Some risks may be jointly managed.

Sponsorship § For risk management to succeed, there must be a commitment from a suitably powerful individual – typically the customer project manager. § The sponsor must be visibly involved in the project’s life cycle. § Often all the customer needs to do is ask for the latest status on identified risks on a regular basis.

Focus Activities § Generate a list of risk statements, evaluate them for probability and impact, classify them and rank them in priority order. Develop mitigation strategies for them. § Remember that there is little point having 5 people spend 2 days developing a mitigation strategy for an identified risk that has a low probability, low priority and a low impact (i.e., less than 10 days delay to the schedule in this case). Don’t spend more time and money managing the risk than the risk itself would cost! § Document all risks – remember, it’s harder to ignore if it’s written down.
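The cost argument above can be checked with simple arithmetic. This is a rough sketch only: the 10% probability and the function name are assumptions made for illustration, while the 5 people, 2 days and 10-day delay come from the slide.

```python
def mitigation_is_worthwhile(planning_cost_days, probability, impact_days):
    """Compare the effort spent on planning with the expected loss from the risk."""
    expected_loss_days = probability * impact_days
    return planning_cost_days < expected_loss_days


planning_cost = 5 * 2      # 5 people for 2 days = 10 person days
assumed_probability = 0.1  # hypothetical value for a "low probability" risk
impact = 10                # upper bound on the schedule delay, in days

worthwhile = mitigation_is_worthwhile(planning_cost, assumed_probability, impact)
```

Under these assumptions the planning effort (10 person days) far exceeds the expected loss (about 1 day), so `worthwhile` is `False`.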

Hints § Document all risks. Be precise – vague wording is ineffective. § Evaluate the likelihood of the risk (an estimate is satisfactory). § Identify the impact of the risk (its consequences). § Quick and dirty estimates (high, medium, low) are typically sufficient. § Assign the risk to an individual. § Make all risks visible to everyone. § Track and monitor all risks. § Classify or group risks. Different people will see different aspects of the same risk (project manager, configuration manager, software engineer, …). § Prioritise risks and re-evaluate periodically.
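One way to prioritise with quick high/medium/low estimates is to sort on the ordinal levels directly rather than multiplying uncalibrated scores. The sketch below assumes that impact takes precedence over likelihood; that ordering, the dictionary layout and the sample risks are all hypothetical.

```python
LEVELS = {"low": 0, "medium": 1, "high": 2}


def prioritise(risks):
    """Order risks by impact first, then likelihood, highest first."""
    return sorted(
        risks,
        key=lambda r: (LEVELS[r["impact"]], LEVELS[r["likelihood"]]),
        reverse=True,
    )


# Hypothetical risk statements, for illustration only.
risks = [
    {"name": "Tool licence expires", "likelihood": "high", "impact": "low"},
    {"name": "Requirements keep changing", "likelihood": "high", "impact": "high"},
    {"name": "Key engineer leaves", "likelihood": "low", "impact": "high"},
    {"name": "Test lab unavailable", "likelihood": "medium", "impact": "medium"},
]

ranked = [r["name"] for r in prioritise(risks)]
```

Re-running `prioritise` after each periodic re-evaluation keeps the list in its current priority order.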

Hints § You can’t fix everything! Not every risk can be mitigated; every condition has a number of possible outcomes (consequences) and each will create a risk that could be mitigated. Identify the critical risks and watch them for significant changes. § Identify the risks that can be accepted – you could live with them if they occurred. § Identify the risks that are better managed by someone else. § Track risks and monitor them – how else do you know when they are gone? Metrics (measures), indicators and triggers can help you watch for significant changes.

Hints § Are you sure you can close the risk? Closing a risk because you took an action, any action, is not controlling the risk, it is abdicating to it. § Controlling a risk means: § Altering mitigation strategies when they become ineffective. § Taking action on a risk that becomes important enough to require mitigation. § Taking a pre-planned contingency action. § Dropping to a watch-only mode at some specific threshold. § Closing the risk when it no longer exists. § Check to make sure that your actions reduce or manage the risk.

Estimates § When bidding for contracts we need to make several estimates in order to determine schedule, cost, etc. § Estimate uncertainty occurs because an estimate is a probabilistic assessment of a future condition. § Estimators produce an estimate based on an estimation model. This model itself can be a source of errors.

Measurement Error § This occurs if some of the input values into a model have inherent accuracy limitations. § For example, function points are assumed to have a 12% inaccuracy. Therefore a project with an estimated 1,000 function points could end up with anywhere between 880 and 1,120 function points in reality. § Applying a model of 0.2 person days per function point means your estimate will have a range of 176 to 224 person days, with a most likely value of 200 person days.
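The arithmetic in this example can be reproduced in a few lines. This is a minimal sketch; the function name and its parameters are illustrative, and the numbers are the ones quoted on the slide.

```python
def effort_range_from_size_error(size_fp, size_error, days_per_fp):
    """Return (low, most_likely, high) effort when only the size input is uncertain."""
    low = size_fp * (1 - size_error) * days_per_fp
    most_likely = size_fp * days_per_fp
    high = size_fp * (1 + size_error) * days_per_fp
    return low, most_likely, high


low, most_likely, high = effort_range_from_size_error(
    size_fp=1000, size_error=0.12, days_per_fp=0.2
)
```

With these inputs the three values come out at approximately 176, 200 and 224 person days.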

Model Error § All empirical models are abstractions of reality. No model includes all factors that affect the final outcome. Factors not explicitly included in the model contribute to model error. § For example, a model of 0.2 person days per function point is usually obtained from results previously observed. It is unlikely that future projects will achieve exactly the same ratio, but it is expected that the model is “all right on average”. § If you have an estimation model with an inherent 20% inaccuracy and your project is 1,000 function points, then your estimate is likely to be between 160 and 240 person days.
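To illustrate how such a ratio might be obtained from past results and then applied with a model error band, here is a small sketch. The historical data are entirely made up, chosen so that they average to the 0.2 person days per function point used in the example; the 20% band is the figure from the slide.

```python
from statistics import mean

# Hypothetical past projects: (function points, actual person days).
history = [(500, 90), (800, 176), (1200, 240), (1000, 200)]

# Each past project gives a slightly different ratio; the model uses the average.
ratios = [days / fp for fp, days in history]
days_per_fp = mean(ratios)

# Apply the averaged model with the 20% inaccuracy quoted in the text.
model_error = 0.20
size_fp = 1000
low = size_fp * days_per_fp * (1 - model_error)
high = size_fp * days_per_fp * (1 + model_error)
```

No single project in this made-up history matches the averaged ratio exactly except by coincidence, which is the sense in which the model is only right on average.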

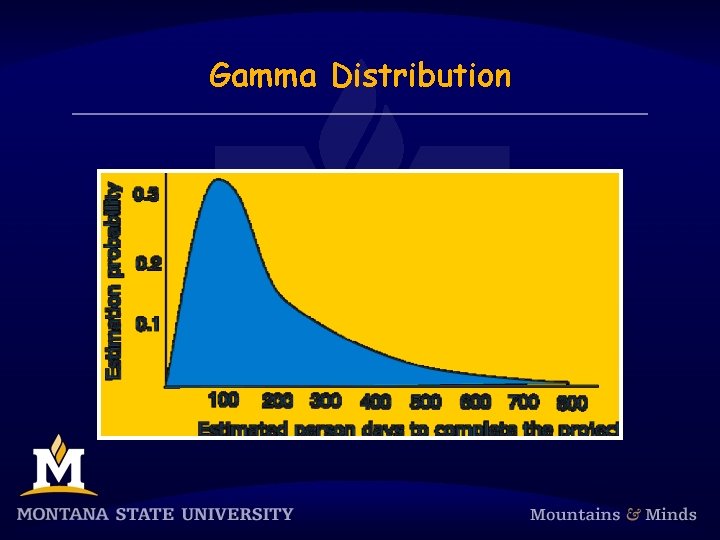

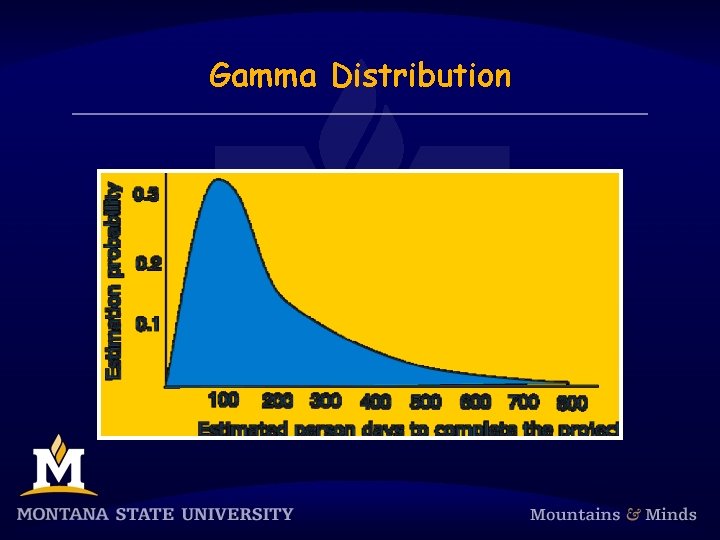

Errors are Additive § Measurement error and model error are additive. Applying both to the example, the real range of values is between 140.8 (880 × 0.2 × 0.8) and 268.8 (1,120 × 0.2 × 1.2) person days, with a most likely value of 200 person days. § Using the mean magnitude of the relative error to assess model accuracy can be misleading. There is no a priori reason for underestimation and overestimation to be of equal size. § Underestimation is naturally bounded: the minimum effort required for an activity is zero. § An overestimate is not naturally bounded. § A more likely probability distribution for the effort estimate is one based on a Gamma Distribution.
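The combined range can be reproduced by applying both error bands to the same estimate. This is a sketch under the assumption that the 12% measurement error applies to the size and the 20% model error applies to the ratio; the function name is illustrative.

```python
def combined_effort_range(size_fp, days_per_fp, measurement_error, model_error):
    """Return (low, most_likely, high) effort with both error sources applied."""
    low = size_fp * (1 - measurement_error) * days_per_fp * (1 - model_error)
    most_likely = size_fp * days_per_fp
    high = size_fp * (1 + measurement_error) * days_per_fp * (1 + model_error)
    return low, most_likely, high


low, most_likely, high = combined_effort_range(1000, 0.2, 0.12, 0.20)

# Distance from the most likely value to each end of the range.
downside = most_likely - low
upside = high - most_likely
```

Note that the range is not symmetric: the upper end lies further from 200 person days than the lower end does, which fits the point that overestimates and underestimates need not be of equal size.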

Gamma Distribution § $f(x) = \alpha (\alpha x)^{\beta - 1} \exp(-\alpha x) / \Gamma(\beta)$ § where $x \ge 0$, $\alpha$ and $\beta$ are both real and positive, and $\Gamma(\beta)$ is the Gamma function, which is a generalisation of the factorial function. Often $\alpha = 2$ and $\beta = 19$.
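The shape of this distribution can be explored numerically. The sketch below assumes the standard form $f(x) = \alpha(\alpha x)^{\beta-1}\exp(-\alpha x)/\Gamma(\beta)$, with $\alpha = 2$ as the rate and $\beta = 19$ as the shape; which value belongs to which symbol is an assumption, as are the function names and the integration limits.

```python
import math


def gamma_pdf(x, alpha, beta):
    """Gamma density with rate alpha and shape beta (assumes beta >= 1)."""
    if x < 0:
        return 0.0
    return alpha * (alpha * x) ** (beta - 1) * math.exp(-alpha * x) / math.gamma(beta)


def integrate(func, lower, upper, steps):
    """Simple left Riemann sum, adequate for a smooth density like this one."""
    width = (upper - lower) / steps
    return sum(func(lower + i * width) for i in range(steps)) * width


ALPHA, BETA = 2.0, 19.0  # assumed assignment of the two quoted values

total_probability = integrate(lambda x: gamma_pdf(x, ALPHA, BETA), 0.0, 40.0, 4000)
mean_value = integrate(lambda x: x * gamma_pdf(x, ALPHA, BETA), 0.0, 40.0, 4000)
mode_value = (BETA - 1) / ALPHA  # peak of the density when beta > 1
```

Under these assumptions the peak sits slightly below the mean, so the distribution has a longer tail on the high side, which matches the observation that overestimates are not naturally bounded while underestimates are.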


Assumption Error § Occurs when we make incorrect assumptions. For example, the estimate of 1,000 function points is based on the assumption that we have correctly identified all of the customer’s requirements. § If you can identify the assumptions, you can determine the risk associated with them. § If there is a 10% probability that the requirements complexity has been underestimated, and if it has you estimate that another 100 function points will be required, you obtain the estimate’s risk exposure: § Risk exposure $= (E_2 - E_1) \times P_2$ § $E_1$ = effort if the original assumption is true. § $E_2$ = effort if the alternative assumption is true. § $P_2$ = probability that the alternative assumption is true.
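Worked through with the numbers above, the formula gives a concrete contingency figure. This sketch assumes the same 0.2 person days per function point used in the earlier estimation examples; the function and variable names are illustrative.

```python
def risk_exposure(e1, e2, p2):
    """Risk exposure = (E2 - E1) * P2, in the same units as the efforts."""
    return (e2 - e1) * p2


DAYS_PER_FP = 0.2  # assumed conversion rate, carried over from the earlier examples

e1 = 1000 * DAYS_PER_FP          # effort if the original assumption holds
e2 = (1000 + 100) * DAYS_PER_FP  # effort if another 100 function points are needed
p2 = 0.10                        # probability that the alternative is true

exposure_days = risk_exposure(e1, e2, p2)
```

The result is about 2 person days, which is much less than the 20 extra person days that would actually be needed if the alternative assumption turned out to be true.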

Contingency § This risk exposure corresponds to the contingency you need to protect yourself against the assumption error. § The probabilistic nature of risk means that the contingency will not protect a project if the original assumption is invalid. § Risk exposure and model error are independent. § If you include uncertainty in the model, then you should not include it in the risk assessment. § Do not double count the uncertainty/risk.

Contingency § Risk management should be across the entire organisation’s project portfolio. § The organisation should hold all of the contingency days in a “central fund” which can be drawn on by projects as needed. This is similar to the philosophy used by insurance companies.
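A small calculation shows why pooling helps. The portfolio below is entirely hypothetical: ten projects, each with a 10% chance of needing 20 extra person days, chosen only to make the arithmetic easy to follow.

```python
# Hypothetical portfolio: (probability of overrun, extra person days if it happens).
portfolio = [(0.10, 20.0)] * 10

# Each project's own contingency is its risk exposure.
per_project_contingency = [p * extra for p, extra in portfolio]

# The central fund holds the contingency days of every project.
central_fund = sum(per_project_contingency)

# Expected number of projects that will actually overrun.
expected_overruns = sum(p for p, _ in portfolio)

single_overrun = portfolio[0][1]
```

In this example a project holding only its own contingency (about 2 person days) could not absorb a 20-day overrun, whereas the central fund (about 20 person days) can cover roughly the one overrun expected across the portfolio.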