Real-Time Animation of Realistic Virtual Humans

- Slides: 40


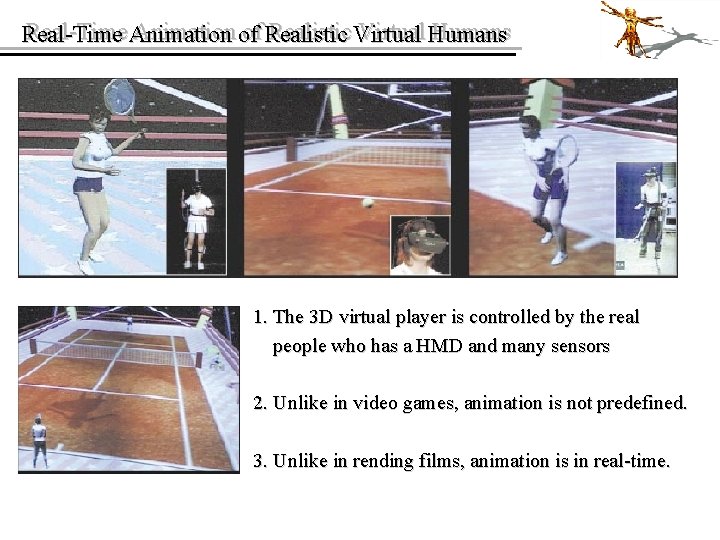

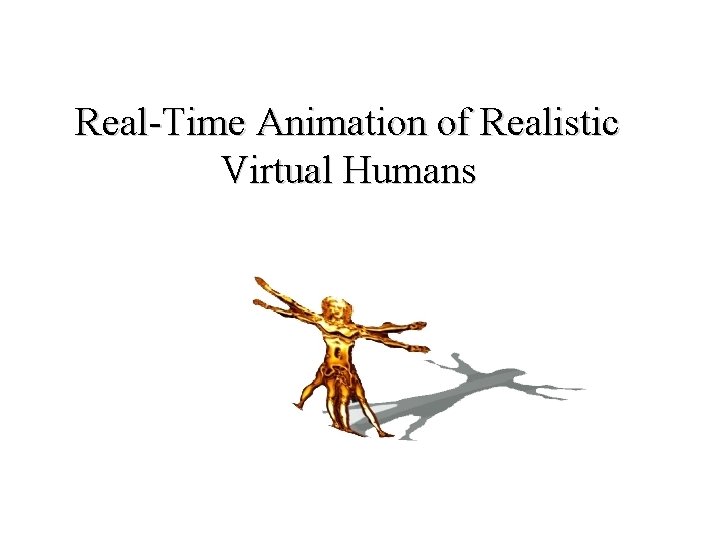

1. The 3D virtual player is controlled by a real person wearing a head-mounted display (HMD) and a number of sensors.
2. Unlike in video games, the animation is not predefined.
3. Unlike in rendered films, the animation runs in real time.

To build a real-time virtual human application, we need to consider:

1. Modeling people.
2. How to represent deformation of the human body.
3. Motion control.

1. Body Creation and Skeleton Animation - Zhou Bin
2. Facial Deformation - Hu Yi
3. Body Deformation and Animation Framework - Yang Yufei

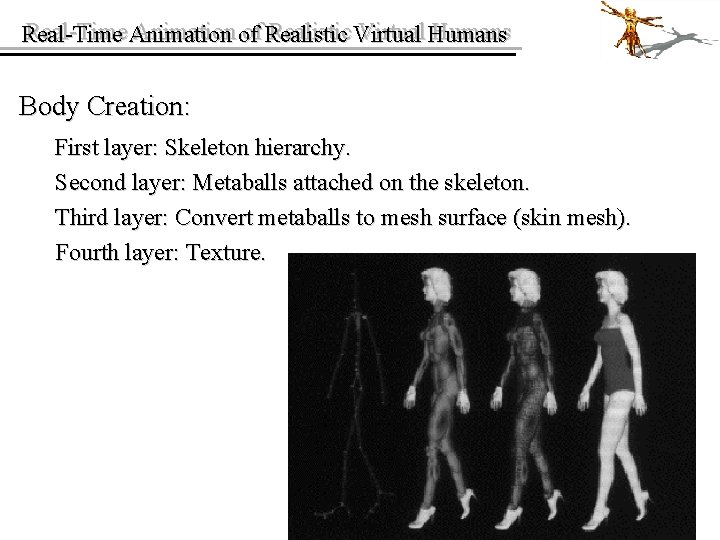

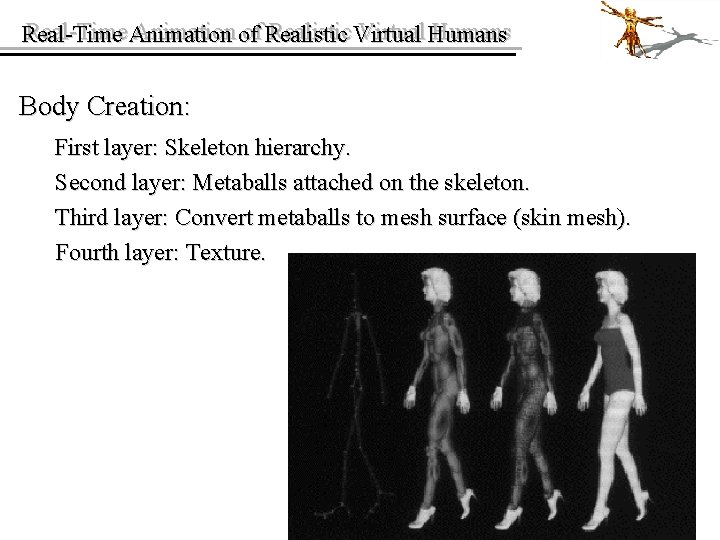

Body Creation:

- First layer: skeleton hierarchy.
- Second layer: metaballs attached to the skeleton.
- Third layer: metaballs converted to a mesh surface (skin mesh).
- Fourth layer: texture.

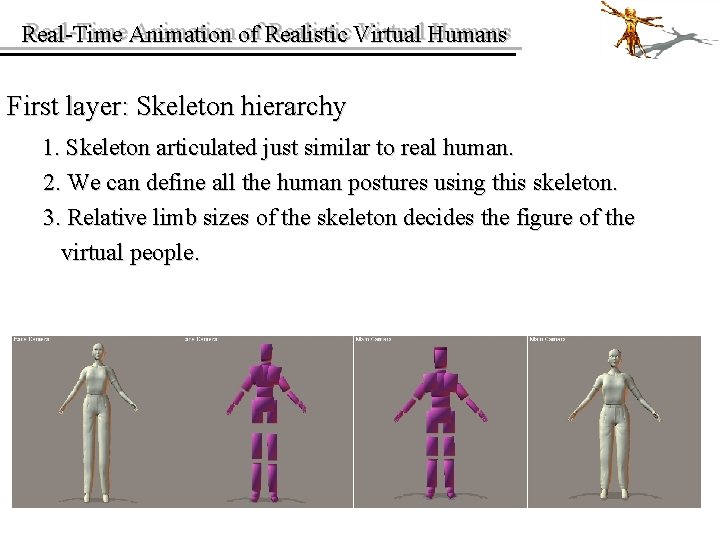

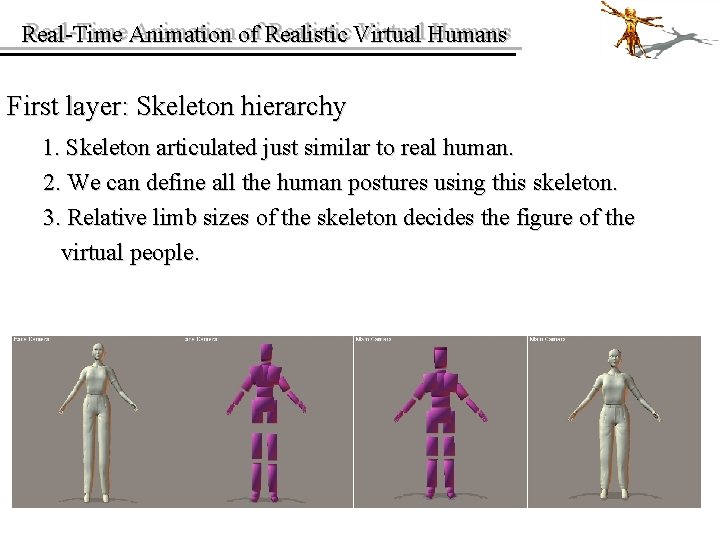

First layer: Skeleton hierarchy

1. The skeleton is articulated much like that of a real human.
2. All human postures can be defined using this skeleton.
3. The relative limb sizes of the skeleton determine the figure of the virtual person.
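A minimal sketch of such a hierarchy, assuming a planar (2D) arm for brevity. The `Joint` class, the joint names, and the limb lengths are illustrative assumptions, not values from the slides. Each joint stores its distance from its parent (the limb size) and a rotation that is inherited by its children, so a posture is just a set of joint angles.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Joint:
    name: str
    offset: float              # limb length from the parent joint to this joint
    angle: float = 0.0         # rotation of this joint in radians, inherited by children
    children: list = field(default_factory=list)


def world_positions(joint, parent_pos=(0.0, 0.0), parent_angle=0.0, out=None):
    """Walk the hierarchy and return {joint name: (x, y)} in world space."""
    if out is None:
        out = {}
    # The joint sits at the end of the limb that leaves its parent.
    x = parent_pos[0] + joint.offset * math.cos(parent_angle)
    y = parent_pos[1] + joint.offset * math.sin(parent_angle)
    out[joint.name] = (x, y)
    # Children see the accumulated rotation of all their ancestors.
    for child in joint.children:
        world_positions(child, (x, y), parent_angle + joint.angle, out)
    return out


# Hypothetical arm: the lengths below are made up for illustration.
wrist = Joint("wrist", offset=0.25)
elbow = Joint("elbow", offset=0.30, children=[wrist])
shoulder = Joint("shoulder", offset=0.0, children=[elbow])
```

Changing `elbow.angle` bends the forearm without touching the upper arm, and changing an `offset` changes the proportions of the figure.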

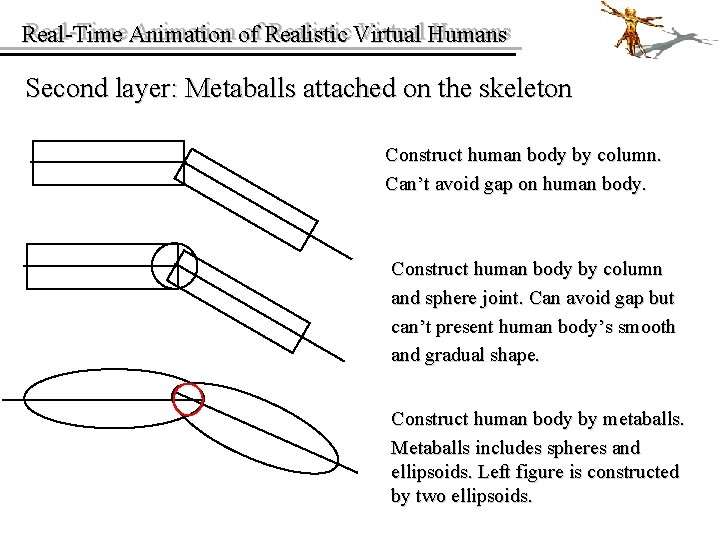

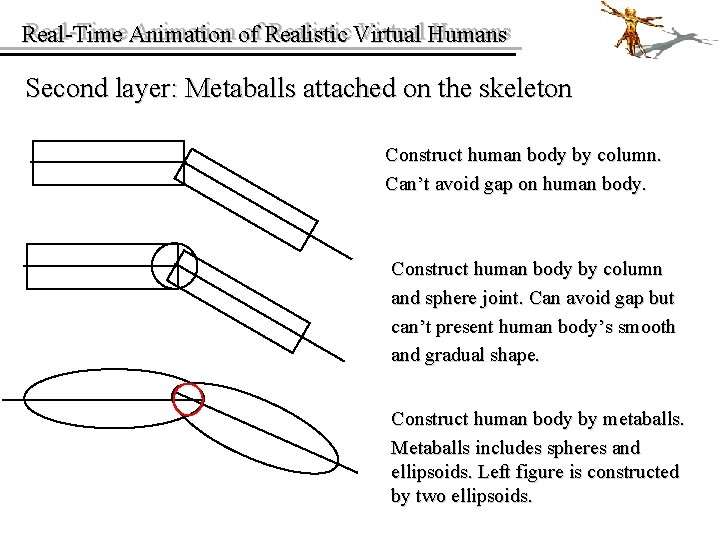

Second layer: Metaballs attached to the skeleton

- Constructing the body from cylinders: gaps on the body cannot be avoided.
- Constructing the body from cylinders with sphere joints: gaps are avoided, but the smooth, gradual shape of the human body cannot be represented.
- Constructing the body from metaballs: metaballs include spheres and ellipsoids. The figure on the left is constructed from two ellipsoids.

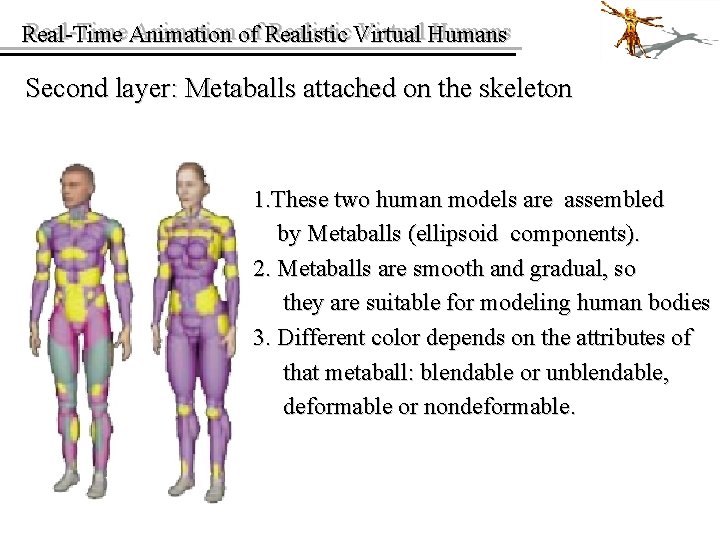

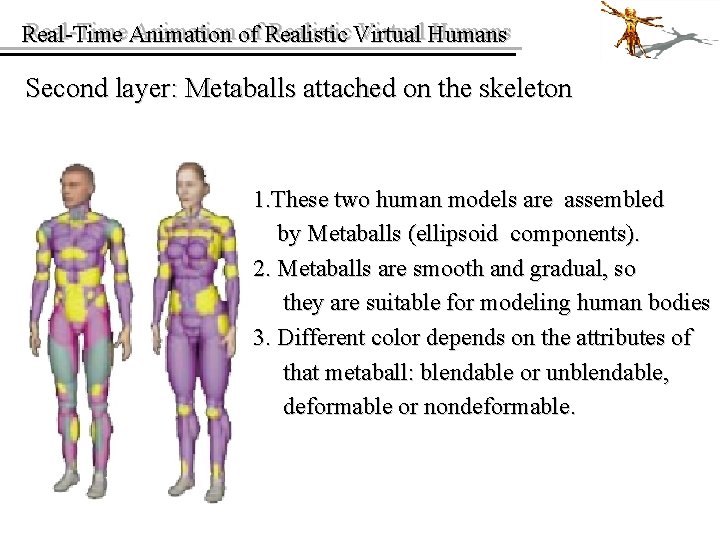

Second layer: Metaballs attached to the skeleton (cont.)

1. These two human models are assembled from metaballs (ellipsoid components).
2. Metaballs blend smoothly and gradually, so they are suitable for modeling human bodies.
3. The color of each metaball indicates its attributes: blendable or unblendable, deformable or nondeformable.
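To illustrate why metaballs give a smooth, gap-free shape, here is a small sketch of blending as an implicit field. The falloff function `(1 - d^2)^2`, the threshold of 0.25, and the two example ellipsoids are assumptions chosen for illustration; the slides do not specify the field function actually used.

```python
def field_value(p, metaballs):
    """Sum the contributions of all metaballs at point p.

    Each metaball is (center, radii), where radii are the ellipsoid's
    influence radii along x, y, z. The falloff is an assumed polynomial.
    """
    total = 0.0
    for center, radii in metaballs:
        # Squared distance in the ellipsoid's normalized space.
        d2 = sum(((p[k] - center[k]) / radii[k]) ** 2 for k in range(3))
        if d2 < 1.0:
            total += (1.0 - d2) ** 2
    return total


def is_inside(p, metaballs, threshold=0.25):
    """A point is inside the body where the summed field reaches the threshold."""
    return field_value(p, metaballs) >= threshold


# Two hypothetical metaballs placed side by side.
left = ((-0.8, 0.0, 0.0), (1.0, 1.0, 1.0))
right = ((0.8, 0.0, 0.0), (1.0, 1.0, 1.0))
```

With these values the midpoint between the two metaballs lies outside each one taken alone but inside their blended surface, which is how the gap between neighboring primitives gets filled.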

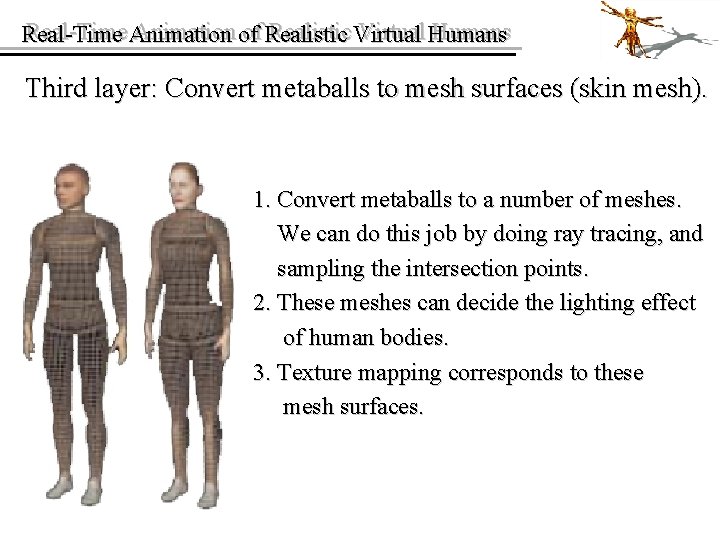

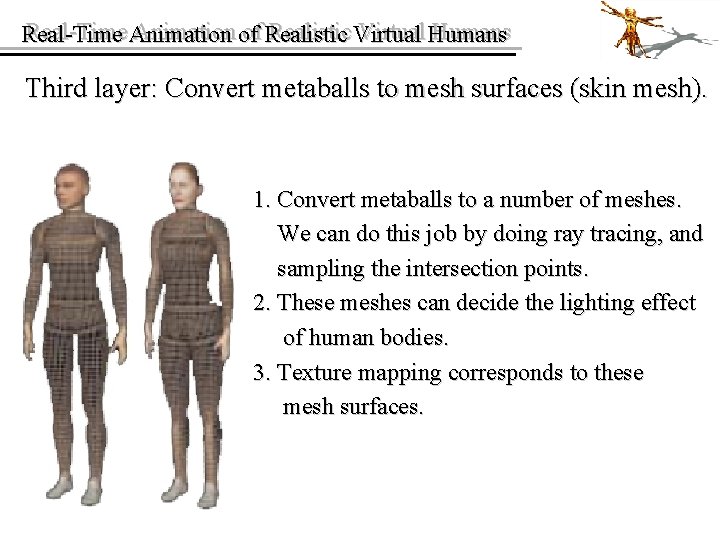

Third layer: Convert metaballs to mesh surfaces (skin mesh)

1. Convert the metaballs to a number of meshes by ray tracing and sampling the intersection points.
2. These meshes determine the lighting of the body.
3. Texture mapping is applied to these mesh surfaces.
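One possible way to sample intersection points is sketched below. It casts rays outward from a point on the limb axis within a cross-section plane and bisects along each ray to find where the metaball field crosses a threshold. The field function, the threshold, the choice of the z axis as the limb axis, and the bisection search are all assumptions made for this sketch.

```python
import math


def field_value(p, metaballs):
    """Assumed metaball field: sum of (1 - d^2)^2 over ellipsoids (center, radii)."""
    total = 0.0
    for center, radii in metaballs:
        d2 = sum(((p[k] - center[k]) / radii[k]) ** 2 for k in range(3))
        if d2 < 1.0:
            total += (1.0 - d2) ** 2
    return total


def sample_contour(metaballs, z, n_rays=8, threshold=0.25, max_dist=5.0, iters=40):
    """Return n_rays surface points in the plane z = const.

    Assumes the axis point (0, 0, z) is inside the surface, the point at
    max_dist is outside, and the field decreases along each ray.
    """
    points = []
    for k in range(n_rays):
        angle = 2.0 * math.pi * k / n_rays
        dx, dy = math.cos(angle), math.sin(angle)
        lo, hi = 0.0, max_dist
        for _ in range(iters):
            mid = 0.5 * (lo + hi)
            if field_value((mid * dx, mid * dy, z), metaballs) >= threshold:
                lo = mid   # still inside, move outward
            else:
                hi = mid   # outside, move inward
        points.append((lo * dx, lo * dy, z))
    return points
```

Each call yields one closed contour of sample points; stacking contours along the limb gives the data from which a skin mesh can be built.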

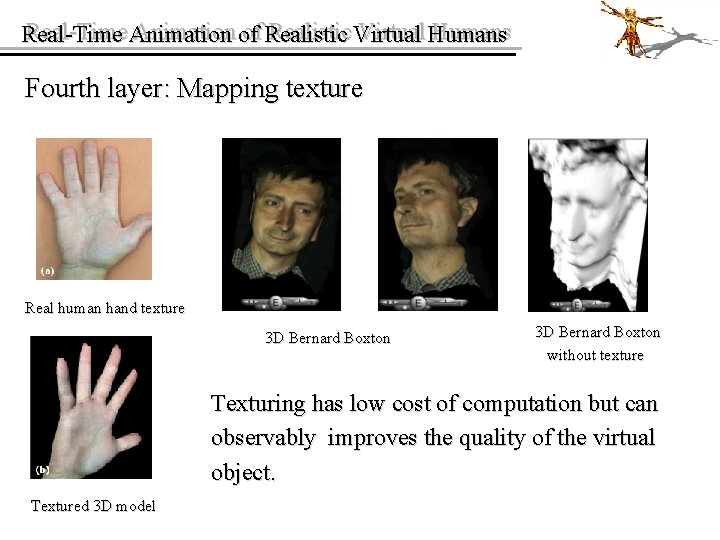

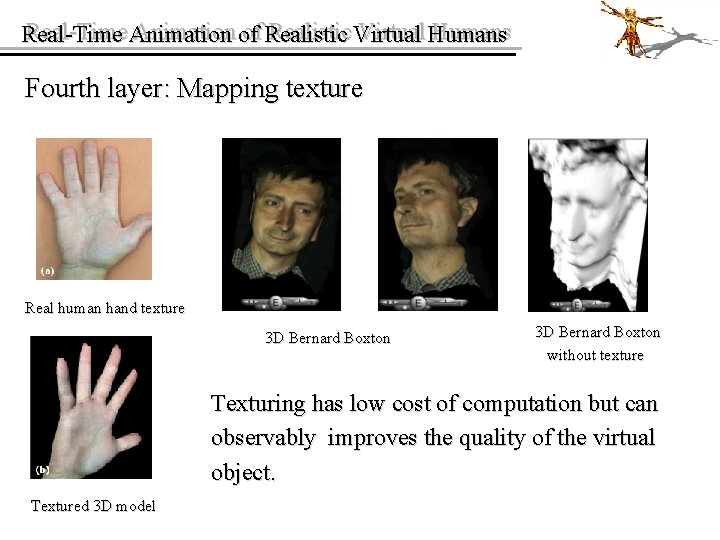

Fourth layer: Texture mapping

Figure labels: real human hand texture; 3D Bernard Boxton without texture; textured 3D model.

Texturing has a low computational cost but noticeably improves the quality of the virtual object.

Body Animation:

- First layer: skeleton motion. Lets avatars take postures and perform actions.
- Second layer: mesh surface (skin mesh) deformation. Represents the deformation of human skin.

Skeleton:

1. The skeleton hierarchy is defined by a set of joints, which correspond to the main joints of a real human.
2. Each joint has a set of degrees of freedom (DOFs). The DOFs determine the ranges over which the joint can translate and rotate.
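A joint's degrees of freedom and their ranges can be sketched as a small data structure. The class name `DOF`, the field names, and the elbow range below are hypothetical; the slides do not give concrete limits.

```python
from dataclasses import dataclass


@dataclass
class DOF:
    name: str
    min_value: float   # lower limit of the allowed range
    max_value: float   # upper limit of the allowed range
    value: float = 0.0

    def set(self, requested):
        """Clamp the requested value to the allowed range and store it."""
        self.value = min(self.max_value, max(self.min_value, requested))
        return self.value


# Hypothetical elbow flexion range in degrees, for illustration only.
elbow_flexion = DOF("elbow_flexion", min_value=0.0, max_value=150.0)
```

Clamping at this level keeps motion data, whether captured or computed, from driving the skeleton into postures outside the joint's range.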

Three methods of skeleton motion control:

1. The skeleton motion is captured in real time and drives the avatar.
2. The skeleton motion is predefined and is retrieved from a database in response to the user’s input, e.g. Sony’s EyeToy.
3. The skeleton motion is computed dynamically and does not require the user’s continual intervention, e.g. complex games and AI applications.

- Human Head Modeling
- Facial Animation

Simulating humans requires real-time visualization and animation.

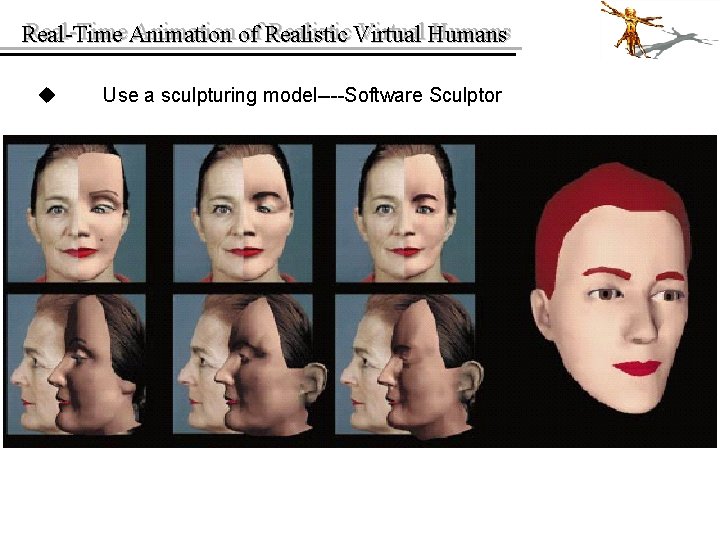

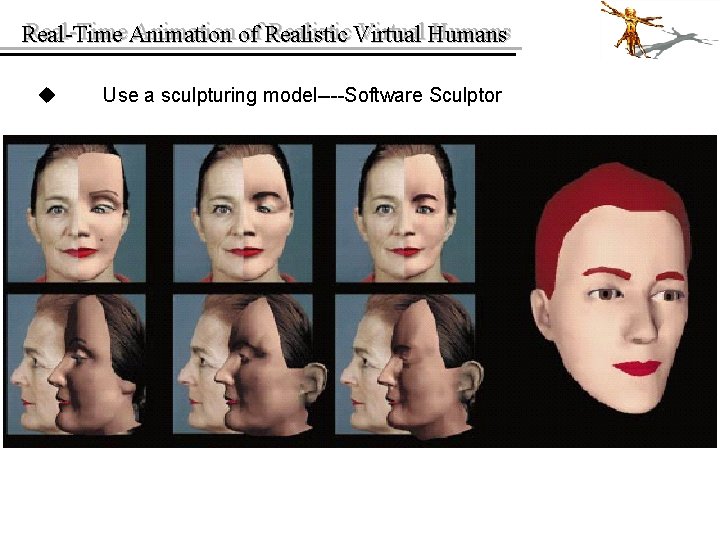

Human Head Modeling

- Scanning: scan the surface of the head and construct a head model.
- Sculpting: use a sculpting tool (the Sculptor software).

Human Head Modeling: using a sculpting tool (the Sculptor software)

Facial Animation

- Facial deformation model
- Facial motion control

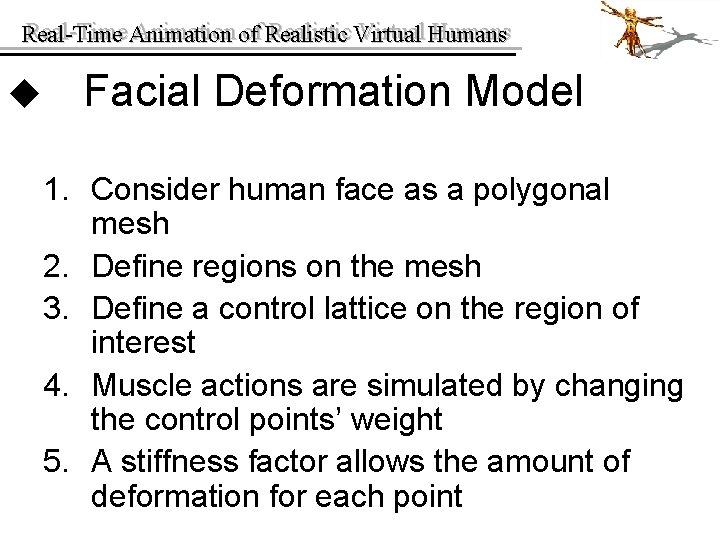

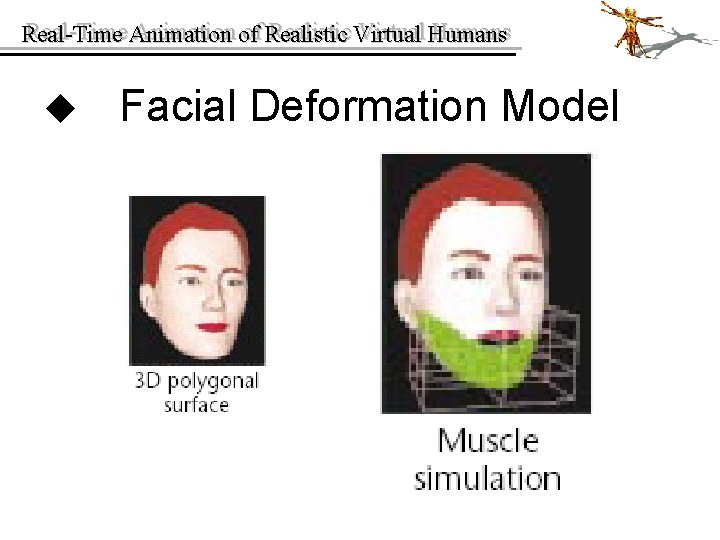

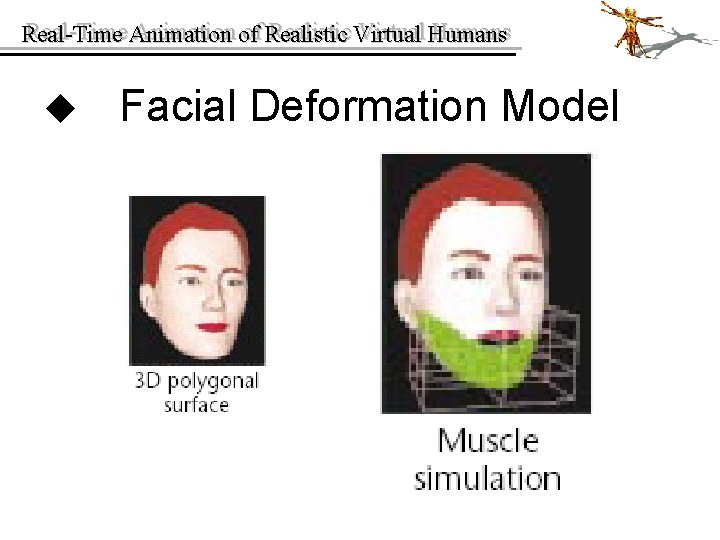

Facial Deformation Model

1. Treat the human face as a polygonal mesh.
2. Define regions on the mesh.
3. Define a control lattice on the region of interest.
4. Simulate muscle actions by changing the weights of the control points.
5. A stiffness factor controls the amount of deformation allowed for each point.
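One way to make weighted control points concrete is a rational free-form deformation, sketched here in one dimension. Treating the lattice as a rational Bezier basis, and treating stiffness as a blend factor between the rest and deformed positions, are assumptions made for this illustration; the slides only state that weights drive the deformation and that stiffness limits it.

```python
from math import comb


def rffd_1d(t, control_xs, weights):
    """Position of a vertex with lattice coordinate t in [0, 1].

    Uses a rational Bernstein basis: raising a control point's weight
    pulls nearby vertices toward that control point.
    """
    n = len(control_xs) - 1
    num = 0.0
    den = 0.0
    for i, (x, w) in enumerate(zip(control_xs, weights)):
        b = comb(n, i) * t ** i * (1.0 - t) ** (n - i) * w
        num += b * x
        den += b
    return num / den


def deform_vertex(t, control_xs, weights, stiffness):
    """Blend rest and deformed positions (assumed stiffness model).

    stiffness = 1.0 keeps the vertex at rest; 0.0 applies the full deformation.
    """
    rest = rffd_1d(t, control_xs, [1.0] * len(control_xs))
    moved = rffd_1d(t, control_xs, weights)
    return rest + (1.0 - stiffness) * (moved - rest)


# Hypothetical lattice with three evenly spaced control points.
lattice = [0.0, 0.5, 1.0]
```

With all weights equal to 1 the vertex stays where it was, so a muscle action is expressed purely as a change of weights, and a stiff point such as one on the skull can be kept nearly fixed.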

Facial Deformation Model (cont.)

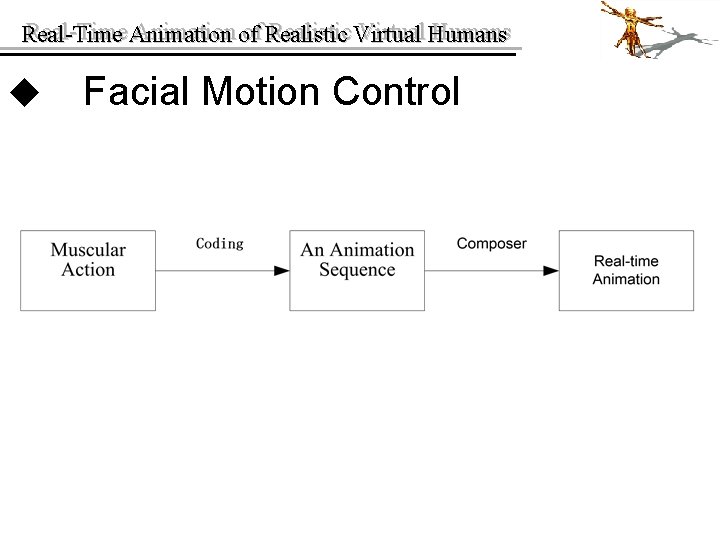

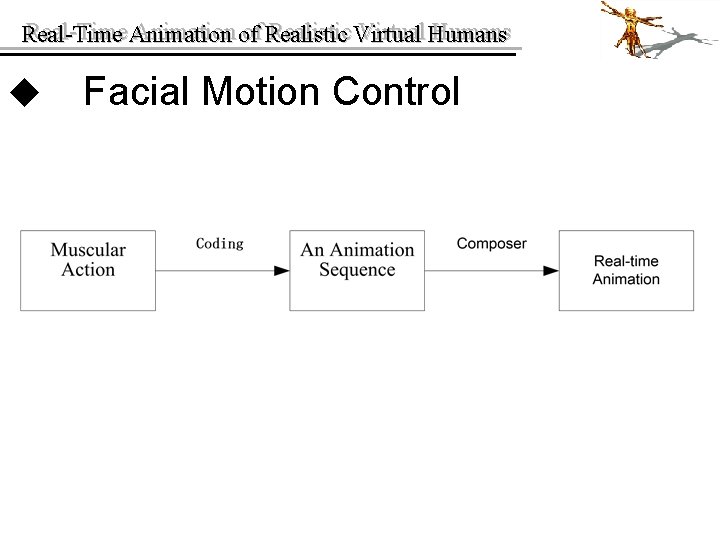

Facial Motion Control

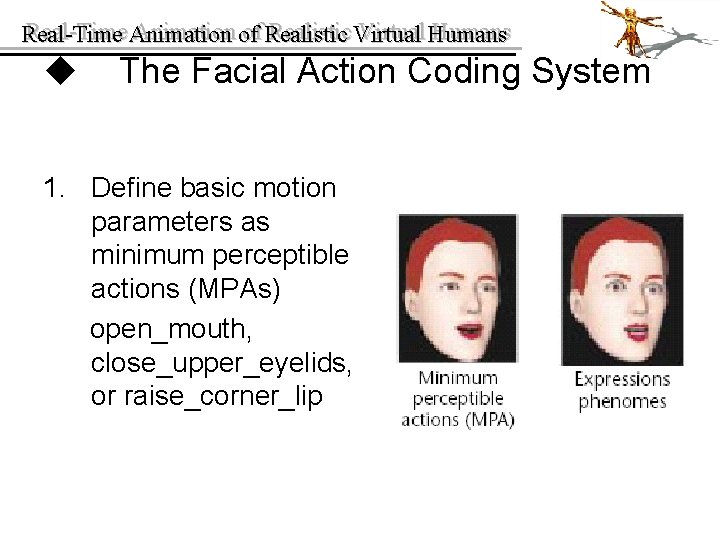

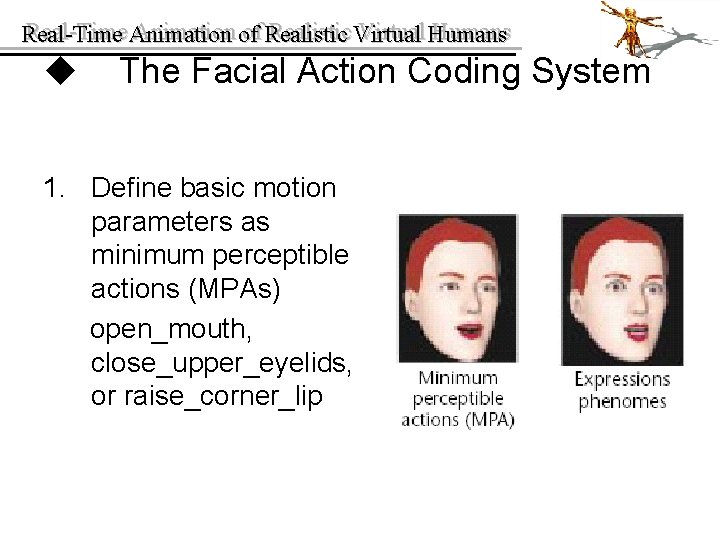

The Facial Action Coding System

1. Define basic motion parameters as minimum perceptible actions (MPAs), e.g. open_mouth, close_upper_eyelids, or raise_corner_lip.

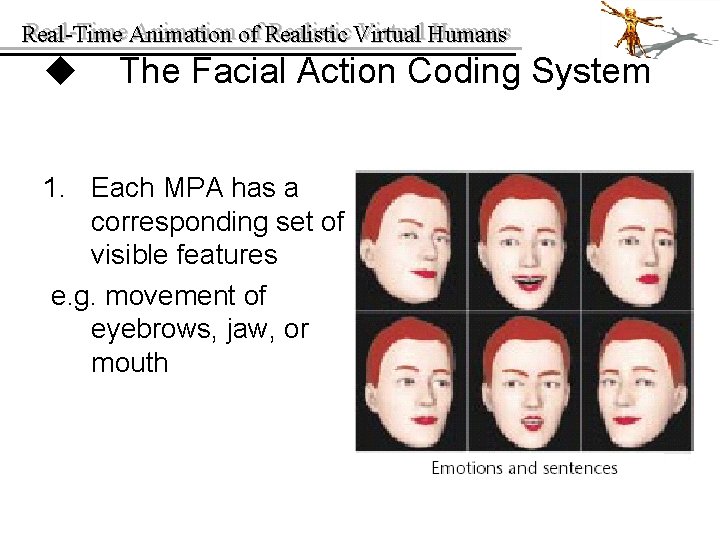

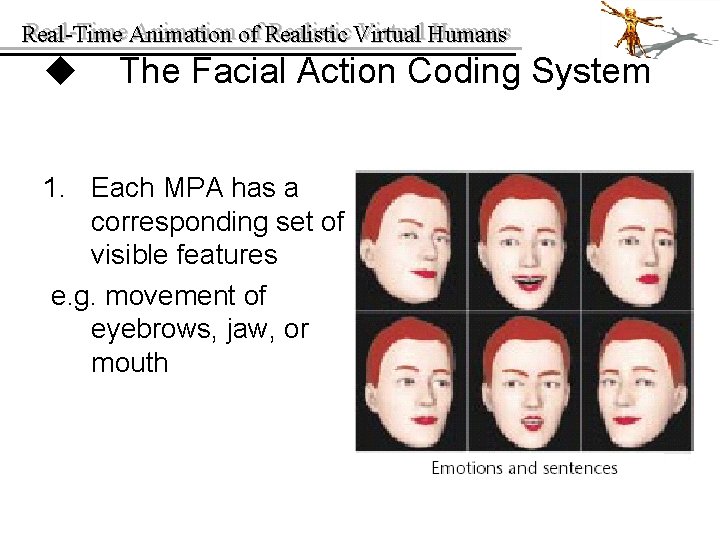

The Facial Action Coding System (cont.)

Each MPA has a corresponding set of visible features, e.g. movement of the eyebrows, jaw, or mouth.

The Facial Action Coding System (cont.)

2. The real-time facial animation module uses three different input methods:
   - Video
   - Audio or speech
   - Predefined actions

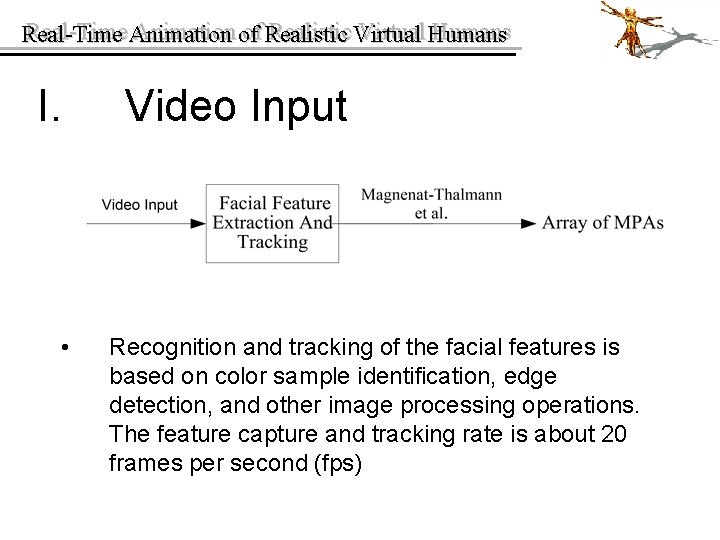

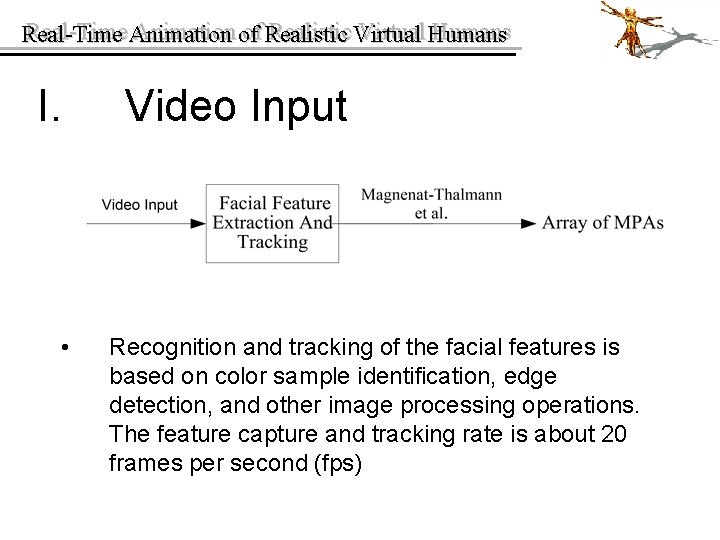

I. Video Input

Recognition and tracking of the facial features are based on color sample identification, edge detection, and other image-processing operations. The feature capture and tracking rate is about 20 frames per second (fps).

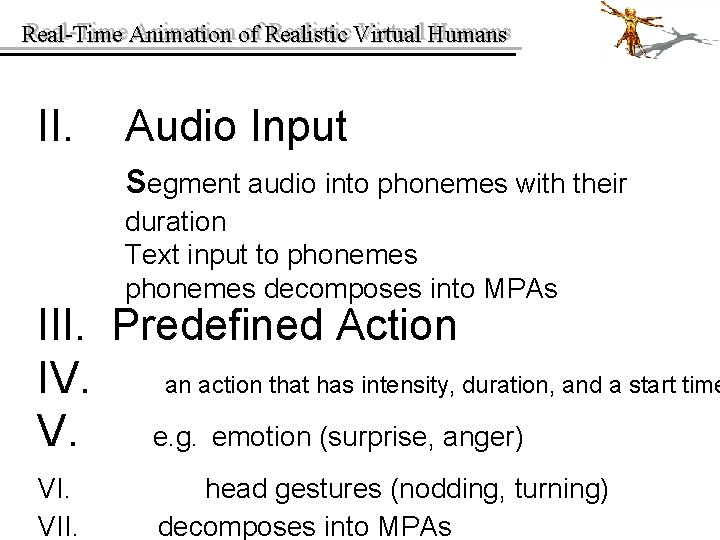

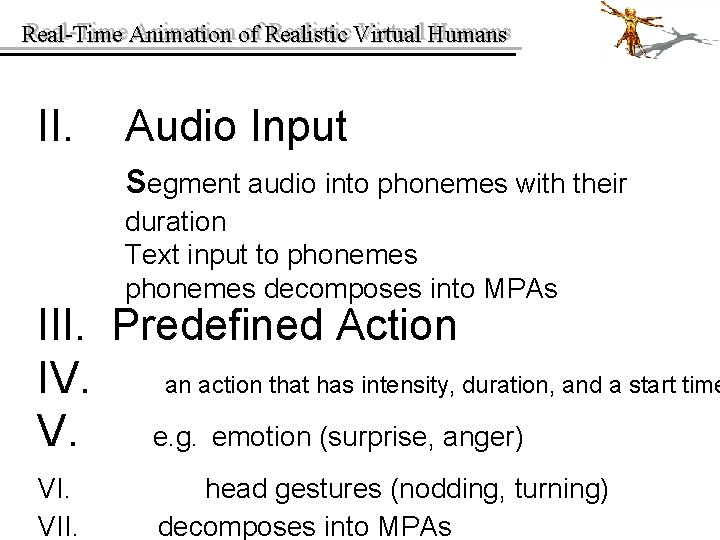

II. Audio Input

- Segment the audio into phonemes with their durations.
- Text input is likewise converted to phonemes.
- The phonemes are decomposed into MPAs.

III. Predefined Action

- An action has an intensity, a duration, and a start time.
- Examples: emotions (surprise, anger) and head gestures (nodding, turning).
- The action is decomposed into MPAs.
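The decomposition of a predefined action into MPAs can be sketched as a table lookup. The MPA names come from the slides, but the action names, the mapping table, and its intensity values are hypothetical and only show the shape of the data.

```python
from dataclasses import dataclass

# Hypothetical table: which MPAs an action drives, and how strongly.
ACTION_TO_MPAS = {
    "smile": {"raise_corner_lip": 1.0, "open_mouth": 0.2},
    "blink": {"close_upper_eyelids": 1.0},
}


@dataclass
class Action:
    name: str
    intensity: float   # overall strength of the action
    start: float       # start time in seconds
    duration: float    # duration in seconds


def decompose(action):
    """Return a list of (mpa_name, intensity, start, duration) tuples."""
    return [
        (mpa, base * action.intensity, action.start, action.duration)
        for mpa, base in ACTION_TO_MPAS[action.name].items()
    ]
```

For example, `decompose(Action("blink", 1.0, 0.0, 0.2))` yields a single `close_upper_eyelids` entry with the action's timing, which a later stage can schedule alongside MPAs from the other inputs.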

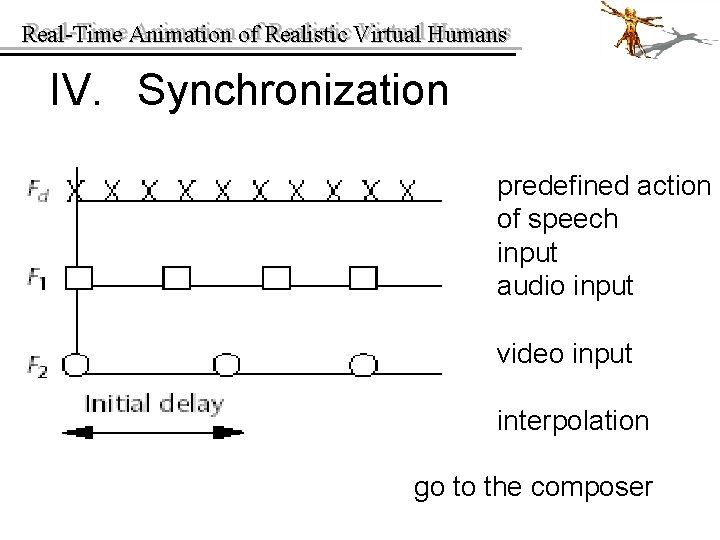

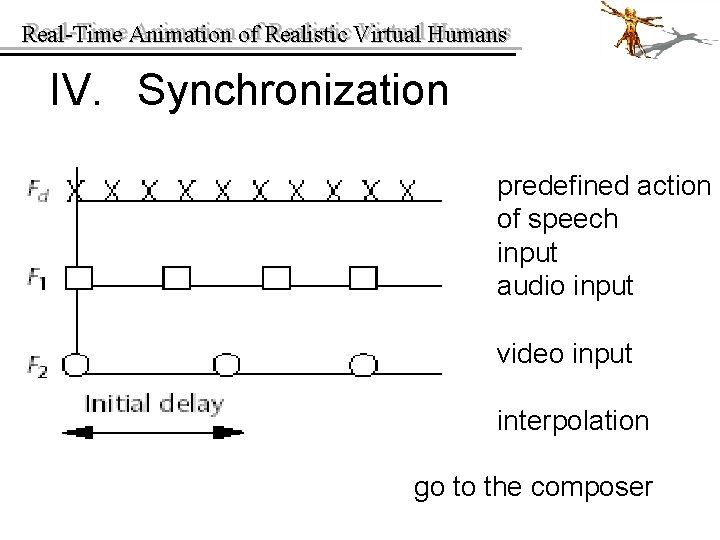

IV. Synchronization

The predefined actions, speech (audio) input, and video input are synchronized and interpolated, and the result goes to the composer.

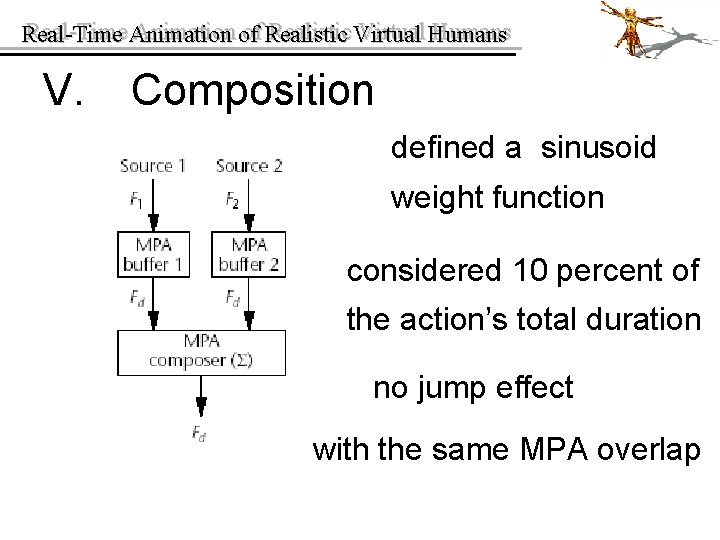

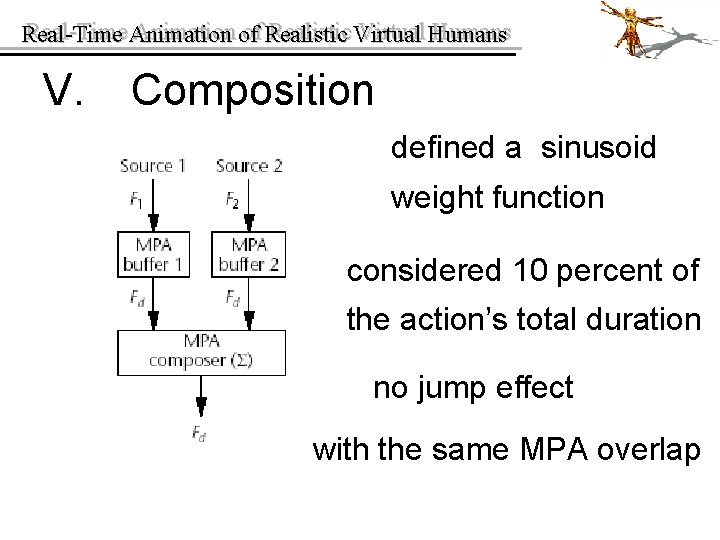

V. Composition

- A sinusoidal weight function is defined for each action.
- Its transition lasts 10 percent of the action’s total duration.
- This gives no jump effect when actions with the same MPA overlap.
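A sketch of such a weight function follows. The half-cosine ramp shape and the normalized blend in `blend_mpa` are assumptions; the slides state only that the function is sinusoidal, that 10 percent of the duration is involved, and that overlapping actions on the same MPA should not jump.

```python
import math


def action_weight(t, start, duration, ramp_fraction=0.10):
    """Weight in [0, 1]: eases in and out over ramp_fraction of the duration."""
    ramp = ramp_fraction * duration
    local = t - start
    if local <= 0.0 or local >= duration:
        return 0.0
    if local < ramp:
        # Ease in along half a cosine period.
        return 0.5 * (1.0 - math.cos(math.pi * local / ramp))
    if local > duration - ramp:
        # Ease out symmetrically.
        return 0.5 * (1.0 - math.cos(math.pi * (duration - local) / ramp))
    return 1.0


def blend_mpa(t, actions):
    """Blend (intensity, start, duration) actions that drive the same MPA.

    Assumed rule: weighted sum, normalized once the weights exceed 1.
    """
    weights = [action_weight(t, s, d) for _, s, d in actions]
    total = sum(weights)
    if total == 0.0:
        return 0.0
    weighted = sum(w * i for w, (i, _, _) in zip(weights, actions))
    return weighted / max(1.0, total)
```

Because every weight starts and ends at zero, an action that begins while another is still active changes the blended MPA value gradually rather than abruptly.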

- Body deformations
  - Ways of representing humans
    - Polygonal representation
    - Visual accuracy representation
    - A combination of the previous two
  - Implementation results
- Animation framework
- Two case studies

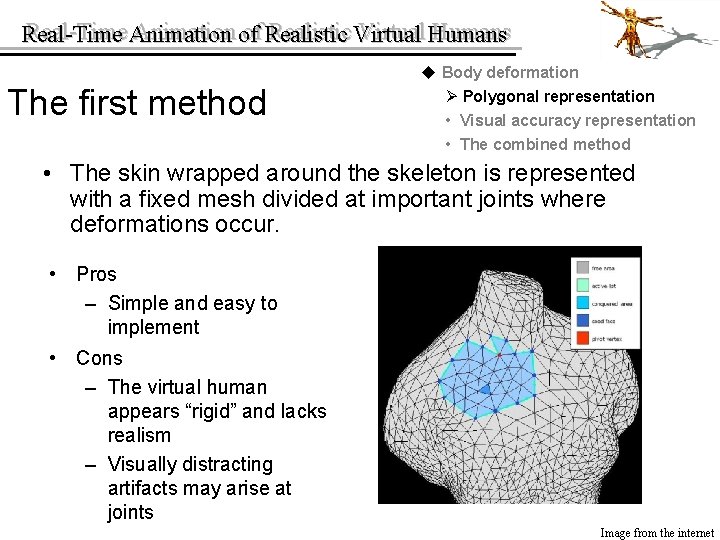

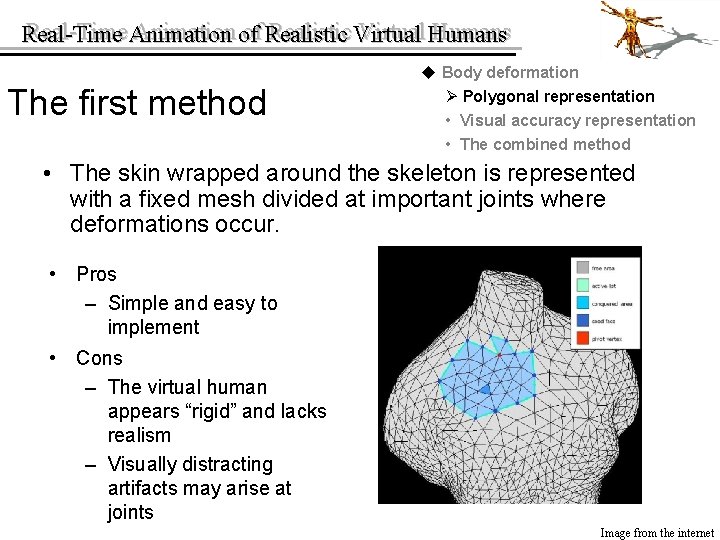

The first method: polygonal representation

- The skin wrapped around the skeleton is represented with a fixed mesh divided at the important joints where deformations occur.
- Pros
  - Simple and easy to implement
- Cons
  - The virtual human appears “rigid” and lacks realism
  - Visually distracting artifacts may arise at joints

Image from the internet

The second method: visual accuracy representation

- The application computes the skin from implicit primitives and uses a physical model to deform the body’s envelope.
- Pros
  - Stresses visual accuracy and yields very satisfactory results in terms of realism
- Cons
  - Computationally very demanding
  - Unsuitable for real-time applications

The third method: the combined method

- Combines the previous two methods, allowing a good trade-off between realism and rendering speed.
- Steps
  - Constructing a body mesh
  - Deforming by manipulating skin contours

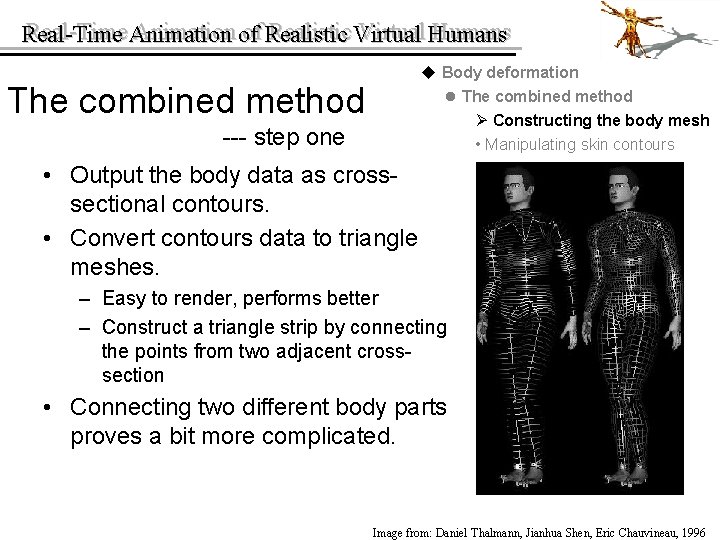

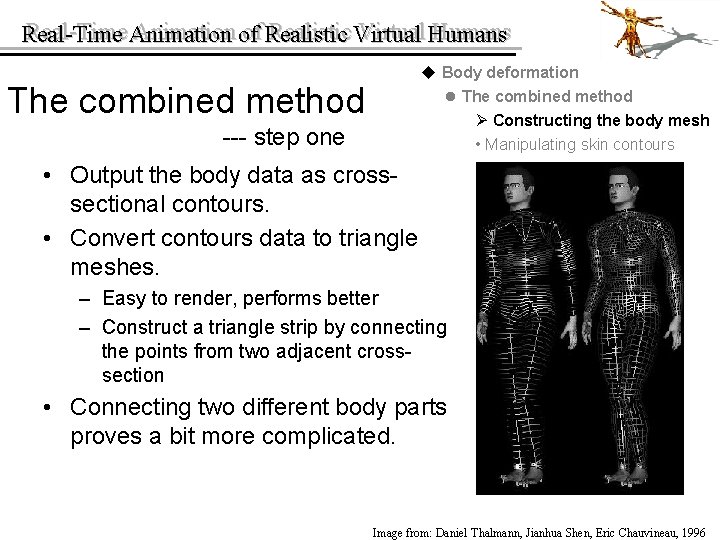

The combined method, step one: constructing the body mesh

- Output the body data as cross-sectional contours.
- Convert the contour data to triangle meshes.
  - Easy to render and performs better
  - Construct a triangle strip by connecting the points from two adjacent cross-sections
- Connecting two different body parts proves a bit more complicated.

Image from: Daniel Thalmann, Jianhua Shen, Eric Chauvineau, 1996
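The strip construction between two adjacent contours can be sketched as index generation. This assumes both contours are closed, have the same number of points, and are ordered in the same angular direction; the function names and the vertex numbering scheme are illustrative.

```python
def closed_strip(n, upper_offset=0, lower_offset=None):
    """Vertex indices of a triangle strip joining two closed n-point contours.

    Upper contour vertices are upper_offset .. upper_offset + n - 1 and the
    lower contour follows it by default. The first pair is repeated at the
    end so the strip wraps around the limb.
    """
    if lower_offset is None:
        lower_offset = upper_offset + n
    strip = []
    for k in range(n + 1):
        j = k % n
        strip.append(upper_offset + j)
        strip.append(lower_offset + j)
    return strip


def strip_triangles(strip):
    """Expand a strip into triangles, flipping every other one to keep winding."""
    triangles = []
    for i in range(len(strip) - 2):
        a, b, c = strip[i], strip[i + 1], strip[i + 2]
        triangles.append((a, b, c) if i % 2 == 0 else (b, a, c))
    return triangles
```

Two contours of `n` points give `2n` triangles from `2n + 2` strip indices, which is why strips are cheaper to submit than independent triangles.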

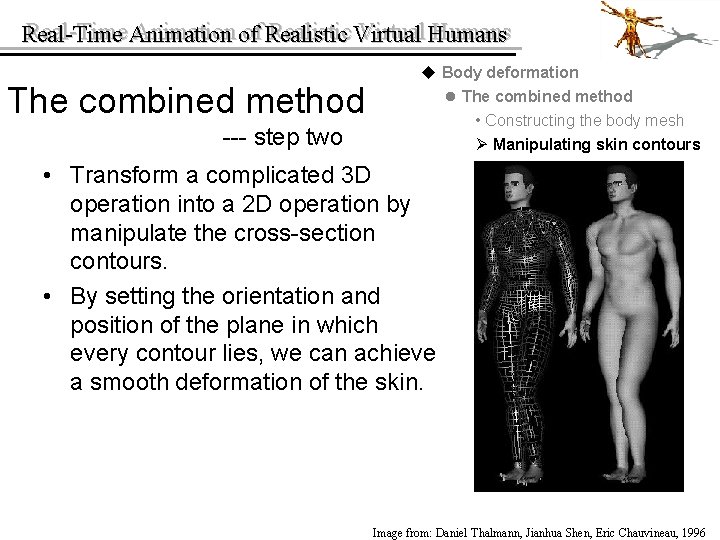

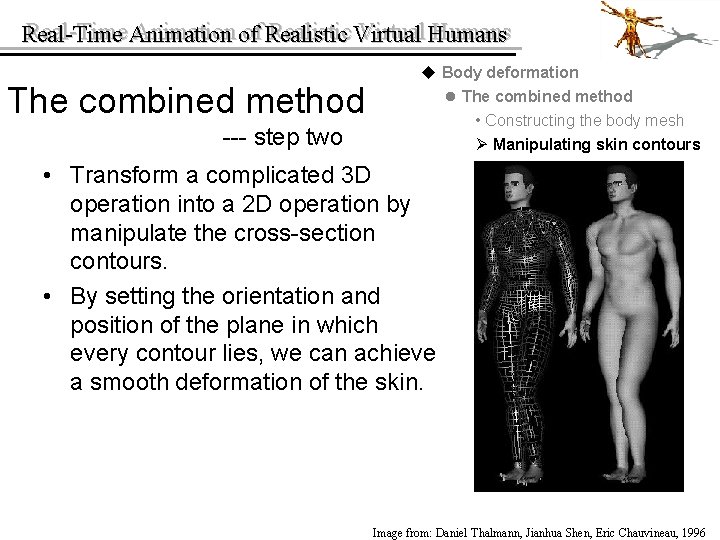

The combined method, step two: manipulating skin contours

- Transform a complicated 3D operation into a 2D operation by manipulating the cross-sectional contours.
- By setting the orientation and position of the plane in which each contour lies, we can achieve a smooth deformation of the skin.

Image from: Daniel Thalmann, Jianhua Shen, Eric Chauvineau, 1996

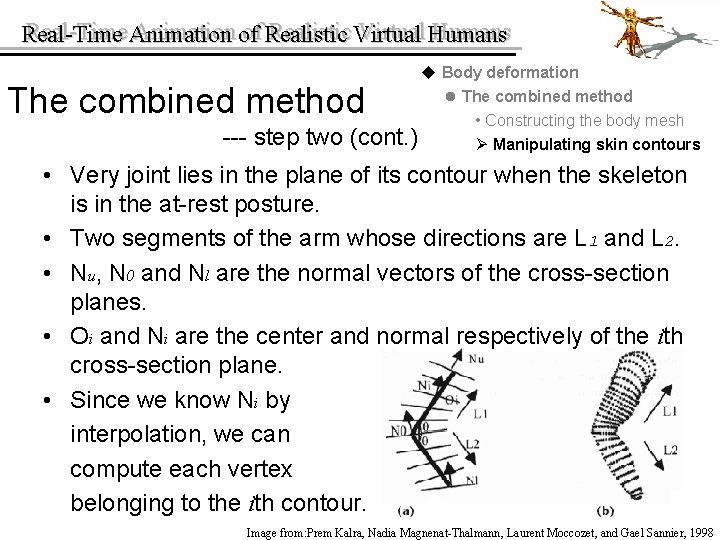

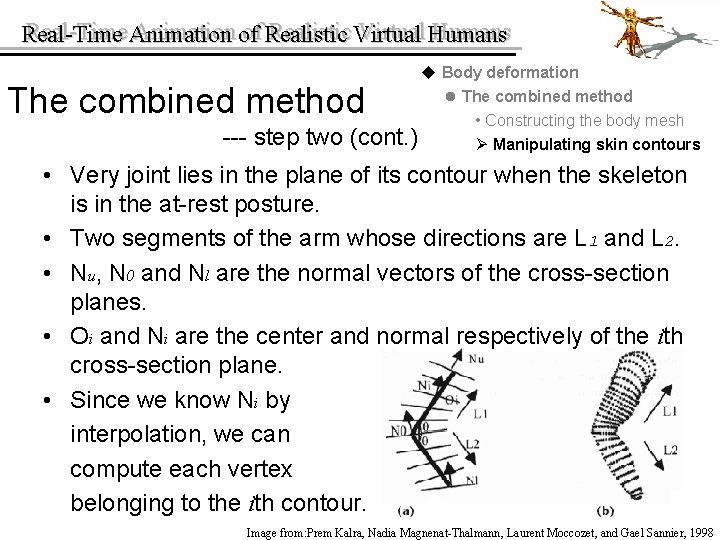

The combined method, step two: manipulating skin contours (cont.)

- Every joint lies in the plane of its contour when the skeleton is in the at-rest posture.
- The two segments of the arm have directions L1 and L2.
- Nu, N0, and Nl are the normal vectors of the cross-section planes.
- Oi and Ni are the center and normal, respectively, of the i-th cross-section plane.
- Since Ni is known by interpolation, we can compute each vertex belonging to the i-th contour.

Image from: Prem Kalra, Nadia Magnenat-Thalmann, Laurent Moccozet, and Gael Sannier, 1998
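The contour computation can be sketched as follows. Several details are assumptions made for illustration: the joint normal is taken as the normalized sum of the two segment directions, normals are interpolated linearly and renormalized, and each contour is stored as 2D points in its own plane.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def joint_normal(l1, l2):
    """Assumed N0: normal of the plane bisecting segment directions L1 and L2."""
    return normalize(tuple(a + b for a, b in zip(normalize(l1), normalize(l2))))


def interpolate_normal(n_end, n_joint, s):
    """Ni between an end normal (s = 0) and the joint normal (s = 1)."""
    return normalize(tuple((1.0 - s) * a + s * b for a, b in zip(n_end, n_joint)))


def plane_basis(normal, reference=(0.0, 0.0, 1.0)):
    """Two unit vectors spanning the plane; reference must not be parallel to normal."""
    u = normalize(cross(reference, normal))
    v = cross(normal, u)
    return u, v


def contour_vertices(center, normal, local_points):
    """Place 2D contour points (a, b) in the plane through Oi with normal Ni."""
    u, v = plane_basis(normal)
    return [tuple(center[k] + a * u[k] + b * v[k] for k in range(3))
            for a, b in local_points]
```

Only the plane's center and normal change per frame, while the 2D contour shape is reused, which is what reduces the 3D skin deformation to a 2D operation.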

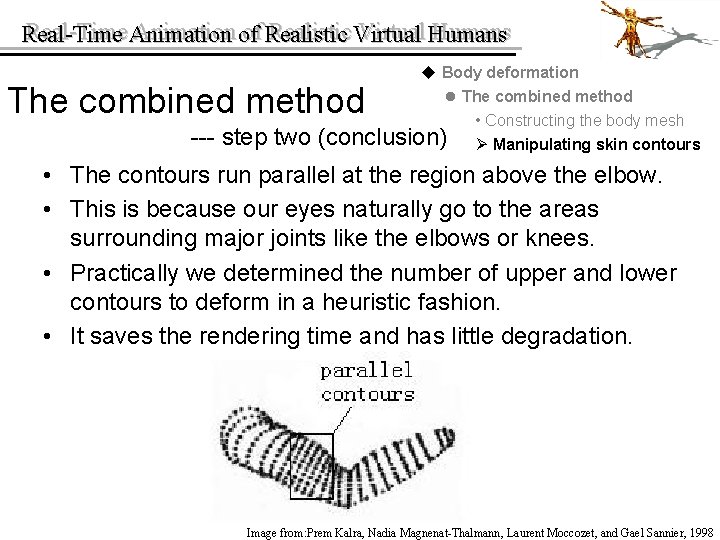

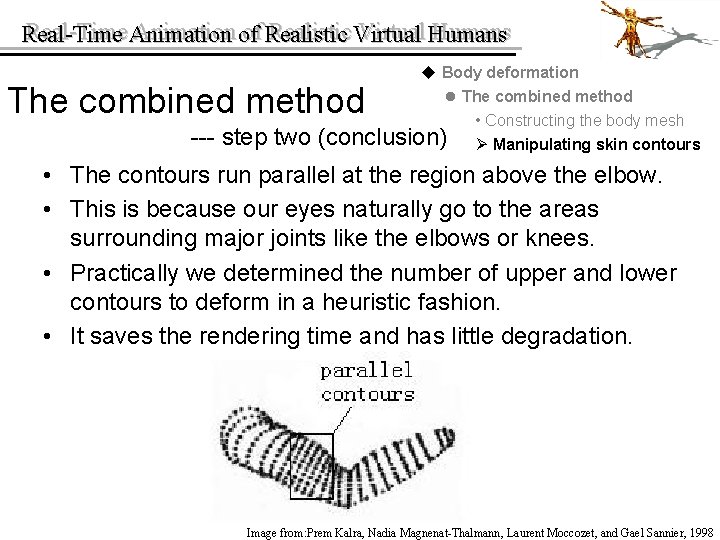

The combined method, step two: manipulating skin contours (conclusion)

- The contours stay parallel in the region above the elbow; only the contours near the joint are deformed.
- This is acceptable because our eyes naturally go to the areas surrounding major joints like the elbows or knees.
- In practice, the number of upper and lower contours to deform is determined heuristically.
- This saves rendering time with little visual degradation.

Image from: Prem Kalra, Nadia Magnenat-Thalmann, Laurent Moccozet, and Gael Sannier, 1998

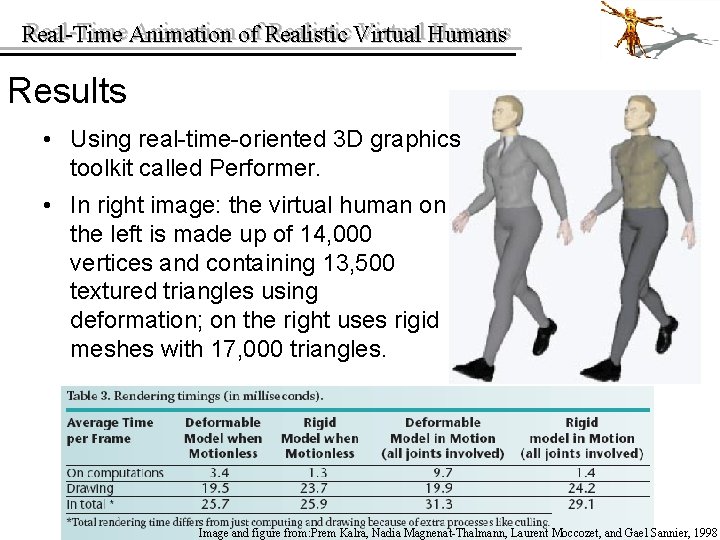

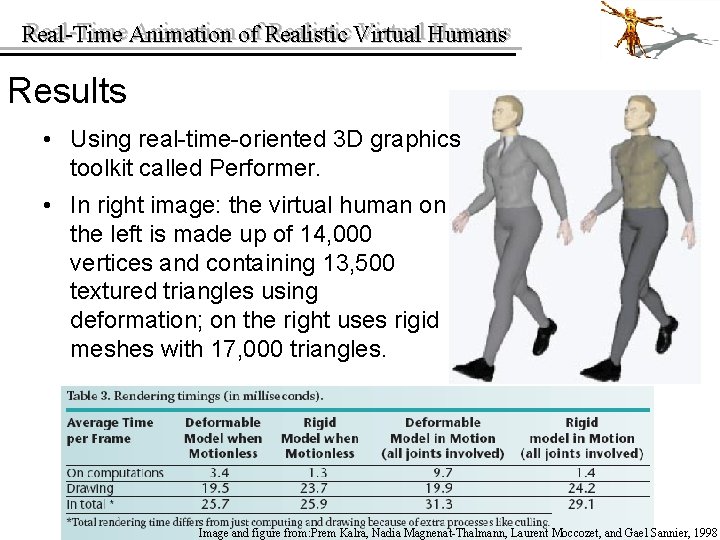

Results

- Implemented using a real-time-oriented 3D graphics toolkit called Performer.
- In the image on the right: the virtual human on the left is made up of 14,000 vertices and 13,500 textured triangles and uses deformation; the one on the right uses rigid meshes with 17,000 triangles.

Image and figure from: Prem Kalra, Nadia Magnenat-Thalmann, Laurent Moccozet, and Gael Sannier, 1998

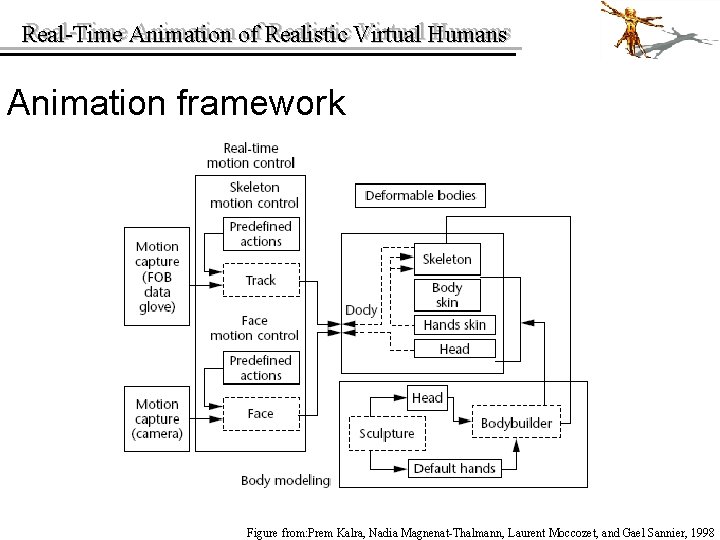

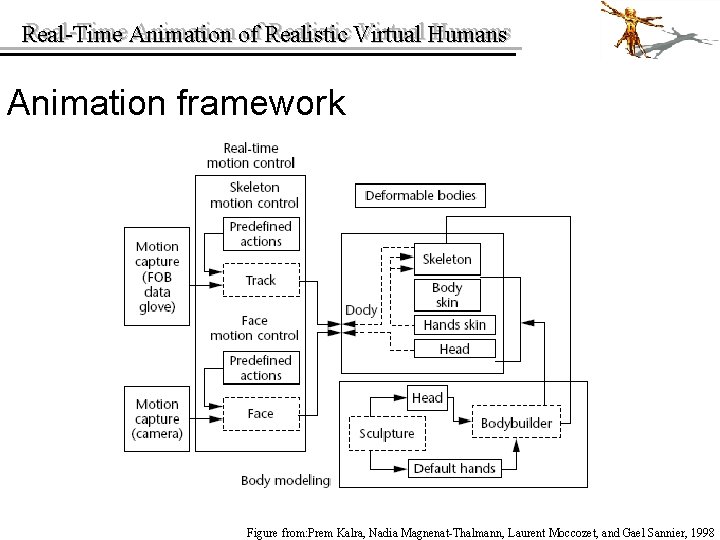

Animation framework

- Modeling and animation are closely linked.
- The system is separated into three units: modeling, deformation, and motion control.
  - Modeling provides geometrical models for the body, hands, and face.
  - Deformations are performed separately on different entities, based on the model used for each part.
  - Motion control generates and controls the movements of the different entities.

Animation framework (cont.)

Figure from: Prem Kalra, Nadia Magnenat-Thalmann, Laurent Moccozet, and Gael Sannier, 1998

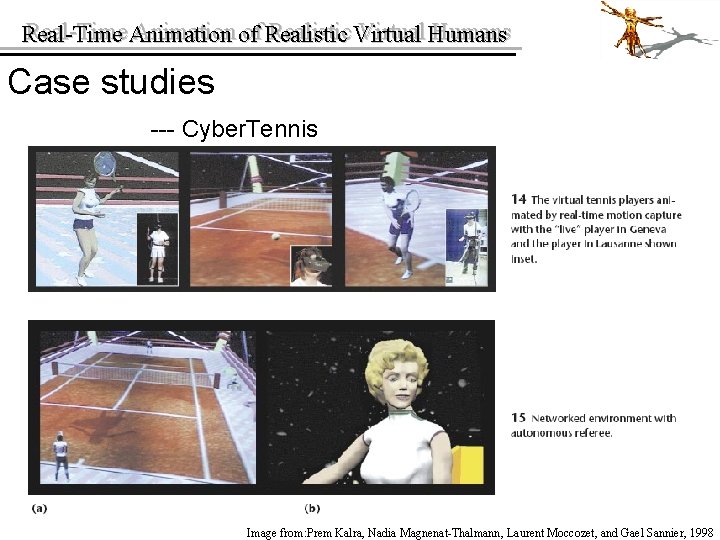

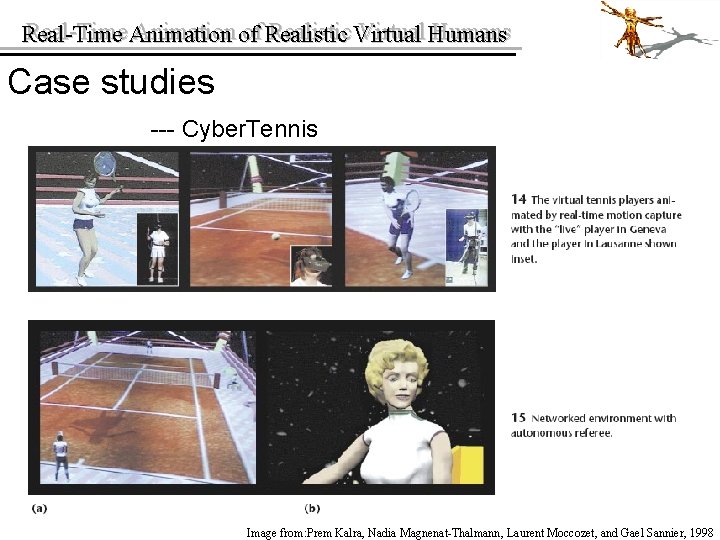

Case studies: CyberTennis

Image from: Prem Kalra, Nadia Magnenat-Thalmann, Laurent Moccozet, and Gael Sannier, 1998

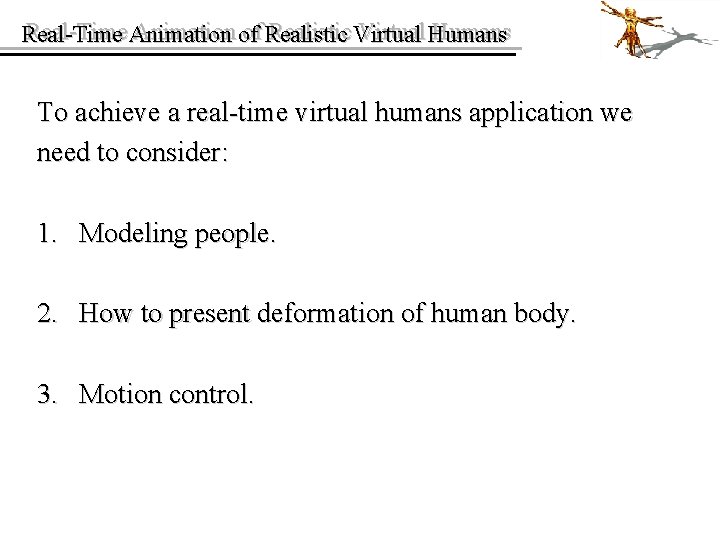

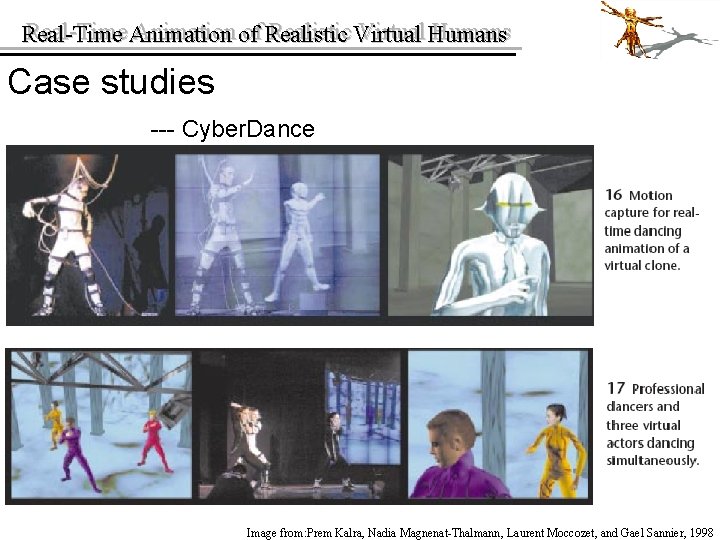

Case studies: CyberDance

Image from: Prem Kalra, Nadia Magnenat-Thalmann, Laurent Moccozet, and Gael Sannier, 1998
