Profiling and Modeling Resource Usage of Virtualized Applications

- Slides: 21

Timothy Wood¹, Lucy Cherkasova², Kivanc Ozonat², and Prashant Shenoy¹
¹ University of Massachusetts, Amherst
² HP Labs, Palo Alto
© 2006 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice.

Virtualized Data Centers
• Benefits
− Lower hardware and energy costs through server consolidation
− Capacity on demand; agile and dynamic IT
• Challenges
− Apps are characterized by a collection of resource usage traces in the native environment
− Virtualization overheads
− Effects of consolidating multiple VMs onto one host
• Important for capacity planning and efficient server consolidation

Application Virtualization Overhead
• Many research papers measure virtualization overhead but do not predict it in a general way. They report results for:
− A particular hardware platform
− A particular app/benchmark, e.g., netperf, SPEC or SPECweb, disk benchmarks
− Max throughput/latency/performance that is X% worse
− A Y% increase in CPU resources
• How do we translate these measurements into “what is the virtualization overhead for a given application”?
• New performance models are needed

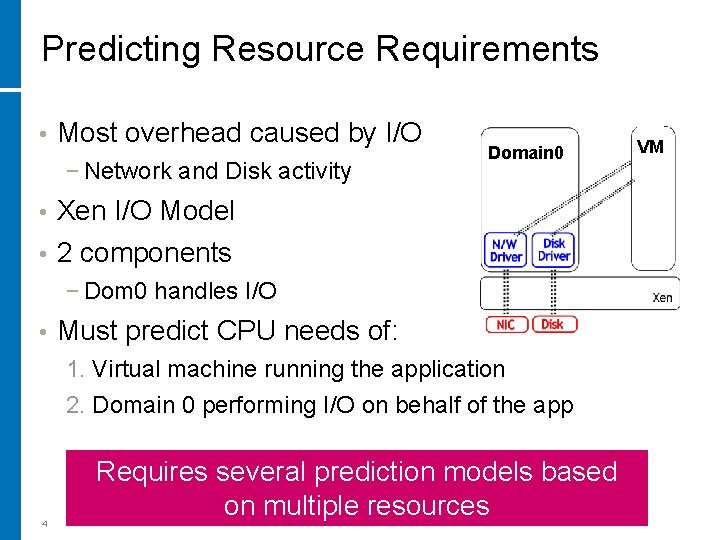

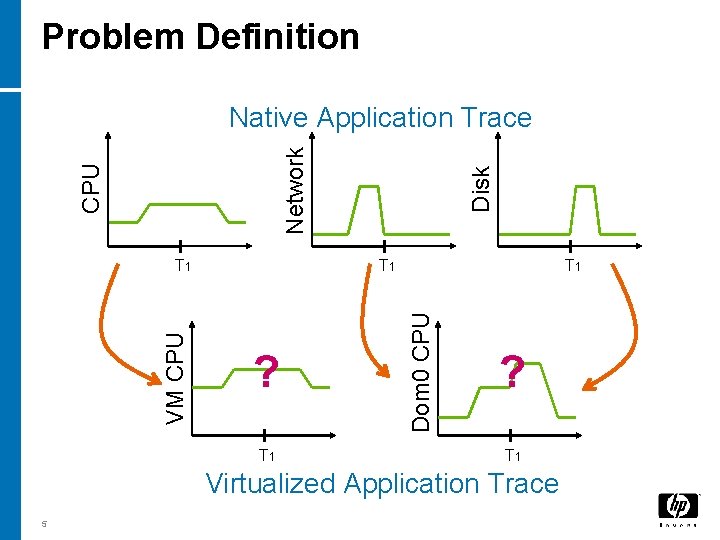

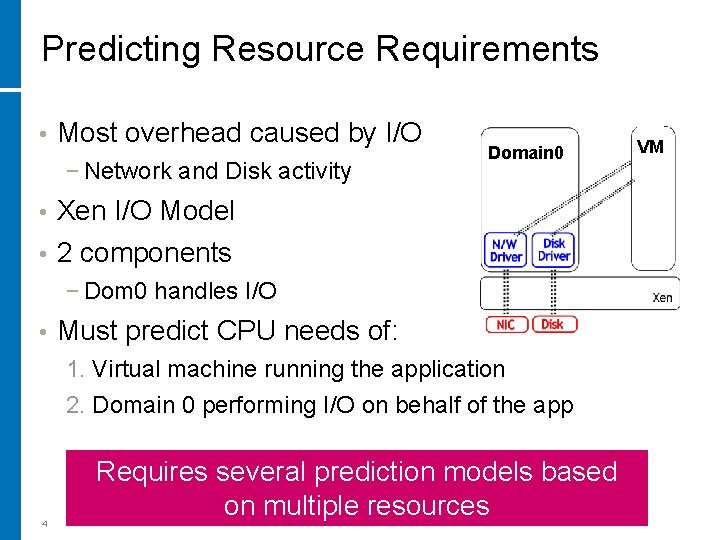

Predicting Resource Requirements
• Most overhead is caused by I/O
− Network and disk activity
• Xen I/O model: 2 components
− The VM running the application
− Domain 0 (Dom 0), which handles I/O
• Must predict the CPU needs of:
1. The virtual machine running the application
2. Domain 0 performing I/O on behalf of the app
• Requires several prediction models based on multiple resources

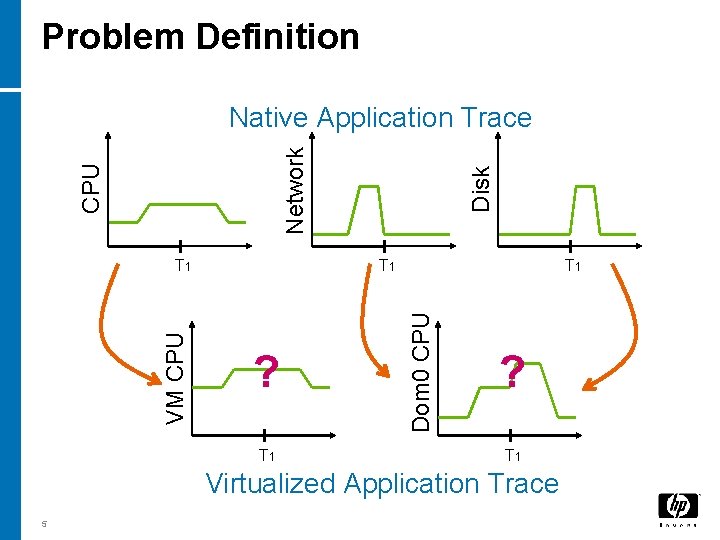

Problem Definition
• Given a native application trace (CPU, network, and disk usage per time interval), predict the virtualized application trace (VM CPU and Dom 0 CPU per time interval)

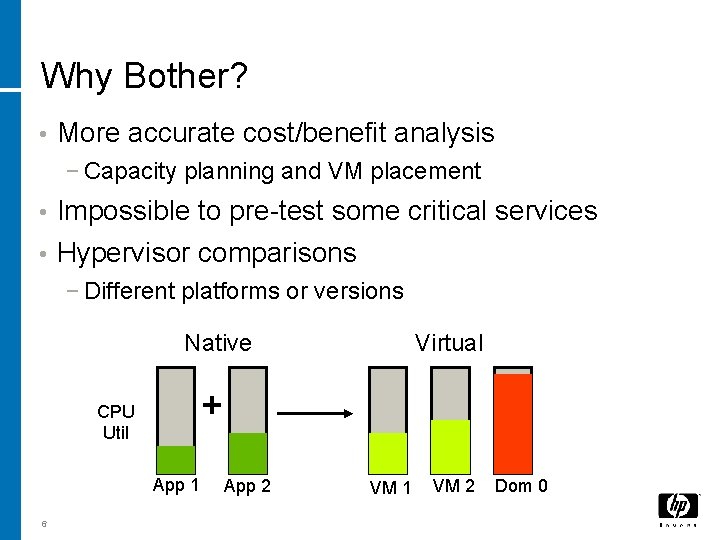

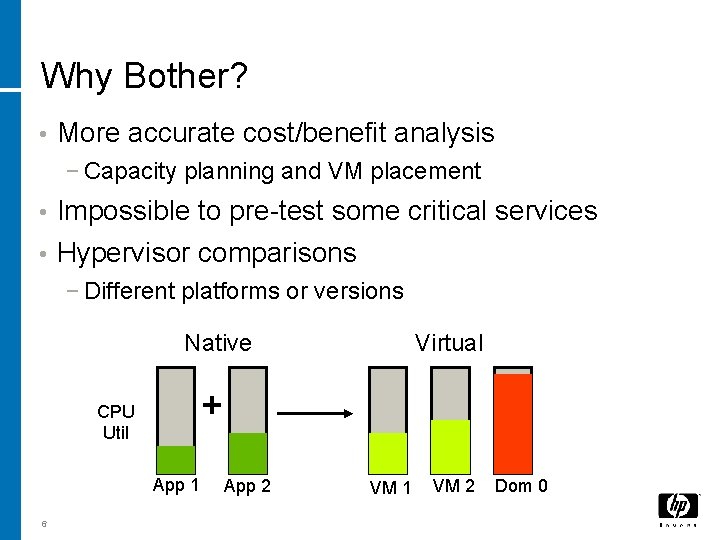

Why Bother?
• More accurate cost/benefit analysis
− Capacity planning and VM placement
− Impossible to pre-test some critical services
• Hypervisor comparisons
− Different platforms or versions
[Figure: CPU utilization of App 1 and App 2 running natively vs. virtualized as VM 1 and VM 2 plus Dom 0]

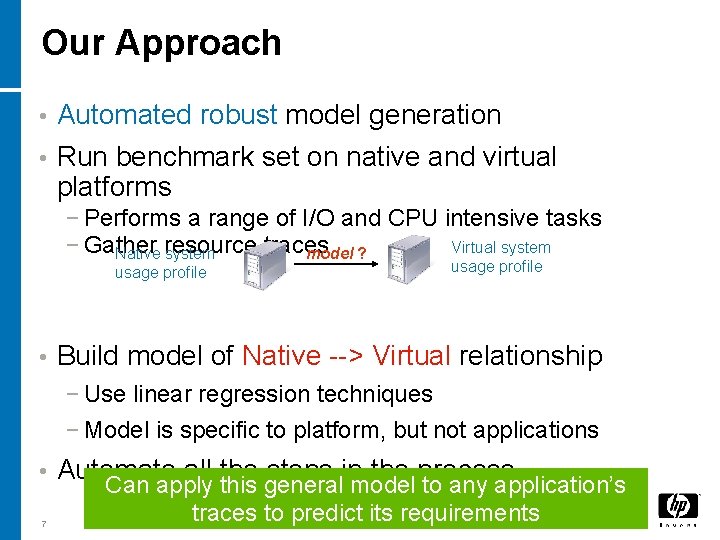

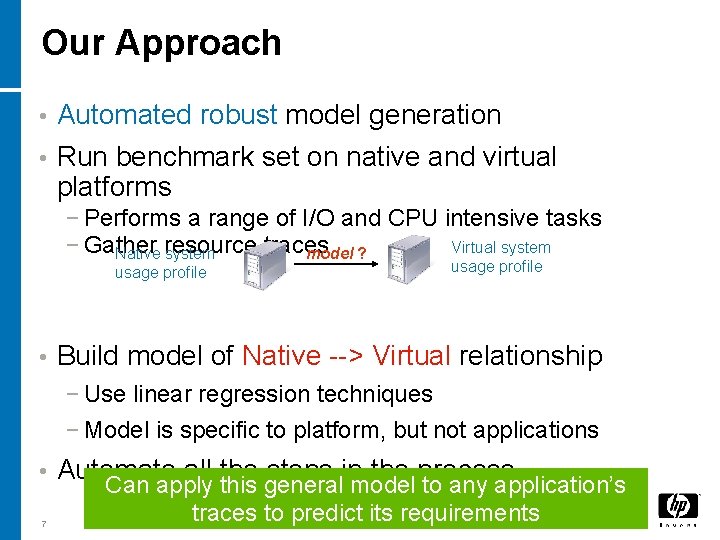

Our Approach
• Automated, robust model generation
• Run a benchmark set on native and virtual platforms
− Performs a range of I/O- and CPU-intensive tasks
− Gather traces
• Build a model of the Native → Virtual relationship
− Use linear regression techniques
− The model is specific to the platform, but not to applications
• Automate all the steps in the process
• Apply this general model to any application’s traces to predict its requirements
[Figure: native system resource usage profile → model → virtual system resource usage profile]
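The train-then-apply pipeline can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors’ implementation: the function names, the three metric columns, and all numbers are hypothetical, and plain least squares stands in for the robust stepwise regression described under “Building Robust Models”. One model is fitted for the VM and one for Dom 0, and their predictions are summed to estimate the application’s total CPU cost once virtualized.

```python
import numpy as np

def fit_linear_model(native: np.ndarray, virtual_cpu: np.ndarray) -> np.ndarray:
    """Fit virtual_cpu ~ c0 + c1*m1 + ... + ck*mk by ordinary least squares.

    native: (n_intervals, k) matrix of native resource metrics per interval.
    virtual_cpu: (n_intervals,) CPU measured in the virtual system.
    Returns the coefficient vector [c0, c1, ..., ck].
    """
    X = np.column_stack([np.ones(len(native)), native])  # prepend intercept column
    coeffs, *_ = np.linalg.lstsq(X, virtual_cpu, rcond=None)
    return coeffs

def predict(coeffs: np.ndarray, native: np.ndarray) -> np.ndarray:
    """Apply a fitted model to a native trace."""
    return coeffs[0] + native @ coeffs[1:]

# Hypothetical benchmark traces: columns = [CPU %, packets/sec, disk requests/sec].
rng = np.random.default_rng(0)
bench_native = rng.uniform([0, 0, 0], [100, 5000, 500], size=(60, 3))
# Synthetic "measured" virtual CPU, generated from known coefficients for illustration.
bench_vm = 2.0 + bench_native @ np.array([1.1, 0.002, 0.01])
bench_dom0 = 1.0 + bench_native @ np.array([0.0, 0.004, 0.02])

# 1. Train platform-specific models on the benchmark traces.
vm_coeffs = fit_linear_model(bench_native, bench_vm)
dom0_coeffs = fit_linear_model(bench_native, bench_dom0)

# 2. Apply them to an application's native trace (10 intervals).
app_native = rng.uniform([0, 0, 0], [60, 3000, 200], size=(10, 3))
total_cpu = predict(vm_coeffs, app_native) + predict(dom0_coeffs, app_native)
```

Because the model depends only on the platform, step 1 runs once per platform, and step 2 can then be repeated for any application trace.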

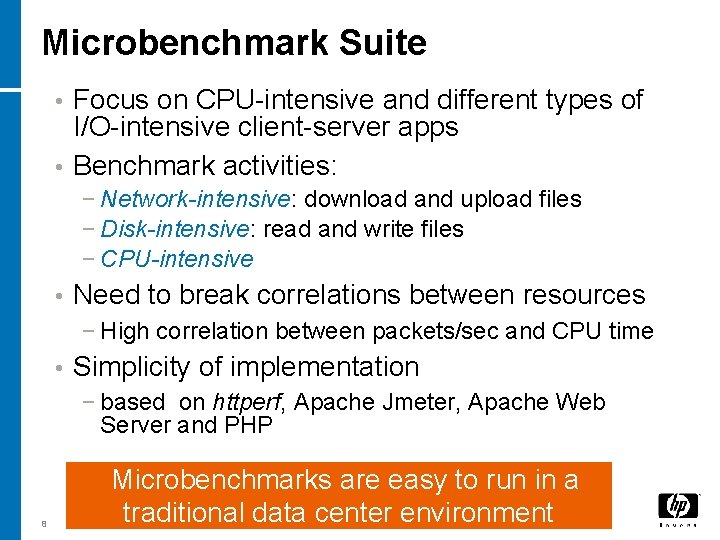

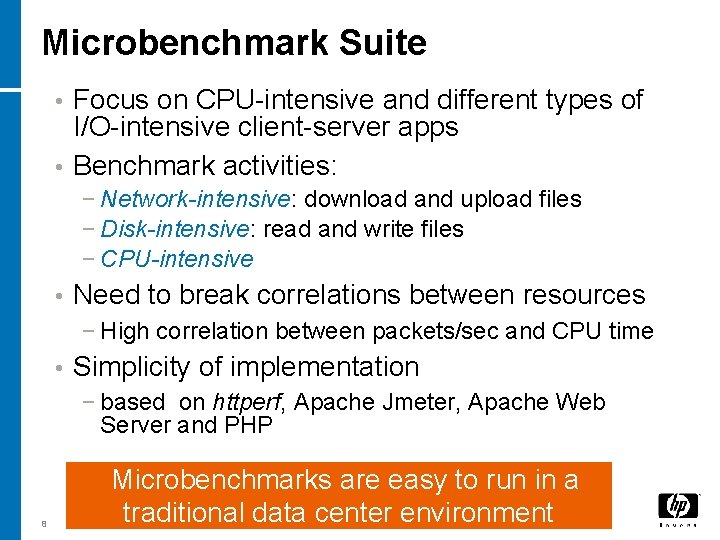

Microbenchmark Suite
• Focus on CPU-intensive and different types of I/O-intensive client-server apps
• Benchmark activities:
− Network-intensive: download and upload files
− Disk-intensive: read and write files
− CPU-intensive
• Need to break correlations between resources
− High correlation between packets/sec and CPU time
• Simplicity of implementation
− Based on httperf, Apache JMeter, the Apache web server, and PHP
• Microbenchmarks are easy to run in a traditional data center environment

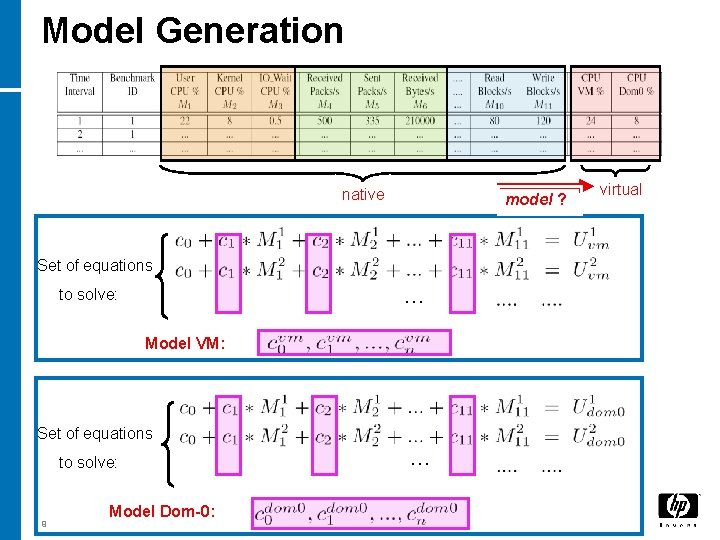

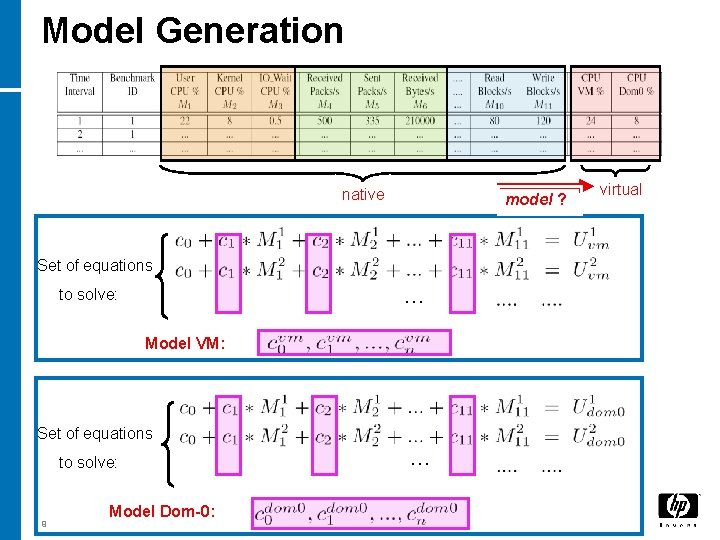

Model Generation
• Native resource usage profile → model → virtual resource usage profile
• Model VM: set of equations to solve
• Model Dom-0: set of equations to solve
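The equations on this slide did not survive extraction. A plausible form, assuming the linear native-to-virtual model from “Our Approach” with one equation per measurement interval j = 1, …, n and one coefficient per native metric M_i (k = 11 metrics before selection), is shown below. The symbols U, M, c_i, and d_i are labels chosen here, not necessarily the authors’ notation; the VM and Dom-0 models share the same native metrics but have separate coefficients.

```latex
\begin{aligned}
U_{\mathrm{VM}}^{j}   &= c_0 + c_1 M_1^{j} + c_2 M_2^{j} + \cdots + c_k M_k^{j}, \qquad j = 1, \dots, n \\
U_{\mathrm{Dom0}}^{j} &= d_0 + d_1 M_1^{j} + d_2 M_2^{j} + \cdots + d_k M_k^{j}, \qquad j = 1, \dots, n
\end{aligned}
```

With n much larger than k, each system is overdetermined and is solved for the coefficients by regression.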

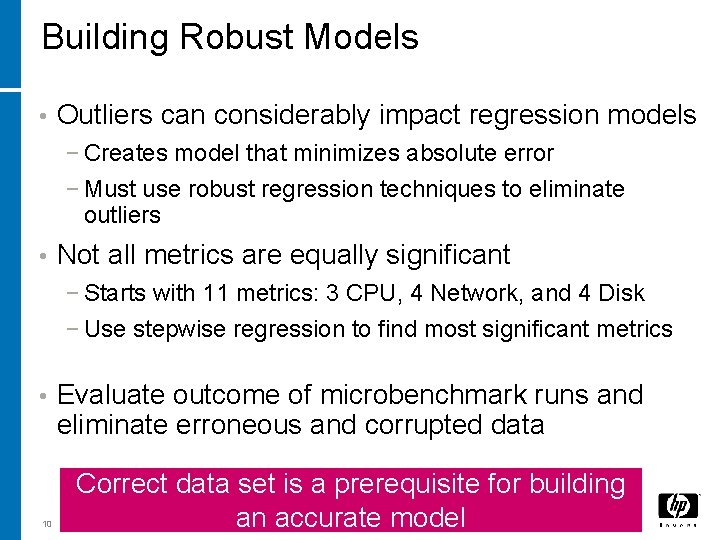

Building Robust Models
• Outliers can considerably impact regression models
− Standard regression creates a model that minimizes absolute error over all points, so a few large outliers can skew it
− Must use robust regression techniques to eliminate outliers
• Not all metrics are equally significant
− Start with 11 metrics: 3 CPU, 4 network, and 4 disk
− Use stepwise regression to find the most significant metrics
• Evaluate the outcome of microbenchmark runs and eliminate erroneous and corrupted data
• A correct data set is a prerequisite for building an accurate model
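Both techniques can be sketched with NumPy. The slides do not say which robust method or selection criterion was used, so this sketch swaps in two common choices: iteratively reweighted least squares (IRLS) with Huber weights, and greedy forward selection that stops when the relative drop in residual sum of squares falls below a threshold (classical stepwise regression uses F-tests instead). Function names, thresholds, and data are hypothetical.

```python
import numpy as np

def irls_huber(X, y, delta=1.345, max_iter=50, tol=1e-8):
    """Robust linear regression by iteratively reweighted least squares (IRLS).

    X must already contain an intercept column. Points with large residuals
    (relative to a robust scale estimate) get weight delta/u < 1, so outliers
    pull the fit far less than in ordinary least squares.
    """
    w = np.ones(len(y))
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        sw = np.sqrt(w)
        new_beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
        converged = np.max(np.abs(new_beta - beta)) < tol
        beta = new_beta
        if converged:
            break
        r = y - X @ beta
        # Robust residual scale: median absolute deviation, rescaled for normal noise.
        scale = np.median(np.abs(r - np.median(r))) / 0.6745
        if scale == 0:
            break  # the fit is exact for most points; nothing left to reweight
        u = np.abs(r) / scale
        w = delta / np.maximum(u, delta)  # Huber weights: 1 if u <= delta, else delta/u
    return beta

def forward_stepwise(M, y, names, min_gain=0.1):
    """Greedy forward selection: add the metric that most reduces the residual
    sum of squares (RSS); stop when the relative reduction falls below min_gain."""
    n = len(y)
    X = np.ones((n, 1))
    rss = float(np.sum((y - y.mean()) ** 2))  # RSS of the intercept-only model
    remaining, selected = list(range(M.shape[1])), []
    while remaining and rss > 0:
        trials = []
        for j in remaining:
            Xj = np.column_stack([X, M[:, j]])
            b, *_ = np.linalg.lstsq(Xj, y, rcond=None)
            trials.append((float(np.sum((y - Xj @ b) ** 2)), j))
        best_rss, j = min(trials)
        if (rss - best_rss) / rss < min_gain:
            break
        X = np.column_stack([X, M[:, j]])
        remaining.remove(j)
        selected.append(names[j])
        rss = best_rss
    return selected

# Usage on synthetic data: a clean line y = 1 + 2x with two corrupted intervals.
x = np.arange(20.0)
y = 1 + 2 * x
y[5] += 100
y[12] -= 80
X = np.column_stack([np.ones(20), x])
robust = irls_huber(X, y)  # close to [1, 2]; plain lstsq gives a slope near 1.02
```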

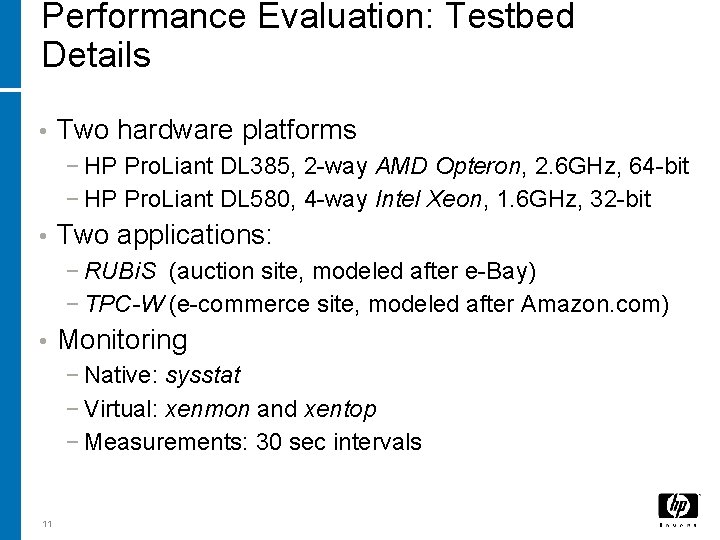

Performance Evaluation: Testbed Details
• Two hardware platforms
− HP ProLiant DL385, 2-way AMD Opteron, 2.6 GHz, 64-bit
− HP ProLiant DL580, 4-way Intel Xeon, 1.6 GHz, 32-bit
• Two applications:
− RUBiS (auction site, modeled after eBay)
− TPC-W (e-commerce site, modeled after Amazon.com)
• Monitoring
− Native: sysstat
− Virtual: xenmon and xentop
− Measurements: 30-second intervals

Questions
• Why this set of metrics?
• Why these benchmarks?
• Why this process of model creation?
• Model accuracy

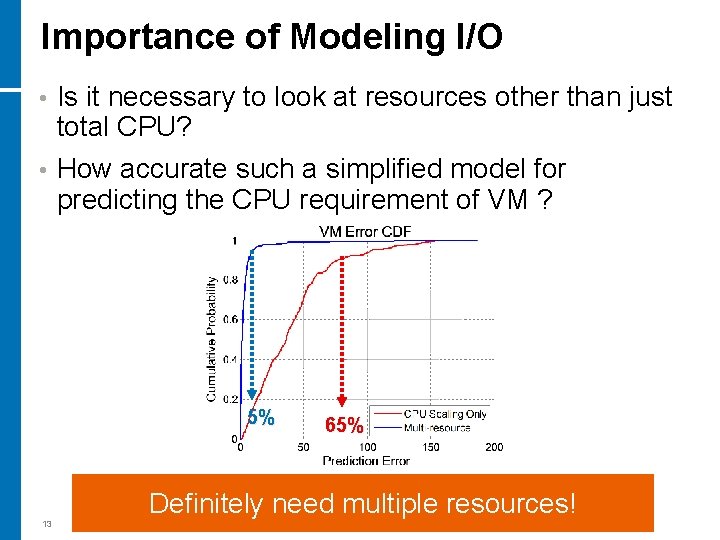

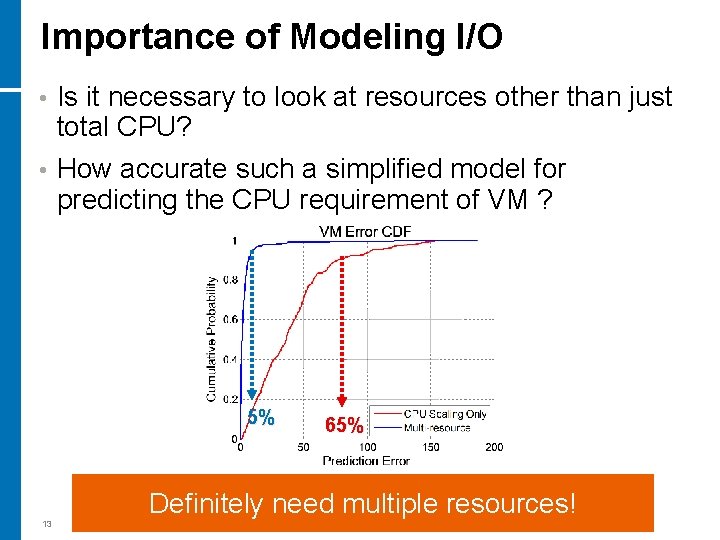

Importance of Modeling I/O
• Is it necessary to look at resources other than total CPU?
• How accurate is such a simplified model for predicting the CPU requirement of a VM?
[Figure annotations: 5% and 65%]
• Definitely need multiple resources!
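The effect can be reproduced on synthetic data. When virtual CPU depends on packet rate as well as on native CPU, a model that sees only native CPU leaves a large residual error, while a model that also sees the I/O metric fits well. The data and coefficients below are invented for illustration and say nothing about the measured errors on the slide.

```python
import numpy as np

def median_relative_error(X, y):
    """Fit y ~ X by least squares (with intercept) and return the median relative error."""
    A = np.column_stack([np.ones(len(y)), X])
    coeffs, *_ = np.linalg.lstsq(A, y, rcond=None)
    return float(np.median(np.abs(A @ coeffs - y) / y))

rng = np.random.default_rng(0)
native_cpu = rng.uniform(5, 50, 100)        # native CPU %, hypothetical
packets = rng.uniform(0, 2000, 100)         # packets/sec, independent of CPU
vm_cpu = 1.1 * native_cpu + 0.02 * packets  # synthetic virtual CPU with an I/O term

cpu_only_err = median_relative_error(native_cpu[:, None], vm_cpu)
multi_err = median_relative_error(np.column_stack([native_cpu, packets]), vm_cpu)
```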

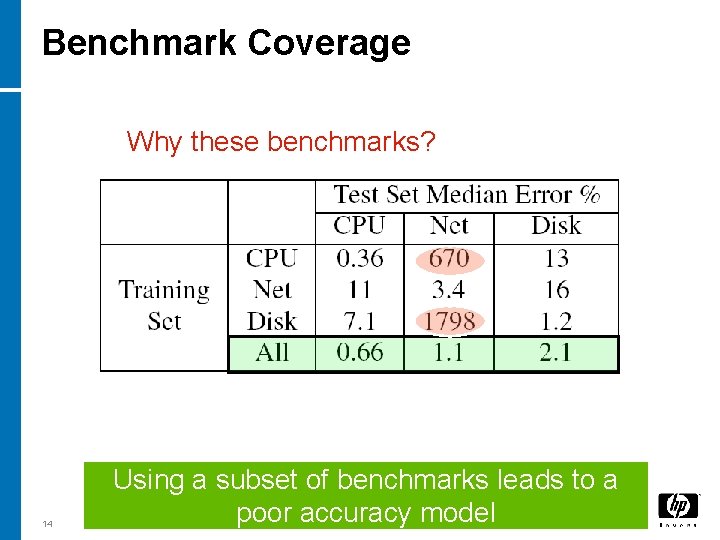

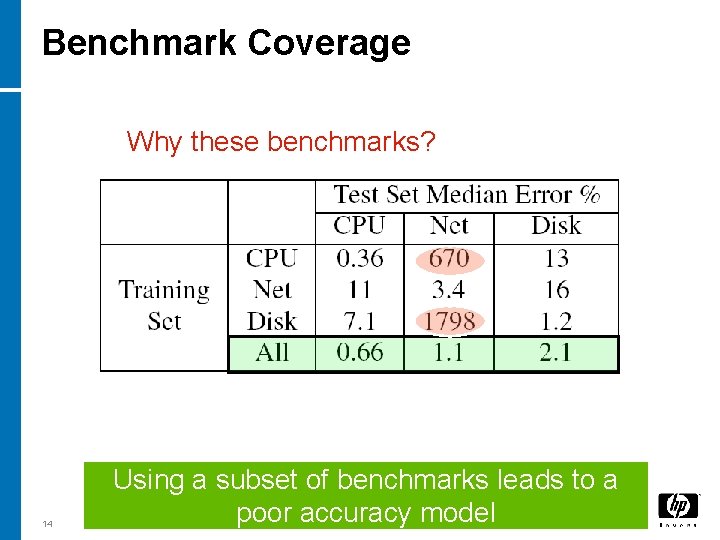

Benchmark Coverage
• Why these benchmarks?
• Using only a subset of the benchmarks leads to a model with poor accuracy

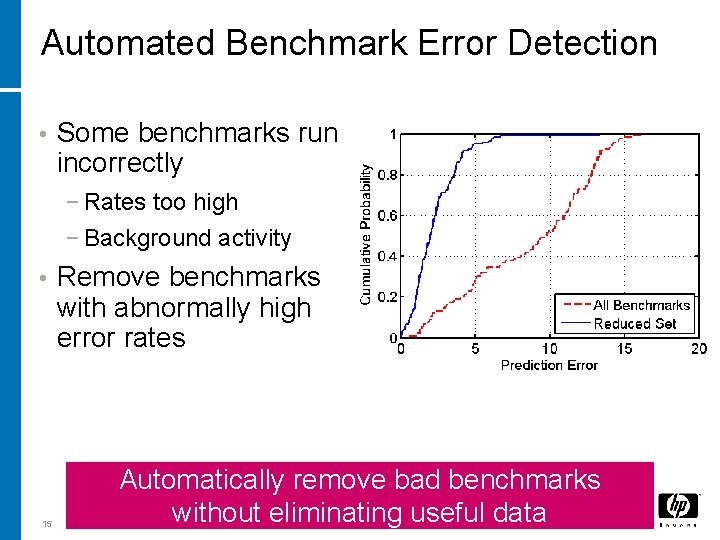

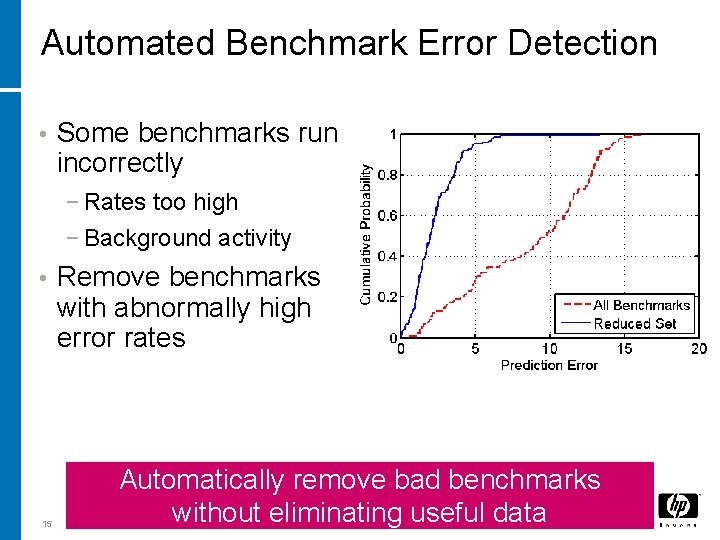

Automated Benchmark Error Detection
• Some benchmarks run incorrectly
− Rates too high
− Background activity
• Remove benchmarks with abnormally high error rates
• Automatically remove bad benchmarks without eliminating useful data
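The slides do not give the detection rule. One hypothetical rule: fit a first-pass model, compute each benchmark’s mean relative error, and flag runs whose error exceeds the median plus k times the median absolute deviation (MAD). Median and MAD are themselves robust, so a single broken run cannot inflate the cutoff and hide itself. Benchmark names and error values are invented.

```python
from statistics import median

def flag_bad_benchmarks(errors: dict[str, float], k: float = 3.0) -> set[str]:
    """Return the benchmarks whose model error is abnormally high.

    A run is flagged when its error exceeds median + k * MAD, where MAD is the
    median absolute deviation of all runs' errors.
    Note: if most runs have identical errors, MAD is 0 and every run above the
    median gets flagged, so real use would need a floor on the cutoff.
    """
    values = list(errors.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    cutoff = med + k * mad
    return {name for name, err in errors.items() if err > cutoff}

# Hypothetical per-benchmark mean relative errors from a first-pass model.
errors = {
    "net_download_1": 0.04,
    "net_upload_1": 0.05,
    "disk_read_1": 0.03,
    "disk_write_1": 0.06,
    "cpu_1": 0.04,
    "net_download_2": 0.45,  # e.g. a run disturbed by background activity
}
bad = flag_bad_benchmarks(errors)
```

After removing the flagged runs, the model would be refitted on the remaining data.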

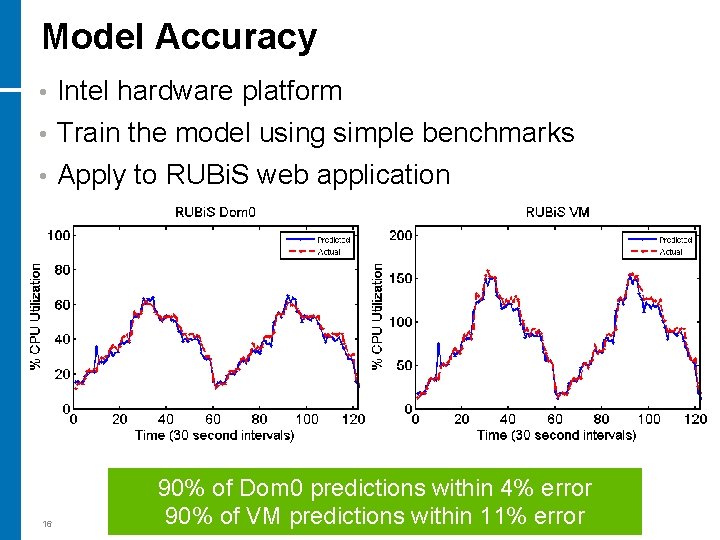

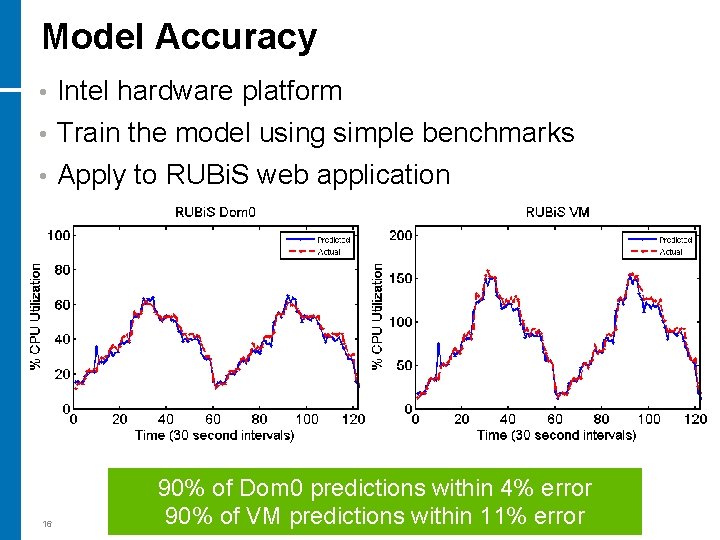

Model Accuracy
• Intel hardware platform
• Train the model using simple benchmarks
• Apply to the RUBiS web application
• 90% of Dom 0 predictions within 4% error
• 90% of VM predictions within 11% error
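A statement such as “90% of predictions within 4% error” is the 90th percentile of the per-interval relative error. A minimal sketch using the nearest-rank percentile (the function name and the sample values are hypothetical):

```python
import math

def error_at_percentile(predicted: list[float], actual: list[float], pct: float = 90) -> float:
    """Relative error E such that pct% of predictions have error <= E (nearest rank)."""
    errs = sorted(abs(p - a) / a for p, a in zip(predicted, actual))
    rank = math.ceil(pct * len(errs) / 100)  # 1-based nearest rank
    return errs[max(rank, 1) - 1]

# Hypothetical Dom 0 CPU measurements and model predictions over 10 intervals.
actual = [10.0] * 10
predicted = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 9.7, 10.4, 10.1, 11.5]
e90 = error_at_percentile(predicted, actual)  # about 0.04: 9 of 10 within 4%
```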

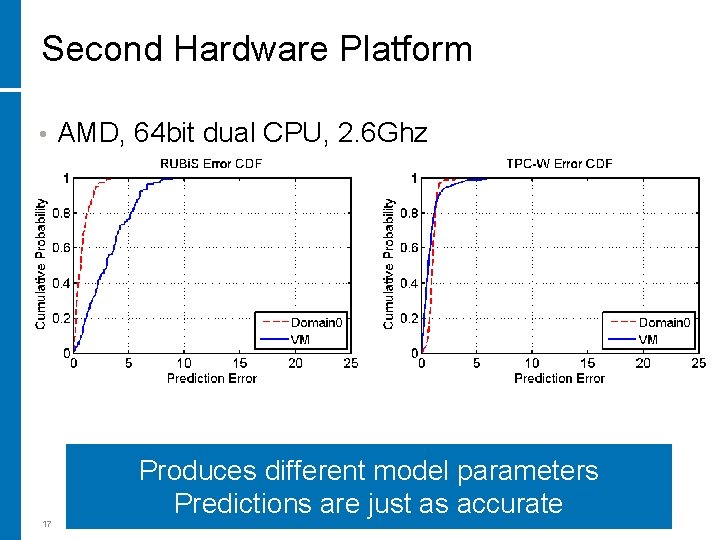

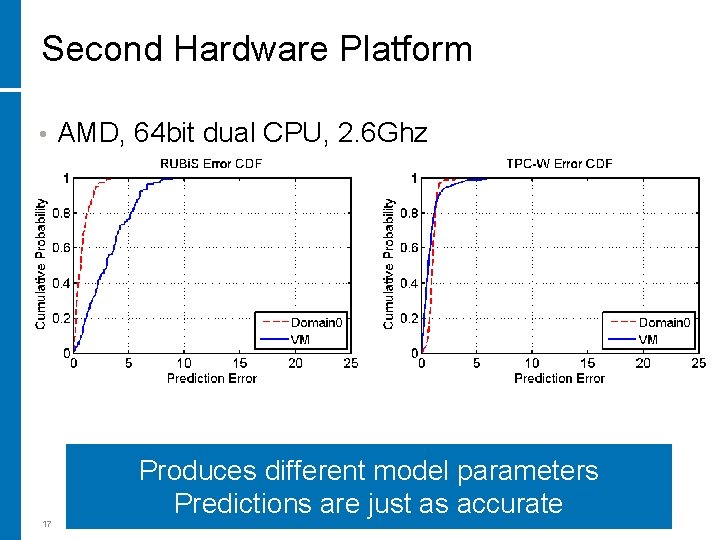

Second Hardware Platform
• AMD, 64-bit dual CPU, 2.6 GHz
• Produces different model parameters
• Predictions are just as accurate

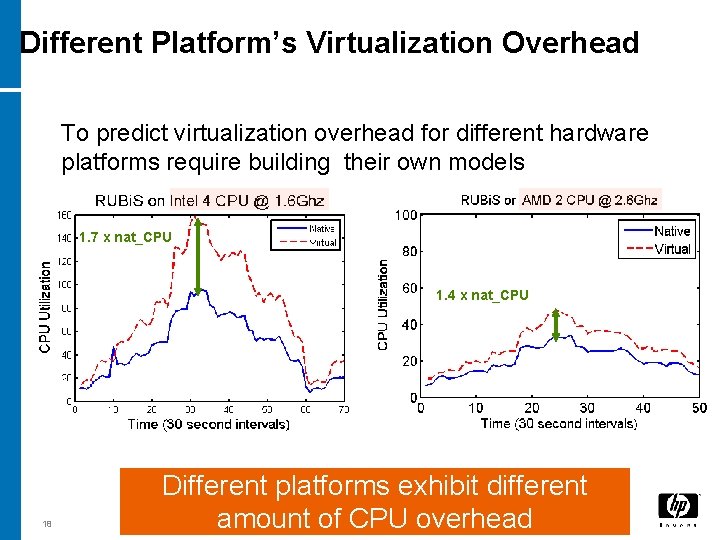

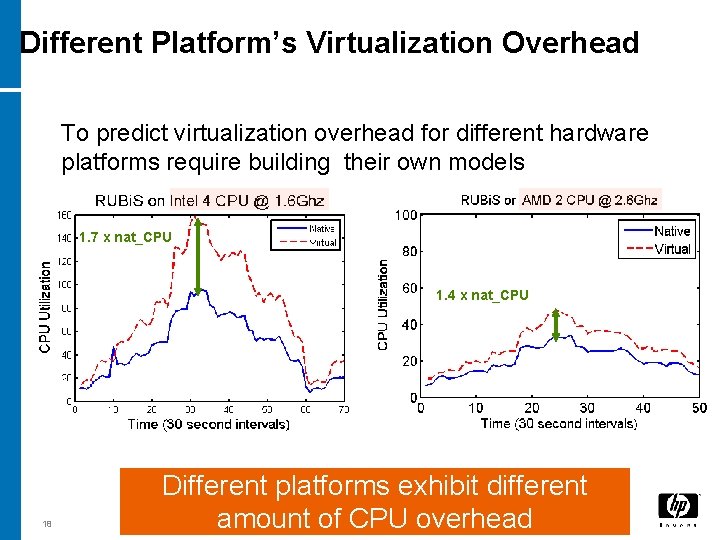

Different Platforms’ Virtualization Overhead
• Predicting virtualization overhead on different hardware platforms requires building a separate model for each platform
[Figure annotations: 1.7 × nat_CPU and 1.4 × nat_CPU]
• Different platforms exhibit different amounts of CPU overhead
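Read as per-platform multipliers on native CPU usage, the two annotations translate directly into capacity estimates. As a worked example under that reading, assume a hypothetical application that uses 20% CPU natively; “Platform A” and “Platform B” are placeholder labels, since the extracted slide does not say which multiplier belongs to which machine.

```latex
\begin{aligned}
\text{Platform A:}\quad & 1.7 \times 20\% = 34\% \\
\text{Platform B:}\quad & 1.4 \times 20\% = 28\%
\end{aligned}
```

The same workload would then need noticeably more CPU on one platform than on the other, which is why each platform gets its own model.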

Summary
• The proposed approach builds a model for each hardware and virtualization platform.
• It enables comparison of application resource requirements on different hardware platforms.
• Interesting additional application: it helps to assess and compare the “performance” overhead of different virtualization software releases.

Future Work
• Refine the set of microbenchmarks and related measurements (what is a practical minimal set?)
• Repeat the experiments for the VMware platform
• Linear models: are they enough?
− Create multiple models for resources whose overheads grow at different rates
• Evaluate virtual device capacity
• Define composition rules for estimating the resource requirements of collocated virtualized applications

Questions?