Planning Jeremy Wyatt AI Principles Lecture on Planning

- Slides: 18


Plan

- Situation calculus
- The frame problem
- The STRIPS representation for planning
- State space planning:
  - Forward chaining search (progression planning)
  - Backward chaining search (regression planning)
- Reading: Russell and Norvig pp. 375-387

Situation Calculus

- The situation calculus is a general system for reasoning automatically about the effects of actions, and for searching for plans that achieve goals.
- However, it has some flaws that took a long time to iron out.
- The situation calculus is composed of:
  - Situations: S0 is the initial situation; Result(a, S0) is the situation that results from applying action a in situation S0.
  - Fluents: functions and predicates that may be true of some situation, e.g. ¬Holding(G1, S0) says that the agent is not holding G1 in situation S0.

Situation Calculus

- Actions are described in the situation calculus using two kinds of axioms:
  - Possibility Axioms, which say when you can apply an action
  - Effect Axioms, which say what happens when you apply it
- The essential problem with the situation calculus, however, is that:
  - Effect Axioms only say what changes, not what stays the same, yet reasoning in the situation calculus requires an explicit representation of everything that doesn’t change. These non-changes are handled by Frame Axioms, hence the name Representational Frame Problem.
  - Reasoning about all the effects and non-effects of a sequence of actions is thus very inefficient. This is known as the Inferential Frame Problem.
- Eventually solutions to both Frame Problems were found. In the meantime, researchers began to look for representations that support more efficient planning.
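To make the three kinds of axiom concrete, here is an illustrative sketch in the usual textbook notation. The actions Grab and Go and the predicates Portable and Poss are assumptions chosen for illustration; only Holding and Result appear in the slides.

```latex
\begin{align*}
\text{Possibility:}\quad & \mathit{Portable}(g) \Rightarrow \mathit{Poss}(\mathit{Grab}(g), s) \\
\text{Effect:}\quad & \mathit{Poss}(\mathit{Grab}(g), s) \Rightarrow \mathit{Holding}(g, \mathit{Result}(\mathit{Grab}(g), s)) \\
\text{Frame:}\quad & \mathit{Poss}(\mathit{Go}(x, y), s) \land \mathit{Holding}(g, s) \Rightarrow \mathit{Holding}(g, \mathit{Result}(\mathit{Go}(x, y), s))
\end{align*}
```

The frame axiom states only that moving does not change what is held. A similar axiom is needed for every pair of an action and a fluent it leaves untouched, so with A actions and F fluents the number of frame axioms grows roughly as A × F.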

The STRIPS representation

- The grandad of planning representations
- Avoids the difficulties of more general representations (e.g. situation calculus) for reasoning about action effects and change
- Devised for, and used in, the Shakey project
- The key contribution of its representation of action effects is the assumption that anything not said to change as the result of an action doesn’t change
- It is this assumption (sometimes called the STRIPS assumption) that avoids the representational frame problem

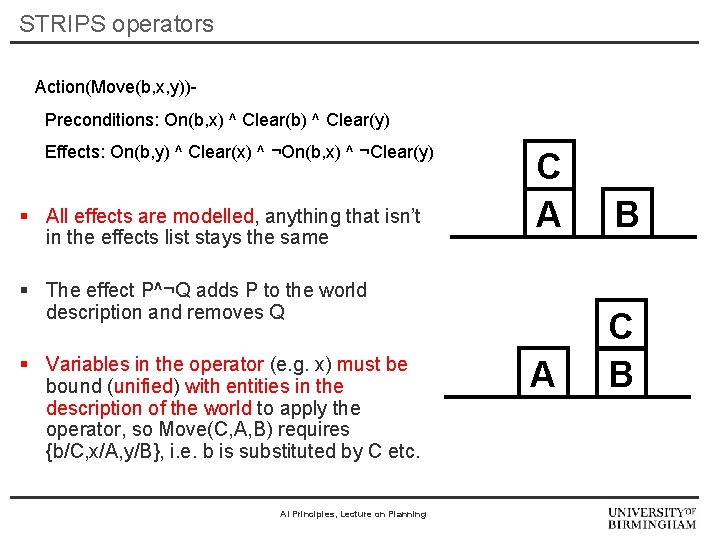

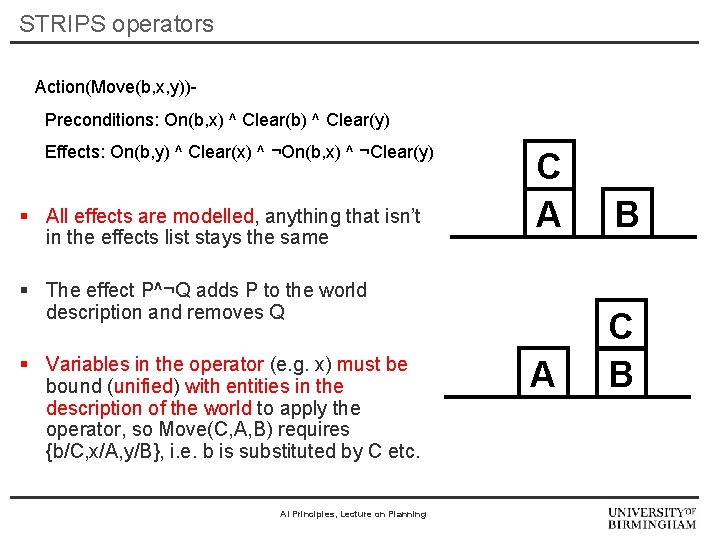

STRIPS operators

- Action(Move(b, x, y))
  - Preconditions: On(b, x) ^ Clear(b) ^ Clear(y)
  - Effects: On(b, y) ^ Clear(x) ^ ¬On(b, x) ^ ¬Clear(y)
- All effects are modelled; anything that isn’t in the effects list stays the same
- The effect P ^ ¬Q adds P to the world description and removes Q
- Variables in the operator (e.g. x) must be bound (unified) with entities in the description of the world to apply the operator, so Move(C, A, B) requires {b/C, x/A, y/B}, i.e. b is substituted by C, and so on
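One way to picture an operator schema and its binding is the following minimal Python sketch. The `Operator` class, the `substitute` and `ground` helpers, the tuple encoding of literals, and the split of effects into an add list and a delete list are all illustrative assumptions, not part of the lecture.

```python
from dataclasses import dataclass

# Assumption of this sketch: a literal is a tuple such as ("On", "b", "x"),
# and the lowercase names b, x, y are the operator's variables.

@dataclass(frozen=True)
class Operator:
    name: tuple             # e.g. ("Move", "b", "x", "y")
    preconditions: frozenset
    add_list: frozenset     # positive effects (P in P ^ ¬Q)
    delete_list: frozenset  # negated effects (Q in P ^ ¬Q)

def substitute(literal, binding):
    """Replace each variable in a literal by the entity it is bound to."""
    return tuple(binding.get(term, term) for term in literal)

def ground(op, binding):
    """Instantiate an operator schema with a binding such as {b/C, x/A, y/B}."""
    def sub(literals):
        return frozenset(substitute(lit, binding) for lit in literals)
    return Operator(substitute(op.name, binding), sub(op.preconditions),
                    sub(op.add_list), sub(op.delete_list))

MOVE = Operator(
    name=("Move", "b", "x", "y"),
    preconditions=frozenset({("On", "b", "x"), ("Clear", "b"), ("Clear", "y")}),
    add_list=frozenset({("On", "b", "y"), ("Clear", "x")}),
    delete_list=frozenset({("On", "b", "x"), ("Clear", "y")}),
)

# Move(C, A, B) is the schema under the binding {b/C, x/A, y/B}.
move_c_a_b = ground(MOVE, {"b": "C", "x": "A", "y": "B"})
```

Keeping the negated effects in a separate delete list is a common encoding choice, since applying the operator then reduces to set difference and set union.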

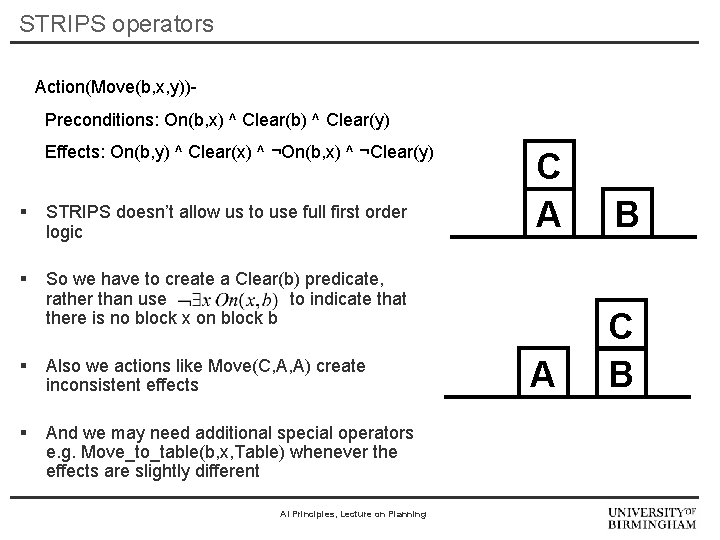

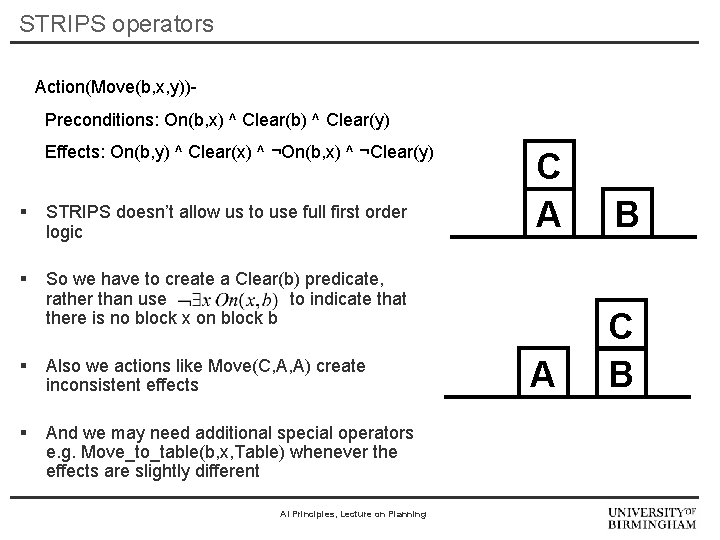

STRIPS operators

- Action(Move(b, x, y))
  - Preconditions: On(b, x) ^ Clear(b) ^ Clear(y)
  - Effects: On(b, y) ^ Clear(x) ^ ¬On(b, x) ^ ¬Clear(y)
- STRIPS doesn’t allow us to use full first order logic
- So we have to create a Clear(b) predicate, rather than use ¬∃x On(x, b) to indicate that there is no block x on block b
- Also, actions like Move(C, A, A) create inconsistent effects
- And we may need additional special operators, e.g. Move_to_table(b, x, Table), whenever the effects are slightly different

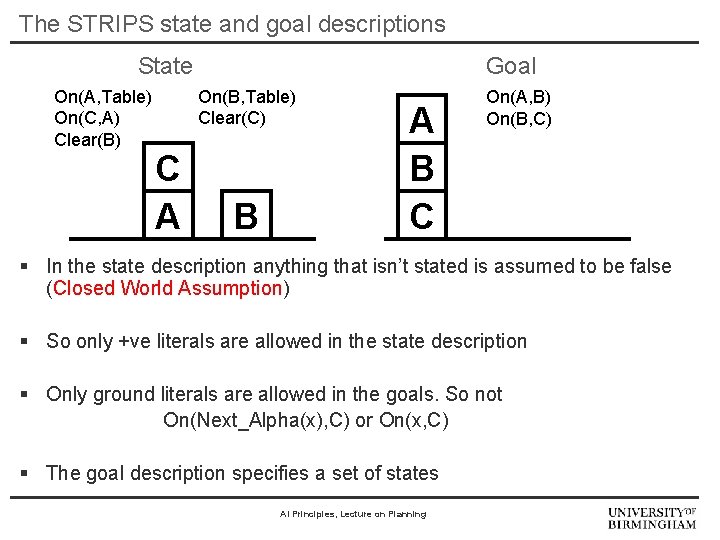

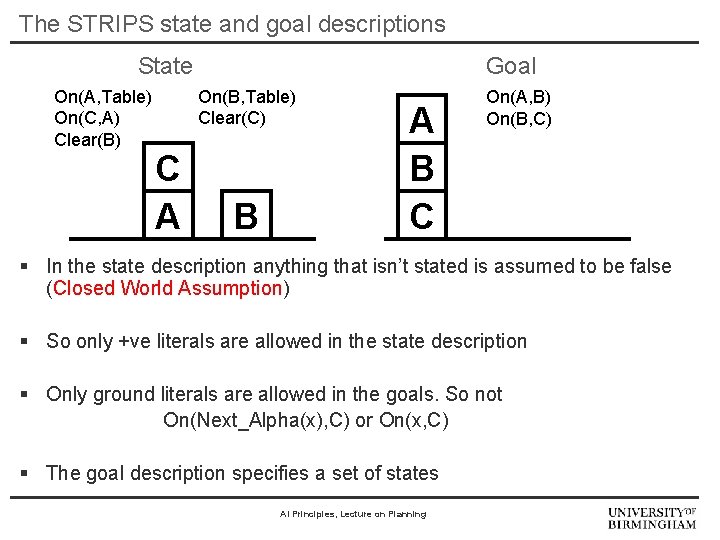

The STRIPS state and goal descriptions

- State: On(A, Table), On(C, A), Clear(B), On(B, Table), Clear(C)
- Goal: On(A, B), On(B, C)
- In the state description anything that isn’t stated is assumed to be false (Closed World Assumption)
- So only positive literals are allowed in the state description
- Only ground literals are allowed in the goals, so not On(Next_Alpha(x), C) or On(x, C)
- The goal description specifies a set of states
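The closed world assumption and the idea that a goal picks out a set of states can be sketched with plain Python sets. The tuple encoding of literals and the helper names `holds` and `satisfies` are assumptions made for illustration.

```python
# State and goal from the slide, encoded as sets of positive ground literals.
state = frozenset({
    ("On", "A", "Table"), ("On", "C", "A"), ("On", "B", "Table"),
    ("Clear", "B"), ("Clear", "C"),
})
goal = frozenset({("On", "A", "B"), ("On", "B", "C")})

def holds(literal, state):
    """Closed world assumption: a literal is true only if it is listed."""
    return literal in state

def satisfies(state, goal):
    """A state satisfies the goal if every goal literal appears in it."""
    return goal <= state

# Clear(A) is not listed, so it is taken to be false.
clear_a = holds(("Clear", "A"), state)

# Any state containing both goal literals is a goal state, whatever else it lists.
tower = frozenset({("On", "A", "B"), ("On", "B", "C"),
                   ("On", "C", "Table"), ("Clear", "A")})
```

Because the goal test is a subset check, the goal says nothing about literals it does not mention, which is why it describes a set of states rather than a single one.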

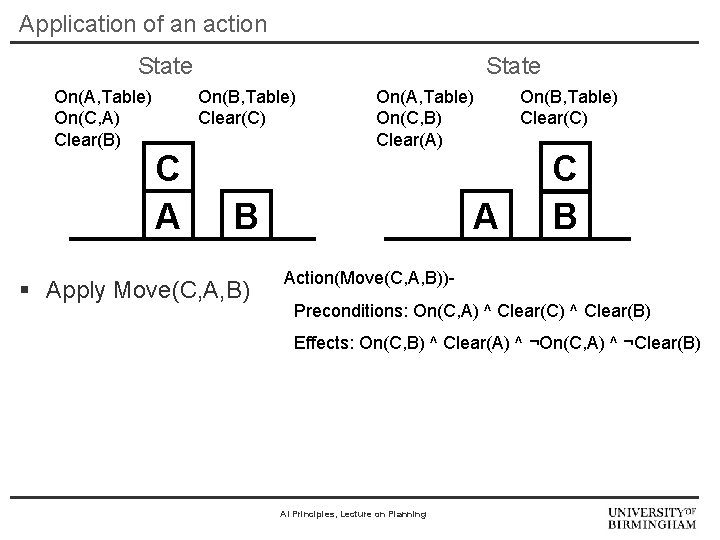

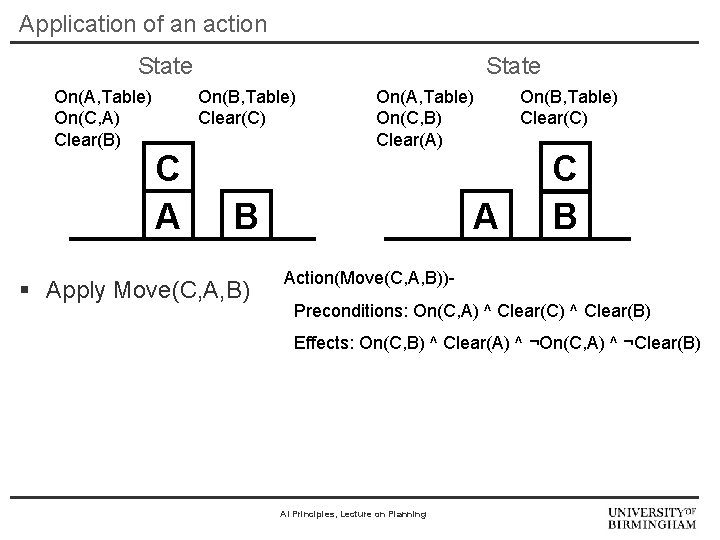

Application of an action

- State before: On(A, Table), On(C, A), Clear(B), On(B, Table), Clear(C)
- Apply Move(C, A, B), obtained from Action(Move(b, x, y)) by binding b, x and y in turn: Move(C, x, y), then Move(C, A, y), then Move(C, A, B)
  - Preconditions: On(C, A) ^ Clear(C) ^ Clear(B)
  - Effects: On(C, B) ^ Clear(A) ^ ¬On(C, A) ^ ¬Clear(B)
- State after: On(A, Table), On(C, B), Clear(A), On(B, Table), Clear(C)
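The step above can be sketched as set operations: check the preconditions, remove the negated effects, then add the positive effects. The function name `apply_action` and the add list and delete list encoding are assumptions of this sketch.

```python
def apply_action(state, preconditions, add_list, delete_list):
    """Return the successor state, or raise if the action is not applicable."""
    if not preconditions <= state:
        raise ValueError("preconditions do not hold in this state")
    # STRIPS assumption: every literal not deleted simply carries over.
    return (state - delete_list) | add_list

before = frozenset({
    ("On", "A", "Table"), ("On", "C", "A"), ("On", "B", "Table"),
    ("Clear", "B"), ("Clear", "C"),
})

# Ground instance Move(C, A, B) of the lecture's Move operator.
after = apply_action(
    before,
    preconditions=frozenset({("On", "C", "A"), ("Clear", "C"), ("Clear", "B")}),
    add_list=frozenset({("On", "C", "B"), ("Clear", "A")}),
    delete_list=frozenset({("On", "C", "A"), ("Clear", "B")}),
)
```

Here `after` contains On(A, Table), On(B, Table), On(C, B), Clear(A) and Clear(C), matching the state after the move on the slide.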

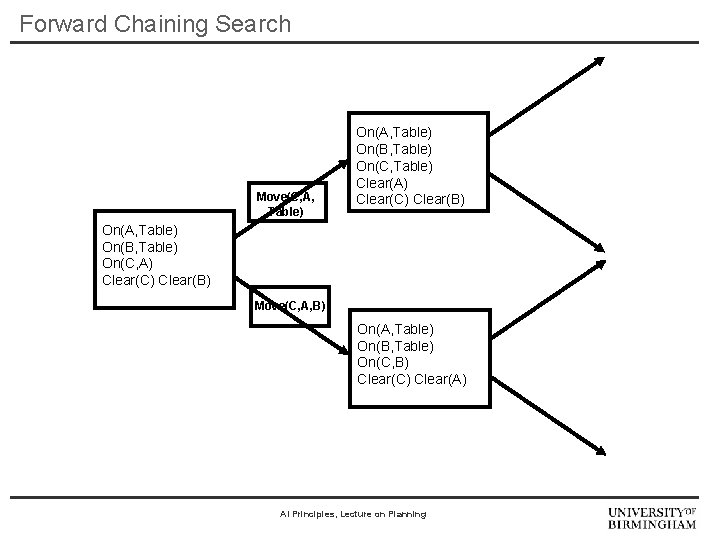

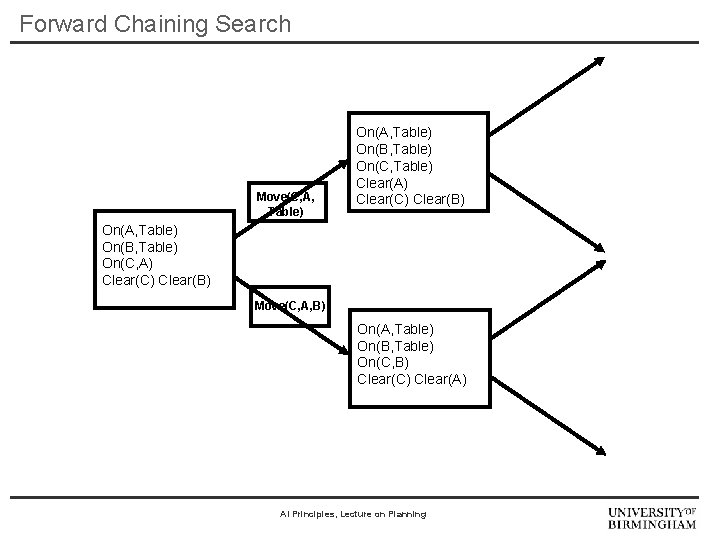

Forward Chaining Search

- Initial state: On(A, Table), On(B, Table), On(C, A), Clear(C), Clear(B)
  - Move(C, A, Table) leads to: On(A, Table), On(B, Table), On(C, Table), Clear(A), Clear(C), Clear(B)
  - Move(C, A, B) leads to: On(A, Table), On(B, Table), On(C, B), Clear(C), Clear(A)

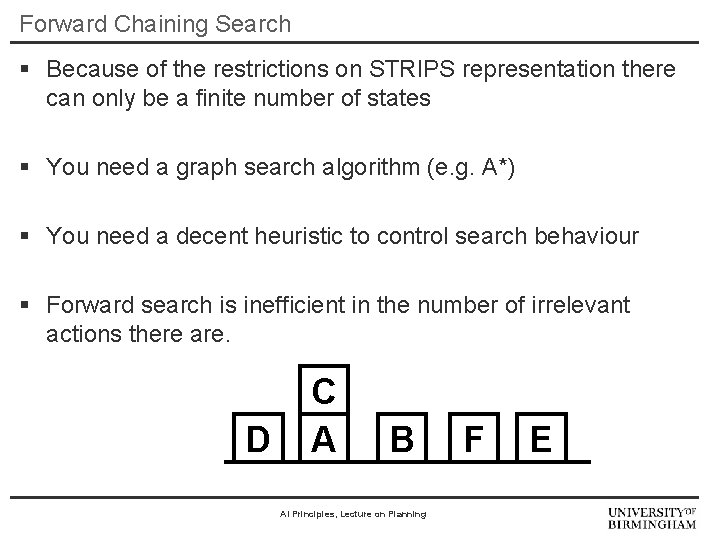

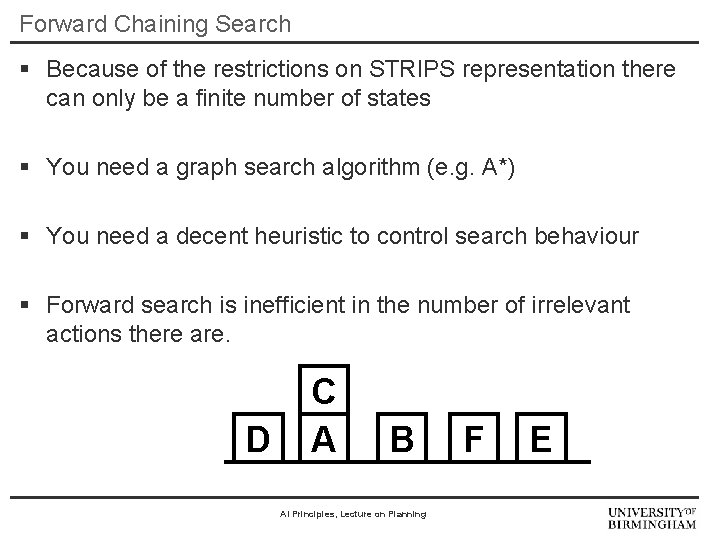

Forward Chaining Search

- Because of the restrictions on the STRIPS representation there can only be a finite number of states
- You need a graph search algorithm (e.g. A*)
- You need a decent heuristic to control search behaviour
- Forward search is inefficient because it considers a large number of irrelevant actions
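As a rough illustration of progression planning, the sketch below runs a graph search over the three-block problem used in these slides. It uses plain breadth-first search instead of A* with a heuristic, and it assumes a separate MoveToTable operator and no Clear(Table) literal; both are simplifications made for this sketch, as are all function names.

```python
from collections import deque
from itertools import permutations

BLOCKS = ("A", "B", "C")

def ground_actions():
    """List (name, preconditions, add_list, delete_list) for every ground action."""
    actions = []
    for b, x in permutations(BLOCKS, 2):
        # MoveToTable(b, x): take block b off block x and put it on the table.
        actions.append((("MoveToTable", b, x),
                        frozenset({("On", b, x), ("Clear", b)}),
                        frozenset({("On", b, "Table"), ("Clear", x)}),
                        frozenset({("On", b, x)})))
    for b, y in permutations(BLOCKS, 2):
        for x in BLOCKS + ("Table",):
            if x in (b, y):
                continue  # rules out inconsistent instances such as Move(C, A, A)
            add = {("On", b, y)}
            if x != "Table":
                add.add(("Clear", x))  # the table is never recorded as clear
            actions.append((("Move", b, x, y),
                            frozenset({("On", b, x), ("Clear", b), ("Clear", y)}),
                            frozenset(add),
                            frozenset({("On", b, x), ("Clear", y)})))
    return actions

def forward_search(initial, goal):
    """Breadth-first graph search from the initial state towards the goal."""
    actions = ground_actions()
    frontier = deque([(initial, [])])
    visited = {initial}
    while frontier:
        state, plan = frontier.popleft()
        if goal <= state:
            return plan
        for name, pre, add, delete in actions:
            if pre <= state:  # applicable, though possibly irrelevant to the goal
                successor = (state - delete) | add
                if successor not in visited:
                    visited.add(successor)
                    frontier.append((successor, plan + [name]))
    return None

INITIAL = frozenset({("On", "A", "Table"), ("On", "B", "Table"), ("On", "C", "A"),
                     ("Clear", "B"), ("Clear", "C")})
GOAL = frozenset({("On", "A", "B"), ("On", "B", "C")})

plan = forward_search(INITIAL, GOAL)
# plan is MoveToTable(C, A), then Move(B, Table, C), then Move(A, Table, B)
```

The `visited` set is what makes this a graph search: since the number of states is finite, the search terminates even when no plan exists. Every applicable action is expanded whether or not it helps, which is the inefficiency noted in the last bullet.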

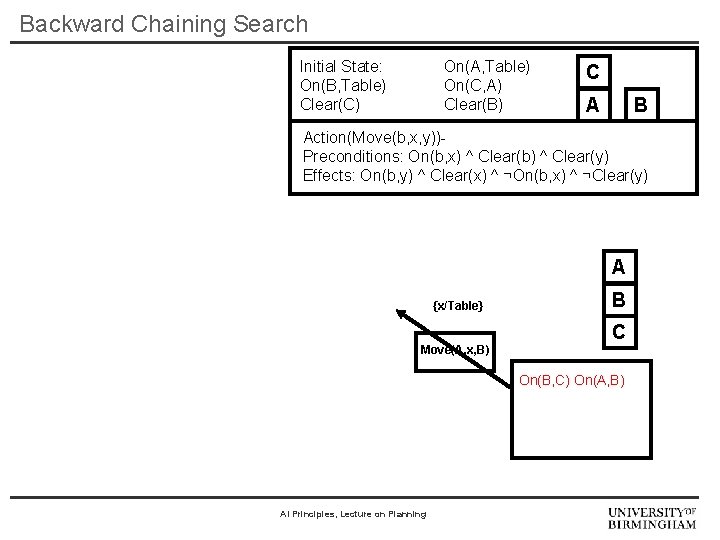

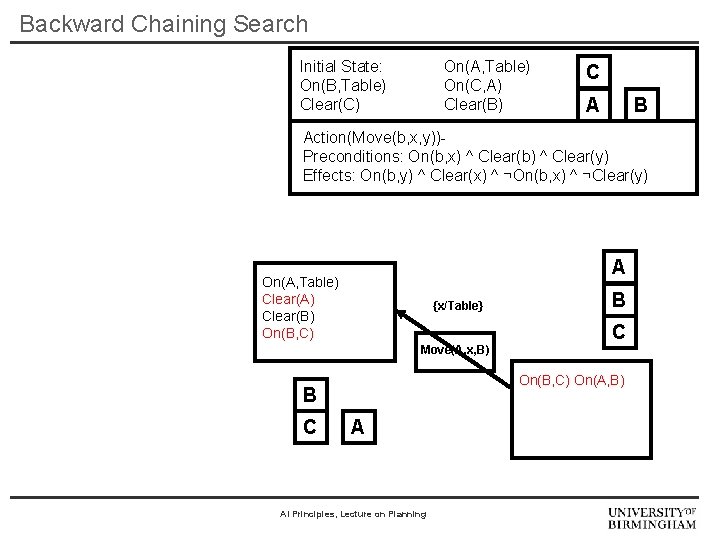

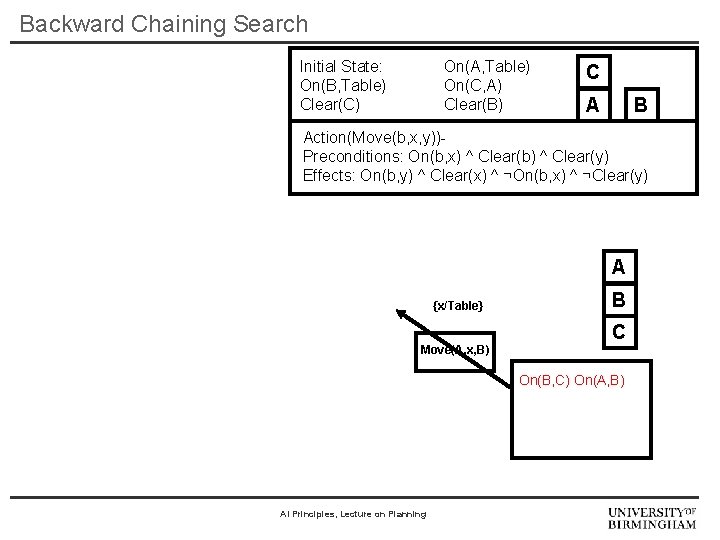

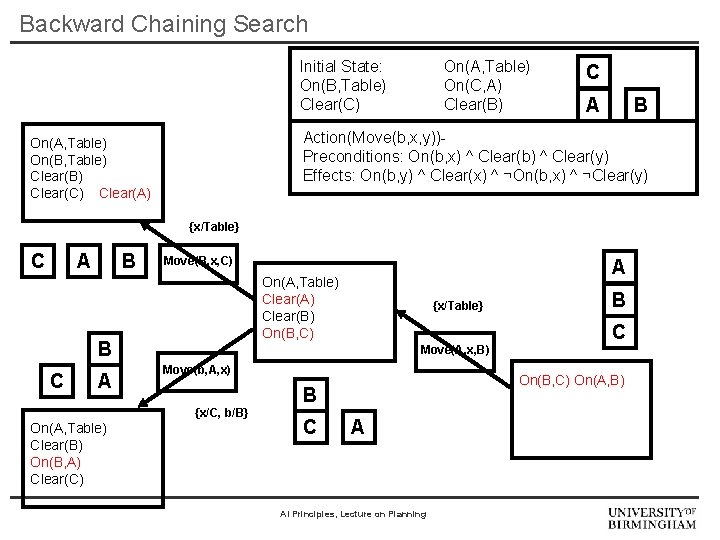

Backward Chaining Search

- Initial State: On(B, Table), Clear(C), On(A, Table), On(C, A), Clear(B)
- Action(Move(b, x, y))
  - Preconditions: On(b, x) ^ Clear(b) ^ Clear(y)
  - Effects: On(b, y) ^ Clear(x) ^ ¬On(b, x) ^ ¬Clear(y)
- Goal: On(B, C), On(A, B)
- Regress the goal through Move(A, x, B) with {x/Table}

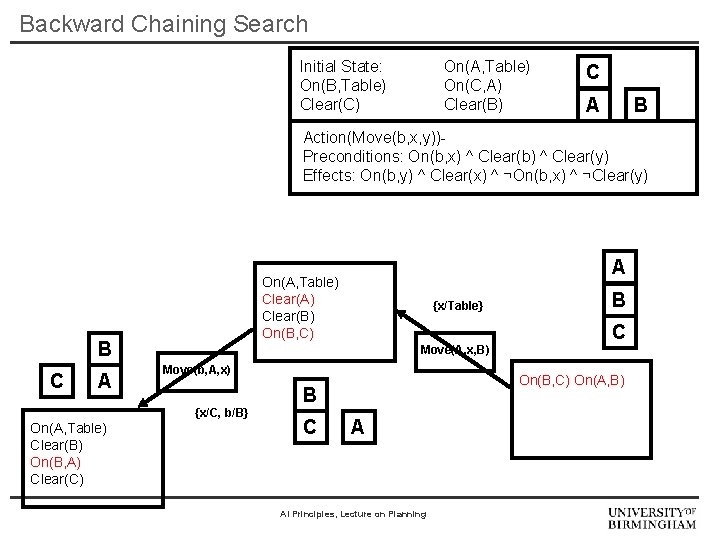

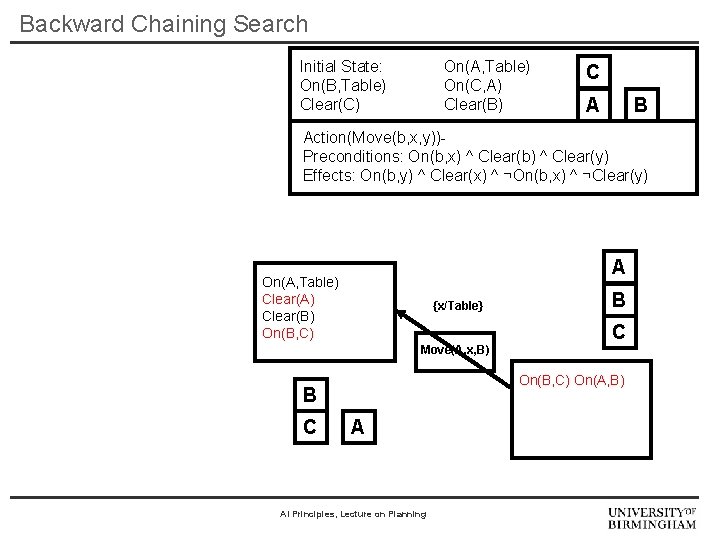

Backward Chaining Search

- Goal: On(B, C), On(A, B)
- Regressing the goal through Move(A, x, B) with {x/Table} gives the sub-goals: On(A, Table), Clear(A), Clear(B), On(B, C)

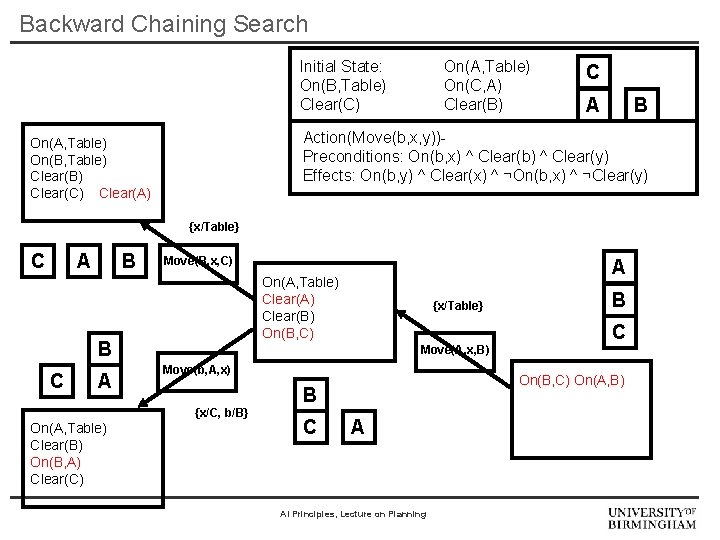

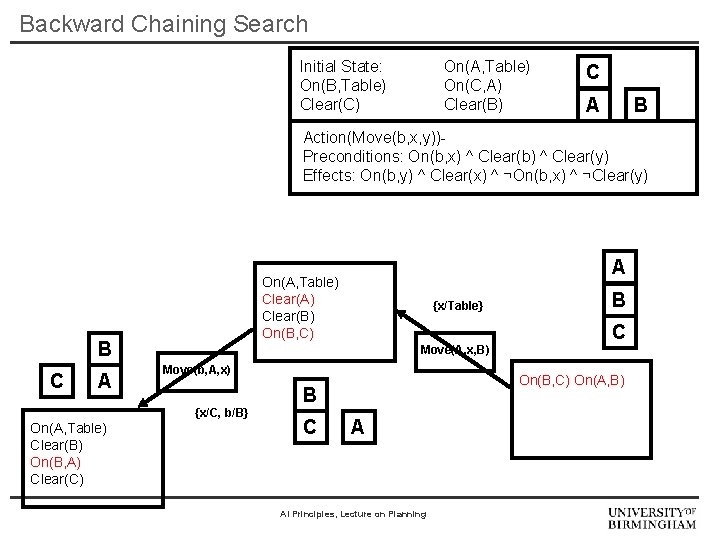

Backward Chaining Search

- Goal: On(B, C), On(A, B)
- Regressing the goal through Move(A, x, B) with {x/Table} gives the sub-goals: On(A, Table), Clear(A), Clear(B), On(B, C)
- Regressing these sub-goals through Move(b, A, x) with {x/C, b/B} gives: On(A, Table), Clear(B), On(B, A), Clear(C)

Backward Chaining Search

- Initial State: On(B, Table), Clear(C), On(A, Table), On(C, A), Clear(B)
- Goal: On(B, C), On(A, B)
- Regressing the goal through Move(A, x, B) with {x/Table} gives the sub-goals: On(A, Table), Clear(A), Clear(B), On(B, C)
- These sub-goals can be regressed in two ways:
  - Through Move(b, A, x) with {x/C, b/B}, giving: On(A, Table), Clear(B), On(B, A), Clear(C)
  - Through Move(B, x, C) with {x/Table}, giving: On(A, Table), On(B, Table), Clear(B), Clear(C), Clear(A)

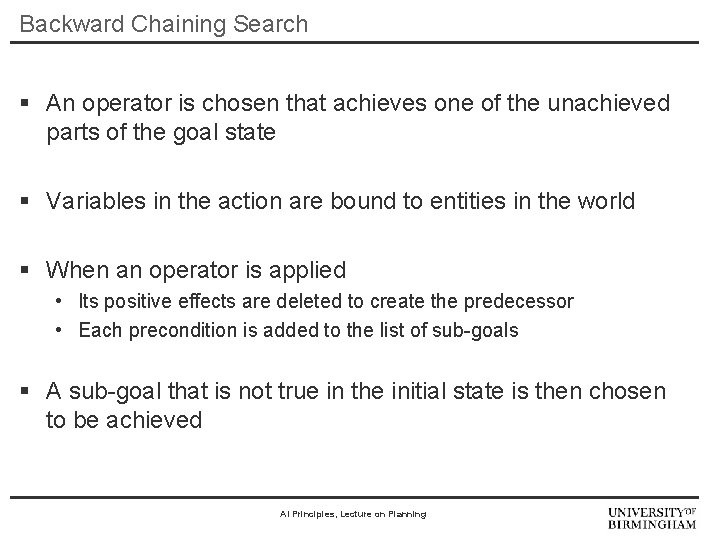

Backward Chaining Search

- An operator is chosen that achieves one of the unachieved parts of the goal state
- Variables in the action are bound to entities in the world
- When an operator is applied:
  - Its positive effects are deleted to create the predecessor
  - Each precondition is added to the list of sub-goals
- A sub-goal that is not true in the initial state is then chosen to be achieved
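The regression step described above can be sketched as follows. The helper names `move` and `regress` are hypothetical, and the extra check that the action deletes none of the goal literals is a standard consistency condition added in this sketch rather than something stated on the slide.

```python
def move(b, x, y):
    """Ground instance of the lecture's Move(b, x, y) operator."""
    preconditions = frozenset({("On", b, x), ("Clear", b), ("Clear", y)})
    add_list = frozenset({("On", b, y), ("Clear", x)})
    delete_list = frozenset({("On", b, x), ("Clear", y)})
    return preconditions, add_list, delete_list

def regress(goal, action):
    """Return the predecessor sub-goals, or None if the action is not usable."""
    preconditions, add_list, delete_list = action
    achieves_something = bool(add_list & goal)
    undoes_something = bool(delete_list & goal)  # assumption: reject such actions
    if not achieves_something or undoes_something:
        return None
    # Delete the positive effects, then add each precondition as a sub-goal.
    return (goal - add_list) | preconditions

goal = frozenset({("On", "B", "C"), ("On", "A", "B")})

# Move(A, x, B) with {x/Table}
step1 = regress(goal, move("A", "Table", "B"))

# Move(B, x, C) with {x/Table}
step2 = regress(step1, move("B", "Table", "C"))

# Alternative branch: Move(b, A, x) with {x/C, b/B}
branch = regress(step1, move("B", "A", "C"))
```

The results reproduce the sub-goal sets on the preceding slides: `step1` is On(A, Table), Clear(A), Clear(B), On(B, C), and `step2` adds On(B, Table) and Clear(C) in place of On(B, C).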

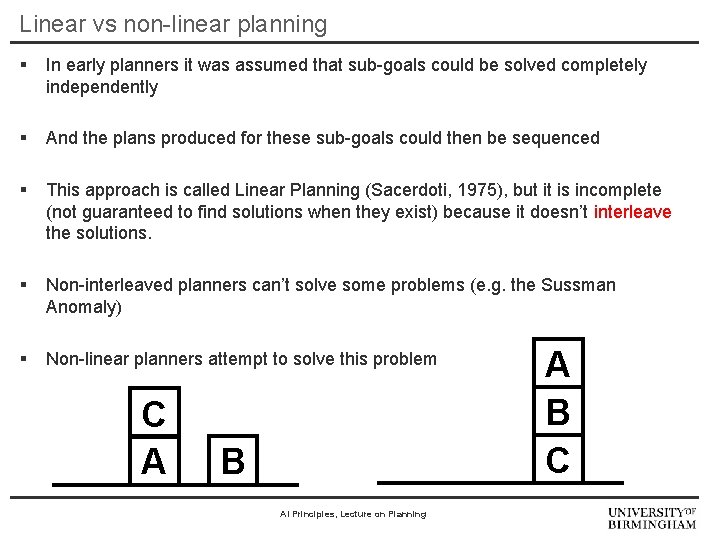

Linear vs non-linear planning

- In early planners it was assumed that sub-goals could be solved completely independently
- And the plans produced for these sub-goals could then be sequenced
- This approach is called Linear Planning (Sacerdoti, 1975), but it is incomplete (not guaranteed to find solutions when they exist) because it doesn’t interleave the solutions
- Non-interleaved planners can’t solve some problems (e.g. the Sussman Anomaly)
- Non-linear planners attempt to solve this problem

Conclusion

- Planning is an important area of AI. State of the art planners are now beyond human level performance.
- Specialist representations of action effects have been used to overcome issues of representational and inferential inefficiency
- The STRIPS representation has been influential, succeeded by ADL and the overarching framework of PDDL
- State space planning still requires search, using either forward or backward chaining
- This is the ancient history of planning. Later came partial order planning (1975-1995), satisfiability planners (1992 on), graph planning (1995 on), the resurgence of state space planning (1996 on), and planning under uncertainty (decision theoretic planning) (1997 on).
