Personalized Federated Learning on Non-IID Data

Yutao Huang*, Lingyang Chu*, Zirui Zhou, Lanjun Wang, Jiangchuan Liu, Jian Pei and Yong Zhang (*: equal contribution)

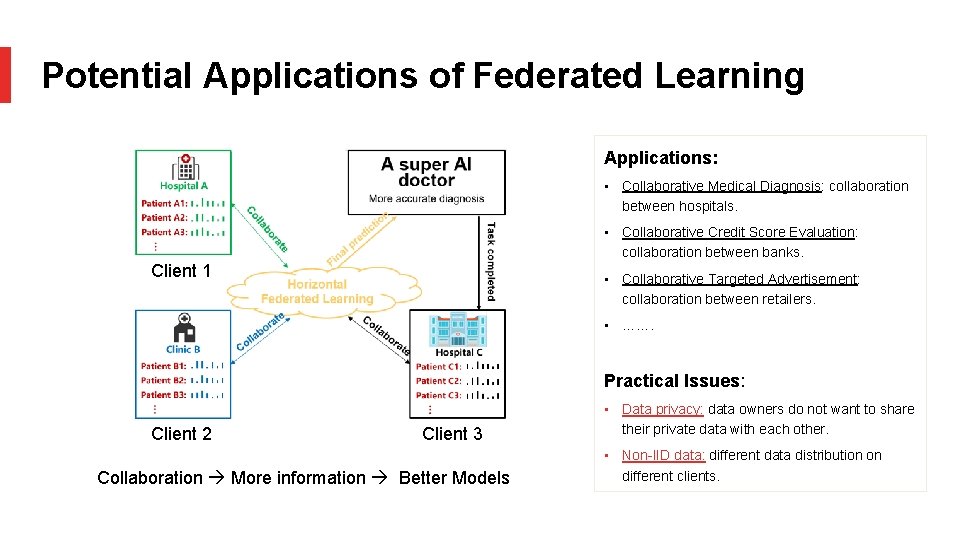

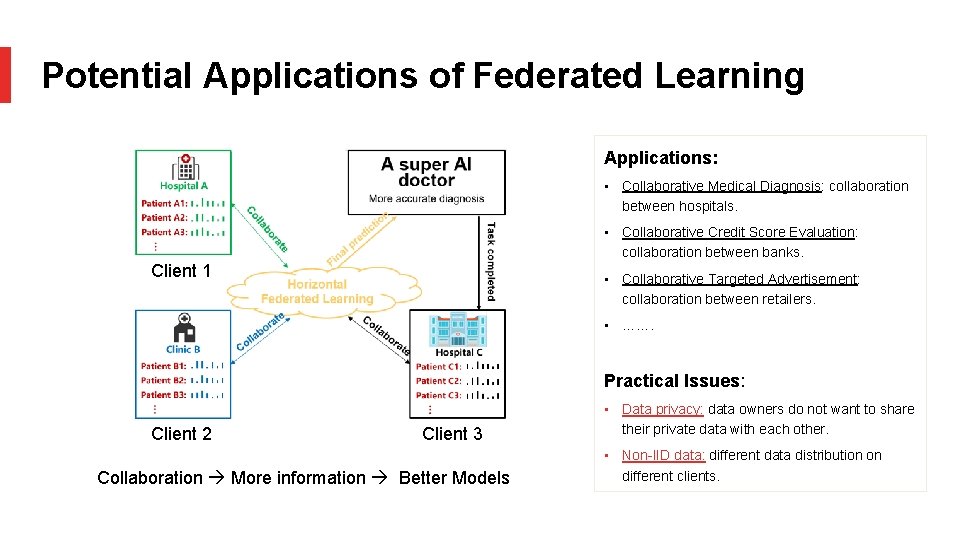

Potential Applications of Federated Learning
Applications:
• Collaborative medical diagnosis: collaboration between hospitals.
• Collaborative credit score evaluation: collaboration between banks.
• Collaborative targeted advertising: collaboration between retailers.
• …
(Diagram: clients 1, 2 and 3 collaborate; collaboration brings more information, which leads to better models.)
Practical issues:
• Data privacy: data owners do not want to share their private data with each other.
• Non-IID data: data distributions differ across clients.

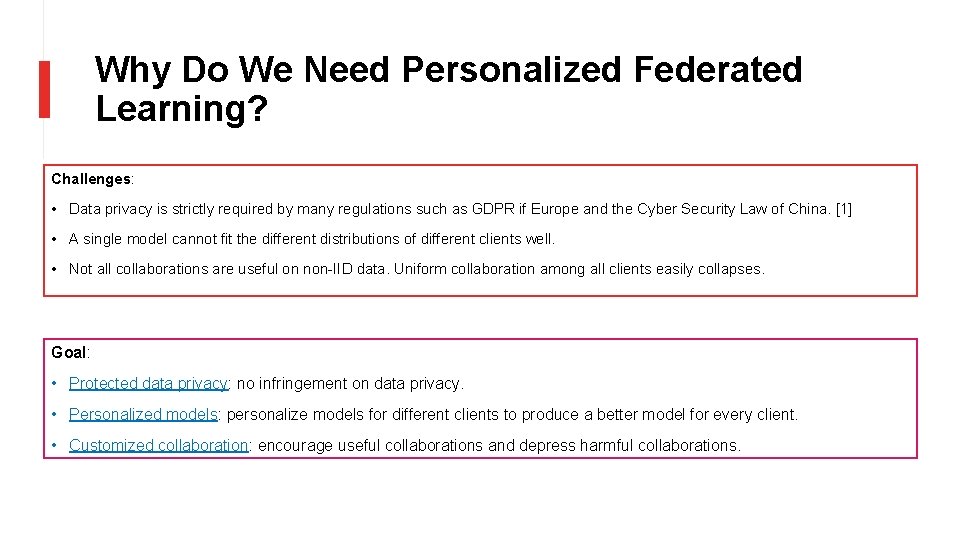

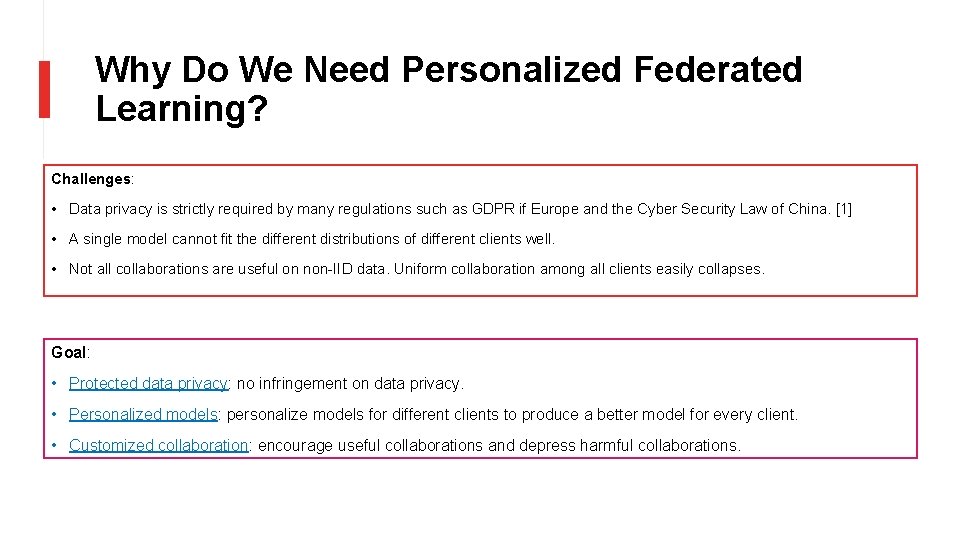

Why Do We Need Personalized Federated Learning?
Challenges:
• Data privacy is strictly required by many regulations, such as the GDPR in Europe and the Cybersecurity Law of China [1].
• A single model cannot fit the different data distributions of different clients well.
• Not all collaborations are useful on non-IID data; uniform collaboration among all clients can easily break down.
Goals:
• Protected data privacy: no infringement on data privacy.
• Personalized models: a personalized model for each client, so that every client obtains a better model.
• Customized collaboration: encourage useful collaborations and suppress harmful ones.

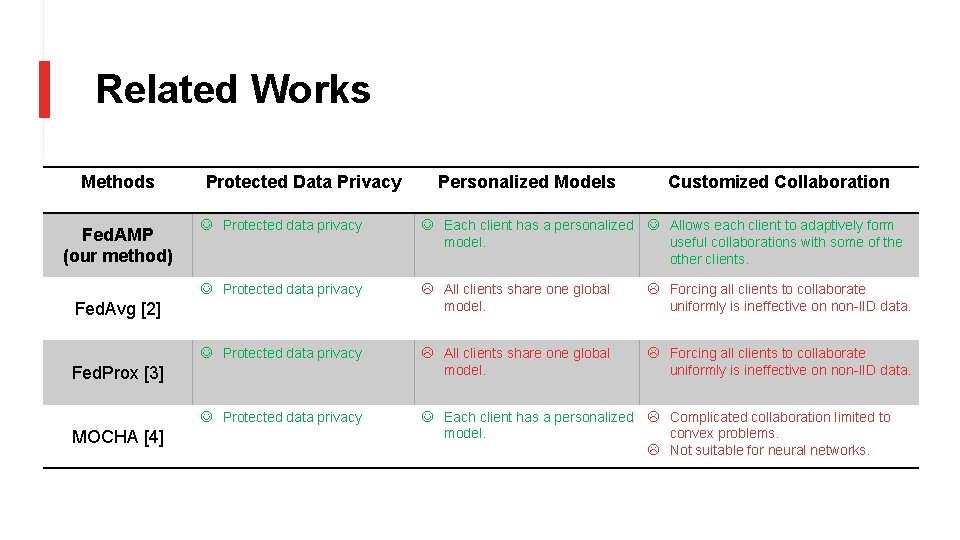

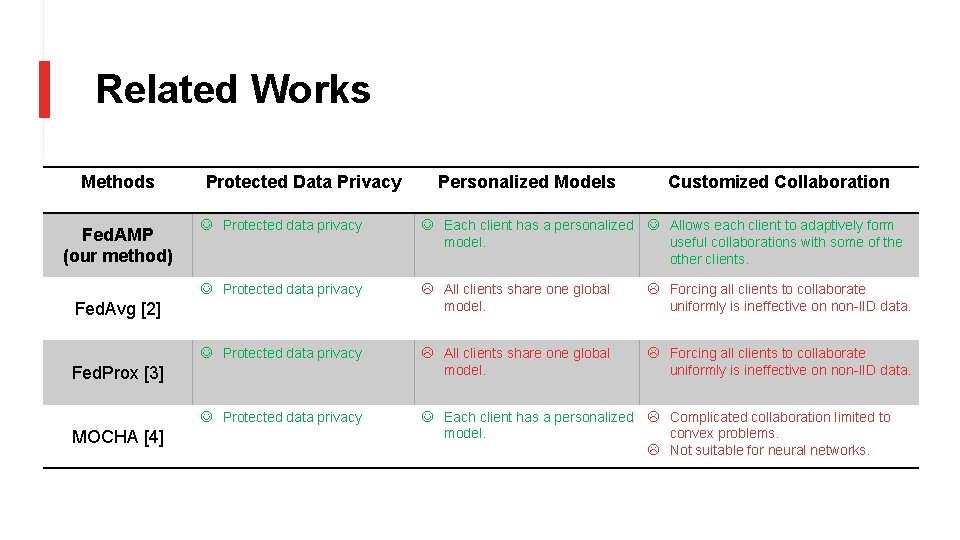

Related Works

| Method | Protected data privacy | Personalized models | Customized collaboration |
|---|---|---|---|
| FedAMP (our method) | ✓ | ✓ Each client has a personalized model. | ✓ Allows each client to adaptively form useful collaborations with some of the other clients. |
| FedAvg [2], FedProx [3] | ✓ | ✗ All clients share one global model. | ✗ Forcing all clients to collaborate uniformly is ineffective on non-IID data. |
| MOCHA [4] | ✓ | ✓ Each client has a personalized model. | ✗ Complicated collaboration, limited to convex problems; not suitable for neural networks. |

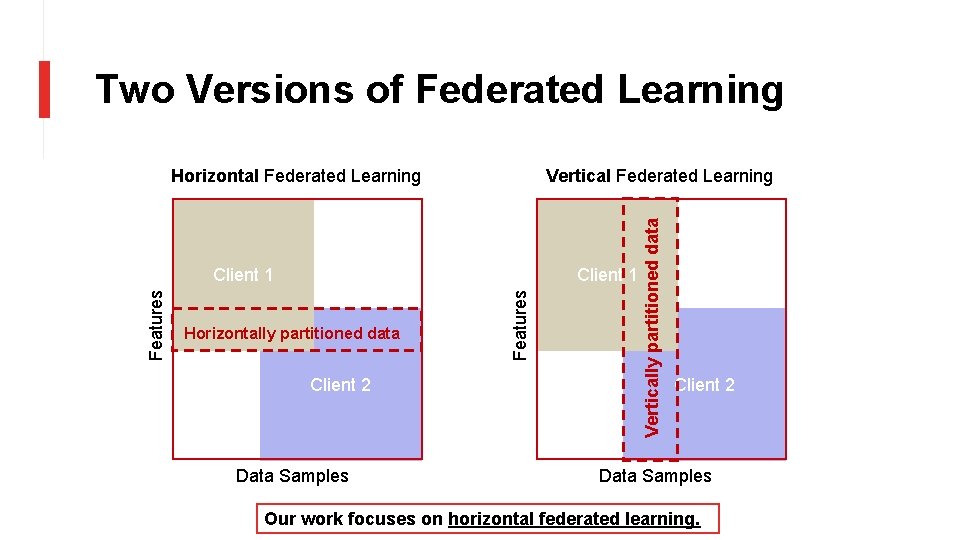

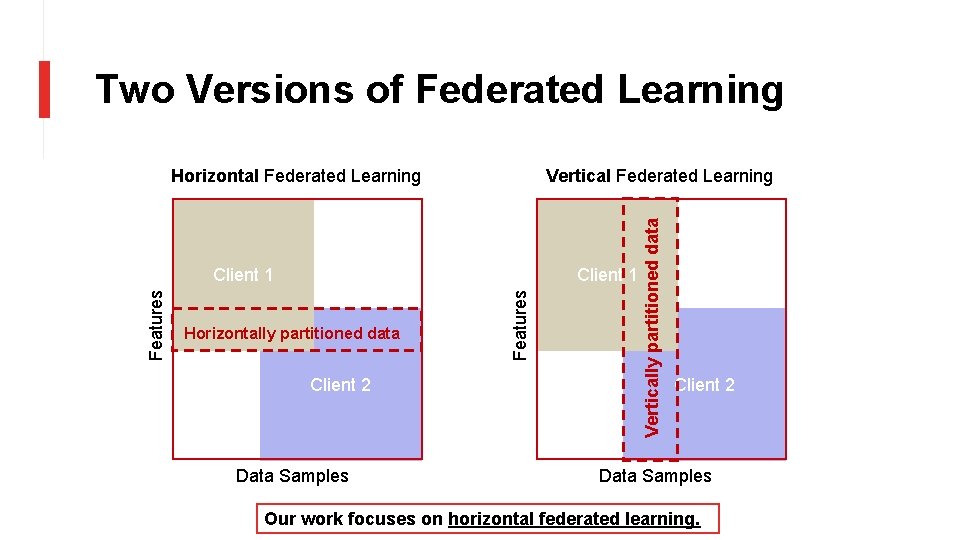

Two Versions of Federated Learning
• Horizontal federated learning: the data is horizontally partitioned, so clients hold different data samples described by the same features.
• Vertical federated learning: the data is vertically partitioned, so clients hold different features of the same data samples.
Our work focuses on horizontal federated learning.
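To make the distinction concrete, here is a minimal sketch on a toy data table (hypothetical numbers) in which rows are data samples and columns are features. The helper names `horizontal_split` and `vertical_split` are illustrative only; they are not part of FedAMP or of any library.

```python
# Toy data table: rows are data samples, columns are features (hypothetical values).
table = [
    [1.0, 2.0, 3.0, 4.0],      # sample 0
    [5.0, 6.0, 7.0, 8.0],      # sample 1
    [9.0, 10.0, 11.0, 12.0],   # sample 2
    [13.0, 14.0, 15.0, 16.0],  # sample 3
]


def horizontal_split(rows, n_clients):
    """Horizontal FL: each client holds a disjoint subset of samples (rows),
    and every client has all feature columns."""
    return [rows[i::n_clients] for i in range(n_clients)]


def vertical_split(rows, feature_groups):
    """Vertical FL: each client holds every sample, but only its own
    subset of feature columns."""
    return [[[row[j] for j in group] for row in rows] for group in feature_groups]


h = horizontal_split(table, 2)          # client 0: samples 0 and 2; client 1: samples 1 and 3
v = vertical_split(table, [[0, 1], [2, 3]])  # client 0: features 0-1; client 1: features 2-3
```

In the horizontal case each client's slice keeps all four columns but only two rows; in the vertical case each slice keeps all four rows but only two columns.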

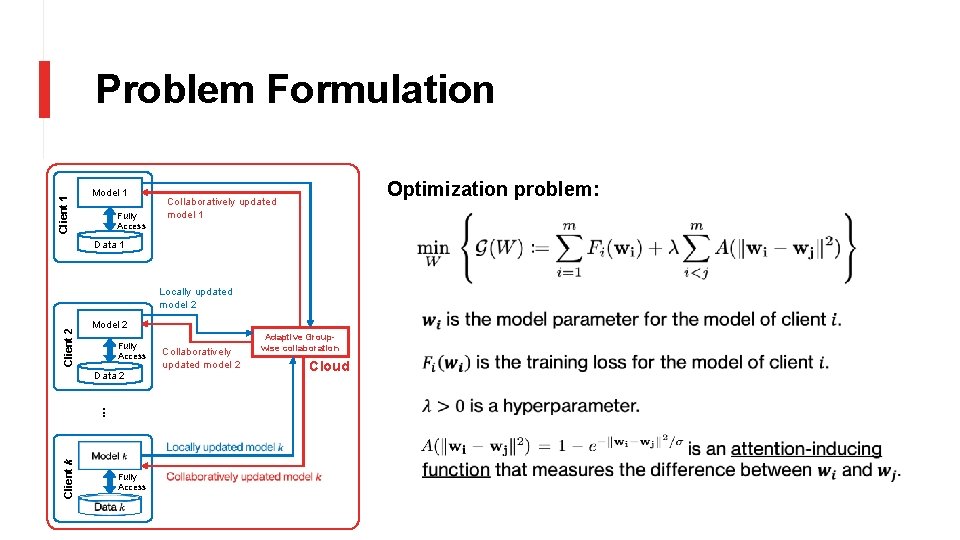

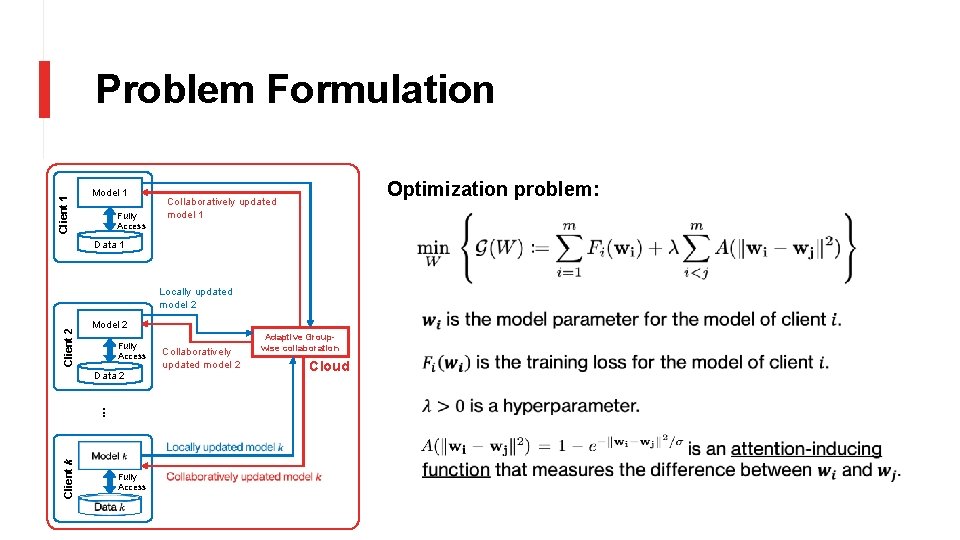

Problem Formulation
(Diagram: clients 1, 2, …, k each have full access to their own data and model. Each client's locally updated model is combined by the cloud through adaptive group-wise collaboration into a collaboratively updated model for that client.)
Optimization problem:
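A personalized objective with this structure can be sketched as follows, assuming it takes the form G(W) = Σ_i F_i(w_i) + λ Σ_{i<j} A(‖w_i − w_j‖²), where F_i is client i's loss on its private data, W = (w_1, …, w_m) collects the personalized models, and A is an increasing, concave "attention-inducing" function with A(0) = 0. The choice A(x) = 1 − exp(−x/σ), the parameters `lam` and `sigma`, and the function names are assumptions for illustration, not the paper's code.

```python
import math


def attention_fn(x, sigma=1.0):
    """Assumed attention-inducing function A(x) = 1 - exp(-x / sigma):
    increasing and concave on [0, inf), with A(0) = 0."""
    return 1.0 - math.exp(-x / sigma)


def sq_dist(a, b):
    """Squared Euclidean distance between two parameter vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def objective(models, local_losses, lam=1.0, sigma=1.0):
    """G(W) = sum_i F_i(w_i) + lam * sum_{i<j} A(||w_i - w_j||^2)."""
    m = len(models)
    data_term = sum(local_losses[i](models[i]) for i in range(m))
    collab_term = sum(
        attention_fn(sq_dist(models[i], models[j]), sigma)
        for i in range(m)
        for j in range(i + 1, m)
    )
    return data_term + lam * collab_term


# Two clients whose (hypothetical) local losses are minimized at different points.
losses = [lambda w: sq_dist(w, [1.0, 0.0]), lambda w: sq_dist(w, [0.0, 0.0])]
value = objective([[1.0, 0.0], [0.0, 0.0]], losses)
```

With each model at its own local optimum, the data term is 0 and only the collaboration term λ·A(1) remains. Because A saturates, a pair of very different models adds at most λ to G and receives a derivative A′ close to 0, so distant pairs barely influence each other.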

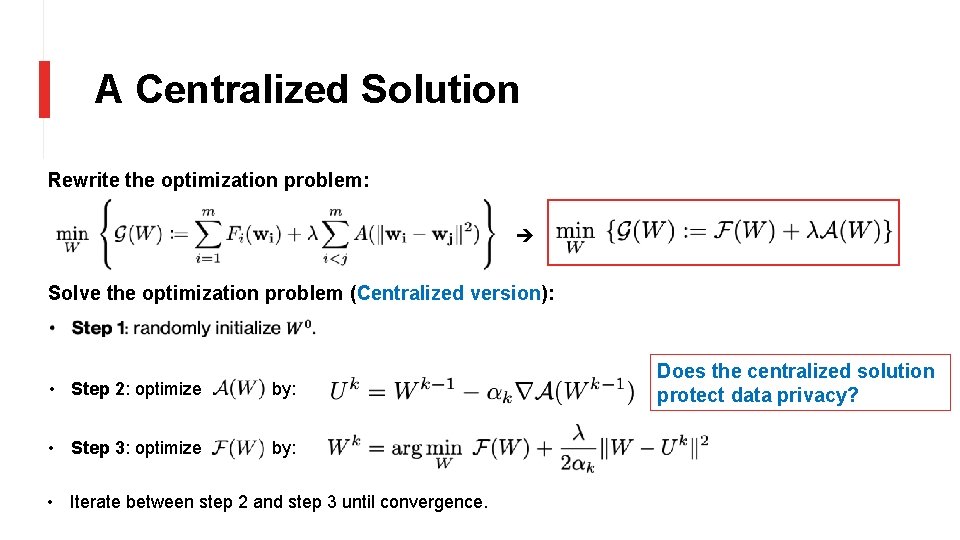

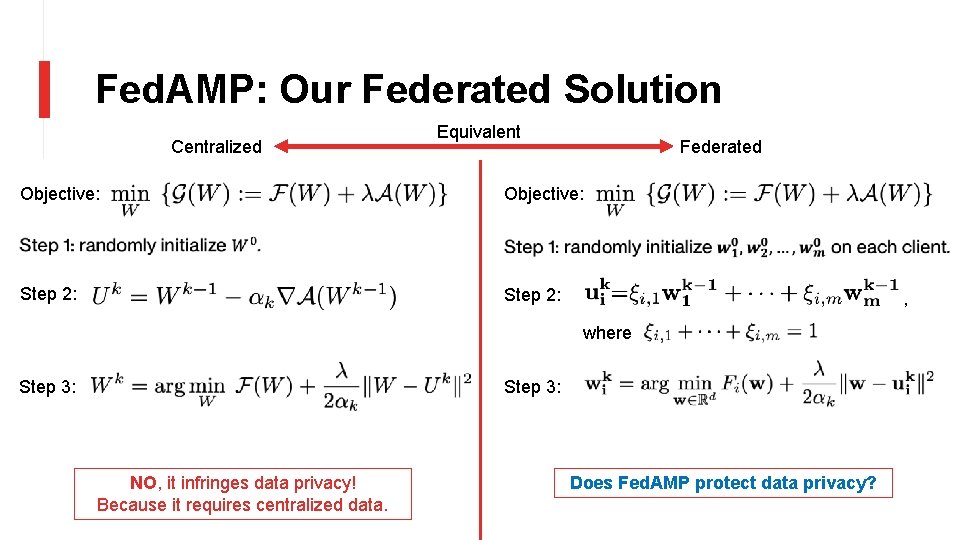

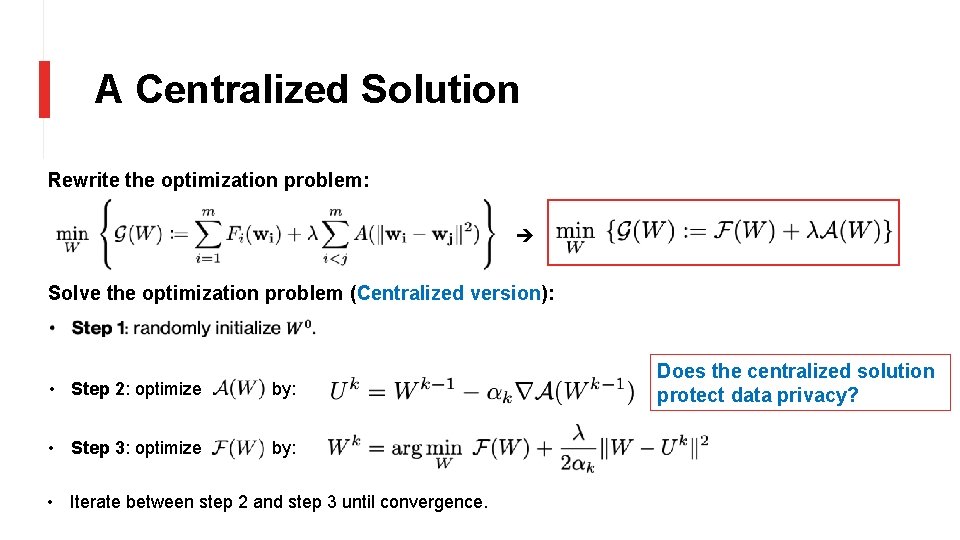

A Centralized Solution
Rewrite the optimization problem:
Solve the optimization problem (centralized version):
• Step 2: optimize by:
• Step 3: optimize by:
• Iterate between Step 2 and Step 3 until convergence.
Does the centralized solution protect data privacy?
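The alternating scheme can be sketched under several stated assumptions. The collaboration term is taken to be λ Σ_{i<j} A(‖w_i − w_j‖²) with the hypothetical A(x) = 1 − exp(−x/σ); Step 2 is assumed to be a gradient step U = W − α∇(Σ_{i<j} A(‖w_i − w_j‖²)); and Step 3 is assumed to be the proximal step W = argmin_W Σ_i F_i(w_i) + (λ/(2α))‖W − U‖². This pairing of step sizes is one consistent choice whose fixed points are stationary points of the objective; the paper's exact constants may differ. To keep Step 3 in closed form, each local loss is the hypothetical F_i(w) = ‖w − c_i‖², where the center c_i stands in for client i's data. All function names are illustrative.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two parameter vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def attention_grad(x, sigma=1.0):
    """A'(x) for the assumed attention function A(x) = 1 - exp(-x / sigma)."""
    return math.exp(-x / sigma) / sigma


def step2_gradient(models, alpha, sigma=1.0):
    """Step 2 (assumed form): U = W - alpha * grad of sum_{i<j} A(||w_i - w_j||^2).
    The gradient w.r.t. w_i is sum_{j != i} 2 * A'(d_ij) * (w_i - w_j)."""
    m = len(models)
    updated = []
    for i in range(m):
        u = list(models[i])
        for j in range(m):
            if j == i:
                continue
            coeff = 2 * alpha * attention_grad(sq_dist(models[i], models[j]), sigma)
            u = [uk - coeff * (wi - wj) for uk, wi, wj in zip(u, models[i], models[j])]
        updated.append(u)
    return updated


def step3_proximal(centers, cloud_models, alpha, lam):
    """Step 3 (assumed form): argmin_w ||w - c_i||^2 + lam / (2 * alpha) * ||w - u_i||^2,
    which for this quadratic loss equals (c_i + mu * u_i) / (1 + mu), mu = lam / (2 * alpha)."""
    mu = lam / (2 * alpha)
    return [
        [(c + mu * u) / (1 + mu) for c, u in zip(c_i, u_i)]
        for c_i, u_i in zip(centers, cloud_models)
    ]


def centralized_solve(centers, alpha=0.1, lam=1.0, sigma=1.0, iters=50):
    """Alternate Step 2 and Step 3. Note that it needs every client's data
    (here, every center c_i) in one place."""
    models = [list(c) for c in centers]  # start each model at its local optimum
    for _ in range(iters):
        cloud_models = step2_gradient(models, alpha, sigma)
        models = step3_proximal(centers, cloud_models, alpha, lam)
    return models


final = centralized_solve([[0.0], [0.1], [5.0]])
```

Clients 0 and 1, whose local optima are 0.1 apart, end up with models much closer than that, while client 2's model is essentially unaffected. The solver reads every `centers[i]` directly, which is exactly the data-centralization issue the question above points at.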

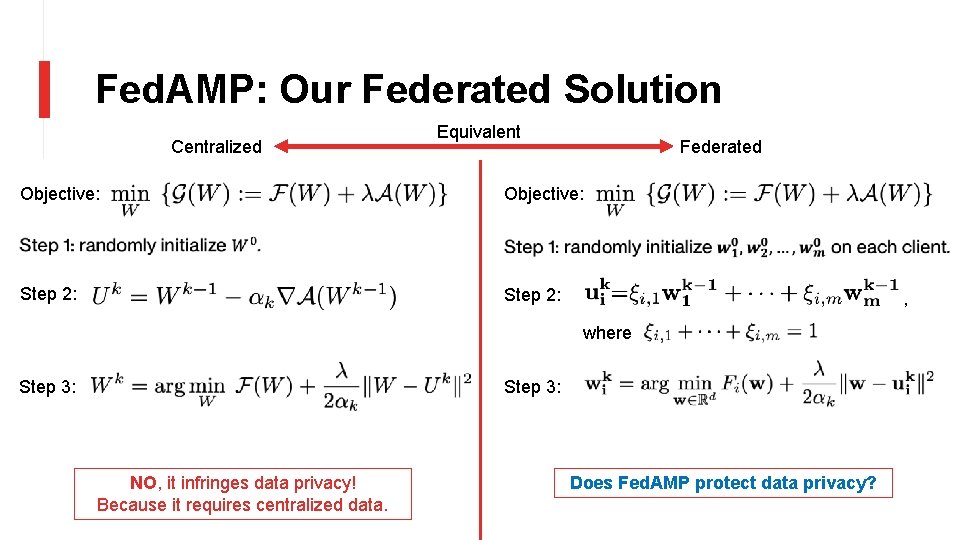

No, it infringes data privacy, because it requires centralized data.

FedAMP: Our Federated Solution
Centralized objective and equivalent federated objective:
• Step 2:
• Step 3:
Does FedAMP protect data privacy?

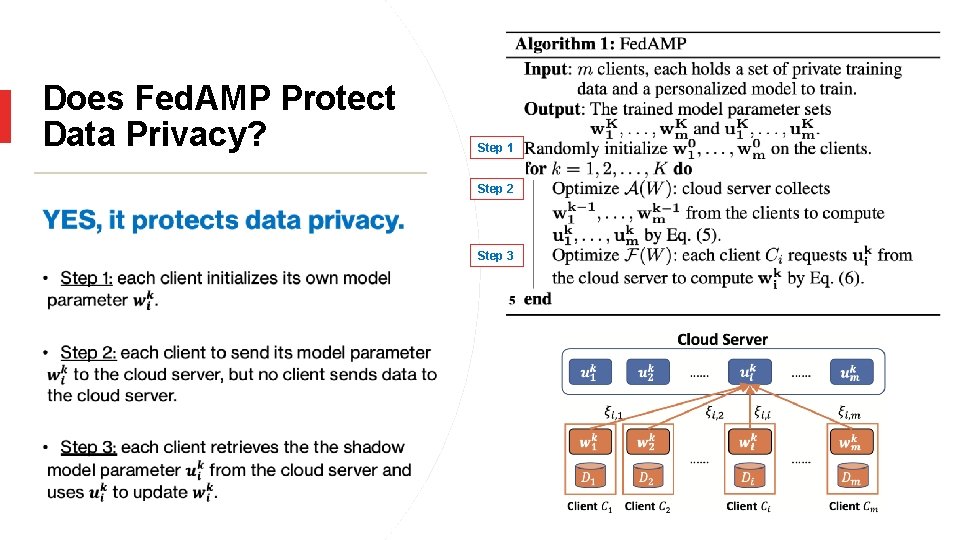

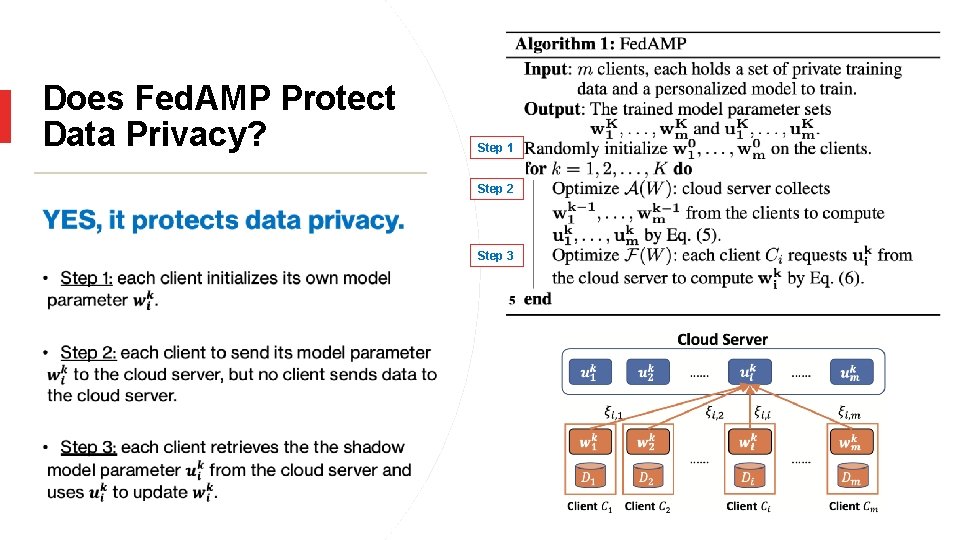

Does FedAMP Protect Data Privacy?
(Diagram: Step 1, Step 2, Step 3.)
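The three steps can be sketched as one communication round in which only model parameters cross the client/cloud boundary. This is a minimal sketch assuming that Step 1 is the model upload, Step 2 is cloud-side message passing, and Step 3 is a local update on private data. It further assumes message weights ξ_ij = 2αA′(‖w_i − w_j‖²) with the hypothetical A(x) = 1 − exp(−x/σ), and a hypothetical squared-error local loss so that each client's update has a closed form. `Client`, `cloud_step`, and `federated_round` are illustrative names, not the paper's code.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two parameter vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


class Client:
    """Holds private data locally; only model parameters ever leave it."""

    def __init__(self, data, lam=1.0, alpha=0.1):
        self._data = data  # private samples, never sent to the cloud
        self.lam = lam
        self.alpha = alpha
        self._mean = [sum(col) / len(data) for col in zip(*data)]
        self.model = list(self._mean)  # start at the local optimum

    def upload(self):
        """Step 1: send a copy of the model parameters (no data)."""
        return list(self.model)

    def local_update(self, u):
        """Step 3: minimize mean_x ||w - x||^2 + lam / (2 * alpha) * ||w - u||^2
        on private data; for this assumed loss the minimizer is
        (mean + mu * u) / (1 + mu) with mu = lam / (2 * alpha)."""
        mu = self.lam / (2 * self.alpha)
        self.model = [(m + mu * ui) / (1 + mu) for m, ui in zip(self._mean, u)]


def cloud_step(models, alpha=0.1, sigma=1.0):
    """Step 2: attentive message passing computed from uploaded models only."""
    m = len(models)
    personalized = []
    for i in range(m):
        xi = [
            0.0 if j == i
            else 2 * alpha * math.exp(-sq_dist(models[i], models[j]) / sigma) / sigma
            for j in range(m)
        ]
        xi[i] = 1.0 - sum(xi)  # self weight, so all weights sum to 1
        personalized.append(
            [sum(xi[j] * models[j][k] for j in range(m)) for k in range(len(models[i]))]
        )
    return personalized


def federated_round(clients, alpha=0.1, sigma=1.0):
    uploaded = [c.upload() for c in clients]          # Step 1
    personalized = cloud_step(uploaded, alpha, sigma)  # Step 2
    for client, u in zip(clients, personalized):       # Step 3
        client.local_update(u)


clients = [Client([[0.0], [0.2]]), Client([[0.1], [0.3]]), Client([[5.0], [5.2]])]
for _ in range(10):
    federated_round(clients)
```

After a few rounds the two clients with similar data have pulled their models together, while the third client's model stays essentially where its own data puts it. The cloud only ever sees the lists returned by `upload`, never `_data`.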

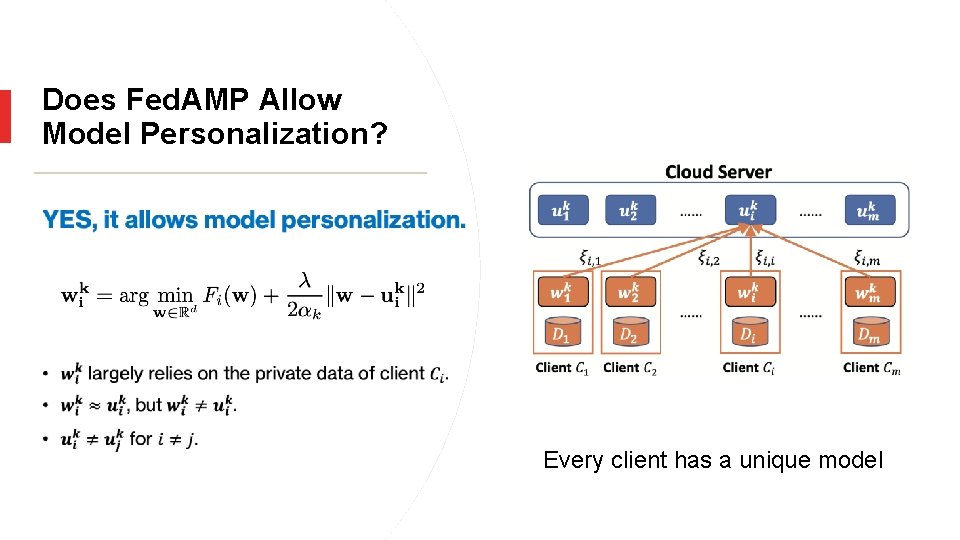

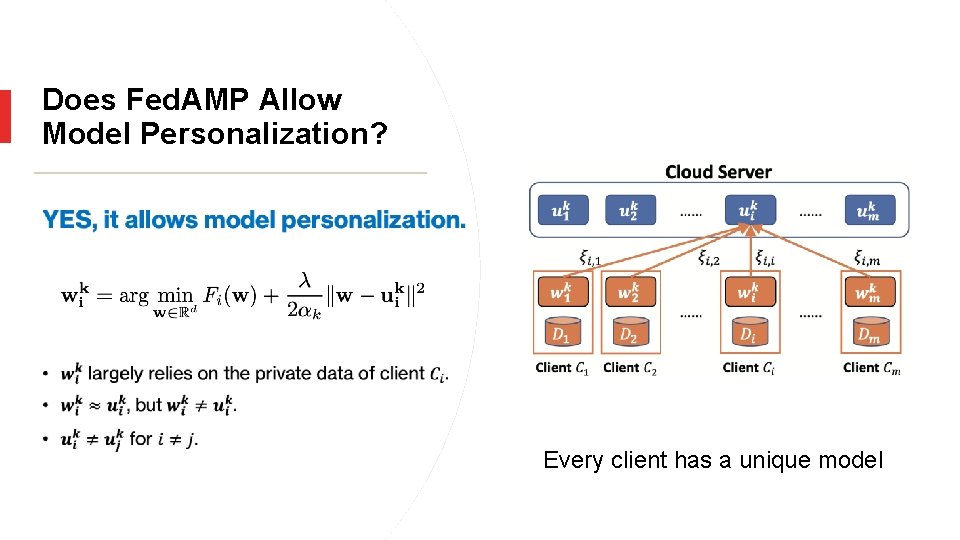

Does FedAMP Allow Model Personalization? Yes: every client has its own unique model.

Does FedAMP Induce Collaboration? With whom should each client collaborate more, and with whom less?

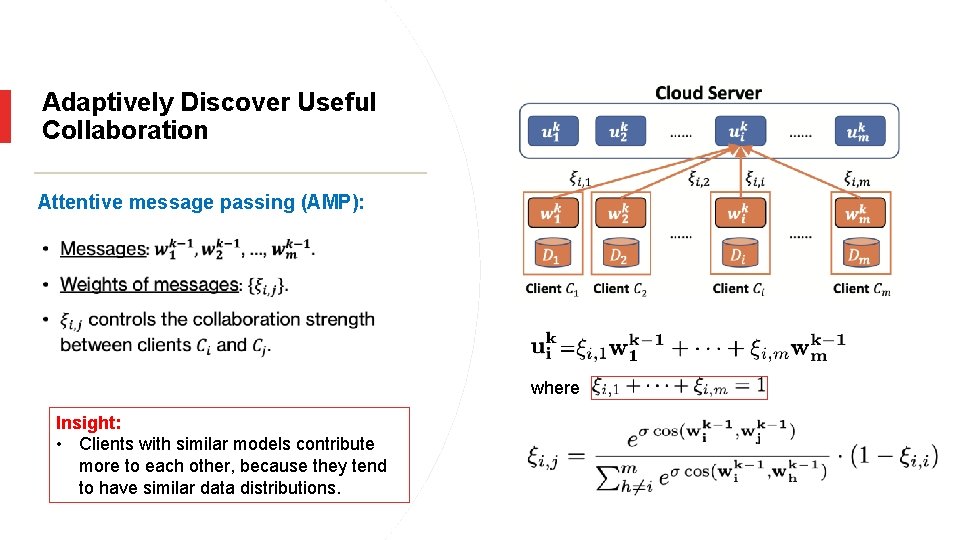

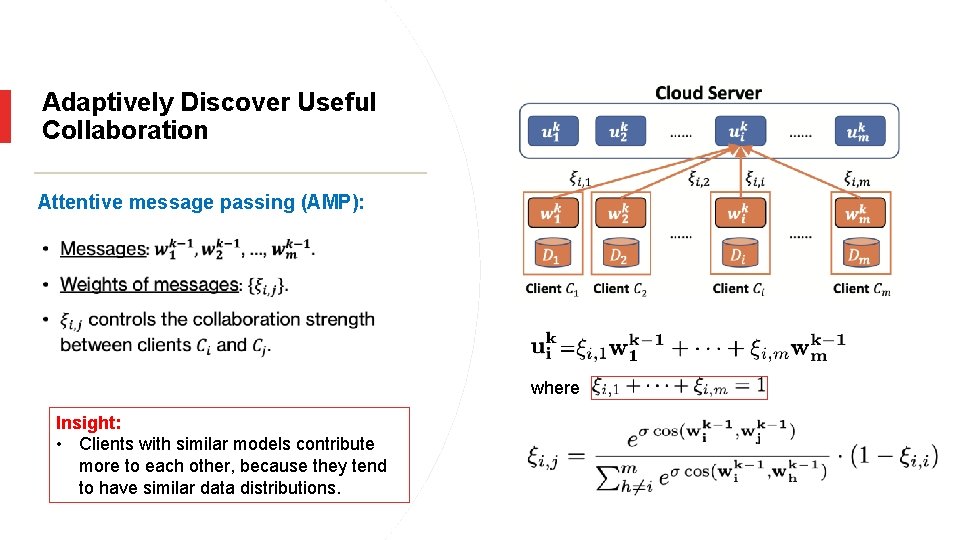

Adaptively Discover Useful Collaboration
Attentive message passing (AMP):
Insight:
• Clients with similar models contribute more to each other, because they tend to have similar data distributions.
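A minimal sketch of the message computed for one client, assuming message weights ξ_ij = 2αA′(‖w_i − w_j‖²) for j ≠ i with the hypothetical attention function A(x) = 1 − exp(−x/σ), and a self weight ξ_ii = 1 − Σ_{j≠i} ξ_ij so that u_i is a weighted average of all models. The exact weighting used by FedAMP may differ; `amp_message` is an illustrative name.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two parameter vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def amp_message(models, i, alpha=0.1, sigma=1.0):
    """Attentive message u_i = sum_j xi_ij * w_j for client i (hypothetical weights).

    xi_ij = 2 * alpha * A'(||w_i - w_j||^2) for j != i, with the assumed
    A(x) = 1 - exp(-x / sigma), and xi_ii = 1 - sum_{j != i} xi_ij.
    """
    weights = []
    for j, w_j in enumerate(models):
        if j == i:
            weights.append(0.0)  # placeholder for the self weight
        else:
            d = sq_dist(models[i], w_j)
            weights.append(2 * alpha * math.exp(-d / sigma) / sigma)
    weights[i] = 1.0 - sum(weights)  # weights now sum to 1
    u_i = [
        sum(weights[j] * models[j][k] for j in range(len(models)))
        for k in range(len(models[i]))
    ]
    return u_i, weights


models = [[0.0, 0.0], [0.1, 0.0], [3.0, 3.0]]
u0, w0 = amp_message(models, 0)
```

Here client 1, whose model is close to client 0's, receives a weight of about 0.2, while client 2 receives a weight on the order of 1e-9. Since each off-diagonal weight is at most 2α/σ, choosing α so that 2α(m − 1)/σ < 1 keeps the self weight positive.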

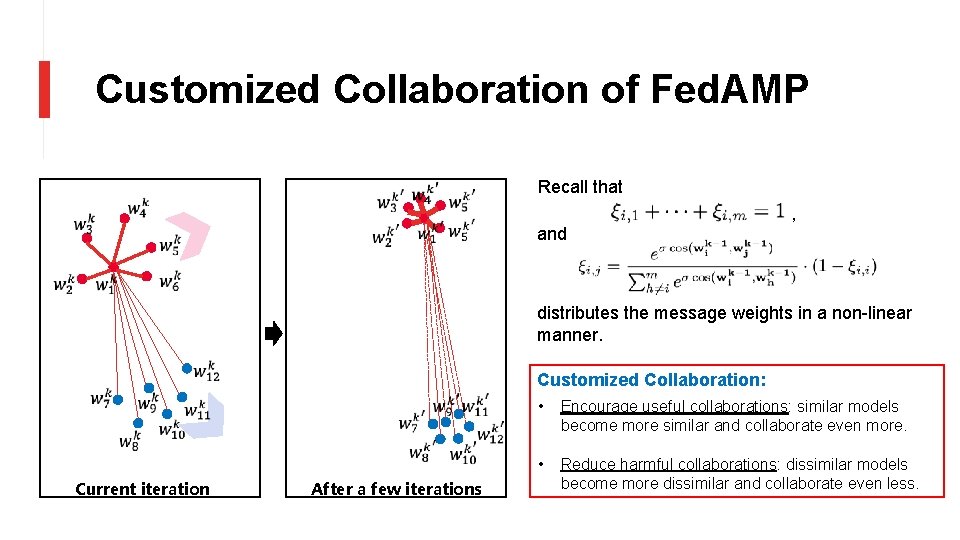

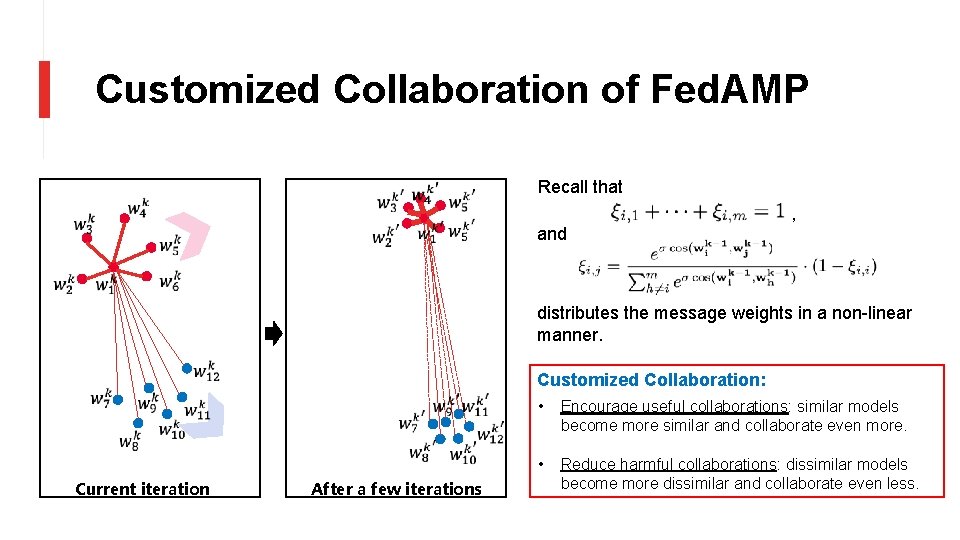

Customized Collaboration of FedAMP
Recall that AMP distributes the message weights in a non-linear manner.
(Diagram: collaboration in the current iteration and after a few iterations.)
• Encourage useful collaborations: similar models become more similar and collaborate even more.
• Reduce harmful collaborations: dissimilar models become more dissimilar and collaborate even less.
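The non-linearity can be seen by evaluating a single message weight as a function of the model distance d, assuming ξ = 2αA′(d²) with the hypothetical A(x) = 1 − exp(−x/σ). Because A′ then decays exponentially in the squared distance, going from d = 1 to d = 2 divides the weight by e³ ≈ 20, far more than the distance itself grows. `message_weight` is an illustrative name.

```python
import math


def message_weight(dist, alpha=0.1, sigma=1.0):
    """Hypothetical AMP weight xi = 2 * alpha * A'(dist**2), where
    A(x) = 1 - exp(-x / sigma), so A'(x) = exp(-x / sigma) / sigma."""
    return 2 * alpha * math.exp(-dist ** 2 / sigma) / sigma


for d in (0.5, 1.0, 2.0, 3.0):
    print(f"distance {d}: weight {message_weight(d):.5f}")

# Doubling the distance from 1 to 2 divides the weight by e**3.
ratio = message_weight(1.0) / message_weight(2.0)
```

Under this assumption, once two models drift closer their mutual weight grows quickly, and once they drift apart it vanishes quickly, which is the self-reinforcing behavior the bullets above describe.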

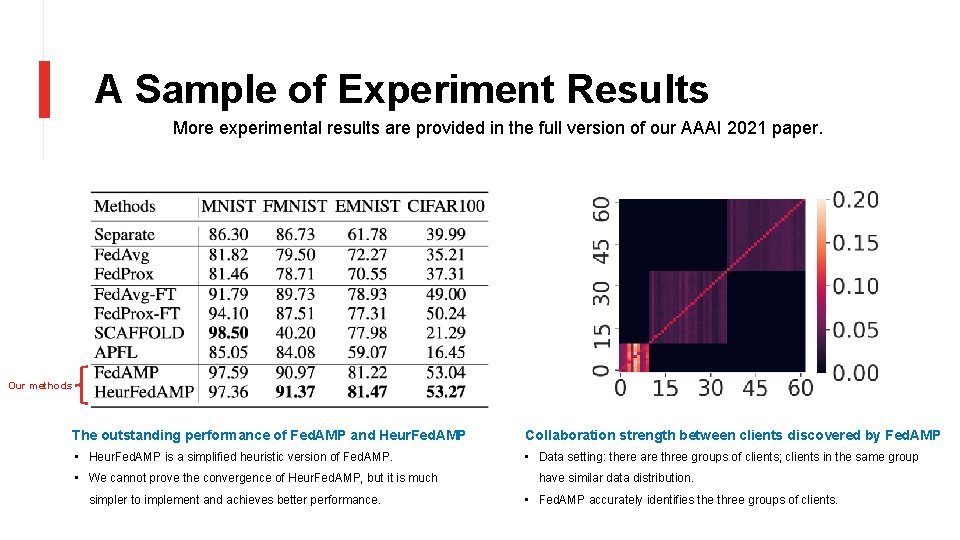

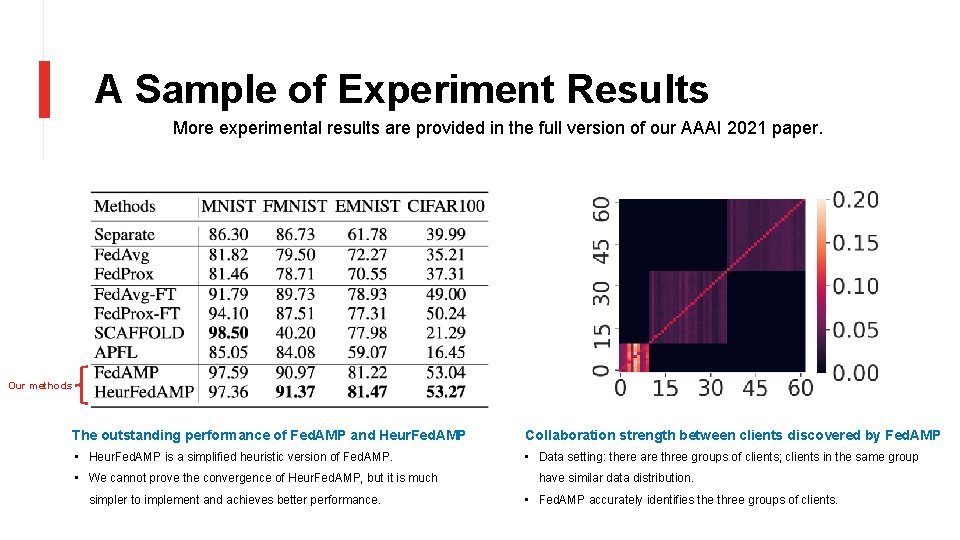

A Sample of Experimental Results
More experimental results are provided in the full version of our AAAI 2021 paper.
(Figures: the outstanding performance of our methods, FedAMP and HeurFedAMP; the collaboration strength between clients discovered by FedAMP.)
• HeurFedAMP is a simplified heuristic version of FedAMP.
• We cannot prove the convergence of HeurFedAMP, but it is much simpler to implement and achieves better performance.
• Data setting: there are three groups of clients; clients in the same group have similar data distributions.
• FedAMP accurately identifies the three groups of clients.

![References slide](https://slidetodoc.com/presentation_image_h2/b44580fa861066d324e3dfb4894be82e/image-15.jpg)

References
• [1] Qiang Yang, Yang Liu, Tianjian Chen and Yongxin Tong. "Federated machine learning: Concept and applications." ACM Transactions on Intelligent Systems and Technology (TIST), 10(2): 12, 2019.
• [2] H. Brendan McMahan, Eider Moore, Daniel Ramage and Seth Hampson. "Communication-efficient learning of deep networks from decentralized data." AISTATS, 2017.
• [3] Tian Li, Anit Kumar Sahu, Manzil Zaheer, Maziar Sanjabi, Ameet Talwalkar and Virginia Smith. "Federated optimization in heterogeneous networks." MLSys, 2020.
• [4] Virginia Smith, Chao-Kai Chiang, Maziar Sanjabi and Ameet S. Talwalkar. "Federated multi-task learning." Advances in Neural Information Processing Systems (NeurIPS), pp. 4424-4434, 2017.

Thanks Q&A