PMSE: Personalized Mobile Search Engine
IEEE TRANSACTIONS ON KNOWLEDGE AND DATA ENGINEERING, VOL. 25, NO. 4, APRIL 2013
2015/11/06 M1 ikuta

1. INTRODUCTION
A major problem in mobile search
• The interactions between users and search engines are limited by the small form factors of mobile devices.
• Mobile users tend to submit shorter, and hence more ambiguous, queries than their web search counterparts.

1. INTRODUCTION
→ Mobile search engines must be able to personalize the results according to the users' profiles.
Approach to capturing a user's interests
• Analyze the user's clickthrough data [5], [10], [15], [18].

1. INTRODUCTION
Search engine personalization in most previous work
• is based on users' concept preferences [12].
• assumes that all concepts are of the same type.
Personalized Mobile Search Engine (PMSE)
• separates a user's concept preferences into location concepts and content concepts, a distinction that is important in mobile search.
• Content concepts (content ontology)
• Location concepts (location ontology)

1. INTRODUCTION
The introduction of location preferences offers PMSE an additional dimension for capturing a user's interest and an opportunity to enhance search quality for users.
Example for the query "hotel":
• Location concept: Japan
• Content concepts: room rate, facilities

1. INTRODUCTION
GPS locations play an important role in mobile web search.
Example for the query "hotel":
• Location concept: Japan
• Content concepts: room rate, facilities
• GPS location

1. INTRODUCTION
The rest of the paper is organized as follows:
2. Related work
3. The architecture and system design of PMSE
4. The method for building the content and location ontologies
5. The notions of content and location entropy, and their use in search personalization
6. The method for extracting user preferences from the clickthrough data
7. The Ranking SVM (RSVM) method [10] for learning a linear weight vector to rank the search results
8. The performance results
9. Conclusion

2. RELATED WORK
The differences between existing works and PMSE are:
1. PMSE does not require extra effort from the user.
o Most existing location-based search systems require extra effort from users.
2. PMSE adopts the server-client model.
o User profiles can be trained quickly and efficiently.
3. PMSE addresses the issue of privacy preservation.
o Existing works on personalization do not address privacy preservation.
o Two privacy parameters control the exposure of personal information.
o Good ranking quality is maintained.

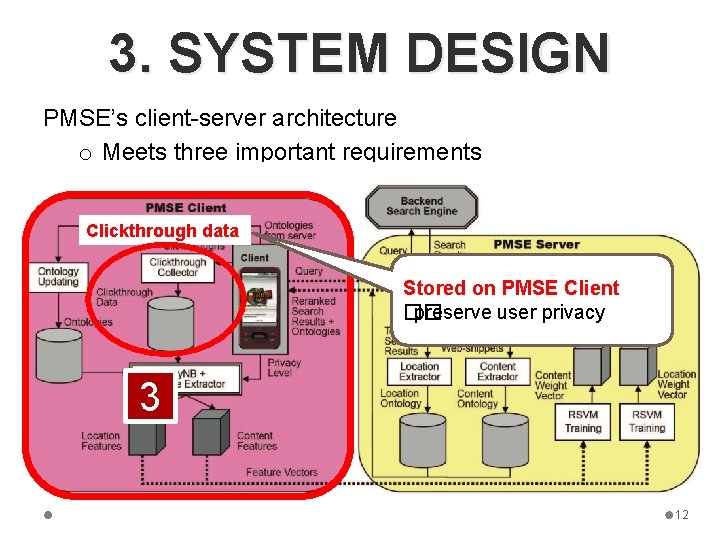

3. SYSTEM DESIGN
PMSE's client-server architecture
o Meets three important requirements:
1. Computation-intensive tasks are handled by the PMSE server. → Addresses the limited computational power of mobile devices.
2. Data transmission between client and server is minimized. → Ensures fast and efficient processing of the search.
3. Clickthrough data are stored on the PMSE client. → Preserves user privacy.

3. SYSTEM DESIGN
PMSE client (limited computational power)
Responsible for storing
• The user clickthroughs
• The ontologies derived from the PMSE server
Handles simple tasks
• Updating clickthroughs and ontologies
• Creating feature vectors
• Displaying reranked search results

3. SYSTEM DESIGN
PMSE server
Handles heavy tasks
• RSVM training
• Reranking of search results
RSVM: Ranking SVM

3. SYSTEM DESIGN
To minimize the data transmission between client and server:
• The PMSE client only needs to submit a query together with the feature vectors to the PMSE server. → Only the essential data are transmitted.
• The server automatically returns a set of reranked search results according to the preferences stated in the feature vectors.

3. SYSTEM DESIGN
PMSE's design addresses two issues:
1) Limited computational power on mobile devices
2) Data transmission minimization

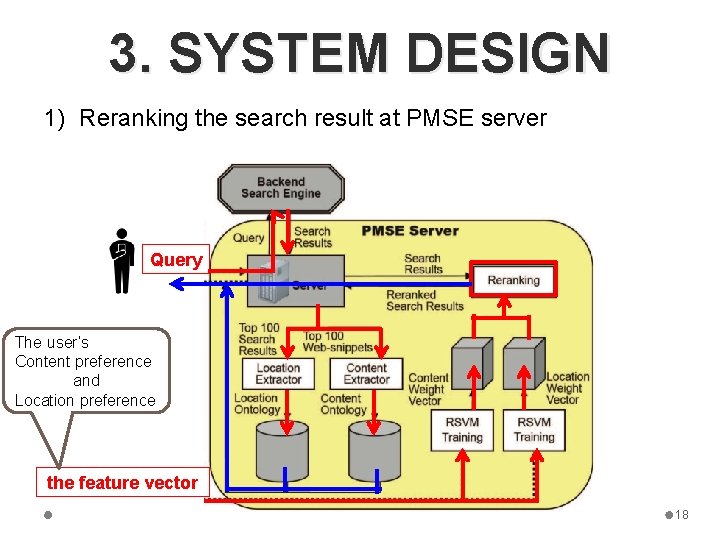

3. SYSTEM DESIGN
PMSE consists of two major activities:
1) Reranking the search results at the PMSE server
2) Ontology update and clickthrough collection at the PMSE client

3. SYSTEM DESIGN
1) Reranking the search results at the PMSE server
• The client sends the query together with the feature vector, which encodes the user's content preference and location preference.

3. SYSTEM DESIGN
2) Ontology update and clickthrough collection at the PMSE client
Ontologies
• Contain the concept space that models the relationships between the concepts extracted from the search results.
Clickthroughs
• Are stored on the client, so the PMSE server does not know the exact set of documents that the user has clicked on.

3. SYSTEM DESIGN
2) Ontology update and clickthrough collection at the PMSE client
→ This design allows user privacy to be preserved to a certain degree.
Two privacy parameters (minDistance and expRatio) control the amount of personal preferences exposed to the PMSE server.

| Privacy level | Personal preferences exposed to the PMSE server |
| --- | --- |
| High | Only limited personal information |
| Low | The full feature vector |

4. USER INTEREST PROFILING
PMSE uses "concepts"
• To model the interests and preferences of a user.
• Concepts are classified into two types, a distinction that is important in mobile search:
o Content concepts (content ontology)
o Location concepts (location ontology)
The concepts are modeled as ontologies
• To capture the relationships between the concepts.
→ The characteristics of the content concepts and location concepts are different.

4. USER INTEREST PROFILING
4-1. Content Ontology
Given a query q:
① Extract all the keywords and phrases (excluding stop words) from the web-snippets arising from q.
Ex.) Rakuten, travel, hotel, reservation, cheap, hotel, business hotel, …
Web-snippet: the title, summary, and URL of a webpage returned by a search engine.
② If a keyword/phrase occurs frequently in the web-snippets, treat it as an important concept related to the query.
Ex.) reservation, cheap, business hotel, …

4. USER INTEREST PROFILING
4-1. Content Ontology
The support formula measures the importance of a particular keyword/phrase $c_i$ with respect to the query q:
$$\mathrm{support}(c_i) = \frac{sf(c_i)}{n} \cdot |c_i| \qquad (1)$$
• $sf(c_i)$ : the snippet frequency of the keyword/phrase $c_i$ (the number of web-snippets containing $c_i$)
• $n$ : the number of web-snippets returned
• $|c_i|$ : the number of terms in the keyword/phrase $c_i$
If $\mathrm{support}(c_i) > s$ ($s = 0.03$ in their experiments), they treat $c_i$ as a concept for q.
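
The support computation can be sketched as follows. This is a minimal illustration, assuming the support of a keyword/phrase is its snippet frequency divided by the number of snippets, multiplied by its number of terms. The function names and the case-insensitive substring matching are assumptions made for the example, not part of the slides.

```python
from typing import List


def snippet_frequency(concept: str, snippets: List[str]) -> int:
    """Count the snippets containing the concept (assumed: case-insensitive substring match)."""
    needle = concept.lower()
    return sum(1 for snippet in snippets if needle in snippet.lower())


def support(concept: str, snippets: List[str]) -> float:
    """Assumed form: support(c) = sf(c) / n * |c|, where |c| is the number of terms in c."""
    n = len(snippets)
    if n == 0:
        return 0.0
    return snippet_frequency(concept, snippets) / n * len(concept.split())


def extract_concepts(candidates: List[str], snippets: List[str], s: float = 0.03) -> List[str]:
    """Keep the candidates whose support exceeds the threshold s (0.03 in the slides)."""
    return [c for c in candidates if support(c, snippets) > s]


snippets = [
    "cheap business hotel reservation",
    "hotel reservation online",
    "travel guide",
]
concepts = extract_concepts(["reservation", "business hotel", "pool"], snippets)
```

Multiplying by the number of terms favors longer phrases, which occur in fewer snippets but are usually more specific.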

4. USER INTEREST PROFILING
4-1. Content Ontology
The following two propositions are adopted to determine the relationships between concepts for ontology formulation:
• Similarity: two concepts that coexist frequently in the search results might represent the same topical interest. If coexist(ci, cj) > δ1 (δ1 is a threshold), then ci and cj are considered similar.
• Parent-child relationship: more specific concepts often appear with general terms, while the reverse is not true. If pr(cj | ci) > δ2 (δ2 is a threshold), they mark ci as cj's child.
Ex.) The specific concept "meeting facility" tends to appear with the general concept "facilities", whereas "facilities" also appears with "meeting room facilities", "swimming pool", and "meeting facility".

4. USER INTEREST PROFILING
4-1. Content Ontology
[Figure: content ontology for q = "hotel", with clickthroughs mapped to the feature vector]


4. USER INTEREST PROFILING
4-2. Location Ontology
Two important issues in location ontology formulation
1. Only very few location concepts co-occur with the query terms in web-snippets. → Extract location concepts from the full documents.
2. Many geographical relationships among locations have already been captured as facts. → Create a predefined location ontology among city, province, region, and country.
Hierarchy: countries > regions > provinces > cities

4. USER INTEREST PROFILING
4-2. Location Ontology
[Figure: a document d mentioning "Los Angeles" is matched against the predefined location ontology (United States > California > Los Angeles); the matched location concept is related to d and added to the feature vector]
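
Matching a document against a predefined location ontology can be sketched as follows. This is a hypothetical miniature, not the ontology used in the paper: the `PARENT` table, the function names, and the plain substring matching are all assumptions for illustration, and the region level is omitted for brevity.

```python
from typing import Dict, List

# Hypothetical miniature of a predefined location ontology, stored as child -> parent.
PARENT: Dict[str, str] = {
    "Los Angeles": "California",
    "California": "United States",
}


def ancestors(location: str) -> List[str]:
    """Walk up the ontology from a location to the root, nearest ancestor first."""
    chain = []
    while location in PARENT:
        location = PARENT[location]
        chain.append(location)
    return chain


def location_concepts(document_text: str) -> List[str]:
    """Return the known locations mentioned in the full document text (assumed: substring match)."""
    text = document_text.lower()
    known = set(PARENT) | set(PARENT.values())
    return sorted(loc for loc in known if loc.lower() in text)


found = location_concepts("A boutique hotel near downtown Los Angeles")
related = ancestors("Los Angeles")
```

Because the geographical relationships are fixed facts, the ancestors of a matched city are available by lookup and do not have to be learned from the search results.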


5. DIVERSITY AND CONCEPT ENTROPY
• An important issue is how to weigh the content preference and location preference in the integration step.
→ Adjust the weights of content preference and location preference based on their effectiveness in the personalization process.

5. DIVERSITY AND CONCEPT ENTROPY
5-1. Diversity of Content and Location Information
In information theory [17], entropy is the uncertainty associated with the information content of a message from the receiver's point of view.
Entropy is used to estimate the amount of content and location information retrieved by a query. To formally characterize the content and location properties of a query q, they define the content entropy $H_C(q)$ and the location entropy $H_L(q)$:
$$H_C(q) = -\sum_{i=1}^{k} p(c_i) \log p(c_i), \qquad H_L(q) = -\sum_{i=1}^{m} p(l_i) \log p(l_i)$$
Content:
• $k$ : the number of content concepts extracted, $C = \{c_1, c_2, \ldots, c_k\}$
• $|c_i|$ : the number of search results containing $c_i$
• $|C| = |c_1| + |c_2| + \cdots + |c_k|$
• $p(c_i) = |c_i| / |C|$
Location:
• $m$ : the number of location concepts extracted, $L = \{l_1, l_2, \ldots, l_m\}$
• $|l_i|$ : the number of search results containing $l_i$
• $|L| = |l_1| + |l_2| + \cdots + |l_m|$
• $p(l_i) = |l_i| / |L|$
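
The entropy of a set of extracted concepts can be sketched as follows, using the probabilities defined on this slide (the count for a concept divided by the sum of all counts). The function name and the choice of base-2 logarithm are assumptions; the slides do not state the base.

```python
import math
from typing import Dict


def concept_entropy(counts: Dict[str, int]) -> float:
    """Shannon entropy of concepts, where counts[c] is the number of results containing c."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    entropy = 0.0
    for count in counts.values():
        if count > 0:
            p = count / total
            entropy -= p * math.log2(p)  # base 2 is an assumption
    return entropy


# A query whose results all mention one location is focused (entropy 0);
# one whose results are spread evenly over several locations is diverse.
focused = concept_entropy({"Japan": 10})
diverse = concept_entropy({"Japan": 5, "United States": 5})
```

The same function applies to content concepts and location concepts, since both entropies are defined the same way over their own concept sets.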

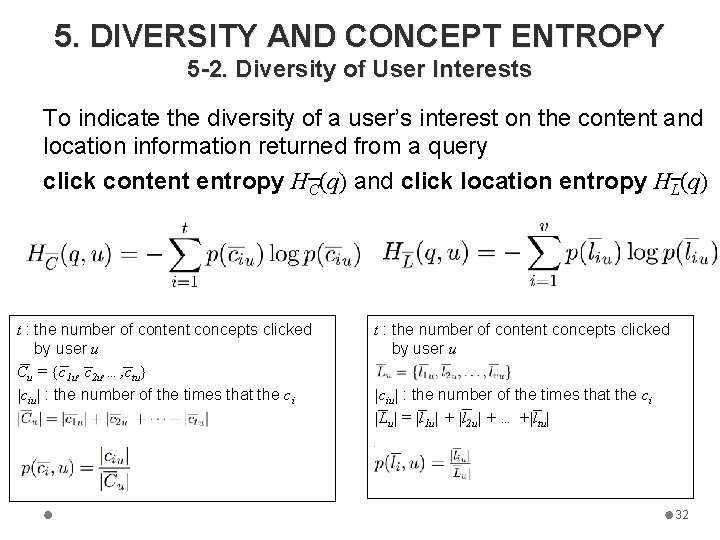

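5. DIVERSITY AND CONCEPT ENTROPY
5-2. Diversity of User Interests
To indicate the diversity of a user's interests in the content and location information returned from a query, they define the click content entropy $\overline{H}_C(q, u)$ and the click location entropy $\overline{H}_L(q, u)$, analogous to $H_C(q)$ and $H_L(q)$ but computed over the concepts the user has clicked.
Content:
• $t$ : the number of content concepts clicked by user u, $C^u = \{c_1^u, c_2^u, \ldots, c_t^u\}$
• $|c_i^u|$ : the number of times that the content concept $c_i$ has been clicked by user u
• $|C^u| = |c_1^u| + |c_2^u| + \cdots + |c_t^u|$
Location:
• $t$ : the number of location concepts clicked by user u, $L^u = \{l_1^u, l_2^u, \ldots, l_t^u\}$
• $|l_i^u|$ : the number of times that the location concept $l_i$ has been clicked by user u
• $|L^u| = |l_1^u| + |l_2^u| + \cdots + |l_t^u|$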

5. DIVERSITY AND CONCEPT ENTROPY
5-3. Personalization Effectiveness

| Content/location entropy | Query result set | Personalization effectiveness |
| --- | --- | --- |
| High | High degree of ambiguity | High |
| Low | Very focused | Low |

| Click content/location entropy | User's interests | Personalization effectiveness |
| --- | --- | --- |
| High | Very broad | Low |
| Low | Focused on certain precise topics | High |

The personalization effectiveness of the extracted content and location concepts is estimated with respect to user u from these entropies.
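
The qualitative relationships on this slide can be illustrated with a small sketch. It assumes one simple form that matches them: effectiveness as the ratio of the query entropy to the user's click entropy. That ratio, the function name, and the `eps` guard against division by zero are assumptions for illustration and are not taken from the slide text.

```python
def personalization_effectiveness(query_entropy: float, click_entropy: float,
                                  eps: float = 1e-6) -> float:
    """Assumed form: effectiveness grows with query entropy and shrinks with click entropy."""
    return query_entropy / (click_entropy + eps)


# Ambiguous query, focused user: personalization should help a lot.
high = personalization_effectiveness(query_entropy=2.0, click_entropy=0.5)
# Focused query, broad user: personalization should help little.
low = personalization_effectiveness(query_entropy=0.5, click_entropy=2.0)
```

The same function would be evaluated once with the content entropies and once with the location entropies, giving one effectiveness value per concept type.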

6. USER PREFERENCES AND PRIVACY PRESERVATION
In this section, they
1. Review a preference mining method, namely SpyNB
2. Discuss how PMSE preserves user privacy

6. USER PREFERENCES AND PRIVACY PRESERVATION
SpyNB [15] learns user behavior models from preferences extracted from clickthrough data.
• P : positive samples (the clicked documents)
• U : unlabeled (unclicked) samples
• PN : negative samples predicted from U (PN ⊂ U)
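
Once the positive set P and the predicted negative set PN are available, they can be turned into pairwise preferences for a ranking learner. The sketch below covers only that last step; the SpyNB classification that produces PN is not shown. Treating every positive as preferred over every predicted negative is an assumption for illustration, as is the function name.

```python
from typing import List, Tuple


def preference_pairs(positives: List[str],
                     predicted_negatives: List[str]) -> List[Tuple[str, str]]:
    """Return (preferred, less_preferred) pairs: each clicked document over each predicted negative."""
    return [(p, n) for p in positives for n in predicted_negatives]


# Hypothetical document ids: d1 was clicked, d3 and d5 were predicted negative.
pairs = preference_pairs(["d1"], ["d3", "d5"])
```

Unclicked documents that were not predicted negative produce no pairs, so they neither reward nor penalize the learned ranking.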

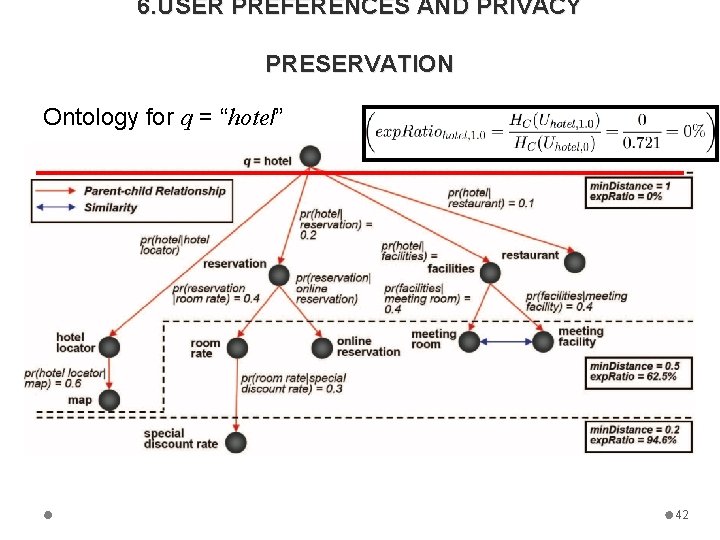

6. USER PREFERENCES AND PRIVACY PRESERVATION
PMSE filters the ontologies according to the user's privacy level setting, to control the amount of personal information exposed outside the user's mobile device.
• minDistance: measures whether a concept is far away from the root (i.e., too specific) in the ontology-based user profiles.
• expRatio: measures the amount of private information exposed in the user profiles.

6. USER PREFERENCES AND PRIVACY PRESERVATION
There is a close relationship, and a tradeoff, between privacy and personalization effectiveness.

| Privacy level | Personalization effectiveness |
| --- | --- |
| High | Low |
| Low | High |

6. USER PREFERENCES AND PRIVACY PRESERVATION
PMSE employs distance to filter the concepts in the ontology.
minDistance: concept ci is pruned when a condition on minDistance, defined over the following distances, is satisfied.
• ci-1 : the direct parent of ci
• ck : the leaf concept that is furthest away along the path ci-1, ci, ci+1, …, ck in the ontology
• D(ci-1, ck) = d(ci-1, ci) + d(ci, ci+1) + … + d(ck-1, ck) : the total distance from ci-1 to ck
• D(root, ci-1) : the total distance from the root to ci-1

6. USER PREFERENCES AND PRIVACY PRESERVATION
expRatio measures the amount of information being pruned in the filtered user profiles.
• Uq,0 : the complete user profile
• Uq,p : the protected user profile for the query q with minDistance = p
The exposed privacy expRatio for p and q is computed from HC(Uq,0) and HC(Uq,p), the concept entropies of the two user profiles.

6. USER PREFERENCES AND PRIVACY PRESERVATION
[Figures: ontology for q = "hotel"]

7. PERSONALIZED RANKING FUNCTIONS
Two issues in the RSVM training process:
1) How to extract the feature vectors for a document
2) How to combine the content and location weight vectors into one integrated weight vector

7. PERSONALIZED RANKING FUNCTIONS
The content feature vector φC(q, d) and the location feature vector φL(q, d) are extracted by taking into account the concepts existing in a document and other related concepts in the ontology of the query.
Four different types of relationships:
1. Similarity
2. Ancestor
3. Descendant
4. Sibling

7. PERSONALIZED RANKING FUNCTIONS
1. Content feature vector
• If a content concept ci is in a web-snippet sk, its value is incremented in the content feature vector φC(q, dk).
• Other content concepts cj that are related to ci in the content ontology are also incremented in φC(q, dk), according to their relationship with ci.

7. PERSONALIZED RANKING FUNCTIONS
2. Location feature vector
• If a location concept li is in a document dk, its value is incremented in the location feature vector φL(q, dk).
• Other location concepts lj that are related to li in the location ontology are also incremented in φL(q, dk), according to their relationship with li.

7. PERSONALIZED RANKING FUNCTIONS
7-2. GPS Data and Combination of Weight Vectors
The content weight vector ωC,q,u and the location weight vector ωL,q,u represent the content and location user profiles for a user u on a query q.
GPS locations
• Important information that can be useful in personalizing the search results.
• Ex.) "I want to find movies on show in the nearby cinemas…"
→ PMSE incorporates the GPS locations into the personalization process.

7. PERSONALIZED RANKING FUNCTIONS
7-2. GPS Data and Combination of Weight Vectors
If a user has visited the GPS location lr, the weight of the location concept ωL,q,u[lr] is incremented by ωGPS(u, lr, tr):
$$\omega_{L,q,u}[l_r] = \omega_{L,q,u}[l_r] + \omega_{GPS}(u, l_r, t_r)$$
• ωGPS(u, lr, tr) : the weight being added to the GPS location lr
• tr : the number of locations visited since the user visited lr (tr = 0 means the current location)
The weight ωGPS(u, lr, tr) follows a decay function of tr.
• ωGPS_0 : the initial weight of the decay function when tr = 0
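
The GPS weight update can be sketched as follows. The slide text says only that the added weight decays with the number of locations visited since; the exponential form used here is an assumption for illustration, as are the function names and the convention that the visit history lists the current location first.

```python
import math
from typing import Dict, List


def gps_weight(t_r: int, w_gps_0: float = 1.0) -> float:
    """Assumed decay: the initial weight w_gps_0 at t_r = 0, shrinking exponentially with t_r."""
    return w_gps_0 * math.exp(-t_r)


def add_gps_weights(location_weights: Dict[str, float], visited: List[str],
                    w_gps_0: float = 1.0) -> Dict[str, float]:
    """Increment the weight of each visited location; visited[0] is the current location."""
    updated = dict(location_weights)
    for t_r, location in enumerate(visited):
        updated[location] = updated.get(location, 0.0) + gps_weight(t_r, w_gps_0)
    return updated


# Hypothetical profile: the user is now in Tokyo and was in Osaka before that.
weights = add_gps_weights({"Tokyo": 0.5}, ["Tokyo", "Osaka"])
```

The decay makes the current location dominate while recently visited places still contribute a smaller amount.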

7. PERSONALIZED RANKING FUNCTIONS
7-2. GPS Data and Combination of Weight Vectors
The set of location concepts {ls}
• closely related to the GPS location lr in the location ontology (ls is an ancestor, descendant, or sibling of lr)
• also possible candidates that the user may be interested in.
The weight of each such location concept, ωL,q,u[ls], is also incremented.

7. PERSONALIZED RANKING FUNCTIONS
7-2. GPS Data and Combination of Weight Vectors
To optimize the personalization effect, they combine the content and location weight vectors into the final weight vector ωq,u for user u's ranking. PMSE then ranks the documents in the returned search results according to ωq,u.
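
Combining the two weight vectors and ranking with the result can be sketched as follows. The sketch assumes the vectors are mixed in proportion to the content and location personalization effectiveness values, and that documents are scored by the dot product of the combined weight vector with their feature vector, as is usual for a linear ranker. The function names, the `("C", …)` / `("L", …)` key convention, and the sample values are hypothetical.

```python
from typing import Dict, List, Tuple

Key = Tuple[str, str]  # ("C", content concept) or ("L", location concept)


def combine_weights(w_content: Dict[str, float], w_location: Dict[str, float],
                    e_content: float, e_location: float) -> Dict[Key, float]:
    """Assumed mix: e * content weights + (1 - e) * location weights, with e = eC / (eC + eL)."""
    total = e_content + e_location
    e = e_content / total if total > 0 else 0.5
    combined: Dict[Key, float] = {("C", c): e * w for c, w in w_content.items()}
    combined.update({("L", l): (1 - e) * w for l, w in w_location.items()})
    return combined


def score(weights: Dict[Key, float], features: Dict[Key, float]) -> float:
    """Linear ranking score: dot product of the weight vector and the feature vector."""
    return sum(weights.get(key, 0.0) * value for key, value in features.items())


def rerank(documents: List[Tuple[str, Dict[Key, float]]],
           weights: Dict[Key, float]) -> List[str]:
    """Return document ids ordered by descending score."""
    ordered = sorted(documents, key=lambda doc: score(weights, doc[1]), reverse=True)
    return [doc_id for doc_id, _ in ordered]


weights = combine_weights({"room rate": 1.0}, {"Japan": 2.0}, e_content=3.0, e_location=1.0)
documents = [
    ("d1", {("C", "room rate"): 1.0}),
    ("d2", {("C", "room rate"): 1.0, ("L", "Japan"): 1.0}),
]
ranking = rerank(documents, weights)
```

With this mixing, a query for which content concepts personalize well leans on the content profile, and a location-oriented query leans on the location profile.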

8. EXPERIMENTAL EVALUATION
8-1. Experiment Setup
The experiment aims to answer the following question:
"Given that a user is only interested in some specific aspects of a query, can PMSE generate a ranking function personalized to the user's interest from the user's clickthroughs?"
The difficulty of the evaluation
• Only the user who conducted the search can tell which of the results are relevant to his/her search intent.
• Care must be taken to ensure that the users' behaviors are not affected by experimental artifacts.

8. EXPERIMENTAL EVALUATION
8-1. Experiment Setup
Two important issues are considered in the experiment setup
① It is not advisable to ask the user to conduct the same search on two systems (PMSE and the other system).

8. EXPERIMENTAL EVALUATION
8-1. Experiment Setup
Two important issues are considered in the experiment setup
② As a user becomes more experienced with the system, the answers to subsequent queries could become more and more accurate.
→ Limit the number of queries for each user to five.
→ The test queries intentionally all have broad meanings.

8. EXPERIMENTAL EVALUATION
8-1. Experiment Setup
Flow of the evaluation process
• Fifty users, 250 test queries
• Each result is judged as "Relevant", "Fair", or "Irrelevant".
• The rankings of the "Relevant" documents in R and R' are used to compute the average relevant rank (ARR, i.e., the average rank of the relevant documents).
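
The average relevant rank can be sketched as follows. The function name and sample document ids are hypothetical, and the example assumes R is the original result list and R' the reranked list, which the slide text does not spell out.

```python
from typing import List, Optional, Set


def average_relevant_rank(ranking: List[str], relevant: Set[str]) -> Optional[float]:
    """Average 1-based rank of the documents judged "Relevant"; None if there are none."""
    ranks = [position for position, doc in enumerate(ranking, start=1) if doc in relevant]
    if not ranks:
        return None
    return sum(ranks) / len(ranks)


relevant = {"b", "d"}
arr_original = average_relevant_rank(["a", "b", "c", "d"], relevant)  # assumed to play the role of R
arr_reranked = average_relevant_rank(["b", "d", "a", "c"], relevant)  # assumed to play the role of R'
```

A lower ARR means the relevant documents sit nearer the top of the list, so a reranking helps when its ARR is lower than that of the original list.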

8. EXPERIMENTAL EVALUATION
8-1. Experiment Setup
[Table: the four (HC(q), HL(q)) query classes]

8. EXPERIMENTAL EVALUATION
8-1. Experiment Setup
[Table: the five (HC(q), HL(q)) query classes]

8. EXPERIMENTAL EVALUATION
8-2. Ranking Quality
[Figure: ARRs for PMSE, SpyNB, and baseline methods with different query/user classes]

8. EXPERIMENTAL EVALUATION
8-3. Top Results as Noise
[Figure: ARRs for PMSE with top N results as noise]

8. EXPERIMENTAL EVALUATION
8-5. GPS Location in Personalization
• Evaluate the impact of GPS locations in PMSE.

8. EXPERIMENTAL EVALUATION
8-6. Privacy versus Ranking Quality
• Evaluate PMSE's privacy parameters, minDistance and expRatio, against the ranking quality.

9. CONCLUSION
• They proposed PMSE to extract and learn a user's content and location preferences based on the user's clickthroughs.
• To adapt to user mobility, they incorporated the user's GPS locations in the personalization process.
• They observed that GPS locations help to improve retrieval effectiveness, especially for location queries.
• They also proposed two privacy parameters, minDistance and expRatio, to address privacy issues in PMSE by allowing users to control the amount of personal information exposed to the PMSE server.
• The privacy parameters facilitate smooth control of privacy exposure while maintaining good ranking quality.

- Slides: 64