Performance Optimizations for Running NIM on GPUs

- Slides: 12

Jacques Middlecoff, NOAA/OAR/ESRL/GSD/AB, Jacques.Middlecoff@noaa.gov

Mark Govett, Tom Henderson, Jim Rosinski

10/3/2020

Goal for NIM

- Optimize MPI communication between distributed processors
  - Old topic
  - GPU speed gives it new urgency
- FIM example: 320 processors, 8192-point footprint
  - Comm takes 2.4% of dynamics computation time
  - NIM has similar comm and comp characteristics
  - 8192 points is in the NIM sweet spot for the GPU
  - GPU 25X speedup means comm takes a much larger share of the time
  - Still gives a 16X speedup
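The 2.4%, 25X and 16X figures on this slide are consistent with a simple fixed-communication model. A minimal sketch, assuming communication time is unchanged by the GPU port and the 25X applies to all of the dynamics computation; the function name `gpu_speedup` is illustrative, not from NIM or FIM.

```python
def gpu_speedup(comm_fraction, compute_speedup):
    """Return (comm / GPU compute ratio, overall speedup).

    comm_fraction: communication time as a fraction of CPU compute time.
    compute_speedup: factor by which the GPU accelerates computation.
    Assumption: communication time does not change on the GPU.
    """
    cpu_compute = 1.0
    comm = comm_fraction * cpu_compute
    gpu_compute = cpu_compute / compute_speedup
    ratio = comm / gpu_compute
    overall = (cpu_compute + comm) / (gpu_compute + comm)
    return ratio, overall

ratio, overall = gpu_speedup(0.024, 25.0)
print(f"comm / GPU compute = {ratio:.0%}, overall speedup = {overall:.1f}X")
```

Under these assumptions comm grows from 2.4% to about 60% of computation time, and the overall speedup comes out at about 16X, matching the slide.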

Optimizations to be discussed

- NIM: The halo to be communicated between processors is packed and unpacked on the GPU
  - No copy of entire variable to and from the CPU
  - About the same speed as the CPU
- Halo computation
- Overlapping communication with computation
- Mapped, pinned memory
- NVIDIA GPUDirect technology
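Packing and unpacking amount to a gather and a scatter over index lists, so only the halo points move, never the whole variable. A minimal sketch of that index logic in plain Python; in NIM this runs on the GPU, and the array layout, index lists and function names here are hypothetical.

```python
def pack_halo(var, send_indices):
    """Gather the points a neighbor needs into one contiguous buffer."""
    return [var[i] for i in send_indices]

def unpack_halo(var, recv_indices, recv_buf):
    """Scatter a received buffer into this processor's halo points."""
    for i, value in zip(recv_indices, recv_buf):
        var[i] = value

# Hypothetical layout: 6 owned points followed by 2 halo points.
var_a = [10.0, 11.0, 12.0, 13.0, 14.0, 15.0, 0.0, 0.0]
var_b = [20.0, 21.0, 22.0, 23.0, 24.0, 25.0, 0.0, 0.0]

send_buf = pack_halo(var_a, send_indices=[4, 5])  # only 2 values travel
unpack_halo(var_b, recv_indices=[6, 7], recv_buf=send_buf)
print(var_b)
```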

Halo Computation

- Redundant computation to avoid communication
- Calculate values in the halo instead of receiving them through an MPI send
- Trades computation time for communication time
- GPUs create more opportunity for halo comp
- NIM has halo comp for everything not requiring extra communication
- NIM next step is to look at halo comps that require new but less frequent communication
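The trade can be shown on a 1D toy problem: two successive 3-point averages normally need an exchange between them, but with a halo two points wide the first average can be computed redundantly in the halo and the second exchange disappears. A minimal sketch under those assumptions; the stencil, data and index ranges are made up for illustration and are not NIM's.

```python
def smooth(field, lo, hi):
    """3-point average for indices lo..hi-1; returns dict index -> value."""
    return {i: (field[i - 1] + field[i] + field[i + 1]) / 3.0
            for i in range(lo, hi)}

# Global reference on a 1D field (hypothetical data); edges are ignored.
u = [float(i * i % 7) for i in range(16)]
v_ref = smooth(u, 1, 15)
w_ref = smooth(v_ref, 2, 14)

# One processor owns points 4..7. With a halo of width 2 it holds u[2..9].
own_lo, own_hi = 4, 8
local_u = {i: u[i] for i in range(own_lo - 2, own_hi + 2)}  # one exchange of u

# Halo computation: compute v on owned points plus one halo point per side.
local_v = smooth(local_u, own_lo - 1, own_hi + 1)  # redundant work at 3 and 8
local_w = smooth(local_v, own_lo, own_hi)          # no exchange of v needed

matches = all(local_w[i] == w_ref[i] for i in range(own_lo, own_hi))
print(matches)
```

The cost is two extra evaluations of the first average per processor and a wider initial exchange; the saving is one full exchange.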

Overlapping Communication with Computation

- Works best with a co-processor to handle comm
- Overlap communication with other calculations between when a variable is set and when it is used
  - Not enough computation time on the GPU
- Calculate perimeter first, then do communication while calculating the interior
  - Loop level: Not enough computation on the GPU
  - Subroutine level: Not enough computation time
  - Entire dynamics: Not feasible for NIM
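The perimeter-first ordering can be sketched as follows. This is an illustration only: a Python thread stands in for the co-processor, a sleep stands in for the MPI exchange, and all names and data are hypothetical.

```python
import threading
import time

def exchange(perimeter_values, inbox):
    """Stand-in for an MPI halo exchange; the sleep models network latency."""
    time.sleep(0.05)
    inbox.extend(perimeter_values)

def compute(points):
    """Stand-in for the real per-point calculation."""
    return [p * 2.0 for p in points]

perimeter = [1.0, 2.0]           # points a neighbor needs
interior = [3.0, 4.0, 5.0, 6.0]  # points no neighbor needs

# 1. Compute the perimeter first so its values can be sent right away.
perimeter_out = compute(perimeter)

# 2. Start the exchange in the background (the thread plays the co-processor).
inbox = []
comm = threading.Thread(target=exchange, args=(perimeter_out, inbox))
comm.start()

# 3. Compute the interior while the exchange is in flight.
interior_out = compute(interior)

# 4. Wait for the exchange before anything reads the halo.
comm.join()
print(inbox, interior_out)
```

The overlap hides communication only if step 3 takes about as long as the exchange. When the GPU finishes the interior quickly, most of the exchange time is still exposed at step 4, which is the limitation the slide describes.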

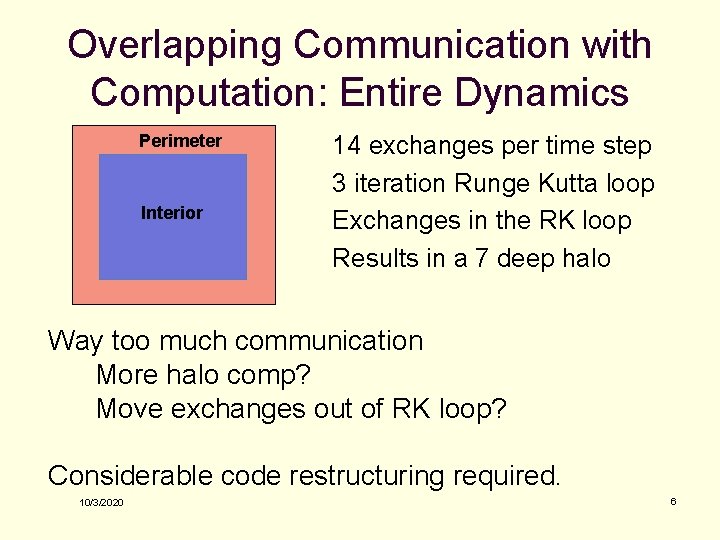

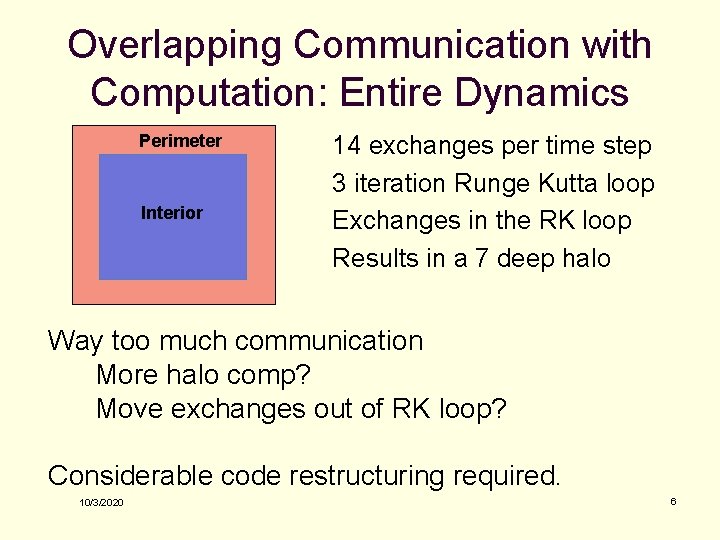

Overlapping Communication with Computation: Entire Dynamics

- Perimeter / Interior (diagram labels)
- 14 exchanges per time step
- 3-iteration Runge-Kutta loop
- Exchanges in the RK loop
- Results in a 7-deep halo
- Way too much communication
- More halo comp?
- Move exchanges out of RK loop?
- Considerable code restructuring required

Mapped, Pinned Memory: Theory

- Mapped, pinned memory is CPU memory
  - Mapped so GPU can access it across PCIe bus
  - Page-locked so the OS can’t swap it out
  - Limited amount
- Integrated GPUs: Always a performance gain
- Discrete GPUs (what we have)
  - Advantageous only in certain cases
  - The data is not cached on the GPU
  - Global loads and stores must be coalesced
- Zero-copy: Both GPU and CPU can access data

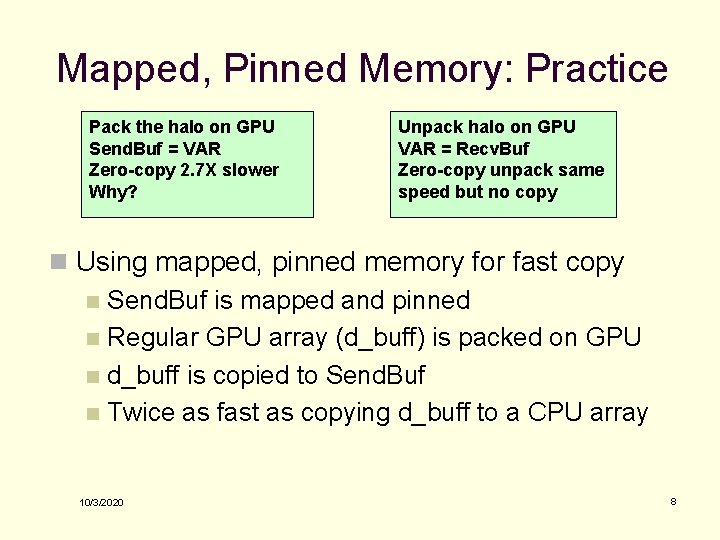

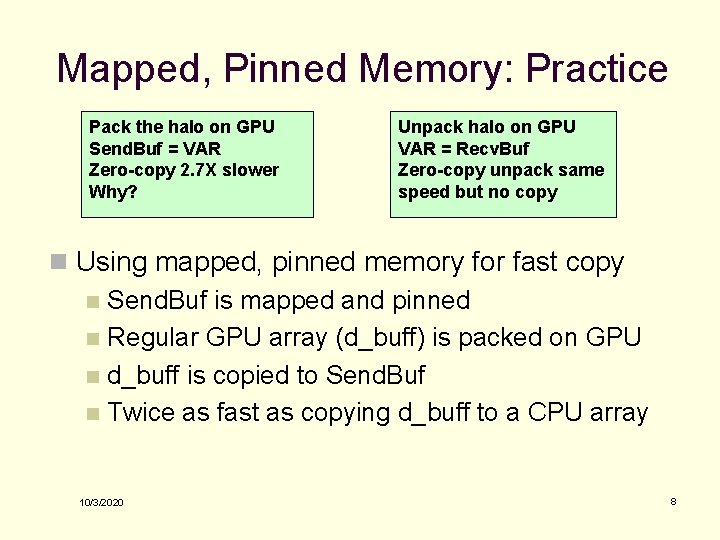

Mapped, Pinned Memory: Practice

- Pack the halo on GPU: SendBuf = VAR
  - Zero-copy 2.7X slower. Why?
- Unpack halo on GPU: VAR = RecvBuf
  - Zero-copy unpack same speed but no copy
- Using mapped, pinned memory for fast copy
  - SendBuf is mapped and pinned
  - Regular GPU array (d_buff) is packed on GPU
  - d_buff is copied to SendBuf
  - Twice as fast as copying d_buff to a CPU array

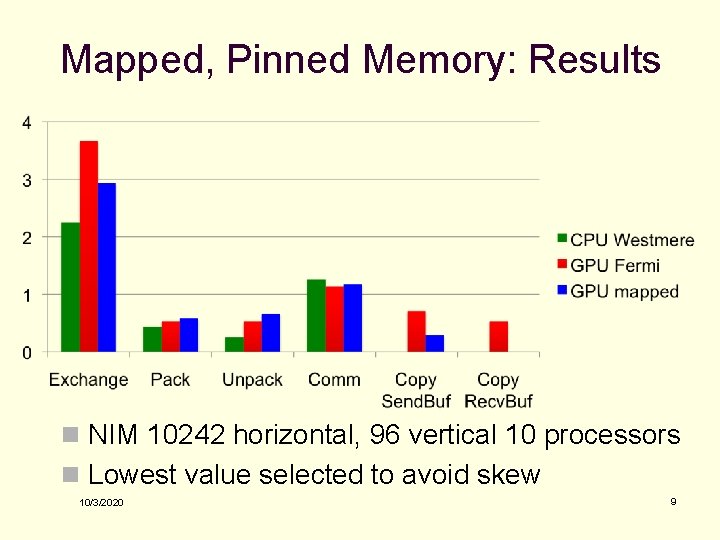

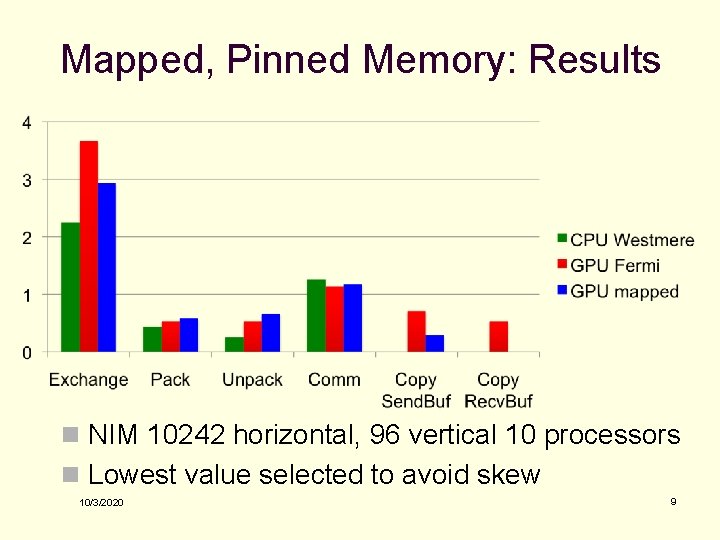

Mapped, Pinned Memory: Results

- NIM: 10242 horizontal points, 96 vertical levels, 10 processors
- Lowest value selected to avoid skew
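One reading of "lowest value selected" is that each operation is timed several times and the minimum is reported, so that waiting on slower processors or system noise does not inflate the number. A minimal sketch under that assumption; the slide does not say whether the minimum was taken over repeats or over processors, and `fake_pack` is a hypothetical stand-in for the operation being timed.

```python
import time

def best_time(operation, repeats=5):
    """Run operation several times; return (lowest time, all samples)."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        operation()
        samples.append(time.perf_counter() - start)
    return min(samples), samples

def fake_pack():
    # Hypothetical stand-in for a halo pack or copy being timed.
    sum(range(10000))

lowest, samples = best_time(fake_pack)
print(f"lowest of {len(samples)} runs: {lowest:.6f} s")
```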

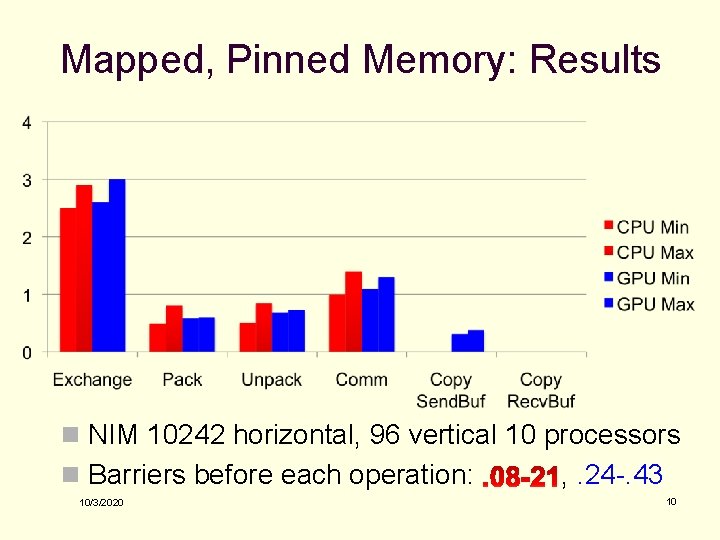

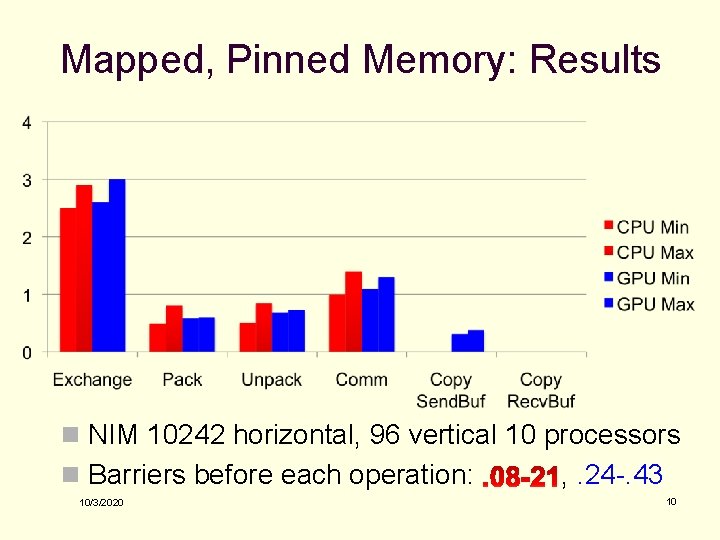

Mapped, Pinned Memory: Results

- NIM: 10242 horizontal points, 96 vertical levels, 10 processors
- Barriers before each operation: .24-.43

NVIDIA GPUDirect Technology

- Eliminates the CPU in interprocessor communication
- Based on an interface between the GPU and InfiniBand
  - Both devices share pinned memory buffers
  - Data written by GPU can be sent immediately by InfiniBand
- Overlapping communication with computation?
  - No longer a co-processor to do the comm?
- We have this technology but have yet to install it

Questions?