Performance

Computer Center, CS, NCTU

What Is Performance?

- Fast!?
- “Performance” is meaningless in isolation
- It only makes sense to talk about the performance of a particular workload, measured against a particular set of metrics
  - Queries per second for a web server
  - Response latency for a DNS server
  - Number of deliveries per second for a mail server

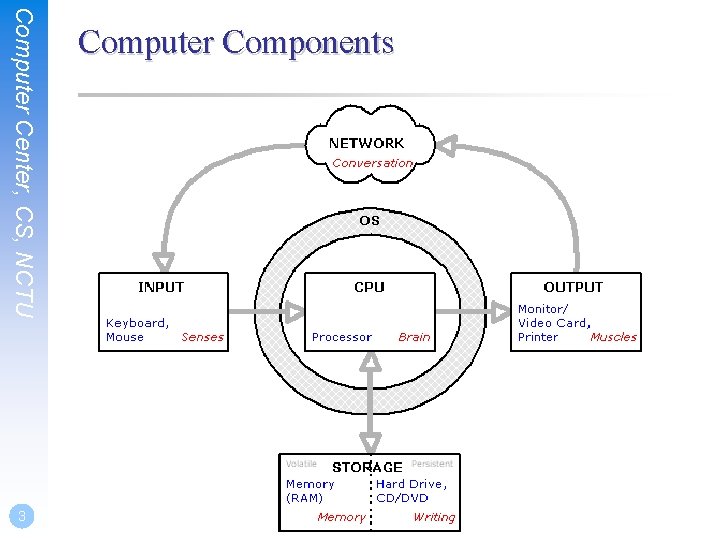

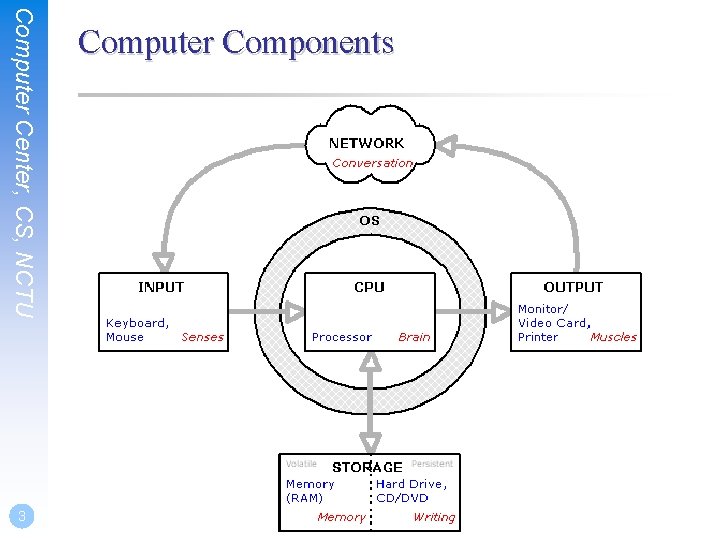

Computer Components

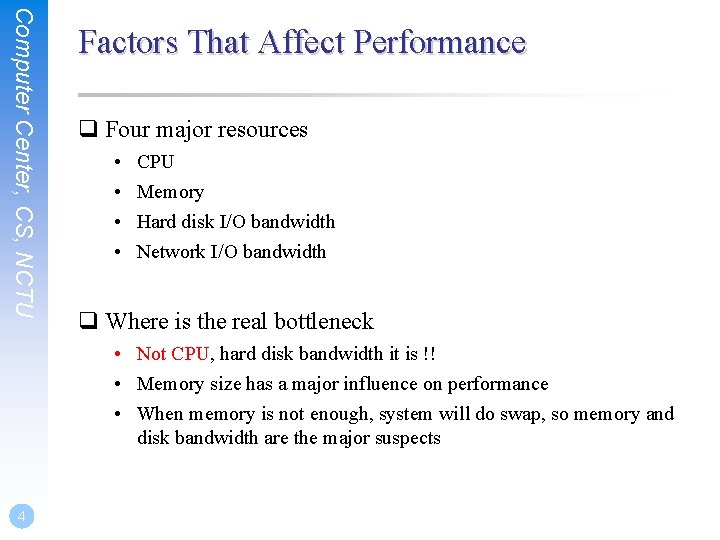

Factors That Affect Performance

- Four major resources
  - CPU
  - Memory
  - Hard disk I/O bandwidth
  - Network I/O bandwidth
- Where is the real bottleneck?
  - Usually not the CPU; more often it is hard disk bandwidth
  - Memory size has a major influence on performance
  - When memory runs short, the system starts swapping, so memory and disk bandwidth are the major suspects

What is your system doing?

- CPU use
- Disk I/O
- Network I/O
- Application (mis-)configuration
- Hardware limitations
- System calls and interaction with the kernel
- Multithreaded lock contention
- Typically one or more of these elements will be the limiting factor in the performance of your workload
  - The monitoring interval should not be too small

Analyzing CPU usage – (1)

- Three kinds of CPU information
  - Load average
  - Overall utilization
    - Helps identify whether the CPU is the system bottleneck
  - Per-process consumption
    - Identifies the CPU utilization of a specific process

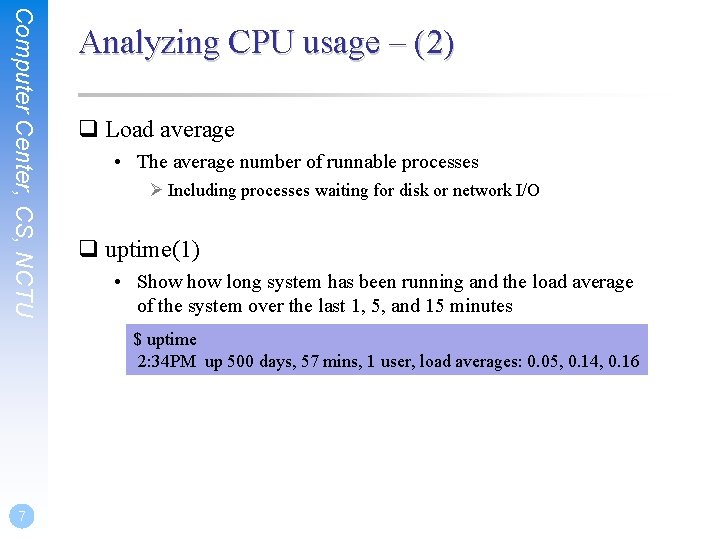

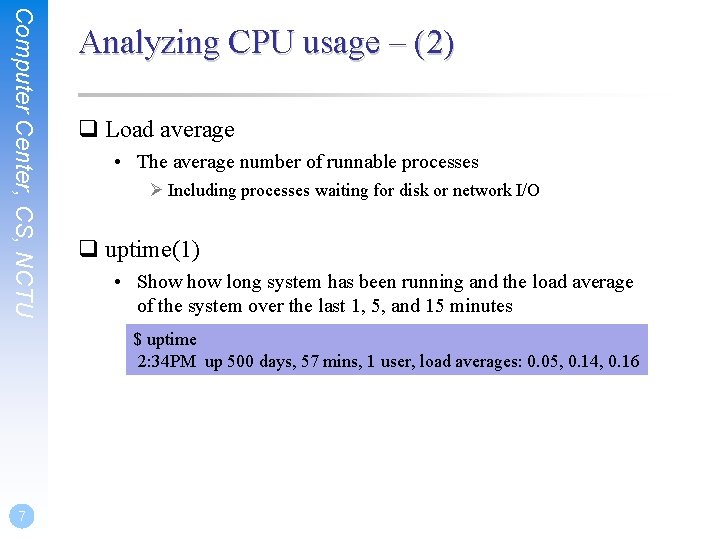

Analyzing CPU usage – (2)

- Load average
  - The average number of runnable processes
    - Including processes waiting for disk or network I/O
- uptime(1)
  - Shows how long the system has been running and the load average over the last 1, 5, and 15 minutes

Example:

    $ uptime
    2:34PM  up 500 days, 57 mins, 1 user, load averages: 0.05, 0.14, 0.16
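For scripted monitoring, the three load averages can be pulled out of an uptime(1) line. The following is a minimal sketch, assuming the line format shown on this slide; the function name `parse_load_averages` is a hypothetical helper, not part of any tool.

```python
import re


def parse_load_averages(uptime_line):
    """Return the 1, 5 and 15 minute load averages from an uptime(1) line."""
    # Accepts "load averages:" (FreeBSD) and "load average:" (other systems).
    match = re.search(
        r"load averages?:\s*([\d.]+),?\s+([\d.]+),?\s+([\d.]+)", uptime_line
    )
    if match is None:
        raise ValueError("no load averages found in: %r" % uptime_line)
    return tuple(float(value) for value in match.groups())


# Sample line copied from the slide.
sample = "2:34PM  up 500 days, 57 mins, 1 user, load averages: 0.05, 0.14, 0.16"
one_min, five_min, fifteen_min = parse_load_averages(sample)
print(one_min, five_min, fifteen_min)
```

On a live Unix system the same three numbers are also available from `os.getloadavg()` in the Python standard library, which avoids parsing text altogether.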

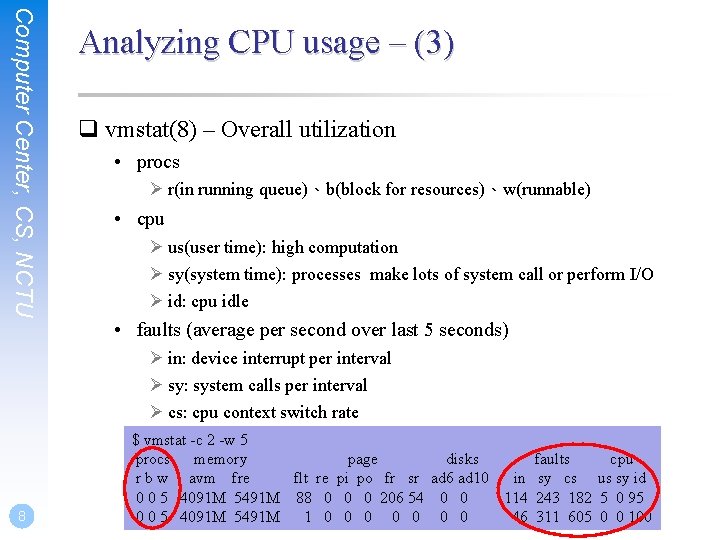

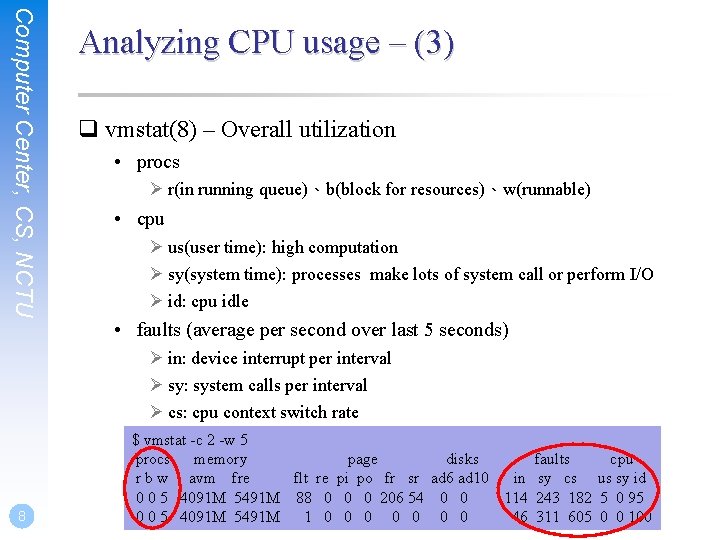

Analyzing CPU usage – (3)

- vmstat(8): overall utilization
  - procs
    - r (in run queue), b (blocked for resources), w (runnable but swapped out)
  - cpu
    - us (user time): high computation
    - sy (system time): processes make many system calls or perform I/O
    - id: CPU idle
  - faults (average per second over the last 5 seconds)
    - in: device interrupts per interval
    - sy: system calls per interval
    - cs: CPU context switch rate

Example:

    $ vmstat -c 2 -w 5
     procs    memory       page              disks    faults      cpu
     r b w   avm   fre  flt re pi po  fr sr ad6 ad10  in  sy  cs us sy id
     0 0 5 4091M 5491M   88  0  0  0 206 54   0    0 114 243 182  5  0 95
     0 0 5 4091M 5491M 1 0 0 0 0 46 311 605 0 0 100
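The vmstat columns above can be turned into named fields for automated checks. This is a sketch under stated assumptions: the column list matches the header on this slide (disks `ad6` and `ad10`), and the names `faults_sy` and `cpu_sy` are invented here to tell apart the two columns that vmstat both labels `sy`.

```python
# Column order taken from the vmstat header on the slide.
# "faults_sy" and "cpu_sy" are hypothetical names for the two "sy" columns.
VMSTAT_COLUMNS = [
    "r", "b", "w", "avm", "fre", "flt", "re", "pi", "po", "fr", "sr",
    "ad6", "ad10", "in", "faults_sy", "cs", "us", "cpu_sy", "id",
]


def parse_vmstat_row(line):
    """Map one whitespace-separated vmstat data row to a dict of strings."""
    values = line.split()
    if len(values) != len(VMSTAT_COLUMNS):
        raise ValueError("expected %d fields, got %d"
                         % (len(VMSTAT_COLUMNS), len(values)))
    return dict(zip(VMSTAT_COLUMNS, values))


def cpu_busy_percent(row):
    """User time plus system time, i.e. everything that is not idle."""
    return int(row["us"]) + int(row["cpu_sy"])


# First data row from the slide.
row = parse_vmstat_row("0 0 5 4091M 5491M 88 0 0 0 206 54 0 0 114 243 182 5 0 95")
print(cpu_busy_percent(row), row["id"])
```

A row with a different number of disks would need a different column list, so the length check fails loudly rather than silently shifting fields.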

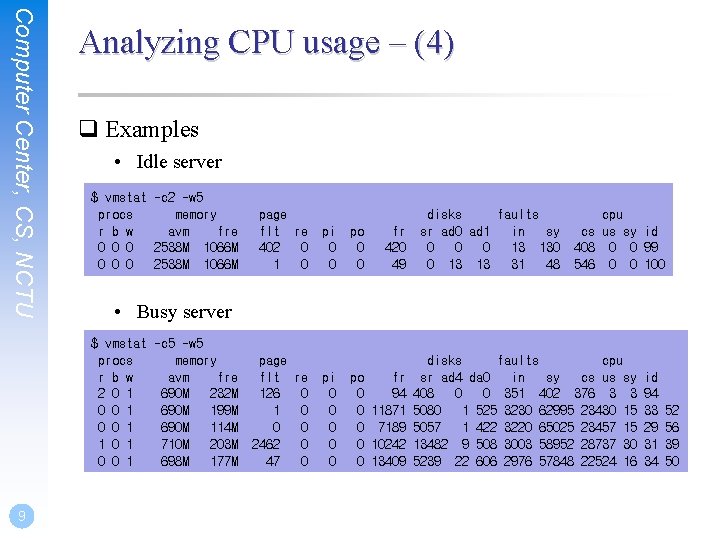

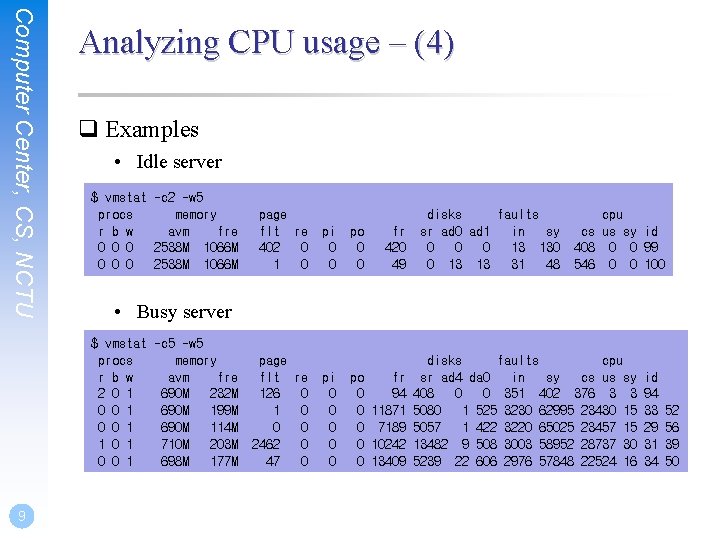

Analyzing CPU usage – (4)

Examples

Idle server:

    $ vmstat -c 2 -w 5
    procs memory r b w avm fre 0 0 0 2538M 1066M page flt re 402 0 1 0 pi 0 0 po 0 0 fr 420 49
    disks faults sr ad0 ad1 in sy 0 0 0 13 13 31 48 cpu cs us sy id 408 0 0 99 546 0 0 100

Busy server:

    $ vmstat -c 5 -w 5
     procs  memory       page                   disks     faults        cpu
     r b w  avm  fre  flt re pi po    fr    sr ad4 da0   in    sy    cs us sy id
     2 0 1 690M 232M  126  0  0  0    94   408   0   0  351   402   376  3  3 94
     0 0 1 690M 199M    1  0  0  0 11871  5080   1 525 3230 62995 23430 15 33 52
     0 0 1 690M 114M    0  0  0  0  7189  5057   1 422 3220 65025 23457 15 29 56
     1 0 1 710M 203M 2462  0  0  0 10242 13482   9 508 3003 58952 28737 30 31 39
     0 0 1 698M 177M   47  0  0  0 13409  5239  22 606 2976 57848 22524 16 34 50

Analyzing CPU usage – (5)

- Per-process consumption
  - top command
    - Displays and updates information about the top CPU processes
  - ps command
    - Shows process status

Analyzing CPU usage – (6)

- top(1): shows a real-time overview of what the CPU is doing
  - Paging to/from swap
    - Performance kiss of death
  - Spending lots of time in the kernel, or processing interrupts
  - Which processes/threads are using the CPU
  - What they are doing inside the kernel
    - e.g. biord/biowr/wdrain: disk I/O
    - sbwait: waiting for socket input
    - ucond/umtx: waiting on an application thread lock
    - Many more, documented only in the source code
  - Context switches
    - Voluntary: the process blocks waiting for a resource
    - Involuntary: the kernel decides the process should stop running for now
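The voluntary and involuntary context switch counters are also visible to a process about itself. As a rough illustration (not how top(1) gathers its numbers), the sketch below reads them through `resource.getrusage` from the Python standard library, which is available on Unix-like systems only; the helper name `context_switches` is hypothetical.

```python
import resource
import time


def context_switches():
    """Return (voluntary, involuntary) context switches of this process."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_nvcsw, usage.ru_nivcsw


before = context_switches()
# Sleeping makes the process block, which normally counts as a
# voluntary context switch; the exact numbers depend on the system.
time.sleep(0.01)
after = context_switches()

voluntary_delta = after[0] - before[0]
involuntary_delta = after[1] - before[1]
print("voluntary:", voluntary_delta, "involuntary:", involuntary_delta)
```

A process that mostly accumulates voluntary switches is waiting on resources, while a high involuntary count suggests it is competing for the CPU.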

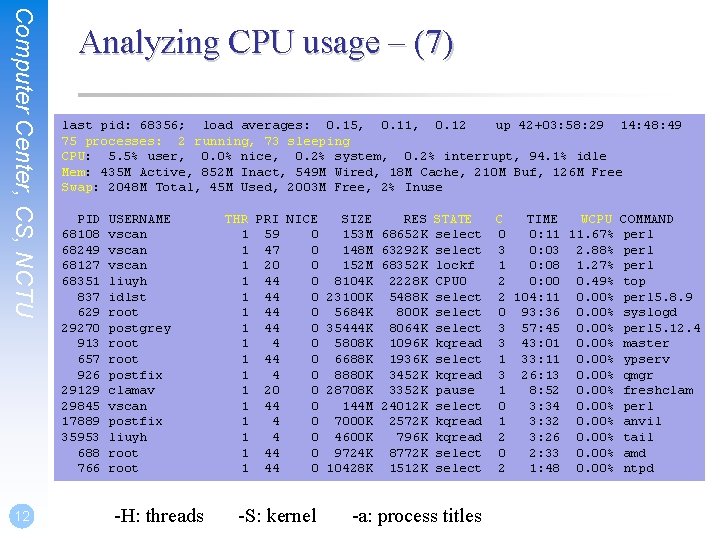

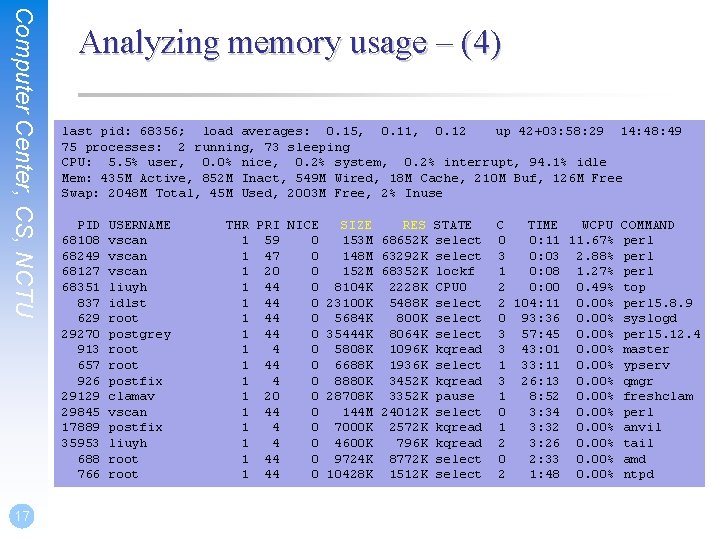

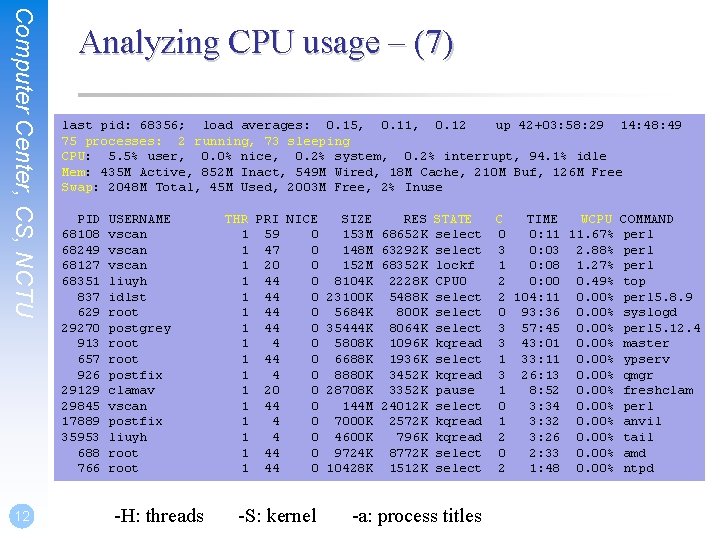

Analyzing CPU usage – (7)

- top(1) options
  - -H: threads
  - -S: kernel
  - -a: process titles

Example:

    last pid: 68356;  load averages:  0.15,  0.11,  0.12    up 42+03:58:29  14:48:49
    75 processes:  2 running, 73 sleeping
    CPU:  5.5% user,  0.0% nice,  0.2% system,  0.2% interrupt, 94.1% idle
    Mem: 435M Active, 852M Inact, 549M Wired, 18M Cache, 210M Buf, 126M Free
    Swap: 2048M Total, 45M Used, 2003M Free, 2% Inuse

      PID THR PRI NICE    SIZE     RES STATE  C    TIME   WCPU COMMAND
    68108   1  59    0    153M  68652K select 0    0:11 11.67% perl
    68249   1  47    0    148M  63292K select 3    0:03  2.88% perl
    68127   1  20    0    152M  68352K lockf  1    0:08  1.27% perl
    68351   1  44    0   8104K   2228K CPU0   2    0:00  0.49% top
      837   1  44    0  23100K   5488K select 2  104:11  0.00% perl5.8.9
      629   1  44    0   5684K    800K select 0   93:36  0.00% syslogd
    29270   1  44    0  35444K   8064K select 3   57:45  0.00% perl5.12.4
      913   1   4    0   5808K   1096K kqread 3   43:01  0.00% master
      657   1  44    0   6688K   1936K select 1   33:11  0.00% ypserv
      926   1   4    0   8880K   3452K kqread 3   26:13  0.00% qmgr
    29129   1  20    0  28708K   3352K pause  1    8:52  0.00% freshclam
    29845   1  44    0    144M  24012K select 0    3:34  0.00% perl
    17889   1   4    0   7000K   2572K kqread 1    3:32  0.00% anvil
    35953   1   4    0   4600K    796K kqread 2    3:26  0.00% tail
      688   1  44    0   9724K   8772K select 0    2:33  0.00% amd
      766   1  44    0  10428K   1512K select 2    1:48  0.00% ntpd

    USERNAME: vscan liuyh idlst root postgrey root postfix clamav vscan postfix liuyh root

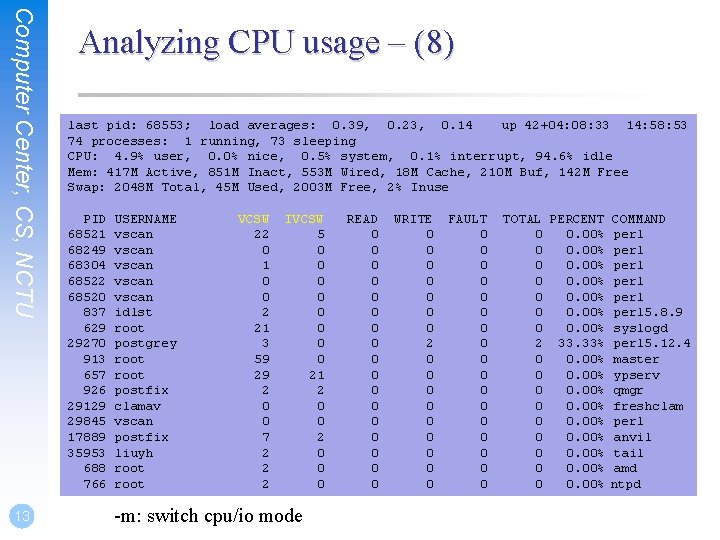

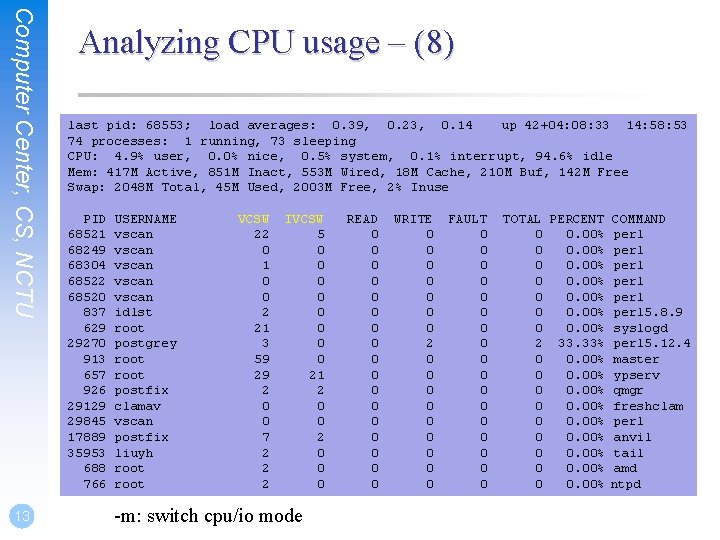

Analyzing CPU usage – (8)

- top(1) options
  - -m: switch cpu/io mode

Example:

    last pid: 68553;  load averages:  0.39,  0.23,  0.14    up 42+04:08:33  14:58:53
    74 processes:  1 running, 73 sleeping
    CPU:  4.9% user,  0.0% nice,  0.5% system,  0.1% interrupt, 94.6% idle
    Mem: 417M Active, 851M Inact, 553M Wired, 18M Cache, 210M Buf, 142M Free
    Swap: 2048M Total, 45M Used, 2003M Free, 2% Inuse

    PID:      68521 68249 68304 68522 68520 837 629 29270 913 657 926 29129 29845 17889 35953 688 766
    USERNAME: vscan vscan idlst root postgrey root postfix clamav vscan postfix liuyh root
    VCSW:     22 0 1 0 0 2 21 3 59 29 2 0 0 7 2 2 2
    IVCSW:    5 0 0 0 0 21 2 0 0 0
    READ:     0 0 0 0 0
    WRITE:    0 0 0 0 2 0 0 0 0 0
    FAULT:    0 0 0 0 0

    TOTAL PERCENT COMMAND
        0   0.00% perl5.8.9
        0   0.00% syslogd
        2  33.33% perl5.12.4
        0   0.00% master
        0   0.00% ypserv
        0   0.00% qmgr
        0   0.00% freshclam
        0   0.00% perl
        0   0.00% anvil
        0   0.00% tail
        0   0.00% amd
        0   0.00% ntpd

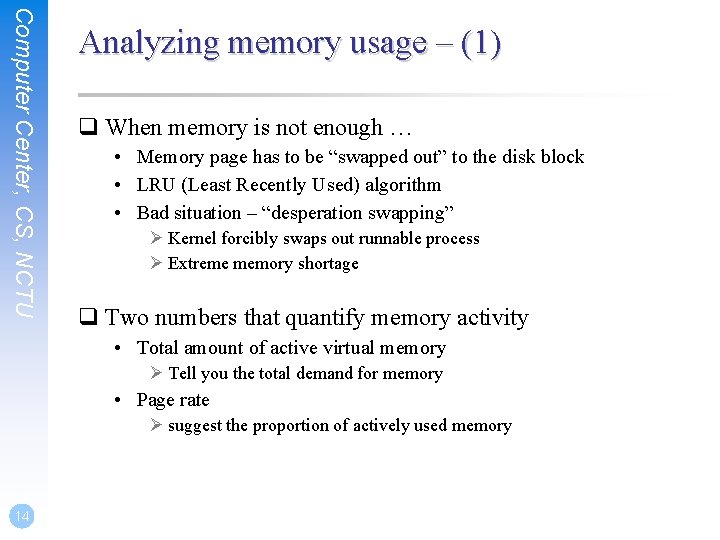

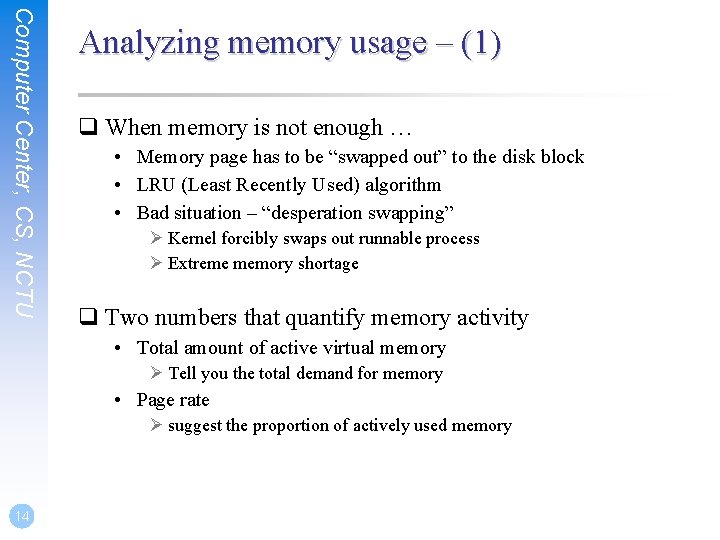

Analyzing memory usage – (1)

- When memory is not enough …
  - Memory pages have to be “swapped out” to disk blocks
  - An LRU (Least Recently Used) algorithm selects the pages to swap out
  - Bad situation: “desperation swapping”
    - The kernel forcibly swaps out runnable processes
    - Indicates an extreme memory shortage
- Two numbers that quantify memory activity
  - Total amount of active virtual memory
    - Tells you the total demand for memory
  - Paging rate
    - Suggests the proportion of actively used memory

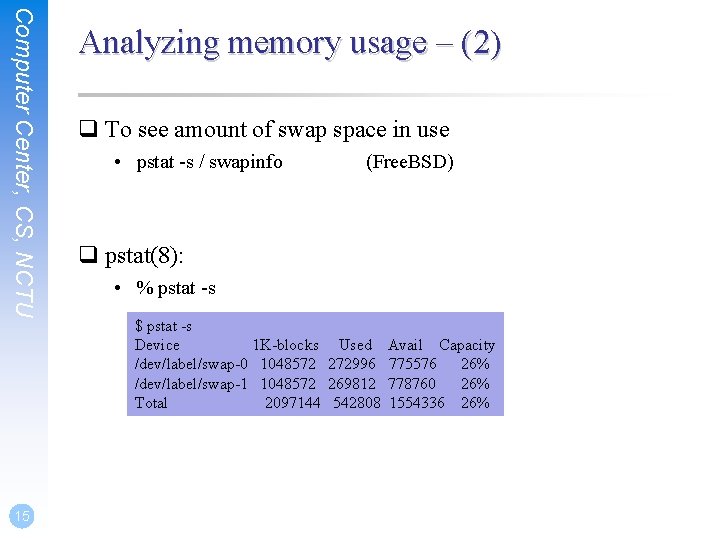

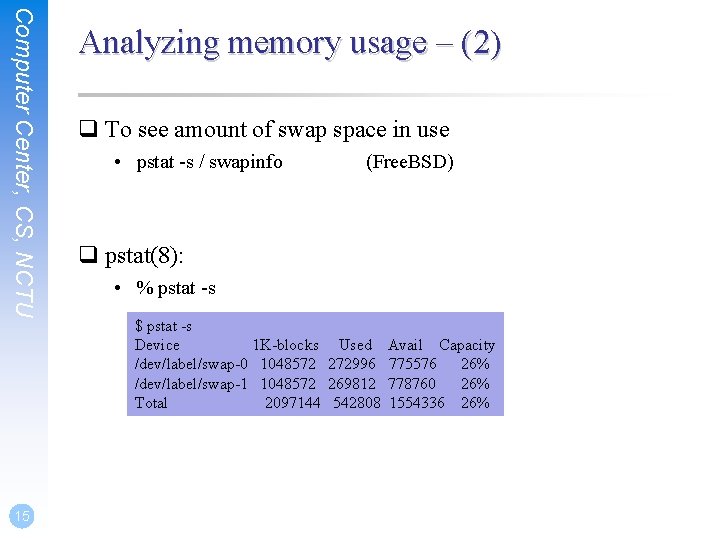

Analyzing memory usage – (2)

- To see the amount of swap space in use
  - pstat -s / swapinfo (FreeBSD)
- pstat(8)

Example:

    $ pstat -s
    Device              1K-blocks     Used    Avail Capacity
    /dev/label/swap-0     1048572   272996   775576    26%
    /dev/label/swap-1     1048572   269812   778760    26%
    Total                 2097144   542808  1554336    26%
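The Capacity column is simply Used divided by 1K-blocks. The sketch below recomputes it from the pstat -s output on this slide; the function name `swap_usage_percent` is a hypothetical helper, and the parser assumes exactly the five-column layout shown here.

```python
# Sample output copied from the slide.
PSTAT_OUTPUT = """\
Device              1K-blocks     Used    Avail Capacity
/dev/label/swap-0     1048572   272996   775576    26%
/dev/label/swap-1     1048572   269812   778760    26%
Total                 2097144   542808  1554336    26%
"""


def swap_usage_percent(pstat_text):
    """Return {device: percent of swap in use} computed from Used / 1K-blocks."""
    usage = {}
    for line in pstat_text.splitlines()[1:]:  # skip the header line
        device, blocks, used, _avail, _capacity = line.split()
        usage[device] = 100.0 * int(used) / int(blocks)
    return usage


usage = swap_usage_percent(PSTAT_OUTPUT)
for device, percent in usage.items():
    print("%-20s %5.1f%%" % (device, percent))
```

Computing the ratio directly gives more precision than the rounded Capacity column, which helps when tracking slow growth in swap use over time.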

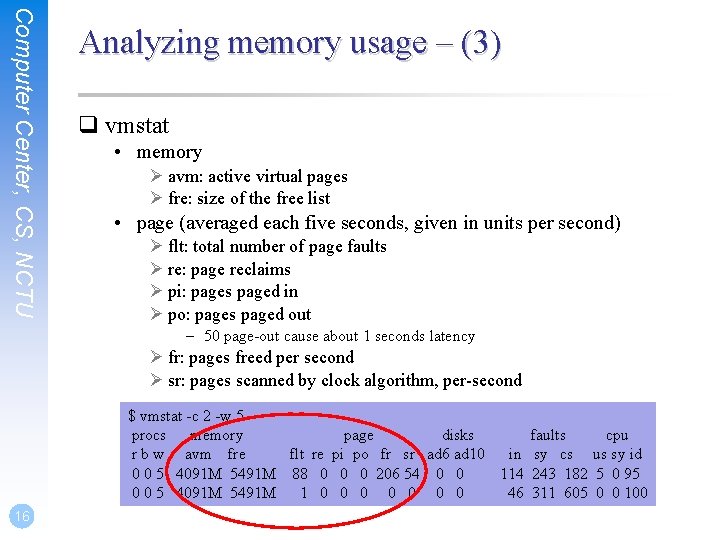

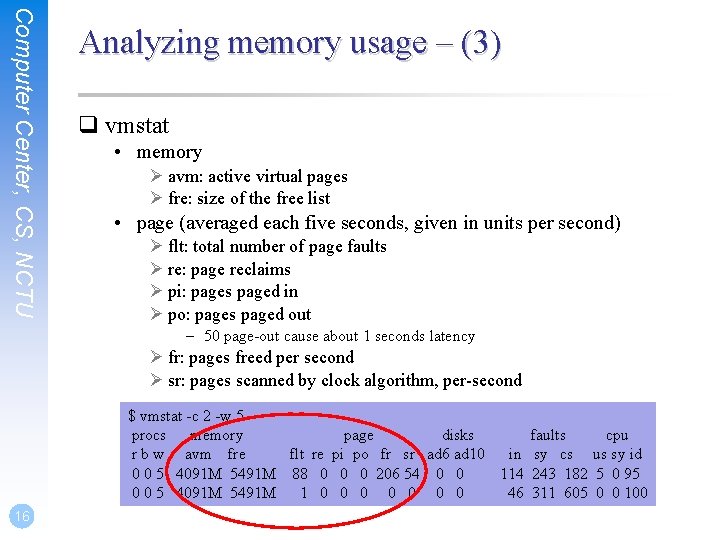

Analyzing memory usage – (3)

- vmstat
  - memory
    - avm: active virtual pages
    - fre: size of the free list
  - page (averaged over each five-second interval, given in units per second)
    - flt: total number of page faults
    - re: page reclaims
    - pi: pages paged in
    - po: pages paged out
      - About 50 page-outs cause roughly 1 second of latency
    - fr: pages freed per second
    - sr: pages scanned by the clock algorithm per second

Example:

    $ vmstat -c 2 -w 5
     procs    memory       page              disks    faults      cpu
     r b w   avm   fre  flt re pi po  fr sr ad6 ad10  in  sy  cs us sy id
     0 0 5 4091M 5491M   88  0  0  0 206 54   0    0 114 243 182  5  0 95
     0 0 5 4091M 5491M 1 0 0 0 0 46 311 605 0 0 100
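The rule of thumb for the po column can be written as a one-line estimate. This is only a sketch of that rule, assuming the latency scales linearly with the number of page-outs; the constant and the function name are hypothetical and not taken from vmstat itself.

```python
# Rule of thumb from the slide: about 50 page-outs cost about 1 second.
PAGE_OUTS_PER_SECOND_OF_LATENCY = 50


def estimated_paging_latency_seconds(page_outs):
    """Rough latency estimate for a given number of page-outs (the po column)."""
    return page_outs / PAGE_OUTS_PER_SECOND_OF_LATENCY


for po in (0, 50, 125):
    print(po, "page-outs ->", estimated_paging_latency_seconds(po), "s")
```

In both sample rows on the slide po is 0, so this estimate says the system is not being slowed down by paging at all.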

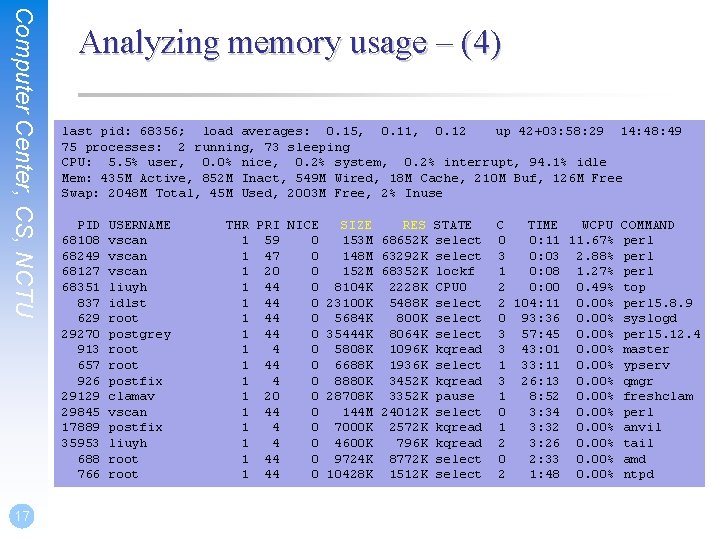

Analyzing memory usage – (4)

    last pid: 68356;  load averages:  0.15,  0.11,  0.12    up 42+03:58:29  14:48:49
    75 processes:  2 running, 73 sleeping
    CPU:  5.5% user,  0.0% nice,  0.2% system,  0.2% interrupt, 94.1% idle
    Mem: 435M Active, 852M Inact, 549M Wired, 18M Cache, 210M Buf, 126M Free
    Swap: 2048M Total, 45M Used, 2003M Free, 2% Inuse

      PID THR PRI NICE    SIZE     RES STATE  C    TIME   WCPU COMMAND
    68108   1  59    0    153M  68652K select 0    0:11 11.67% perl
    68249   1  47    0    148M  63292K select 3    0:03  2.88% perl
    68127   1  20    0    152M  68352K lockf  1    0:08  1.27% perl
    68351   1  44    0   8104K   2228K CPU0   2    0:00  0.49% top
      837   1  44    0  23100K   5488K select 2  104:11  0.00% perl5.8.9
      629   1  44    0   5684K    800K select 0   93:36  0.00% syslogd
    29270   1  44    0  35444K   8064K select 3   57:45  0.00% perl5.12.4
      913   1   4    0   5808K   1096K kqread 3   43:01  0.00% master
      657   1  44    0   6688K   1936K select 1   33:11  0.00% ypserv
      926   1   4    0   8880K   3452K kqread 3   26:13  0.00% qmgr
    29129   1  20    0  28708K   3352K pause  1    8:52  0.00% freshclam
    29845   1  44    0    144M  24012K select 0    3:34  0.00% perl
    17889   1   4    0   7000K   2572K kqread 1    3:32  0.00% anvil
    35953   1   4    0   4600K    796K kqread 2    3:26  0.00% tail
      688   1  44    0   9724K   8772K select 0    2:33  0.00% amd
      766   1  44    0  10428K   1512K select 2    1:48  0.00% ntpd

    USERNAME: vscan liuyh idlst root postgrey root postfix clamav vscan postfix liuyh root

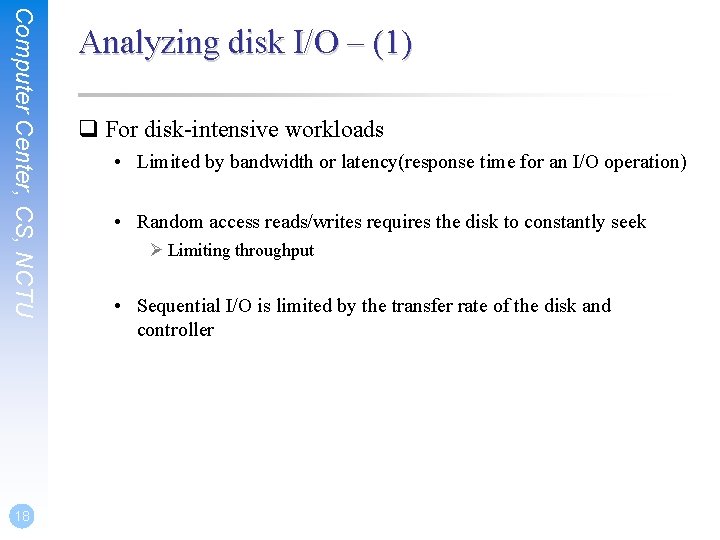

Analyzing disk I/O – (1)

- For disk-intensive workloads
  - Performance is limited by bandwidth or by latency (the response time of an I/O operation)
  - Random-access reads/writes require the disk to seek constantly
    - This limits throughput
  - Sequential I/O is limited by the transfer rate of the disk and controller

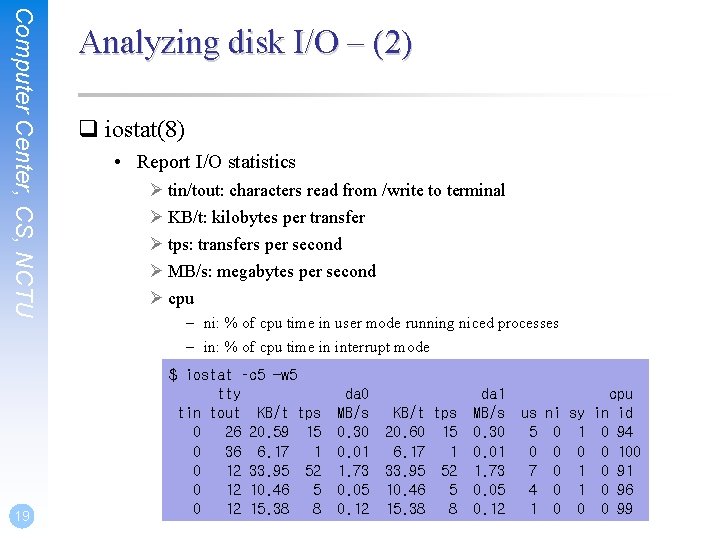

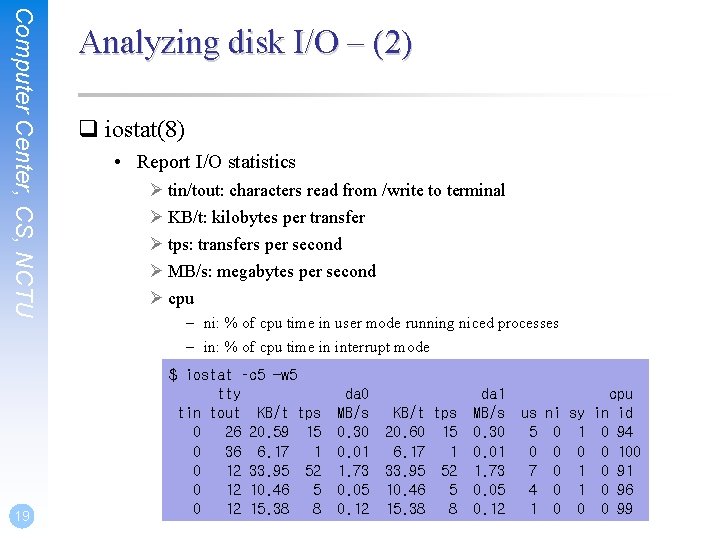

Analyzing disk I/O – (2)

- iostat(8)
  - Report I/O statistics
    - tin/tout: characters read from / written to terminals
    - KB/t: kilobytes per transfer
    - tps: transfers per second
    - MB/s: megabytes per second
    - cpu
      - ni: % of CPU time in user mode running niced processes
      - in: % of CPU time in interrupt mode

Example:

    $ iostat -c 5 -w 5
          tty             da0              da1             cpu
     tin tout  KB/t tps  MB/s   KB/t tps  MB/s  us ni sy in  id
       0   26 20.59  15  0.30  20.60  15  0.30   5  0  1  0  94
       0   36  6.17   1  0.01   6.17   1  0.01   0  0  0  0 100
       0   12 33.95  52  1.73  33.95  52  1.73   7  0  1  0  91
       0   12 10.46   5  0.05  10.46   5  0.05   4  0  1  0  96
       0   12 15.38   8  0.12  15.38   8  0.12   1  0  0  0  99
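The three per-disk columns are related: throughput is roughly the transfer size times the transfer rate. The sketch below checks that relationship against the da0 values on this slide, assuming 1 MB = 1024 KB; the function name is hypothetical, and small differences are expected because iostat prints rounded values.

```python
def throughput_mb_per_s(kb_per_transfer, transfers_per_s):
    """Approximate MB/s from the KB/t and tps columns (1 MB = 1024 KB assumed)."""
    return kb_per_transfer * transfers_per_s / 1024.0


# (KB/t, tps, reported MB/s) for da0, copied from the slide.
samples = [
    (20.59, 15, 0.30),
    (6.17, 1, 0.01),
    (33.95, 52, 1.73),
    (10.46, 5, 0.05),
    (15.38, 8, 0.12),
]

differences = []
for kb_t, tps, reported in samples:
    computed = throughput_mb_per_s(kb_t, tps)
    differences.append(abs(computed - reported))
    print("KB/t=%6.2f tps=%3d computed=%.3f reported=%.2f"
          % (kb_t, tps, computed, reported))
```

Reading the columns together is useful: a high tps with a small KB/t points to many small, probably random, transfers, while a large KB/t with moderate tps points to sequential I/O.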

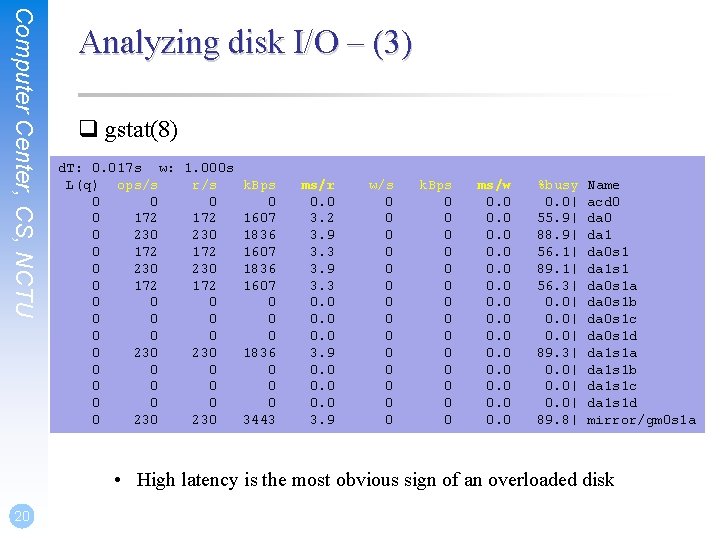

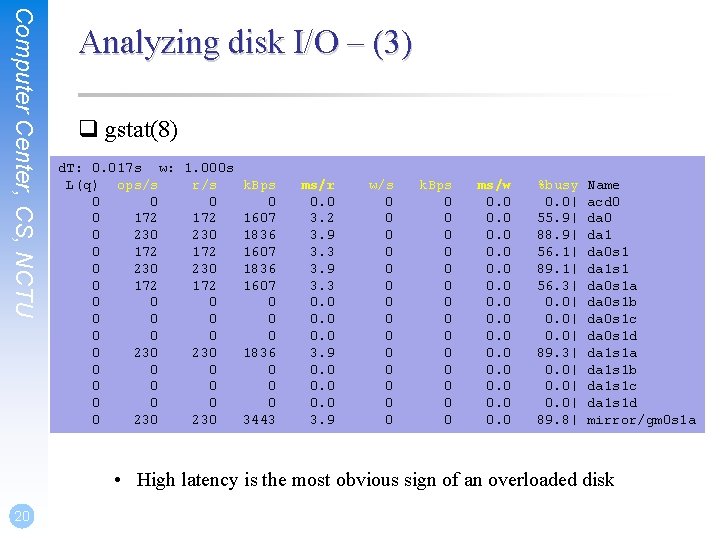

Analyzing disk I/O – (3)

- gstat(8)
  - High latency is the most obvious sign of an overloaded disk

Example:

    dT: 0.017s  w: 1.000s
    L(q) ops/s r/s kBps: 0 0 0 172 172 1607 0 230 230 1836 0 172 1607 0 0 0 0 230 1836 0 0 0 0 230 3443
    ms/r:  0.0 3.2 3.9 3.3 0.0 0.0 3.9
    w/s:   0 0 0 0
    kBps:  0 0 0 0
    ms/w:  0.0 0.0
    %busy: 0.0 55.9 88.9 56.1 89.1 56.3 0.0 89.8
    Name:  acd0 da1 da0 s1 da1s1 da0s1a da0s1b da0s1c da0s1d da1s1a da1s1b da1s1c da1s1d mirror/gm0s1a

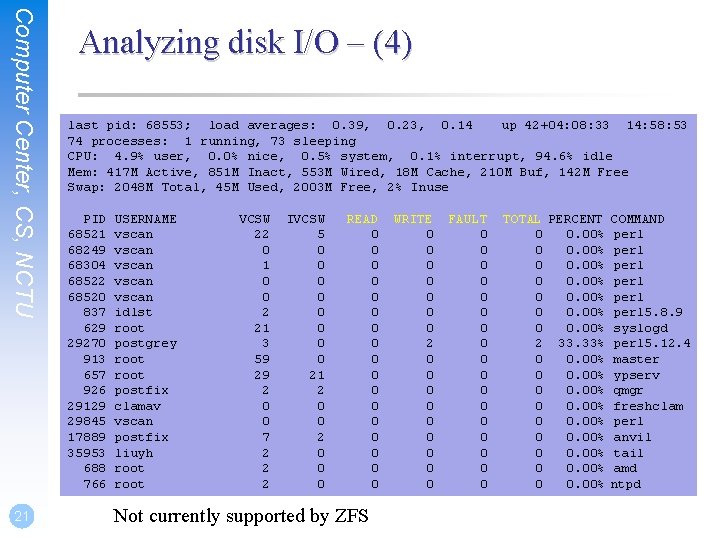

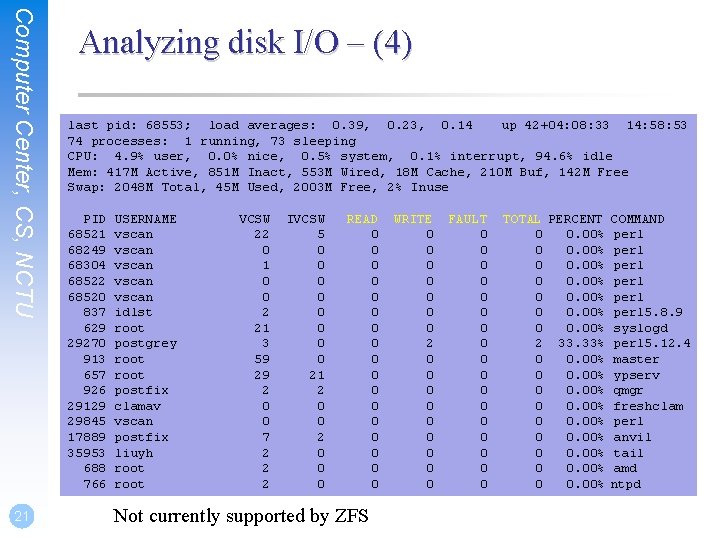

Analyzing disk I/O – (4)

- READ/WRITE: not currently supported by ZFS

Example:

    last pid: 68553;  load averages:  0.39,  0.23,  0.14    up 42+04:08:33  14:58:53
    74 processes:  1 running, 73 sleeping
    CPU:  4.9% user,  0.0% nice,  0.5% system,  0.1% interrupt, 94.6% idle
    Mem: 417M Active, 851M Inact, 553M Wired, 18M Cache, 210M Buf, 142M Free
    Swap: 2048M Total, 45M Used, 2003M Free, 2% Inuse

    PID:      68521 68249 68304 68522 68520 837 629 29270 913 657 926 29129 29845 17889 35953 688 766
    USERNAME: vscan vscan idlst root postgrey root postfix clamav vscan postfix liuyh root
    VCSW:     22 0 1 0 0 2 21 3 59 29 2 0 0 7 2 2 2
    IVCSW:    5 0 0 0 0 21 2 0 0 0
    READ:     0 0 0 0 0
    WRITE:    0 0 0 0 2 0 0 0 0 0
    FAULT:    0 0 0 0 0

    TOTAL PERCENT COMMAND
        0   0.00% perl5.8.9
        0   0.00% syslogd
        2  33.33% perl5.12.4
        0   0.00% master
        0   0.00% ypserv
        0   0.00% qmgr
        0   0.00% freshclam
        0   0.00% perl
        0   0.00% anvil
        0   0.00% tail
        0   0.00% amd
        0   0.00% ntpd

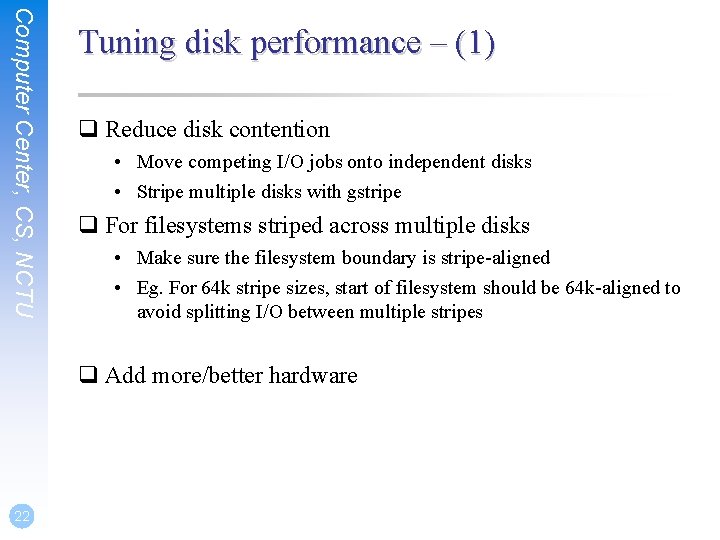

Tuning disk performance – (1)

- Reduce disk contention
  - Move competing I/O jobs onto independent disks
  - Stripe multiple disks with gstripe
- For filesystems striped across multiple disks
  - Make sure the filesystem boundary is stripe-aligned
  - e.g. for a 64k stripe size, the start of the filesystem should be 64k-aligned to avoid splitting I/O between multiple stripes
- Add more/better hardware
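The stripe-alignment rule comes down to a modulo check. The sketch below illustrates it for the 64k stripe size mentioned above; the function names and the 63-sector starting offset are assumptions chosen for illustration, not values from the slide.

```python
STRIPE_SIZE = 64 * 1024  # 64k stripe size, as in the slide's example


def is_stripe_aligned(offset_bytes, stripe_size=STRIPE_SIZE):
    """True if a filesystem starting at offset_bytes begins on a stripe boundary."""
    return offset_bytes % stripe_size == 0


def stripes_touched(offset_bytes, length_bytes, stripe_size=STRIPE_SIZE):
    """Number of stripes that one I/O of length_bytes at offset_bytes spans."""
    first = offset_bytes // stripe_size
    last = (offset_bytes + length_bytes - 1) // stripe_size
    return last - first + 1


# Hypothetical misaligned start: sector 63 with 512-byte sectors.
misaligned_start = 63 * 512
aligned_start = 64 * 1024

for start in (misaligned_start, aligned_start):
    print(start, is_stripe_aligned(start), stripes_touched(start, STRIPE_SIZE))
```

With the misaligned start, a single 64k request is split across two stripes and therefore two disks; with the aligned start it stays within one.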

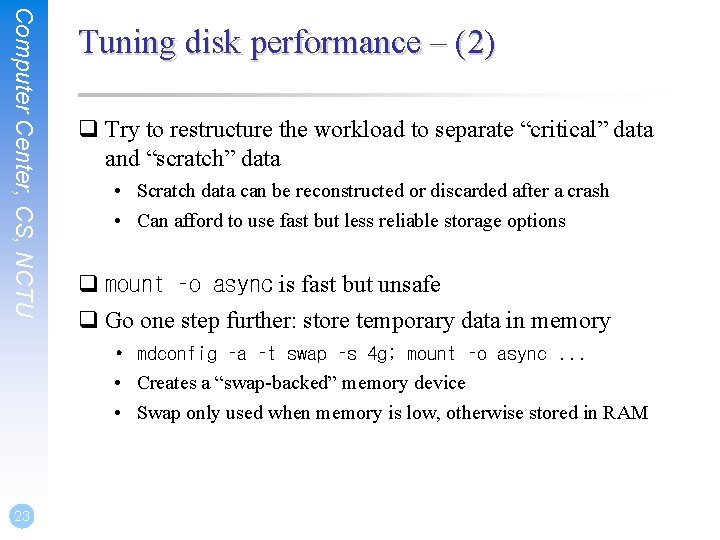

Tuning disk performance – (2)

- Try to restructure the workload to separate “critical” data from “scratch” data
  - Scratch data can be reconstructed or discarded after a crash
  - It can afford to use fast but less reliable storage options
- mount -o async is fast but unsafe
- Go one step further: store temporary data in memory
  - mdconfig -a -t swap -s 4g; mount -o async ...
  - Creates a “swap-backed” memory device
  - Swap is only used when memory is low; otherwise the data stays in RAM

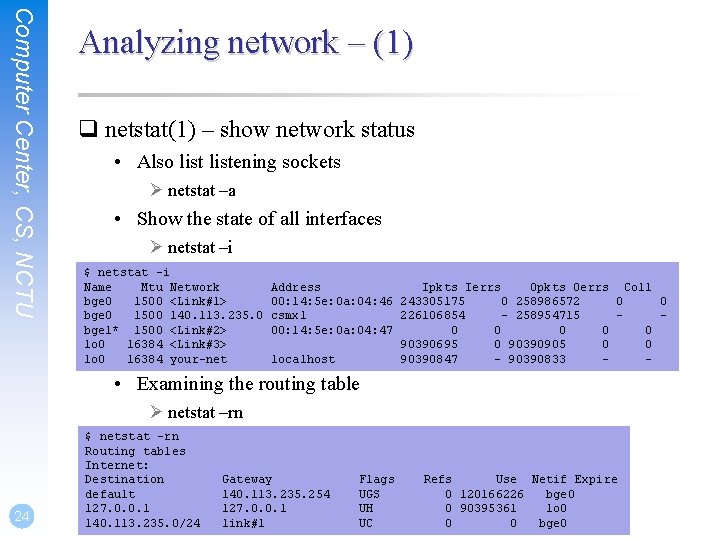

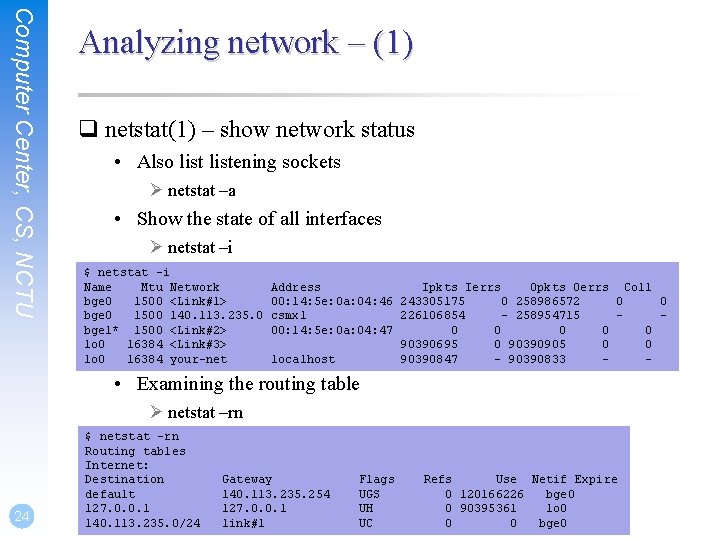

Analyzing network – (1)

- netstat(1): show network status
  - Also show listening sockets
    - netstat -a
  - Show the state of all interfaces
    - netstat -i
  - Examine the routing table
    - netstat -rn

Examples:

    $ netstat -i
    Name    Mtu Network       Address               Ipkts Ierrs     Opkts Oerrs  Coll
    bge0   1500 <Link#1>      00:14:5e:0a:04:46 243305175     0 258986572     0     0
    bge0   1500 140.113.235.0 csmx1             226106854     - 258954715     -     -
    bge1*  1500 <Link#2>      00:14:5e:0a:04:47         0     0         0     0     0
    lo0   16384 <Link#3>                         90390695     0  90390905     0     0
    lo0   16384 your-net      localhost          90390847     -  90390833     -     -

    $ netstat -rn
    Routing tables

    Internet:
    Destination        Gateway            Flags    Refs       Use  Netif Expire
    default            140.113.235.254    UGS         0 120166226   bge0
    127.0.0.1          127.0.0.1          UH          0  90395361    lo0
    140.113.235.0/24   link#1             UC          0         0   bge0

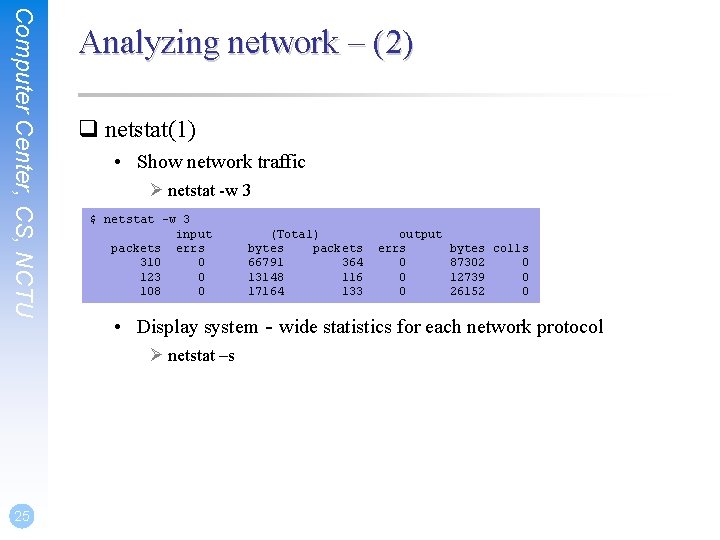

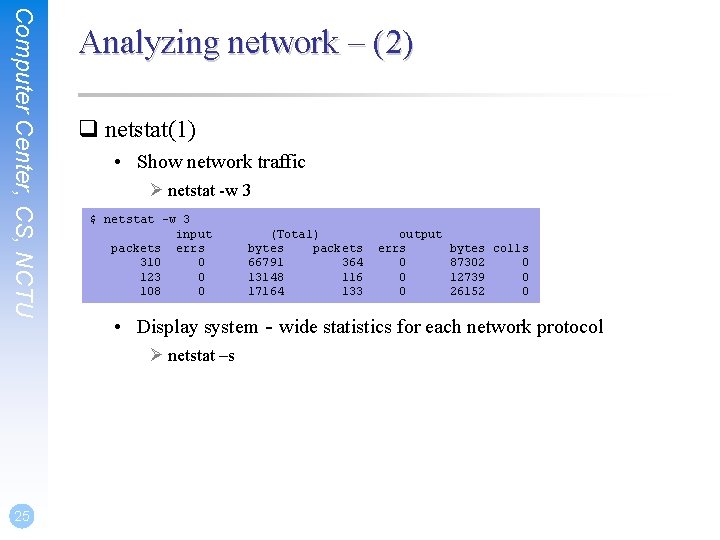

Analyzing network – (2)

- netstat(1)
  - Show network traffic
    - netstat -w 3
  - Display system-wide statistics for each network protocol
    - netstat -s

Example:

    $ netstat -w 3
                input        (Total)           output
       packets  errs      bytes    packets  errs      bytes colls
           310     0      66791        364     0      87302     0
           123     0      13148        116     0      12739     0
           108     0      17164        133     0      26152     0

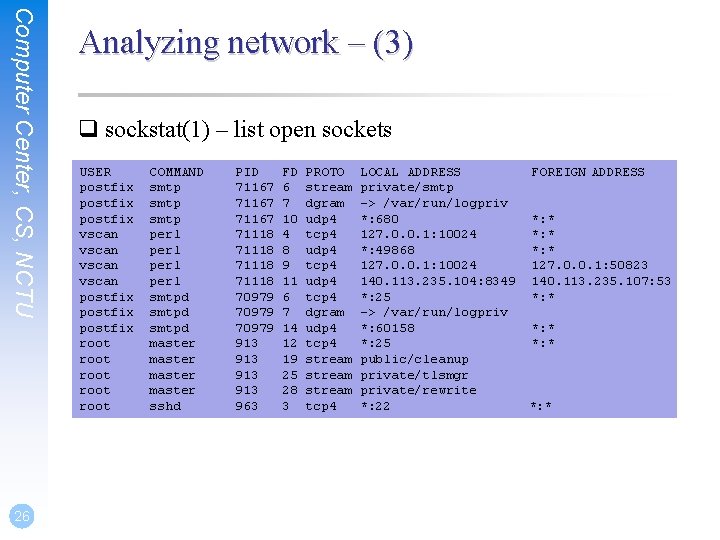

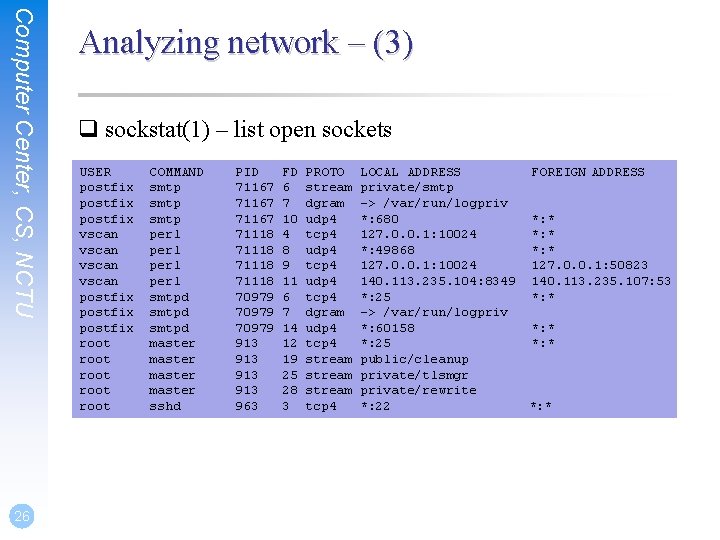

Analyzing network – (3)

- sockstat(1): list open sockets

Example:

    USER:    postfix vscan postfix root root
    COMMAND: smtp perl smtpd master sshd
    PID:     71167 71118 70979 913 913 963
    FD:      6 7 10 4 8 9 11 6 7 14 12 19 25 28 3
    PROTO:   stream dgram udp4 tcp4 dgram udp4 tcp4 stream tcp4

    LOCAL ADDRESS            FOREIGN ADDRESS
    private/smtp
    -> /var/run/logpriv
    *:680                    *:*
    127.0.0.1:10024          *:*
    *:49868                  *:*
    127.0.0.1:10024          127.0.0.1:50823
    140.113.235.104:8349     140.113.235.107:53
    *:25                     *:*
    -> /var/run/logpriv
    *:60158                  *:*
    *:25                     *:*
    public/cleanup
    private/tlsmgr
    private/rewrite
    *:22                     *:*

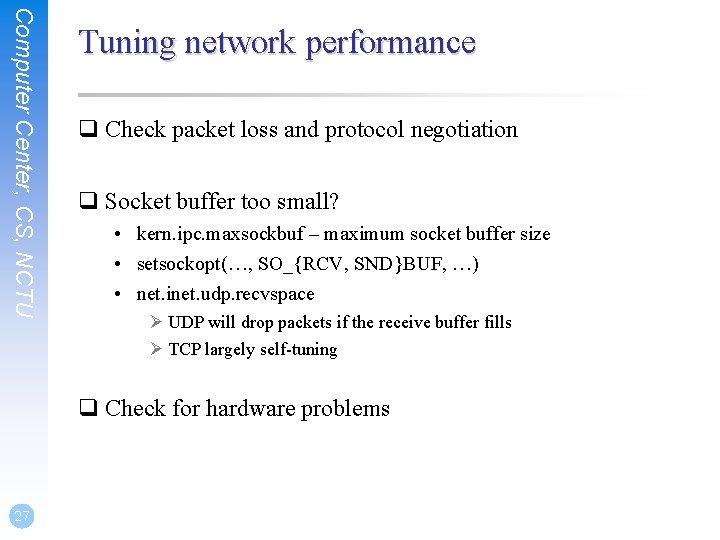

Tuning network performance

- Check packet loss and protocol negotiation
- Socket buffer too small?
  - kern.ipc.maxsockbuf: maximum socket buffer size
  - setsockopt(…, SO_{RCV,SND}BUF, …)
  - net.inet.udp.recvspace
    - UDP will drop packets if the receive buffer fills
    - TCP is largely self-tuning
- Check for hardware problems
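From an application, the socket buffer size is requested with setsockopt. The sketch below uses Python's standard `socket` module on a UDP socket; the 64 KiB request and the helper name `request_receive_buffer` are illustrative assumptions. The value actually granted depends on the operating system and its limits (on FreeBSD, kern.ipc.maxsockbuf), so the code reads it back instead of assuming it.

```python
import socket


def request_receive_buffer(sock, size_bytes):
    """Ask the kernel for a receive buffer and return the size it reports."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, size_bytes)
    # The kernel may round, double, or cap the request, so read it back.
    return sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)


# UDP socket, since UDP drops packets when the receive buffer fills.
with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as udp_sock:
    granted = request_receive_buffer(udp_sock, 64 * 1024)
    print("receive buffer size reported by the kernel:", granted)
```

The same pattern applies to `SO_SNDBUF` for the send side. For TCP this is rarely needed, since TCP is largely self-tuning.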

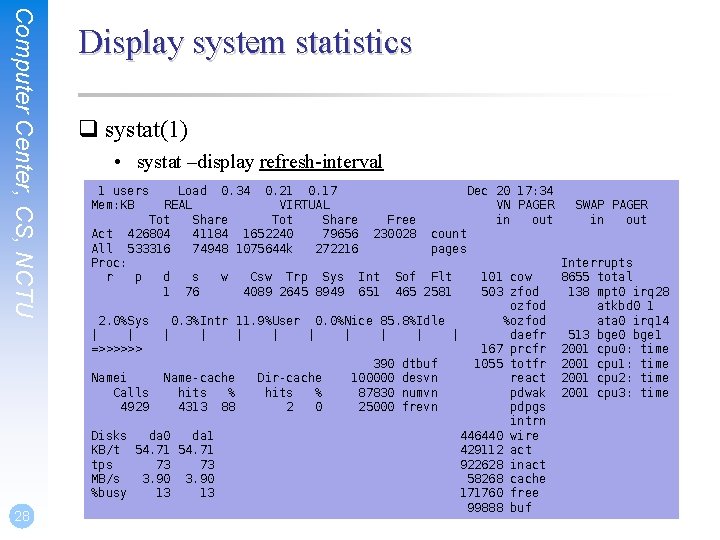

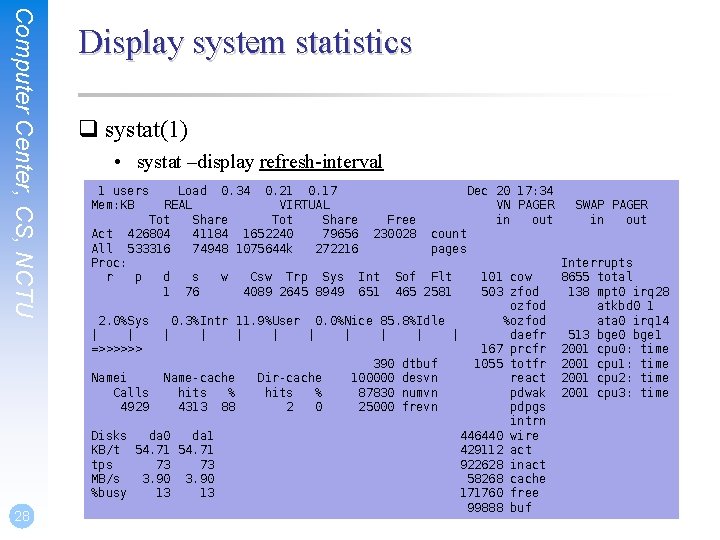

Display system statistics

- systat(1)
  - systat -display refresh-interval

Example:

    1 users    Load  0.34  0.21  0.17    Dec 20 17:34
    Mem:KB     REAL Tot   Share   VIRTUAL Tot   Share    Free
    Act          426804   41184       1652240   79656  230028
    All          533316   74948      1075644k  272216
    Proc: r p d 1 s 76 w
    Csw 4089  Trp 2645  Sys 8949  Int 651  Sof 465  Flt 2581
    2.0%Sys  0.3%Intr  11.9%User  0.0%Nice  85.8%Idle
    VN PAGER in out  SWAP PAGER in out  count pages
    101 cow 503 zfod ozfod %ozfod daefr 167 prcfr 390 dtbuf 1055 totfr
    100000 desvn react 87830 numvn pdwak 25000 frevn pdpgs intrn
    Namei  Name-cache  Dir-cache  Calls hits %  4929 4313 88 2 0
    Disks  da0 da1  KB/t 54.71  tps 73 73  MB/s 3.90  %busy 13 13
    446440 wire  429112 act  922628 inact  58268 cache  171760 free  99888 buf
    Interrupts  8655 total  138 mpt0 irq28  atkbd0 1  ata0 irq14  513 bge0 bge1
    2001 cpu0: time  2001 cpu1: time  2001 cpu2: time  2001 cpu3: time

When to throw hardware at the problem

- Only once you have determined that a particular hardware resource is your limiting factor
  - More CPU cores will not solve a slow disk
- Adding RAM can reduce the need for some disk I/O
  - More cached data, less paging from disk
- Adding more CPU cores is not a magic bullet for CPU-limited jobs
  - Some applications do not scale well
  - High CPU usage can be caused by resource contention
    - Resource contention will make performance worse!

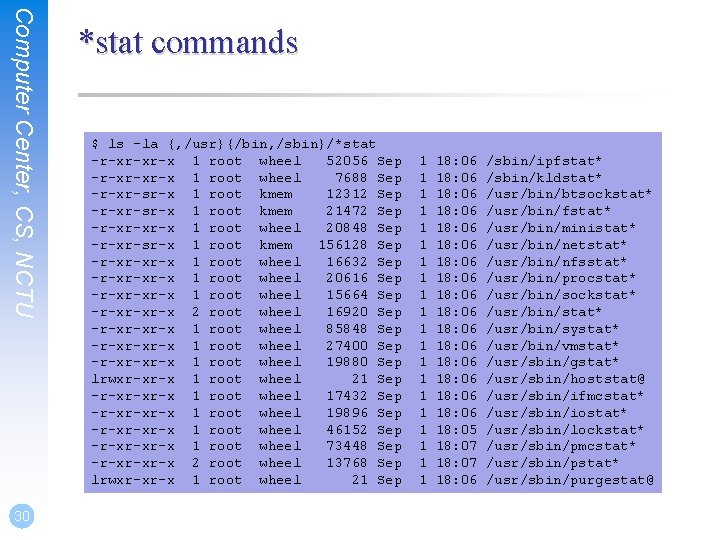

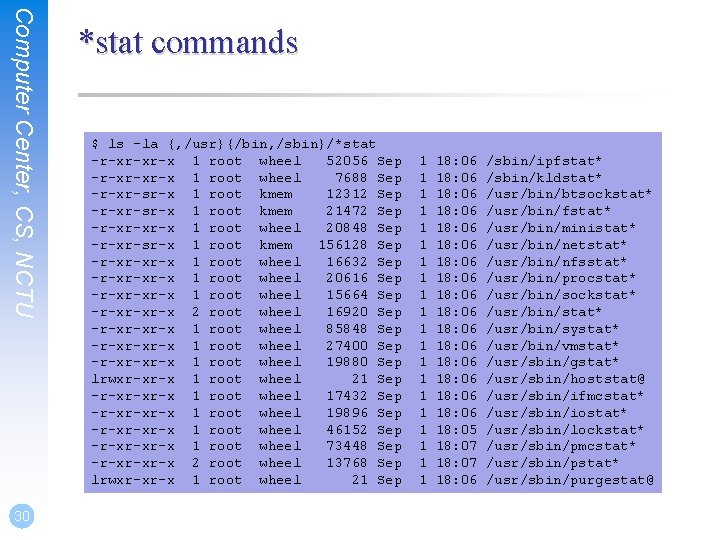

*stat commands

    $ ls -la {,/usr}{/bin,/sbin}/*stat
    /sbin/ipfstat*
    /sbin/kldstat*
    /usr/bin/btsockstat*
    /usr/bin/fstat*
    /usr/bin/ministat*
    /usr/bin/netstat*
    /usr/bin/nfsstat*
    /usr/bin/procstat*
    /usr/bin/sockstat*
    /usr/bin/systat*
    /usr/bin/vmstat*
    /usr/sbin/gstat*
    /usr/sbin/hoststat@
    /usr/sbin/ifmcstat*
    /usr/sbin/iostat*
    /usr/sbin/lockstat*
    /usr/sbin/pmcstat*
    /usr/sbin/purgestat@

Further Reading

- Help! My system is slow!
  - http://people.freebsd.org/~kris/scaling/Help_my_system_is_slow.pdf
  - Further topics
    - Device I/O
    - Tracing system calls used by an individual process
    - Activity inside the kernel
      - Lock profiling
      - Sleepqueue profiling
      - Hardware performance counters
      - Kernel tuning
    - Benchmarking techniques
      - ministat(1)