ORNL Net100 status July 31, 2002 ORNL

- Slides: 12

ORNL Net100 status July 31, 2002

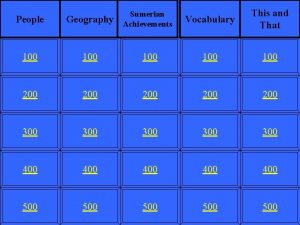

ORNL Net100

- Focus areas (first year)
  - TCP optimizations (WAD, AIMD, VMSS, ns, atou)
  - Network tool evaluation (iperf, webd, traced, java/Web100)
  - Bulk transfer optimization, GridFTP (LBL/NERSC/ORNL)
- Today’s agenda
  - Activities since Denver meeting
  - Current activities / WAD status
  - Future work/needs

U.S. Department of Energy, Oak Ridge National Laboratory, UT-BATTELLE

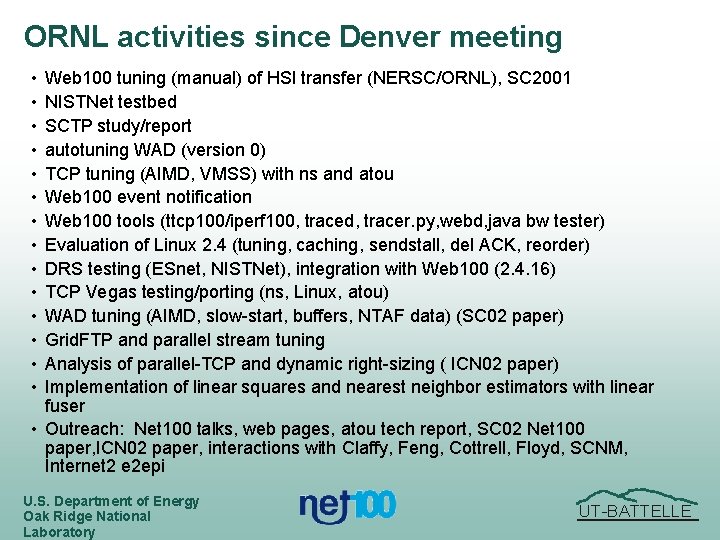

ORNL activities since Denver meeting

- Web100 tuning (manual) of HSI transfer (NERSC/ORNL), SC2001
- NISTNet testbed
- SCTP study/report
- autotuning WAD (version 0)
- TCP tuning (AIMD, VMSS) with ns and atou
- Web100 event notification
- Web100 tools (ttcp100/iperf100, traced, tracer.py, webd, java bw tester)
- Evaluation of Linux 2.4 (tuning, caching, sendstall, delayed ACK, reorder)
- DRS testing (ESnet, NISTNet), integration with Web100 (2.4.16)
- TCP Vegas testing/porting (ns, Linux, atou)
- WAD tuning (AIMD, slow-start, buffers, NTAF data) (SC02 paper)
- GridFTP and parallel stream tuning
- Analysis of parallel-TCP and dynamic right-sizing (ICN02 paper)
- Implementation of linear squares and nearest neighbor estimators with linear fuser
- Outreach: Net100 talks, web pages, atou tech report, SC02 Net100 paper, ICN02 paper, interactions with Claffy, Feng, Cottrell, Floyd, SCNM, Internet2 e2epi

Web100 tools

- Post-transfer statistics
  - Java bandwidth tester (53% have pkt loss)
  - ttcp100/iperf100
  - Web100 daemon
    - avoid modifying applications
    - log designated paths/ports/variables
- Tracer daemon
  - collect Web100 variables at 0.1 second intervals
  - config file specifies
    - source/port dest/port
    - Web100 variables (current/delta)
  - log to disk with timestamp and CID
  - plot/analyze flows/aggregates
  - C and python (LBL-based)

traced config file:

    # traced config file
    #local lport remote rport
    0.0 0 124.55.182.7 0
    0.0 0 134.67.45.9 0
    #v=value d=delta
    d PktsOut
    d PktsRetrans
    v CurrentCwnd
    v SampledRTT
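The value/delta distinction in the tracer config can be illustrated with a short Python sketch. The config layout follows the slide's example. The function names `parse_traced_config` and `make_record`, and the plain dictionaries standing in for two successive Web100 reads, are assumptions made for illustration and are not taken from the actual traced source.

```python
def parse_traced_config(text):
    """Split a traced-style config into flow filters and variable specs."""
    flows, variables = [], []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        if len(fields) == 2 and fields[0] in ("v", "d"):
            # "v NAME" logs the current value, "d NAME" logs the change
            variables.append((fields[0], fields[1]))
        elif len(fields) == 4:
            local, lport, remote, rport = fields
            flows.append((local, int(lport), remote, int(rport)))
        else:
            raise ValueError("unrecognized config line: " + raw)
    return flows, variables


def make_record(variables, previous, current):
    """Build one log record from two successive snapshots of a connection."""
    record = {}
    for mode, name in variables:
        if mode == "d":
            record[name] = current[name] - previous[name]
        else:
            record[name] = current[name]
    return record


CONFIG = """
#local lport remote rport
0.0 0 124.55.182.7 0
0.0 0 134.67.45.9 0
#v=value d=delta
d PktsOut
d PktsRetrans
v CurrentCwnd
v SampledRTT
"""

flows, variables = parse_traced_config(CONFIG)
# Hypothetical snapshots taken 0.1 s apart (made-up numbers)
before = {"PktsOut": 1000, "PktsRetrans": 3, "CurrentCwnd": 64000, "SampledRTT": 80}
after = {"PktsOut": 1250, "PktsRetrans": 5, "CurrentCwnd": 72000, "SampledRTT": 82}
record = make_record(variables, before, after)
print(record)
```

Counters such as `PktsOut` are more useful as per-interval deltas, while instantaneous quantities such as `CurrentCwnd` are logged as-is, which is presumably why the config marks each variable with `d` or `v`.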

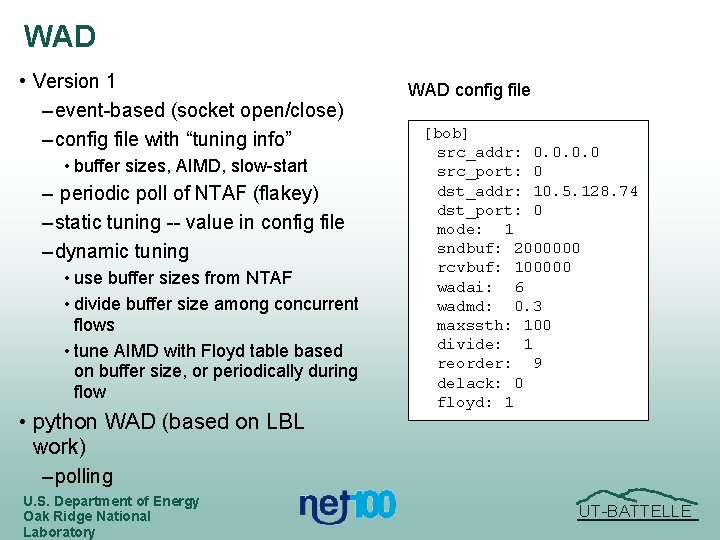

WAD

- Version 1
  - event-based (socket open/close)
  - config file with “tuning info”
    - buffer sizes, AIMD, slow-start
  - periodic poll of NTAF (flaky)
  - static tuning: value in config file
  - dynamic tuning
    - use buffer sizes from NTAF
    - divide buffer size among concurrent flows
    - tune AIMD with Floyd table based on buffer size, or periodically during flow
- python WAD (based on LBL work)
  - polling

WAD config file:

    [bob]
    src_addr: 0.0
    src_port: 0
    dst_addr: 10.5.128.74
    dst_port: 0
    mode: 1
    sndbuf: 2000000
    rcvbuf: 100000
    wadai: 6
    wadmd: 0.3
    maxssth: 100
    divide: 1
    reorder: 9
    delack: 0
    floyd: 1
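As a rough illustration of how such a config entry could drive tuning, the sketch below reads the `[bob]` entry with Python's standard `configparser` and splits the send buffer among concurrent flows. The key names come from the slide. Treating `divide: 1` as "split the buffer evenly across concurrent flows", and the helper names `load_entry` and `per_flow_buffer`, are assumptions and not the WAD's actual logic.

```python
import configparser

WAD_CONFIG = """
[bob]
src_addr: 0.0
src_port: 0
dst_addr: 10.5.128.74
dst_port: 0
mode: 1
sndbuf: 2000000
rcvbuf: 100000
wadai: 6
wadmd: 0.3
maxssth: 100
divide: 1
reorder: 9
delack: 0
floyd: 1
"""


def load_entry(text, name):
    """Read one named tuning entry from a WAD-style config."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    section = parser[name]
    return {
        "dst_addr": section.get("dst_addr"),
        "sndbuf": section.getint("sndbuf"),
        "rcvbuf": section.getint("rcvbuf"),
        "wadai": section.getint("wadai"),      # additive increase, segments per RTT
        "wadmd": section.getfloat("wadmd"),    # multiplicative decrease factor
        "divide": section.getint("divide") != 0,
    }


def per_flow_buffer(total_bytes, concurrent_flows, divide):
    """Assumed policy: share the tuned buffer evenly when 'divide' is set."""
    if divide and concurrent_flows > 1:
        return total_bytes // concurrent_flows
    return total_bytes


entry = load_entry(WAD_CONFIG, "bob")
share = per_flow_buffer(entry["sndbuf"], 4, entry["divide"])
print(entry["dst_addr"], share)
```

With four concurrent flows to the same destination, each flow would get a quarter of the 2,000,000-byte send buffer under this assumed policy.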

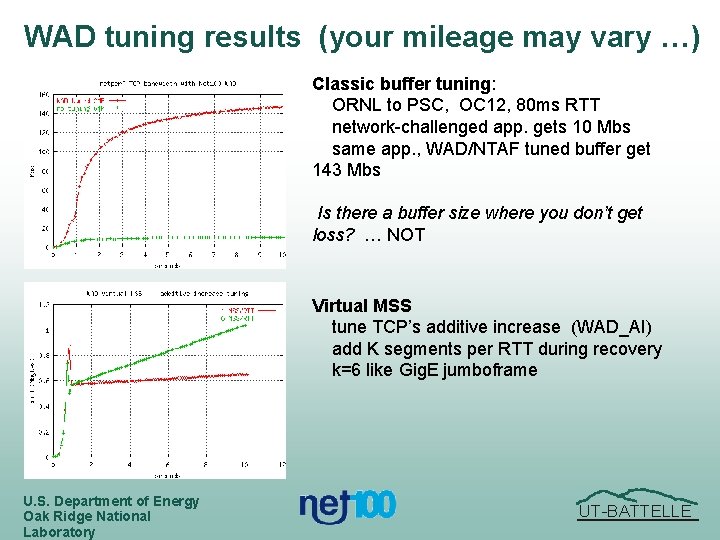

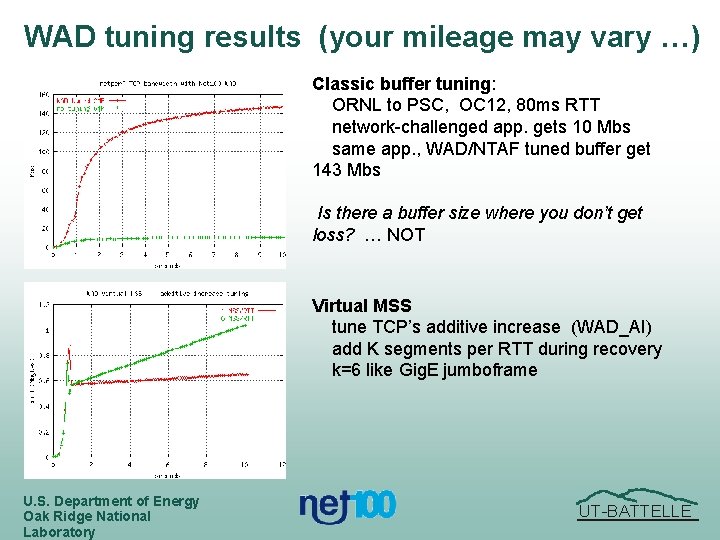

WAD tuning results (your mileage may vary …)

- Classic buffer tuning: ORNL to PSC, OC12, 80 ms RTT
  - network-challenged app gets 10 Mb/s
  - same app with WAD/NTAF-tuned buffer gets 143 Mb/s
  - Is there a buffer size where you don’t get loss? … NOT
- Virtual MSS
  - tune TCP’s additive increase (WAD_AI)
  - add K segments per RTT during recovery
  - K=6 is like GigE jumbo frame
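The arithmetic behind buffer tuning and the virtual MSS can be sketched as follows. The OC-12 line rate (622.08 Mb/s) and the 80 ms RTT match the path on the slide. The 1460-byte MSS, the 64 KB default window, and the simple "halve the window, then add K segments per RTT" recovery model are assumptions for illustration, so the outputs are rough estimates and not measurements.

```python
def bdp_bytes(rate_bps, rtt_s):
    """Bandwidth-delay product: bytes that must be in flight to fill the path."""
    return rate_bps * rtt_s / 8.0


def window_limited_mbps(window_bytes, rtt_s):
    """Throughput ceiling (Mb/s) when at most one window is sent per RTT."""
    return window_bytes * 8.0 / rtt_s / 1e6


def recovery_seconds(window_segments, rtt_s, k=1):
    """Time to climb from half the window back to full at k segments per RTT."""
    rtts = (window_segments / 2.0) / k
    return rtts * rtt_s


OC12_BPS = 622.08e6   # OC-12 line rate; usable payload rate is somewhat lower
RTT = 0.080           # seconds
MSS = 1460            # bytes, assumed

bdp = bdp_bytes(OC12_BPS, RTT)
segments = bdp / MSS
print("BDP: %.1f MB" % (bdp / 1e6))
print("64 KB window ceiling: %.1f Mb/s" % window_limited_mbps(65536, RTT))
print("recovery, K=1: %.0f s" % recovery_seconds(segments, RTT, 1))
print("recovery, K=6: %.0f s" % recovery_seconds(segments, RTT, 6))
```

Under these assumptions the path needs roughly 6 MB of buffer, a 64 KB window caps throughput at single-digit Mb/s whatever the link speed, and raising the additive increase from 1 to 6 segments per RTT shortens the recovery from a single loss by the same factor of six.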

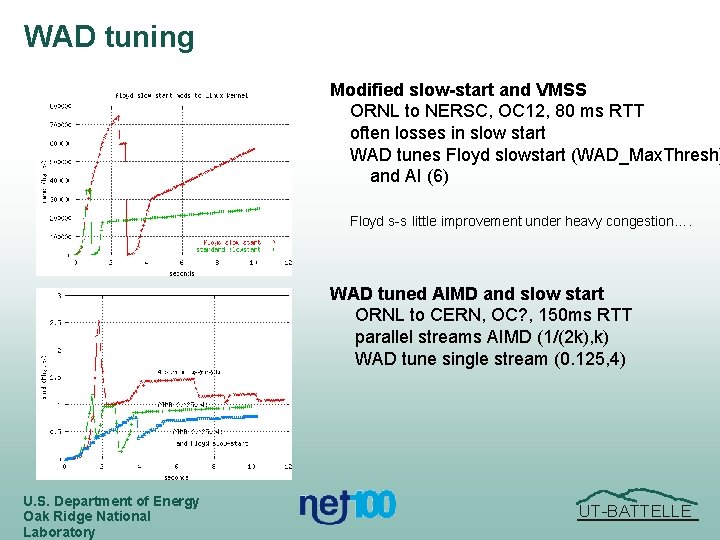

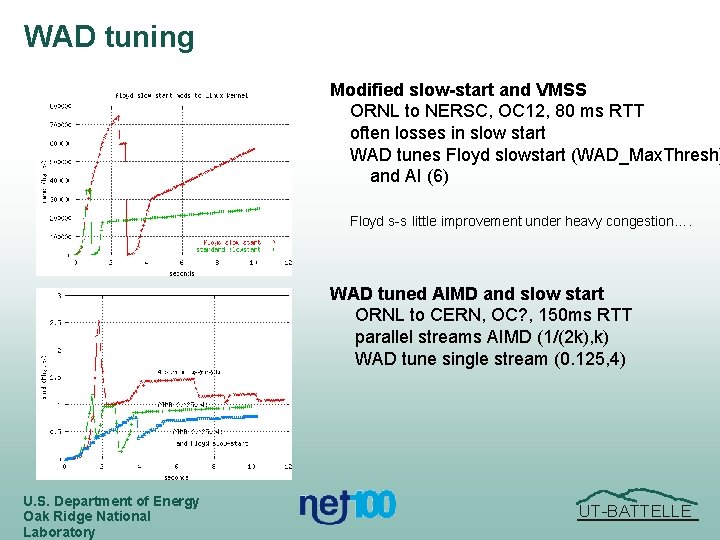

WAD tuning

- Modified slow-start and VMSS: ORNL to NERSC, OC12, 80 ms RTT
  - often losses in slow start
  - WAD tunes Floyd slow-start (WAD_MaxThresh) and AI (6)
  - Floyd slow-start gives little improvement under heavy congestion …
- WAD-tuned AIMD and slow start: ORNL to CERN, OC?, 150 ms RTT
  - parallel streams: AIMD (1/(2k), k)
  - WAD tunes single stream to (0.125, 4)
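The "parallel equivalent" setting follows from a simple argument: with k streams sharing a window W, one loss halves a single stream and so removes W/(2k) from the aggregate, while each stream adds one segment per RTT for k in total. A minimal Python sketch of that mapping is shown below. The function names are hypothetical, and the one-step window update is a textbook AIMD model, not the kernel's code.

```python
def parallel_equivalent_aimd(k):
    """Return (multiplicative decrease, additive increase) matching k streams."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return 1.0 / (2 * k), k


def next_cwnd(cwnd, loss, md, ai):
    """One RTT of AIMD: cut by fraction md on loss, else add ai segments."""
    if loss:
        return cwnd * (1.0 - md)
    return cwnd + ai


md, ai = parallel_equivalent_aimd(4)
print(md, ai)
print(next_cwnd(1000, True, md, ai), next_cwnd(1000, False, md, ai))
```

For k = 4 this gives (0.125, 4), the single-stream setting listed for the ORNL to CERN test.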

WAD tuning: Floyd AIMD

- adjust AIMD as function of cwnd (loss assum…)
  - bigger cwnd: bigger increment, smaller reduction
  - tested with ns and atou (continuous)
- WAD implementation
  - pre-tune based on target buffer size (aggre…)
  - continuous tuning (0.1 second)
  - discrete rather than continuous
  - add to Linux 2.4 (soon)
- How to select AIMD?
  - Jumbo, parallel equivalent, Floyd, others?
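One way to picture a cwnd-dependent AIMD table is sketched below in Python. It assumes that "Floyd AIMD" refers to the response function of Sally Floyd's HighSpeed TCP proposal (later published as RFC 3649) and uses that document's default parameters. The table granularity and the `lookup` helper are hypothetical and only illustrate the "discrete rather than continuous" idea. This is not the WAD's code.

```python
import math
from bisect import bisect_right

# Default parameters from the HighSpeed TCP specification (RFC 3649)
LOW_WINDOW = 38       # below this, behave like standard TCP
HIGH_WINDOW = 83000
HIGH_DECREASE = 0.1


def loss_rate(w):
    """Loss rate p(w) at which the response function sustains window w."""
    return 0.078 / w ** 1.2


def decrease(w):
    """Multiplicative decrease b(w): 0.5 at LOW_WINDOW, 0.1 at HIGH_WINDOW."""
    if w <= LOW_WINDOW:
        return 0.5
    frac = (math.log(w) - math.log(LOW_WINDOW)) / (
        math.log(HIGH_WINDOW) - math.log(LOW_WINDOW))
    return (HIGH_DECREASE - 0.5) * frac + 0.5


def increase(w):
    """Additive increase a(w) in segments per RTT."""
    if w <= LOW_WINDOW:
        return 1.0
    b = decrease(w)
    return w * w * loss_rate(w) * 2.0 * b / (2.0 - b)


def build_table(windows):
    """Precompute (window, a, b) rows so tuning is a discrete lookup."""
    return [(w, increase(w), decrease(w)) for w in sorted(windows)]


def lookup(table, cwnd):
    """Return (a, b) from the last row whose window does not exceed cwnd."""
    keys = [row[0] for row in table]
    i = max(bisect_right(keys, cwnd) - 1, 0)
    _, a, b = table[i]
    return a, b


table = build_table([38, 1000, 10000, 83000])
for w, a, b in table:
    print("cwnd=%6d  a=%6.2f  b=%.3f" % (w, a, b))
```

The printed rows show the qualitative behavior named on the slide: as cwnd grows, the increment grows and the reduction shrinks.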

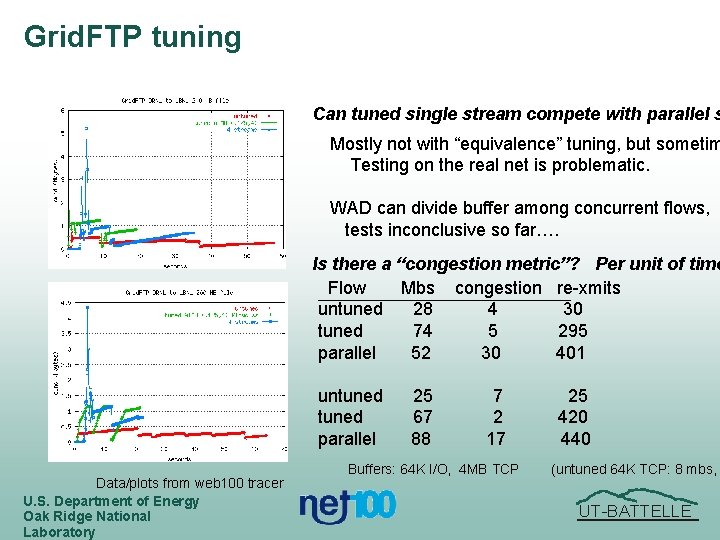

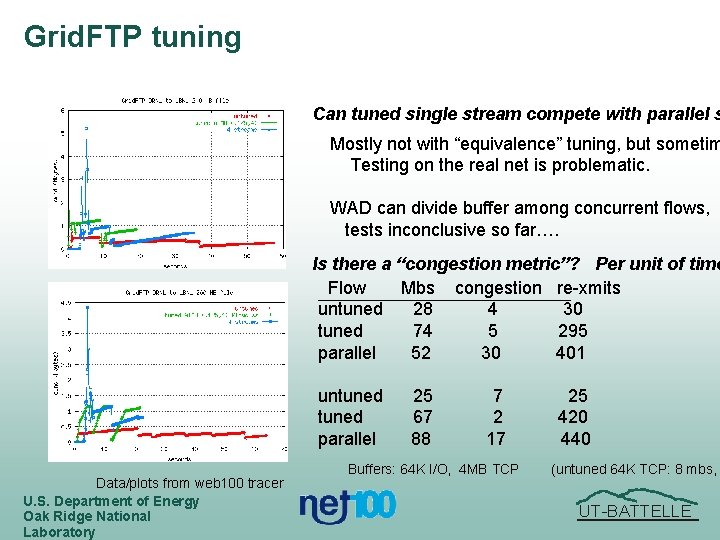

GridFTP tuning

- Can a tuned single stream compete with parallel streams?
  - Mostly not with “equivalence” tuning, but sometimes …
  - Testing on the real net is problematic.
- WAD can divide buffer among concurrent flows; tests inconclusive so far …
- Is there a “congestion metric”?

Per unit of time:

| Flow | Mb/s | congestion | re-xmits |
| --- | --- | --- | --- |
| untuned | 28 | 4 | 30 |
| tuned | 74 | 5 | 295 |
| parallel | 52 | 30 | 401 |

untuned parallel: 25 67 88; 7 2 17; 25 420 440

Buffers: 64 KB I/O, 4 MB TCP (untuned 64 KB TCP: 8 Mb/s, …

Data/plots from Web100 tracer

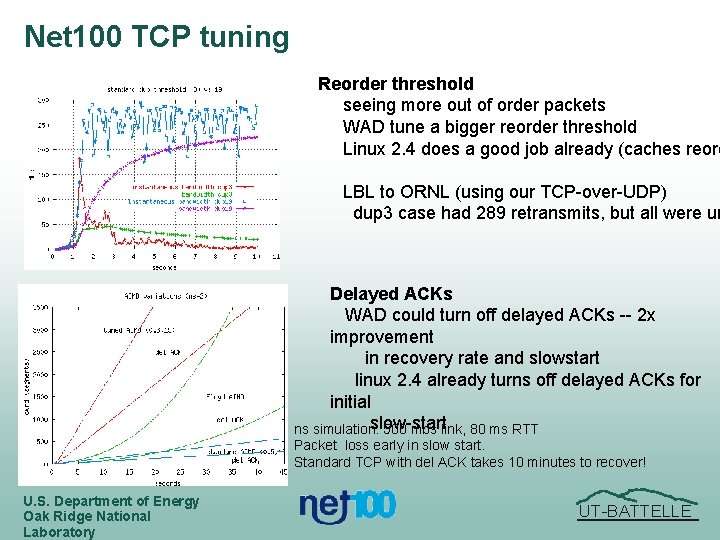

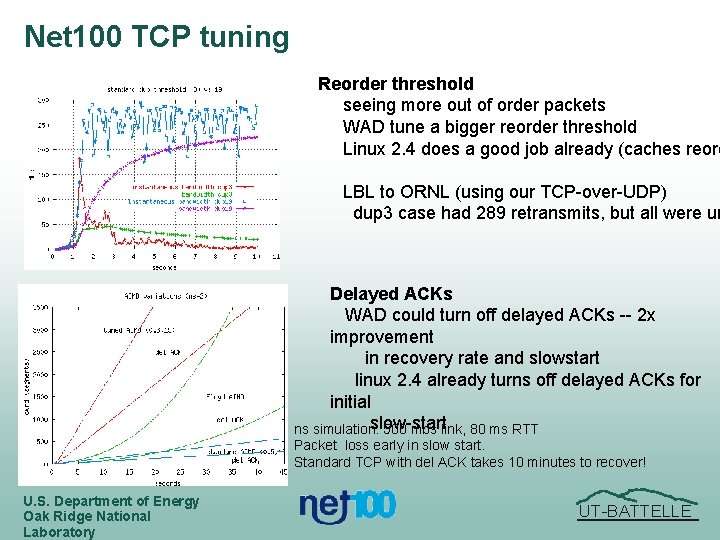

Net100 TCP tuning

- Reorder threshold
  - seeing more out-of-order packets
  - WAD can tune a bigger reorder threshold
  - Linux 2.4 does a good job already (caches reord…)
  - LBL to ORNL (using our TCP-over-UDP): dup 3 case had 289 retransmits, but all were un…
- Delayed ACKs
  - WAD could turn off delayed ACKs: 2x improvement in recovery rate and slow-start
  - Linux 2.4 already turns off delayed ACKs for initial slow-start
- ns simulation: 500 Mb/s link, 80 ms RTT
  - Packet loss early in slow start.
  - Standard TCP with delayed ACK takes 10 minutes to recover!
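A back-of-the-envelope estimate shows why delayed ACKs hurt so much on this kind of path. The Python sketch below assumes that a loss early in slow start leaves the sender in linear congestion avoidance from a very small window, that the window then grows by one segment per RTT with an ACK for every packet and half a segment per RTT with an ACK for every second packet, and that packets are 1500 bytes. These are modeling assumptions, not the parameters of the ns run.

```python
def full_window_packets(rate_bps, rtt_s, packet_bytes):
    """Packets in flight needed to fill the link."""
    return rate_bps * rtt_s / (8.0 * packet_bytes)


def linear_recovery_seconds(target_packets, start_packets, rtt_s, packets_per_ack):
    """Time for congestion avoidance to grow cwnd from start to target.

    With one ACK per `packets_per_ack` packets, cwnd grows by
    1 / packets_per_ack segments each RTT.
    """
    rtts = (target_packets - start_packets) * packets_per_ack
    return rtts * rtt_s


RATE = 500e6     # bits per second, from the slide
RTT = 0.080      # seconds, from the slide
PACKET = 1500    # bytes, assumed
START = 2        # segments left after the early loss, assumed

target = full_window_packets(RATE, RTT, PACKET)
with_delack = linear_recovery_seconds(target, START, RTT, 2)
without_delack = linear_recovery_seconds(target, START, RTT, 1)
print("window to fill link: %.0f packets" % target)
print("with delayed ACK:    %.1f min" % (with_delack / 60))
print("without delayed ACK: %.1f min" % (without_delack / 60))
```

Under these assumptions recovery takes roughly nine minutes with delayed ACKs and half that without, which is the same order as the ten minutes quoted from the simulation and consistent with the 2x improvement noted above.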

In progress ...

- WAD enhancement and testing
  - delayed ACK
  - Floyd AIMD (WAD and/or kernel)
  - tuning with NTAF data
  - distribution to other Net100 sites
- GridFTP tuning (ORNL/PSC/LBL)
- python WAD with netlink
- parallel stream tuning
- TCP optimization studies (AIMD, Vegas)
- Addition of postdoc

ORNL Yr 1 Milestones

1. Deploy Web100 at ORNL and NERSC nodes to develop Net100 expertise
2. Develop and demonstrate Web100-aware data transfer application for Probe/HPSS testing between NERSC and ORNL
3. Contribute to test and evaluation of existing end-to-end tools
4. Get access to ESnet ORNL and NERSC routers and investigate possible real-time feedback to application (e.g. using SNMP)
5. Explore transport optimizations for single TCP flows
6. Develop file transfer application/protocol to support out-of-order packet arrivals
7. Deploy a small emulator testbed to test transport protocol modifications and out-of-order resilient protocols/applications
8. Explore tuning the IBM/AIX 5.1 TCP stack and investigate extending it with Net100 mods
9. Test Net100 tools on ESnet's OC48 testbed
10. Publish tools and tips on web page and in formal publications and presentations