Intro to CADES: Compute and Data Environment for Science

- Slides: 8

Intro to CADES: Compute and Data Environment for Science

Ramie Wilkerson, Oak Ridge National Laboratory

ORNL is managed by UT-Battelle for the US Department of Energy

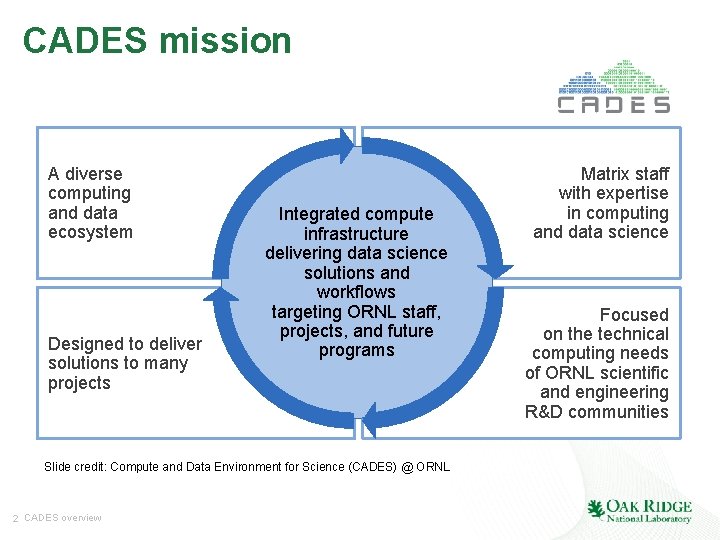

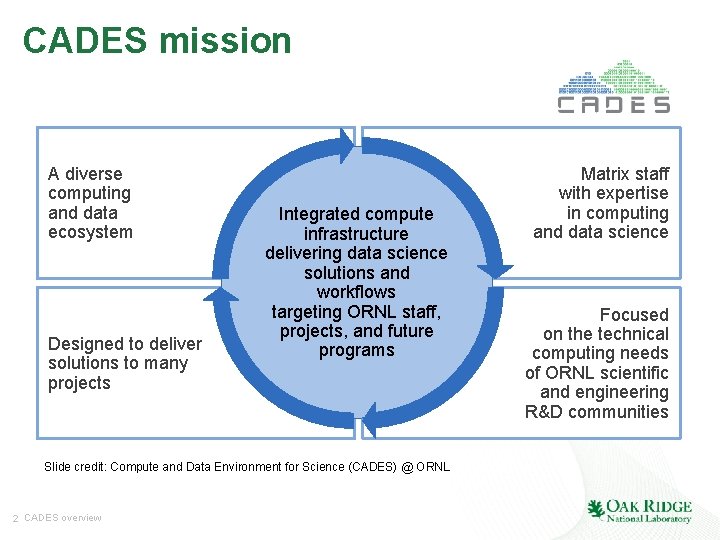

CADES mission

- A diverse computing and data ecosystem, designed to deliver solutions to many projects
- Integrated compute infrastructure delivering data science solutions and workflows to ORNL staff, projects, and future programs
- Matrixed staff with expertise in computing and data science
- Focused on the technical computing needs of ORNL's scientific and engineering R&D communities

Slide credit: Compute and Data Environment for Science (CADES) @ ORNL, CADES overview

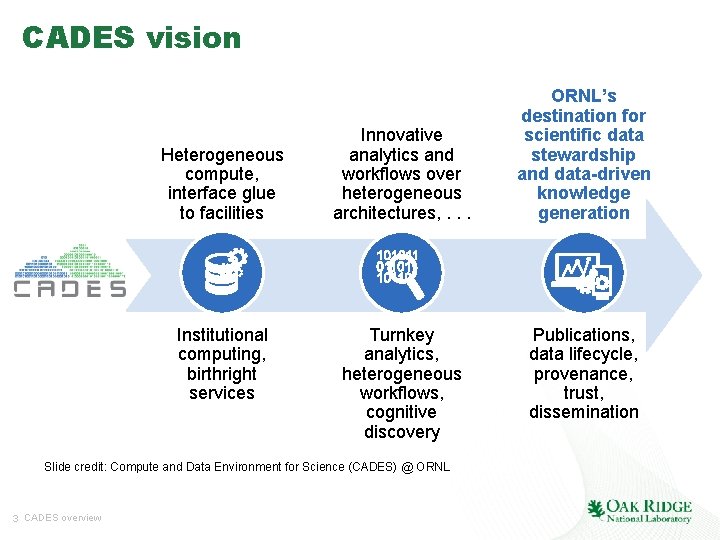

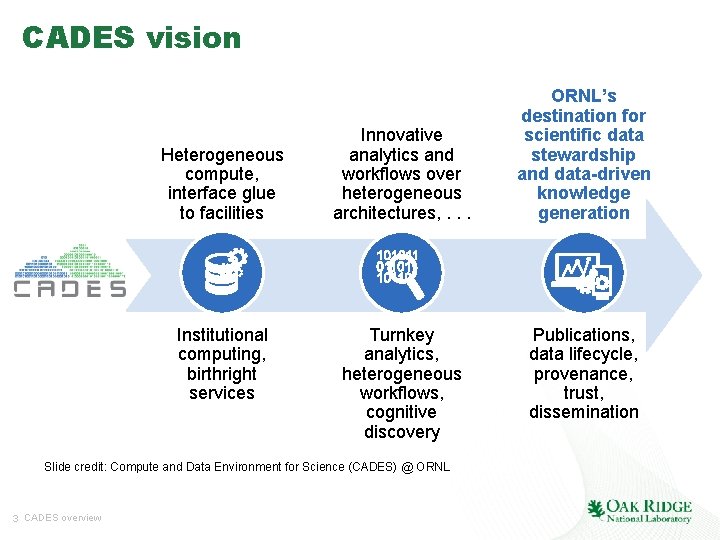

CADES vision

- Heterogeneous compute, interface glue to facilities
- Institutional computing, birthright services
- Innovative analytics and workflows over heterogeneous architectures, ...
- Turnkey analytics, heterogeneous workflows, cognitive discovery
- ORNL's destination for scientific data stewardship and data-driven knowledge generation: publications, data lifecycle, provenance, trust, dissemination

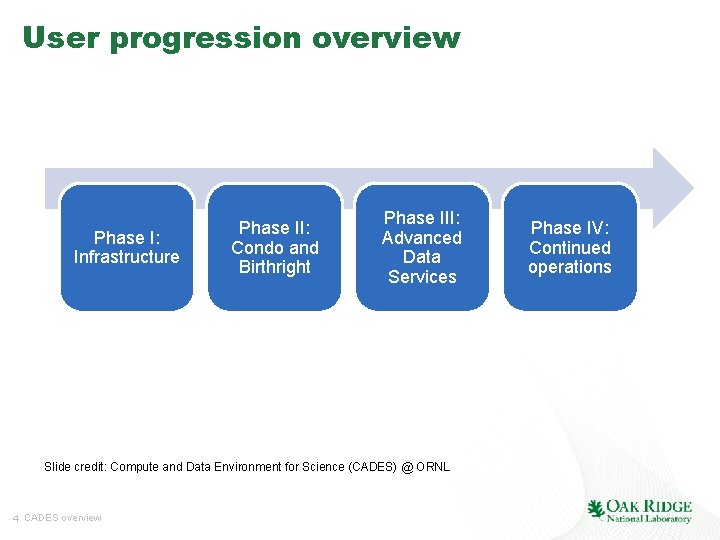

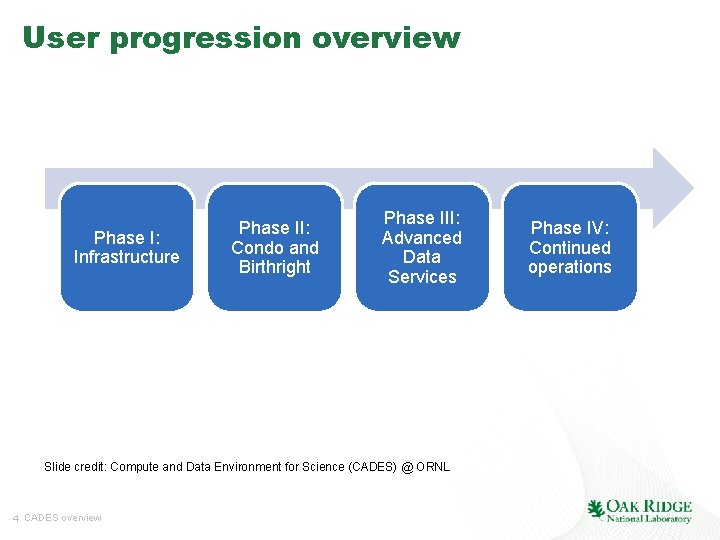

User progression overview

- Phase I: Infrastructure
- Phase II: Condo and Birthright
- Phase III: Advanced Data Services
- Phase IV: Continued operations

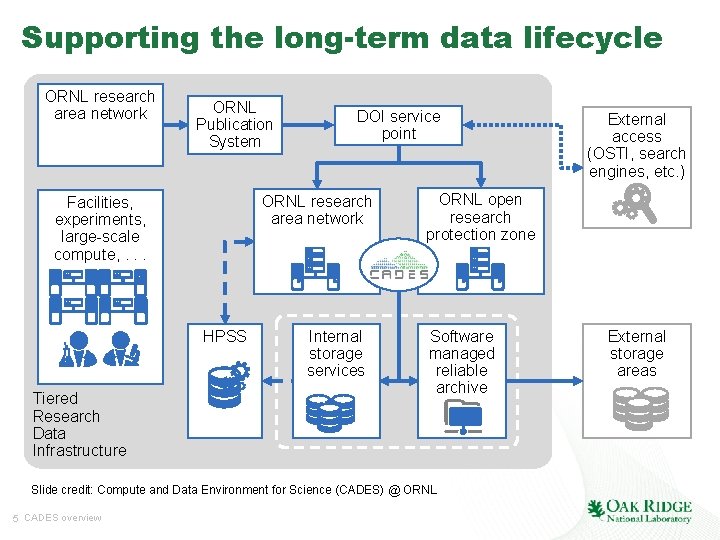

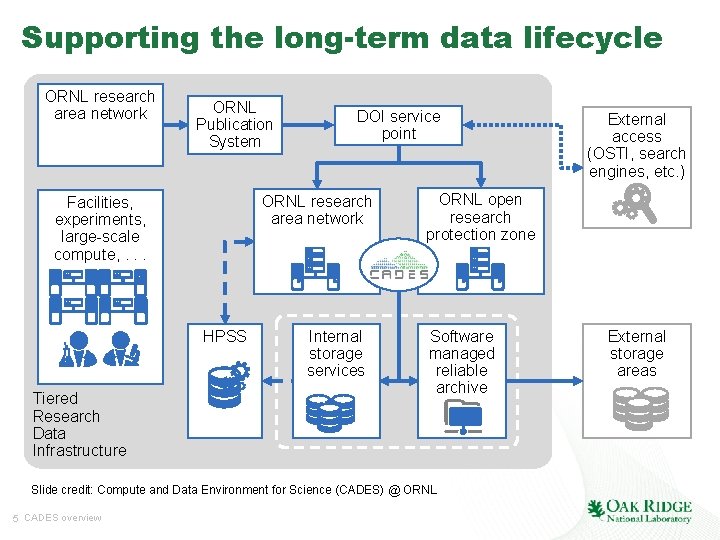

Supporting the long-term data lifecycle

Diagram components:

- ORNL research area network: facilities, experiments, large-scale compute, ...
- ORNL Publication System
- HPSS
- Tiered Research Data Infrastructure
- DOI service point
- Internal storage services
- ORNL open research protection zone
- Software-managed reliable archive
- External access (OSTI, search engines, etc.)
- External storage areas

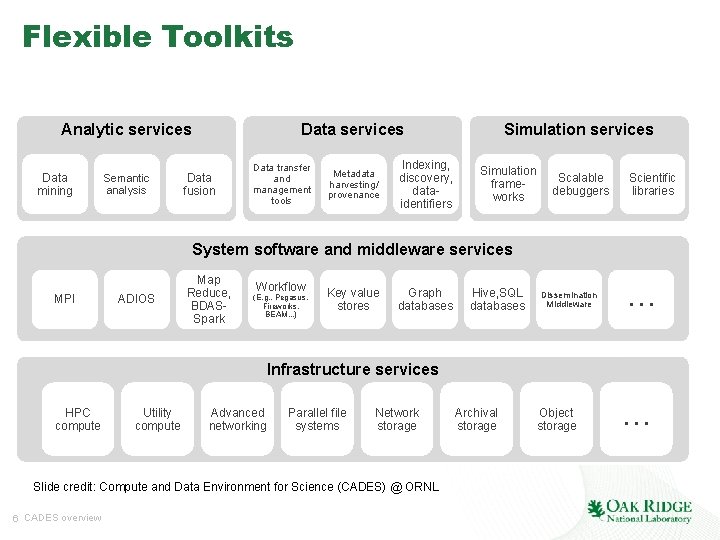

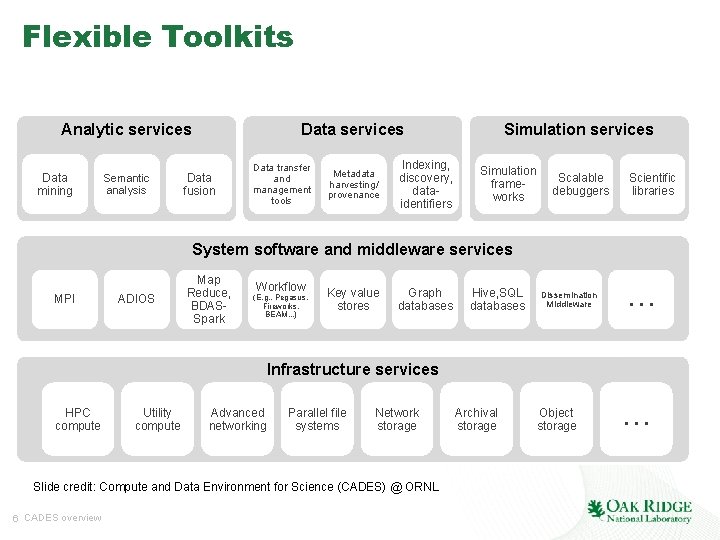

Flexible toolkits

- Analytic services: data mining, semantic analysis
- Data services: data fusion; data transfer and management tools; metadata harvesting/provenance; indexing, discovery, data identifiers; dissemination
- Simulation services: simulation frameworks, scalable debuggers, scientific libraries
- System software and middleware services: MPI, ADIOS, MapReduce, BDAS/Spark, workflow (e.g., Pegasus, FireWorks, BEAM, ...), key-value stores, graph databases, Hive, SQL databases, middleware, ...
- Infrastructure services: HPC compute, utility compute, advanced networking, parallel file systems, network storage, archival storage, object storage, ...

Some CADES systems on the floor today

- Heterogeneous compute (Titan entry point): Cray XK7 racks
  - Single-socket AMD Interlagos 16-core CPU per node
  - Single NVIDIA K20X (Kepler) GPU per node
  - 184 compute nodes; 2,944 total cores
  - 32 GB memory per node (+6 GB on the GPU)
- Graph: Cray Urika-GD graph analytics/query engine
  - 8,192 hardware threads
  - 2 TB memory
  - 125 TB local storage
- Heterogeneous memory/compute data analytics platform: Cray Urika-XA (Hadoop)
  - 504 cores
  - 5.4 TB memory
  - 576 TB local storage
- Private/hybrid cloud
  - 4,092 cores, 8 TB memory
  - 7 high-memory systems: 240 cores, 6 TB memory
- Condo compute (OIC): flexible, high-performance compute environment
  - 10,368 cores, 41 TB memory
  - Federated compute clusters, ~10,500 cores
- Shared memory compute: SGI UV 300
  - 96 Intel E7 cores (8 sockets, 12 cores each)
  - 6 TB memory
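The per-node figures on the systems slide imply the aggregate numbers it quotes. The short sketch below recomputes them as a consistency check. The constant names are hypothetical labels for values transcribed from the slide, and the aggregate memory totals it prints (host and GPU) are derived here by multiplication, not stated on the slide. It also shows that 8 sockets × 12 cores gives 96, which suggests the SGI UV 300's "96 Intel E7" figure counts cores rather than processors.

```python
# Hypothetical constant names; values transcribed from the CADES systems slide.
XK7_NODES = 184
XK7_CORES_PER_NODE = 16   # one 16-core AMD Interlagos socket per node
XK7_HOST_MEM_GB = 32      # host memory per node
XK7_GPU_MEM_GB = 6        # NVIDIA K20X memory per node

UV300_SOCKETS = 8
UV300_CORES_PER_SOCKET = 12


def totals():
    """Multiply per-node (or per-socket) figures out to system-wide totals."""
    return {
        "xk7_cores": XK7_NODES * XK7_CORES_PER_NODE,
        "xk7_host_mem_gb": XK7_NODES * XK7_HOST_MEM_GB,   # derived, not on the slide
        "xk7_gpu_mem_gb": XK7_NODES * XK7_GPU_MEM_GB,     # derived, not on the slide
        "uv300_cores": UV300_SOCKETS * UV300_CORES_PER_SOCKET,
    }


if __name__ == "__main__":
    for name, value in totals().items():
        print(f"{name}: {value:,}")
```

The computed XK7 core count (2,944) matches the slide's stated total, which is a quick way to confirm the node and core figures were transcribed correctly.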

Compute and Data Environment for Science … a popular destination with over 2 million visitors a year!