Oak Ridge Leadership Computing Facility (www.olcf.ornl.gov)

- Slides: 26

Oak Ridge Leadership Computing Facility
www.olcf.ornl.gov
Don Maxwell, HPC Technical Coordinator
October 8, 2010
Presented to: HPC User Forum, Stuttgart

Oak Ridge Leadership Computing Facility
• Mission: Deploy and operate the computational resources required to tackle global challenges
– Provide world-class computational resources and specialized services for the most computationally intensive problems
– Provide a stable hardware/software path of increasing scale to maximize productive applications development
– Deliver transforming discoveries in materials, biology, climate, energy technologies, etc.
– Provide the ability to investigate otherwise inaccessible systems, from supernovae to nuclear reactors to energy grid dynamics
Managed by UT-Battelle for the Department of Energy

Our vision for sustained leadership and scientific impact
• Provide the world’s most powerful open resource for capability computing
• Follow a well-defined path for maintaining world leadership in this critical area
• Attract the brightest talent and partnerships from all over the world
• Deliver cutting-edge science relevant to the missions of DOE and key federal and state agencies
• Unique opportunity for multi-agency collaboration for science based on synergy of requirements and technology

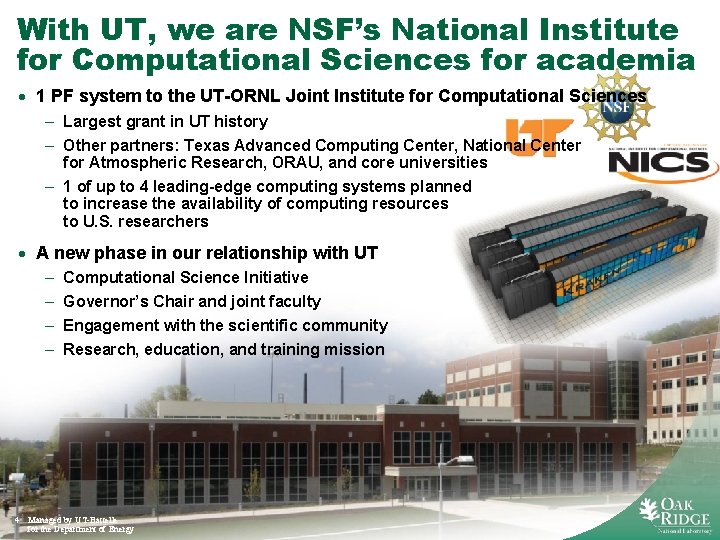

With UT, we are NSF’s National Institute for Computational Sciences for academia
• 1 PF system to the UT-ORNL Joint Institute for Computational Sciences
– Largest grant in UT history
– Other partners: Texas Advanced Computing Center, National Center for Atmospheric Research, ORAU, and core universities
– 1 of up to 4 leading-edge computing systems planned to increase the availability of computing resources to U.S. researchers
• A new phase in our relationship with UT
– Computational Science Initiative
– Governor’s Chair and joint faculty
– Engagement with the scientific community
– Research, education, and training mission

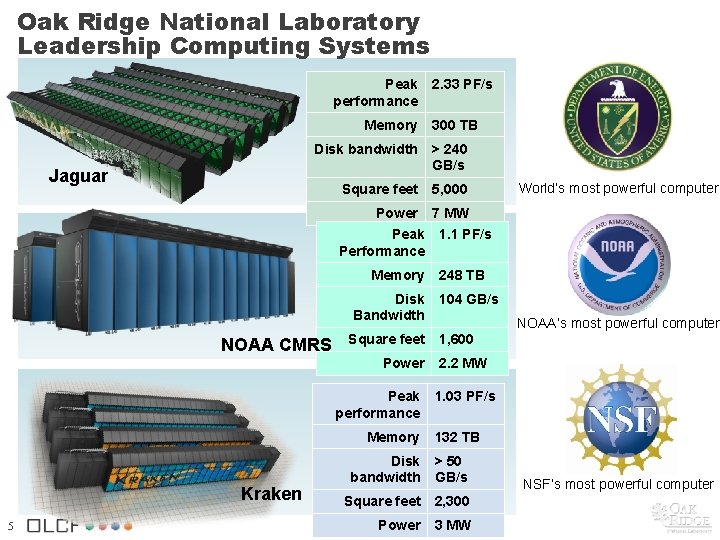

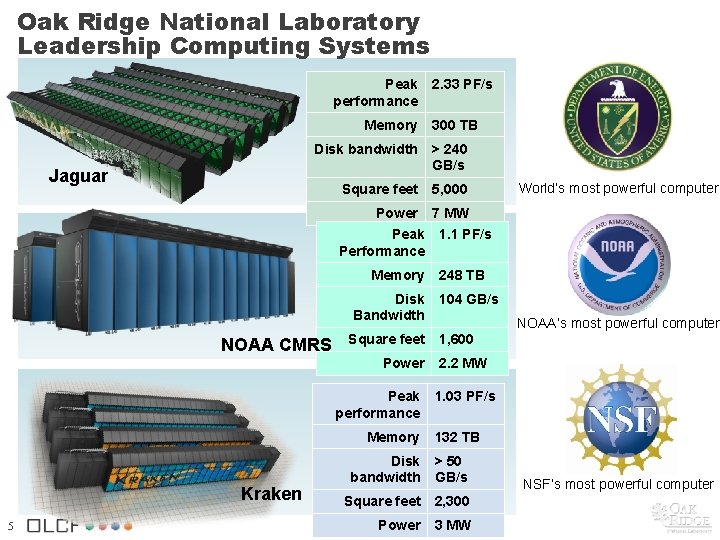

Oak Ridge National Laboratory Leadership Computing Systems

| | Jaguar | Kraken | NOAA CMRS |
|---|---|---|---|
| Description | World’s most powerful computer | NSF’s most powerful computer | NOAA’s most powerful computer |
| Peak performance | 2.33 PF/s | 1.03 PF/s | 1.1 PF/s |
| Memory | 300 TB | 132 TB | 248 TB |
| Disk bandwidth | > 240 GB/s | > 50 GB/s | 104 GB/s |
| Square feet | 5,000 | 2,300 | 1,600 |
| Power | 7 MW | 3 MW | 2.2 MW |

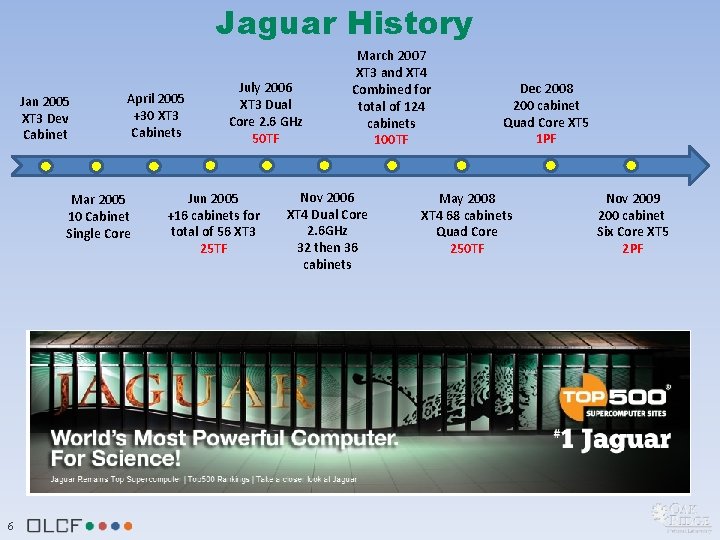

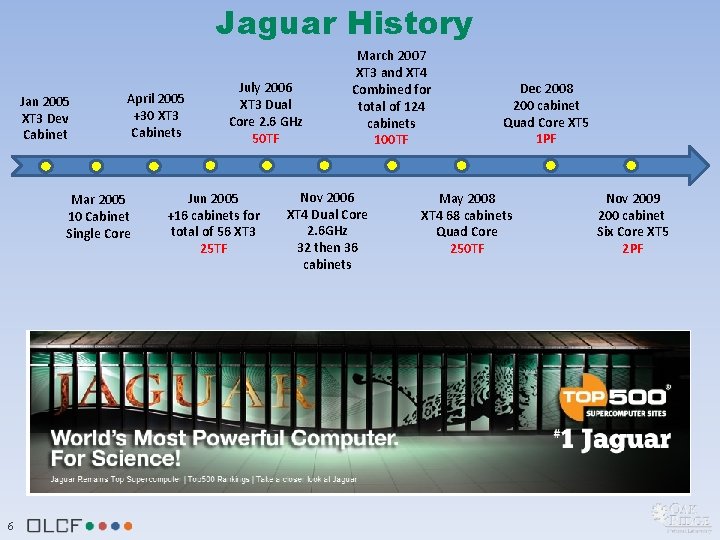

Jaguar History
• Jan 2005: XT3 dev cabinet
• Mar 2005: 10 cabinets, single core
• April 2005: +30 XT3 cabinets
• Jun 2005: +16 cabinets for a total of 56 XT3 cabinets, 25 TF
• July 2006: XT3 dual core, 2.6 GHz, 50 TF
• Nov 2006: XT4 dual core, 2.6 GHz, 32 then 36 cabinets
• March 2007: XT3 and XT4 combined for a total of 124 cabinets, 100 TF
• May 2008: XT4, 68 cabinets, quad core, 250 TF
• Dec 2008: 200-cabinet quad-core XT5, 1 PF
• Nov 2009: 200-cabinet six-core XT5, 2 PF

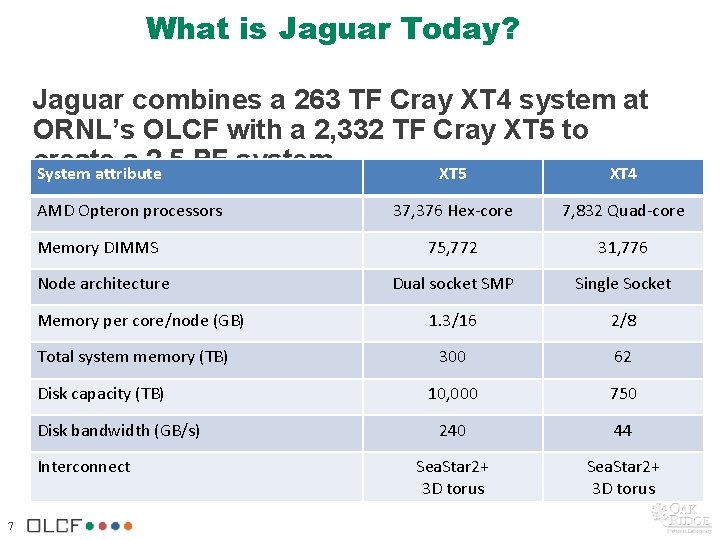

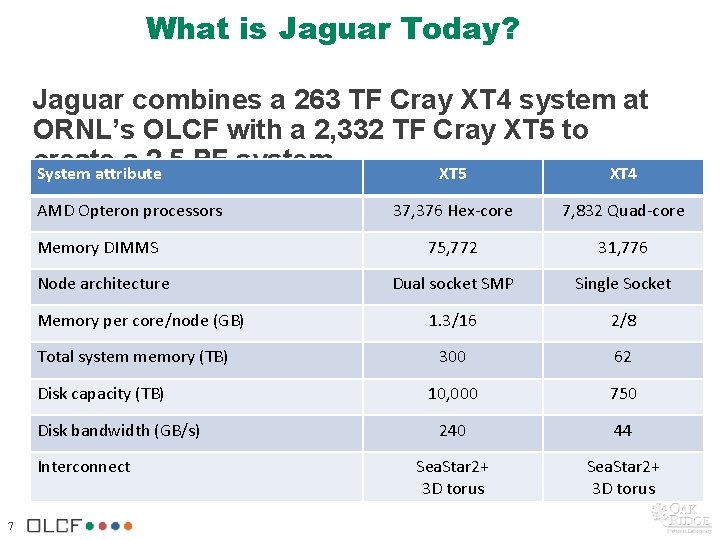

What is Jaguar Today?
Jaguar combines a 263 TF Cray XT4 system at ORNL’s OLCF with a 2,332 TF Cray XT5 to create a 2.5 PF system

| System attribute | XT5 | XT4 |
|---|---|---|
| AMD Opteron processors | 37,376 hex-core | 7,832 quad-core |
| Memory DIMMs | 75,772 | 31,776 |
| Node architecture | Dual-socket SMP | Single socket |
| Memory per core/node (GB) | 1.3/16 | 2/8 |
| Total system memory (TB) | 300 | 62 |
| Disk capacity (TB) | 10,000 | 750 |
| Disk bandwidth (GB/s) | 240 | 44 |
| Interconnect | SeaStar2+ 3D torus | SeaStar2+ 3D torus |
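The memory rows of this table can be cross-checked from the processor counts. The sketch below is only a consistency check on the slide's own figures; the function name `partition_summary` is hypothetical, and decimal terabytes (1 TB = 1000 GB) are an assumption.

```python
# Figures copied from the system-attribute table on this slide.
XT5_SOCKETS = 37_376  # hex-core AMD Opteron processors
XT4_SOCKETS = 7_832   # quad-core AMD Opteron processors


def partition_summary(sockets, cores_per_socket, sockets_per_node, gb_per_node):
    """Return (nodes, cores, total memory in TB) for one partition.

    Assumes decimal units (1 TB = 1000 GB); the slide does not say which it uses.
    """
    nodes = sockets // sockets_per_node
    cores = sockets * cores_per_socket
    memory_tb = nodes * gb_per_node / 1000
    return nodes, cores, memory_tb


# XT5: dual-socket nodes with 16 GB each; XT4: single-socket nodes with 8 GB each.
xt5_nodes, xt5_cores, xt5_mem_tb = partition_summary(XT5_SOCKETS, 6, 2, 16)
xt4_nodes, xt4_cores, xt4_mem_tb = partition_summary(XT4_SOCKETS, 4, 1, 8)

print(f"XT5: {xt5_nodes} nodes, {xt5_cores} cores, {xt5_mem_tb:.0f} TB")
print(f"XT4: {xt4_nodes} nodes, {xt4_cores} cores, {xt4_mem_tb:.0f} TB")
```

Under these assumptions the totals land close to the 300 TB and 62 TB quoted in the table, and 16 GB spread over 12 cores gives the 1.3 GB per core listed for the XT5.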

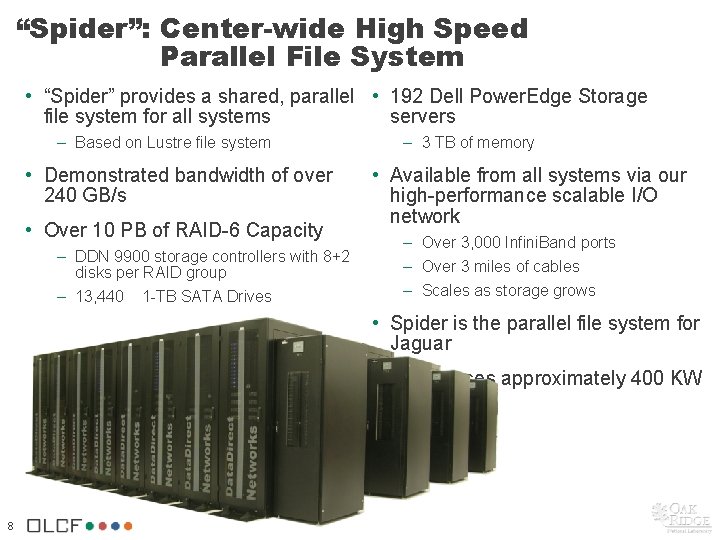

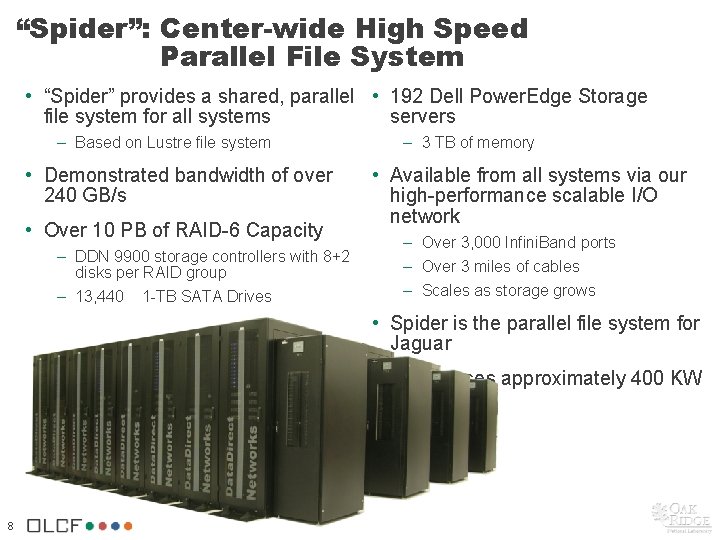

“Spider”: Center-wide High Speed Parallel File System
• “Spider” provides a shared, parallel file system for all systems
– Based on Lustre file system
• Demonstrated bandwidth of over 240 GB/s
• Over 10 PB of RAID-6 capacity
– DDN 9900 storage controllers with 8+2 disks per RAID group
– 13,440 1-TB SATA drives
• 192 Dell PowerEdge storage servers
– 3 TB of memory
• Available from all systems via our high-performance scalable I/O network
– Over 3,000 InfiniBand ports
– Over 3 miles of cables
– Scales as storage grows
• Spider is the parallel file system for Jaguar
• Spider uses approximately 400 kW of power
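The "over 10 PB" figure follows from the drive count and the 8+2 RAID-6 layout. A minimal sketch of that arithmetic, assuming every drive belongs to a full 10-disk group and ignoring file system overhead (the function name `raid6_usable_tb` is hypothetical):

```python
def raid6_usable_tb(drives, drive_tb, data_disks=8, parity_disks=2):
    """Usable capacity in TB when drives are organized into RAID-6 groups.

    Assumes every drive sits in a complete (data + parity) group and that
    formatting and file system overhead are ignored.
    """
    group_size = data_disks + parity_disks
    groups = drives // group_size
    return groups * data_disks * drive_tb


usable_tb = raid6_usable_tb(drives=13_440, drive_tb=1)
print(f"{usable_tb} TB usable, about {usable_tb / 1000:.2f} PB")
```

With 13,440 one-terabyte drives this gives 1,344 groups and roughly 10.75 PB of usable space, consistent with the slide.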

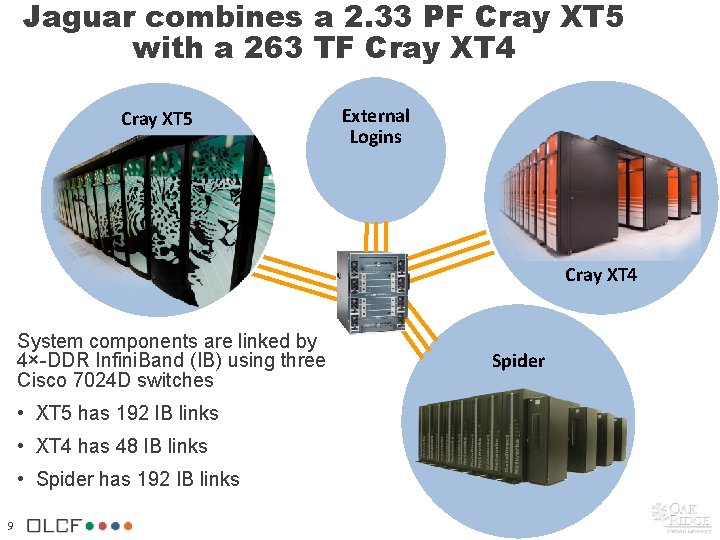

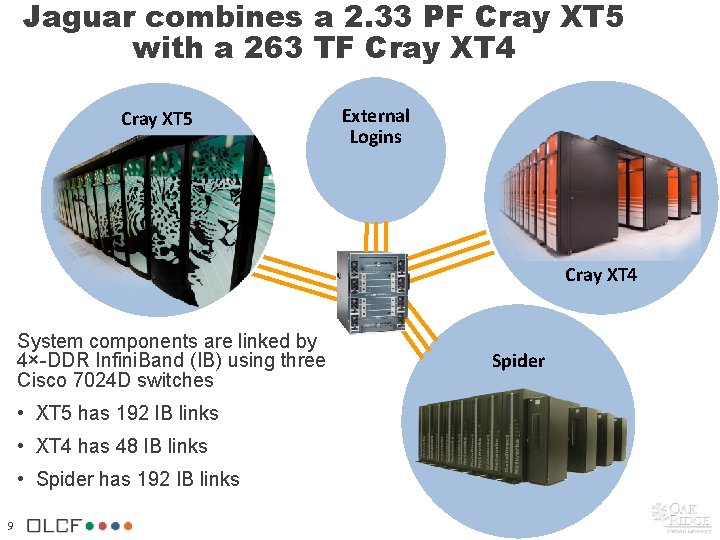

Jaguar combines a 2.33 PF Cray XT5 with a 263 TF Cray XT4
System components are linked by 4x DDR InfiniBand (IB) using three Cisco 7024D switches
• XT5 has 192 IB links
• XT4 has 48 IB links
• Spider has 192 IB links
[Diagram labels: Cray XT5, External Logins, Cray XT4, Spider]
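To put the link counts in context, the sketch below estimates the aggregate one-way data rate of each set of links. The per-link numbers are not from the slide: they assume the standard 4x DDR InfiniBand figures of 5 Gbit/s signaling per lane and 8b/10b encoding, and they ignore protocol overhead. The function name is hypothetical.

```python
def ib_link_gbytes_per_s(lanes=4, gbit_per_lane=5.0, encoding_efficiency=0.8):
    """One-way data rate of a single InfiniBand link in GB/s.

    Defaults assume 4x DDR: 4 lanes at 5 Gbit/s signaling with 8b/10b encoding.
    """
    return lanes * gbit_per_lane * encoding_efficiency / 8


# Link counts taken from the slide.
LINKS = {"XT5": 192, "XT4": 48, "Spider": 192}

per_link = ib_link_gbytes_per_s()
aggregate = {name: count * per_link for name, count in LINKS.items()}

for name, gbytes in aggregate.items():
    print(f"{name}: {gbytes:.0f} GB/s theoretical one-way aggregate")
```

Under these assumptions the 192 links on the XT5 and on Spider each allow a theoretical aggregate comfortably above the 240 GB/s demonstrated file system bandwidth quoted earlier in the deck.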

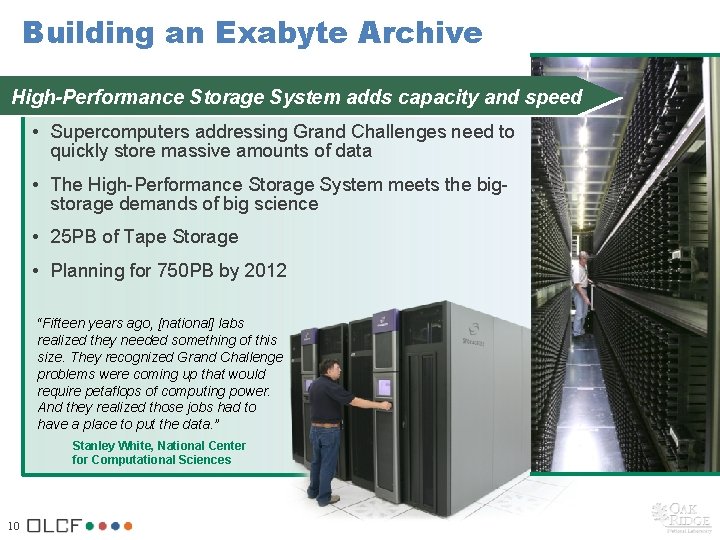

Building an Exabyte Archive
High-Performance Storage System adds capacity and speed
• Supercomputers addressing Grand Challenges need to quickly store massive amounts of data
• The High-Performance Storage System meets the big-storage demands of big science
• 25 PB of tape storage
• Planning for 750 PB by 2012
“Fifteen years ago, [national] labs realized they needed something of this size. They recognized Grand Challenge problems were coming up that would require petaflops of computing power. And they realized those jobs had to have a place to put the data.” Stanley White, National Center for Computational Sciences

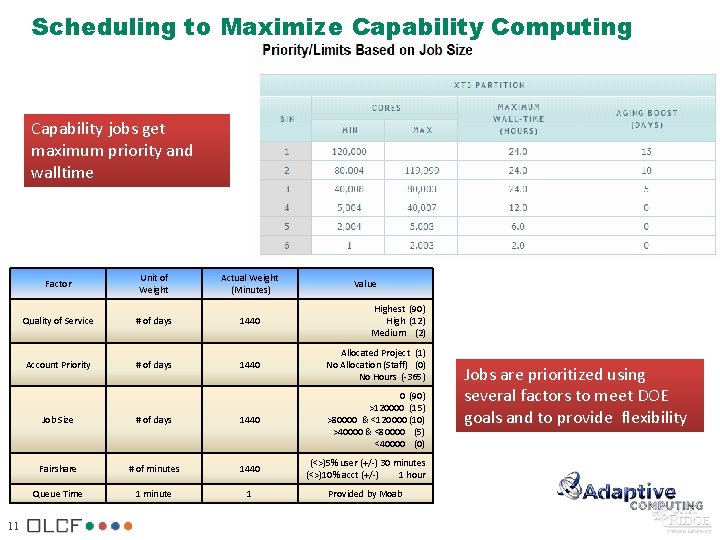

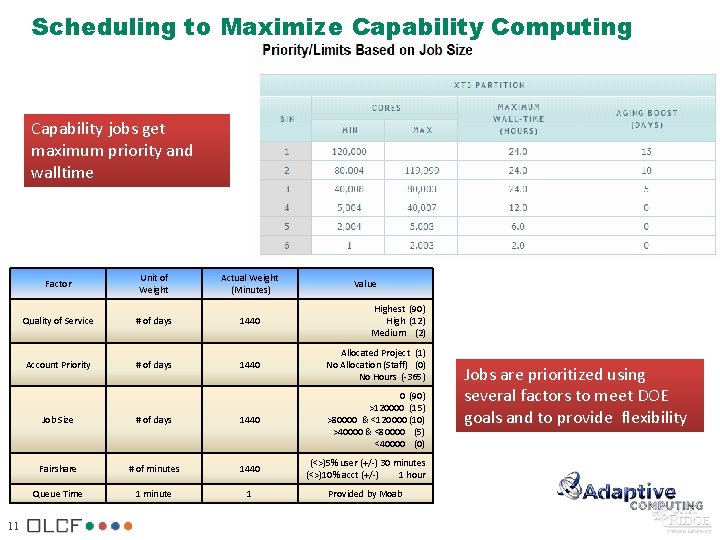

Scheduling to Maximize Capability Computing
Capability jobs get maximum priority and walltime
Jobs are prioritized using several factors to meet DOE goals and to provide flexibility

| Factor | Unit of Weight | Actual Weight (Minutes) | Value |
|---|---|---|---|
| Quality of Service | # of days | 1440 | Highest (90), High (12), Medium (2) |
| Account Priority | # of days | 1440 | Allocated Project (1), No Allocation (Staff) (0), No Hours (-365) |
| Job Size | # of days | 1440 | 0 (90), >120000 (15), >80000 & <120000 (10), >40000 & <80000 (5), <40000 (0) |
| Fairshare | # of minutes | 1440 | (<>) 5% user (+/-) 30 minutes, (<>) 10% acct (+/-) 1 hour |
| Queue Time | 1 minute | 1 | Provided by Moab |
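One way to read the table is as a weighted sum expressed in minutes of queue time, so that each "day" of priority is worth 1,440 minutes of waiting. The sketch below illustrates that reading only; it is not the actual Moab configuration. It omits the fairshare adjustment and the "0 (90)" job-size entry, treats bin boundaries as strict greater-than, and the key names (including the `"none"` quality-of-service level) are hypothetical.

```python
MINUTES_PER_DAY = 1440

# Values in days, copied from the table; "none" is a hypothetical default level.
QOS_DAYS = {"highest": 90, "high": 12, "medium": 2, "none": 0}
ACCOUNT_DAYS = {"allocated": 1, "staff": 0, "no_hours": -365}


def job_size_days(cores):
    """Priority boost in days for the requested core count (boundaries assumed)."""
    if cores > 120_000:
        return 15
    if cores > 80_000:
        return 10
    if cores > 40_000:
        return 5
    return 0


def priority_minutes(cores, queued_minutes, qos="none", account="allocated"):
    """Illustrative job priority: weighted factors plus one point per minute queued."""
    days = QOS_DAYS[qos] + ACCOUNT_DAYS[account] + job_size_days(cores)
    return days * MINUTES_PER_DAY + queued_minutes


big = priority_minutes(cores=150_000, queued_minutes=60)
small = priority_minutes(cores=10_000, queued_minutes=60)
print(big, small, (big - small) / MINUTES_PER_DAY)
```

Read this way, a job larger than 120,000 cores starts with the same priority a small job would reach only after waiting 15 extra days, which is how capability jobs move to the front of the queue.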

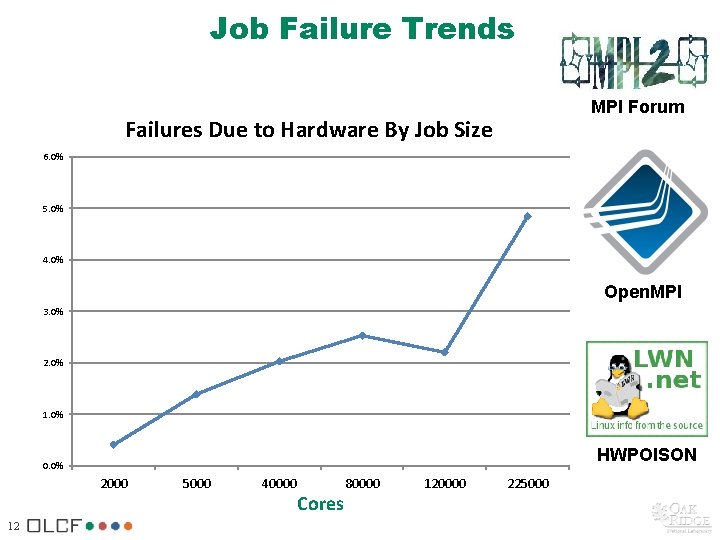

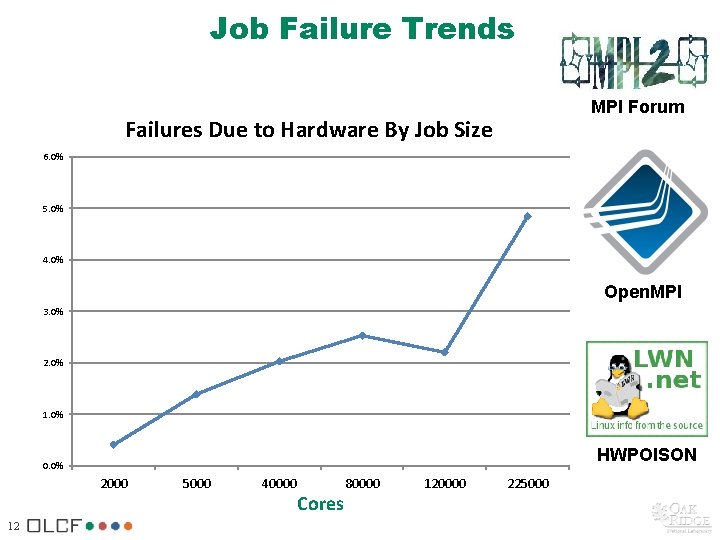

Job Failure Trends
[Chart: Failures Due to Hardware By Job Size. Y-axis: 0.0% to 6.0%. X-axis: cores (2000, 5000, 40000, 80000, 120000, 225000). Annotations: MPI Forum, Open MPI, HWPOISON]

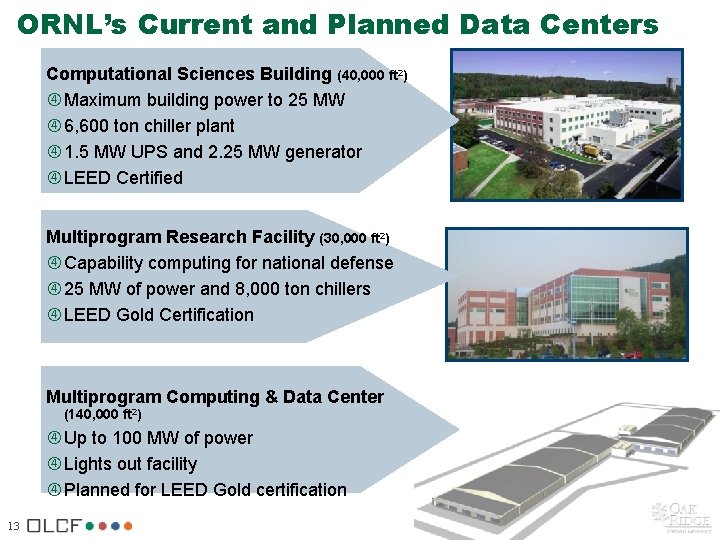

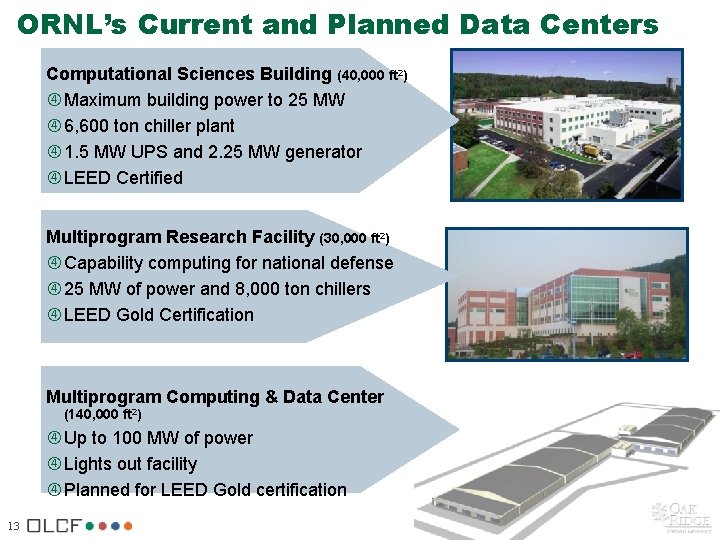

ORNL’s Current and Planned Data Centers
Computational Sciences Building (40,000 ft²)
• Maximum building power to 25 MW
• 6,600 ton chiller plant
• 1.5 MW UPS and 2.25 MW generator
• LEED Certified
Multiprogram Research Facility (30,000 ft²)
• Capability computing for national defense
• 25 MW of power and 8,000 ton chillers
• LEED Gold Certification
Multiprogram Computing & Data Center (140,000 ft²)
• Up to 100 MW of power
• Lights out facility
• Planned for LEED Gold certification

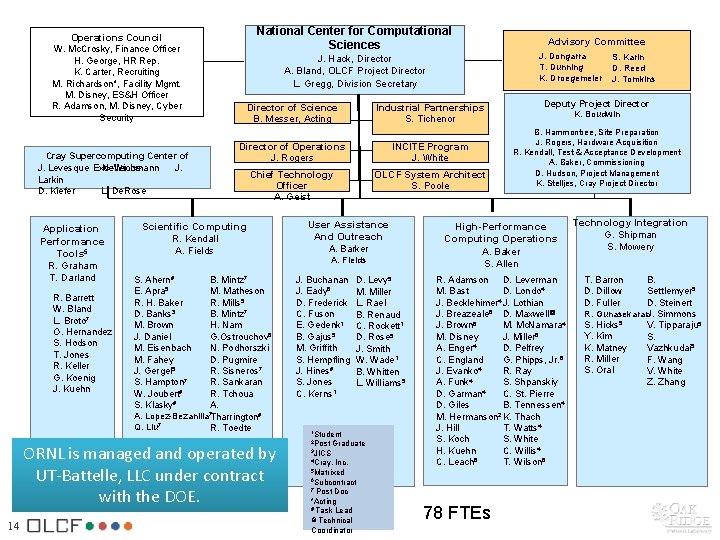

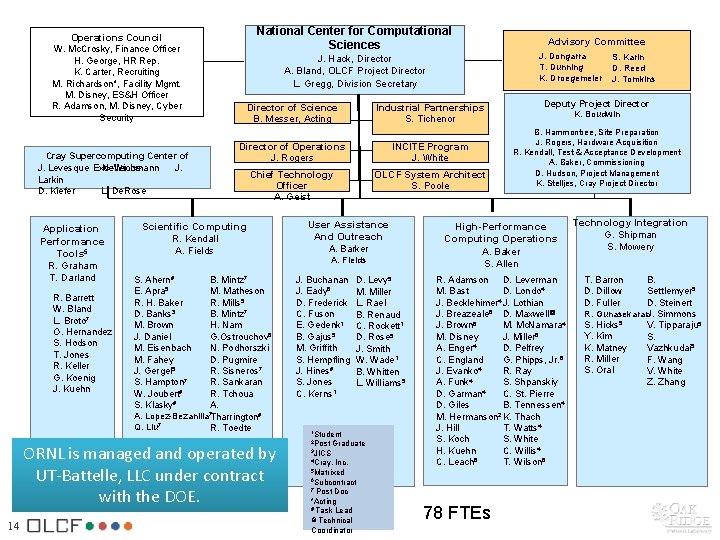

National Center for Computational Sciences
[Organization chart; names and footnote markers are listed in the order they appear on the slide]
J. Hack, Director
A. Bland, OLCF Project Director
L. Gregg, Division Secretary
Director of Science: B. Messer, Acting
Director of Operations: J. Rogers
INCITE Program: J. White
Chief Technology Officer: A. Geist
OLCF System Architect: S. Poole
Industrial Partnerships: S. Tichenor
Deputy Project Director: K. Boudwin
Operations Council: W. McCrosky, Finance Officer; H. George, HR Rep.; K. Carter, Recruiting; M. Richardson*, Facility Mgmt.; M. Disney, ES&H Officer; R. Adamson, M. Disney, Cyber Security
Advisory Committee: J. Dongarra, T. Dunning, K. Droegemeier, S. Karin, D. Reed, J. Tomkins
Cray Supercomputing Center of Excellence: J. Levesque, N. Wichmann, J. Larkin, D. Kiefer, L. DeRose
OLCF Project: B. Hammontree, Site Preparation; J. Rogers, Hardware Acquisition; R. Kendall, Test & Acceptance Development; A. Baker, Commissioning; D. Hudson, Project Management; K. Stelljes, Cray Project Director
Application Performance Tools 5 R. Graham T. Darland R. Barrett W. Bland L. Broto 7 O. Hernandez S. Hodson T. Jones R. Keller G. Koenig J. Kuehn
Scientific Computing R. Kendall A. Fields S. Ahern# E. Apra 5 R. H. Baker D. Banks 3 M. Brown J. Daniel M. Eisenbach M. Fahey J. Gergel 5 S. Hampton 7 W. Joubert# S. Klasky# B. Mintz 7 M. Matheson R. Mills 5 B. Mintz 7 H. Nam G. Ostrouchov 5 N. Podhorszki D. Pugmire R. Sisneros 7 R. Sankaran R. Tchoua A. A. Lopez-Bezanilla 7 Tharrington# Q. Liu 7 R. Toedte
User Assistance And Outreach A. Barker A. Fields J. Buchanan J. Eady 5 D. Frederick C. Fuson E. Gedenk 1 B. Gajus 5 M. Griffith S. Hempfling J. Hines# S. Jones C. Kerns 1 D. Levy 5 M. Miller L. Rael B. Renaud C. Rockett 1 D. Rose 5 J. Smith W. Wade 1 B. Whitten L. Williams 5
High-Performance Computing Operations A. Baker S. Allen R. Adamson D. Leverman M. Bast D. Londo 4 4 J. Becklehimer J. Lothian J. Breazeale 6 D. Maxwell@ J. Brown 6 M. McNamara 4 M. Disney J. Miller 6 A. Enger 4 D. Pelfrey C. England G. Phipps, Jr. 6 4 J. Evanko R. Ray A. Funk 4 S. Shpanskiy D. Garman 4 C. St. Pierre D. Giles B. Tennessen 4 M. Hermanson 2 K. Thach J. Hill T. Watts 4 S. Koch S. White H. Kuehn C. Willis 4 6 C. Leach T. Wilson 6
Technology Integration G. Shipman S. Mowery T. Barron D. Dillow D. Fuller B. Settlemyer 5 D. Steinert R. Gunasekaran J. Simmons S. Hicks 5 V. Tipparaju 5 Y. Kim S. K. Matney Vazhkudai 5 R. Miller F. Wang S. Oral V. White Z. Zhang
Legend: 1 Student; 2 Post Graduate; 3 JICS; 4 Cray, Inc.; 5 Matrixed; 6 Subcontract; 7 Post Doc; * Acting; # Task Lead; @ Technical Coordinator
78 FTEs
ORNL is managed and operated by UT-Battelle, LLC under contract with the DOE.

Scientific Computing facilitates the delivery of leadership science by partnering with users to effectively utilize computational science, visualization and workflow technologies on OLCF resources through:
• Science team liaisons
• Developing, tuning, and scaling current and future applications
• Providing visualizations to present scientific results and augment discovery processes

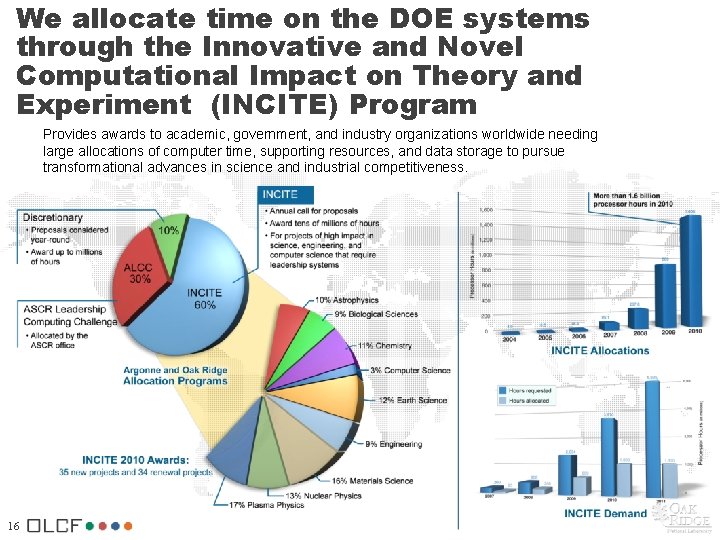

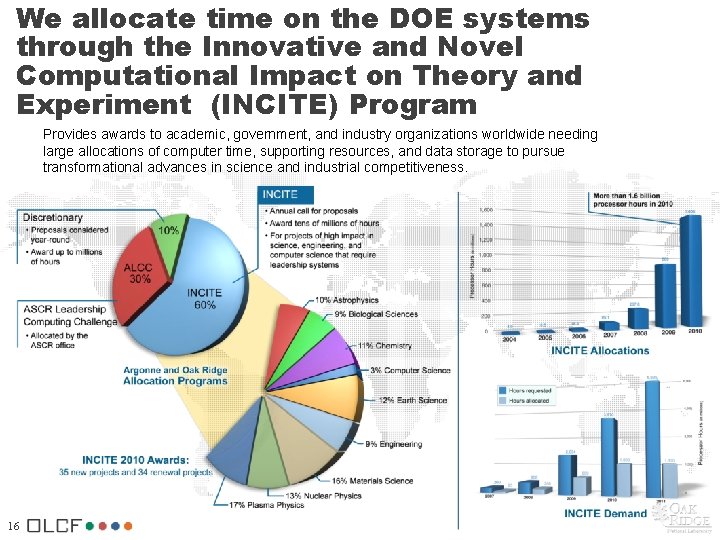

We allocate time on the DOE systems through the Innovative and Novel Computational Impact on Theory and Experiment (INCITE) Program
INCITE provides awards to academic, government, and industry organizations worldwide needing large allocations of computer time, supporting resources, and data storage to pursue transformational advances in science and industrial competitiveness.

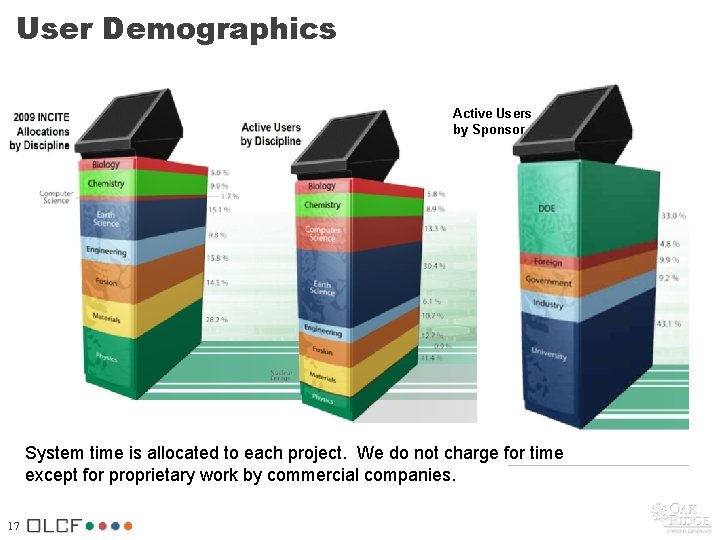

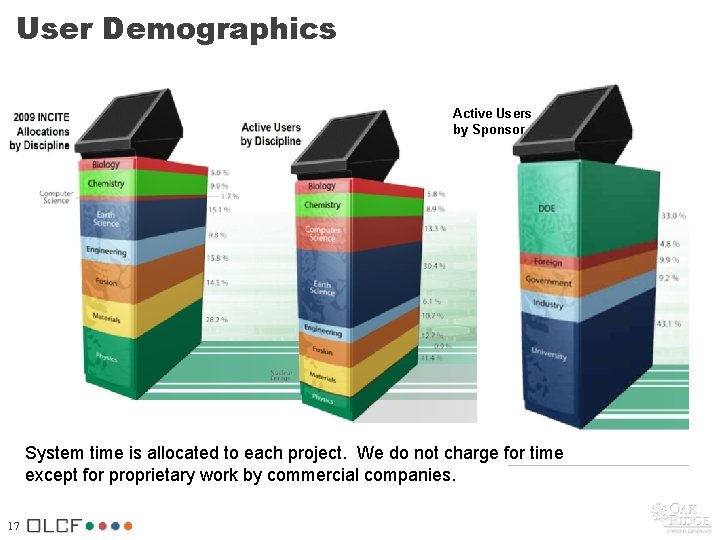

User Demographics
Active Users by Sponsor
System time is allocated to each project. We do not charge for time except for proprietary work by commercial companies.

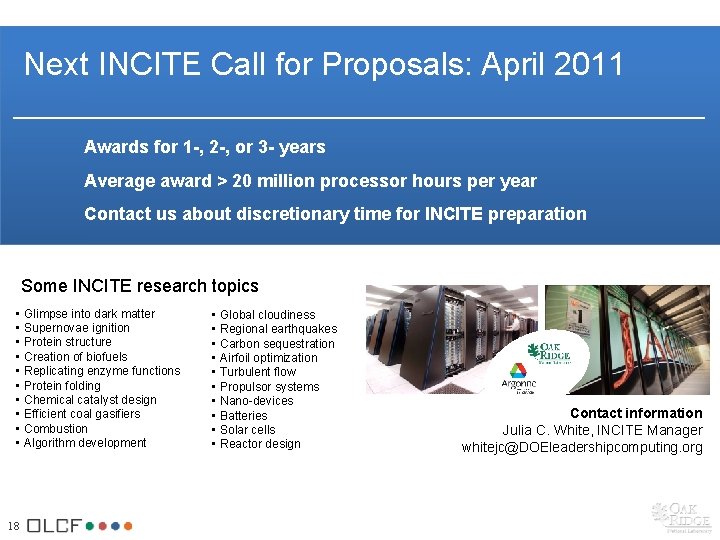

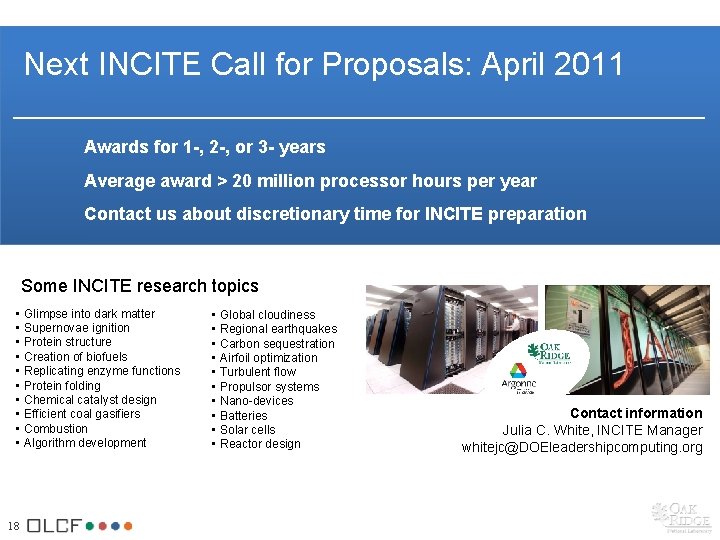

Next INCITE Call for Proposals: April 2011
Awards for 1, 2, or 3 years
Average award > 20 million processor hours per year
Contact us about discretionary time for INCITE preparation
Some INCITE research topics
• Glimpse into dark matter
• Supernovae ignition
• Protein structure
• Creation of biofuels
• Replicating enzyme functions
• Protein folding
• Chemical catalyst design
• Efficient coal gasifiers
• Combustion
• Algorithm development
• Global cloudiness
• Regional earthquakes
• Carbon sequestration
• Airfoil optimization
• Turbulent flow
• Propulsor systems
• Nano-devices
• Batteries
• Solar cells
• Reactor design
Contact information: Julia C. White, INCITE Manager, whitejc@DOEleadershipcomputing.org

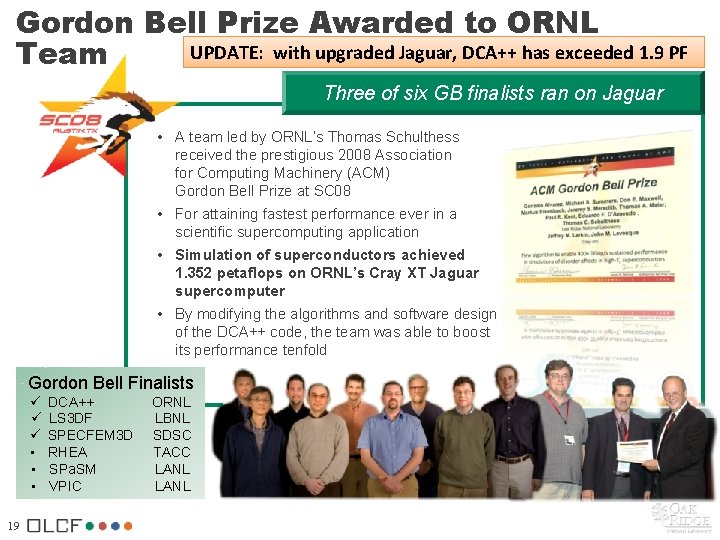

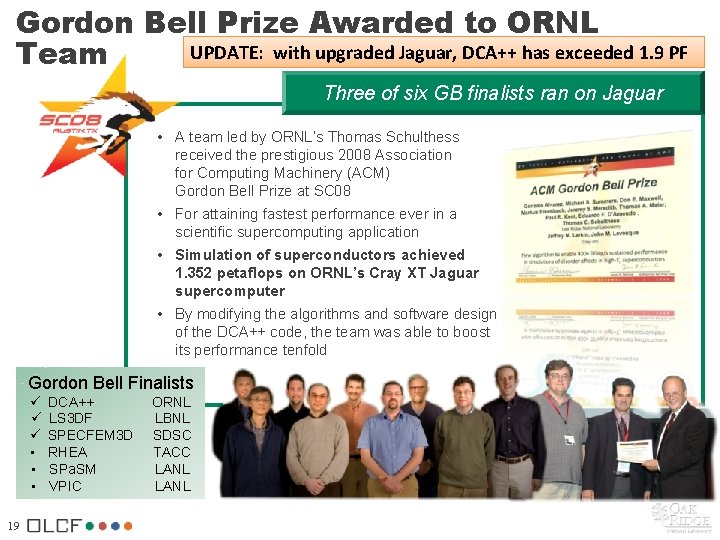

Gordon Bell Prize Awarded to ORNL Team
Three of six GB finalists ran on Jaguar
UPDATE: with upgraded Jaguar, DCA++ has exceeded 1.9 PF
• A team led by ORNL’s Thomas Schulthess received the prestigious 2008 Association for Computing Machinery (ACM) Gordon Bell Prize at SC08
• For attaining the fastest performance ever in a scientific supercomputing application
• Simulation of superconductors achieved 1.352 petaflops on ORNL’s Cray XT Jaguar supercomputer
• By modifying the algorithms and software design of the DCA++ code, the team was able to boost its performance tenfold
Gordon Bell Finalists: ✓ DCA++, ✓ LS3DF, ✓ SPECFEM3D, • RHEA, • SPaSM, • VPIC
Institutions: ORNL, LBNL, SDSC, TACC, LANL

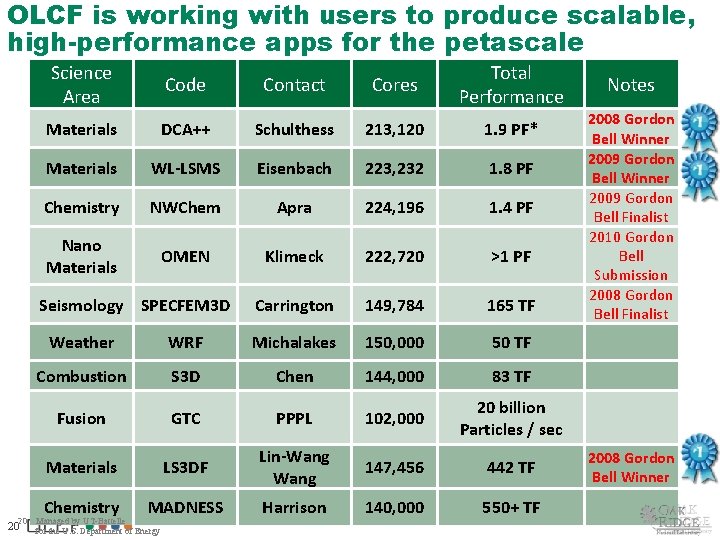

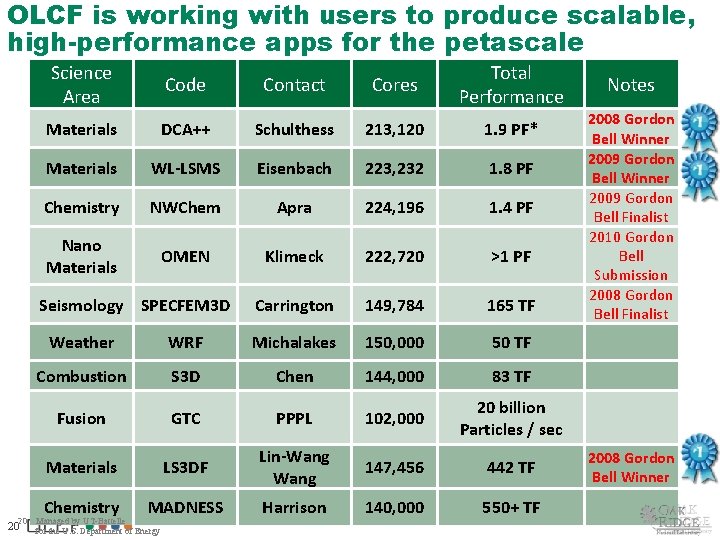

OLCF is working with users to produce scalable, high-performance apps for the petascale

| Science Area | Code | Contact | Cores | Total Performance |
|---|---|---|---|---|
| Materials | DCA++ | Schulthess | 213,120 | 1.9 PF* |
| Materials | WL-LSMS | Eisenbach | 223,232 | 1.8 PF |
| Chemistry | NWChem | Apra | 224,196 | 1.4 PF |
| Nano Materials | OMEN | Klimeck | 222,720 | >1 PF |
| Seismology | SPECFEM3D | Carrington | 149,784 | 165 TF |
| Weather | WRF | Michalakes | 150,000 | 50 TF |
| Combustion | S3D | Chen | 144,000 | 83 TF |
| Fusion | GTC | PPPL | 102,000 | 20 billion particles/sec |
| Materials | LS3DF | Lin-Wang | 147,456 | 442 TF |
| Chemistry | MADNESS | Harrison | 140,000 | 550+ TF |

Notes column (in slide order): 2008 Gordon Bell Winner; 2009 Gordon Bell Finalist; 2010 Gordon Bell Submission; 2008 Gordon Bell Finalist; 2008 Gordon Bell Winner
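The core counts and performance figures above can be turned into a rough per-core rate and a fraction of peak. The sketch below is illustrative only: it assumes each run used XT5 cores exclusively, derives the XT5 core count from the 37,376 hex-core processors listed earlier in the deck, and uses the 2,332 TF XT5 peak quoted there. The function names are hypothetical.

```python
XT5_PEAK_TF = 2332        # XT5 peak from the "What is Jaguar Today?" slide
XT5_CORES = 37_376 * 6    # hex-core processors, so six cores per socket

# (cores, sustained TF) for the three fastest codes in the table.
RUNS = {
    "DCA++": (213_120, 1900.0),
    "WL-LSMS": (223_232, 1800.0),
    "NWChem": (224_196, 1400.0),
}


def gflops_per_core(cores, total_tf):
    """Sustained GF/s per core for a run."""
    return total_tf * 1000 / cores


def fraction_of_peak(cores, total_tf):
    """Sustained rate relative to the peak of the cores used (XT5-only assumption)."""
    peak_per_core_gf = XT5_PEAK_TF * 1000 / XT5_CORES
    return gflops_per_core(cores, total_tf) / peak_per_core_gf


for code, (cores, tf) in RUNS.items():
    print(f"{code}: {gflops_per_core(cores, tf):.2f} GF/core, "
          f"{fraction_of_peak(cores, tf):.0%} of peak")
```

Under these assumptions the leading codes sustain a large share of the theoretical peak of the cores they occupy, which is the point the slide makes about petascale scalability.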

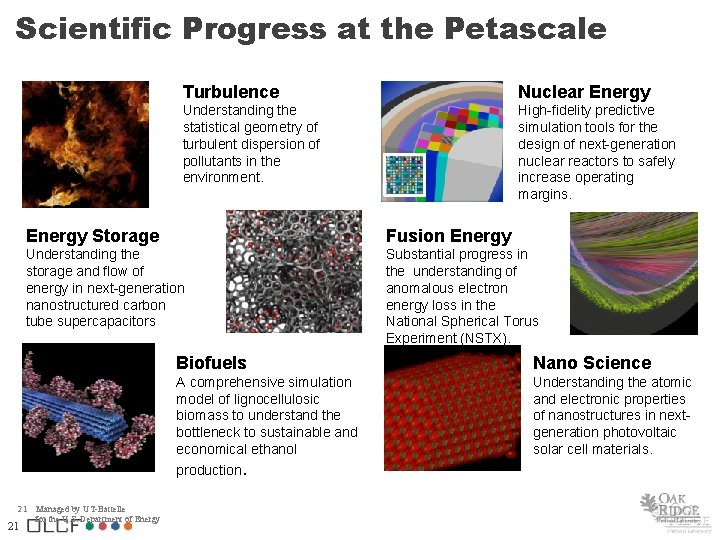

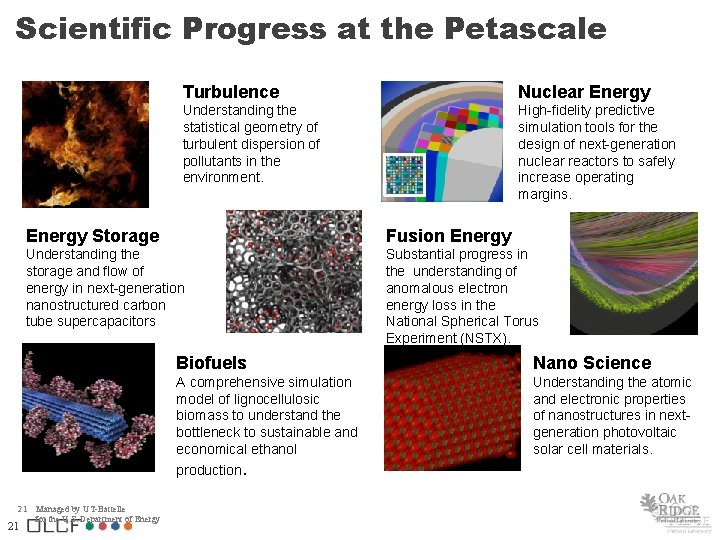

Scientific Progress at the Petascale
• Turbulence: Understanding the statistical geometry of turbulent dispersion of pollutants in the environment.
• Nuclear Energy: High-fidelity predictive simulation tools for the design of next-generation nuclear reactors to safely increase operating margins.
• Energy Storage: Understanding the storage and flow of energy in next-generation nanostructured carbon tube supercapacitors.
• Fusion Energy: Substantial progress in the understanding of anomalous electron energy loss in the National Spherical Torus Experiment (NSTX).
• Biofuels: A comprehensive simulation model of lignocellulosic biomass to understand the bottleneck to sustainable and economical ethanol production.
• Nano Science: Understanding the atomic and electronic properties of nanostructures in next-generation photovoltaic solar cell materials.

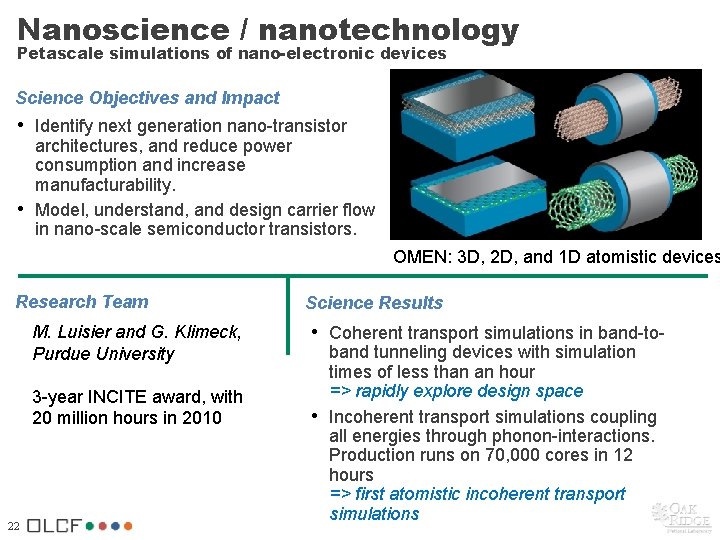

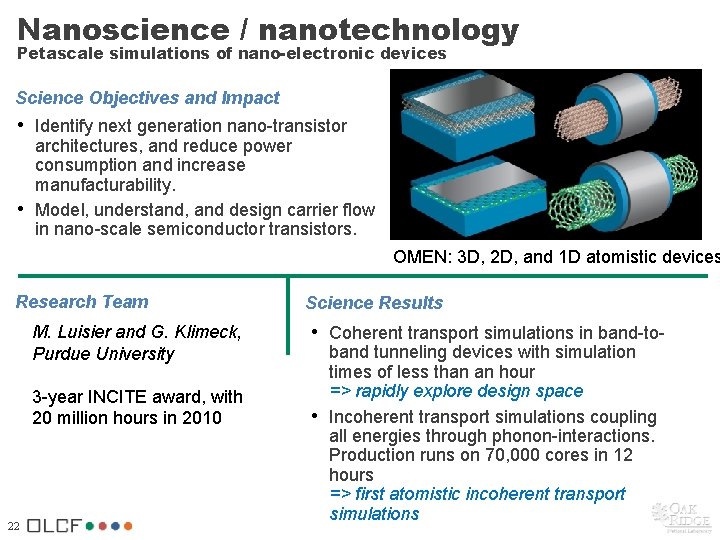

Nanoscience / nanotechnology
Petascale simulations of nano-electronic devices
Science Objectives and Impact
• Identify next-generation nano-transistor architectures, and reduce power consumption and increase manufacturability.
• Model, understand, and design carrier flow in nano-scale semiconductor transistors.
OMEN: 3D, 2D, and 1D atomistic devices
Research Team
M. Luisier and G. Klimeck, Purdue University
3-year INCITE award, with 20 million hours in 2010
Science Results
• Coherent transport simulations in band-to-band tunneling devices with simulation times of less than an hour => rapidly explore design space
• Incoherent transport simulations coupling all energies through phonon interactions. Production runs on 70,000 cores in 12 hours => first atomistic incoherent transport simulations

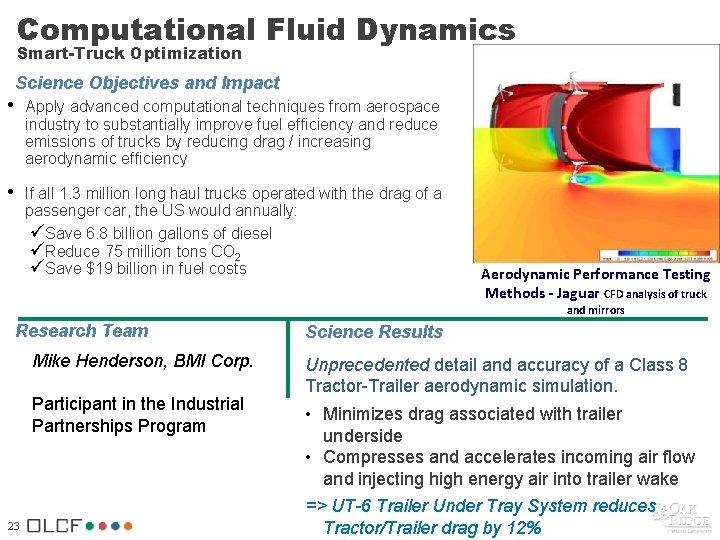

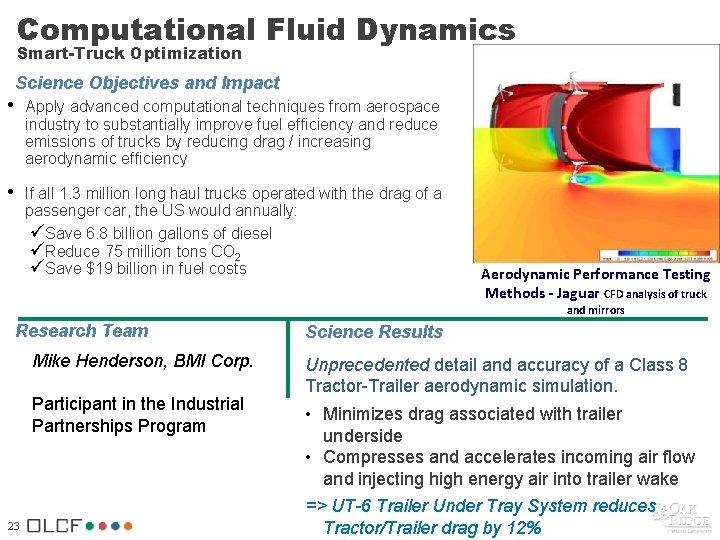

Computational Fluid Dynamics
Smart-Truck Optimization
Science Objectives and Impact
• Apply advanced computational techniques from the aerospace industry to substantially improve fuel efficiency and reduce emissions of trucks by reducing drag / increasing aerodynamic efficiency
• If all 1.3 million long haul trucks operated with the drag of a passenger car, the US would annually:
✓ Save 6.8 billion gallons of diesel
✓ Reduce CO2 by 75 million tons
✓ Save $19 billion in fuel costs
Aerodynamic Performance Testing Methods - Jaguar CFD analysis of truck and mirrors
Research Team
Mike Henderson, BMI Corp.
Participant in the Industrial Partnerships Program
Science Results
Unprecedented detail and accuracy of a Class 8 Tractor-Trailer aerodynamic simulation.
• Minimizes drag associated with trailer underside
• Compresses and accelerates incoming air flow and injects high energy air into trailer wake
=> UT-6 Trailer Under Tray System reduces Tractor/Trailer drag by 12%
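The fleet-wide savings on this slide imply a few per-truck and per-gallon figures that are easy to sanity-check. The sketch below only divides the slide's own numbers; it assumes a ton of 2,000 lb, which matches the "41 tons (82,000 lb)" conversion used on the industrial projects slide. Variable names are hypothetical.

```python
# Annual fleet-wide figures quoted on the slide.
TRUCKS = 1.3e6            # long haul trucks
GALLONS_SAVED = 6.8e9     # gallons of diesel
CO2_TONS_SAVED = 75e6     # tons of CO2
DOLLARS_SAVED = 19e9      # fuel cost savings

LB_PER_TON = 2000         # assumption: short tons

gallons_per_truck = GALLONS_SAVED / TRUCKS
dollars_per_gallon = DOLLARS_SAVED / GALLONS_SAVED
co2_lb_per_gallon = CO2_TONS_SAVED * LB_PER_TON / GALLONS_SAVED

print(f"{gallons_per_truck:.0f} gallons saved per truck per year")
print(f"${dollars_per_gallon:.2f} implied diesel price per gallon")
print(f"{co2_lb_per_gallon:.1f} lb of CO2 avoided per gallon saved")
```

The result of about 22 lb of CO2 per gallon agrees with the per-truck figures elsewhere in the deck (82,000 lb for 3,700 gallons), so the two slides are internally consistent.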

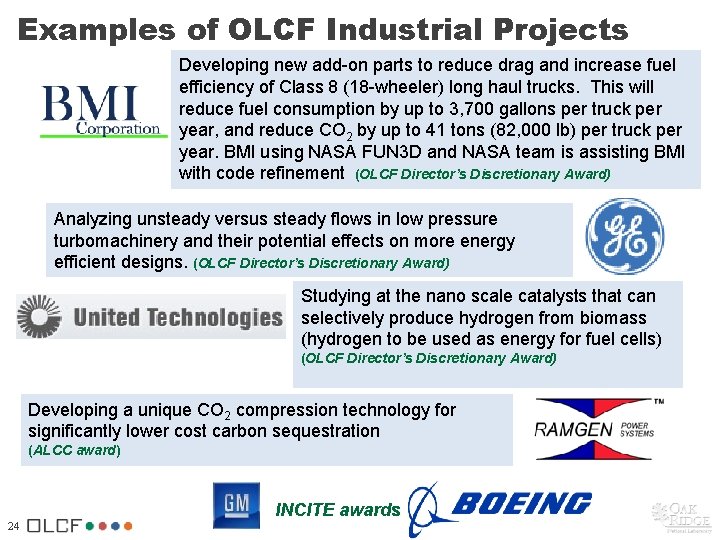

Examples of OLCF Industrial Projects
• Developing new add-on parts to reduce drag and increase fuel efficiency of Class 8 (18-wheeler) long haul trucks. This will reduce fuel consumption by up to 3,700 gallons per truck per year, and reduce CO2 by up to 41 tons (82,000 lb) per truck per year. BMI is using NASA FUN3D, and the NASA team is assisting BMI with code refinement. (OLCF Director’s Discretionary Award)
• Analyzing unsteady versus steady flows in low pressure turbomachinery and their potential effects on more energy efficient designs. (OLCF Director’s Discretionary Award)
• Studying, at the nano scale, catalysts that can selectively produce hydrogen from biomass (hydrogen to be used as energy for fuel cells). (OLCF Director’s Discretionary Award)
• Developing a unique CO2 compression technology for significantly lower cost carbon sequestration. (ALCC award)
INCITE awards

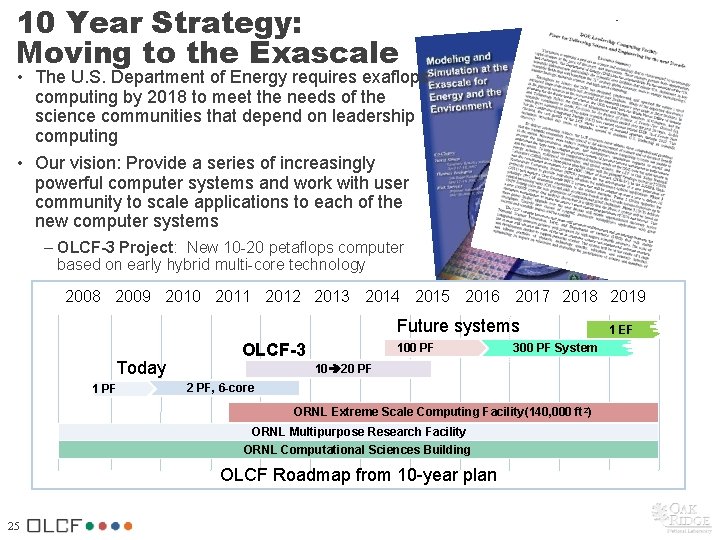

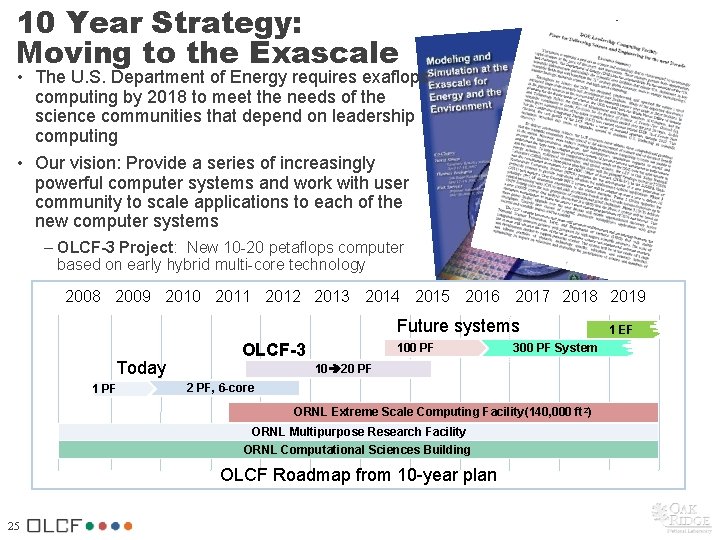

10 Year Strategy: Moving to the Exascale
• The U.S. Department of Energy requires exaflops computing by 2018 to meet the needs of the science communities that depend on leadership computing
• Our vision: Provide a series of increasingly powerful computer systems and work with the user community to scale applications to each of the new computer systems
– OLCF-3 Project: New 10-20 petaflops computer based on early hybrid multi-core technology
[Figure: OLCF Roadmap from 10-year plan, timeline 2008 to 2019. System labels: 1 PF; 2 PF, 6-core; OLCF-3, 10-20 PF; 100 PF; 300 PF; 1 EF. Other labels: Today; Future systems. Facilities: ORNL Computational Sciences Building; ORNL Multipurpose Research Facility; ORNL Extreme Scale Computing Facility (140,000 ft²)]
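The roadmap implies a steep and sustained growth rate. As a rough illustration, the sketch below computes the yearly growth factor and doubling time needed to go from a 2 PF system to 1 EF. The start year of 2010 and end year of 2018 are assumptions taken from the talk date and the DOE target, and steady exponential growth is a simplification of the stepwise roadmap.

```python
import math


def annual_growth(start_pf, end_pf, years):
    """Constant yearly multiplier needed to grow from start_pf to end_pf."""
    return (end_pf / start_pf) ** (1 / years)


def doubling_time_years(start_pf, end_pf, years):
    """Time for performance to double under the same constant growth."""
    return years * math.log(2) / math.log(end_pf / start_pf)


# Assumed endpoints: 2 PF in 2010, 1 EF (1000 PF) in 2018.
growth = annual_growth(start_pf=2, end_pf=1000, years=8)
doubling = doubling_time_years(start_pf=2, end_pf=1000, years=8)

print(f"{growth:.2f}x per year, doubling every {doubling * 12:.0f} months")
```

Under these assumptions peak performance has to more than double every year, which helps explain why the plan leans on hybrid multi-core technology rather than simply adding cabinets.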

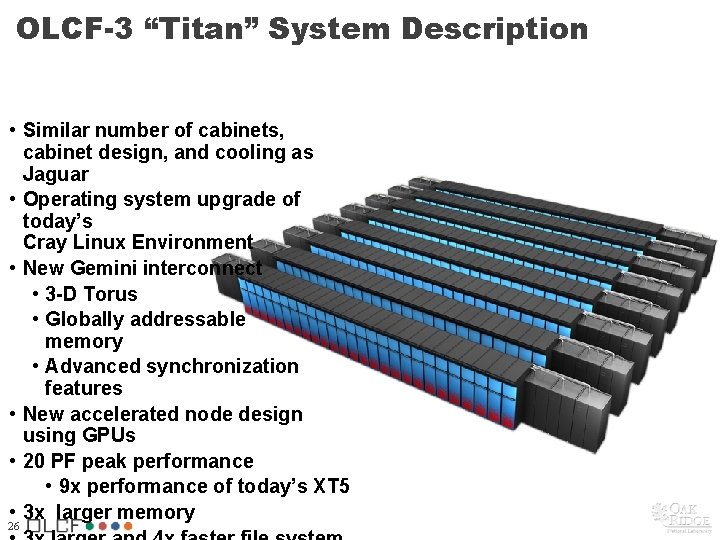

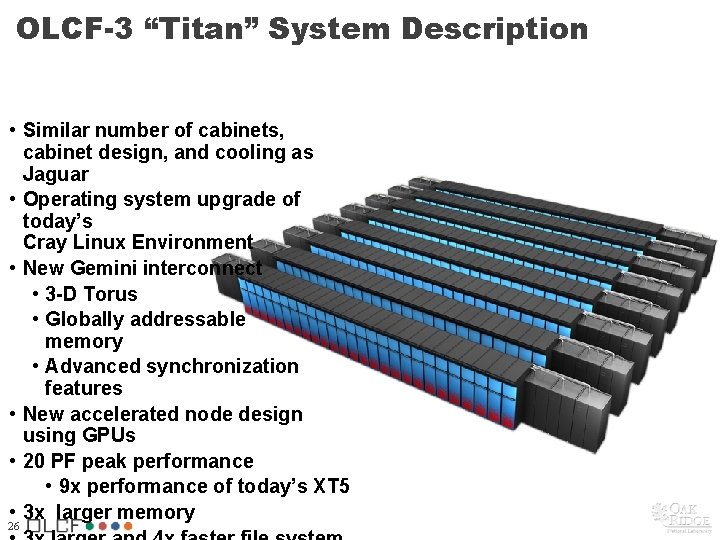

OLCF-3 “Titan” System Description
• Similar number of cabinets, cabinet design, and cooling as Jaguar
• Operating system upgrade of today’s Cray Linux Environment
• New Gemini interconnect
• 3-D torus
• Globally addressable memory
• Advanced synchronization features
• New accelerated node design using GPUs
• 20 PF peak performance
• 9x performance of today’s XT5
• 3x larger memory