Open vSwitch HW Offload over DPDK, DPDK Summit India

- Slides: 19

Open vSwitch HW offload over DPDK. DPDK Summit India, Bangalore, March 2018. © 2018 Mellanox Technologies

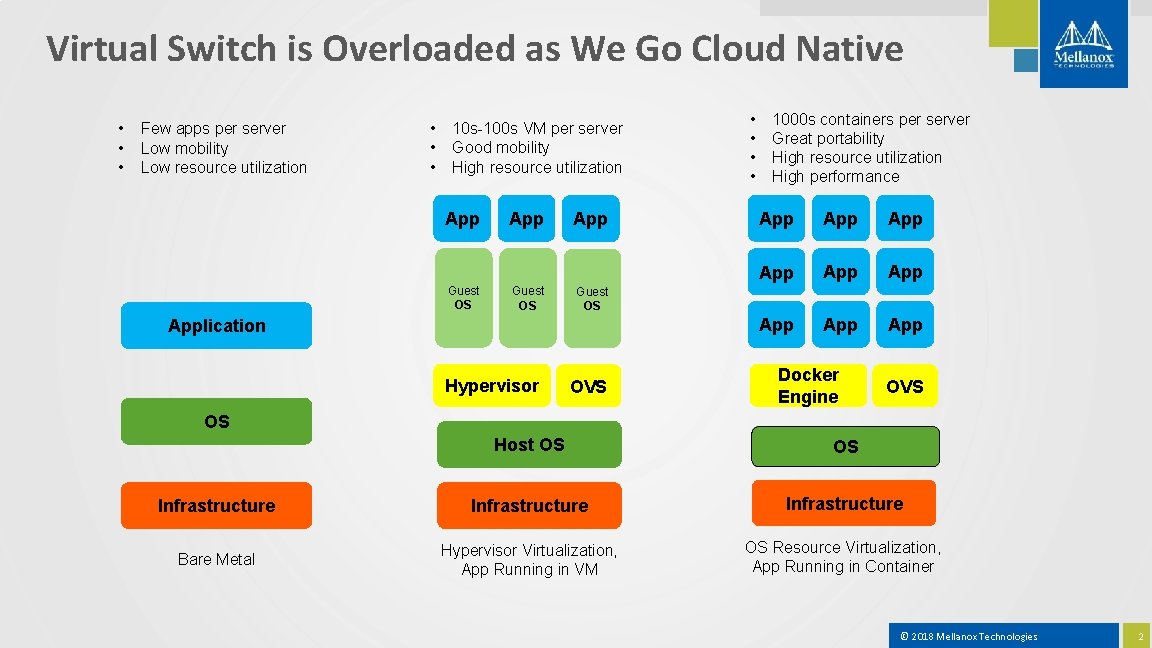

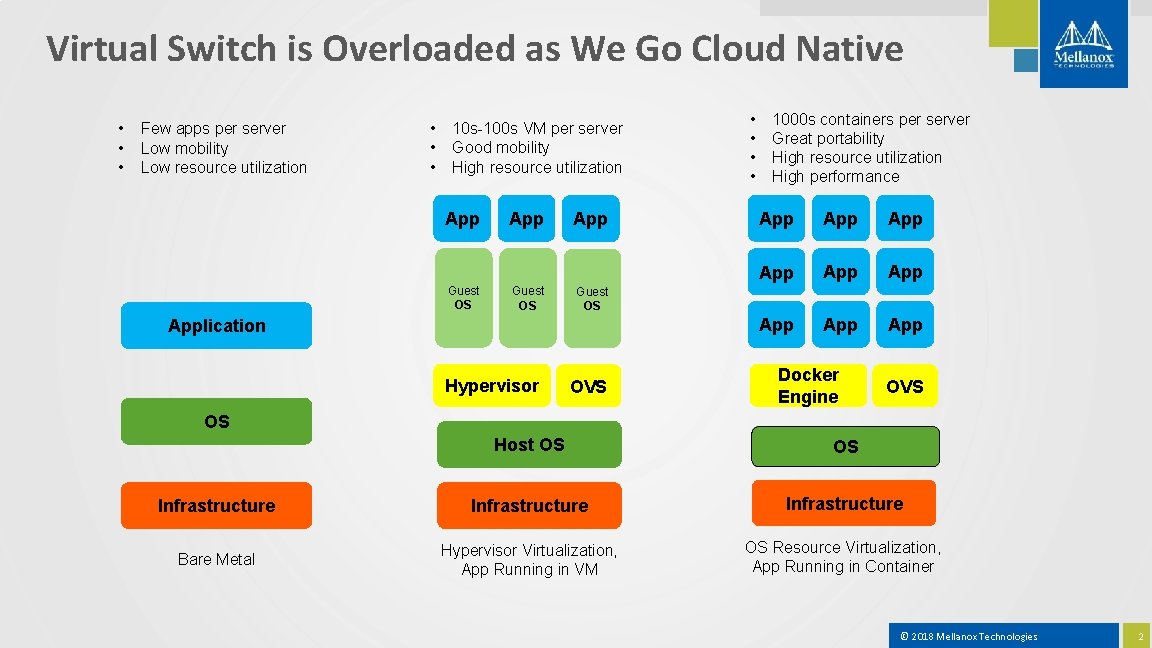

Virtual Switch is Overloaded as We Go Cloud Native

- Bare metal (application on the host OS): few apps per server, low mobility, low resource utilization.
- Hypervisor virtualization (app running in a VM on a guest OS, with OVS in the hypervisor): 10s-100s of VMs per server, good mobility, high resource utilization.
- OS resource virtualization (app running in a container, with OVS and the Docker Engine on the host OS): 1000s of containers per server, great portability, high resource utilization, high performance.

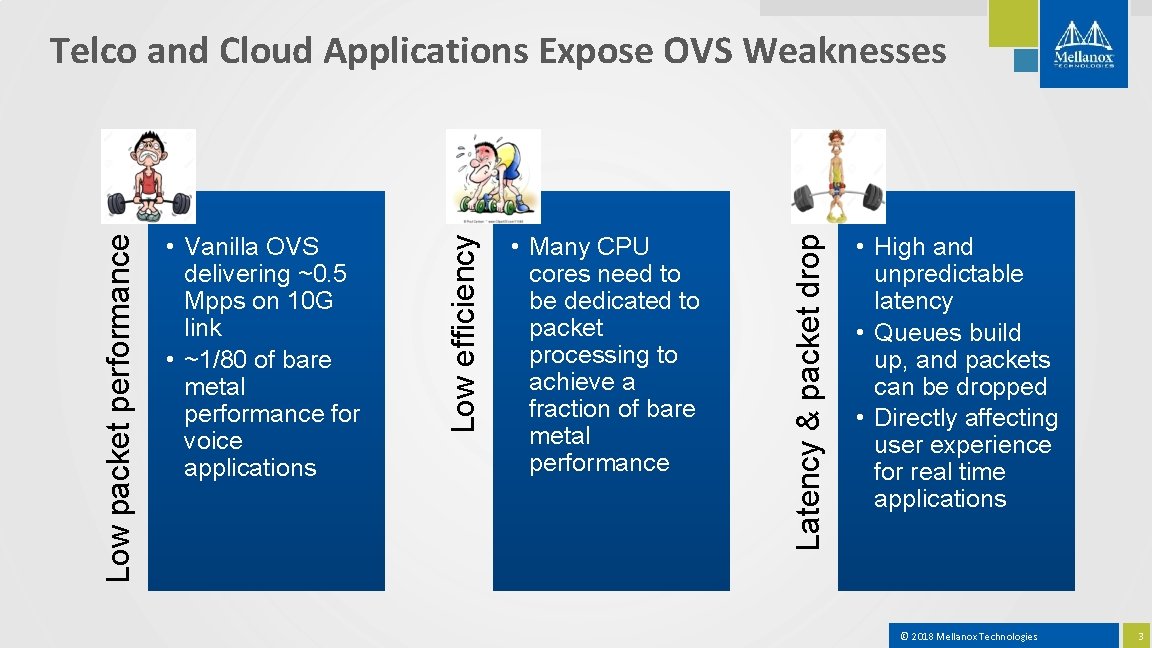

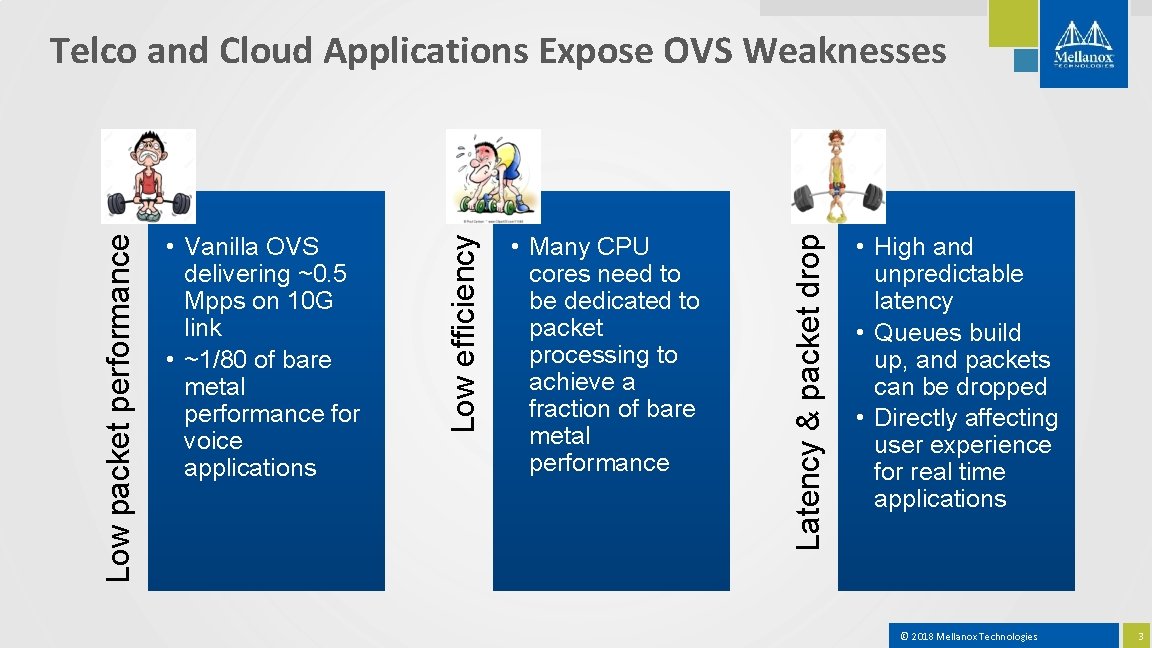

Telco and Cloud Applications Expose OVS Weaknesses

- Low efficiency: many CPU cores need to be dedicated to packet processing to achieve a fraction of bare-metal performance.
- Low packet performance: vanilla OVS delivers ~0.5 Mpps on a 10G link, ~1/80 of bare-metal performance for voice applications.
- Latency & packet drop:
  - High and unpredictable latency
  - Queues build up, and packets can be dropped
  - Directly affects user experience for real-time applications
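To put the ~0.5 Mpps figure in context, the maximum packet rate of a link follows from the frame size plus the fixed per-frame overhead on the wire (7-byte preamble, 1-byte start-of-frame delimiter, 12-byte inter-frame gap, 20 bytes in total). The helper below is a small illustrative sketch, not something from the slides.

```c
#include <assert.h>

/* Per-frame bytes on the wire not counted in the frame size:
 * 7-byte preamble + 1-byte SFD + 12-byte inter-frame gap. */
#define ETH_WIRE_OVERHEAD_BYTES 20.0

/* Maximum packets per second on a link of `link_bps` bits/s when every
 * frame is `frame_bytes` long (frame size includes the 4-byte FCS). */
static double line_rate_pps(double link_bps, double frame_bytes)
{
    assert(frame_bytes >= 64.0); /* minimum Ethernet frame size */
    return link_bps / ((frame_bytes + ETH_WIRE_OVERHEAD_BYTES) * 8.0);
}
```

For example, `line_rate_pps(10e9, 64)` is about 14.88 Mpps, the 64-byte line rate of a 10G link.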

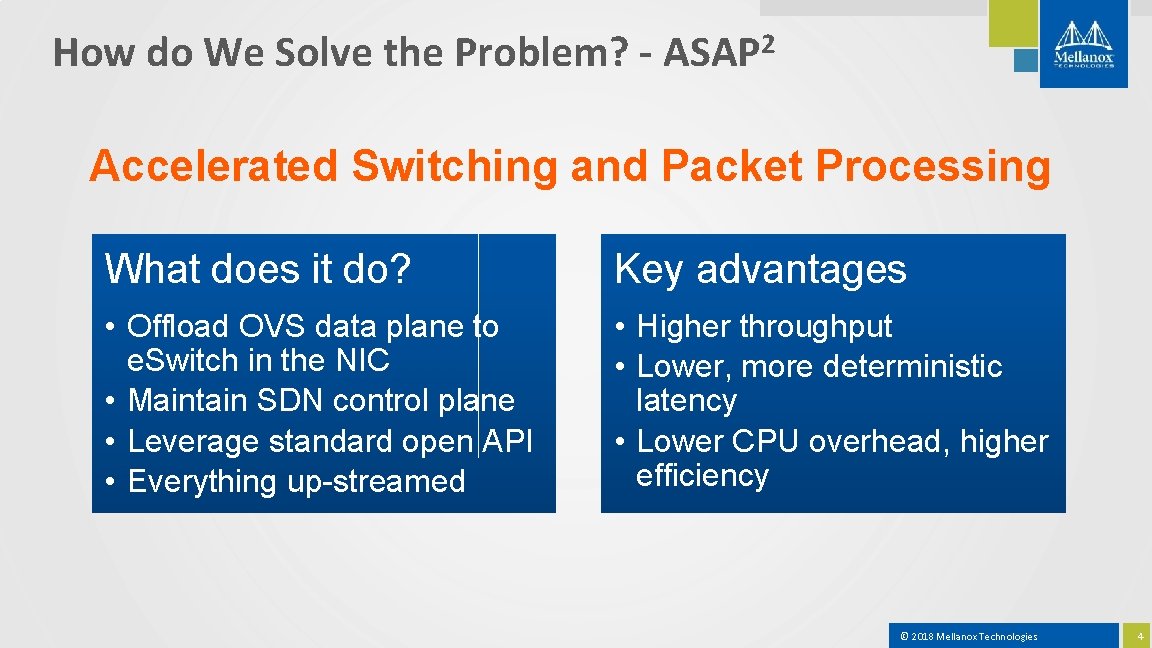

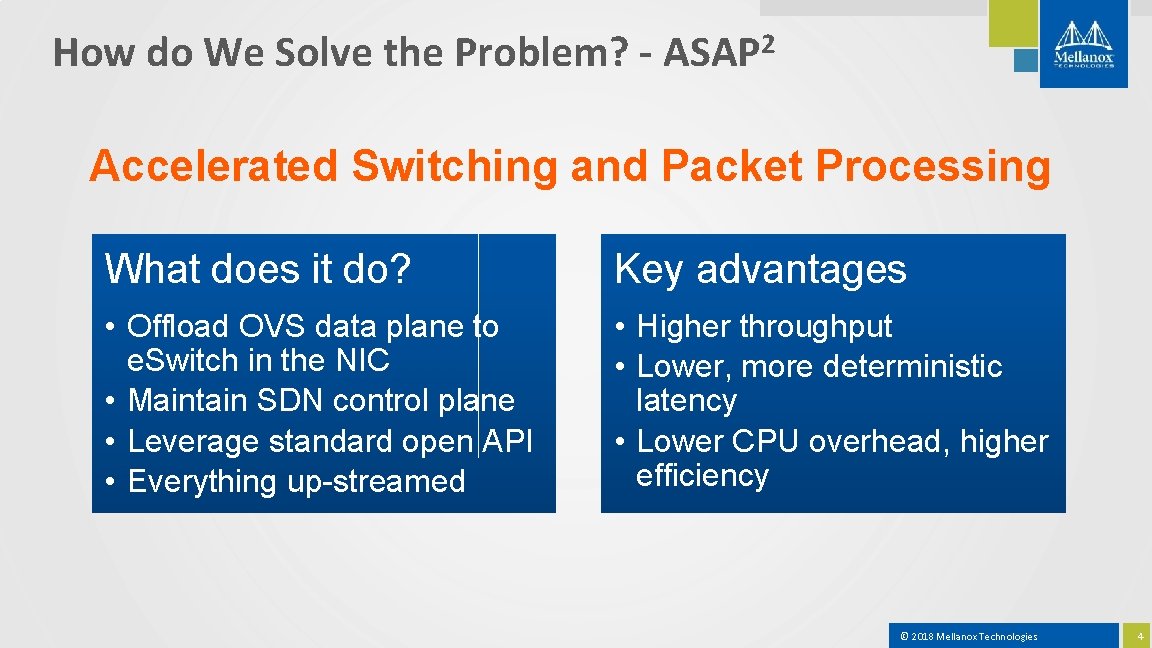

How Do We Solve the Problem? ASAP² (Accelerated Switching and Packet Processing)

What does it do?
- Offload the OVS data plane to the eSwitch in the NIC
- Maintain the SDN control plane
- Leverage standard open APIs
- Everything upstreamed

Key advantages
- Higher throughput
- Lower, more deterministic latency
- Lower CPU overhead, higher efficiency

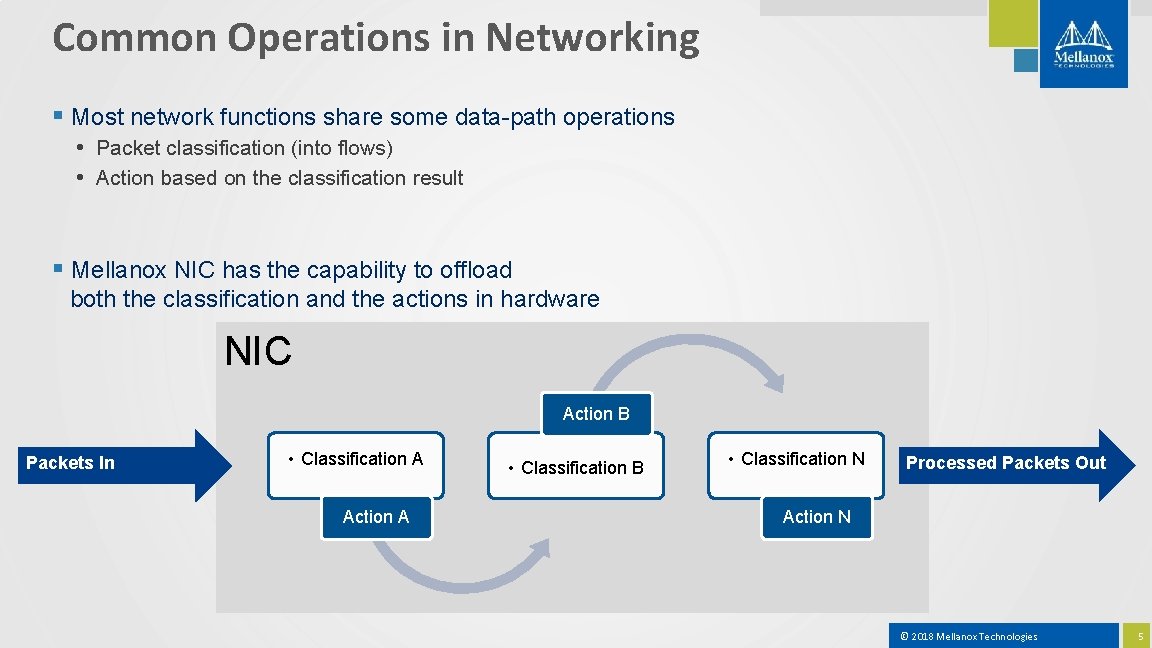

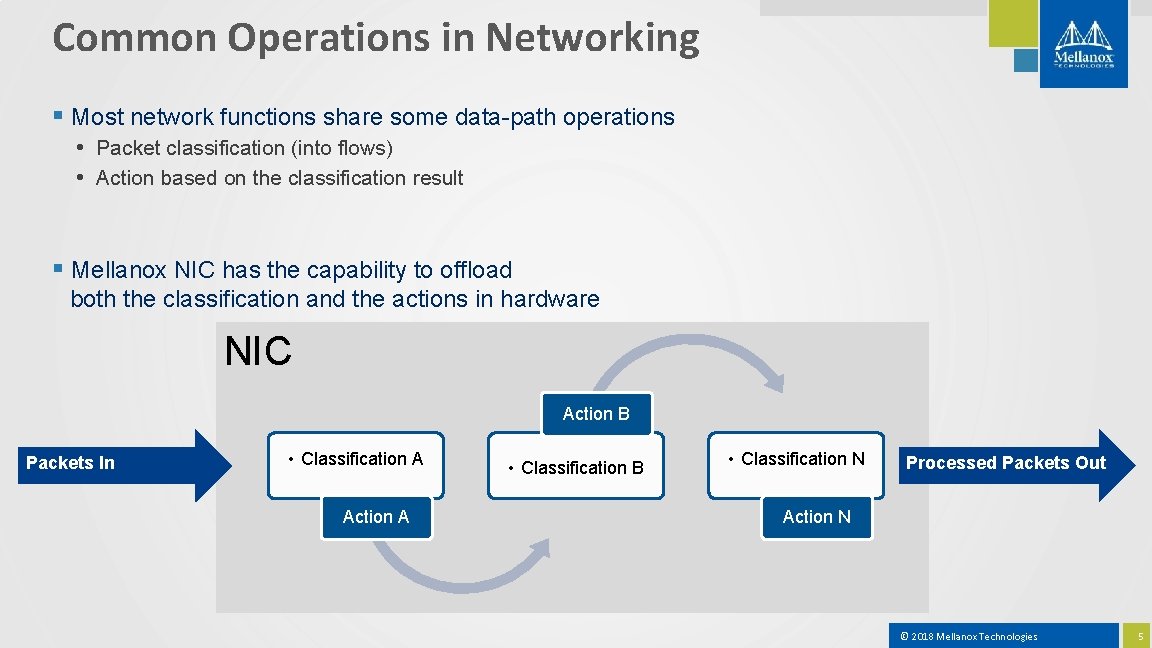

Common Operations in Networking

- Most network functions share some data-path operations:
  - Packet classification (into flows)
  - Action based on the classification result
- The Mellanox NIC can offload both the classification and the actions in hardware.

[Figure: packets in → NIC classifications A, B, ..., N → actions A, B, ..., N → processed packets out]
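The classification-then-action model can be sketched in software as an ordered rule list in which the first matching classifier selects the action. This is only an illustrative model of what the NIC does in hardware; `struct pkt`, `struct rule`, `classify_and_act` and the example classifiers are hypothetical, not a Mellanox or DPDK API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical packet summary: only the fields the example rules inspect. */
struct pkt {
    uint16_t ethertype;
    uint8_t  ip_proto;
    uint16_t dst_port;
};

enum action { ACT_FORWARD, ACT_DROP, ACT_COUNT_AND_FORWARD, ACT_DEFAULT };

/* One classification rule: a predicate plus the action taken on a match. */
struct rule {
    int (*matches)(const struct pkt *p);
    enum action act;
};

static int is_arp(const struct pkt *p) { return p->ethertype == 0x0806; }
static int is_dns(const struct pkt *p)
{
    return p->ethertype == 0x0800 && p->ip_proto == 17 && p->dst_port == 53;
}
static int is_telnet(const struct pkt *p)
{
    return p->ethertype == 0x0800 && p->ip_proto == 6 && p->dst_port == 23;
}

/* Classify against the ordered rule list; the first match wins and
 * unmatched packets get the default action. */
static enum action classify_and_act(const struct rule *rules, size_t n,
                                    const struct pkt *p)
{
    for (size_t i = 0; i < n; i++)
        if (rules[i].matches(p))
            return rules[i].act;
    return ACT_DEFAULT;
}

static const struct rule example_rules[] = {
    { is_telnet, ACT_DROP },
    { is_dns,    ACT_COUNT_AND_FORWARD },
    { is_arp,    ACT_FORWARD },
};
```

A NIC replaces the linear scan with hardware lookup tables, but the contract is the same: each packet lands in one class, and the class determines the action.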

Accelerated Virtual Switch (ASAP²-Flex)

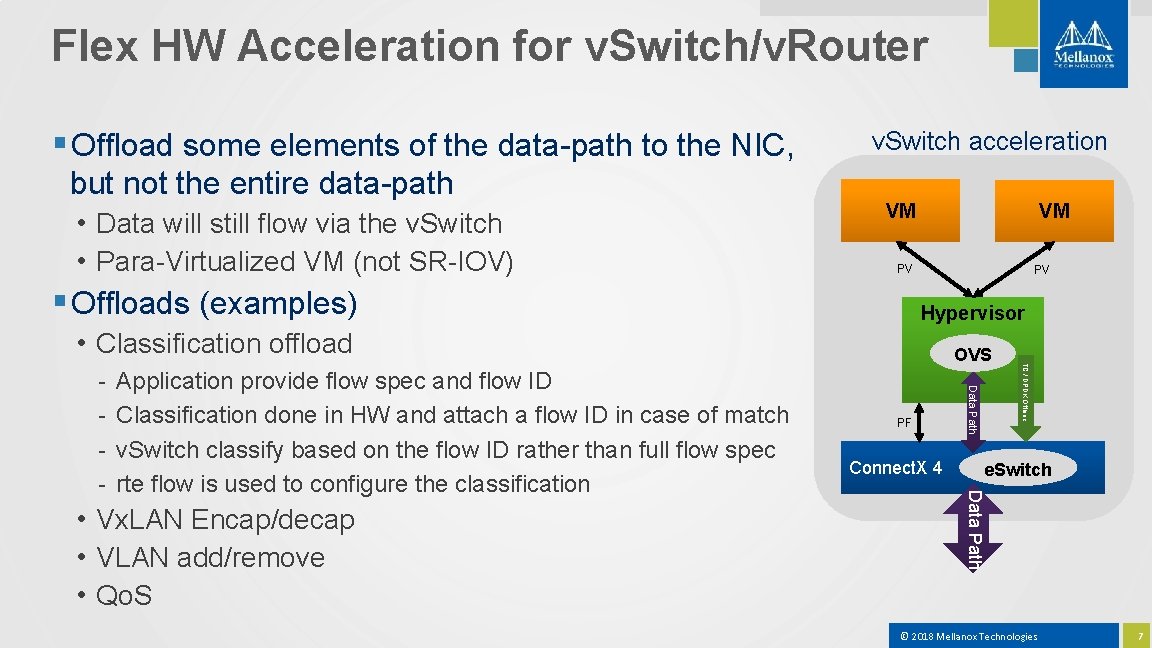

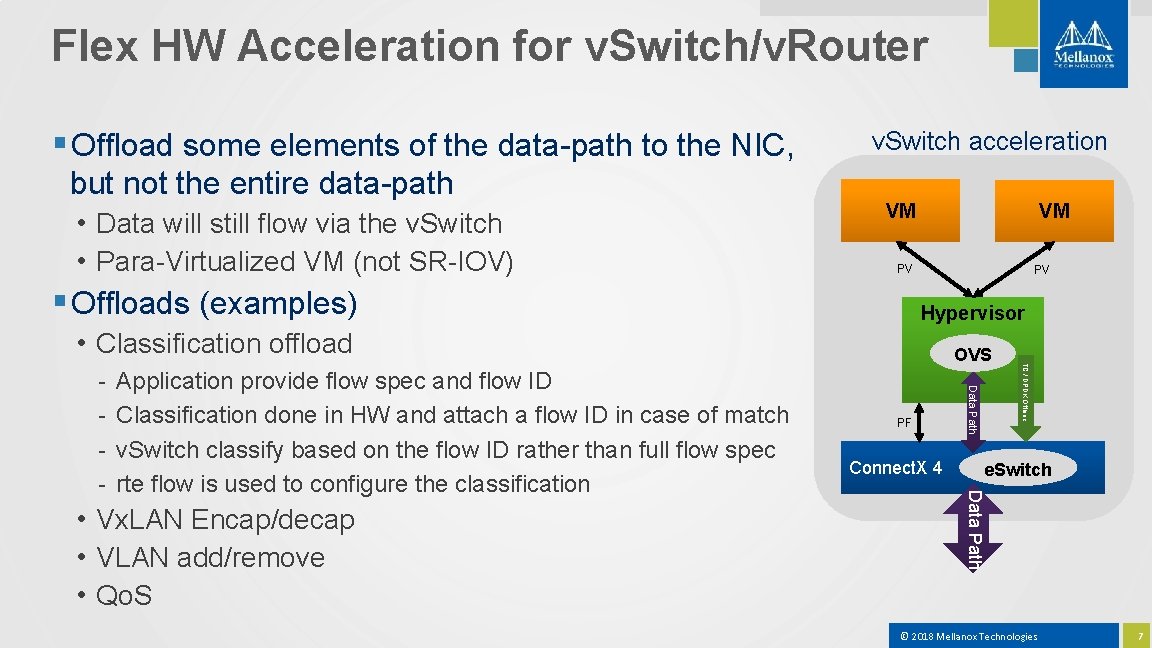

Flex HW Acceleration for vSwitch/vRouter

- Offload some elements of the data path to the NIC, but not the entire data path
  - Data still flows via the vSwitch
  - Para-virtualized VMs (not SR-IOV)
- Offloads (examples):
  - Classification offload
  - VXLAN encap/decap
  - VLAN add/remove
  - QoS

Data path:
- The application provides a flow spec and a flow ID.
- Classification is done in HW, which attaches the flow ID in case of a match.
- The vSwitch classifies based on the flow ID rather than the full flow spec.
- rte_flow is used to configure the classification.

[Figure: para-virtualized VMs on the hypervisor; vSwitch acceleration through ConnectX-4 TC/DPDK offload; PF and eSwitch; data path through OVS]
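As an illustration of one listed offload, the VLAN add operation can be modeled in software as inserting a 4-byte IEEE 802.1Q tag (TPID 0x8100 followed by the PCP/DEI/VID tag control field) right after the destination and source MAC addresses. This is a minimal sketch; `vlan_push` is a hypothetical helper, and the caller is assumed to provide a buffer with at least 4 spare bytes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define ETH_ADDRS_LEN 12     /* destination MAC (6) + source MAC (6) */
#define VLAN_TAG_LEN  4
#define TPID_8021Q    0x8100

/* Insert an 802.1Q tag into an untagged frame held in `frame`.
 * `len` is the current frame length, `cap` the buffer capacity.
 * Returns the new length, or 0 if the input is invalid or there is no room. */
static size_t vlan_push(uint8_t *frame, size_t len, size_t cap,
                        uint8_t pcp, uint16_t vid)
{
    if (len < ETH_ADDRS_LEN + 2 || len + VLAN_TAG_LEN > cap ||
        vid > 0x0FFF || pcp > 7)
        return 0;
    /* Shift the EtherType and payload right by 4 bytes to open a gap. */
    memmove(frame + ETH_ADDRS_LEN + VLAN_TAG_LEN, frame + ETH_ADDRS_LEN,
            len - ETH_ADDRS_LEN);
    uint16_t tci = (uint16_t)((pcp << 13) | vid);   /* DEI bit left at 0 */
    uint8_t *tag = frame + ETH_ADDRS_LEN;
    tag[0] = TPID_8021Q >> 8;
    tag[1] = TPID_8021Q & 0xFF;
    tag[2] = (uint8_t)(tci >> 8);
    tag[3] = (uint8_t)(tci & 0xFF);
    return len + VLAN_TAG_LEN;
}
```

With the Flex model the vSwitch still sees every packet; offloading this step only saves the per-packet memmove and header write on the host CPU.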

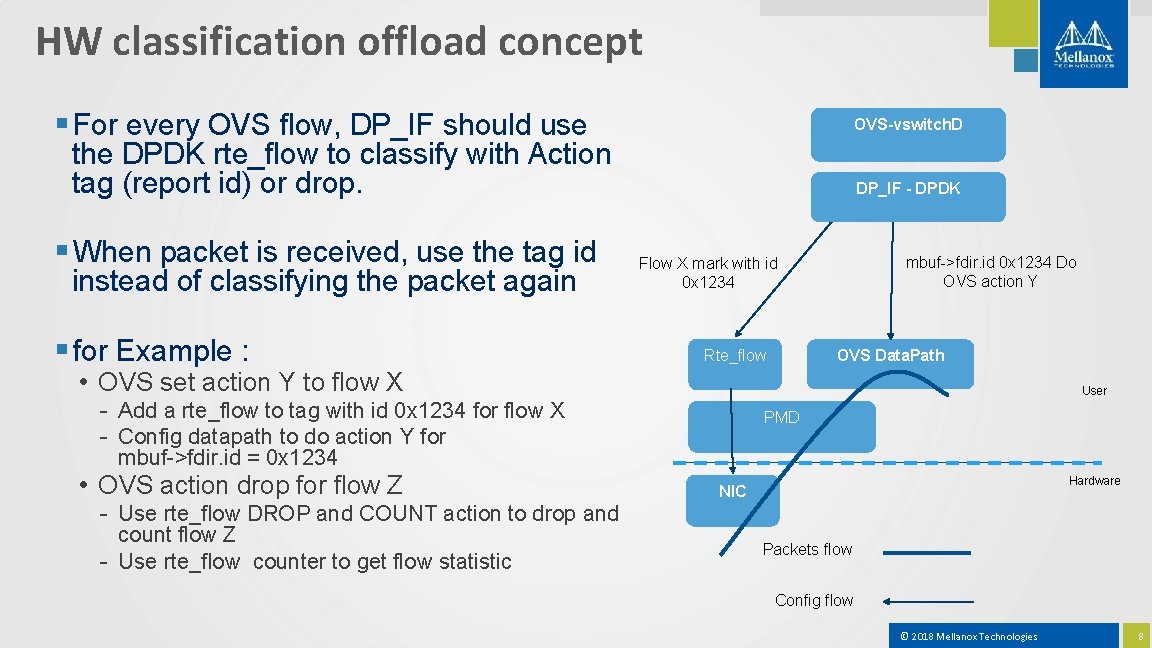

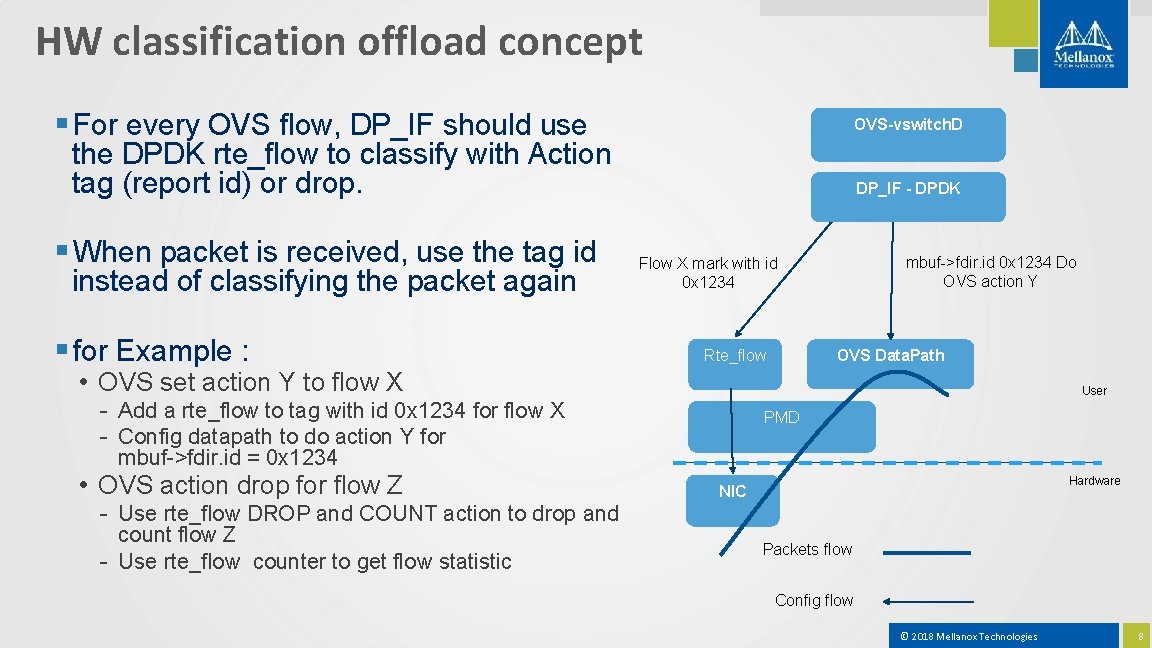

HW classification offload concept

- For every OVS flow, DP_IF should use the DPDK rte_flow API to classify with a tag action (report ID) or a drop action.
- When a packet is received, use the tag ID instead of classifying the packet again.
- For example:
  - OVS sets action Y for flow X:
    - Add an rte_flow rule that tags flow X with ID 0x1234.
    - Configure the datapath to do action Y when the PMD reports mbuf->fdir.id = 0x1234.
  - OVS action drop for flow Z:
    - Use the rte_flow DROP and COUNT actions to drop and count flow Z.
    - Use the rte_flow counter to get flow statistics.

[Figure: ovs-vswitchd configures flows through DP_IF (DPDK) and rte_flow into the hardware NIC; packets of flow X arrive marked with ID 0x1234 and the OVS datapath applies action Y]
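The tag-and-dispatch idea can be modeled without DPDK to show the control flow. In real DPDK code the rules would be created with `rte_flow_create()` using the `RTE_FLOW_ACTION_TYPE_MARK`, `RTE_FLOW_ACTION_TYPE_DROP` and `RTE_FLOW_ACTION_TYPE_COUNT` actions, counters read with `rte_flow_query()`, and the mark delivered in the mbuf's flow-director field (in current DPDK, `mbuf->hash.fdir.hi`, flagged in `ol_flags`). The sketch below replaces the NIC and the mbuf with hypothetical plain-C structures (`hw_rule`, `rx_desc`, `nic_rx`, `pmd_dispatch`) so it runs standalone.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for the rte_flow actions used on the slide. */
enum hw_action { HW_MARK, HW_DROP_COUNT };

struct hw_rule {
    uint32_t flow_key;      /* stands in for the full match pattern */
    enum hw_action action;
    uint32_t mark_id;       /* MARK value, used when action == HW_MARK */
    uint64_t hits;          /* COUNT value, updated for HW_DROP_COUNT rules */
};

/* Hypothetical receive descriptor standing in for the mbuf. */
struct rx_desc {
    uint32_t flow_key;
    int has_mark;           /* like the mbuf flag saying a mark is present */
    uint32_t mark_id;       /* the slide's mbuf->fdir.id */
};

/* "NIC" side: returns 0 if the packet is dropped, 1 if it reaches the PMD. */
static int nic_rx(struct hw_rule *rules, size_t n, struct rx_desc *d)
{
    d->has_mark = 0;
    for (size_t i = 0; i < n; i++) {
        if (rules[i].flow_key != d->flow_key)
            continue;
        if (rules[i].action == HW_DROP_COUNT) {
            rules[i].hits++;
            return 0;
        }
        d->has_mark = 1;
        d->mark_id = rules[i].mark_id;
        return 1;
    }
    return 1;               /* no rule: delivered unmarked */
}

#define MARK_FLOW_X   0x1234
#define ACTION_Y      42    /* hypothetical ID of OVS action Y */
#define NEED_CLASSIFY (-1)

/* Software side: a marked packet maps straight to its action; an unmarked
 * one has to go through the full OVS classifier. */
static int pmd_dispatch(const struct rx_desc *d)
{
    if (d->has_mark && d->mark_id == MARK_FLOW_X)
        return ACTION_Y;
    return NEED_CLASSIFY;
}
```

The point of the split is that `pmd_dispatch` does a constant-time check on the mark, while the expensive match on the full flow spec happens once, in the NIC.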

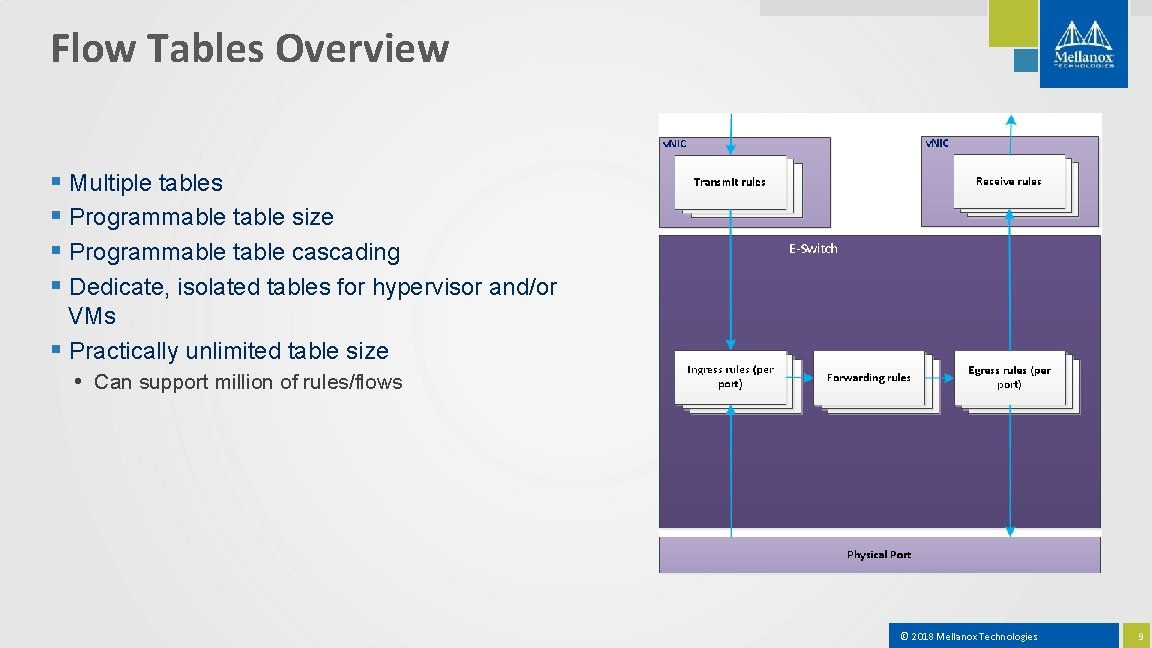

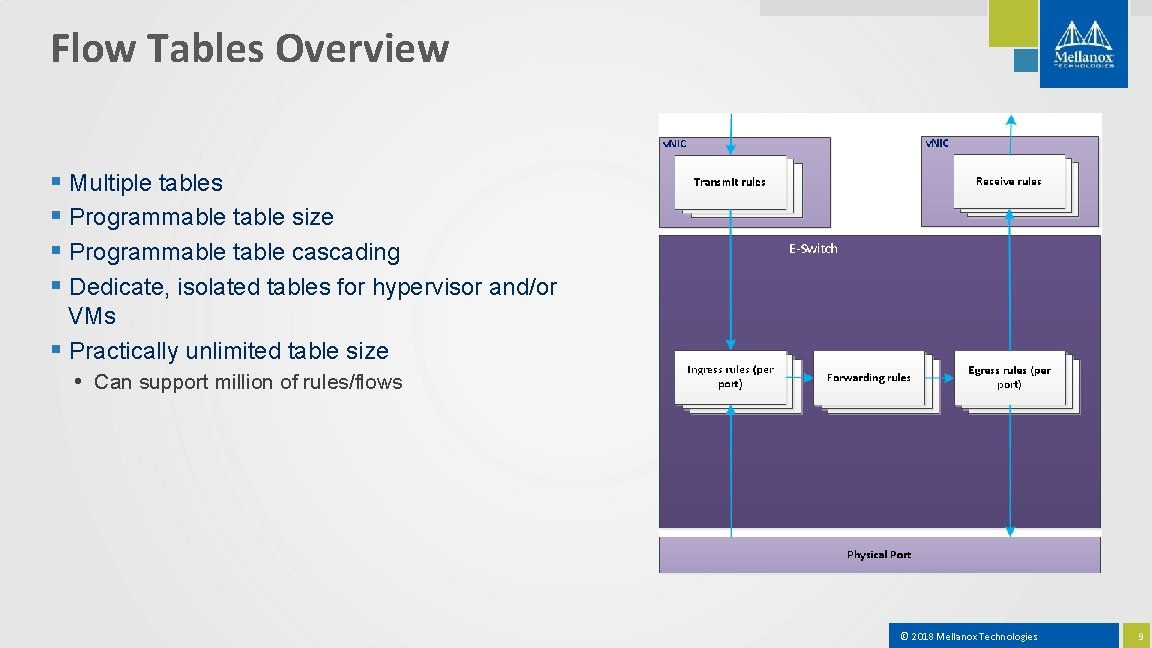

Flow Tables Overview

- Multiple tables
- Programmable table size
- Programmable table cascading
- Dedicated, isolated tables for the hypervisor and/or VMs
- Practically unlimited table size
  - Can support millions of rules/flows
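Programmable table cascading means a rule in one table can, instead of a terminal action, send the packet on to another table (for example, a root table steering traffic into a per-VM table). A minimal software model of that lookup chain, assuming hypothetical `struct flow_table` and `lookup_chain` names (not a Mellanox API) and a hop limit to guard against loops; for brevity every table matches on the same key, whereas real tables usually match different fields.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum entry_kind { ENTRY_GOTO, ENTRY_TERMINAL };

struct table_entry {
    uint32_t key;
    enum entry_kind kind;
    int value;              /* next table index (GOTO) or action ID (TERMINAL) */
};

struct flow_table {
    const struct table_entry *entries;
    size_t n;
    int miss_action;        /* action when no entry in this table matches */
};

#define MAX_HOPS 8

/* Start at table 0 and follow GOTO entries until a terminal action or a
 * table miss. Returns the action ID, or -1 if the hop limit is exceeded. */
static int lookup_chain(const struct flow_table *tables, size_t ntables,
                        uint32_t key)
{
    size_t t = 0;
    for (int hop = 0; hop < MAX_HOPS; hop++) {
        assert(t < ntables);
        const struct flow_table *tbl = &tables[t];
        const struct table_entry *hit = NULL;
        for (size_t i = 0; i < tbl->n; i++)
            if (tbl->entries[i].key == key) {
                hit = &tbl->entries[i];
                break;
            }
        if (!hit)
            return tbl->miss_action;
        if (hit->kind == ENTRY_TERMINAL)
            return hit->value;
        t = (size_t)hit->value;
    }
    return -1;
}
```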

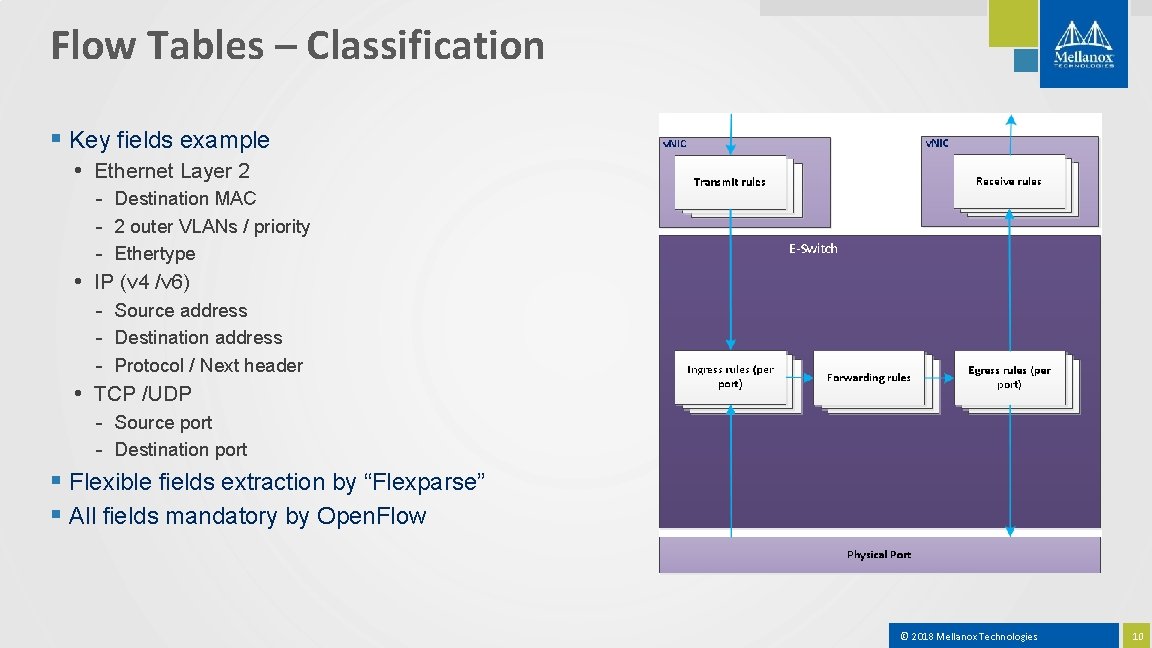

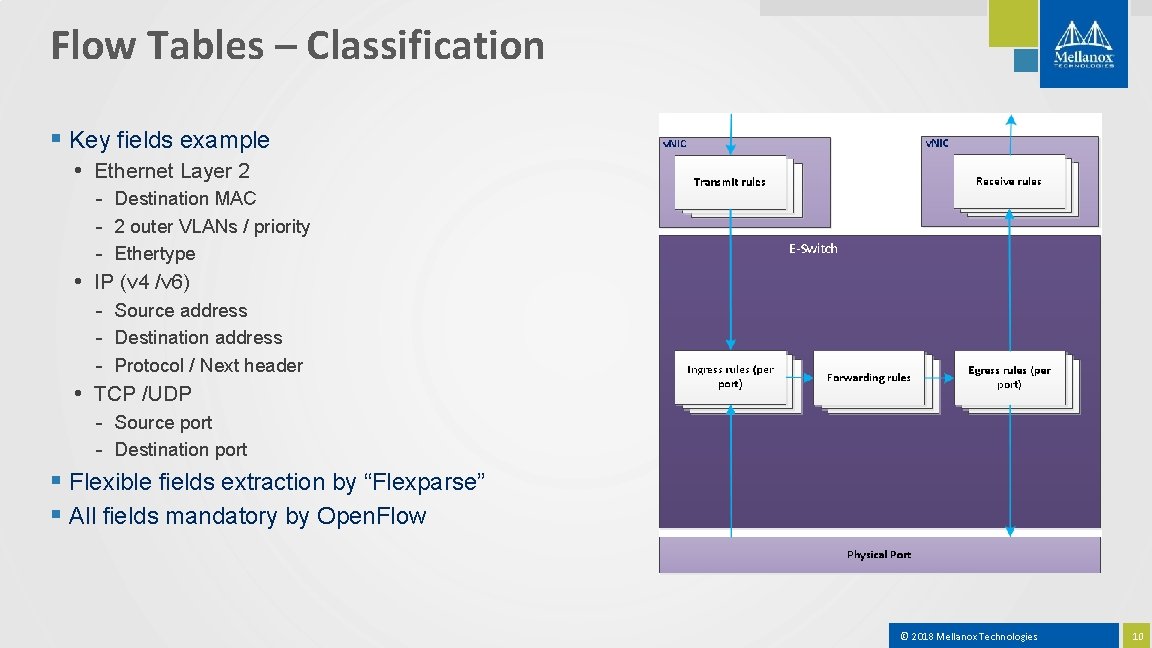

Flow Tables – Classification

- Key fields example:
  - Ethernet Layer 2
    - Destination MAC
    - 2 outer VLANs / priority
    - Ethertype
  - IP (v4/v6)
    - Source address
    - Destination address
    - Protocol / next header
  - TCP/UDP
    - Source port
    - Destination port
- Flexible field extraction by “Flexparse”
- All fields mandatory for OpenFlow
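The key fields above can be illustrated by a software parser that extracts them from a raw frame. This is a simplified sketch: it handles at most one 802.1Q tag (the slide lists two outer VLANs) and IPv4 only, and `struct flow_key` / `parse_key` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

struct flow_key {
    uint8_t  dst_mac[6];
    uint16_t vlan_id;           /* 0 if untagged */
    uint16_t ethertype;         /* EtherType after any VLAN tag */
    uint32_t src_ip, dst_ip;    /* host byte order */
    uint8_t  ip_proto;
    uint16_t src_port, dst_port;
};

static uint16_t rd16(const uint8_t *p) { return (uint16_t)(p[0] << 8 | p[1]); }
static uint32_t rd32(const uint8_t *p)
{
    return (uint32_t)p[0] << 24 | (uint32_t)p[1] << 16 |
           (uint32_t)p[2] << 8 | p[3];
}

/* Fill `k` from frame `f`. Returns 0 on success, -1 if the frame is
 * truncated or not IPv4. */
static int parse_key(const uint8_t *f, size_t len, struct flow_key *k)
{
    memset(k, 0, sizeof *k);
    if (len < 14) return -1;
    memcpy(k->dst_mac, f, 6);
    size_t off = 12;
    uint16_t et = rd16(f + off);
    if (et == 0x8100) {                         /* one 802.1Q tag */
        if (len < 18) return -1;
        k->vlan_id = rd16(f + 14) & 0x0FFF;
        off += 4;
        et = rd16(f + off);
    }
    k->ethertype = et;
    off += 2;                                   /* start of the IP header */
    if (et != 0x0800 || len < off + 20) return -1;
    const uint8_t *ip = f + off;
    size_t ihl = (size_t)(ip[0] & 0x0F) * 4;    /* IPv4 header length */
    if (ihl < 20 || len < off + ihl) return -1;
    k->ip_proto = ip[9];
    k->src_ip = rd32(ip + 12);
    k->dst_ip = rd32(ip + 16);
    if (k->ip_proto == 6 || k->ip_proto == 17) {   /* TCP or UDP */
        if (len < off + ihl + 4) return -1;
        k->src_port = rd16(ip + ihl);
        k->dst_port = rd16(ip + ihl + 2);
    }
    return 0;
}
```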

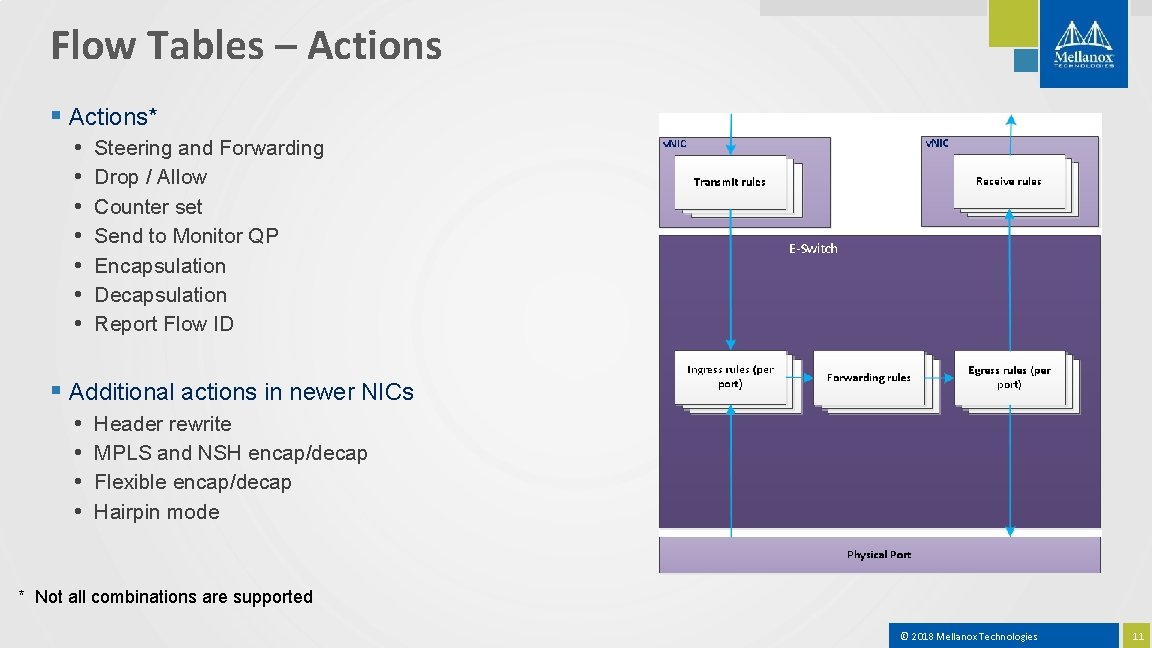

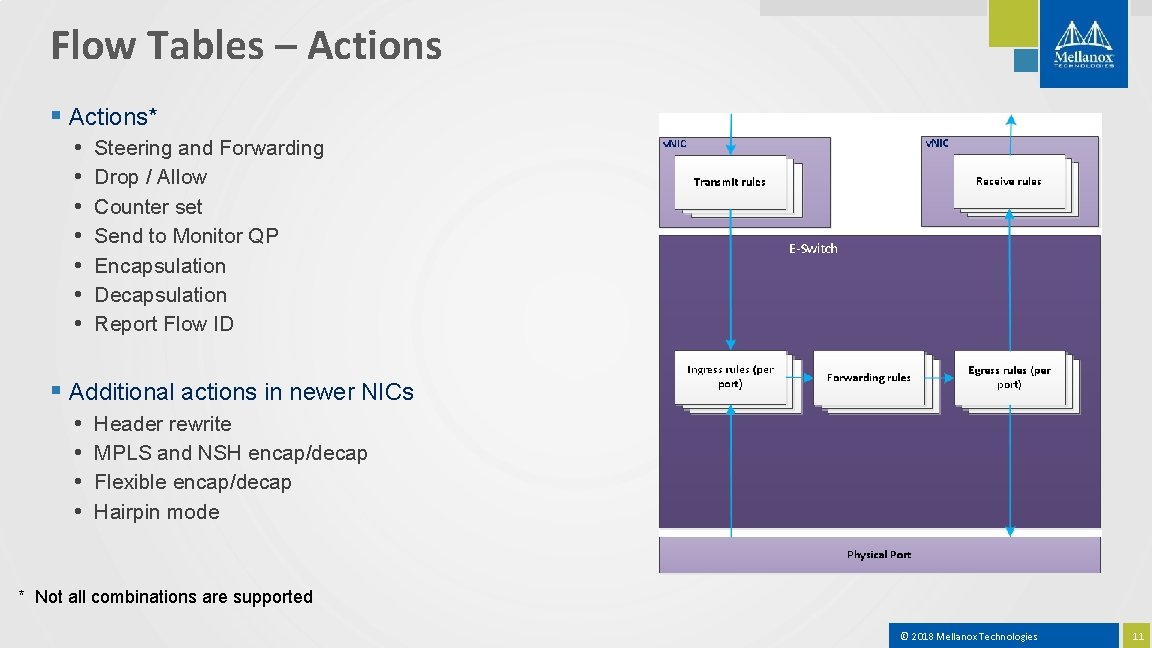

Flow Tables – Actions

- Actions*:
  - Steering and forwarding
  - Drop / allow
  - Counter set
  - Send to monitor QP
  - Encapsulation
  - Decapsulation
  - Report flow ID
- Additional actions in newer NICs:
  - Header rewrite
  - MPLS and NSH encap/decap
  - Flexible encap/decap
  - Hairpin mode

\* Not all combinations are supported.
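Encapsulation and decapsulation are among the listed actions. For VXLAN (RFC 7348), encapsulation prepends outer Ethernet/IP/UDP headers (UDP destination port 4789) plus an 8-byte VXLAN header carrying a 24-bit VNI. A minimal sketch of building and parsing just the VXLAN header; the helper names are hypothetical and the outer headers are omitted.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define VXLAN_HDR_LEN   8
#define VXLAN_UDP_PORT  4789   /* IANA-assigned UDP destination port */
#define VXLAN_FLAG_I    0x08   /* "VNI is valid" flag */

/* Encap side: write an 8-byte VXLAN header for `vni` into `hdr`.
 * Returns 0 on success, -1 if the VNI does not fit in 24 bits. */
static int vxlan_build_header(uint8_t hdr[VXLAN_HDR_LEN], uint32_t vni)
{
    if (vni > 0xFFFFFF) return -1;
    memset(hdr, 0, VXLAN_HDR_LEN);   /* reserved fields must be zero */
    hdr[0] = VXLAN_FLAG_I;
    hdr[4] = (uint8_t)(vni >> 16);
    hdr[5] = (uint8_t)(vni >> 8);
    hdr[6] = (uint8_t)vni;
    return 0;
}

/* Decap side: return the VNI, or -1 if the I flag is not set. */
static int64_t vxlan_parse_vni(const uint8_t hdr[VXLAN_HDR_LEN])
{
    if (!(hdr[0] & VXLAN_FLAG_I)) return -1;
    return (int64_t)hdr[4] << 16 | (int64_t)hdr[5] << 8 | hdr[6];
}
```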

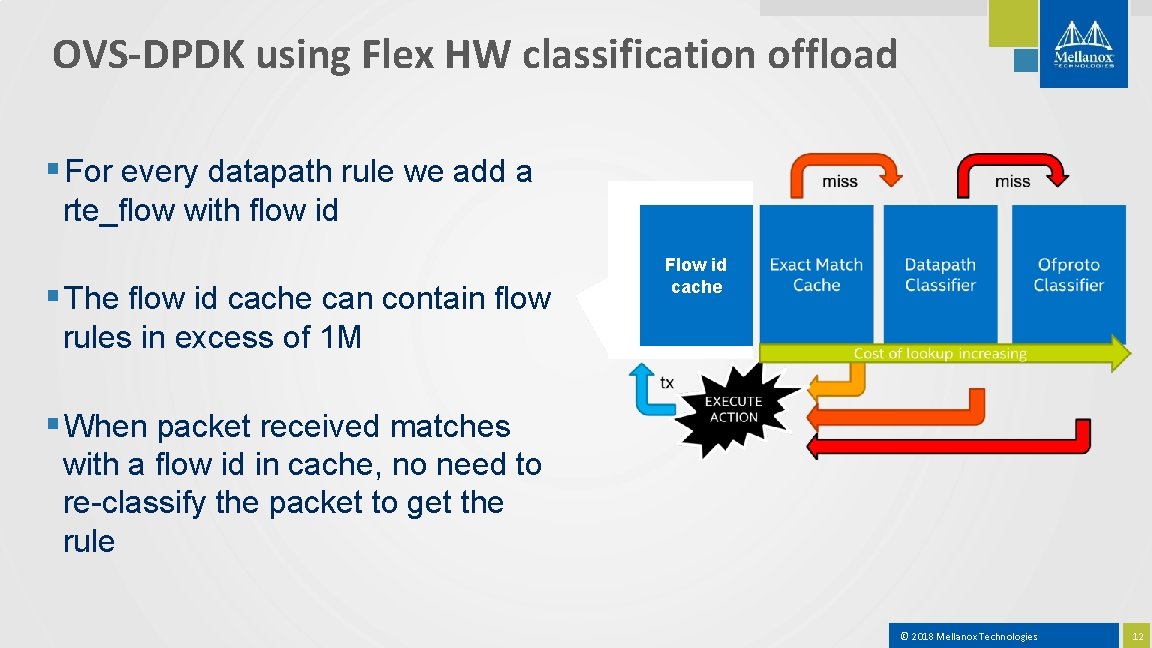

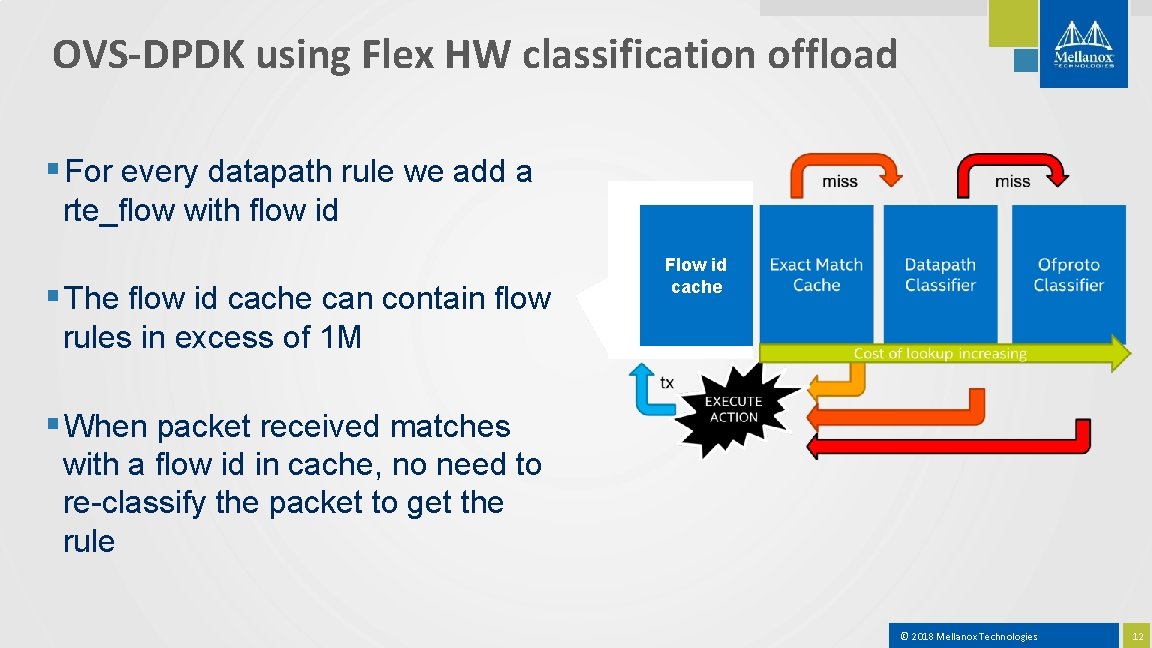

OVS-DPDK using Flex HW classification offload

- For every datapath rule, we add an rte_flow rule with a flow ID.
- The flow ID cache can contain in excess of 1M flow rules.
- When a received packet matches a flow ID in the cache, there is no need to re-classify the packet to get the rule.
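On the OVS side, the flow ID cache maps the ID reported by the NIC back to the datapath rule, so a hit skips the classifier. A minimal sketch under stated assumptions: IDs are small integers from a hypothetical bump allocator, used directly as array indices (a real implementation would have to size and recycle IDs for 1M+ rules), and `flow_id_cache`, `fid_alloc` and `fid_lookup` are illustrative names, not the OVS code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define FID_CAPACITY 1024        /* illustrative only */
#define FID_INVALID  UINT32_MAX

struct dp_rule { int action; };  /* stand-in for an OVS datapath rule */

/* Must be zero-initialized so unallocated IDs look up as NULL. */
struct flow_id_cache {
    const struct dp_rule *by_id[FID_CAPACITY];
    uint32_t next_id;
};

/* Register a datapath rule and return the flow ID to program into the NIC
 * (for example as the MARK value), or FID_INVALID if the cache is full. */
static uint32_t fid_alloc(struct flow_id_cache *c, const struct dp_rule *r)
{
    if (c->next_id >= FID_CAPACITY) return FID_INVALID;
    c->by_id[c->next_id] = r;
    return c->next_id++;
}

/* Map a received flow ID to its rule; NULL means "classify in software". */
static const struct dp_rule *fid_lookup(const struct flow_id_cache *c,
                                        int has_id, uint32_t id)
{
    if (!has_id || id >= FID_CAPACITY) return NULL;
    return c->by_id[id];
}
```

Indexing by ID makes a hit a single array load, which is where the savings over a full tuple-space lookup would come from.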

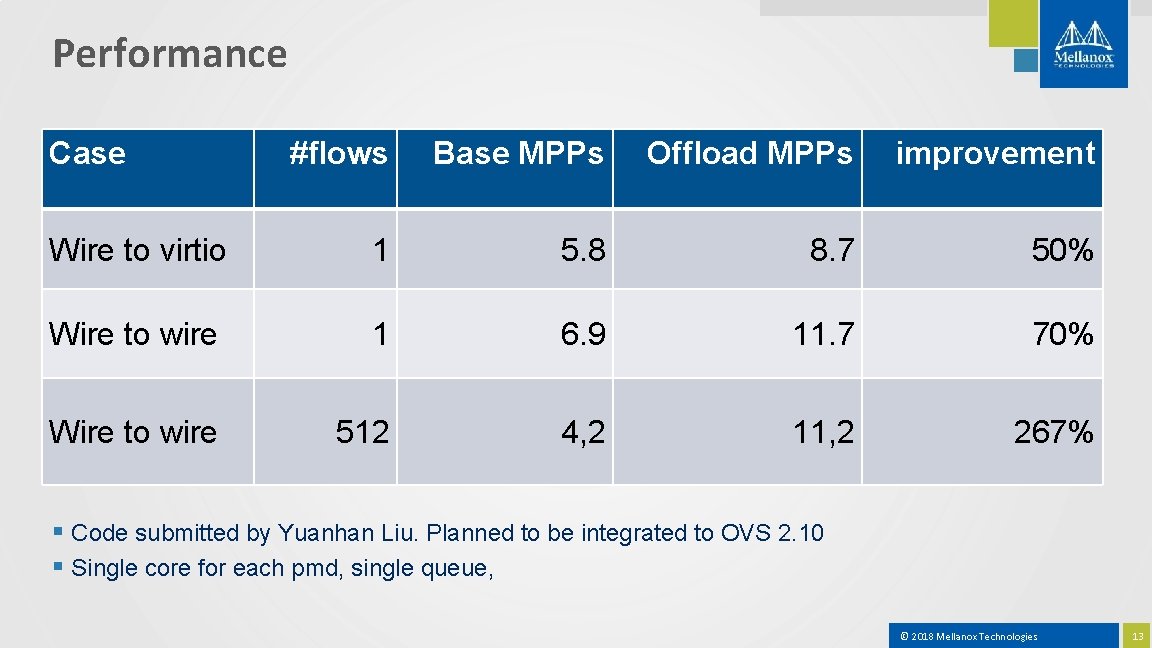

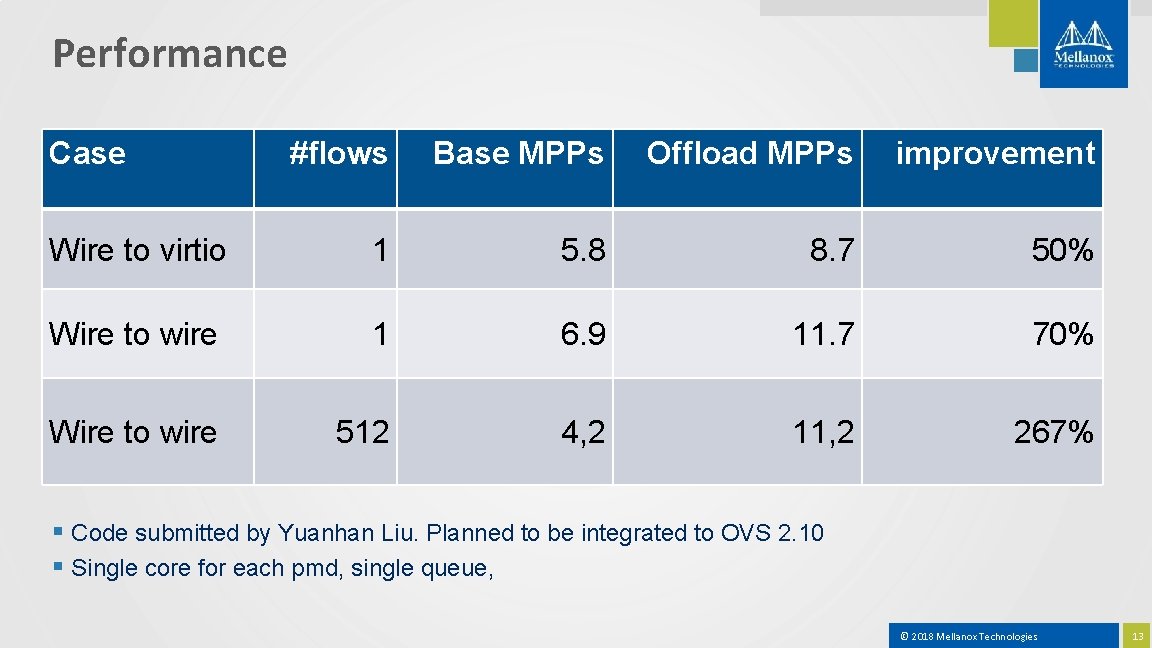

Performance

| Case | #flows | Base (Mpps) | Offload (Mpps) | Improvement |
|---|---|---|---|---|
| Wire to virtio | 1 | 5.8 | 8.7 | 50% |
| Wire to wire | 1 | 6.9 | 11.7 | 70% |
| Wire to wire | 512 | 4.2 | 11.2 | 167% (2.67x) |

- Code submitted by Yuanhan Liu; planned to be integrated into OVS 2.10.
- Single core for each PMD, single queue.
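The improvement column is the relative gain of the offload rate over the base rate, (offload − base) / base. A one-line helper reproduces it; for the 512-flow row, 4.2 to 11.2 Mpps is a 2.67x ratio, i.e. roughly a 167% improvement.

```c
#include <assert.h>

/* Relative improvement in percent: (offload - base) / base * 100. */
static double improvement_pct(double base_mpps, double offload_mpps)
{
    assert(base_mpps > 0.0);
    return (offload_mpps - base_mpps) / base_mpps * 100.0;
}
```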

Full OVS Offload (ASAP²-Direct)

Full HW Offload for vSwitch/vRouter acceleration

- Offload the whole packet processing onto the embedded switch
- Split the control plane and the forwarding plane
- Forwarding plane: use the embedded switch
- Remove the cost of the data plane in SW
- SR-IOV based

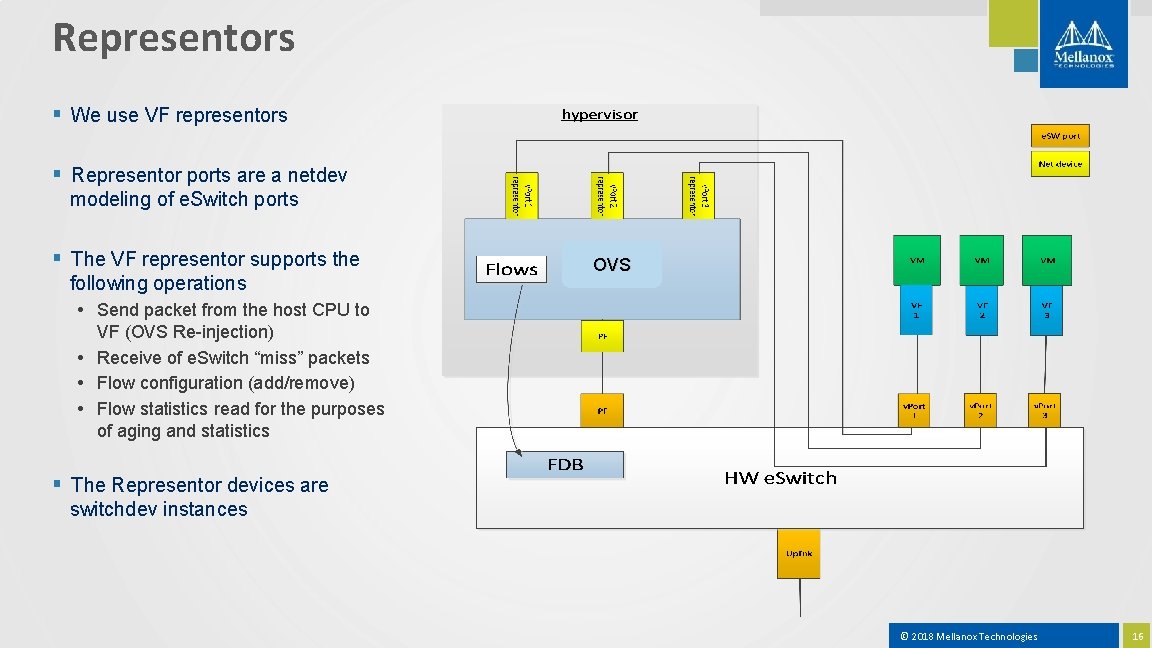

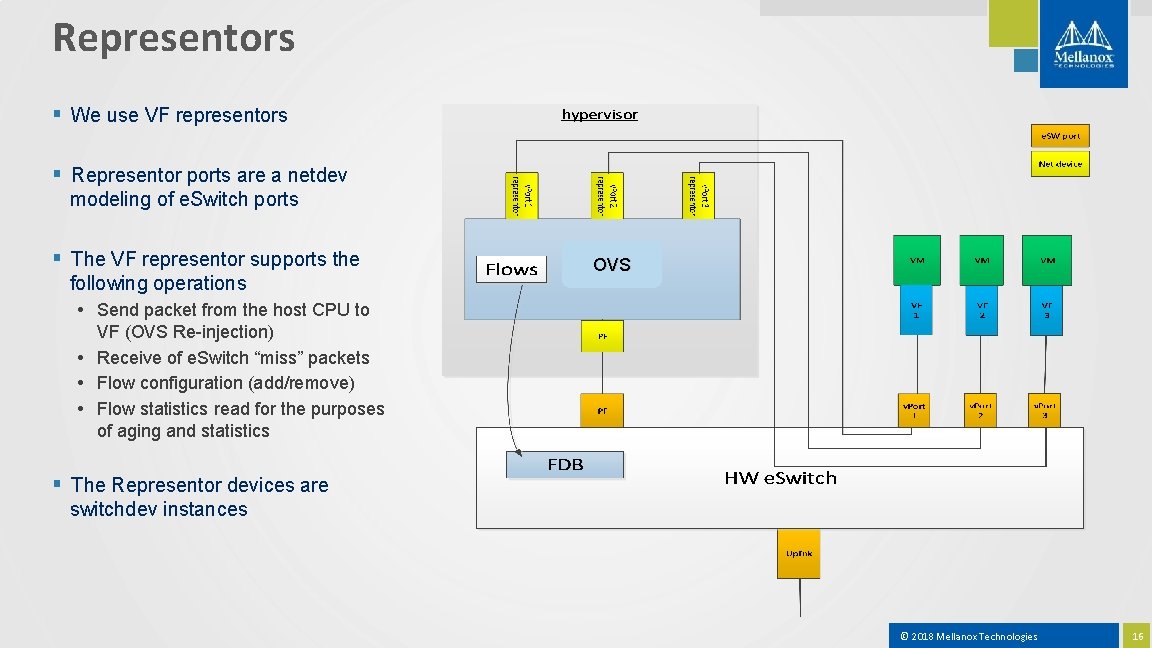

Representors

- We use VF representors.
- Representor ports are a netdev modeling of eSwitch ports.
- The VF representor supports the following operations:
  - Send packets from the host CPU to the VF (OVS re-injection)
  - Receive eSwitch “miss” packets
  - Flow configuration (add/remove)
  - Flow statistics read, for the purposes of aging and statistics
- The representor devices are switchdev instances.
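The miss-and-reinject cycle that representors enable can be modeled as follows: a packet that hits no eSwitch rule is delivered to OVS through the VF representor, OVS decides the action in software, installs the rule in the eSwitch and re-injects the packet, and later packets of the same flow are forwarded entirely in hardware. Everything here (`eswitch`, `eswitch_rx`, `ovs_handle_miss`, the port-selection policy) is a hypothetical plain-C model, not the switchdev or DPDK representor API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define ESW_MAX_RULES 64

struct esw_rule { uint32_t flow_key; int out_port; uint64_t packets; };

struct eswitch {
    struct esw_rule rules[ESW_MAX_RULES];
    size_t n;
    uint64_t misses;            /* packets sent up to the representor */
};

/* Hypothetical OVS slow-path policy: pick one of 4 ports from the key. */
static int ovs_decide(uint32_t flow_key) { return (int)(flow_key % 4); }

/* OVS handles a miss received on the representor: decide the action,
 * offload it as an eSwitch rule, and re-inject the packet. */
static int ovs_handle_miss(struct eswitch *esw, uint32_t flow_key)
{
    int port = ovs_decide(flow_key);
    if (esw->n < ESW_MAX_RULES)
        esw->rules[esw->n++] = (struct esw_rule){ flow_key, port, 0 };
    return port;                /* port the re-injected packet goes to */
}

/* Data path: a hit is forwarded in "hardware" and counted (the counters
 * OVS reads for aging and statistics); a miss goes to OVS. */
static int eswitch_rx(struct eswitch *esw, uint32_t flow_key)
{
    for (size_t i = 0; i < esw->n; i++)
        if (esw->rules[i].flow_key == flow_key) {
            esw->rules[i].packets++;
            return esw->rules[i].out_port;
        }
    esw->misses++;
    return ovs_handle_miss(esw, flow_key);
}
```

Only the first packet of a flow costs host CPU time; the per-rule packet counters are what let OVS age out idle offloaded flows.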

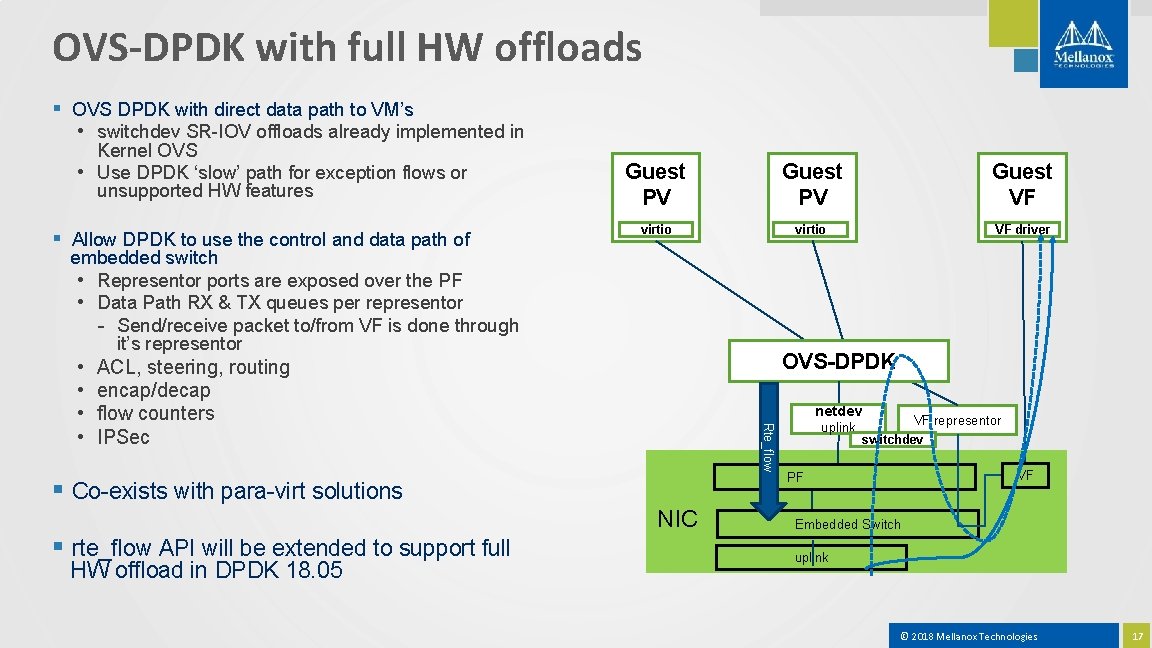

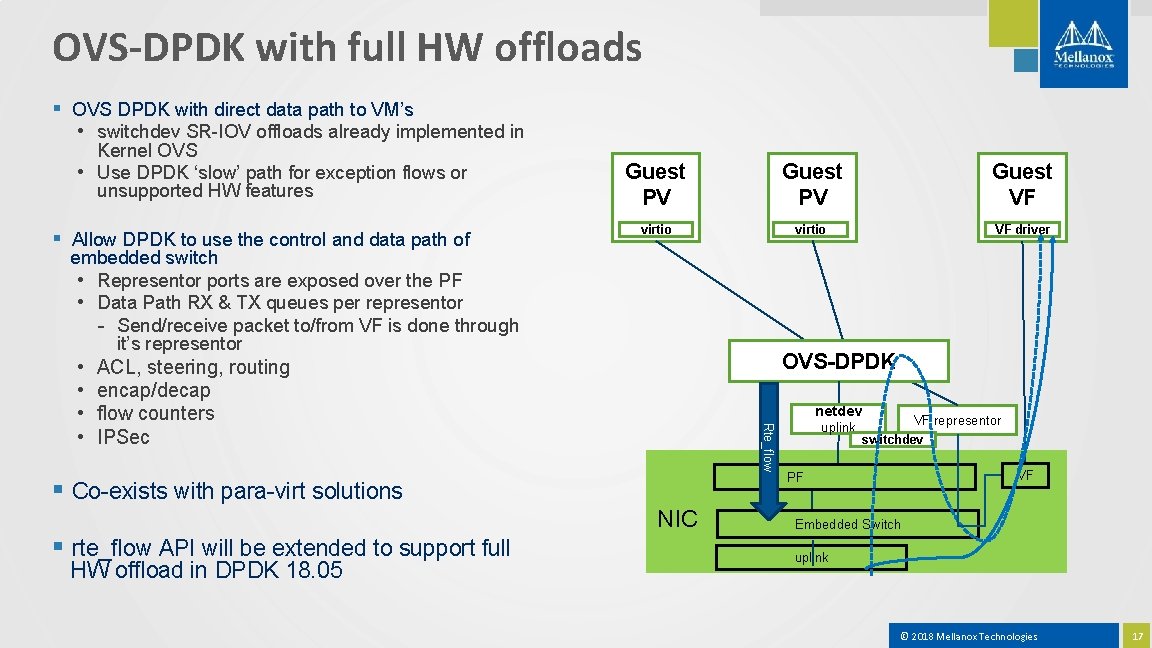

OVS-DPDK with full HW offloads

- OVS-DPDK with a direct data path to VMs
  - switchdev SR-IOV offloads are already implemented in kernel OVS
  - Use the DPDK ‘slow’ path for exception flows or unsupported HW features
- Allow DPDK to use the control and data path of the embedded switch
  - Representor ports are exposed over the PF
  - Data path RX & TX queues per representor: sending/receiving packets to/from a VF is done through its representor
- Co-exists with para-virtualized solutions
- The rte_flow API will be extended to support full HW offload in DPDK 18.05

[Figure: a PV guest (virtio) and an SR-IOV guest (VF driver); OVS-DPDK using netdev and rte_flow over the uplink and VF representors; NIC embedded switch (switchdev) with ACL, steering, routing, encap/decap, flow counters and IPsec; PF, VFs and uplink]

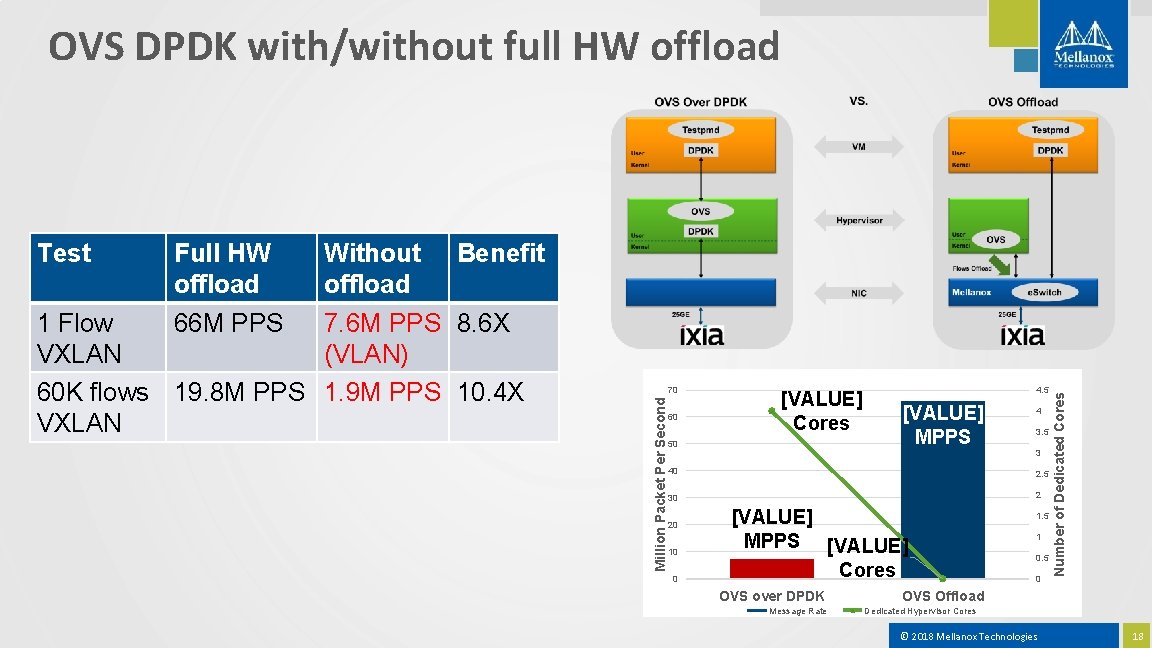

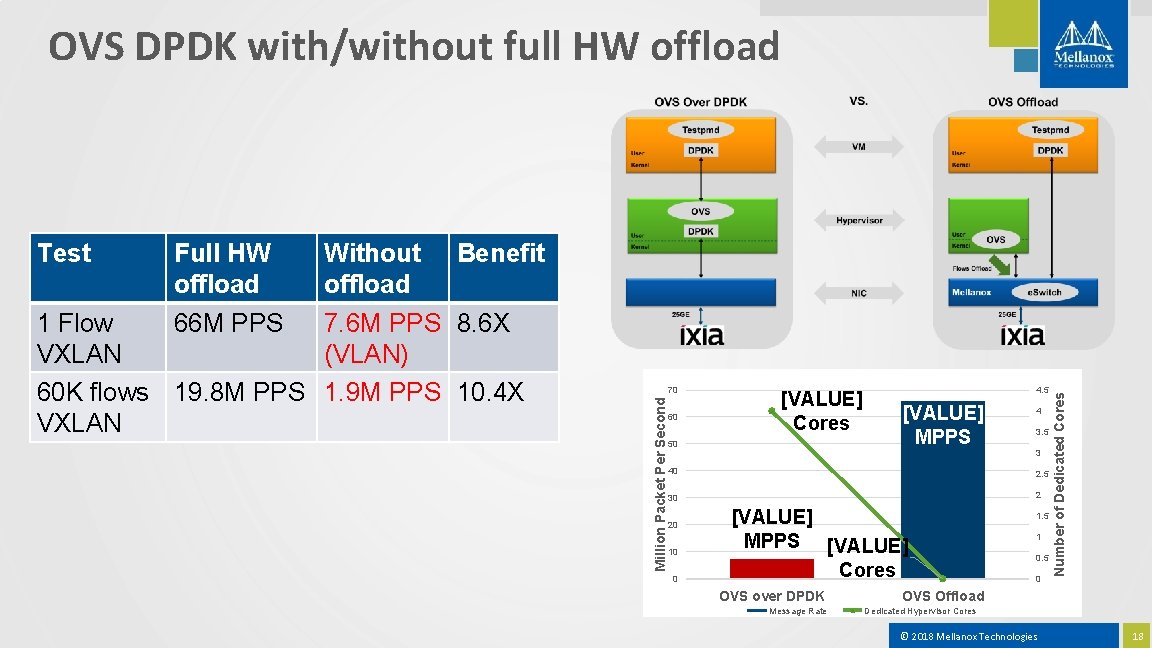

OVS DPDK with/without full HW offload

| Test | Encapsulation | Full HW offload | Without offload | Benefit |
|---|---|---|---|---|
| 1 flow | VXLAN (VLAN) | 66 Mpps | 7.6 Mpps | 8.6x |
| 60K flows | VXLAN | 19.8 Mpps | 1.9 Mpps | 10.4x |

[Chart: OVS over DPDK vs. OVS offload, message rate (million packets per second) and number of dedicated hypervisor cores; values not recoverable]

Thank You