NERSC PVP Roadmap Jonathan Carter NERSC User Services

- Slides: 18

NERSC PVP Roadmap. Jonathan Carter, NERSC User Services, jcarter@nersc.gov, 510 486-7514. Sherwood Fusion Conference

NERSC
- The Department of Energy, Office of Science Supercomputer Facility
- Unclassified, open facility serving over 2000 users in all scientific disciplines relevant to the DOE mission
- 25th anniversary in 1999

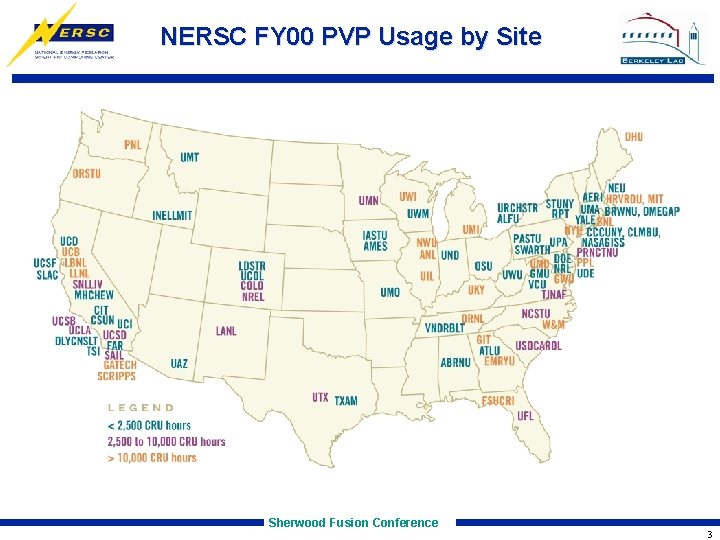

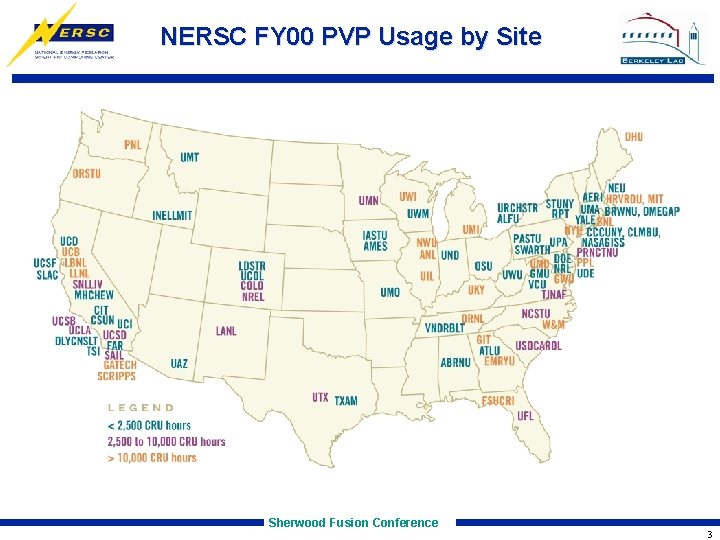

NERSC FY00 PVP Usage by Site

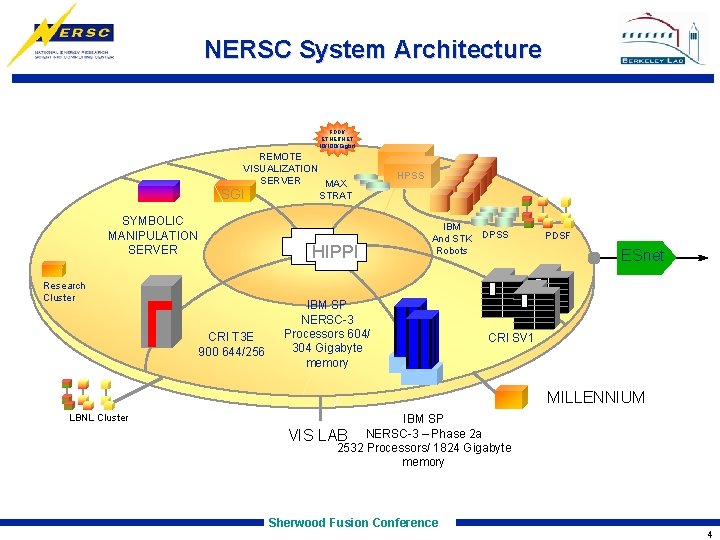

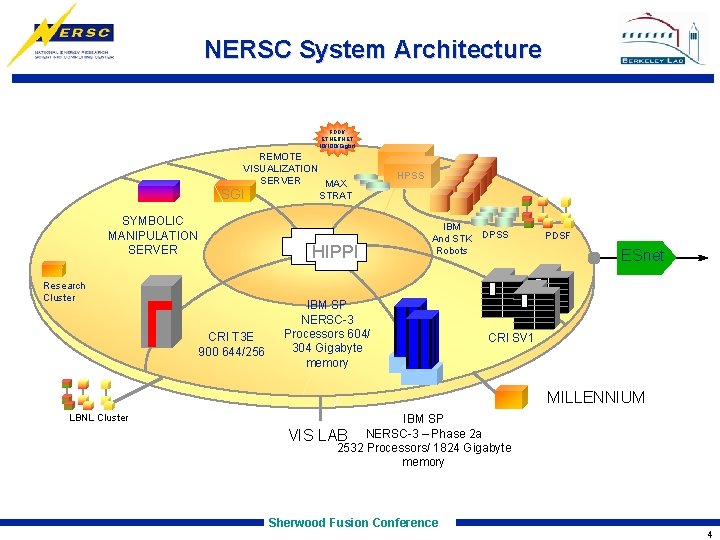

NERSC System Architecture (diagram; labels recovered from the figure)
- Networks: FDDI/Ethernet 10/100/Gigabit, HIPPI, ESnet
- IBM SP NERSC-3: 604 processors / 304 gigabytes memory
- IBM SP NERSC-3 Phase 2a: 2532 processors / 1824 gigabytes memory
- CRI T3E 900 644/256
- CRI SV1
- HPSS with IBM and STK robots
- DPSS, PDSF
- Research Cluster, Millennium, LBNL Cluster
- Remote visualization server, symbolic manipulation server, Vis Lab
- MAX SGI STRAT

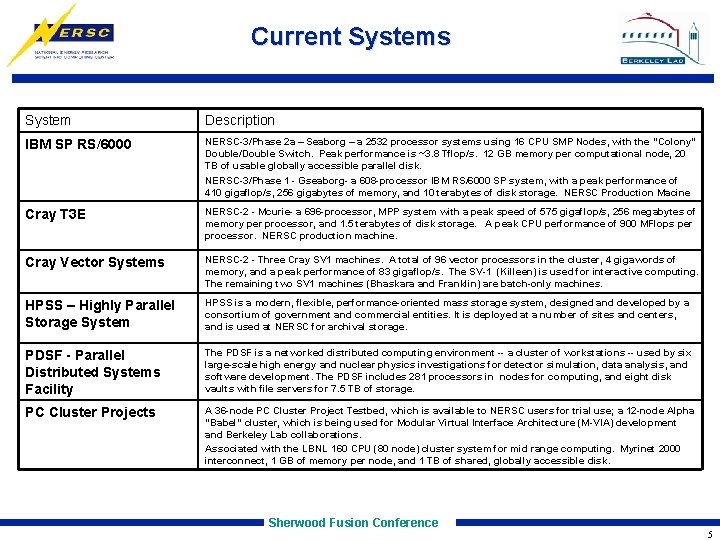

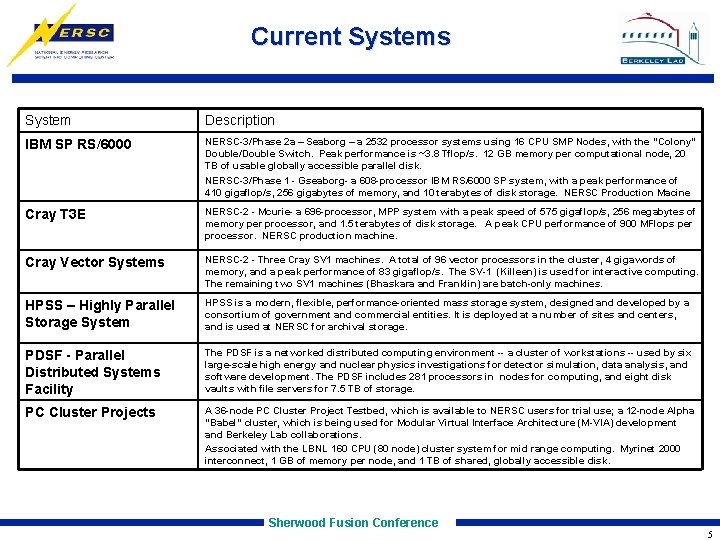

Current Systems
- IBM SP RS/6000
  - NERSC-3/Phase 2a (Seaborg): a 2532-processor system using 16-CPU SMP nodes, with the “Colony” Double/Double switch. Peak performance is ~3.8 Tflop/s. 12 GB of memory per computational node, and 20 TB of usable, globally accessible parallel disk.
  - NERSC-3/Phase 1 (Gseaborg): a 608-processor IBM RS/6000 SP system with a peak performance of 410 gigaflop/s, 256 gigabytes of memory, and 10 terabytes of disk storage. NERSC production machine.
- Cray T3E
  - NERSC-2 (Mcurie): a 696-processor MPP system with a peak speed of 575 gigaflop/s, 256 megabytes of memory per processor, and 1.5 terabytes of disk storage. Peak CPU performance is 900 Mflop/s per processor. NERSC production machine.
- Cray Vector Systems
  - NERSC-2: three Cray SV1 machines, with a total of 96 vector processors in the cluster, 4 gigawords of memory, and a peak performance of 83 gigaflop/s. One SV1 (Killeen) is used for interactive computing. The remaining two SV1 machines (Bhaskara and Franklin) are batch-only.
- HPSS (High Performance Storage System)
  - HPSS is a modern, flexible, performance-oriented mass storage system, designed and developed by a consortium of government and commercial entities. It is deployed at a number of sites and centers, and is used at NERSC for archival storage.
- PDSF (Parallel Distributed Systems Facility)
  - The PDSF is a networked distributed computing environment (a cluster of workstations) used by six large-scale high energy and nuclear physics investigations for detector simulation, data analysis, and software development. The PDSF includes 281 processors in compute nodes, and eight disk vaults with file servers providing 7.5 TB of storage.
- PC Cluster Projects
  - A 36-node PC Cluster Project Testbed, which is available to NERSC users for trial use, and a 12-node Alpha "Babel" cluster, which is being used for Modular Virtual Interface Architecture (M-VIA) development and Berkeley Lab collaborations.
  - Associated with the LBNL 160-CPU (80-node) cluster system for mid-range computing: Myrinet 2000 interconnect, 1 GB of memory per node, and 1 TB of shared, globally accessible disk.
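As a rough cross-check of the quoted figures, peak performance can be estimated as processors times clock rate times floating-point operations per cycle. The sketch below uses the 2532 processors and the 375 MHz Power3 clock given in these slides; the value of 4 flops per cycle per CPU is an assumption not stated in the slides, and the function and variable names are illustrative only. It also converts the SV1 cluster's 4 gigawords to gigabytes, taking a Cray word as 64 bits.

```python
def peak_flops(processors: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak = processors x clock rate x flops per cycle."""
    return processors * clock_hz * flops_per_cycle


# From the slides: 2532 processors, 375 MHz Power3 CPUs.
# Assumption (not in the slides): 4 flops per cycle per CPU.
seaborg_peak = peak_flops(2532, 375e6, 4)
print(f"Seaborg estimated peak: {seaborg_peak / 1e12:.2f} Tflop/s")

# A Cray word is 64 bits, i.e. 8 bytes.
CRAY_WORD_BYTES = 8
sv1_memory_gigawords = 4  # from the slides
sv1_memory_gb = sv1_memory_gigawords * CRAY_WORD_BYTES
print(f"SV1 cluster memory: {sv1_memory_gb} GB")
```

Under that assumption the estimate lands close to the ~3.8 Tflop/s quoted for Seaborg.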

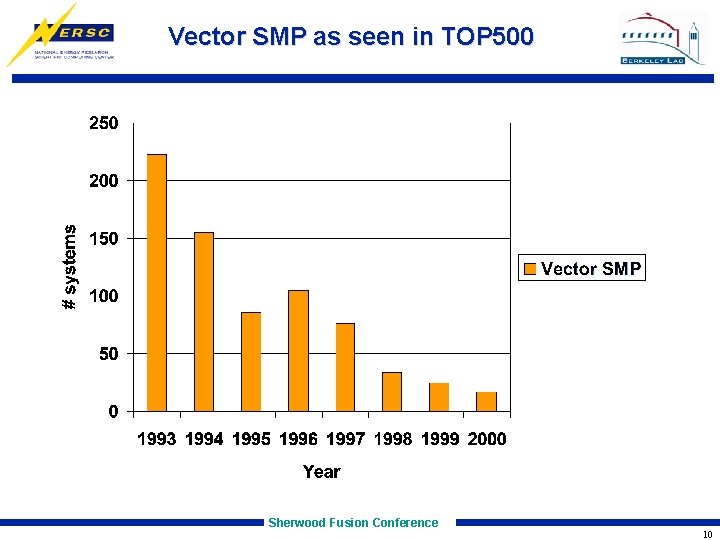

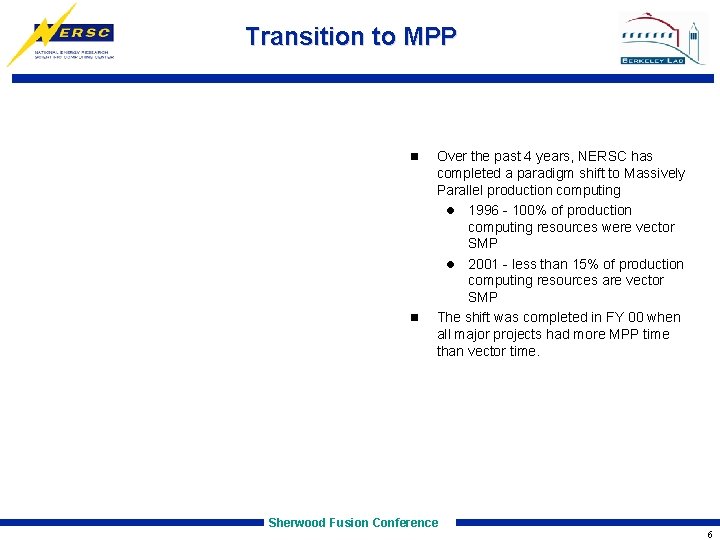

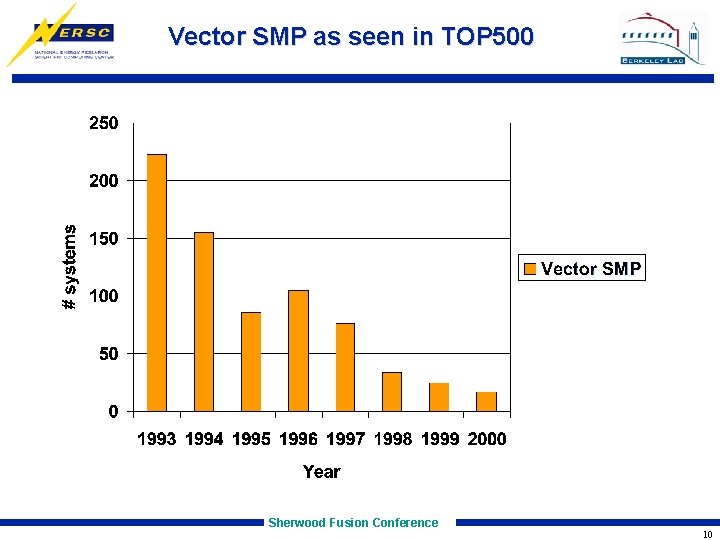

Transition to MPP
- Over the past 4 years, NERSC has completed a paradigm shift to massively parallel production computing
  - 1996: 100% of production computing resources were vector SMP
  - 2001: less than 15% of production computing resources are vector SMP
- The shift was completed in FY00, when all major projects had more MPP time than vector time.

FY00 MPP Users/Usage by Discipline

FY00 PVP Users/Usage by Discipline

Vector SMP as seen in TOP500

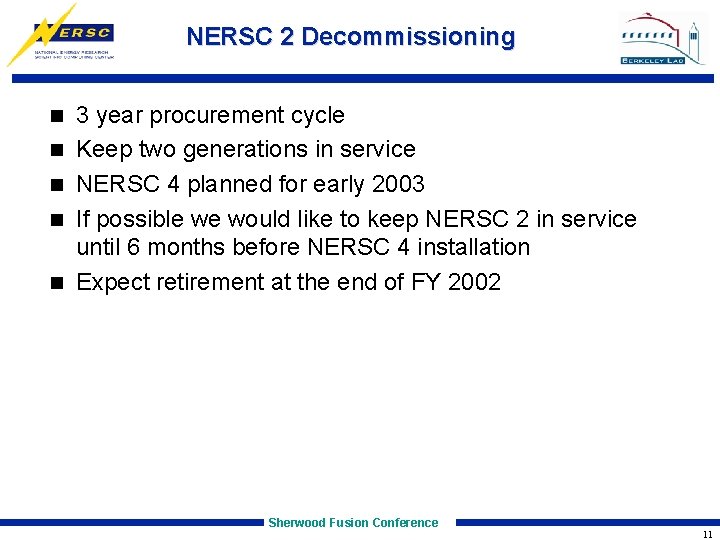

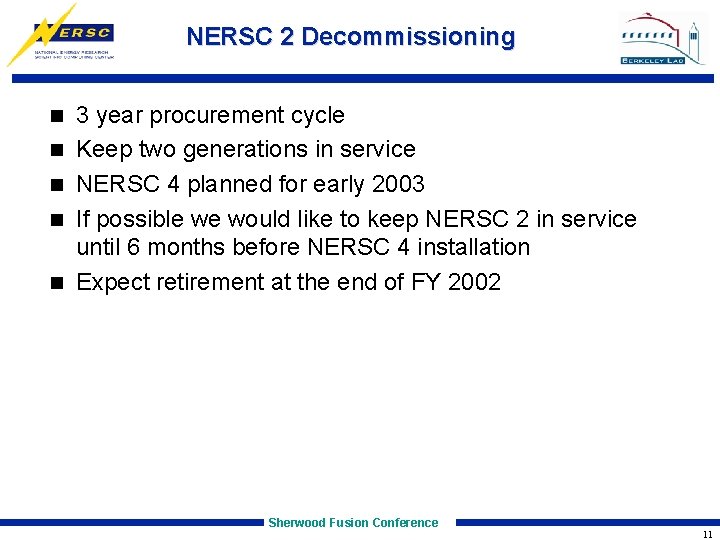

NERSC-2 Decommissioning
- 3-year procurement cycle
- Keep two generations in service
- NERSC-4 planned for early 2003
- If possible, we would like to keep NERSC-2 in service until 6 months before NERSC-4 installation
- Expect retirement at the end of FY 2002
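The timing above can be checked with simple date arithmetic. This is a minimal sketch: the installation date of 31 March 2003 is a hypothetical stand-in for "early 2003", and the helper names are illustrative. It relies on the US federal fiscal year ending on 30 September.

```python
import calendar
from datetime import date


def fiscal_year_end(fy: int) -> date:
    """US federal fiscal year N ends on 30 September of calendar year N."""
    return date(fy, 9, 30)


def shift_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping the day to the month's length."""
    index = d.year * 12 + (d.month - 1) + months
    year, month0 = divmod(index, 12)
    month = month0 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


# Hypothetical installation date standing in for "early 2003".
nersc4_install = date(2003, 3, 31)
retire_by = shift_months(nersc4_install, -6)

print("Six months before installation:", retire_by)
print("End of FY 2002:               ", fiscal_year_end(2002))
```

With that assumed date, six months before installation coincides with the end of FY 2002.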

Cray PVP Systems
- The line of UNICOS PVP machines that started with the X-MP is coming to an end with the SV1ex
- SV2 inherits more of the UNICOS/mk and IRIX architecture than the UNICOS architecture
- NERSC is actively involved in providing requirements for the SV2

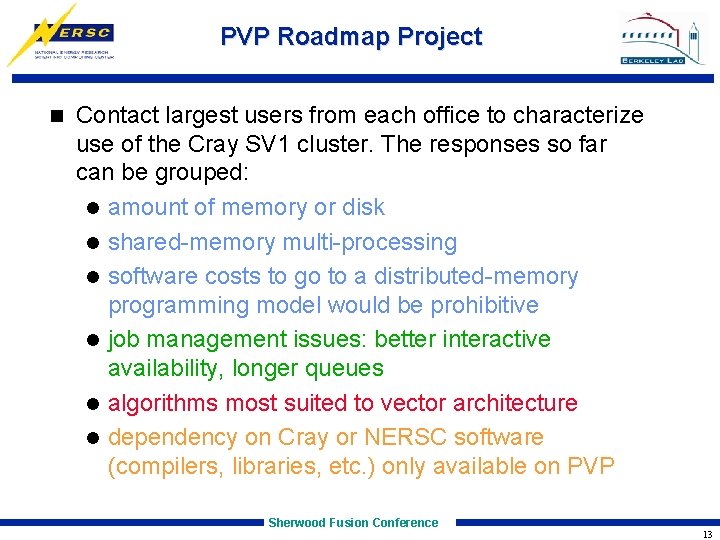

PVP Roadmap Project
- Contact the largest users from each office to characterize their use of the Cray SV1 cluster. The responses so far can be grouped:
  - amount of memory or disk
  - shared-memory multi-processing
  - software costs to go to a distributed-memory programming model would be prohibitive
  - job management issues: better interactive availability, longer queues
  - algorithms most suited to vector architecture
  - dependency on Cray or NERSC software (compilers, libraries, etc.) only available on PVP

SMP Clusters
- A possible solution to the first three user concerns (memory or disk, shared-memory multi-processing, cost of moving to distributed memory) is to use individual nodes of an SMP cluster as a replacement
- Upcoming technology equals or exceeds the memory and disk available on the Cray SV1 on a per-node basis
- The Phase 2a IBM SP has 12 GB of memory and 16 375 MHz Power3 CPUs per node
- We intend to experiment with ‘PVP-like’ jobs on certain nodes of the new SP

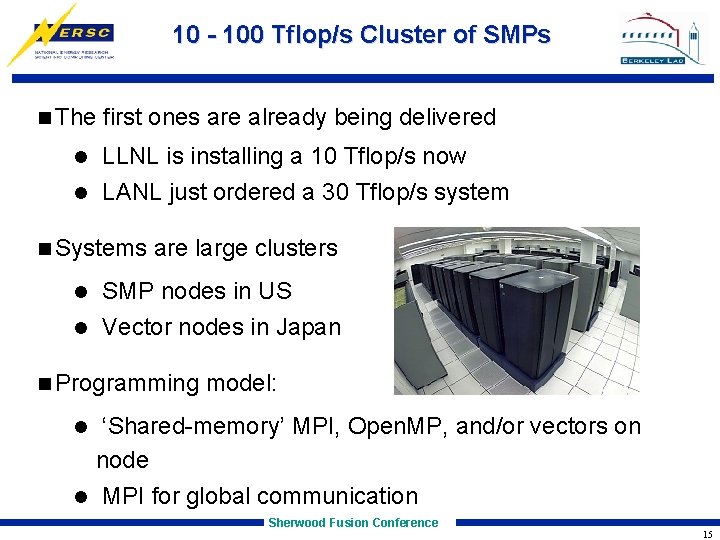

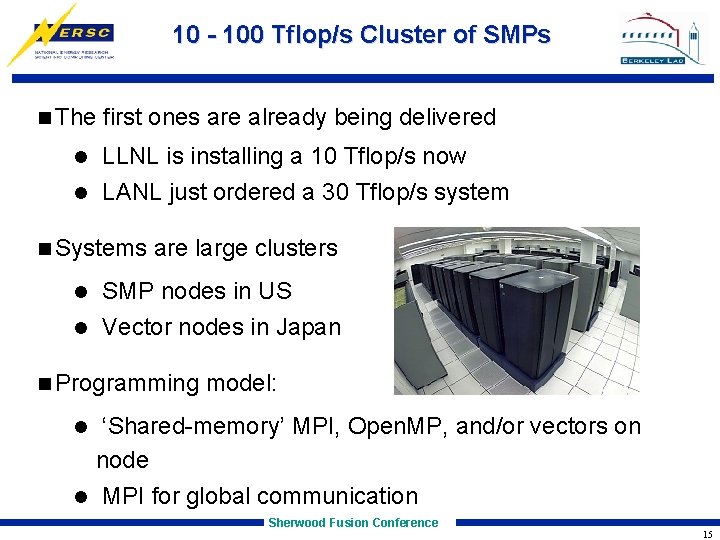

10-100 Tflop/s Cluster of SMPs
- The first ones are already being delivered
  - LLNL is installing a 10 Tflop/s system now
  - LANL just ordered a 30 Tflop/s system
- Systems are large clusters
  - SMP nodes in the US
  - Vector nodes in Japan
- Programming model:
  - ‘Shared-memory’ MPI, OpenMP, and/or vectors on node
  - MPI for global communication
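The two-level programming model can be illustrated with a toy sketch. This is not MPI or OpenMP code: Python threads stand in for on-node shared-memory parallelism, and a plain loop over slices stands in for MPI ranks, with the final sum marking where a global reduction would go. All function names and the node and thread counts are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def split(length, parts):
    """Return `parts` contiguous (start, stop) ranges covering range(length)."""
    base, extra = divmod(length, parts)
    bounds, start = [], 0
    for i in range(parts):
        stop = start + base + (1 if i < extra else 0)
        bounds.append((start, stop))
        start = stop
    return bounds


def node_sum(data, start, stop, threads_per_node):
    """On-node level: threads share `data` and each sums its own sub-range."""
    chunks = [(start + a, start + b)
              for a, b in split(stop - start, threads_per_node)]
    with ThreadPoolExecutor(max_workers=threads_per_node) as pool:
        partials = pool.map(lambda r: sum(data[r[0]:r[1]]), chunks)
        return sum(partials)


def cluster_sum(data, nodes, threads_per_node):
    """Global level: each 'node' owns one slice of the data. Combining the
    per-node results is where a message-passing reduction would occur."""
    return sum(node_sum(data, a, b, threads_per_node)
               for a, b in split(len(data), nodes))


data = list(range(1000))
total = cluster_sum(data, nodes=4, threads_per_node=16)
print(total)
```

The point of the split is that only the per-node partial results cross node boundaries; everything inside `node_sum` works on memory the threads already share.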

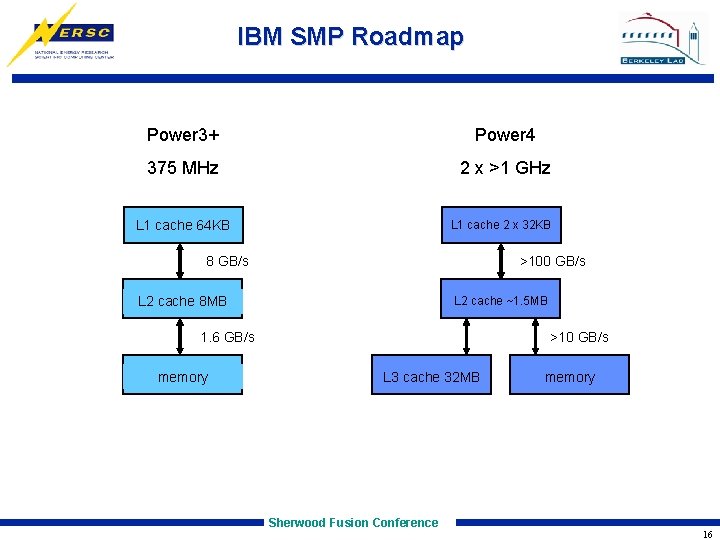

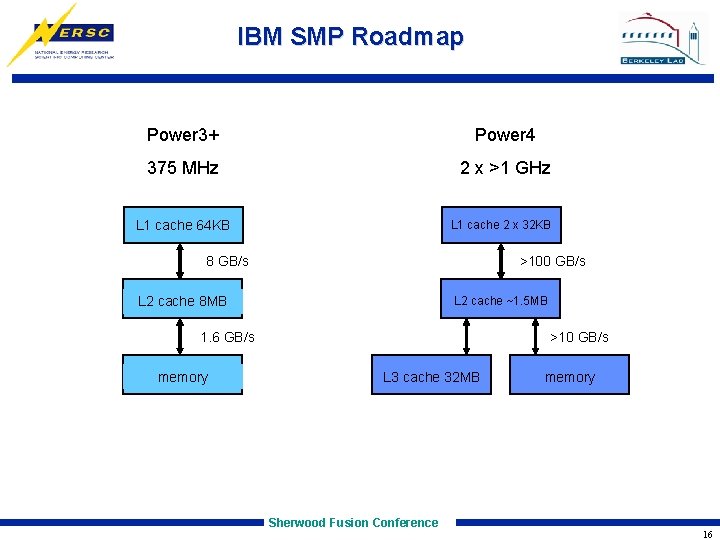

IBM SMP Roadmap (memory hierarchy diagram)
- Power3+: 375 MHz CPU, L1 cache 64 KB, 8 GB/s to L2 cache 8 MB, 1.6 GB/s to memory
- Power4: 2 x >1 GHz CPUs, L1 cache 2 x 32 KB, >100 GB/s to L2 cache ~1.5 MB, >10 GB/s to L3 cache 32 MB, then memory

Job Management
- MPP systems dictated a different style of management than PVP
- Due to the lack or overhead of gang-scheduling, and somewhat more instability:
  - queues are generally shorter,
  - interactive use is more limited
- With an SMP cluster, we could manage some nodes in a similar style to PVP

Vector Architecture
- The gap is closing between commodity processors and custom vector processors
- Undertake a short study to benchmark a selection of PVP codes, vector vs. latest scalar technology

Software Migration Issues
- Legacy software with Cray Fortran extensions, Cray library calls, or assembly language
  - some vendors provide compiler flags and wrappers to other libraries that mimic Cray
- UNICOS unformatted data
  - currently in discussions with IBM
- CTSS file conversion routines
- Unsupported libraries: tv80, graflib, baselib
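One part of the unformatted-data issue is the numeric representation itself. The sketch below assumes the data words use the classic Cray 64-bit floating-point layout (1 sign bit, 15-bit exponent biased by 16384, 48-bit mantissa with no hidden bit, big-endian byte order); the slides do not specify the format, and the record framing of UNICOS unformatted files is not handled here. Function names are illustrative.

```python
import math

CRAY_EXP_BIAS = 0o40000   # exponent bias of 16384 (assumed Cray format)
MANTISSA_BITS = 48


def cray_word_to_float(word: int) -> float:
    """Decode one 64-bit word in the assumed Cray floating-point layout."""
    sign = (word >> 63) & 1
    exponent = (word >> MANTISSA_BITS) & 0x7FFF
    mantissa = word & ((1 << MANTISSA_BITS) - 1)
    if mantissa == 0:
        return 0.0
    # The mantissa is a fraction in [0, 1), so scale it down by 2**48.
    # math.ldexp raises OverflowError for exponents beyond the IEEE range.
    value = math.ldexp(mantissa, exponent - CRAY_EXP_BIAS - MANTISSA_BITS)
    return -value if sign else value


def cray_bytes_to_floats(raw: bytes) -> list:
    """Decode a buffer of big-endian 8-byte words into Python floats."""
    if len(raw) % 8:
        raise ValueError("buffer length must be a multiple of 8 bytes")
    return [cray_word_to_float(int.from_bytes(raw[i:i + 8], "big"))
            for i in range(0, len(raw), 8)]


# 1.0 is 0.5 * 2**1: exponent field 16385, top mantissa bit set.
one = (0o40001 << 48) | (1 << 47)
print(cray_bytes_to_floats(one.to_bytes(8, "big")))
```

A 48-bit mantissa fits exactly in an IEEE double, so precision is preserved; the wider Cray exponent range is the part that may not survive conversion.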