- Slides: 17

Multilayer Perceptron (MLP) Regression Andrew Marshall

Context

- In my project, microstructures are generated, and their 2-point statistics are correlated
- Structure-property linkages are developed via regression. Examples: linear regression, multivariate polynomial regression, GPR
- Artificial neural networks (ANNs) allow a computer to “learn” a problem by changing the importance assigned to each input as it obtains more information; ANNs may be used to work on regression problems

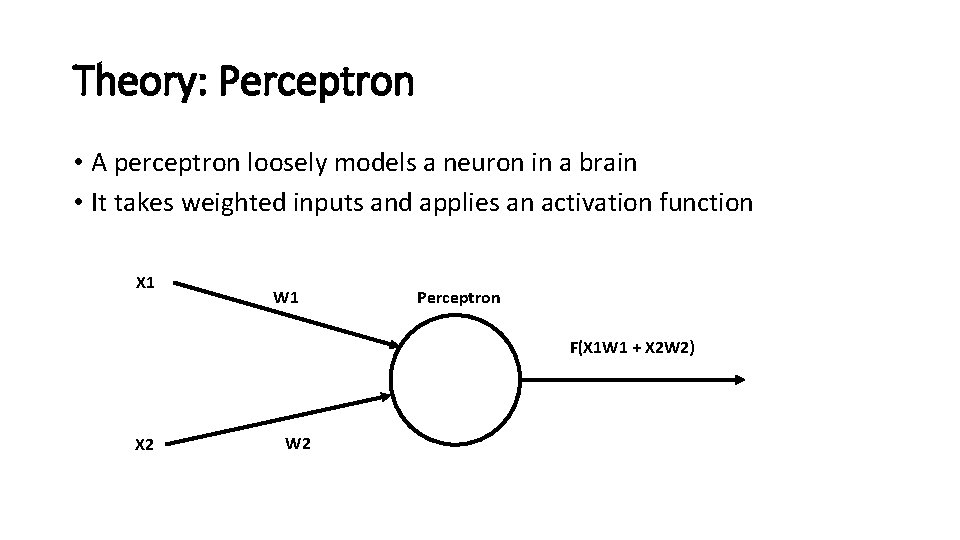

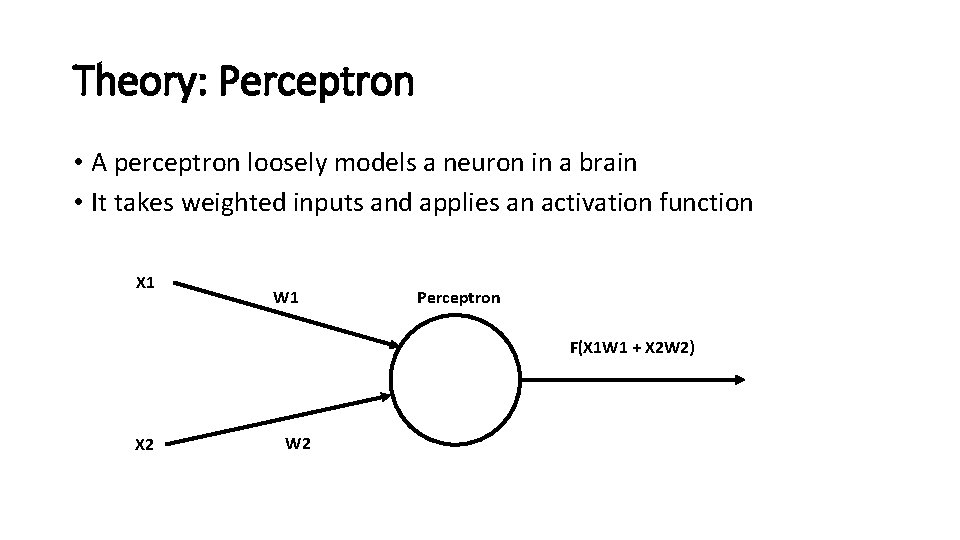

Theory: Perceptron

- A perceptron loosely models a neuron in a brain
- It takes weighted inputs and applies an activation function $F$
- Diagram: inputs $X_1$ and $X_2$, with weights $W_1$ and $W_2$, feed the perceptron, which outputs $F(X_1 W_1 + X_2 W_2)$
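A minimal sketch of the two-input perceptron on this slide, in plain Python. The slide does not say which activation function $F$ is meant, so the `step` function and the numeric inputs and weights here are illustrative assumptions only.

```python
import math


def perceptron(inputs, weights, activation):
    """Return F(X_1*W_1 + X_2*W_2 + ...) for a single perceptron."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return activation(weighted_sum)


def step(z):
    # Assumed choice of F: a hard threshold at zero.
    return 1.0 if z >= 0.0 else 0.0


# Illustrative values, not taken from the slides.
x = [1.0, 2.0]
w = [0.5, -0.5]

hard_output = perceptron(x, w, step)         # weighted sum is -0.5
smooth_output = perceptron(x, w, math.tanh)  # same sum, smooth activation
```

Swapping `step` for `math.tanh` changes only the activation; the weighted sum is the same in both calls.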

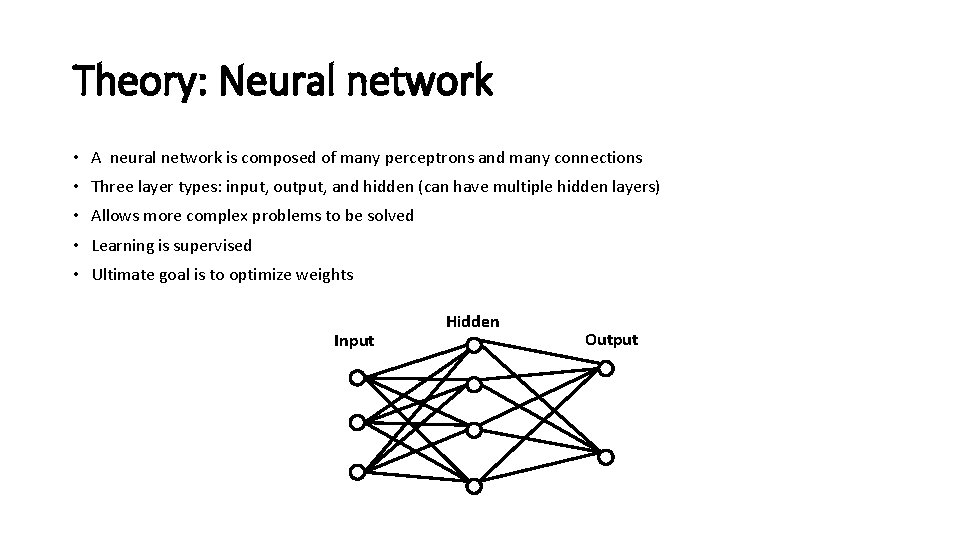

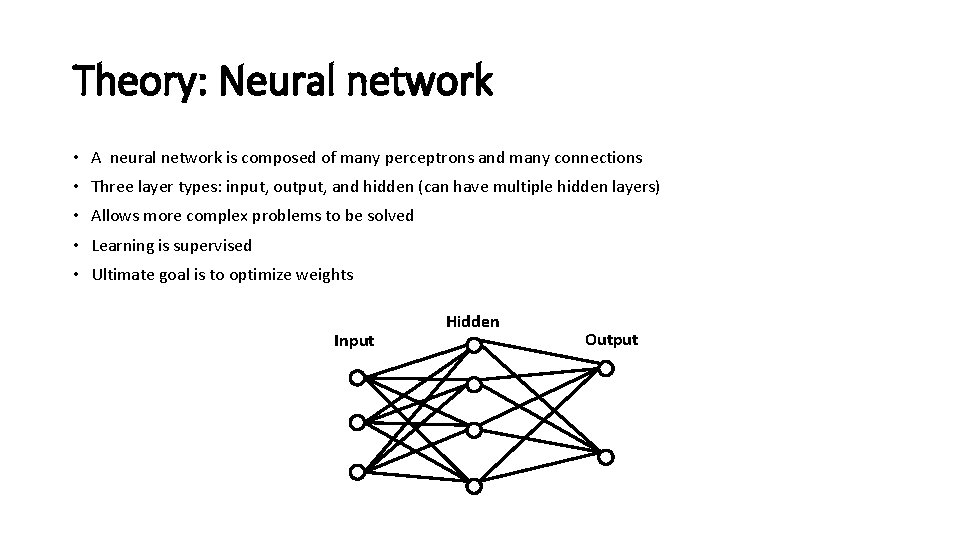

Theory: Neural network

- A neural network is composed of many perceptrons and many connections
- Three layer types: input, output, and hidden (can have multiple hidden layers)
- Allows more complex problems to be solved
- Learning is supervised
- Ultimate goal is to optimize weights
- Diagram: input layer, hidden layer, output layer
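The input, hidden, and output layers can be sketched as a forward pass in which each layer is a row of perceptrons. This is only an illustration: the bias terms, the `tanh` hidden activation, the identity output, and all weight values are assumptions that do not appear in the slides.

```python
import math


def layer_forward(inputs, weights, biases, activation):
    """One fully connected layer: one perceptron per row of `weights`."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def identity(z):
    # A regression output is usually left unsquashed (assumption here).
    return z


def mlp_forward(x, hidden_w, hidden_b, out_w, out_b):
    """Input -> hidden (tanh) -> output (identity)."""
    hidden = layer_forward(x, hidden_w, hidden_b, math.tanh)
    return layer_forward(hidden, out_w, out_b, identity)


# Illustrative network: 2 inputs, 3 hidden perceptrons, 1 output.
hidden_w = [[0.5, -0.5], [1.0, 1.0], [0.0, 0.0]]
hidden_b = [0.0, 0.0, 0.0]
out_w = [[1.0, 1.0, 1.0]]
out_b = [0.5]

prediction = mlp_forward([1.0, 1.0], hidden_w, hidden_b, out_w, out_b)
```

Training then amounts to choosing the entries of `hidden_w`, `hidden_b`, `out_w`, and `out_b` so that `prediction` matches the supervised targets.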

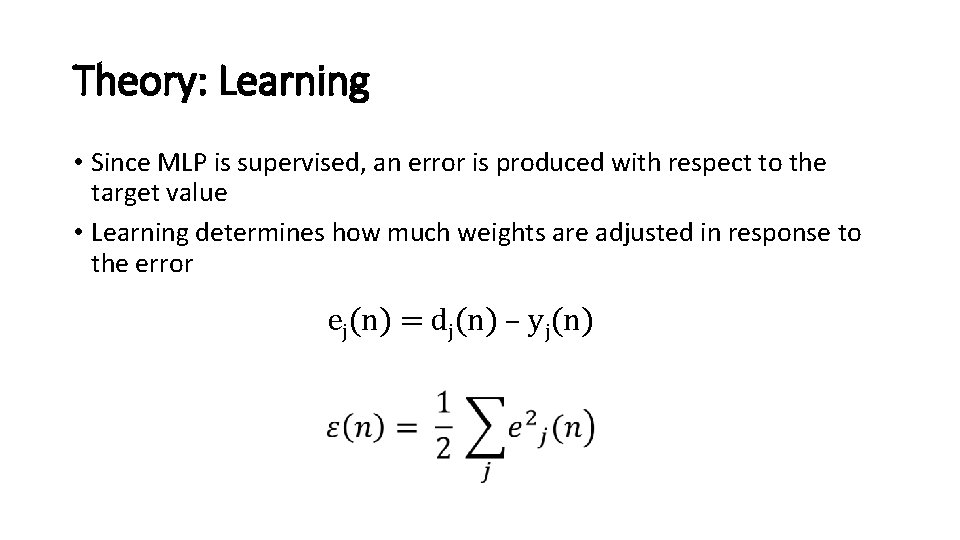

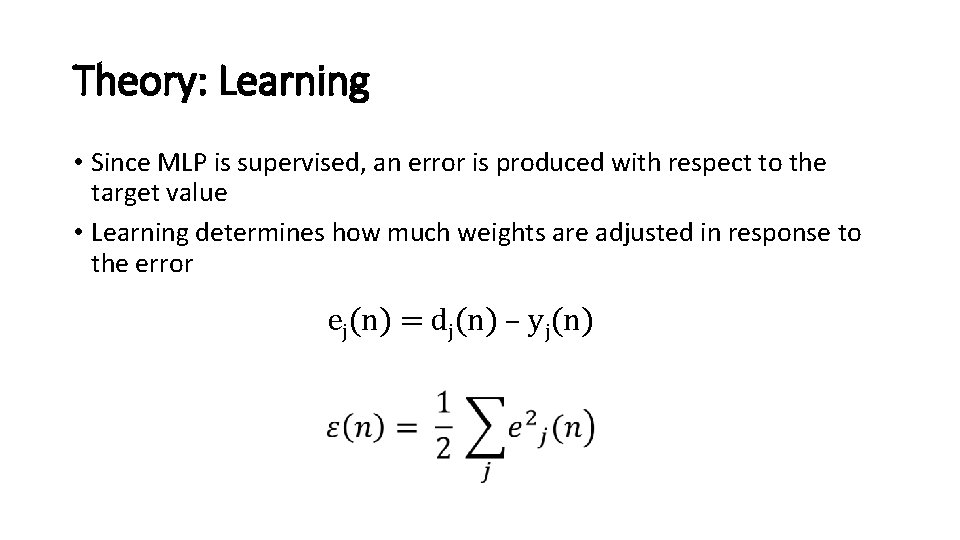

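Theory: Learning

- Since MLP is supervised, an error is produced with respect to the target value
- Learning determines how much the weights are adjusted in response to the error

$$e_j(n) = d_j(n) - y_j(n)$$

where $d_j(n)$ is the target value and $y_j(n)$ is the network output at output node $j$ for training sample $n$.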

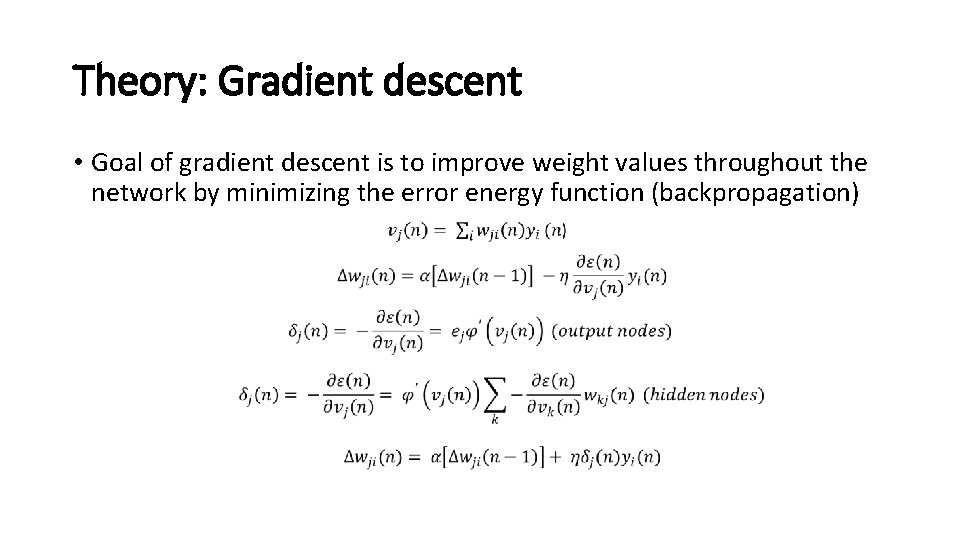

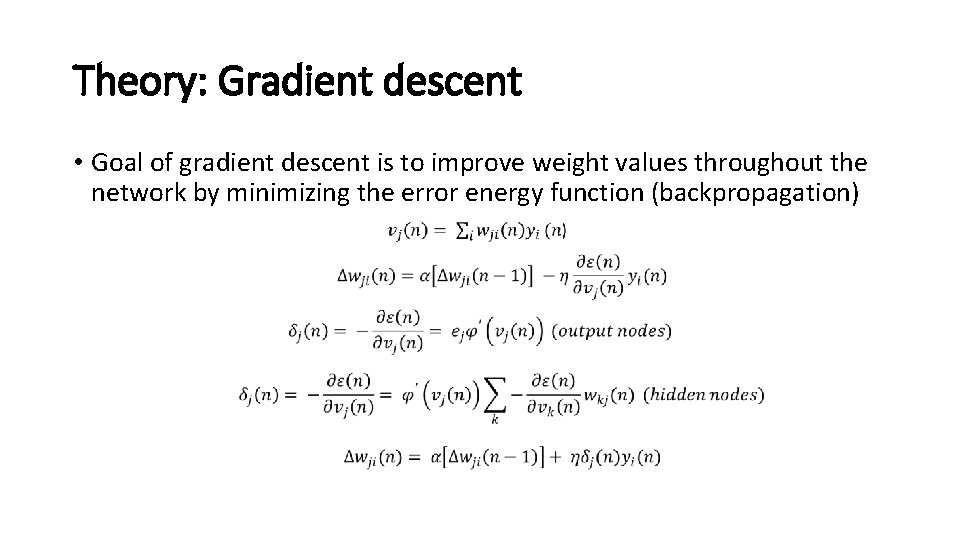

Theory: Gradient descent

- The goal of gradient descent is to improve the weight values throughout the network by minimizing the error energy function; backpropagation supplies the gradient of that function with respect to each weight
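A small sketch of gradient descent on a single linear neuron may help make the update concrete. It assumes the common error energy $E(n) = \tfrac{1}{2} e(n)^2$ with $e(n) = d(n) - y(n)$; the slide does not give the exact form, and the learning rate, epoch count, and training samples are made up for illustration. A full MLP would propagate the same kind of gradient backward through the hidden layers.

```python
def train_linear_neuron(samples, learning_rate=0.1, epochs=200):
    """Fit y = w_1*x_1 + w_2*x_2 by per-sample gradient descent."""
    weights = [0.0, 0.0]
    for _ in range(epochs):
        for inputs, target in samples:
            output = sum(x * w for x, w in zip(inputs, weights))
            error = target - output  # e(n) = d(n) - y(n)
            # With E = 0.5 * e^2, dE/dw_i = -e * x_i, so stepping against
            # the gradient adds learning_rate * e * x_i to each weight.
            weights = [
                w + learning_rate * error * x
                for w, x in zip(weights, inputs)
            ]
    return weights


# Illustrative targets generated from d = 2*x_1 - 3*x_2.
samples = [((1.0, 0.0), 2.0), ((0.0, 1.0), -3.0), ((1.0, 1.0), -1.0)]
weights = train_linear_neuron(samples)
```

Because the targets are exactly linear in the inputs, the learned weights approach 2 and -3 as the error shrinks toward zero.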

Function: `sklearn.neural_network.MLPRegressor`

- Python-based MLP tool used to solve regression problems
- Parameters:
  - Activation function
  - Solver
  - Learning rate
  - Regularization parameter
  - Many others, some of which only apply to certain solvers
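The parameters listed above map onto keyword arguments of `MLPRegressor`. The sketch below spells out what I understand to be the library defaults and fits a small synthetic dataset; the data, the 150/50 split, and the use of `random_state` as the "seed" are assumptions for illustration, not the author's setup.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

# Synthetic stand-in data (assumption): 200 samples, 3 predictors.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 3))
y = X[:, 0] - 2.0 * X[:, 1] + 0.5 * X[:, 2]

model = MLPRegressor(
    hidden_layer_sizes=(100,),  # one hidden layer of 100 units
    activation="relu",          # activation function
    solver="adam",              # alternatives: "lbfgs", "sgd"
    learning_rate="constant",   # schedule; only used when solver="sgd"
    alpha=1e-4,                 # L2 regularization parameter
    max_iter=200,               # maximum number of iterations
    random_state=1,             # seed for weight initialization
)
model.fit(X[:150], y[:150])

# score() returns the coefficient of determination R^2 on held-out data.
r2 = model.score(X[150:], y[150:])
```

Since the `learning_rate` schedule is only consulted by the `"sgd"` solver, changing it while keeping the default `"adam"` solver should not change the fit.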

Example problem: abalone shells

- A dataset of 4177 abalone shells is given
- In this case, 7 numeric predictors are used to try to predict a single output (number of rings)
- The data were split into 3500 samples (calibration set) and 677 samples (validation set)
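One way to produce a 3500 + 677 split is to shuffle the row indices with a fixed seed. The slides do not say whether the split was random or sequential, so the shuffle, the seed value, and the helper name `split_indices` are all hypothetical.

```python
import random


def split_indices(n_samples, n_calibration, seed):
    """Shuffle row indices and split them into two disjoint sets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    return indices[:n_calibration], indices[n_calibration:]


# 4177 samples in total: 3500 for calibration, the remaining 677 for validation.
calibration_idx, validation_idx = split_indices(4177, 3500, seed=1)
```

The two index lists can then be used to select rows of the predictor matrix and the ring counts.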

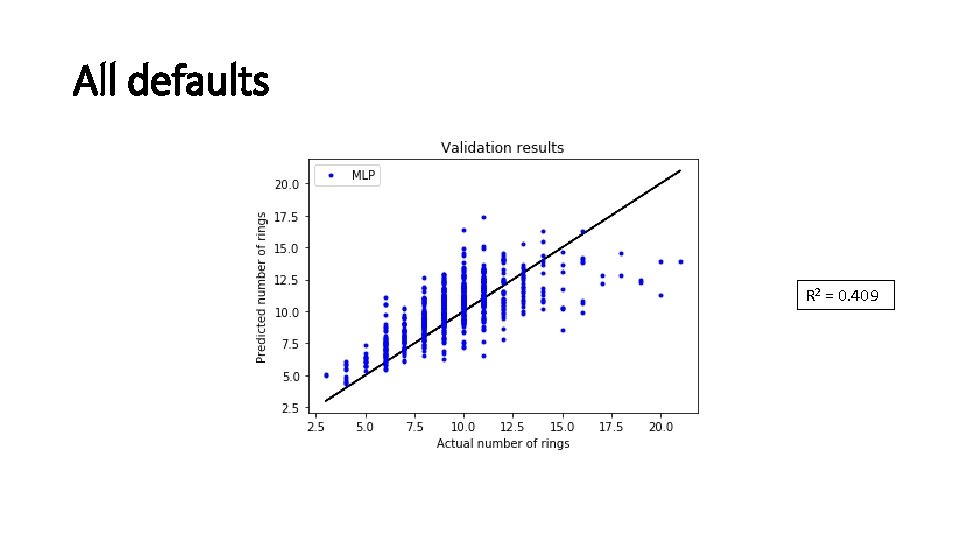

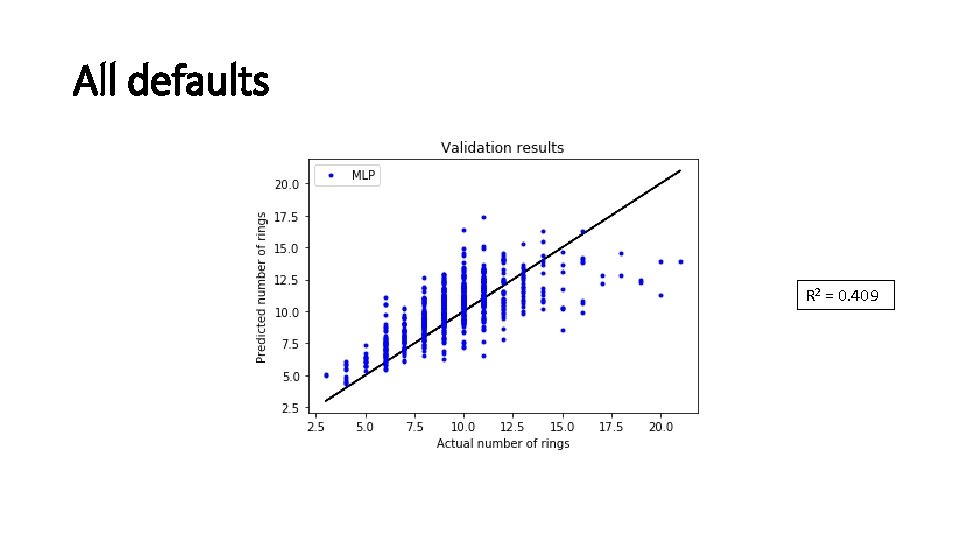

All defaults: R² = 0.409
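The slides do not state how R² was computed. Assuming it is the usual coefficient of determination on the validation set, which is what `MLPRegressor.score` reports, a plain-Python sketch looks like this (the sample values are illustrative):

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Illustrative values only.
perfect = r_squared([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
mean_only = r_squared([1.0, 2.0, 3.0], [2.0, 2.0, 2.0])
```

A perfect prediction gives 1, and always predicting the mean of the targets gives 0, so a value near 0.4 indicates that the model explains well under half of the variance.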

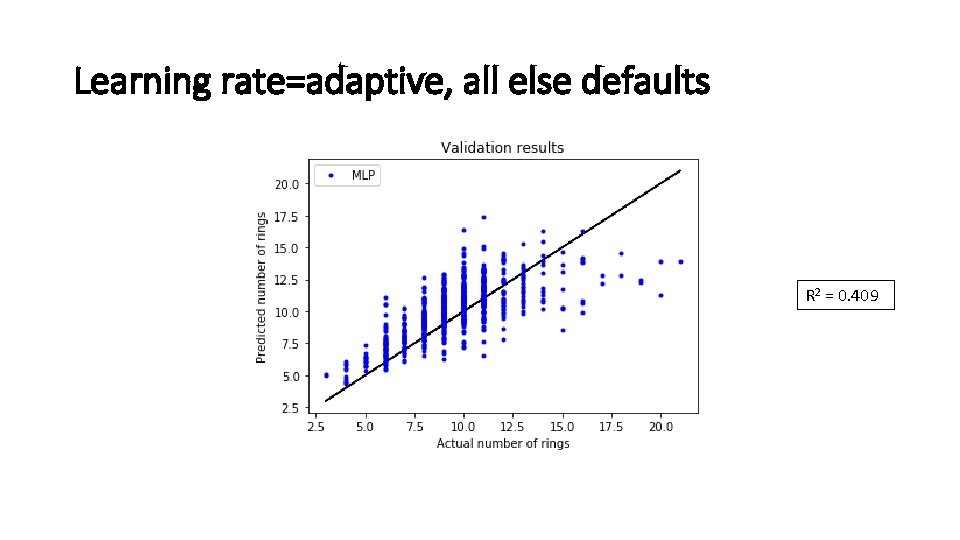

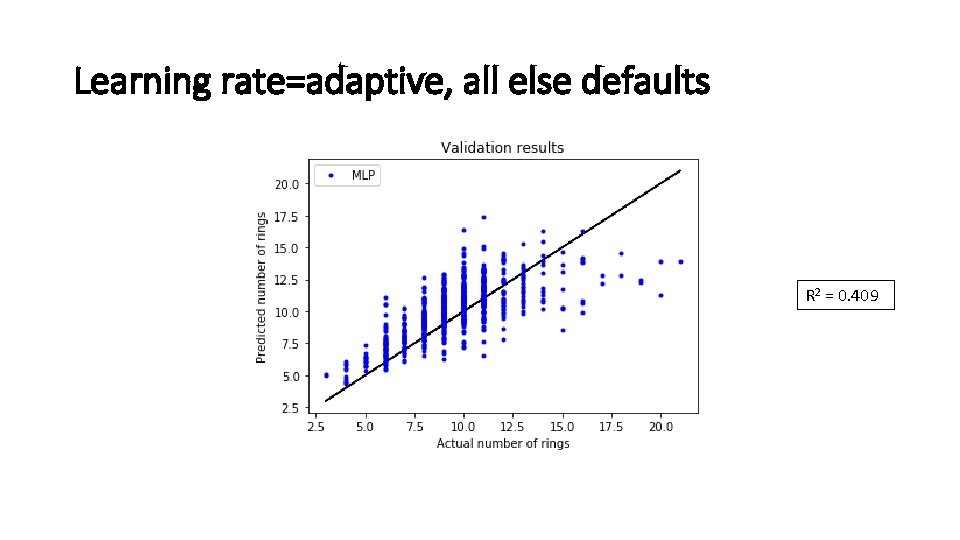

Learning rate = adaptive, all else defaults: R² = 0.409

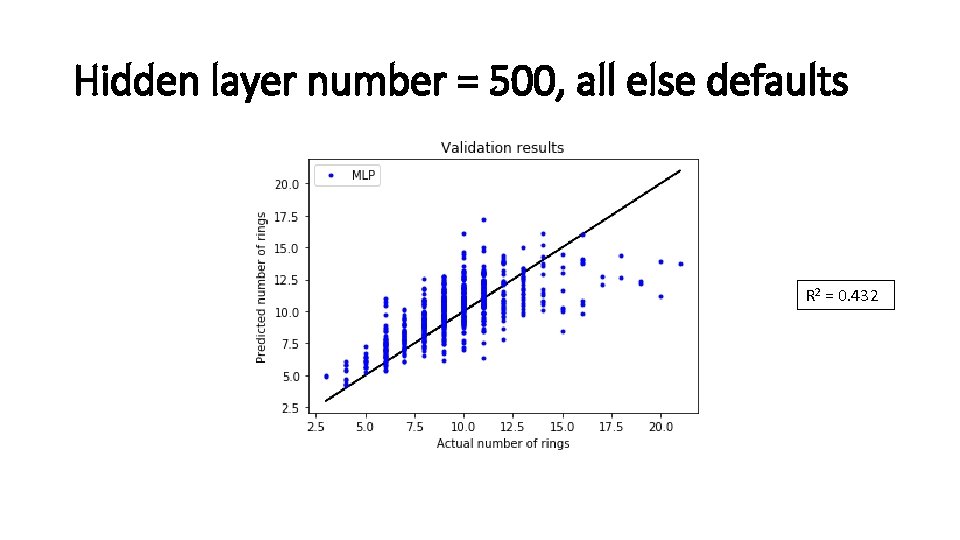

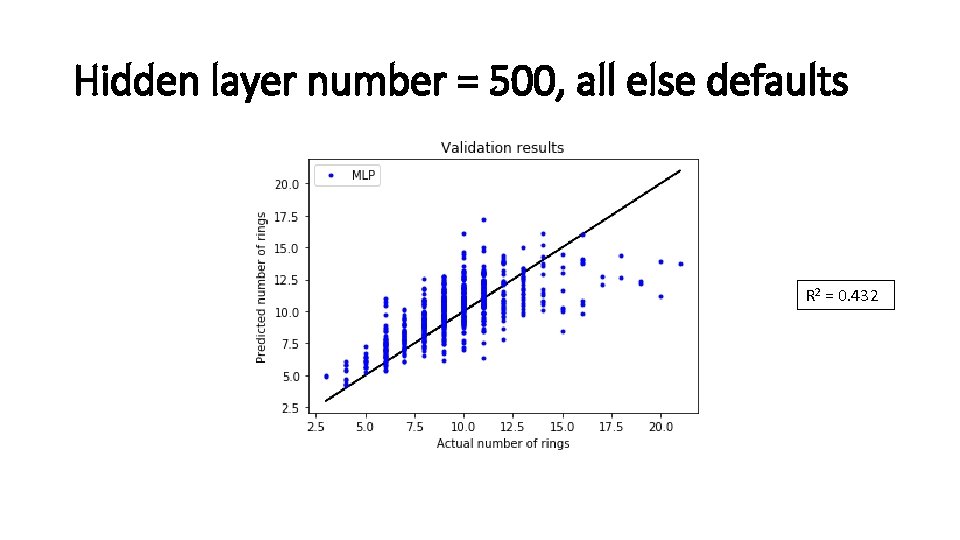

Hidden layer number = 500, all else defaults: R² = 0.432

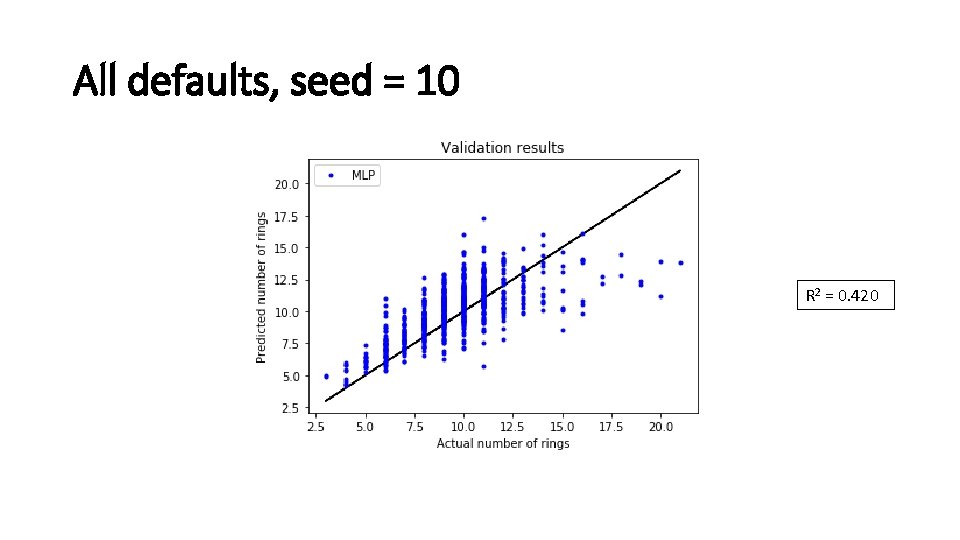

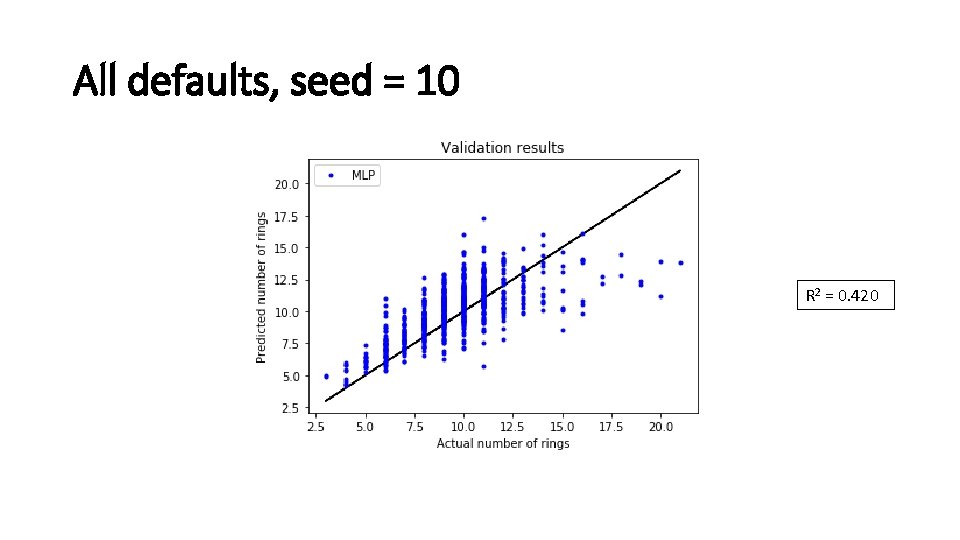

All defaults, seed = 10: R² = 0.420

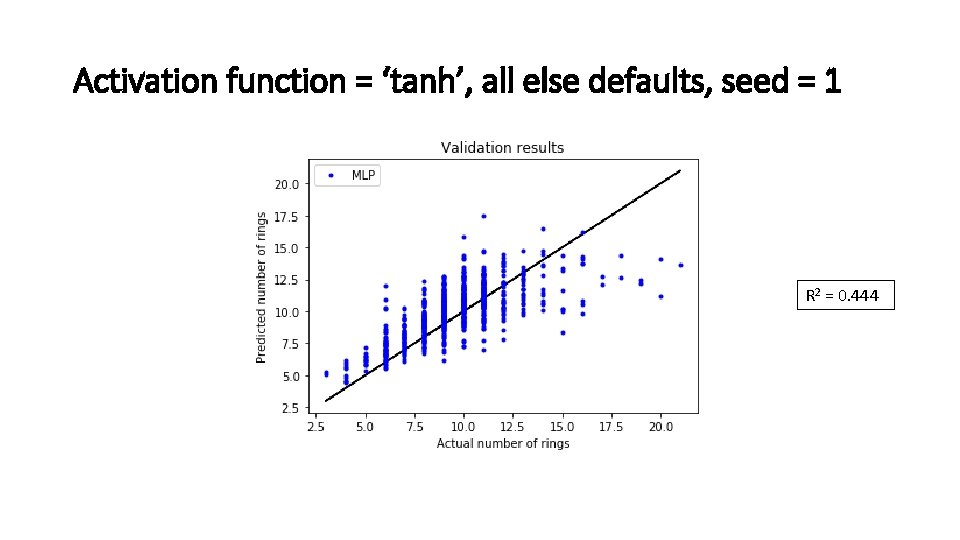

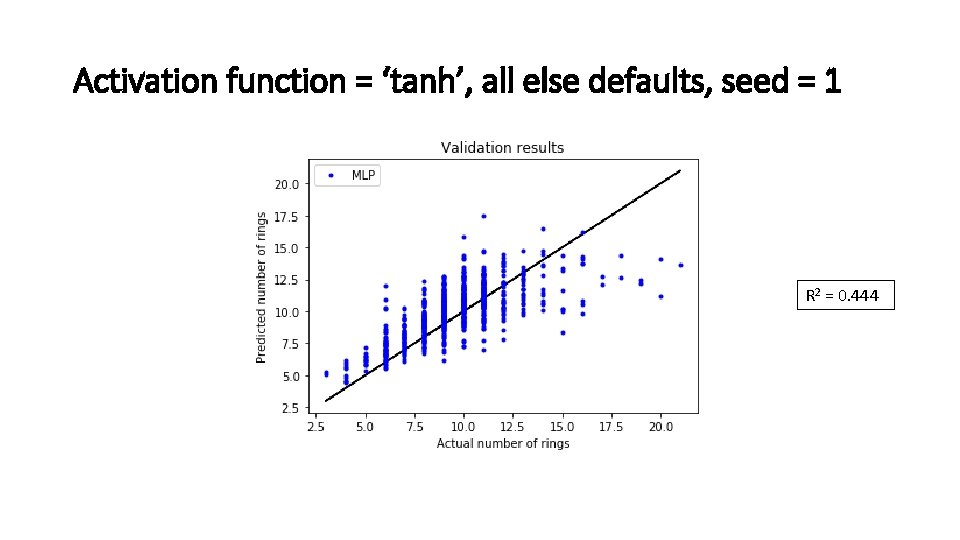

Activation function = ‘tanh’, all else defaults, seed = 1: R² = 0.444

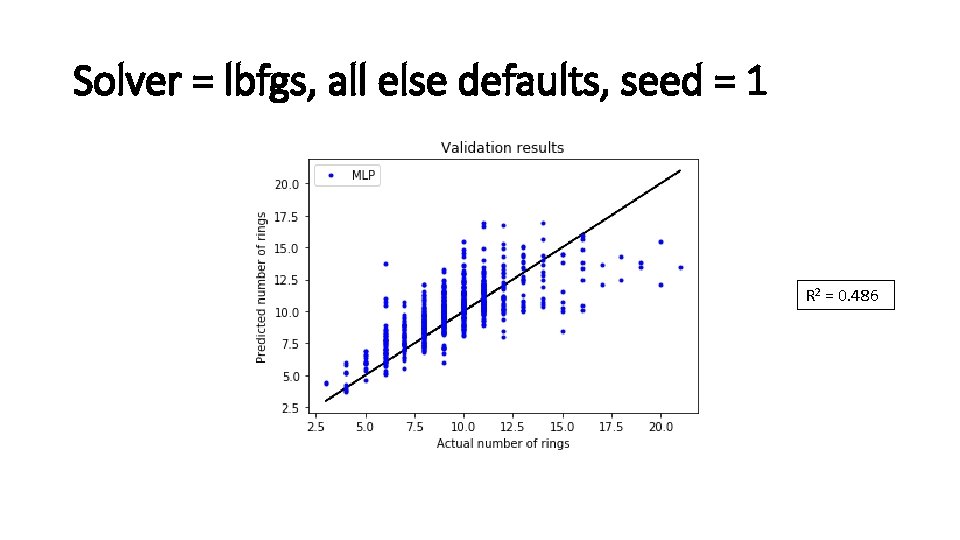

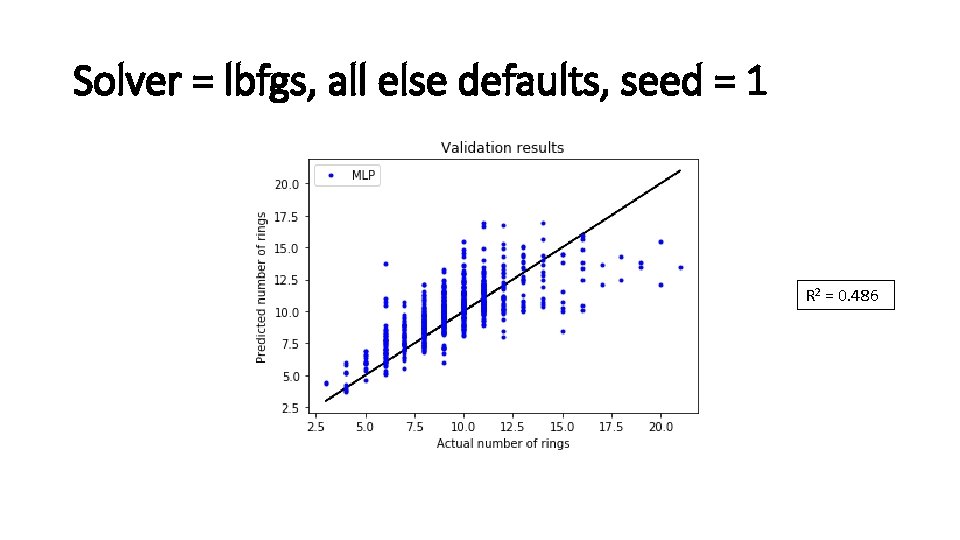

Solver = lbfgs, all else defaults, seed = 1: R² = 0.486

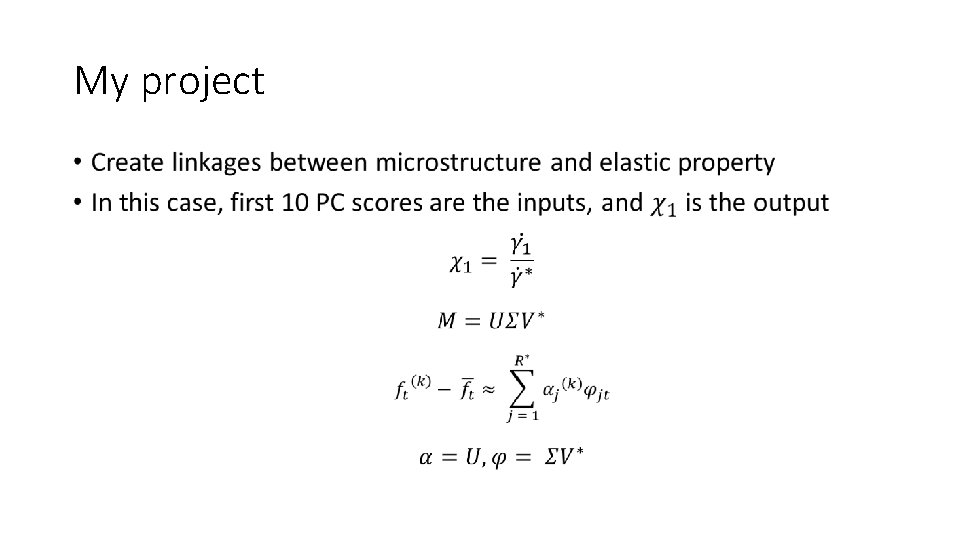

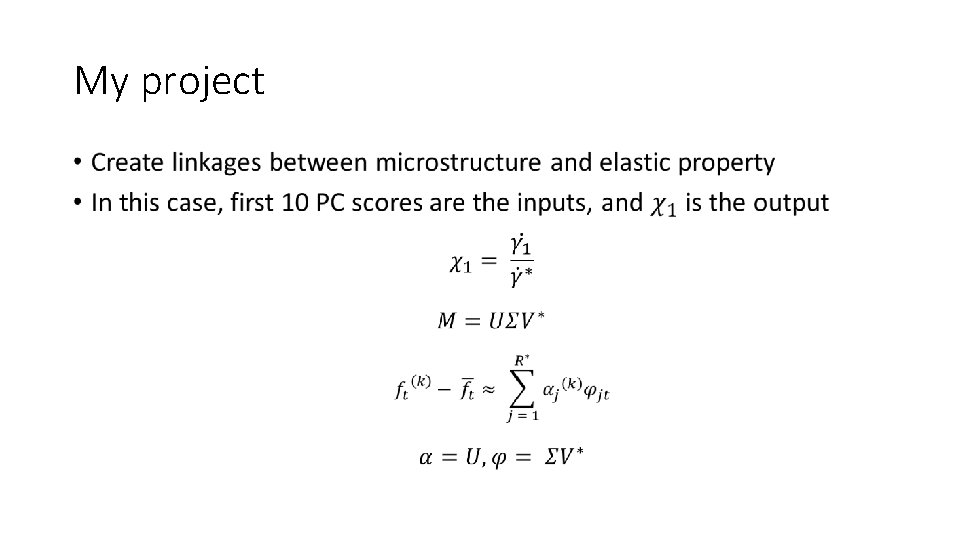

My project

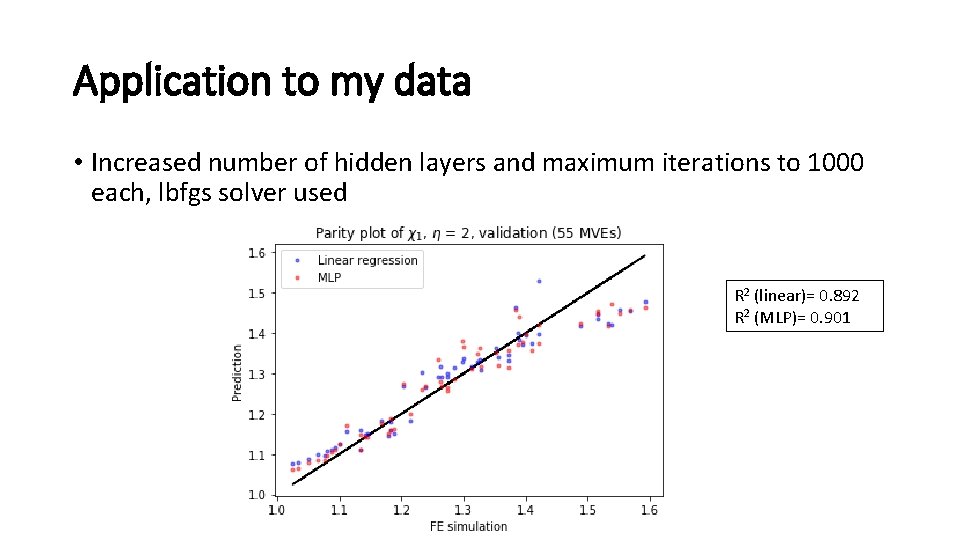

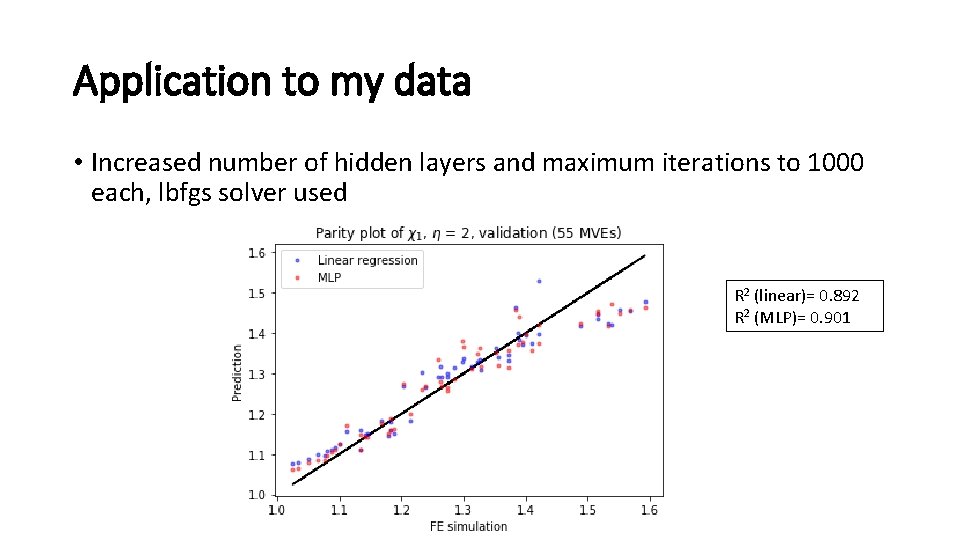

Application to my data

- Increased number of hidden layers and maximum iterations to 1000 each; lbfgs solver used
- R² (linear) = 0.892
- R² (MLP) = 0.901

Next Steps

- Investigate other learning methods such as support vector machines
- Improve model fits through hyperparameter tuning
- Apply machine learning model to elastic constant data from generated microstructures
- Determine a method to calculate an uncertainty value for a prediction; this value will be used to determine whether or not the generated microstructure will be submitted for simulation