Neural Networks: Multilayer Perceptron (MLP), Oscar Herrera Alcántara


Neural Networks Multilayer Perceptron (MLP) Oscar Herrera Alcántara heoscar@yahoo.com

Outline

- Neuron
- Artificial neural networks
- Activation functions
- Perceptrons
- Multilayer perceptrons
- Backpropagation
- Generalization

Introduction to Artificial Intelligence APSU

Neuron

A neuron is a cell in the brain.

- collection, processing, and dissemination of electrical signals
- neurons of more than 20 types, synapses, 1 ms to 10 ms cycle time
- the brain's information processing relies on networks of such neurons

Biological Motivation

- dendrites: nerve fibres carrying electrical signals to the cell
- cell body: computes a non-linear function of its inputs
- axon: a single long fibre that carries the electrical signal from the cell body to other neurons
- synapse: the point of contact between the axon of one cell and the dendrite of another, regulating a chemical connection whose strength affects the input to the cell

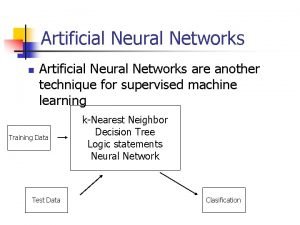

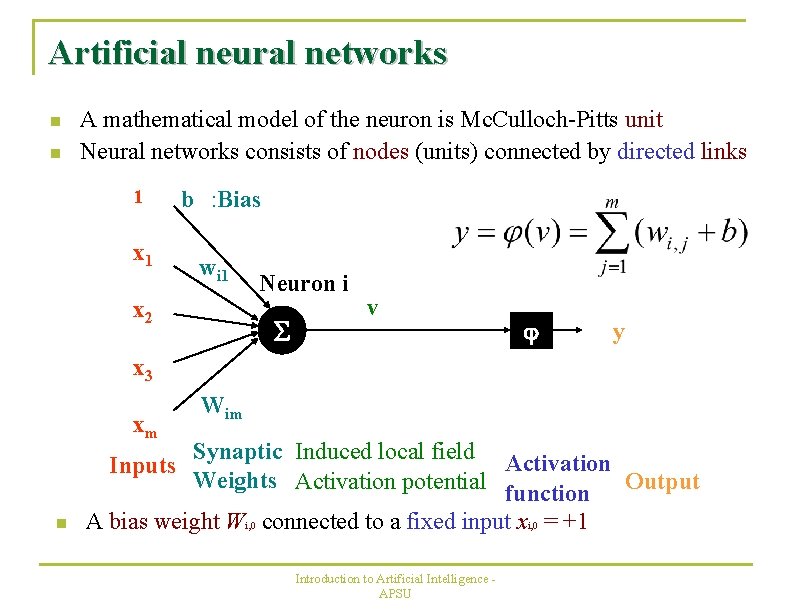

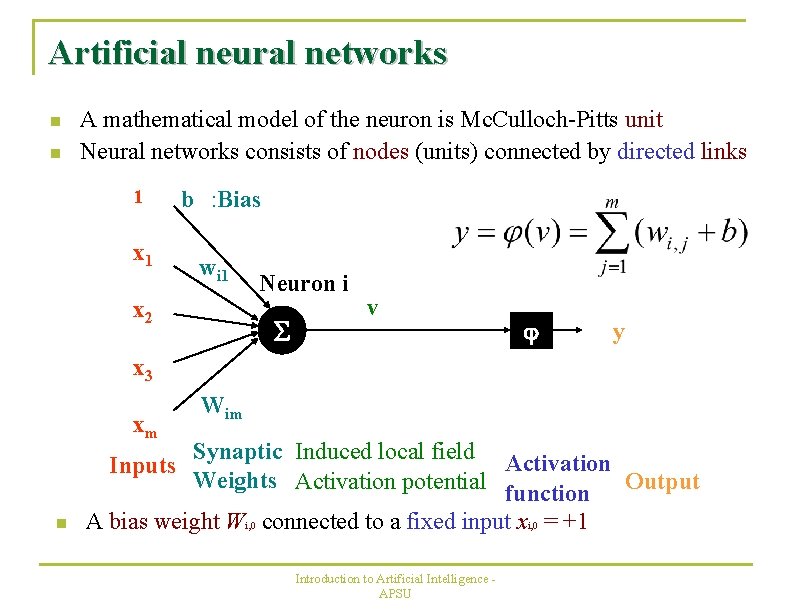

Artificial neural networks

- A mathematical model of the neuron is the McCulloch-Pitts unit.
- Neural networks consist of nodes (units) connected by directed links.

[Figure: model of neuron i. The inputs x1, x2, x3, ..., xm are multiplied by the synaptic weights wi1, ..., wim and summed (Σ) together with the bias b to give the induced local field (activation potential) v; the activation function φ(v) produces the output y.]

The bias can be treated as a weight wi,0 connected to a fixed input xi,0 = +1.
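The neuron model in the figure can be sketched in a few lines. This is a minimal illustration, not the author's code: the function names `neuron_output` and `logistic`, the choice of the logistic function as φ, and the numeric values are all assumptions made for the example.

```python
import math

def logistic(v):
    """One possible activation function phi (an assumption for this sketch)."""
    return 1.0 / (1.0 + math.exp(-v))

def neuron_output(inputs, weights, bias, activation):
    """Compute the induced local field v = sum_j w_j * x_j + b, then y = phi(v)."""
    v = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(v)

# Hypothetical inputs x1..x3, synaptic weights, and bias.
y = neuron_output([1.0, 0.0, -1.0], [0.5, -0.2, 0.25], bias=0.1, activation=logistic)
```

Here the induced local field is v = 0.5 - 0.25 + 0.1 = 0.35, and the output is the logistic function applied to that value.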

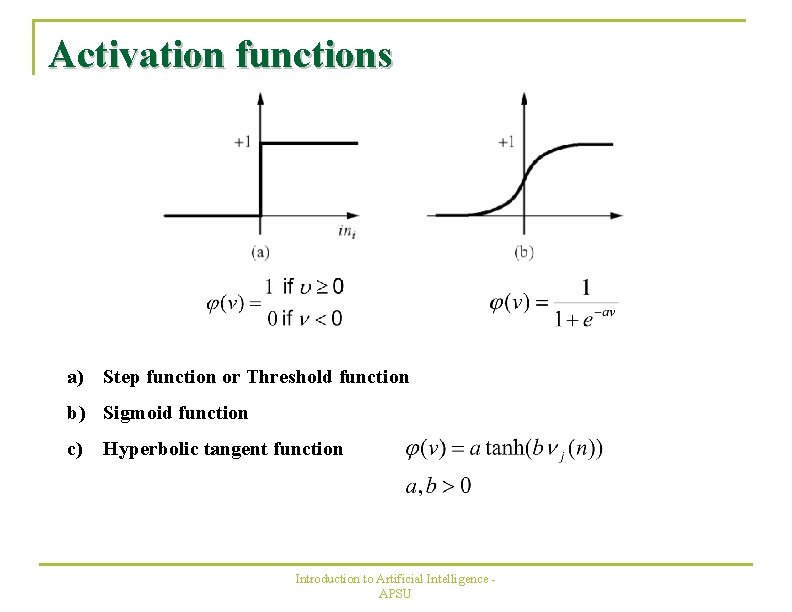

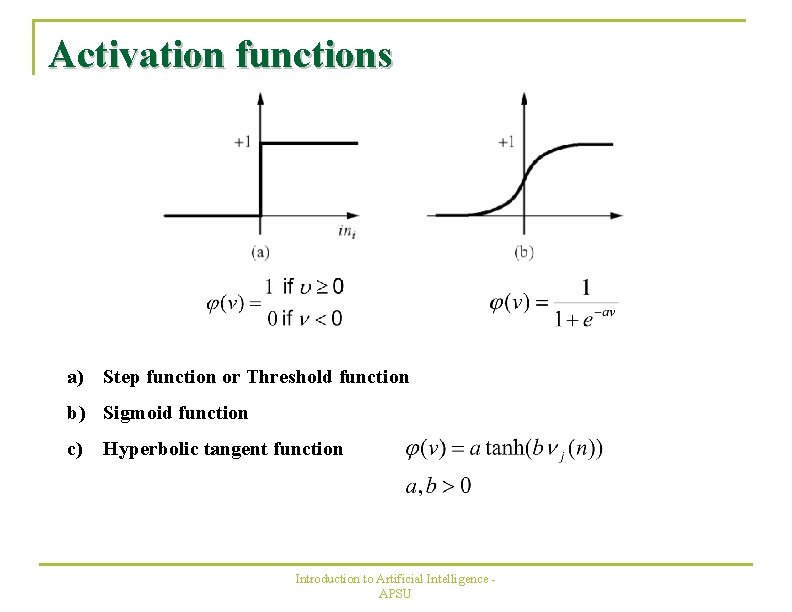

Activation functions

- Step function or threshold function
- Sigmoid function
- Hyperbolic tangent function
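The three activation functions listed above can be written as follows. This is a small sketch; the convention that the step function returns 1 at exactly v = 0 is an assumption, since the slide's plot is not available in the text.

```python
import math

def step(v):
    """Threshold function: 1 if v >= 0, else 0 (value at v == 0 is an assumed convention)."""
    return 1.0 if v >= 0 else 0.0

def sigmoid(v):
    """Logistic sigmoid, with outputs in the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))

def tanh(v):
    """Hyperbolic tangent, with outputs in the open interval (-1, 1)."""
    return math.tanh(v)
```

The sigmoid and the hyperbolic tangent are related by tanh(v) = 2·sigmoid(2v) − 1, so they differ only by scaling and shifting.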

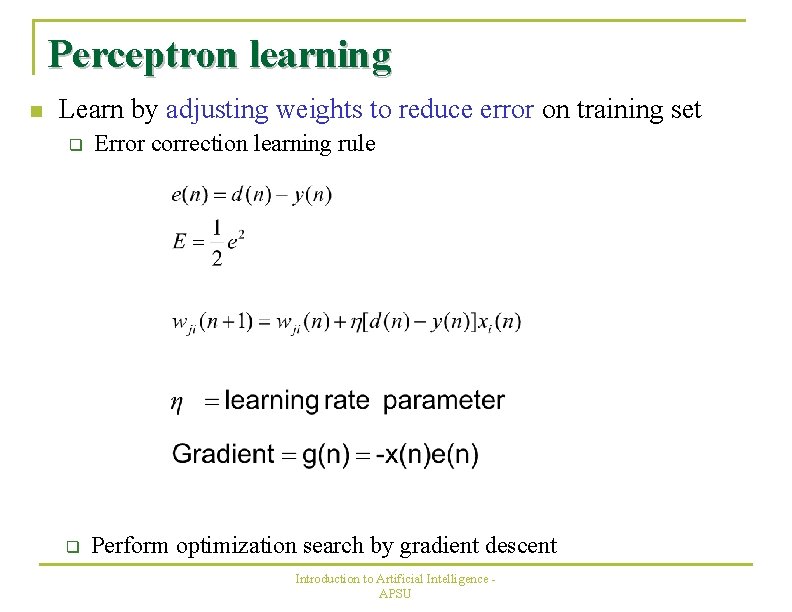

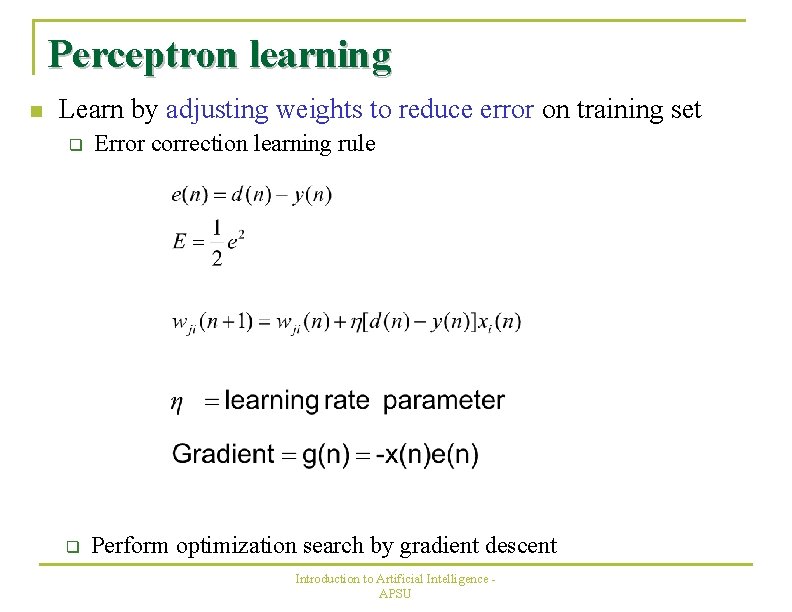

Perceptron learning

Learn by adjusting weights to reduce error on the training set:

- Error-correction learning rule
- Perform optimization search by gradient descent
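One common form of the error-correction rule adds η·(d − y)·x to each weight whenever the output y differs from the desired output d. The sketch below applies it to the AND function; the function names, the learning rate, the zero initial weights, and the training set are assumptions chosen for illustration.

```python
def predict(weights, x):
    """Threshold output for input x; weights[0] is the bias weight (fixed input +1)."""
    xs = [1.0] + list(x)
    return 1 if sum(w * xi for w, xi in zip(weights, xs)) >= 0 else 0

def train_perceptron(samples, eta=1.0, max_epochs=50):
    """Error-correction learning: w <- w + eta * (d - y) * x for each misclassified sample."""
    weights = [0.0] * (len(samples[0][0]) + 1)
    for _ in range(max_epochs):
        mistakes = 0
        for x, d in samples:
            error = d - predict(weights, x)
            if error != 0:
                mistakes += 1
                xs = [1.0] + list(x)
                weights = [w + eta * error * xi for w, xi in zip(weights, xs)]
        if mistakes == 0:  # every training sample is classified correctly
            break
    return weights

# Hypothetical training set: the Boolean AND function.
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
and_weights = train_perceptron(and_samples)
```

Because AND is linearly separable, the loop stops after a handful of epochs with weights that classify all four samples correctly.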

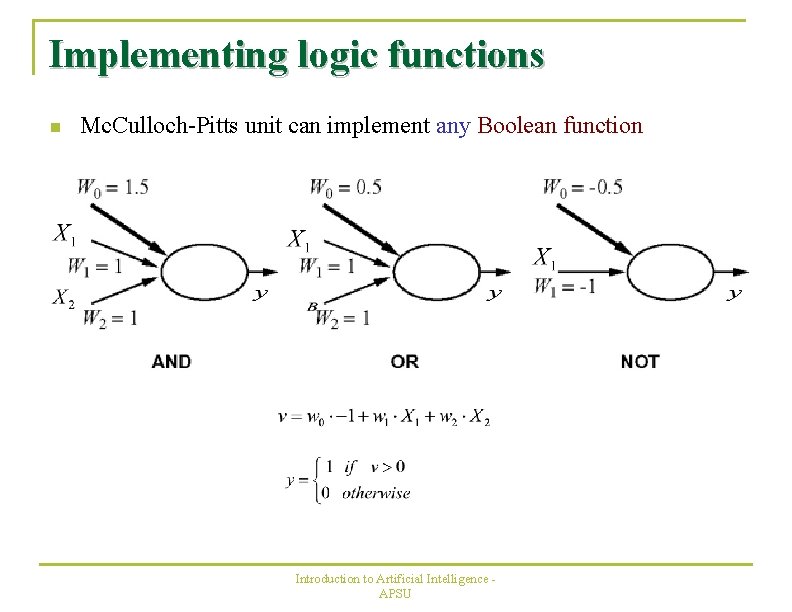

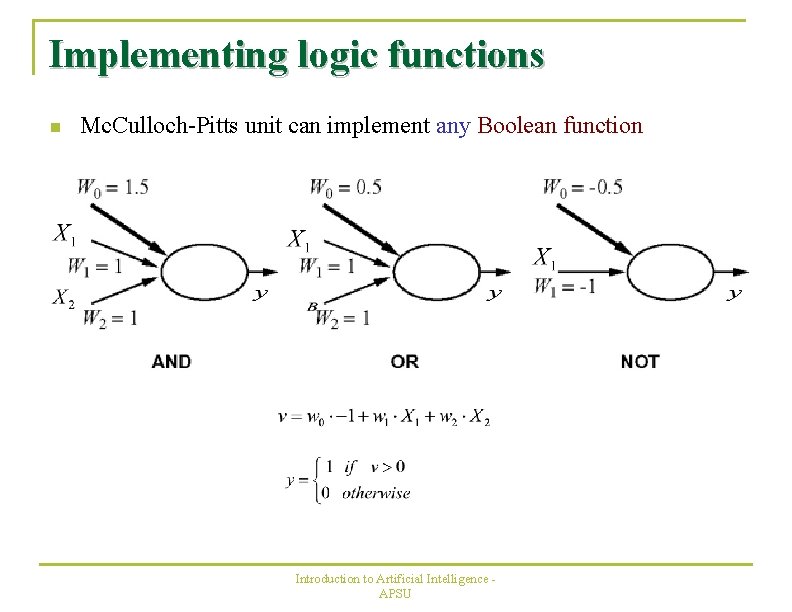

Implementing logic functions

A McCulloch-Pitts unit can implement the basic Boolean functions AND, OR, and NOT, so networks of such units can implement any Boolean function.
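As a sketch of how single threshold units realize the basic gates, the weights and biases below are one possible choice (many others work); the function names are hypothetical.

```python
def threshold_unit(inputs, weights, bias):
    """McCulloch-Pitts style unit: output 1 if the weighted sum plus bias is >= 0."""
    v = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if v >= 0 else 0

def logic_and(a, b):
    return threshold_unit([a, b], [1, 1], -1.5)   # fires only when both inputs are 1

def logic_or(a, b):
    return threshold_unit([a, b], [1, 1], -0.5)   # fires when at least one input is 1

def logic_not(a):
    return threshold_unit([a], [-1], 0.5)         # fires only when the input is 0
```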

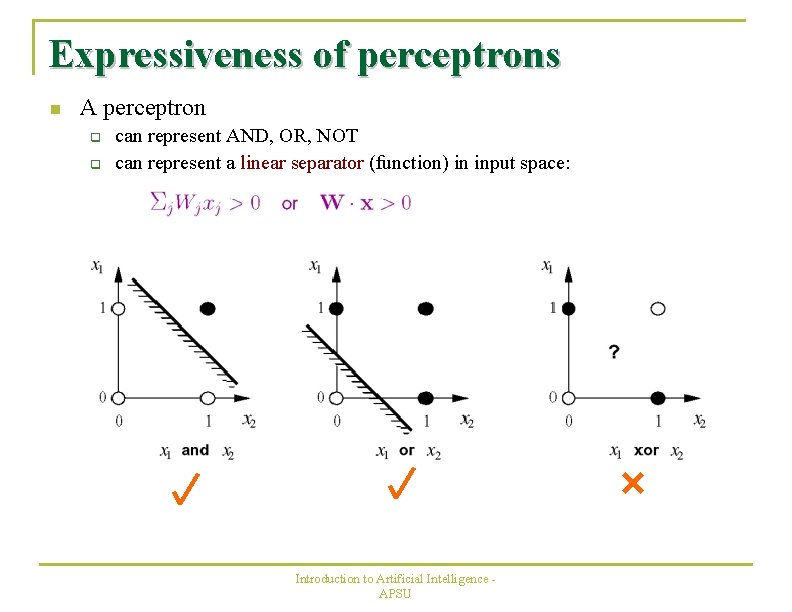

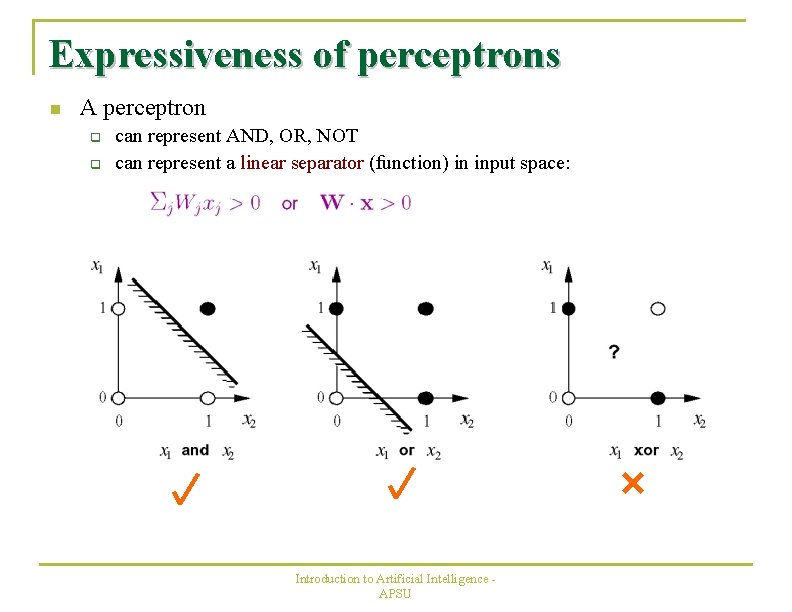

Expressiveness of perceptrons

A perceptron

- can represent AND, OR, NOT
- can represent a linear separator (function) in input space
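The limits of a single linear separator can be illustrated with a small brute-force search. The sketch below tries every combination of bias and weights from a small integer grid (the grid and the function name `find_separator` are assumptions) and checks whether a single threshold unit reproduces a given truth table. A failed search over a finite grid is not a proof, but it agrees with the known result that XOR is not linearly separable.

```python
from itertools import product

def find_separator(truth_table, grid=range(-3, 4)):
    """Return (b, w1, w2) from the grid such that w1*x1 + w2*x2 + b > 0 matches the table, or None."""
    for b, w1, w2 in product(grid, repeat=3):
        if all((1 if w1 * x1 + w2 * x2 + b > 0 else 0) == d
               for (x1, x2), d in truth_table.items()):
            return (b, w1, w2)
    return None

AND_TABLE = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
OR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 1}
XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

and_sep = find_separator(AND_TABLE)   # a separating line exists
or_sep = find_separator(OR_TABLE)     # a separating line exists
xor_sep = find_separator(XOR_TABLE)   # no line on the grid separates XOR
```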

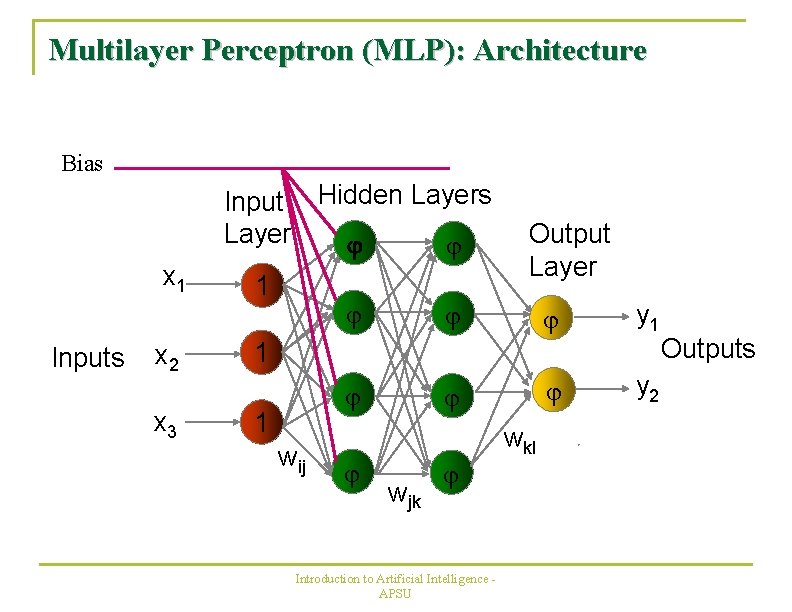

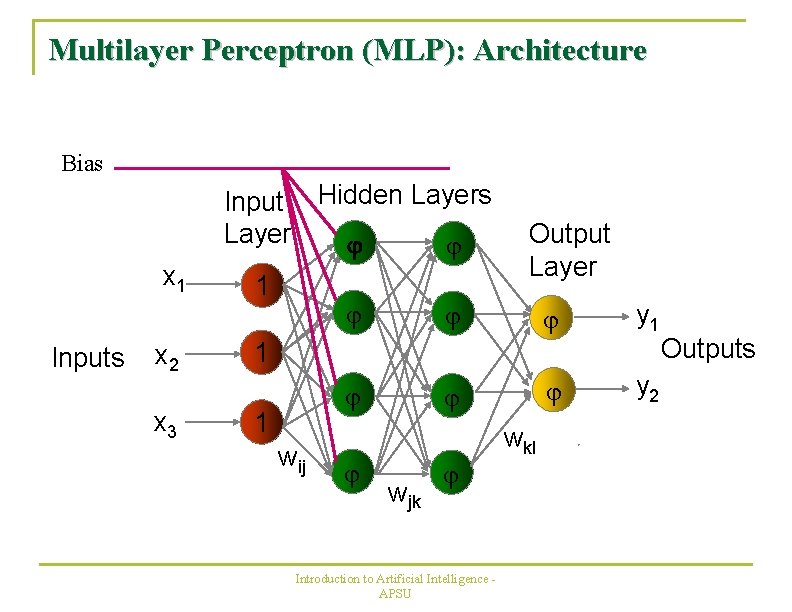

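Multilayer Perceptron (MLP): Architecture

[Figure: feedforward network. The inputs x1, x2, x3 and a bias input of 1 enter the input layer, pass through the hidden layers, and reach the output layer, which produces the outputs y1 and y2. Successive layers are connected by the weights wij, wjk, and wkl; each neuron applies the activation function φ.]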
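The layered architecture amounts to applying the single-neuron computation layer by layer. The following is a minimal sketch of a forward pass; the layer sizes (3 inputs, 2 hidden neurons, 2 outputs), the weight values, and the use of tanh as φ are assumptions for illustration only.

```python
import math

def layer_forward(inputs, weights, biases, phi):
    """Outputs of one layer: phi(sum_i w_ji * x_i + b_j) for each neuron j."""
    return [phi(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def mlp_forward(x, layers, phi=math.tanh):
    """Propagate x through a list of (weights, biases) pairs, one pair per layer."""
    y = list(x)
    for weights, biases in layers:
        y = layer_forward(y, weights, biases, phi)
    return y

# Hypothetical network: 3 inputs -> 2 hidden neurons -> 2 outputs.
layers = [
    ([[0.1, -0.2, 0.3], [0.4, 0.0, -0.1]], [0.0, 0.1]),
    ([[0.5, -0.5], [0.3, 0.2]], [0.0, -0.1]),
]
outputs = mlp_forward([1.0, 0.5, -1.0], layers)
```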

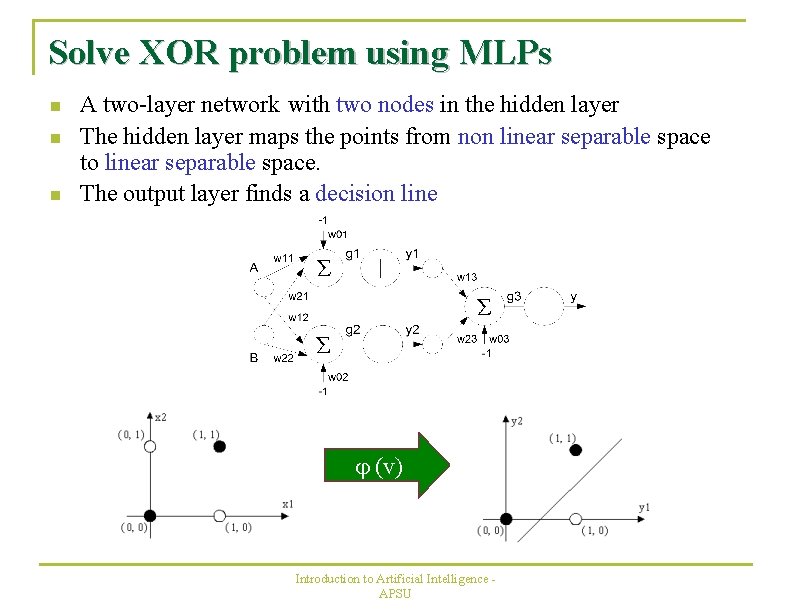

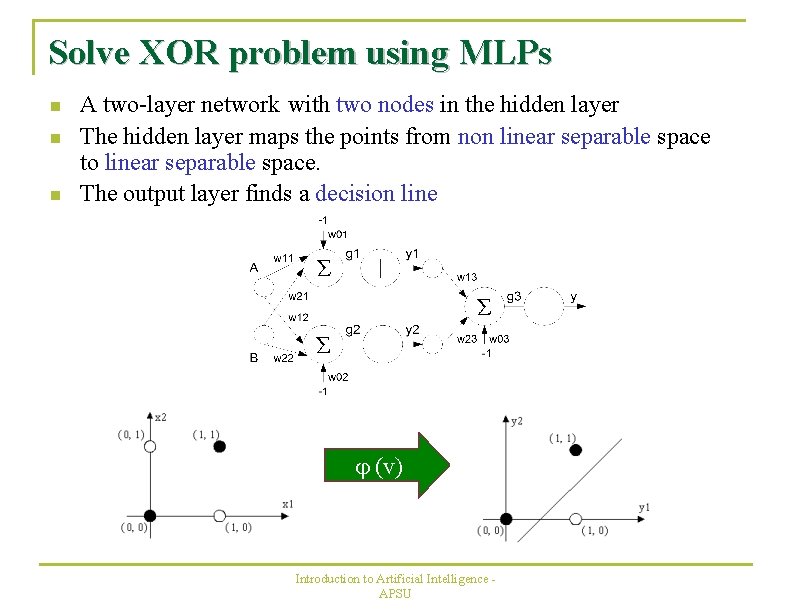

Solve XOR problem using MLPs

- A two-layer network with two nodes in the hidden layer
- The hidden layer maps the points from a non-linearly separable space to a linearly separable space.
- The output layer finds a decision line.

[Figure label: activation function φ(v)]
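A hand-built network shows the idea. In this sketch the two hidden nodes behave like OR and AND, and the output node separates the mapped points with a single line; the specific weights and the step activation are assumptions, and other choices also work.

```python
def step(v):
    """Threshold activation: 1 if v >= 0, else 0."""
    return 1 if v >= 0 else 0

def hidden_layer(x1, x2):
    """Map an input point to hidden space (h1, h2)."""
    h1 = step(x1 + x2 - 0.5)   # OR-like node
    h2 = step(x1 + x2 - 1.5)   # AND-like node
    return h1, h2

def xor_mlp(x1, x2):
    """Output node: the line h1 - h2 = 0.5 separates the mapped points."""
    h1, h2 = hidden_layer(x1, x2)
    return step(h1 - h2 - 0.5)
```

The hidden layer sends (0, 1) and (1, 0) to the same point (1, 0), while (0, 0) stays at (0, 0) and (1, 1) goes to (1, 1). These three points are linearly separable, which is why a single output unit is enough.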

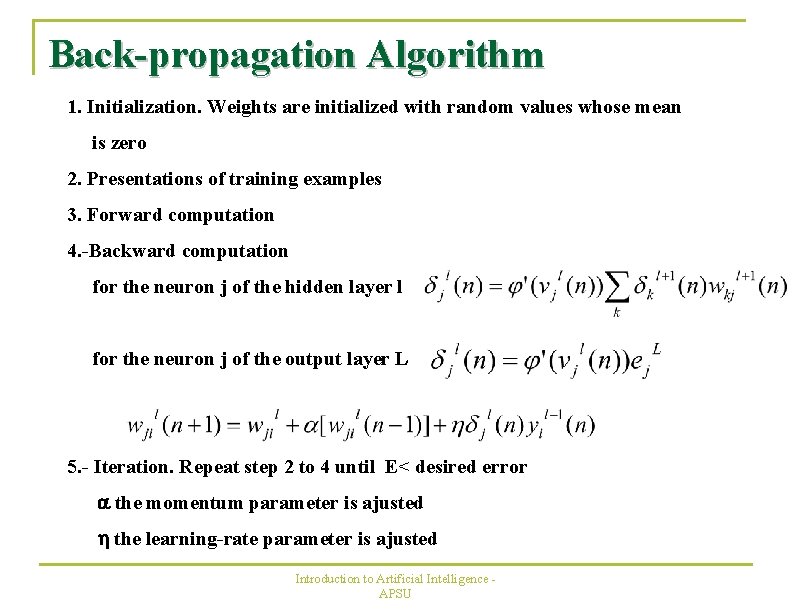

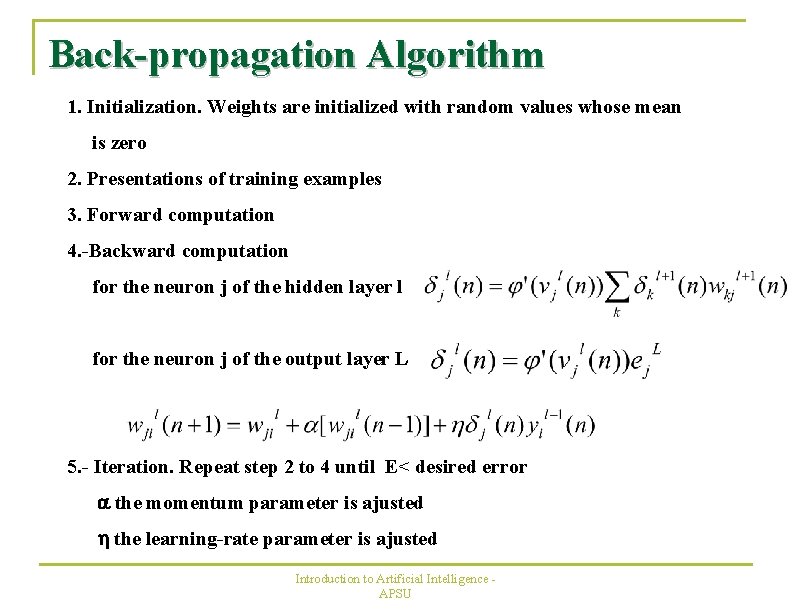

Back-propagation Algorithm

1. Initialization. Weights are initialized with random values whose mean is zero.
2. Presentation of training examples.
3. Forward computation.
4. Backward computation: compute the local gradient for each neuron j of the hidden layer l and for each neuron j of the output layer L.
5. Iteration. Repeat steps 2 to 4 until E < desired error.

During training, the momentum parameter α and the learning-rate parameter η are adjusted.
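The five steps can be sketched as a small training loop. This is an illustrative sketch under stated assumptions, not the author's implementation: it uses the XOR data, a sigmoid activation, one hidden layer of three neurons, online (per-example) updates, a squared-error measure E, and fixed values of η and α. The function name `train_xor` and all parameter values are hypothetical.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def train_xor(n_hidden=3, eta=0.5, alpha=0.8, max_epochs=5000, target_error=0.01, seed=0):
    """Train a one-hidden-layer network on XOR; return the error E recorded for each epoch."""
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    # Step 1: zero-mean random initialization; index 0 of each row is the bias weight.
    w_hid = [[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(n_hidden)]
    w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
    dw_hid = [[0.0] * 3 for _ in range(n_hidden)]   # previous updates (for momentum)
    dw_out = [0.0] * (n_hidden + 1)
    history = []
    for _ in range(max_epochs):
        total = 0.0
        for x, d in data:                            # Step 2: present one example
            xs = [1.0] + x
            # Step 3: forward computation
            h = [sigmoid(sum(w * xi for w, xi in zip(row, xs))) for row in w_hid]
            hs = [1.0] + h
            y = sigmoid(sum(w * hi for w, hi in zip(w_out, hs)))
            e = d - y
            total += 0.5 * e * e
            # Step 4: backward computation of the local gradients
            delta_out = e * y * (1.0 - y)
            delta_hid = [h[j] * (1.0 - h[j]) * delta_out * w_out[j + 1]
                         for j in range(n_hidden)]
            # Weight updates with learning rate eta and momentum alpha
            for j in range(n_hidden + 1):
                dw_out[j] = alpha * dw_out[j] + eta * delta_out * hs[j]
                w_out[j] += dw_out[j]
            for j in range(n_hidden):
                for i in range(3):
                    dw_hid[j][i] = alpha * dw_hid[j][i] + eta * delta_hid[j] * xs[i]
                    w_hid[j][i] += dw_hid[j][i]
        history.append(total)
        if total < target_error:                     # Step 5: stop when E < desired error
            break
    return history

history = train_xor()
```

The returned list lets one plot or inspect how E changes from epoch to epoch. Whether and how quickly the error falls below the target depends on the random initialization and on the chosen η and α.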

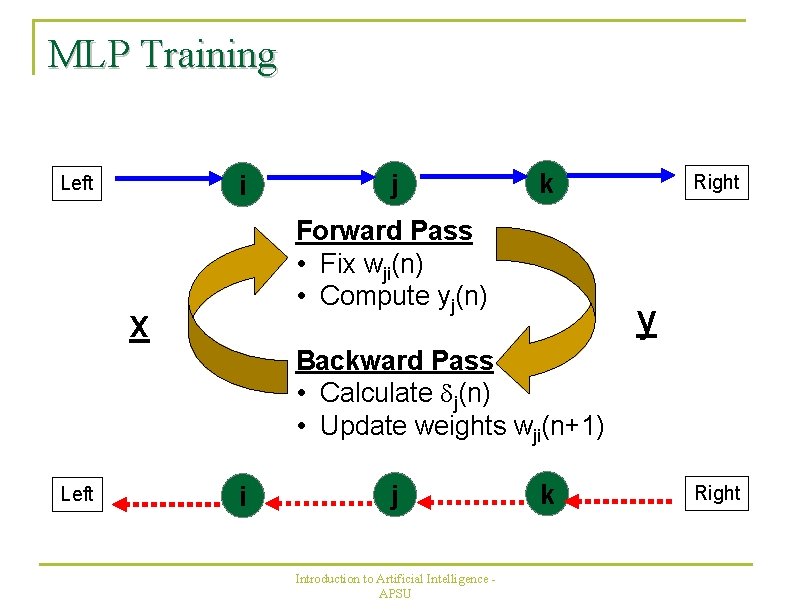

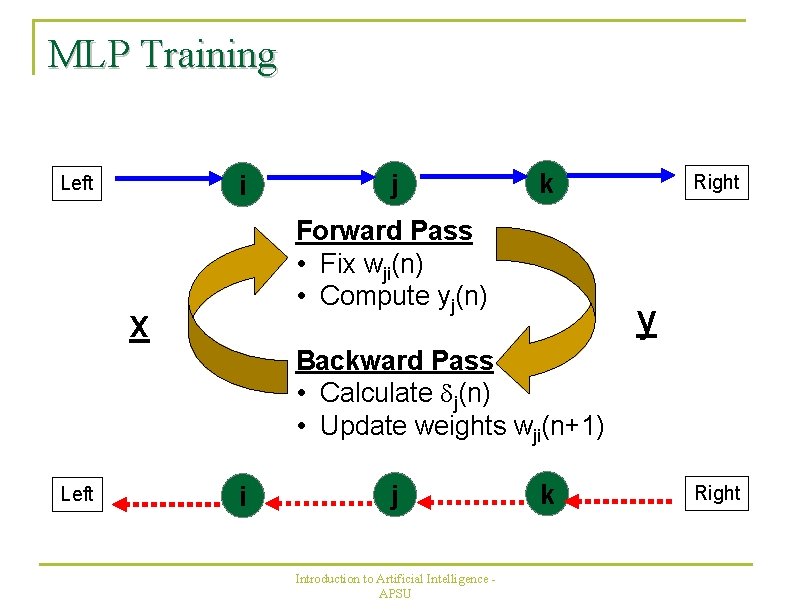

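MLP Training

[Figure: layers i, j, k drawn from left to right, with input x on the left and output y on the right.]

- Forward pass (left to right): fix the weights wji(n) and compute the outputs yj(n).
- Backward pass (right to left): calculate the local gradients δj(n) and update the weights to wji(n+1).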
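For reference, the backward pass is usually written with the generalized delta rule. The equations below follow the standard textbook form and are not copied from the slide images; the symbols d_j (desired output), e_j (error), and φ' (derivative of the activation function) are assumptions introduced here.

$$
\delta_j(n) =
\begin{cases}
e_j(n)\,\varphi'\big(v_j(n)\big), \quad e_j(n) = d_j(n) - y_j(n) & \text{if } j \text{ is an output neuron} \\
\varphi'\big(v_j(n)\big) \sum_k \delta_k(n)\, w_{kj}(n) & \text{if } j \text{ is a hidden neuron}
\end{cases}
$$

$$
w_{ji}(n+1) = w_{ji}(n) + \alpha\,\big[w_{ji}(n) - w_{ji}(n-1)\big] + \eta\,\delta_j(n)\, y_i(n)
$$

Here η is the learning-rate parameter, α is the momentum parameter, and y_i(n) is the output of neuron i in the preceding layer.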

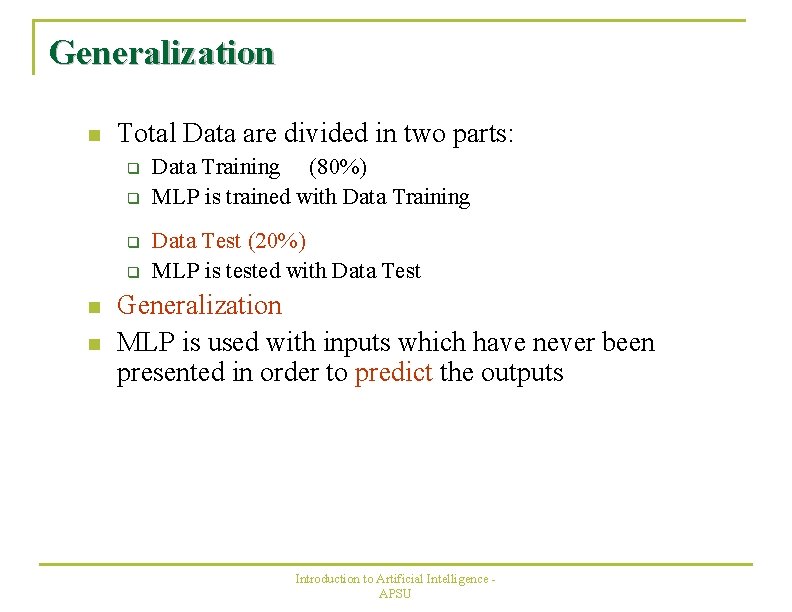

Generalization

The total data are divided into two parts:

- Training data (80%): the MLP is trained with the training data.
- Test data (20%): the MLP is tested with the test data.

Generalization: the MLP is used with inputs that have never been presented to it, in order to predict the outputs.
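The 80/20 division can be sketched as follows. Shuffling before the split, the fixed seed, and the function name `split_data` are assumptions; the slide only states the proportions.

```python
import random

def split_data(samples, train_fraction=0.8, seed=0):
    """Shuffle a copy of the samples and split it into (training, test) parts."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]

# Hypothetical data set of 10 samples.
data = list(range(10))
train_part, test_part = split_data(data)
```

The test part is held back during training, so the error measured on it estimates how well the MLP generalizes to inputs it has never seen.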
