Minimum Spanning Trees Displaying Semantic Similarity Włodzisław Duch

- Slides: 18

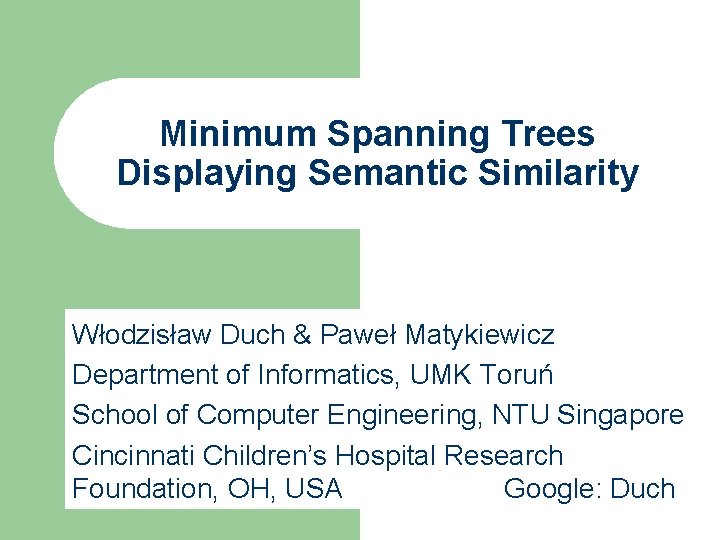

Minimum Spanning Trees Displaying Semantic Similarity

Włodzisław Duch & Paweł Matykiewicz

- Department of Informatics, UMK Toruń
- School of Computer Engineering, NTU Singapore
- Cincinnati Children’s Hospital Research Foundation, OH, USA

Google: Duch

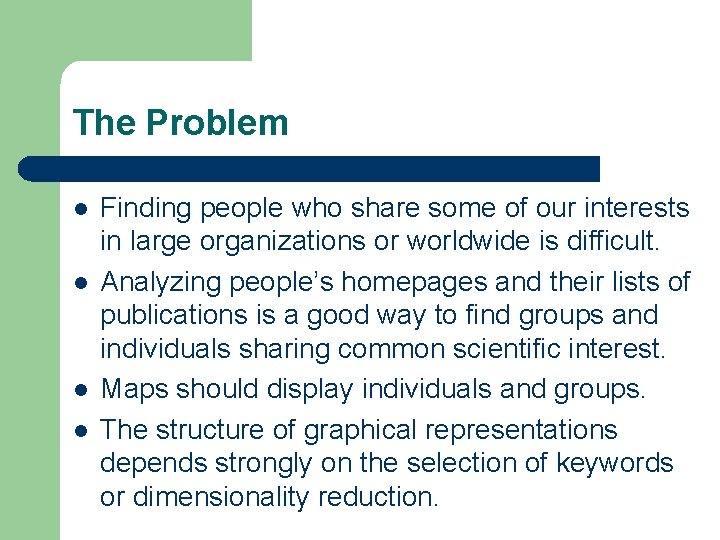

The Problem

- Finding people who share some of our interests, whether in large organizations or worldwide, is difficult.
- Analyzing people’s homepages and their lists of publications is a good way to find groups and individuals with common scientific interests.
- Maps should display both individuals and groups.
- The structure of the graphical representation depends strongly on the selection of keywords and on the dimensionality reduction used.

The Data

- Reuters-21578 dataset, with 5 categories and 1–176 elements per category.
- 124 personal web pages of the School of Electrical and Electronic Engineering (EEE) of Nanyang Technological University (NTU) in Singapore, with 5 categories (control, microelectronics, information, circuit, power) and 14–41 documents per category.

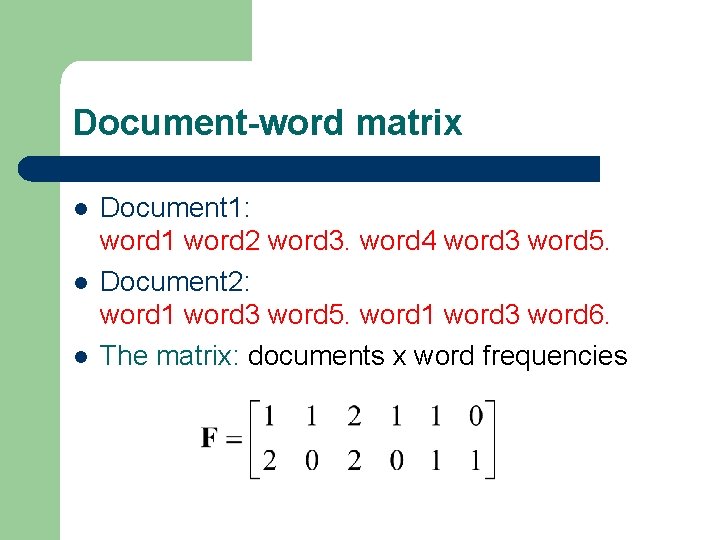

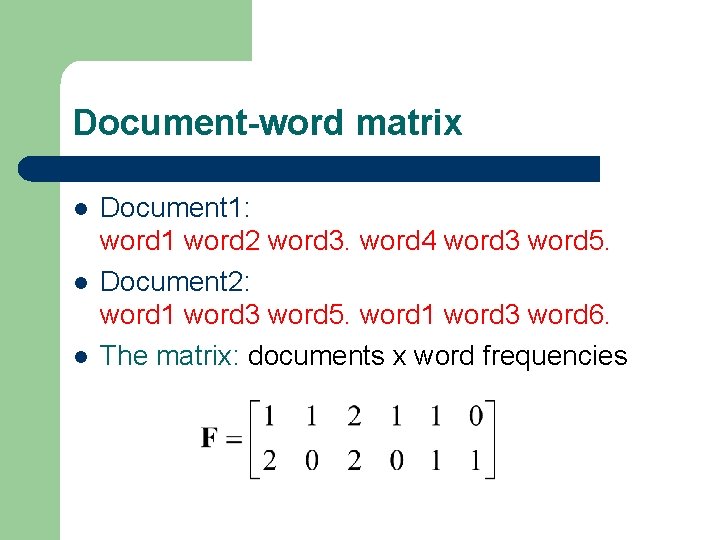

Document-word matrix

- Document 1: word 1 word 2 word 3. word 4 word 3 word 5.
- Document 2: word 1 word 3 word 5. word 1 word 3 word 6.
- The matrix: documents × word frequencies.
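
The two toy documents can be turned into a count matrix with a few lines of Python. This is a minimal sketch: the tokens `w1`…`w6` stand in for "word 1"…"word 6", sentence boundaries are ignored, and the helper name `document_word_matrix` is hypothetical. Rows are documents, as on the slide.

```python
from collections import Counter

# The two toy documents from the slide; "w1".."w6" stand in for "word 1".."word 6".
documents = {
    "Document 1": ["w1", "w2", "w3", "w4", "w3", "w5"],
    "Document 2": ["w1", "w3", "w5", "w1", "w3", "w6"],
}


def document_word_matrix(docs):
    """Return (doc_names, vocabulary, F) where F[i][j] counts word j in document i."""
    vocabulary = sorted({word for tokens in docs.values() for word in tokens})
    doc_names = list(docs)
    F = []
    for name in doc_names:
        counts = Counter(docs[name])          # word -> number of occurrences (0 if absent)
        F.append([counts[word] for word in vocabulary])
    return doc_names, vocabulary, F


doc_names, vocabulary, F = document_word_matrix(documents)
print(vocabulary)                             # ['w1', 'w2', 'w3', 'w4', 'w5', 'w6']
# prints "Document 1 [1, 1, 2, 1, 1, 0]" and then "Document 2 [2, 0, 2, 0, 1, 1]"
for name, row in zip(doc_names, F):
    print(name, row)
```

Word 3 appears twice in Document 1 and words 1 and 3 twice in Document 2, which is all the information the later weighting steps start from.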

Methods used

- Inverse document frequency and term weighting.
- Simple selection of relevant terms.
- Latent Semantic Analysis (LSA) for dimensionality reduction.
- Minimum Spanning Trees for visual representation.
- TouchGraph XML visualization of the MSTs.

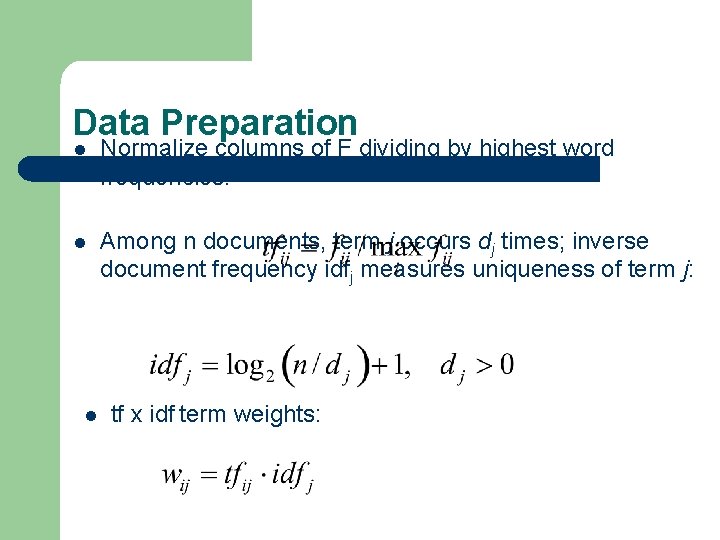

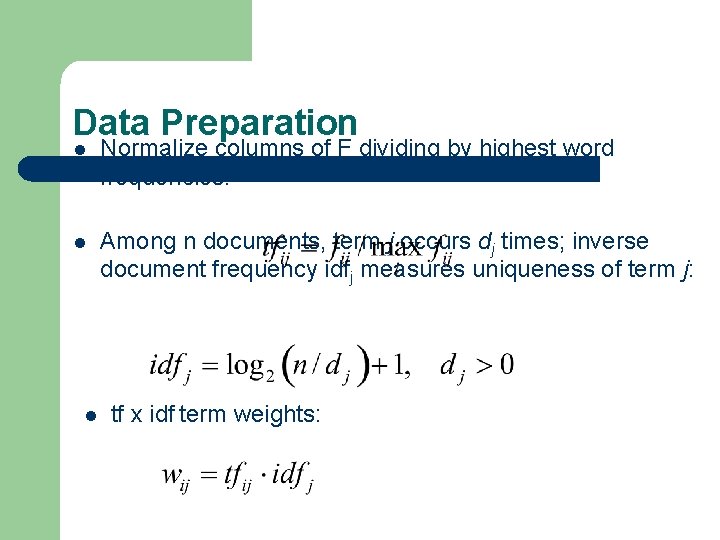

Data Preparation

- Normalize the columns of F, dividing each by its highest word frequency:
- Among n documents, term j occurs $d_j$ times; the inverse document frequency $\mathrm{idf}_j$ measures the uniqueness of term j:
- tf × idf term weights:

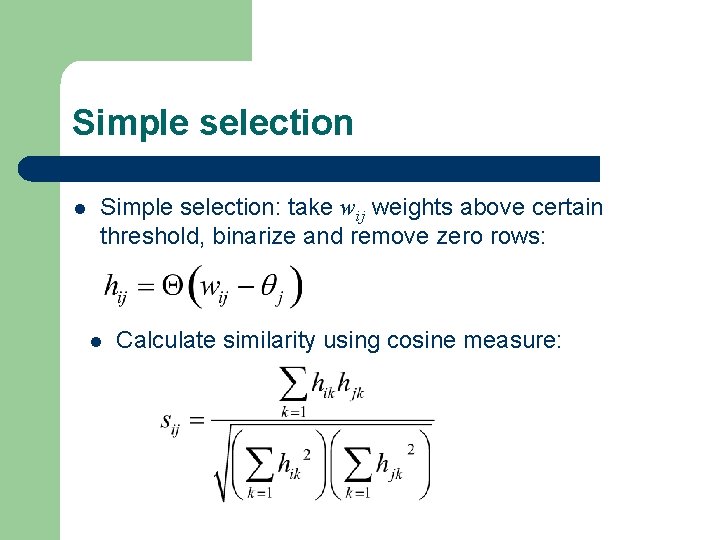

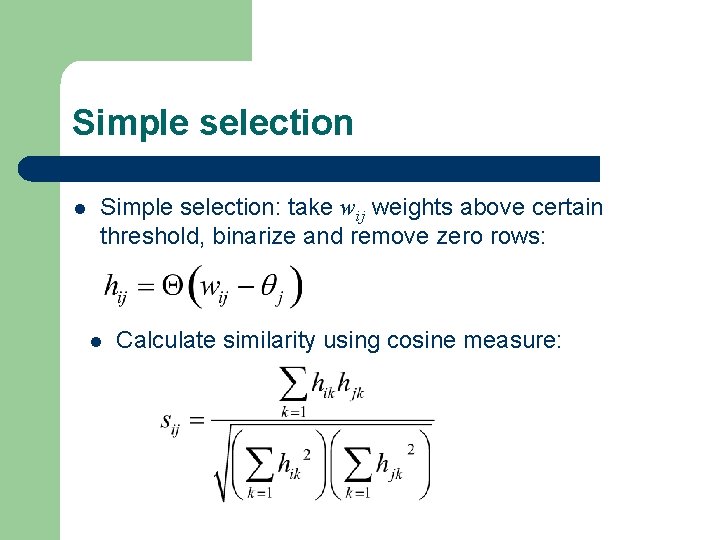
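
The formulas themselves are in the slide figures, so the sketch below assumes the standard forms: each document column of F is divided by its largest count, $d_j$ is taken to be the number of documents containing the term, and $\mathrm{idf}_j = \log(n/d_j)$. It also assumes F is stored with one row per word and one column per document (the transpose of the documents × words matrix described earlier), the orientation in which "normalize columns" and, later, "remove zero rows" read as per-document normalization and vocabulary pruning. The function name `tf_idf` is hypothetical.

```python
import math


def tf_idf(F):
    """tf x idf weights for a terms x documents count matrix F (list of rows).

    Assumed forms (the slide's own formulas are in the figures):
      tf[t][j] = F[t][j] / max_s F[s][j]   each document column divided by its largest count
      idf[t]   = log(n / d_t)              n documents, d_t of them contain term t
      w[t][j]  = tf[t][j] * idf[t]
    """
    n_terms, n_docs = len(F), len(F[0])
    col_max = [max(F[t][j] for t in range(n_terms)) for j in range(n_docs)]
    doc_freq = [sum(1 for count in row if count > 0) for row in F]
    idf = [math.log(n_docs / d) if d else 0.0 for d in doc_freq]
    return [
        [(F[t][j] / col_max[j] if col_max[j] else 0.0) * idf[t] for j in range(n_docs)]
        for t in range(n_terms)
    ]


# Toy counts from the document-word slide: one row per word (w1..w6), one column per document.
F = [[1, 2], [1, 0], [2, 2], [1, 0], [1, 1], [0, 1]]
W = tf_idf(F)
for row in W:
    print([round(w, 3) for w in row])
```

With only two documents, w1, w3 and w5 occur in both, so their idf and hence their weights are zero; w2, w4 and w6 each get 0.5 × ln 2 ≈ 0.347 in the one document that contains them.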

Simple selection

- Simple selection: take the $w_{ij}$ weights above a certain threshold, binarize them, and remove zero rows:
- Calculate similarity using the cosine measure:

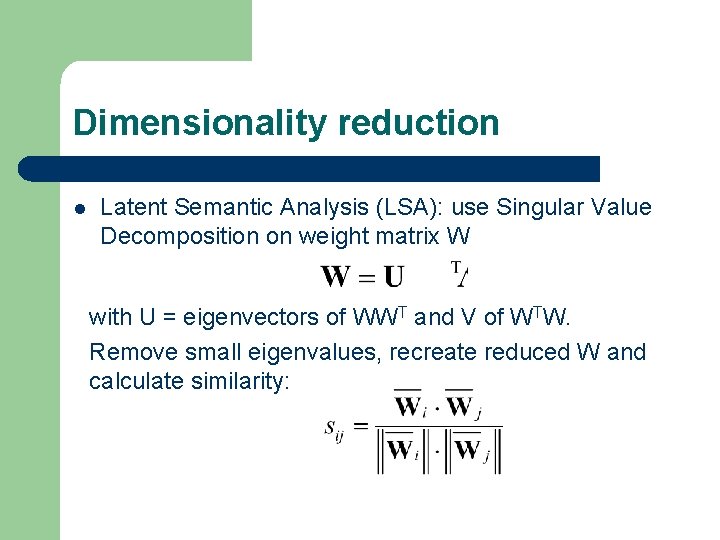

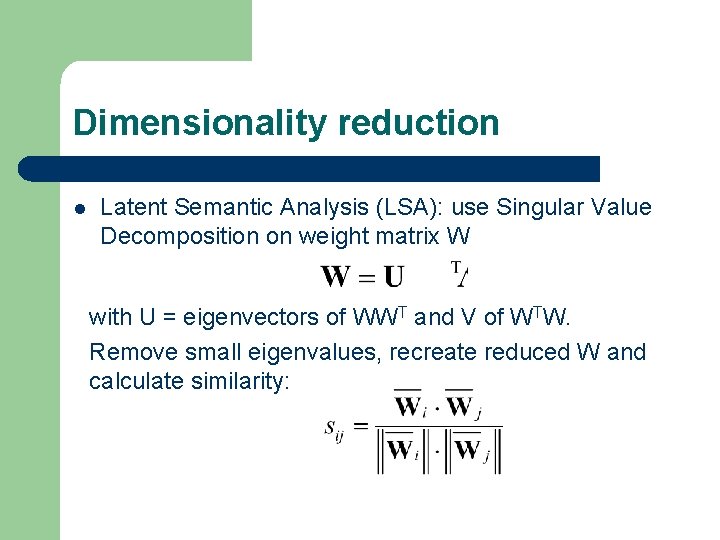
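
A sketch of simple selection followed by the cosine measure, in plain Python. It assumes the weight matrix is terms × documents, so "zero rows" are words that survive in no document and similarity is computed between document columns; the weights and the threshold of 0.3 are made up for illustration, and the function names are hypothetical.

```python
import math


def simple_selection(W, threshold):
    """Binarize a terms x documents weight matrix and drop all-zero term rows."""
    B = [[1 if w > threshold else 0 for w in row] for row in W]
    return [row for row in B if any(row)]


def cosine_similarity_matrix(M):
    """Cosine similarity between every pair of document columns of M."""
    n_docs = len(M[0])
    cols = [[row[j] for row in M] for j in range(n_docs)]
    norms = [math.sqrt(sum(x * x for x in col)) for col in cols]
    S = [[0.0] * n_docs for _ in range(n_docs)]
    for a in range(n_docs):
        for b in range(n_docs):
            if norms[a] and norms[b]:               # an all-zero document stays at similarity 0
                dot = sum(x * y for x, y in zip(cols[a], cols[b]))
                S[a][b] = dot / (norms[a] * norms[b])
    return S


# Hypothetical weights: 5 terms x 3 documents, threshold chosen for illustration.
W = [
    [0.9, 0.7, 0.0],
    [0.4, 0.0, 0.8],
    [0.1, 0.2, 0.1],   # never above the threshold, so this term row is removed
    [0.0, 0.6, 0.5],
    [0.5, 0.5, 0.1],
]
B = simple_selection(W, threshold=0.3)
S = cosine_similarity_matrix(B)
for row in S:
    print([round(s, 3) for s in row])
```

Documents 0 and 1 share two of their three selected terms, giving a cosine of 2/3 ≈ 0.667, while each of them shares only one term with document 2 (1/√6 ≈ 0.408).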

Dimensionality reduction

- Latent Semantic Analysis (LSA): apply Singular Value Decomposition to the weight matrix $W$, with $U$ = eigenvectors of $WW^T$ and $V$ = eigenvectors of $W^TW$. Remove the small singular values (equivalently, the small eigenvalues of $WW^T$), recreate the reduced $W$, and calculate similarity:

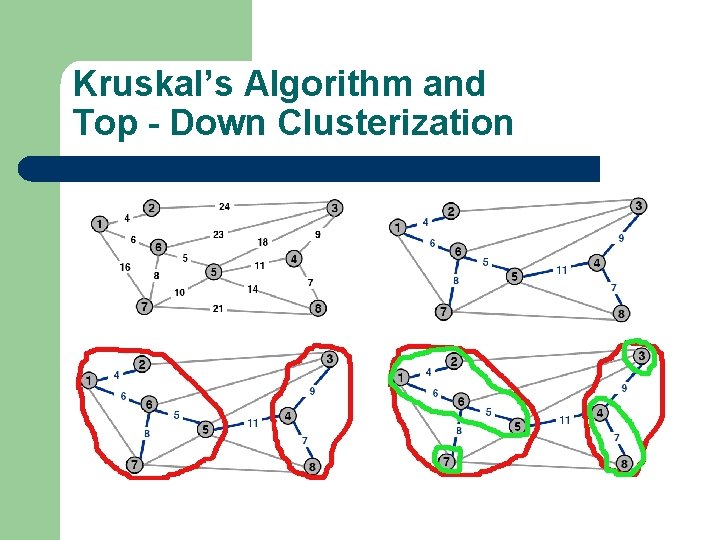

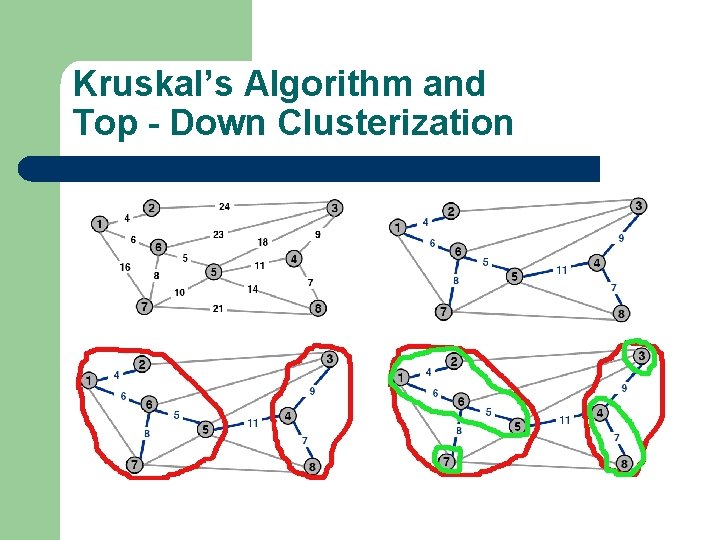
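
LSA can be sketched with NumPy's `np.linalg.svd`, which returns the singular values in descending order, so removing the small ones amounts to keeping the first k singular triplets. The results slides quote the kept fraction of the rank of W (for Reuters, 0.8 × 595 = 476 and 0.6 × 595 = 357), so the example picks k the same way; the weights and the name `lsa_similarity` are hypothetical, and W is again assumed to be terms × documents.

```python
import numpy as np


def lsa_similarity(W, k):
    """Keep the k largest singular values of a terms x documents matrix W,
    rebuild the reduced matrix and return it with the document cosine-similarity matrix."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)    # W = U @ diag(s) @ Vt, s in descending order
    W_k = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]          # drop the smallest singular values
    norms = np.linalg.norm(W_k, axis=0)                  # length of each document column
    norms[norms == 0] = 1.0                              # leave all-zero columns at similarity 0
    D = W_k / norms
    return W_k, D.T @ D


# Hypothetical weights: 5 terms x 4 documents; documents 0-1 and 2-3 share vocabulary.
W = np.array([
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 0.9, 0.1, 0.0],
    [0.0, 0.1, 1.0, 1.0],
    [0.0, 0.0, 0.9, 1.0],
    [0.5, 0.4, 0.5, 0.4],
])
rank = np.linalg.matrix_rank(W)
k = int(round(0.6 * rank))     # keep a fraction of the rank, read off "LSA dim red. 0.6"
W_k, S = lsa_similarity(W, k)
print(k)
print(np.round(S, 2))
```

Keeping all singular values reproduces W exactly (up to rounding); the reduction only starts to merge related terms once k is smaller than the rank.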

Kruskal’s Algorithm and Top-Down Clusterization

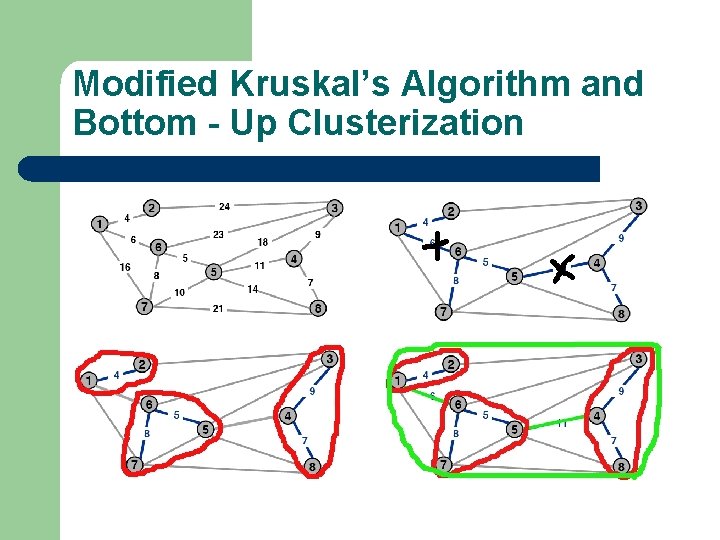

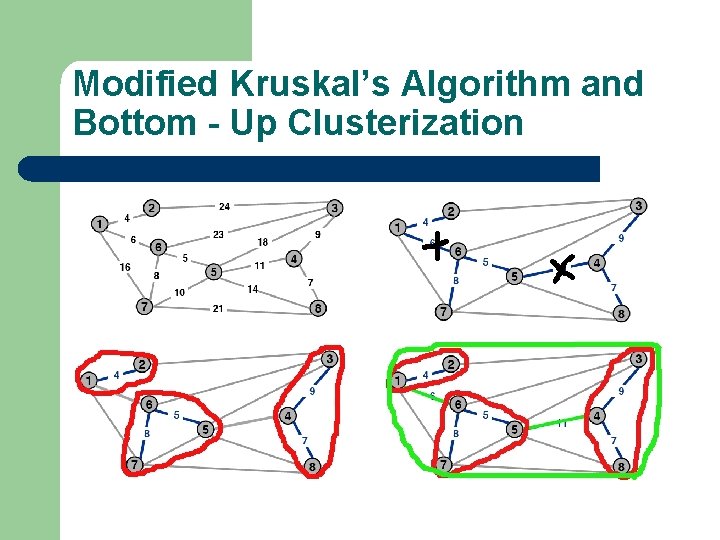
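
The algorithm itself is given in the slide figures. As a hedged sketch, the code below runs Kruskal's algorithm on the complete graph of documents, taking edges from the most to the least similar pair (the MST for the distance 1 - similarity), and reads "top-down clusterization" as cutting the k - 1 weakest tree edges to leave k clusters. The similarity matrix and the function names are hypothetical.

```python
def find_root(parent, x):
    """Union-find lookup with path halving."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def kruskal_tree(S):
    """Kruskal's algorithm on the complete graph whose edge weights are similarities.

    Edges are scanned from the most to the least similar pair, which yields the
    minimum spanning tree for the distance 1 - similarity.
    Returns the tree as a list of (similarity, a, b) edges.
    """
    n = len(S)
    parent = list(range(n))
    edges = sorted(((S[a][b], a, b) for a in range(n) for b in range(a + 1, n)), reverse=True)
    tree = []
    for sim, a, b in edges:
        ra, rb = find_root(parent, a), find_root(parent, b)
        if ra != rb:                       # the edge joins two components, so it cannot form a cycle
            parent[ra] = rb
            tree.append((sim, a, b))
            if len(tree) == n - 1:
                break
    return tree


def top_down_clusters(n, tree, k):
    """Split the tree into k clusters by removing its k - 1 weakest edges."""
    kept = sorted(tree, reverse=True)[: max(0, len(tree) - (k - 1))]
    parent = list(range(n))
    for _, a, b in kept:
        parent[find_root(parent, a)] = find_root(parent, b)
    groups = {}
    for v in range(n):
        groups.setdefault(find_root(parent, v), []).append(v)
    return sorted(groups.values())


# Hypothetical similarity matrix for 5 documents.
S = [
    [1.0, 0.9, 0.2, 0.1, 0.0],
    [0.9, 1.0, 0.3, 0.2, 0.1],
    [0.2, 0.3, 1.0, 0.8, 0.1],
    [0.1, 0.2, 0.8, 1.0, 0.4],
    [0.0, 0.1, 0.1, 0.4, 1.0],
]
tree = kruskal_tree(S)
print(tree)
print(top_down_clusters(5, tree, 2))
print(top_down_clusters(5, tree, 3))
```

Here the tree consists of the edges (0,1), (2,3), (3,4) and (1,2); removing the weakest one (similarity 0.3) gives the clusters {0, 1} and {2, 3, 4}.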

Modified Kruskal’s Algorithm and Bottom-Up Clusterization

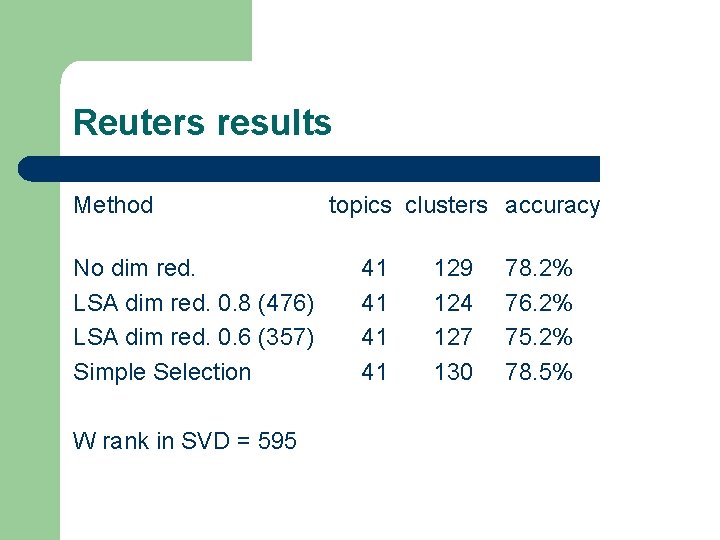

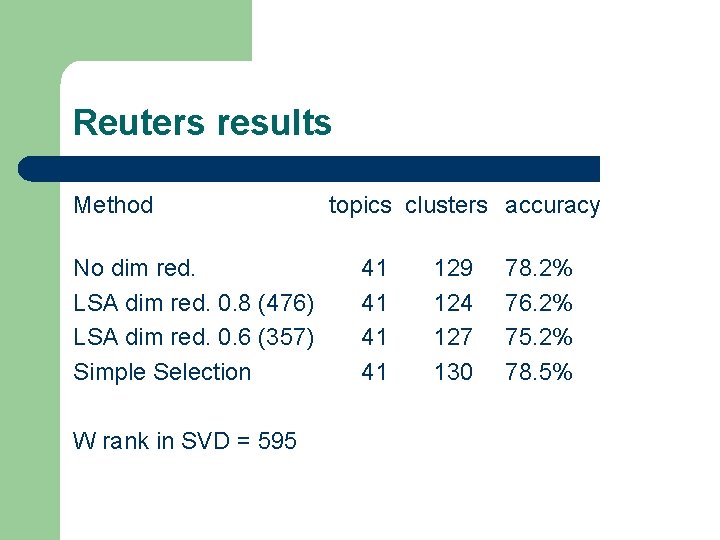
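
The modification to Kruskal's algorithm is shown only in the figures. One plausible reading, sketched here as an assumption, is bottom-up (single-linkage) agglomeration: every document starts as its own cluster, edges are scanned from the most similar pair downward, and merging stops once the edge similarity drops below a threshold. The threshold values, the similarity matrix, and the function names are hypothetical.

```python
def find_root(parent, x):
    """Union-find lookup with path halving."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def bottom_up_clusters(S, threshold):
    """Kruskal-style merging of singleton clusters, stopped at a similarity threshold.

    Returns (clusters, merges); merges lists the (similarity, a, b) edges in the
    order in which they joined two clusters.
    """
    n = len(S)
    parent = list(range(n))
    edges = sorted(((S[a][b], a, b) for a in range(n) for b in range(a + 1, n)), reverse=True)
    merges = []
    for sim, a, b in edges:
        if sim < threshold:
            break                          # every remaining edge is weaker: stop merging
        ra, rb = find_root(parent, a), find_root(parent, b)
        if ra != rb:                       # only edges between different clusters cause a merge
            parent[ra] = rb
            merges.append((sim, a, b))
    groups = {}
    for v in range(n):
        groups.setdefault(find_root(parent, v), []).append(v)
    return sorted(groups.values()), merges


# Hypothetical similarity matrix for 5 documents.
S = [
    [1.0, 0.9, 0.2, 0.1, 0.0],
    [0.9, 1.0, 0.3, 0.2, 0.1],
    [0.2, 0.3, 1.0, 0.8, 0.1],
    [0.1, 0.2, 0.8, 1.0, 0.4],
    [0.0, 0.1, 0.1, 0.4, 1.0],
]
clusters, merges = bottom_up_clusters(S, threshold=0.35)
print(clusters)
print(merges)
```

This stopping rule yields the same partition as deleting every minimum-spanning-tree edge weaker than the threshold, so the bottom-up and top-down views agree on the clusters; the merge list additionally records the order in which the clusters grow.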

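Reuters results

| Method | Clusters | Accuracy |
|---|---|---|
| No dim. red. | 129 | 78.2% |
| LSA dim. red. 0.8 (476) | 124 | 76.2% |
| LSA dim. red. 0.6 (357) | 127 | 75.2% |
| Simple Selection | 130 | 78.5% |

Rank of W in SVD = 595, so the numbers in parentheses are the retained dimensions (0.8 × 595 = 476, 0.6 × 595 = 357). Topics: 41.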

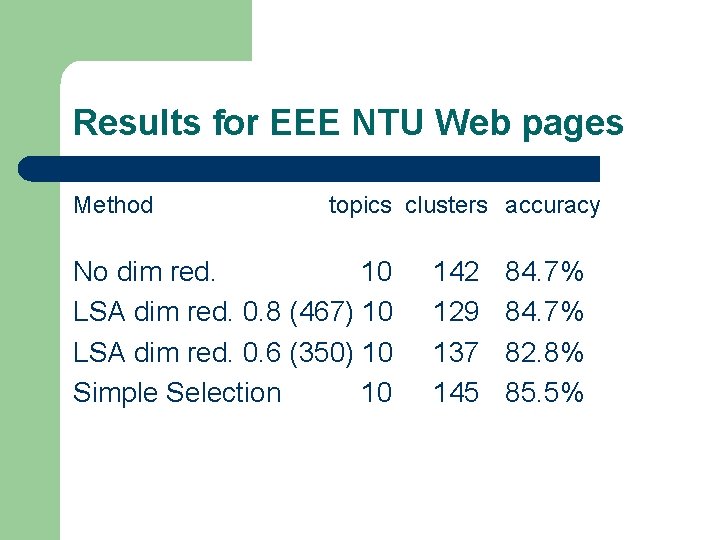

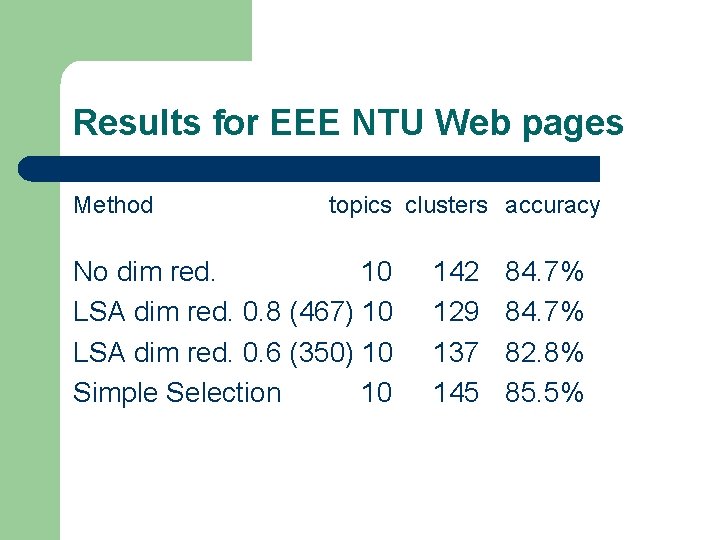

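Results for EEE NTU Web pages

| Method | Topics | Clusters |
|---|---|---|
| No dim. red. | 10 | 142 |
| LSA dim. red. 0.8 (467) | 10 | 129 |
| LSA dim. red. 0.6 (350) | 10 | 137 |
| Simple Selection | 10 | 145 |

Accuracy: 84.7%, 82.8%, 85.5% (only three values are given for the four methods).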

Examples

- TouchGraph LinkBrowser: http://www.neuron.m4u.pl/search

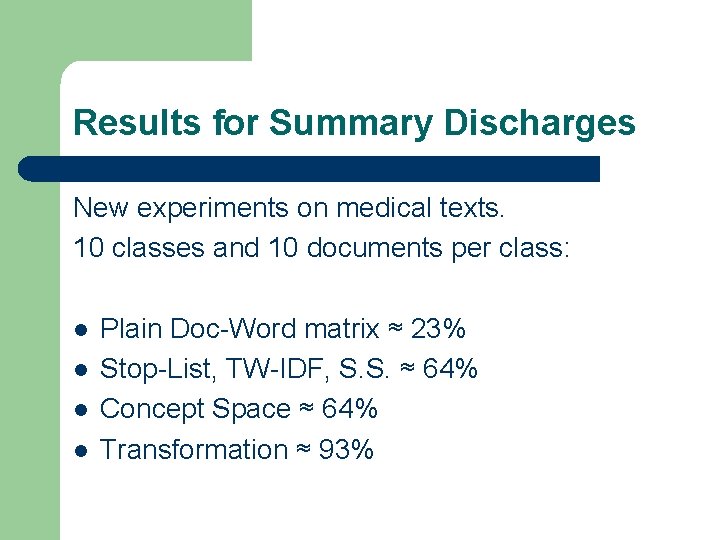

Results for Discharge Summaries

New experiments on medical texts, with 10 classes and 10 documents per class:

- Plain doc-word matrix: ≈ 23%
- Stop list, TW-IDF, simple selection (S.S.): ≈ 64%
- Concept space: ≈ 64%
- Transformation: ≈ 93%

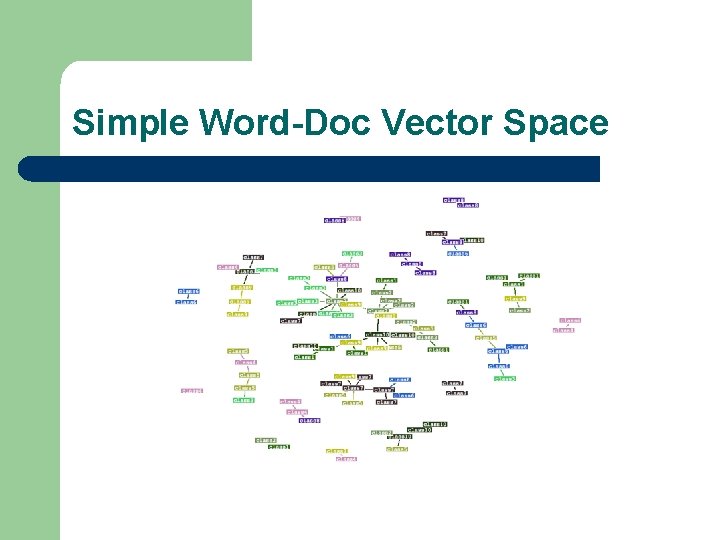

Simple Word-Doc Vector Space

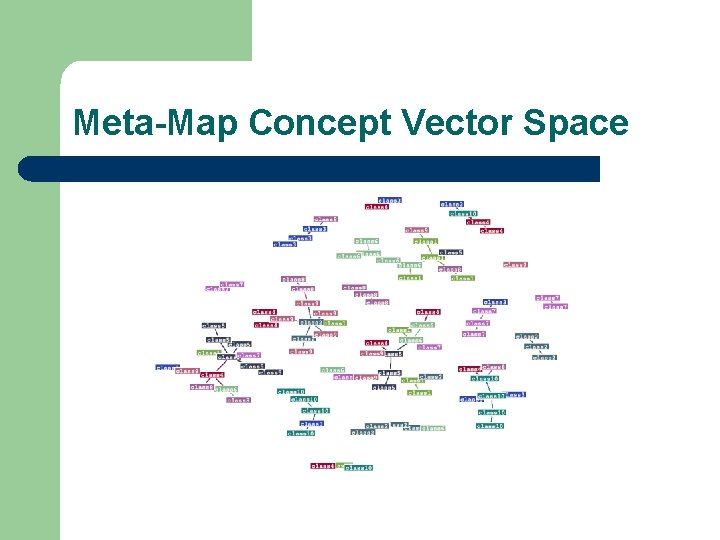

MetaMap Concept Vector Space

Concept Vector Space after transformation

Summary

- In real applications, a knowledge-based approach is needed to select only useful words and to parse people’s web pages.
- Other visualization methods (such as MDS) may be explored.
- People have many interests and thus may belong to several topic groups.
- This could be a very useful tool for creating new shared-interest groups on the Internet.