
- Slides: 22

Measurement and data collection

Measurement is the process of assigning numbers to variables by counting, ranking, and comparing objects or events.

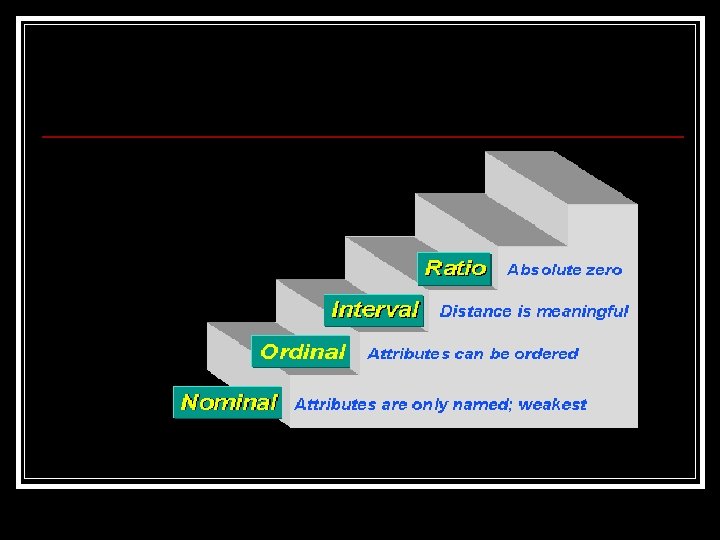

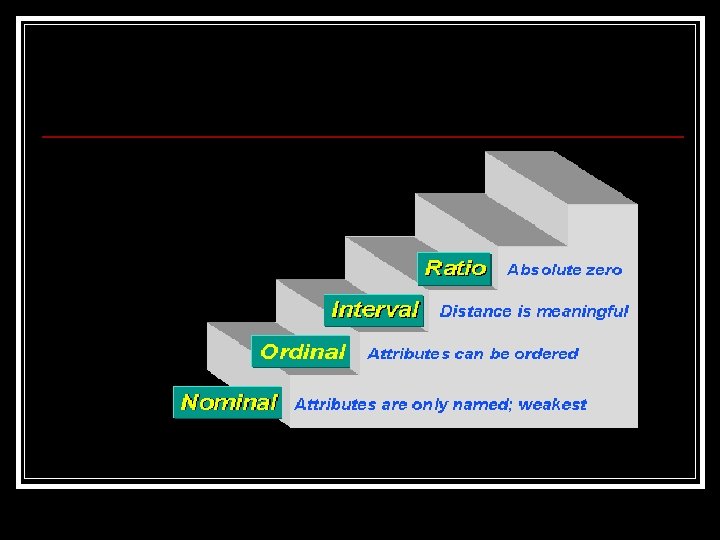

Levels of measurement:

- Nominal
- Ordinal
- Interval
- Ratio

Nominal level of measurement: categories that are distinct from each other, such as gender, religion, and marital status.

- The categories are symbols that have no quantitative value
- Lowest level of measurement

Ordinal level of measurement:

- The exact differences between the ranks cannot be specified; the ranks indicate order rather than exact quantity
- Example: anxiety level: mild, moderate, severe
- Statistics used involve frequency distributions and percentages
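A frequency distribution with percentages for an ordinal variable can be sketched in Python as follows. The ratings are hypothetical data invented for illustration, and the names `LEVELS`, `ratings`, and `table` are not from the slides.

```python
from collections import Counter

# Ordered categories of the ordinal variable (order matters, distances do not).
LEVELS = ["mild", "moderate", "severe"]

# Hypothetical anxiety ratings for eight participants (illustrative only).
ratings = ["mild", "severe", "moderate", "mild",
           "moderate", "mild", "severe", "mild"]

counts = Counter(ratings)
total = len(ratings)

# One row per level: (level, frequency, percentage of all ratings).
table = [(level, counts[level], 100 * counts[level] / total) for level in LEVELS]

for level, frequency, percent in table:
    print(f"{level:<10}{frequency:>3}{percent:>8.1f}%")
```

The rows are printed in rank order, which is the only ordering information an ordinal scale carries.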

Interval level of measurement:

- Values are real numbers, and the difference between the ranks can be specified
- They are actual numbers on a scale of measurement
- Example: body temperature on the Celsius thermometer; readings of 36.2 and 37.2 mean there is a difference of 1.0 degree in body temperature

Ratio level of measurement:

- The highest level of data: data can be categorized and ranked, the difference between ranks can be specified, and a true or natural zero point can be identified
- A zero point means that there is a total absence of the quantity being measured
- Example: total amount of money
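The practical difference between the interval and ratio levels can be illustrated with a small Python sketch. It assumes a change of units (Celsius to Fahrenheit, dollars to cents) as the test: equal differences stay equal on an interval scale, but ratios are only stable when the scale has a true zero. The helper names and the numbers are illustrative, not from the slides.

```python
def celsius_to_fahrenheit(celsius):
    """Convert a Celsius reading to Fahrenheit (the zero point shifts)."""
    return celsius * 9 / 5 + 32

def dollars_to_cents(dollars):
    """Convert dollars to cents (zero stays zero)."""
    return dollars * 100

# Interval scale: Celsius has no true zero, so ratios are not meaningful.
ratio_celsius = 20.0 / 10.0
ratio_fahrenheit = celsius_to_fahrenheit(20.0) / celsius_to_fahrenheit(10.0)

# Equal differences do remain equal after the unit change.
step_low = celsius_to_fahrenheit(20.0) - celsius_to_fahrenheit(10.0)
step_high = celsius_to_fahrenheit(30.0) - celsius_to_fahrenheit(20.0)

# Ratio scale: money has a true zero, so "twice as much" survives a unit change.
ratio_dollars = 40.0 / 20.0
ratio_cents = dollars_to_cents(40.0) / dollars_to_cents(20.0)

print(ratio_celsius, ratio_fahrenheit)  # the two ratios disagree
print(step_low, step_high)              # the two differences agree
print(ratio_dollars, ratio_cents)       # the two ratios agree
```

The temperature ratio changes with the unit, so "20 degrees is twice as hot as 10 degrees" is not a valid statement, whereas "40 dollars is twice 20 dollars" holds in any currency unit.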

Data collection process:

- What will be collected?
- How will the data be collected?
- Who will collect the data?
- Where will the data be collected?
- When will the data be collected?

Data collection methods:

- Self-report questionnaires
- Interviews
- Physiological measures
- Attitude scales
- Psychological tests
- Observational methods

Data collection instruments:

- Research instruments (tools)
- A researcher can choose between using an existing instrument and developing a new one
- Pilot study: a small-scale trial run of the actual research project

Criteria for selection of a data-collection instrument:

1. Practicality of the instrument: cost and appropriateness for the study population
2. Reliability: consistency and stability, measured with correlational procedures: a correlation coefficient (between -1.0 and +1.0) computed between two sets of scores or between the ratings of two judges

Reliability:

1. Stability reliability: consistency over time (test-retest reliability)
2. Equivalence reliability (inter-rater or inter-observer reliability)
3. Internal consistency reliability

Reliability activity:

- What would you think of a bathroom scale that gave you drastically different readings every time you stepped on it?
- How about a standardized entrance examination that gave different scores each time it was used on the same individual?

Background: The inconsistency in these test results would indicate an unreliable instrument. We want our test instruments to measure whatever they are supposed to measure with consistency. That is, any errors, or differences between test scores for an individual, should result from chance and not from systematic error.

2. Equivalence reliability (inter-rater or inter-observer reliability): the degree to which different forms of an instrument obtain the same results, or to which two observers using a single instrument obtain the same results. Example: using two different forms of the questions.

3. Internal consistency reliability: addresses the extent to which all items on an instrument measure the same variable. Example: all items should measure depression; if one item measures guilt instead, the instrument is not internally consistent. One way to assess it is the split-half method.
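A minimal Python sketch of the split-half method, under stated assumptions: the items are split into odd-numbered and even-numbered halves (one common choice; the slides do not say how to split), each half is totalled per respondent, and the two sets of totals are correlated. The response data and the helper names `pearson_r` and `split_half_r` are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x)
    spread_y = sum((b - mean_y) ** 2 for b in y)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("scores in each list must vary")
    return covariation / sqrt(spread_x * spread_y)

def split_half_r(item_scores):
    """Correlate odd-item totals with even-item totals, one row per respondent."""
    odd_totals = [sum(row[0::2]) for row in item_scores]   # items 1, 3, ...
    even_totals = [sum(row[1::2]) for row in item_scores]  # items 2, 4, ...
    return pearson_r(odd_totals, even_totals)

# Hypothetical responses: five respondents, four items scored 0 to 3.
responses = [
    [3, 3, 2, 3],
    [1, 0, 1, 1],
    [2, 2, 2, 1],
    [0, 1, 0, 0],
    [3, 2, 3, 3],
]

half_correlation = split_half_r(responses)
print(round(half_correlation, 2))
```

A high correlation between the two halves suggests that the items are measuring the same variable; a low one suggests that some items are measuring something else.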

Reliability of an instrument is determined by a correlation coefficient:

- The range is -1.0 to +1.0
- A value above 0.7 is considered satisfactory
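As an illustration, the coefficient can be computed for a stability (test-retest) check. This is a sketch assuming the Pearson correlation is the coefficient in use (the slides do not name a specific one); the scores and the names `pearson_r`, `first_administration`, and `second_administration` are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x)
    spread_y = sum((b - mean_y) ** 2 for b in y)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("scores in each list must vary")
    return covariation / sqrt(spread_x * spread_y)

# Hypothetical scores for the same five participants on two occasions.
first_administration = [12, 15, 9, 20, 17]
second_administration = [13, 14, 10, 19, 18]

r = pearson_r(first_administration, second_administration)

# Threshold taken from the slide: above 0.7 is considered satisfactory.
is_satisfactory = r > 0.7

print(f"r = {r:.2f}, satisfactory: {is_satisfactory}")
```

The same function applies to the ratings of two judges: pass one judge's ratings as `x` and the other's as `y`.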

Validity of the instrument: the degree to which an instrument measures what it is supposed to be measuring. The greater the validity of an instrument, the more confidence one can have that the instrument will obtain data that will answer the research questions or test the research hypotheses.

Types of validity:

- Face validity
- Content validity
- Criterion validity
- Construct validity

Face validity: a brief and hasty examination of an instrument.

- Use of experts in the content area

Content validity: concerned with the scope or range of items used to measure the variable, i.e., the number and type of items used to measure the concept.

Criterion validity: the extent to which an instrument corresponds to or is correlated with some criterion.

- Two types: concurrent and predictive

Construct validity: the degree to which an instrument measures the construct that it is supposed to measure.