Louisiana Tech University College of Engineering and Science

- Slides: 24

Using Item Analysis to Adjust Testing and Topical Coverage in Individual Courses
Bernd S. W. Schröder, College of Engineering and Science, Louisiana Tech University

ABET’s requirements
- “The institution must evaluate, advise, and monitor students to determine its success in meeting program objectives.” (From criterion 1)
- “(The institution must have) a system of ongoing evaluation that demonstrates achievement of these objectives and uses the results to improve the effectiveness of the program.” (2d)

ABET’s requirements (cont.)
- “Each program must have an assessment process with documented results. Evidence must be given that the results are applied to the further development and improvement of the program. The assessment process must demonstrate that the outcomes important to the mission of the institution and the objectives of the program, including those listed above, are being measured.” (postlude to a-k)

ABET’s requirements and us
- Long-term assessment is the only way to go, but how can more immediate data be obtained?
- Large feedback loops need to be complemented with small feedback loops
- All this costs time and money
- Still feels foreign to some faculty
- “Free” tools that “do something for me” would be nice

Data that is immediately available
- Faculty give LOTS of tests
- Tests are graded and returned
- Next term we make a new test
- … and we may wonder why things do not improve

Presenter’s context
- Adjustment of topical coverage in Louisiana Tech University’s integrated curriculum
- Presenter had not taught all courses previously
- Some material was moved to “nontraditional” places
- How do we find out what works well?

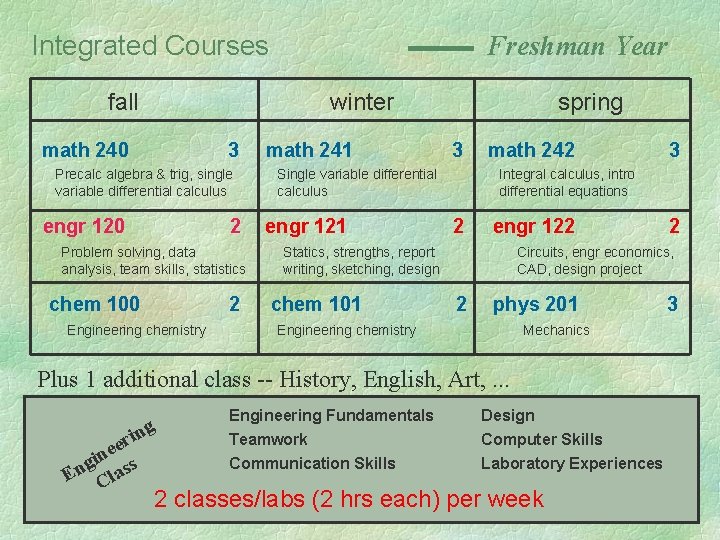

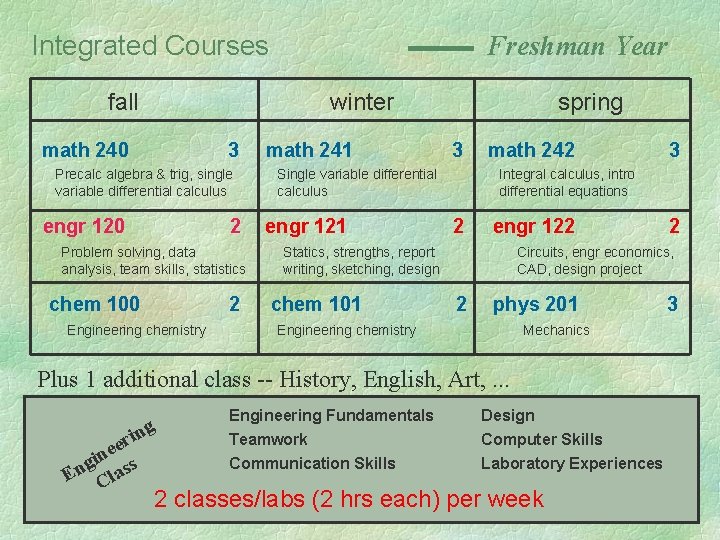

Integrated Courses: Freshman Year (fall, winter, spring)
- math 240 (3): Precalc algebra & trig, single variable differential calculus
- math 241 (3): Single variable differential calculus
- math 242 (3): Integral calculus, intro differential equations
- engr 120 (2): Problem solving, data analysis, team skills, statistics
- engr 121 (2): Circuits, engr economics, CAD, design project
- engr 122 (2): Statics, strengths, report writing, sketching, design
- chem 100 (2): Engineering chemistry
- chem 101 (2): Engineering chemistry
- phys 201 (3): Mechanics
- Plus 1 additional class: History, English, Art, ...

Engineering Class: Engineering Fundamentals, Design, Teamwork, Communication Skills, Computer Skills, Laboratory Experiences; 2 classes/labs (2 hrs each) per week

Integrated Courses: Sophomore Year (fall, winter, spring)
- math 242 (3): Basic statistics, multivariable integral calculus
- math 244 (3): Multivariable differential calculus, vector analysis
- math 245 (3): Sequences, series, differential equations
- engr 220 (3): Statics and strengths
- engr 221 (3): EE applications and circuits
- engr 222 (3): Thermodynamics
- memt 201 (2): Engineering materials
- physics 202 (3): Electric and magnetic fields, optics
- Plus 1 additional class: History, English, Art, ...

Engineering Class: Engineering Fundamentals, Design, Teamwork, Communication Skills, Statistics & Engr Economics, Laboratory Experiences; 3 hours lab & 2.5 hours lecture per week

Implementation Schedule
- AY 1997-98: One pilot group of 40
- AY 1998-99: One pilot group of 120
- AY 1999-2000: Full implementation

Item analysis
- Structured method to analyze (MC) test data
- Can detect “good” and “bad” test questions
  - Awkward formulation
  - “Blindsided” students
- Can detect problem areas in the instruction
  - Difficult material
  - Teaching that was less than optimal
- Plus, data that usually is lost is stored

“But I don’t give tests...”
- Do you grade projects, presentations, lab reports with a rubric?
  - Scores are sums of scores on parts
- Do you evaluate surveys? (Gloria asked)
  - Individual questions may have numerical responses (Likert scale)
- Item analysis is applicable to situations in which many “scores” are to be analyzed

Literature
- R. M. Zurawski, Making the Most of Exams: Procedures for Item Analysis, The National Teaching and Learning Forum, vol. 7, no. 6, 1998, 1-4
  - http://www.ntlf.com
- http://ericae.net/ft/tamu/Espy.htm (Bio!)
- http://ericae.net/ (On-line Library)

Underlying Assumptions in Literature
- Multiple Choice
- Homogeneous test
- Need to separate high from low
- Are these valid for our tests? (This will affect how we use the data.)

How does it work?
- Input all individual scores in a spreadsheet
  - If you already use any calculating device to do this, then this step is free
  - The same goes for machine-recorded scores (multiple choice, surveys)
- Compute averages, correlations, etc.
  - But what does it tell us?

(Presentation based on actual and “cooked” sample data.)
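As a rough illustration of the input step, the sketch below parses a spreadsheet export into a score matrix with one row per student and one column per item. The CSV layout, the column names, and the values are invented for illustration; a real gradebook export will likely look different.

```python
import csv
import io

# Hypothetical export: one row per student, one column per test item.
# Column names and scores are made up for illustration.
SAMPLE_CSV = """student,q1,q2,q3
A,10,4,0
B,8,5,3
C,10,0,0
"""

def load_scores(text):
    """Parse CSV text into (student ids, item names, rows of numeric scores)."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader)
    items = header[1:]          # every column after the id is an item
    students, rows = [], []
    for record in reader:
        if not record:
            continue            # skip blank lines
        students.append(record[0])
        rows.append([float(x) for x in record[1:]])
    return students, items, rows

students, items, rows = load_scores(SAMPLE_CSV)
totals = [sum(r) for r in rows]  # total score per student
```

Once the scores are in this shape, the averages and correlations discussed on the following slides are simple column operations.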

Item Difficulty
- Compute the average score of students on the given item
- Is a high/low average good or bad?
- How do we react?
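A minimal sketch of the item-difficulty computation, assuming each score is stored as the fraction of the item's points earned (0.0 to 1.0). The sample matrix is invented; the function simply averages each column.

```python
# Hypothetical sample data: one row per student, one column per item,
# each entry is the fraction of the item's points earned.
scores = [
    [1.0, 0.5, 0.0],
    [1.0, 1.0, 0.5],
    [1.0, 0.0, 0.0],
    [0.5, 0.5, 0.0],
]

def item_difficulty(scores):
    """Return the mean score per item (the column averages)."""
    n_students = len(scores)
    n_items = len(scores[0])
    return [sum(row[j] for row in scores) / n_students for j in range(n_items)]

difficulty = item_difficulty(scores)
```

In this made-up data the first item has a high average and the third a low one; whether either is "good" or "bad" is exactly the question the slide raises, and the number alone does not answer it.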

Comparison Top vs. Bottom
- General idea: high performers should outperform low performers on all test items
- Compare average scores of top X% to average scores of bottom X%
- Problems on which the top group outscores the bottom group by about 30% are good separators (retain)
- Advantage: Simple
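The comparison can be sketched as follows. Students are ranked by total score, and for each item the average of the bottom group is subtracted from the average of the top group. The 25% default for X and the 0/1 sample data are assumptions made for illustration; the slides do not fix a value of X.

```python
def top_bottom_gap(scores, fraction=0.25):
    """Per item: mean score of the top group minus mean of the bottom group.

    Students are ranked by total score. `fraction` plays the role of X%;
    the 0.25 default is an assumption, not a value from the slides.
    """
    ranked = sorted(scores, key=sum, reverse=True)
    k = max(1, int(len(ranked) * fraction))   # group size, at least 1
    top, bottom = ranked[:k], ranked[-k:]
    n_items = len(scores[0])
    return [
        sum(r[j] for r in top) / k - sum(r[j] for r in bottom) / k
        for j in range(n_items)
    ]

# Invented 0/1 scores for eight students on four items.
scores = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [1, 0, 0, 0],
    [0, 0, 0, 1],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
]
gaps = top_bottom_gap(scores)
```

A negative gap, as on the last item of this invented data set, marks a problem on which the bottom group outscored the top group and which therefore deserves a closer look.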

Comparison Top vs. Bottom
- Problems on which the bottom group scores near or above the top group should be analyzed
  - Is the formulation intelligible?
  - Was the material taught adequately?
  - Was the objective clear to everyone?
  - Does the problem simply address a different learning style?
  - Is there a problem with the top performers?

Comparison Student Group vs. Rest
- Can analyze strengths and weaknesses of specific demographics (even vs. odd, 11-20 vs. rest).
- Knowing a weakness and doing something about it unfortunately need not be the same thing. (3 NT)
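The same per-item comparison works for any split of the class. The sketch below takes a predicate on the student's row index, so that a split such as "even vs. odd" can be expressed directly. The function name, the index-based selection, and the data are hypothetical.

```python
def group_vs_rest(scores, in_group):
    """Per item: mean score of the selected students minus mean of the rest.

    `in_group` takes a 0-based student index and returns True or False.
    """
    group = [row for i, row in enumerate(scores) if in_group(i)]
    rest = [row for i, row in enumerate(scores) if not in_group(i)]
    if not group or not rest:
        raise ValueError("both groups must be non-empty")

    def mean(rows, j):
        return sum(r[j] for r in rows) / len(rows)

    n_items = len(scores[0])
    return [mean(group, j) - mean(rest, j) for j in range(n_items)]

# Invented data: students at even indices do well on item 1, the others on item 2.
scores = [[1, 0], [0, 1], [1, 0], [0, 1]]
gaps = group_vs_rest(scores, lambda i: i % 2 == 0)
```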

Comparison Class vs. Class
- If the test is given to several groups of individuals, then scores of the different groups can be compared
- Differences in scores can sometimes be traced to differences in teaching style
- Similarity in scores can reassure faculty that a particular subject may have been genuinely “easy” or “hard”.

Correlation
- Related material should have scores that correlate
- Individual problem scores should correlate with the total? (What if very different skills are tested on the same test?)
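One way to put a number on "correlates with the total" is the Pearson correlation between each item's scores and the students' total scores. The sketch below is a plain implementation under two assumptions: the total includes the item itself (no correction is applied), and an item on which every student scored the same is reported as NaN because its correlation is undefined. The data are invented.

```python
import math

def pearson(x, y):
    """Pearson correlation of two equal-length lists; NaN if either is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)

def item_total_correlations(scores):
    """Correlate each item's column with the students' total scores."""
    totals = [sum(row) for row in scores]
    n_items = len(scores[0])
    return [pearson([row[j] for row in scores], totals) for j in range(n_items)]

# Invented scores for three students on three items.
scores = [[0, 1, 1], [1, 1, 1], [2, 1, 0]]
r = item_total_correlations(scores)
```

A low or negative value does not by itself mean the item is bad; as the slide notes, a test that mixes very different skills can produce items that legitimately do not track the total.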

Correlation and Separation
- Often the two are correlated but “cross fires” can occur
  - Questions with the same correlation can have different separations and vice versa
  - A question may separate well, yet not correlate well and vice versa

Distractor Analysis
- An incorrect MC option (distractor) that nobody selected should be replaced
- Possible by slightly misusing the tool.
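For a single multiple-choice item, finding unused distractors amounts to counting how often each option was chosen. The sketch below assumes the raw responses are available as option letters; the function name and the data are hypothetical.

```python
from collections import Counter

def unused_distractors(responses, options, correct):
    """Return the incorrect options that no student selected."""
    counts = Counter(responses)   # missing options count as 0
    return [o for o in options if o != correct and counts[o] == 0]

# Invented responses of six students to one item whose correct answer is "A".
responses = ["A", "C", "A", "B", "A", "C"]
unused = unused_distractors(responses, options="ABCD", correct="A")
```

Here option "D" attracted no one, so by the rule on the slide it would be a candidate for replacement.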

Data remains available
- Many faculty look to old tests (of their own or from others) when making a new test
- Past problems are often forgotten
- Item analysis provides a detailed record of the outcome and allows faculty to re-think testing and teaching strategies
- Anyone who has spent time thinking about curving may want to spend this time on item analysis

Consequences of the Evaluation (Gloria’s law: E=mc²)
- Don’t panic, keep data with test
- Better test design
- Identification of challenging parts of the class leads to adjustment in coverage
- Students are better prepared for following classes
