Lecture 6: Word Representations (Vikas Ashok)



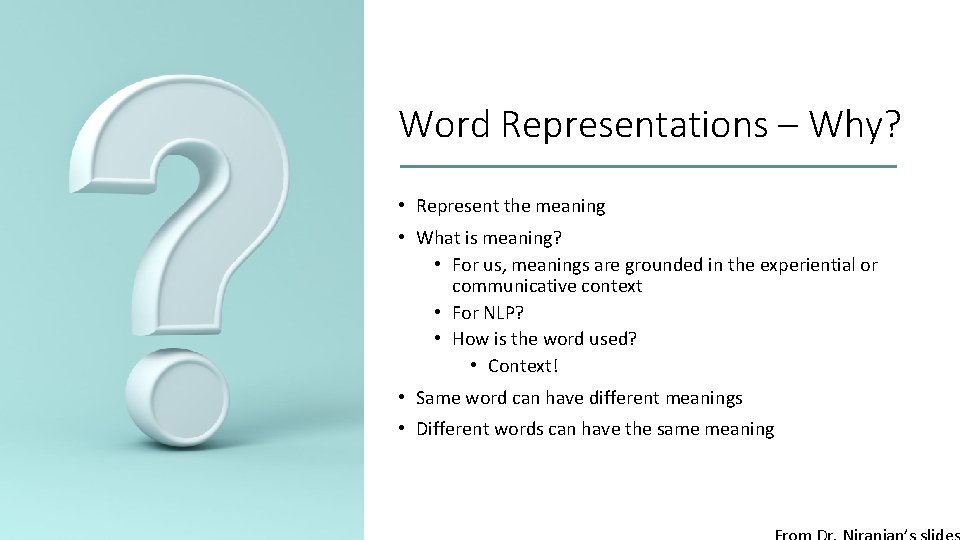

Word Representations: Why?
- Represent the meaning of a word.
- What is meaning?
  - For us, meanings are grounded in the experiential or communicative context.
  - For NLP: how is the word used? Context!
- The same word can have different meanings.
- Different words can have the same meaning.
- How does the word get used?
  - In what contexts is the word typically used?
  - What roles does the word play in those contexts?

Word Representation: Properties
- Similar contexts ⇒ similar representations.
- A representation is a function: word → k-dimensional vector.
- The components of the vector should ideally capture the main semantics of the word, i.e., aspects of its meaning.
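As a minimal sketch of the "word → k-dimensional vector" view, a representation can be stored as a lookup table: an embedding matrix with one row per vocabulary word. The five-word vocabulary, k = 4, and the random values are hypothetical stand-ins for whatever a method such as SVD or word2vec would actually produce.

```python
import random

# Hypothetical toy vocabulary; a real system builds this from a corpus.
vocab = ["cats", "love", "food", "dogs", "tails"]
word_to_index = {w: i for i, w in enumerate(vocab)}

k = 4  # embedding dimension (arbitrary choice for illustration)
rng = random.Random(0)

# Embedding matrix: one k-dimensional row per vocabulary word.
# The rows are random here; training is what would make words used in
# similar contexts end up with similar rows.
embeddings = [[rng.uniform(-1.0, 1.0) for _ in range(k)] for _ in vocab]


def embed(word: str) -> list[float]:
    """Map a word to its k-dimensional vector by table lookup."""
    return embeddings[word_to_index[word]]


print(embed("cats"))
```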

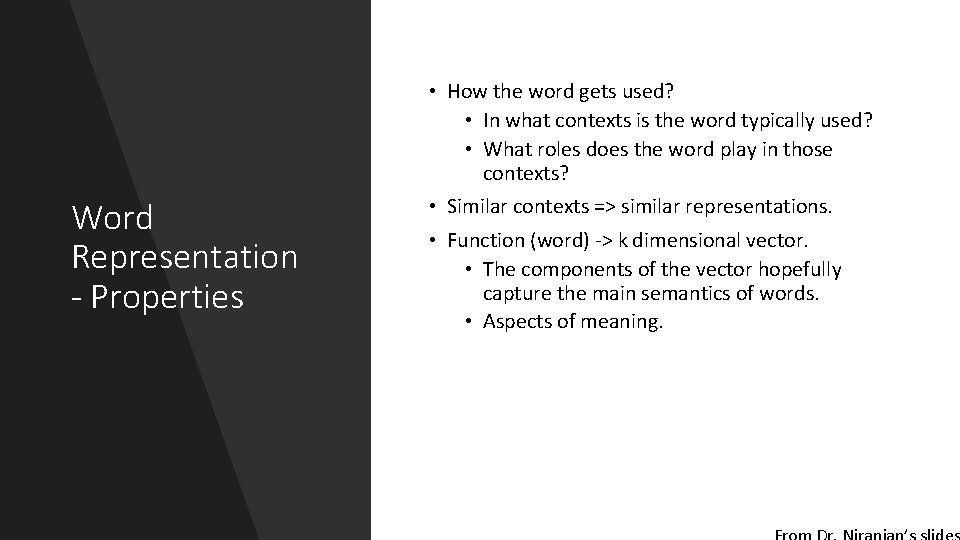

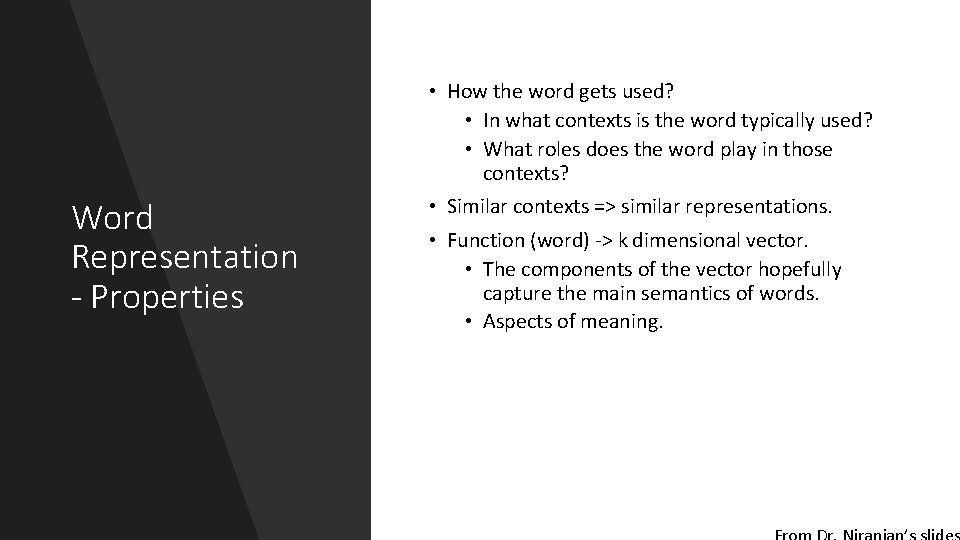

One-Hot Encoding
- Not a good solution. Why?
  - Each vector is as long as the vocabulary, so vectors are huge for large vocabularies.
  - All words are equidistant: similar and dissimilar words look equally far apart.
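Both problems can be checked directly. In this sketch (the three-word vocabulary is hypothetical), each vector has one dimension per vocabulary word, and every pair of distinct words has dot product 0 and the same Euclidean distance, √2, whether or not the words are related.

```python
import math

vocab = ["hotel", "motel", "cat"]  # hypothetical toy vocabulary


def one_hot(word):
    """|V|-dimensional vector: 1 at the word's index, 0 everywhere else."""
    vec = [0] * len(vocab)
    vec[vocab.index(word)] = 1
    return vec


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def distance(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


hotel, motel, cat = (one_hot(w) for w in vocab)
print(len(hotel))                                      # 3: one dimension per word
print(dot(hotel, motel), dot(hotel, cat))              # 0 0: no notion of similarity
print(distance(hotel, motel) == distance(hotel, cat))  # True: all words equidistant
```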

![Distributional Semantics slide](https://slidetodoc.com/presentation_image_h2/5519d3d68c27c5f34c573127c6ebc5cc/image-5.jpg)

Distributional Semantics
- [Firth 1957]: a word is characterized by the company it keeps.
- A word's meaning can be determined by the contexts in which it appears.
- That is, we can represent a word by its contexts.
- But contexts are words too!

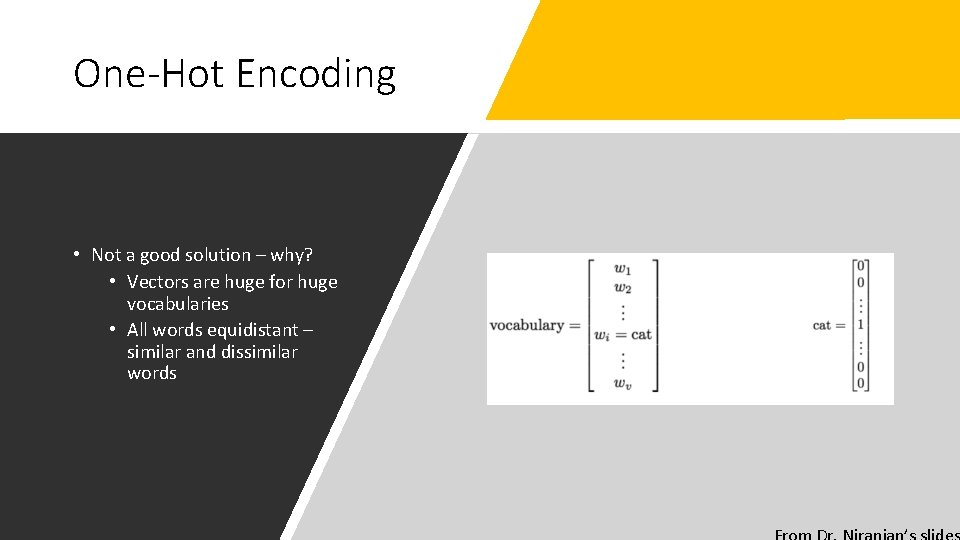

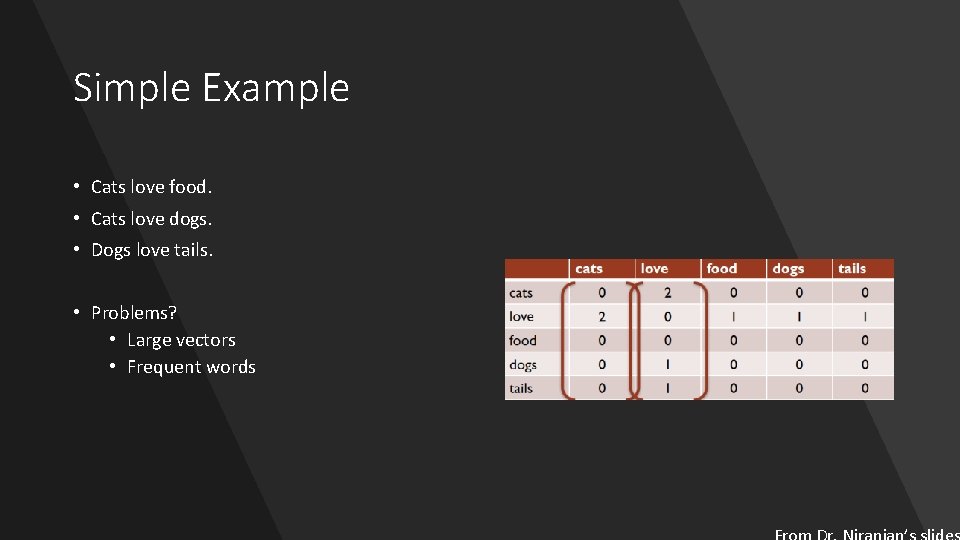

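Simple Example
- Corpus: “Cats love food.” “Cats love dogs.” “Dogs love tails.”
- Problems?
  - Large vectors (one dimension per vocabulary word)
  - Frequent words (e.g., “love”, which co-occurs with every other word here) dominate the counts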
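A sketch of building the count-based representation for this corpus. It assumes lowercased tokens with the final period dropped and a symmetric window of one word on each side; the slide does not state the window, so that choice is illustrative. Each row of `counts` is the vector for one word.

```python
corpus = ["Cats love food.", "Cats love dogs.", "Dogs love tails."]
window = 1  # assumption: count only immediate neighbours


def tokenize(sentence):
    return sentence.lower().rstrip(".").split()


sentences = [tokenize(s) for s in corpus]

# Vocabulary in order of first appearance.
vocab = []
for tokens in sentences:
    for t in tokens:
        if t not in vocab:
            vocab.append(t)
index = {w: i for i, w in enumerate(vocab)}

# |V| x |V| matrix of co-occurrence counts.
counts = [[0] * len(vocab) for _ in vocab]
for tokens in sentences:
    for i, w in enumerate(tokens):
        start, end = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:
                counts[index[w]][index[tokens[j]]] += 1

for w in vocab:
    print(f"{w:>5}", counts[index[w]])
```

With these counts, “cats” and “dogs” get identical rows because both only ever appear next to “love”, while “love” has a nonzero entry for every other word: the frequent-word problem in miniature.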

![Examples slide: word-vector analogy (from Girish K’s slides)](https://slidetodoc.com/presentation_image_h2/5519d3d68c27c5f34c573127c6ebc5cc/image-7.jpg)

Examples
- vector[Queen] ≈ vector[King] - vector[Man] + vector[Woman] (the word vector nearest to the right-hand side is Queen)

From Girish K’s slides
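A minimal sketch of how such an analogy is evaluated: compute vector[King] - vector[Man] + vector[Woman], then return the vocabulary word with the highest cosine similarity to the result, excluding the three input words (a common convention). The 2-dimensional vectors are hypothetical and hand-made (think of the axes loosely as "royalty" and "gender"); real embeddings are learned and have far more dimensions.

```python
import math

# Hypothetical hand-made vectors, for illustration only.
vectors = {
    "king": [0.90, 0.80],
    "queen": [0.85, -0.75],
    "man": [0.10, 0.90],
    "woman": [0.05, -0.85],
    "apple": [-0.70, 0.10],
}


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def analogy(a, b, c):
    """Word closest (by cosine) to vec[a] - vec[b] + vec[c], excluding a, b, c."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(analogy("king", "man", "woman"))  # queen
```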

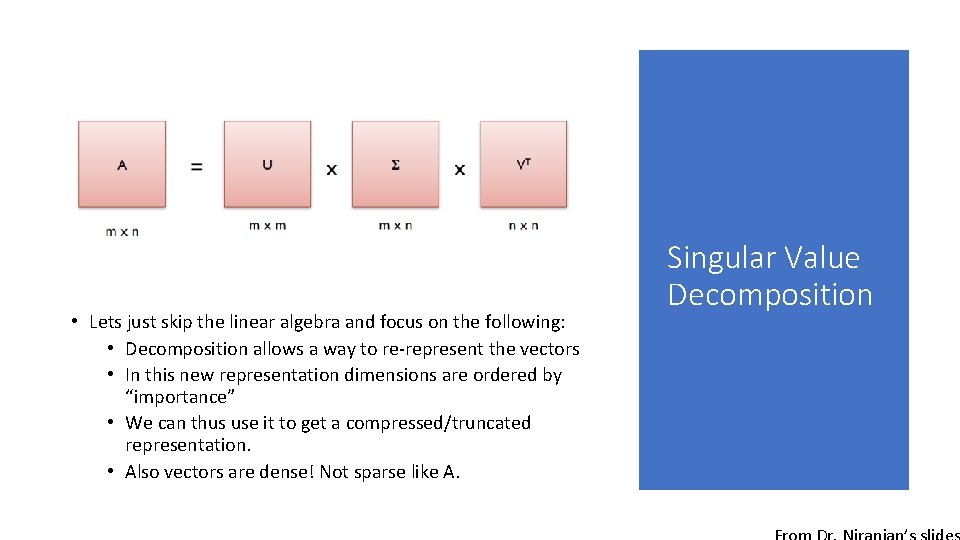

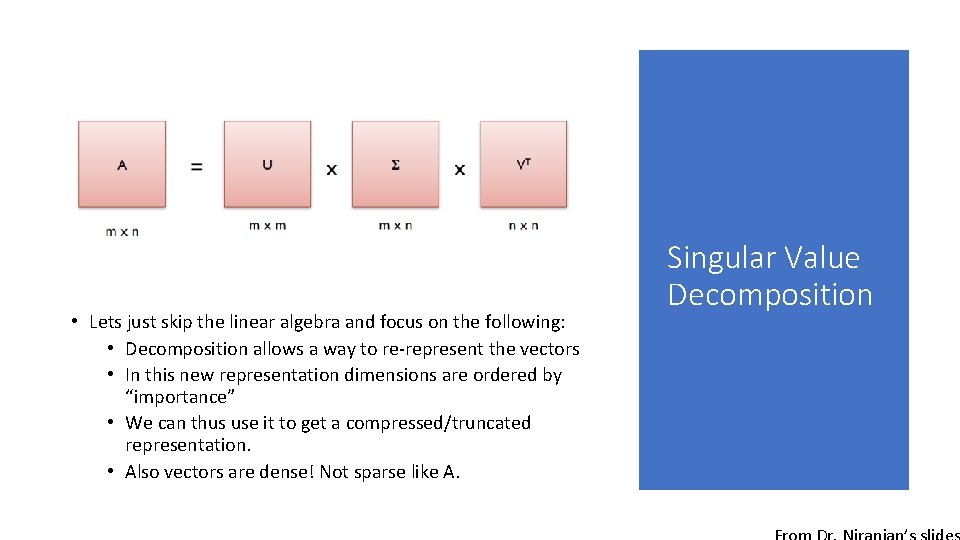

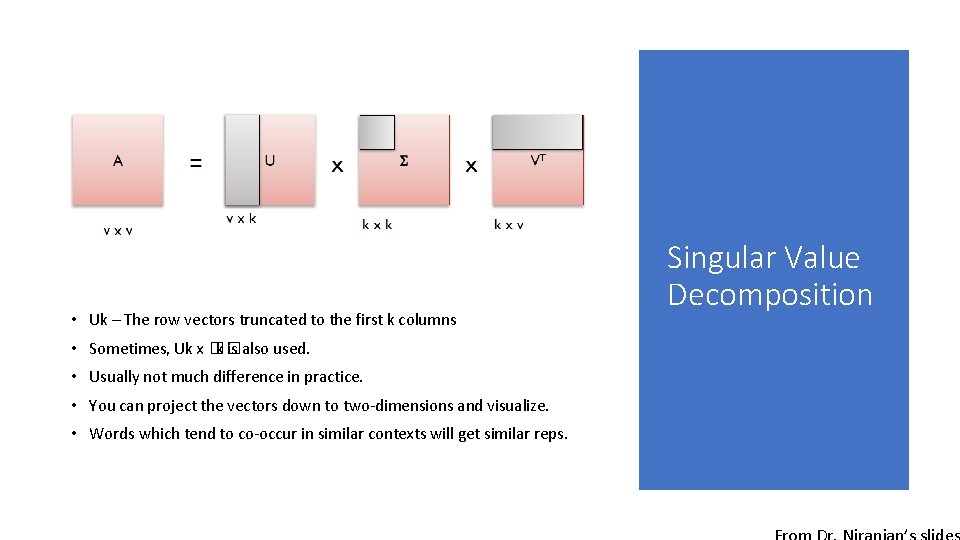

Singular Value Decomposition
- Let's skip the linear algebra and focus on the following:
  - The decomposition gives a way to re-represent the vectors.
  - In the new representation, dimensions are ordered by “importance” (the size of the corresponding singular value).
  - We can thus keep only the first k dimensions to get a compressed (truncated) representation.
  - The resulting vectors are also dense, not sparse like A.
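A minimal NumPy sketch of the ordering and truncation, using the co-occurrence counts of the three-sentence example with a window of one word (an assumption; the slide does not fix the window). `np.linalg.svd` returns $A = U \Sigma V^\top$ with the singular values sorted largest first, and keeping the first k of them gives the best rank-k approximation of A (in the least-squares sense).

```python
import numpy as np

words = ["cats", "love", "food", "dogs", "tails"]
# Toy co-occurrence counts (window of 1, assumed).
A = np.array([
    [0, 2, 0, 0, 0],
    [2, 0, 1, 2, 1],
    [0, 1, 0, 0, 0],
    [0, 2, 0, 0, 0],
    [0, 1, 0, 0, 0],
], dtype=float)

U, S, Vt = np.linalg.svd(A)  # A = U @ diag(S) @ Vt
print(S)                     # singular values, largest first

k = 2
A_k = U[:, :k] @ np.diag(S[:k]) @ Vt[:k, :]  # rank-k truncation
print(np.round(A_k, 2))
```

This particular matrix has rank 2 (two singular values of √10 ≈ 3.16, the rest 0), so truncating to k = 2 loses nothing. On real corpora the trailing singular values are small but nonzero, and truncation yields an approximation.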

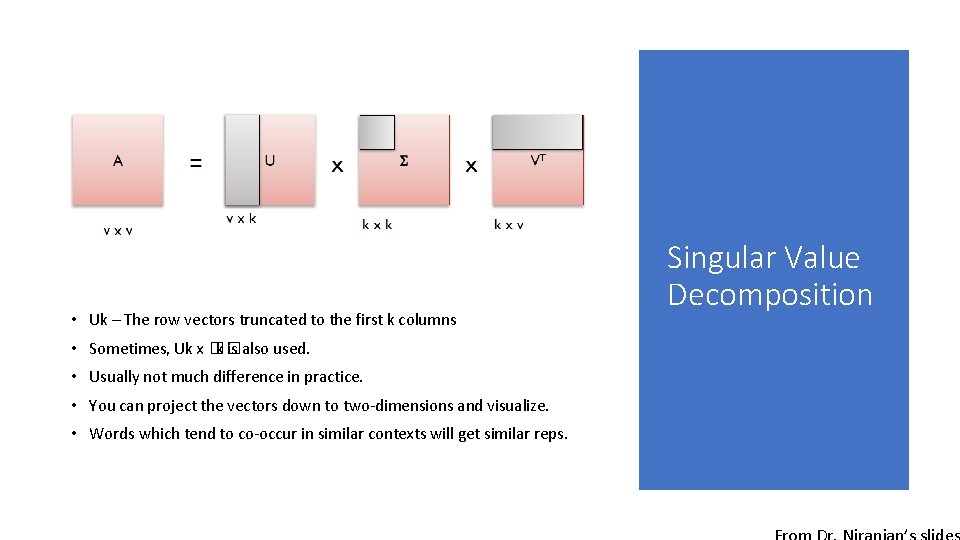

- $U_k$: the rows of $U$, truncated to the first $k$ columns, are used as the word vectors.
- Sometimes $U_k \Sigma_k$ is used instead; in practice there is usually not much difference.
- You can project the vectors down to two dimensions and visualize them.
- Words that tend to occur in similar contexts get similar representations.
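A sketch that extracts both variants of the word vectors, $U_k$ and $U_k \Sigma_k$, from the same toy co-occurrence matrix (window of one word, an assumption) and compares two words by cosine similarity. With k = 2 each row is already a point that can be plotted.

```python
import numpy as np

words = ["cats", "love", "food", "dogs", "tails"]
# Toy co-occurrence counts for the three-sentence example (window of 1, assumed).
A = np.array([
    [0, 2, 0, 0, 0],
    [2, 0, 1, 2, 1],
    [0, 1, 0, 0, 0],
    [0, 2, 0, 0, 0],
    [0, 1, 0, 0, 0],
], dtype=float)

U, S, Vt = np.linalg.svd(A)
k = 2
U_k = U[:, :k]      # word vectors: rows of U, first k columns
US_k = U_k * S[:k]  # variant U_k Sigma_k: column j scaled by the j-th singular value


def cosine(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


i, j = words.index("cats"), words.index("dogs")
print(cosine(U_k[i], U_k[j]), cosine(US_k[i], US_k[j]))  # both about 1.0

# With k = 2, each row is a 2-d point ready for a scatter plot.
for w, (x, y) in zip(words, US_k):
    print(f"{w:>5}: ({x:+.2f}, {y:+.2f})")
```

In this tiny corpus all four nouns have the same single neighbour (“love”), so their vectors all point in the same direction; larger corpora give graded similarities. The signs of the coordinates are arbitrary, since SVD determines each singular vector only up to sign.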

Singular Value Decomposition
- High computational complexity (expensive for large co-occurrence matrices)
- High maintenance (adding new words or documents means recomputing the decomposition)
- Alternative option: treat the word representations themselves as the learning target.
  - Learn representations that maximize some objective on a training set.
- Three examples:
  - Skip-gram (word2vec)
  - GloVe
  - Neural language models (Bengio)
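To make “learn representations that maximize an objective” concrete, here is a minimal skip-gram sketch with a full softmax. Practical word2vec training usually replaces the full softmax with negative sampling or hierarchical softmax for speed. The sentence, the window of 2, the vector size of 8, the learning rate, and the epoch count are all illustrative assumptions. Minimizing the average negative log-likelihood below is the same as maximizing the probability of the observed context words.

```python
import numpy as np

sentence = "the cat sat on floor".split()  # toy corpus (assumption)
vocab = sorted(set(sentence))
idx = {w: i for i, w in enumerate(vocab)}
window, dim = 2, 8                          # illustrative hyperparameters

# Skip-gram training pairs: (center word, one context word within the window).
pairs = [
    (idx[center], idx[sentence[j]])
    for i, center in enumerate(sentence)
    for j in range(max(0, i - window), min(len(sentence), i + window + 1))
    if j != i
]

rng = np.random.default_rng(0)
V = rng.normal(scale=0.1, size=(len(vocab), dim))  # center ("input") vectors
U = rng.normal(scale=0.1, size=(len(vocab), dim))  # context ("output") vectors


def softmax(scores):
    e = np.exp(scores - scores.max())  # shift for numerical stability
    return e / e.sum()


def loss():
    """Average negative log-likelihood of each context word given its center word."""
    return -np.mean([np.log(softmax(U @ V[c])[o]) for c, o in pairs])


def sgd_epoch(lr=0.1):
    for c, o in pairs:
        p = softmax(U @ V[c])
        p[o] -= 1.0                  # gradient of -log p_o w.r.t. the scores
        grad_v = U.T @ p             # gradient w.r.t. the center vector
        grad_U = np.outer(p, V[c])   # gradient w.r.t. all context vectors
        V[c] -= lr * grad_v
        U[:] -= lr * grad_U


before = loss()
for _ in range(50):
    sgd_epoch()
after = loss()
print(len(pairs), round(before, 3), round(after, 3))
```

After training, the rows of `V` (sometimes combined with `U`) serve as the word vectors.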

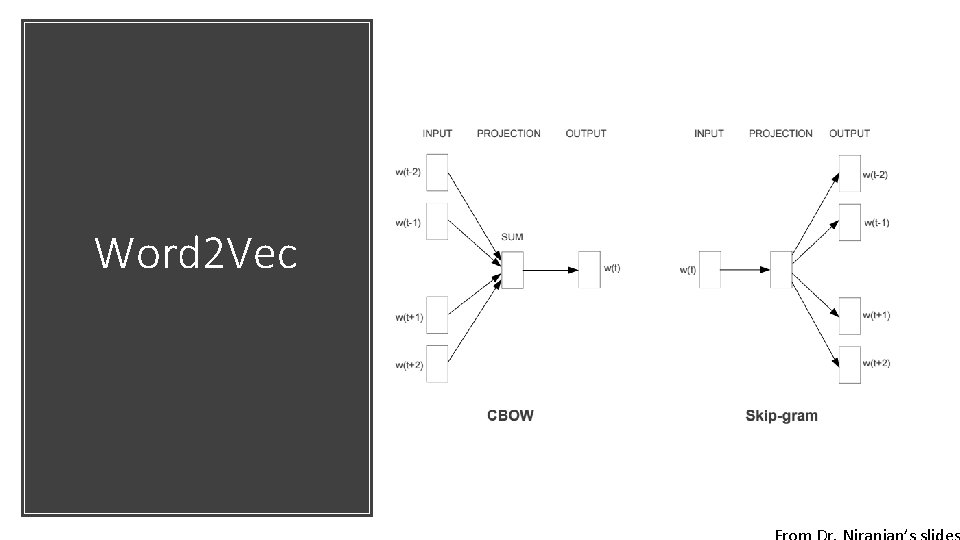

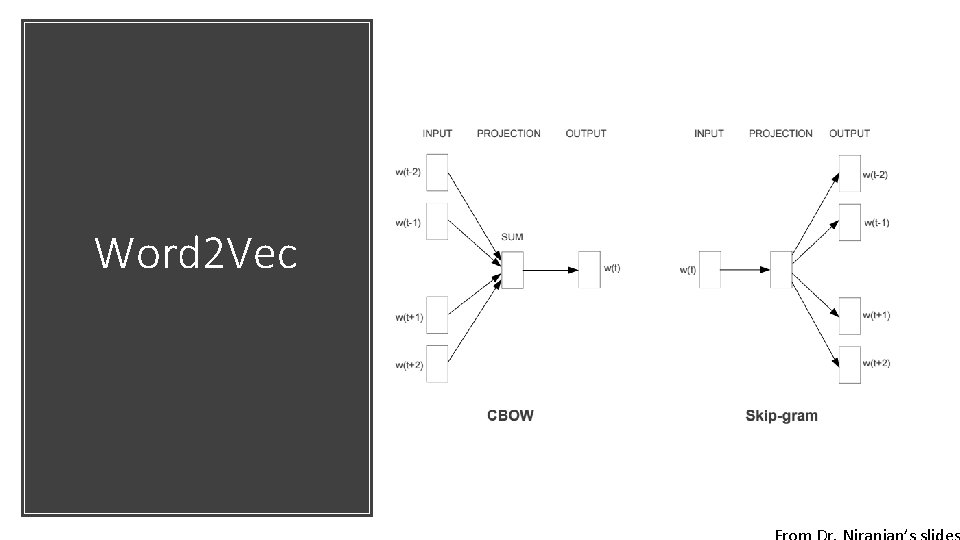

Word2Vec

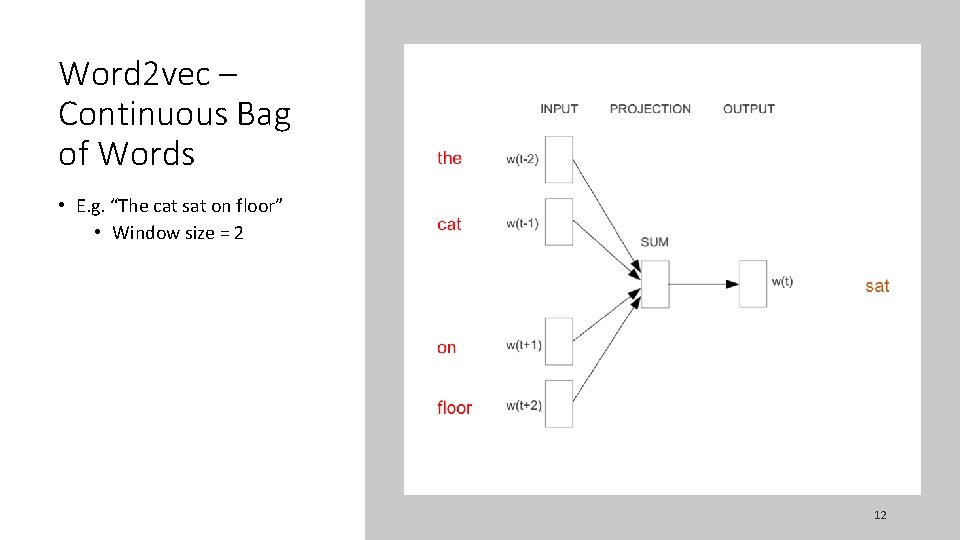

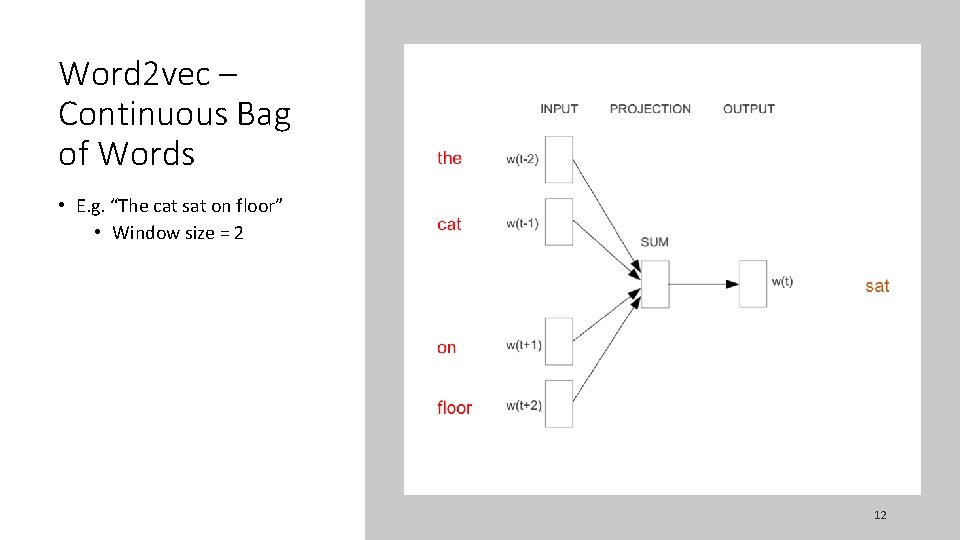

Word2vec: Continuous Bag of Words (CBOW)
- E.g., “The cat sat on floor”
- Window size = 2
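A sketch of how CBOW turns this sentence into training examples, assuming “window size = 2” means two words on each side of the target. The forward pass averages the context vectors and applies a softmax over the vocabulary to score candidate center words. The matrix names `W_in` and `W_out` and the vector size are illustrative, and the weights are untrained.

```python
import numpy as np

sentence = "the cat sat on floor".split()
window = 2  # assumed: two words on each side

# CBOW training examples: (context words within the window, target word).
examples = []
for i, target in enumerate(sentence):
    context = [sentence[j]
               for j in range(max(0, i - window), min(len(sentence), i + window + 1))
               if j != i]
    examples.append((context, target))

for context, target in examples:
    print(context, "->", target)

vocab = sorted(set(sentence))
idx = {w: i for i, w in enumerate(vocab)}
dim = 4  # assumed embedding size
rng = np.random.default_rng(0)
W_in = rng.normal(scale=0.1, size=(len(vocab), dim))   # context (input) embeddings
W_out = rng.normal(scale=0.1, size=(len(vocab), dim))  # target (output) embeddings


def predict(context):
    """CBOW forward pass: average the context vectors, then softmax over the vocabulary."""
    h = W_in[[idx[w] for w in context]].mean(axis=0)
    scores = W_out @ h
    p = np.exp(scores - scores.max())
    return p / p.sum()


p = predict(["the", "cat", "on", "floor"])
print({w: round(float(x), 3) for w, x in zip(vocab, p)})  # untrained: roughly uniform
```

Training would adjust `W_in` and `W_out` so that `predict` puts high probability on “sat” for this context.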

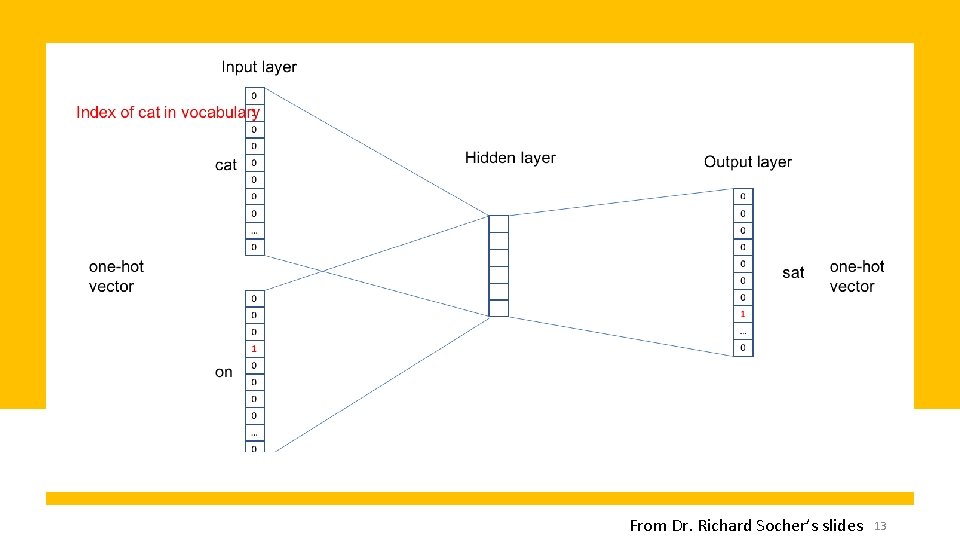

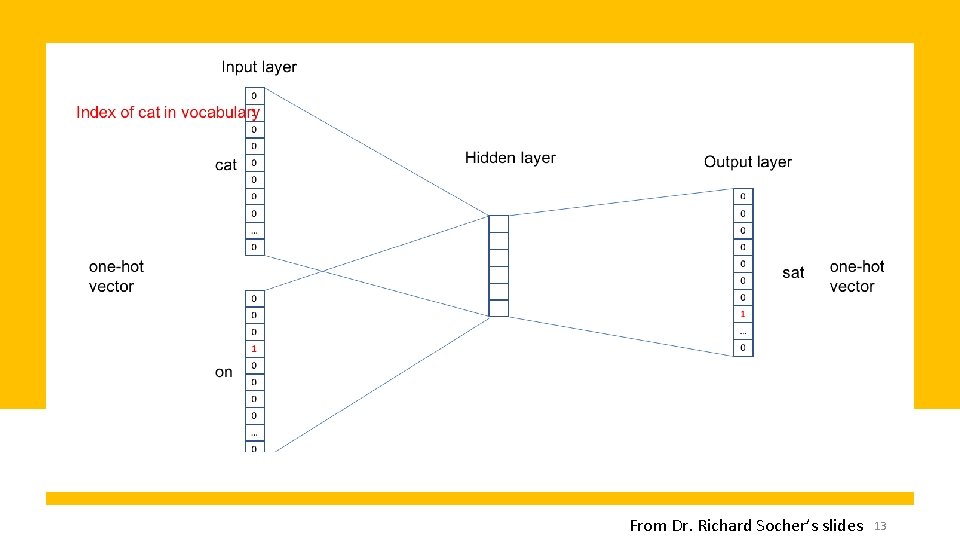

From Dr. Richard Socher’s slides

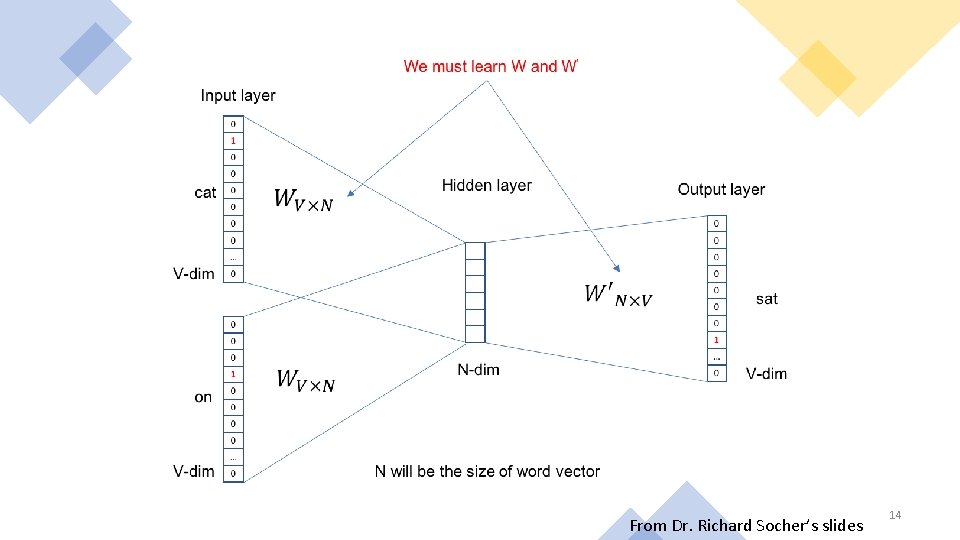

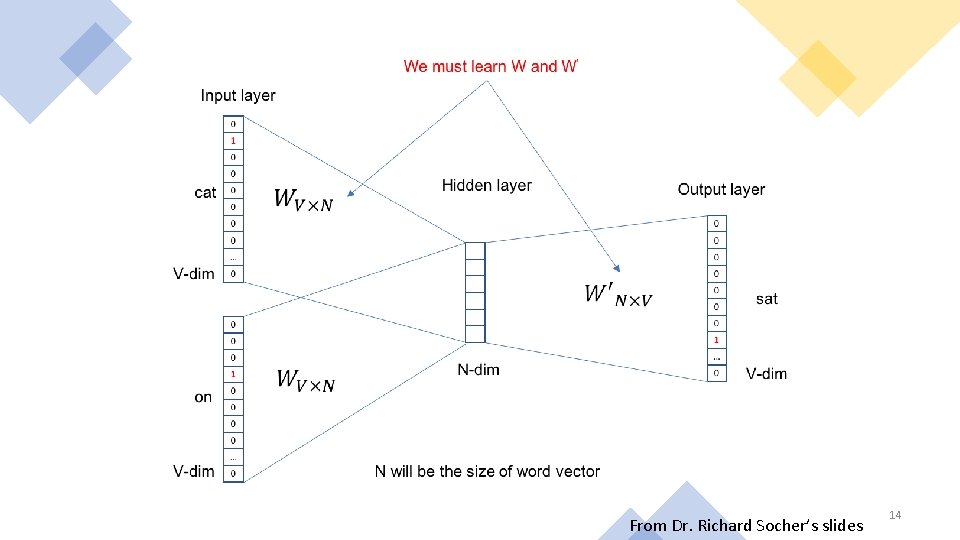


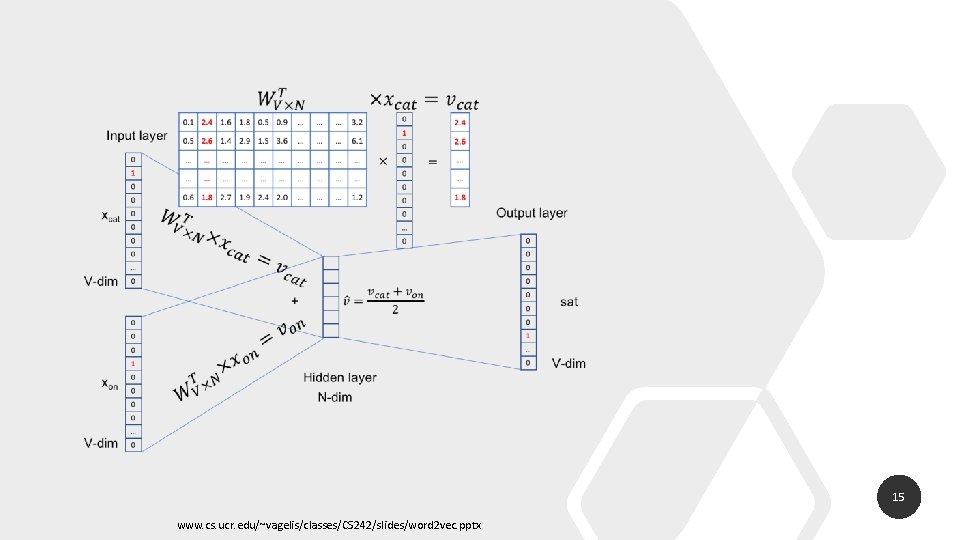

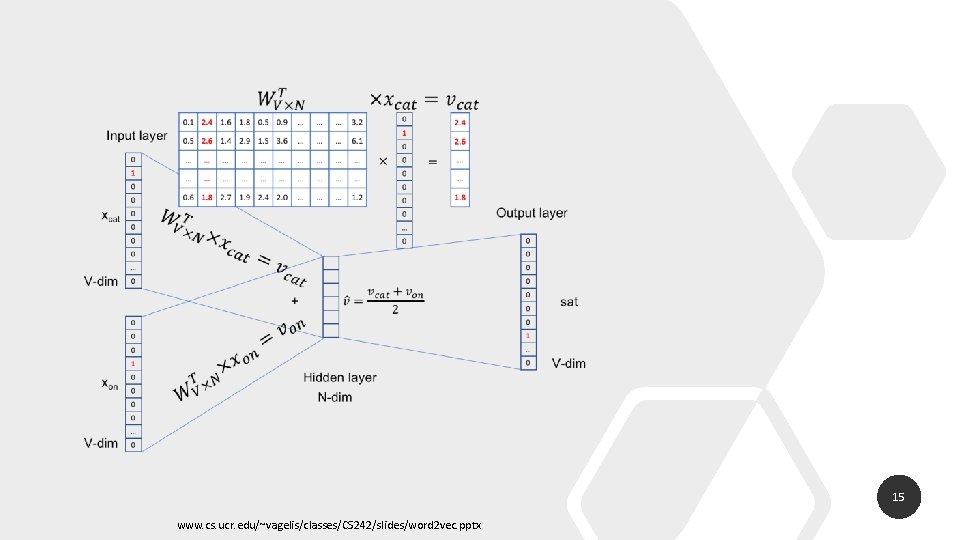

www.cs.ucr.edu/~vagelis/classes/CS242/slides/word2vec.pptx

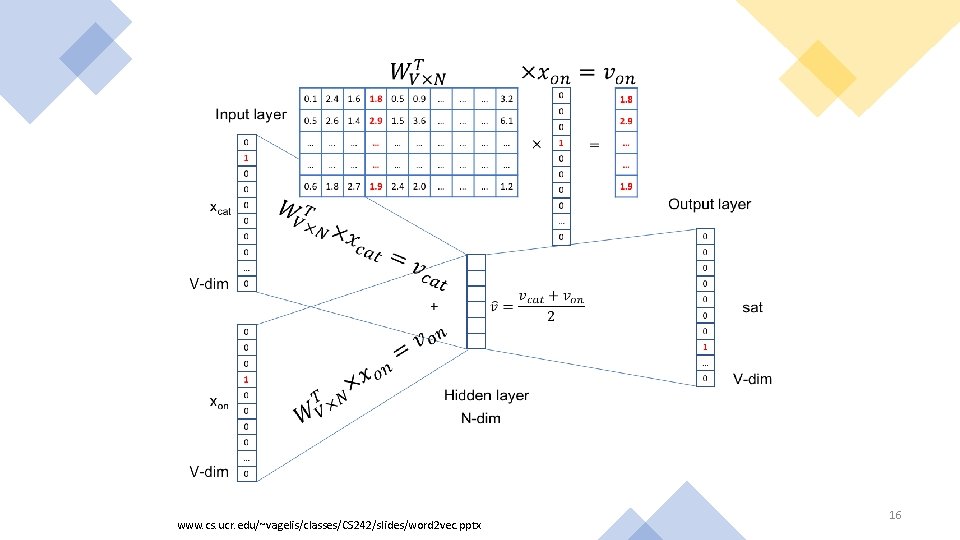

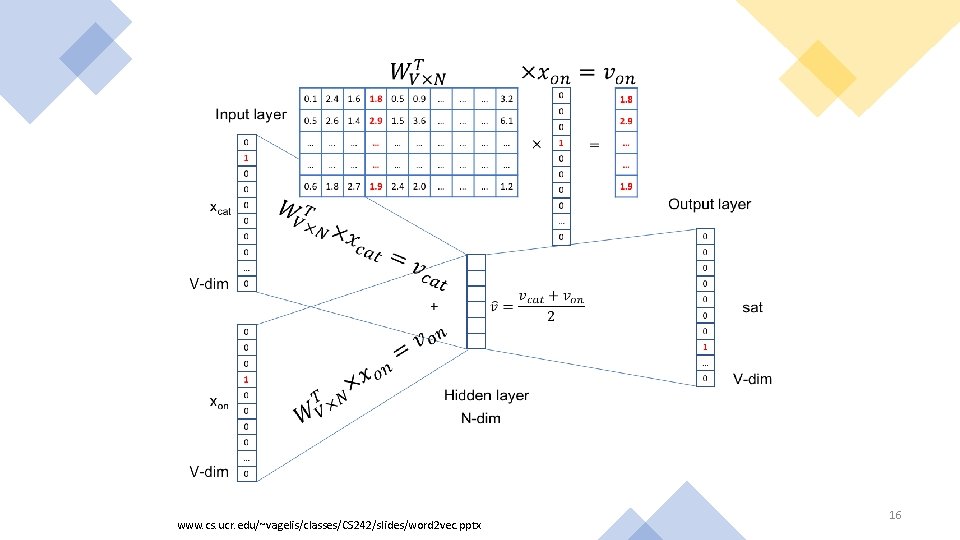


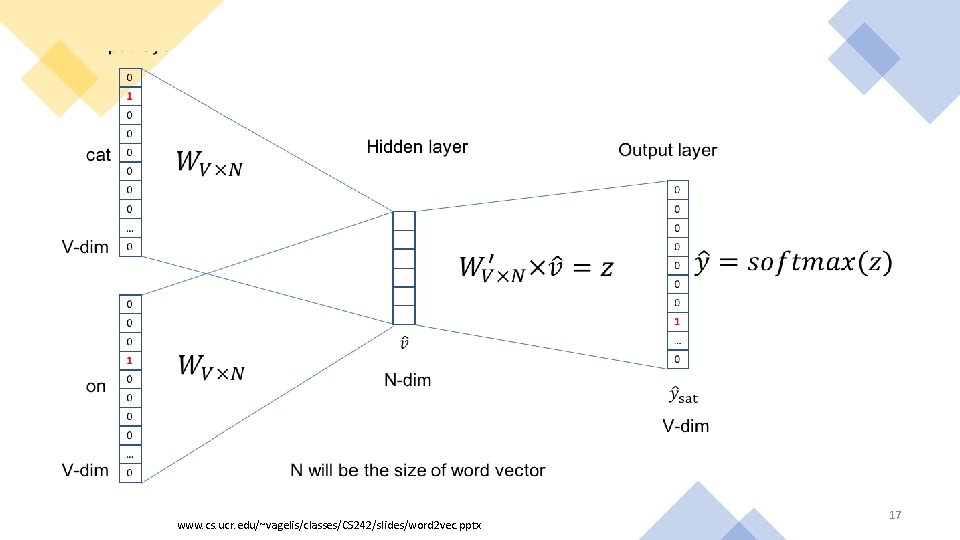

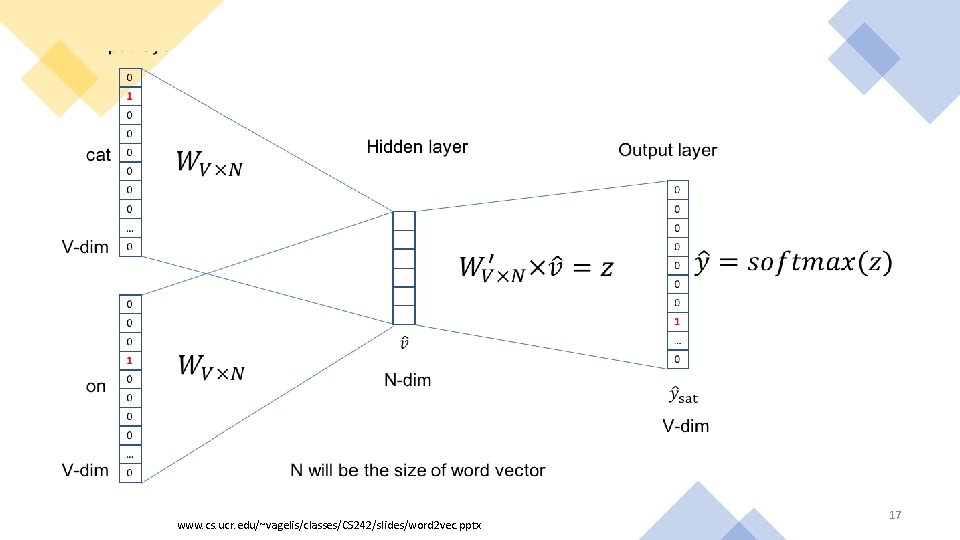


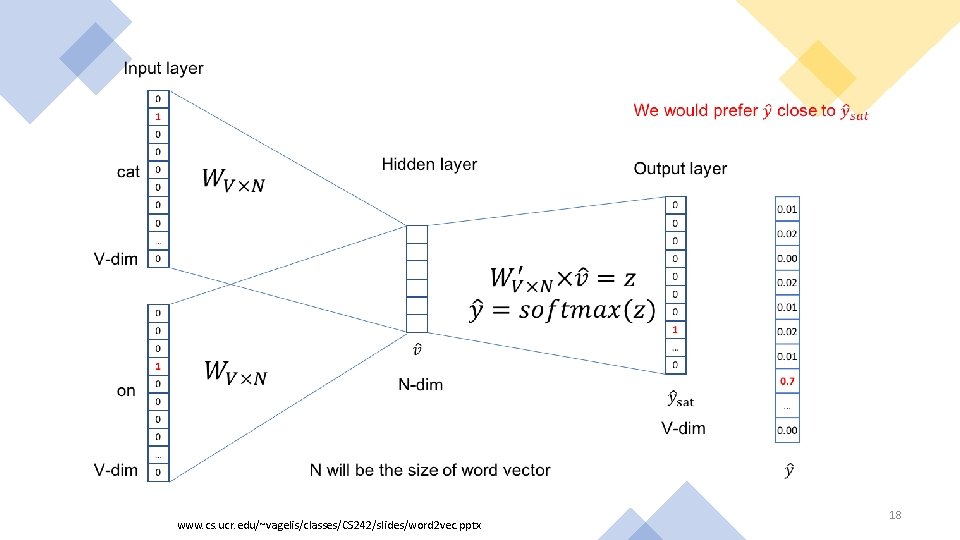

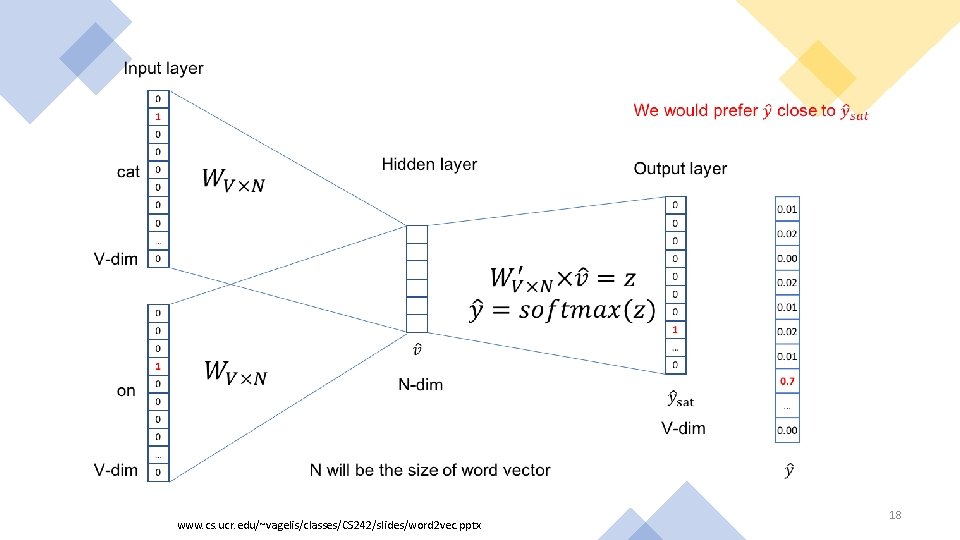


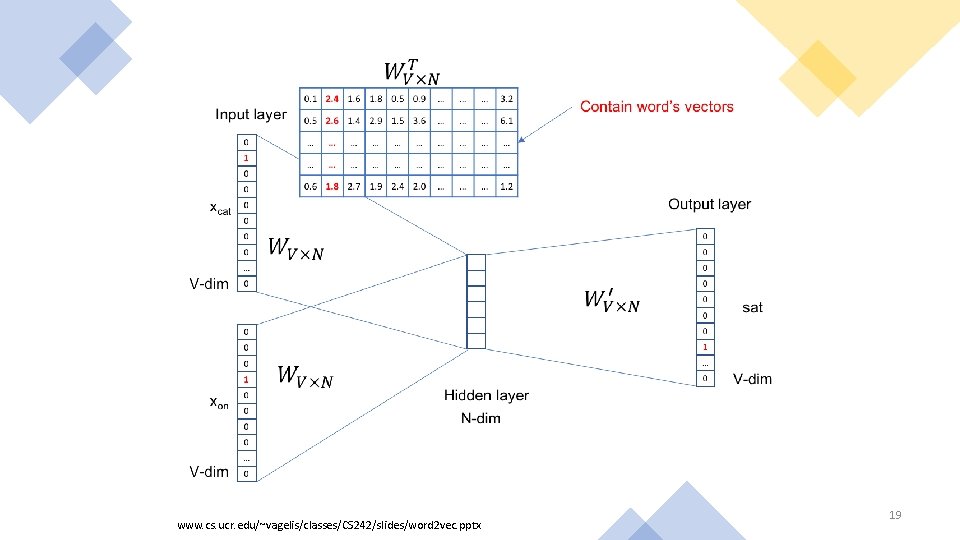

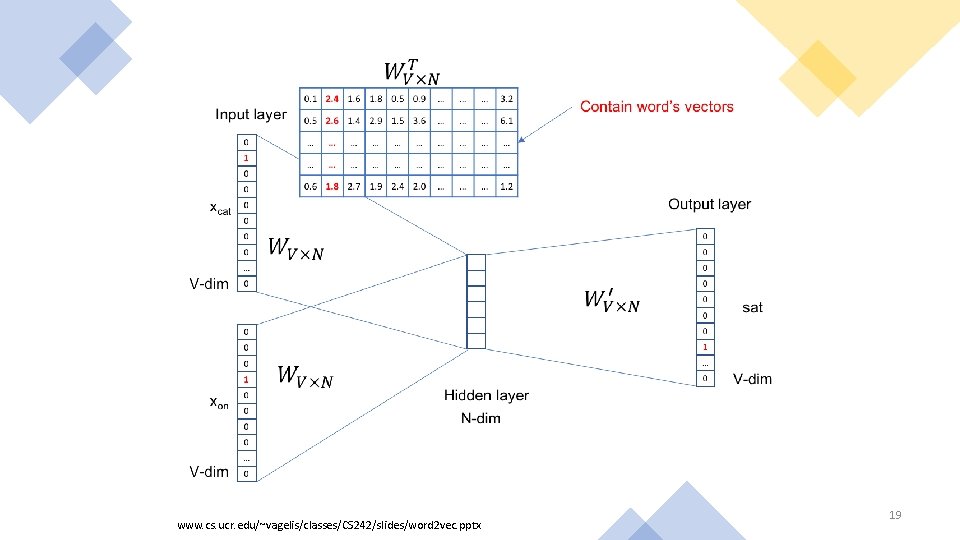


Resources
- Stanford CS 224d: Deep Learning for NLP
  - http://cs224d.stanford.edu/index.html
  - The best
- “word2vec Parameter Learning Explained”, Xin Rong
  - https://ronxin.github.io/wevi/
- Word2Vec Tutorial - The Skip-Gram Model
  - http://mccormickml.com/2016/04/19/word2vec-tutorial-the-skip-gram-model/
- Improvements and pre-trained models for word2vec:
  - https://nlp.stanford.edu/projects/glove/
  - https://fasttext.cc/ (by Facebook)