John Ward, Interim Associate Dean, Millersville University
Suzanne McCotter, Associate Dean, College of Education and Human Services, Montclair State University

§ CAEP requirements for validity and reliability
§ Consideration of whether the requirements undermine institutional voice
§ Report on a survey of institutions
§ Report on a case example of the use of the Lawshe method
§ Validity and reliability theory and how it applies to teacher preparation
§ Your ideas, questions, and concerns (throughout the presentation)

§ Validity type and procedures are described (construct, content, concurrent, predictive).
§ New assessments are piloted.
§ The process for interpreting assessment results is detailed.
§ A research-based process is used.
§ Reviewed by more than 2 stakeholders.

§ 3.2 Alternatives to standardized testing for admissions
§ 3.3 Assessment of nonacademic qualities
§ Standard 4 – Alumni – throughout the standard
§ 5.2 The provider’s quality assurance system relies on relevant, verifiable, representative, cumulative and actionable measures, and produces empirical evidence that interpretations of data are valid and consistent.
§ Generally, as it influences the team’s interpretation of evidence.
§ Not true: if you do not have validity evidence, data are ignored by reviewers.
§ Not specifically listed in Standard 1

§ Cochran-Smith critique of CAEP: “There should be a conceptual shift away from teacher education accountability that is primarily bureaucratic or market-based and toward teacher education responsibility that is primarily professional and that acknowledges the shared responsibility of teacher education programs, schools, and policymakers to prepare and support teachers.”
§ NCATE – Conceptual Framework not adopted by CAEP. Erskine Dottin: “NCATE’s call for teacher education units to have a conceptual framework provided the welcome challenge … to help create the kind of ‘foundational conversation’ that would directly relate to PK–12 practice and to the preparation of PK–12 teachers and other school personnel.”
§ TEAC – 2nd principle: It is inquiry driven, starting from the faculty’s own questions and curiosity about the program’s accomplishments.

§ The Standards for Educational and Psychological Testing handbook (AERA, APA, NCME) defines validity: “Validity refers to the degree to which evidence and theory support the interpretations of test scores for proposed uses of tests.” [When test scores are used for more than one purpose, each intended interpretation must be validated.] “It is incorrect to use the unqualified phrase ‘the validity of the test.’”

§ What are the intended purposes of assessment at your institution?

§ Please answer the survey questions we asked. Data will not be used beyond this presentation.
§ https://tinyurl.com/AACTEValidity

Does the CAEP requirement for validity and reliability enhance or undermine institutional voice?

§ We downloaded the list of TEAC and NCATE institutions and the year each was expected to go up for accreditation.
§ We selected institutions that were expected to be reviewed between Fall 2018 and Spring 2022.
§ A graduate assistant looked up the email address of each dean.
§ We made clear that we did not represent CAEP.
§ Survey sent to 382 institutions.
§ 78 responses, of which 53 were complete.
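The counts above imply the survey's response rates, which can be checked with a few lines of arithmetic. This is only a sketch: the function and variable names are illustrative and do not come from the presentation.

```python
def response_rate(responses: int, invited: int) -> float:
    """Return the share of invited institutions that responded."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return responses / invited


# Counts reported on the slide.
invited = 382
any_response = 78
complete_response = 53

print(f"Any response:      {response_rate(any_response, invited):.1%}")       # 20.4%
print(f"Complete response: {response_rate(complete_response, invited):.1%}")  # 13.9%
print(f"Completion rate:   {complete_response / any_response:.1%}")           # 67.9%
```

With roughly one in seven invited institutions returning a complete survey, the averages reported below are best read as descriptive of the respondents rather than of all 382 institutions.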

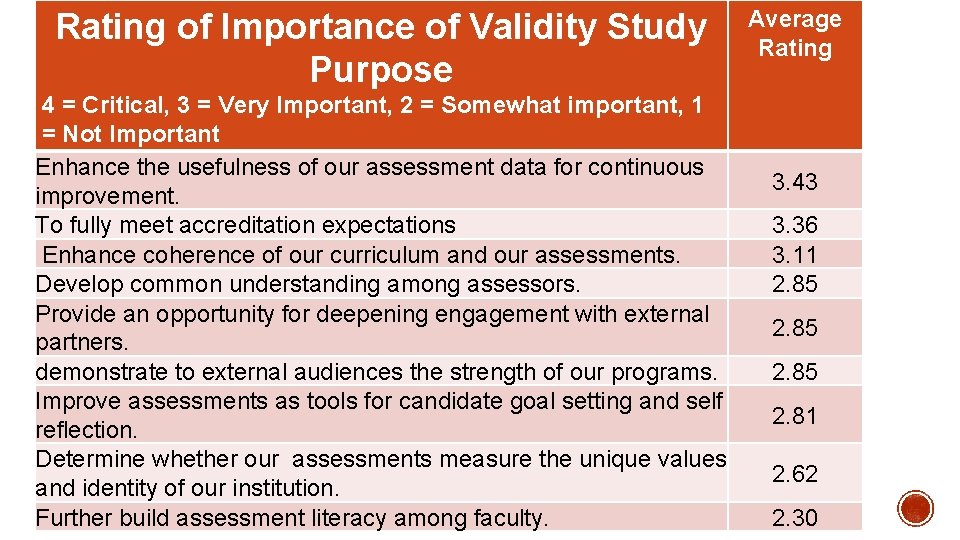

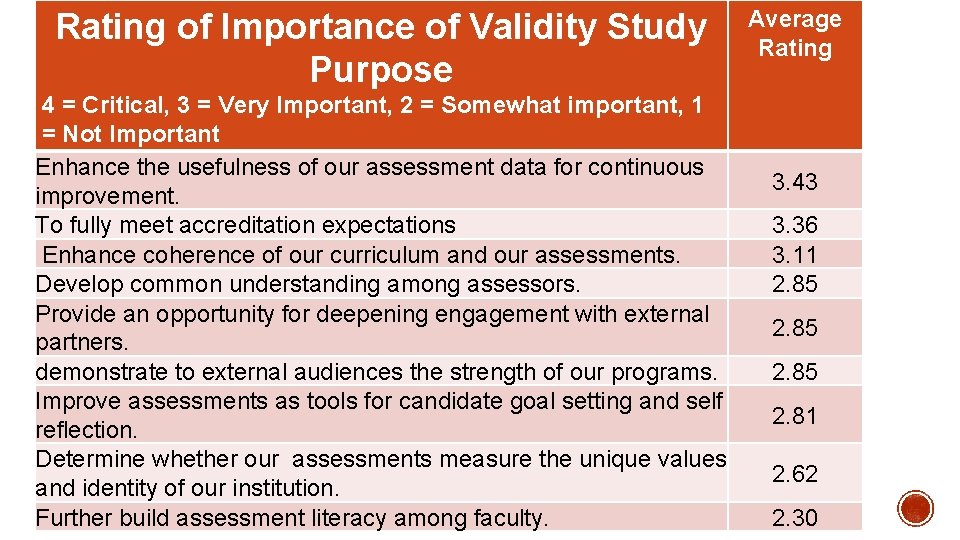

Rating of Importance of Validity Study Purpose
4 = Critical, 3 = Very Important, 2 = Somewhat Important, 1 = Not Important

§ Enhance the usefulness of our assessment data for continuous improvement.
§ To fully meet accreditation expectations.
§ Enhance coherence of our curriculum and our assessments.
§ Develop common understanding among assessors.
§ Provide an opportunity for deepening engagement with external partners.
§ Demonstrate to external audiences the strength of our programs.
§ Improve assessments as tools for candidate goal setting and self-reflection.
§ Determine whether our assessments measure the unique values and identity of our institution.
§ Further build assessment literacy among faculty.

Average Rating: 3.43, 3.36, 3.11, 2.85, 2.81, 2.62, 2.30

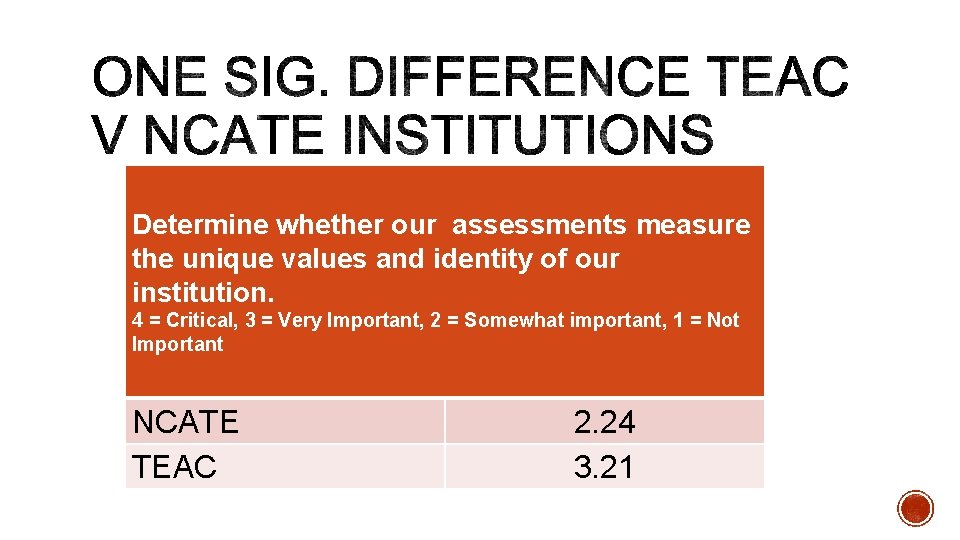

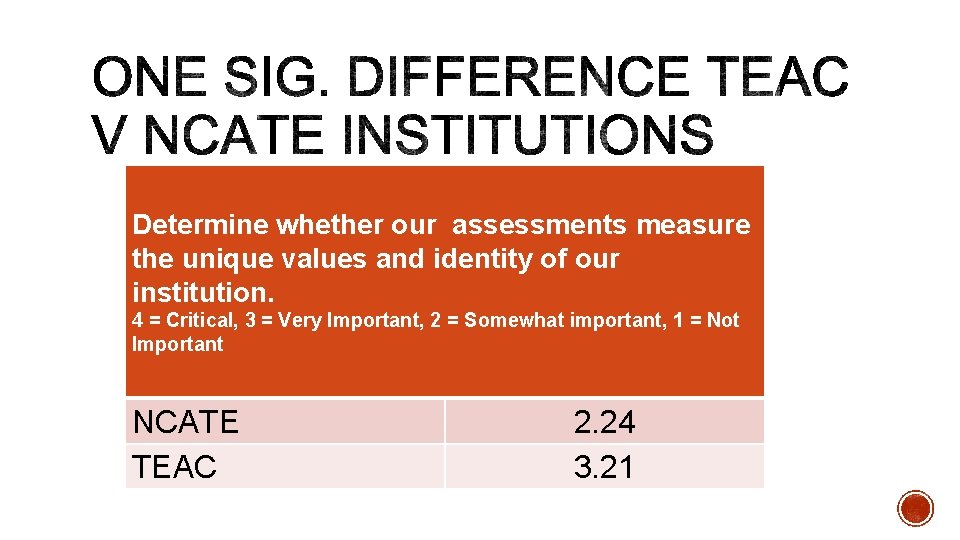

Determine whether our assessments measure the unique values and identity of our institution.
4 = Critical, 3 = Very Important, 2 = Somewhat Important, 1 = Not Important

| Prior accreditor | Average rating |
| --- | --- |
| NCATE | 2.24 |
| TEAC | 3.21 |

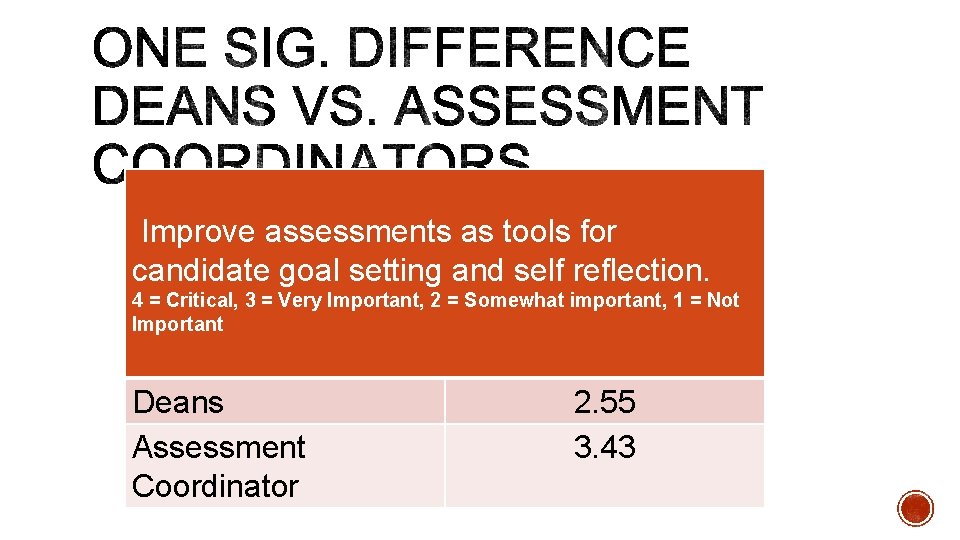

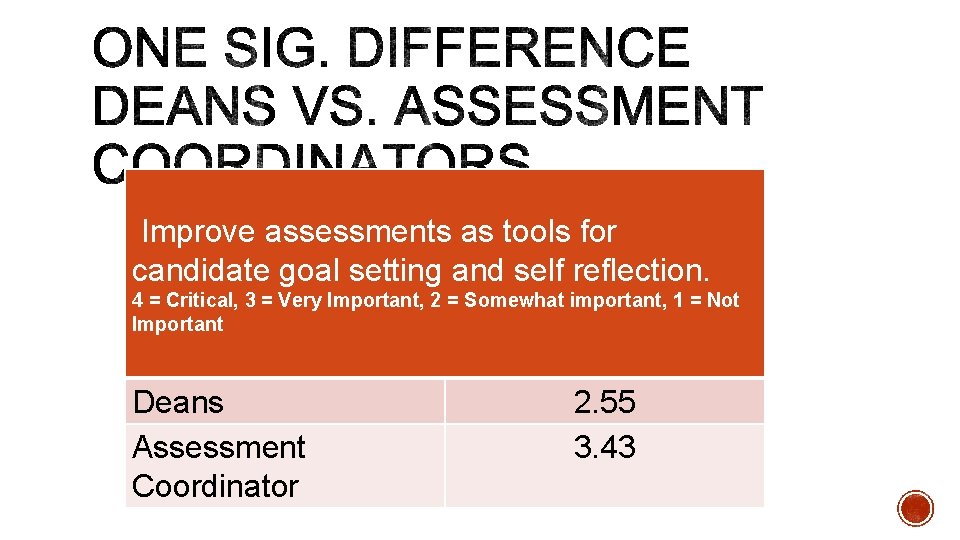

Improve assessments as tools for candidate goal setting and self-reflection.
4 = Critical, 3 = Very Important, 2 = Somewhat Important, 1 = Not Important

| Respondent role | Average rating |
| --- | --- |
| Deans | 2.55 |
| Assessment Coordinator | 3.43 |

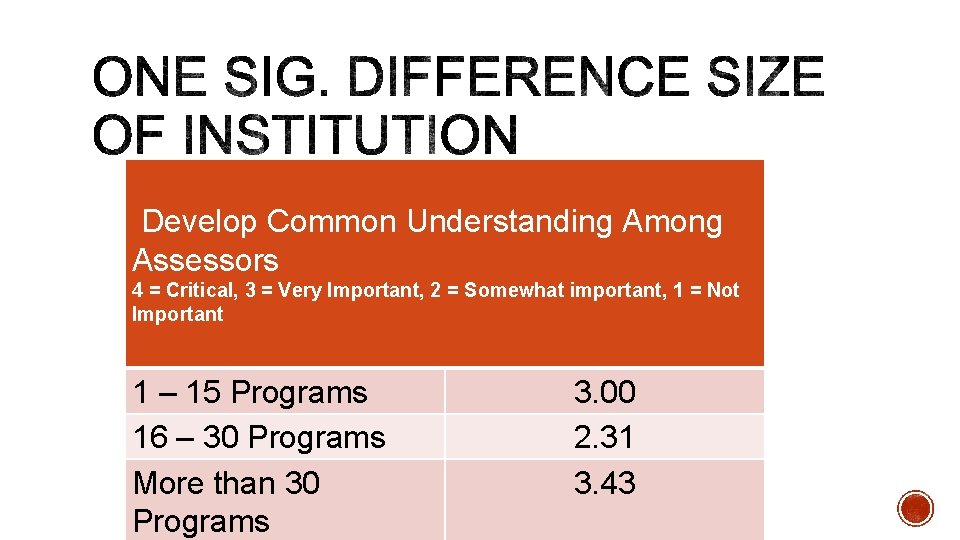

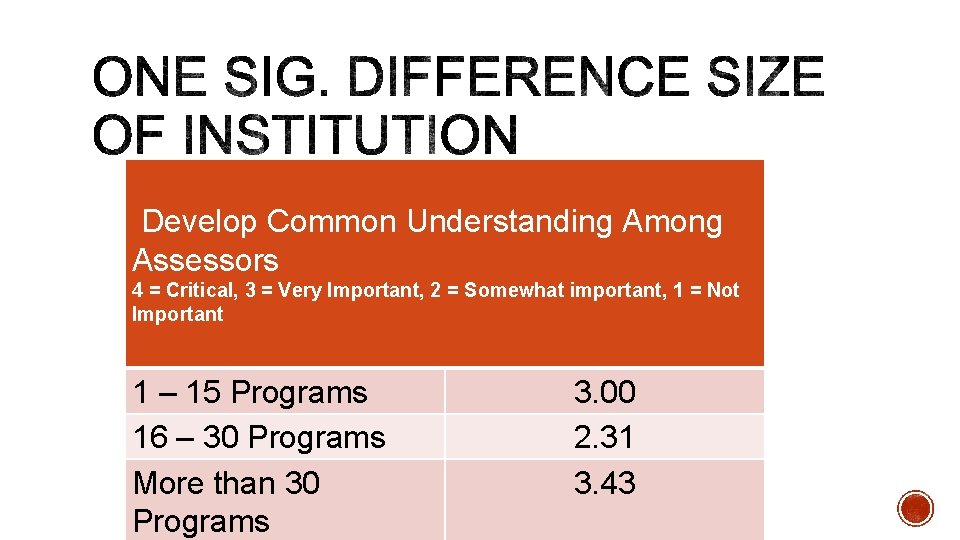

Develop Common Understanding Among Assessors
4 = Critical, 3 = Very Important, 2 = Somewhat Important, 1 = Not Important

| Number of programs | Average rating |
| --- | --- |
| 1–15 Programs | 3.00 |
| 16–30 Programs | 2.31 |
| More than 30 Programs | 3.43 |

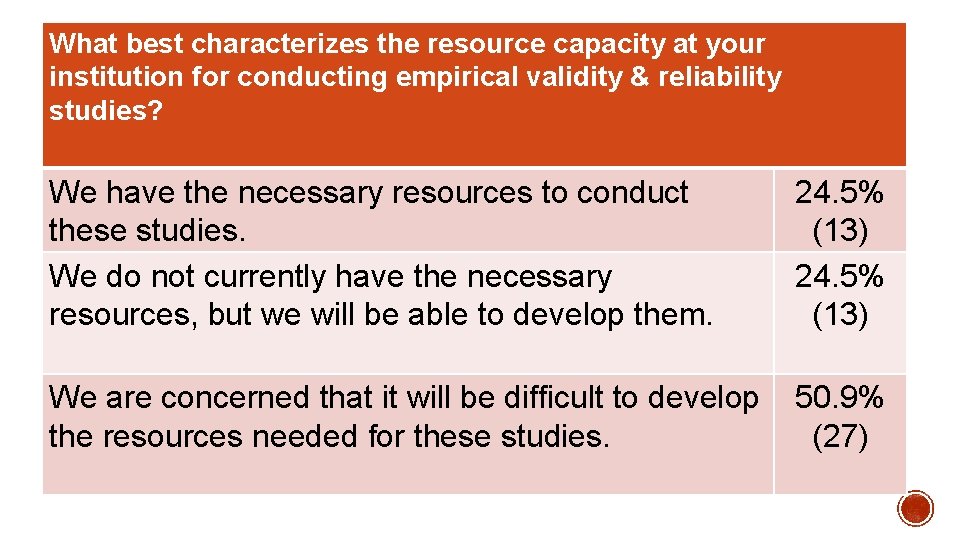

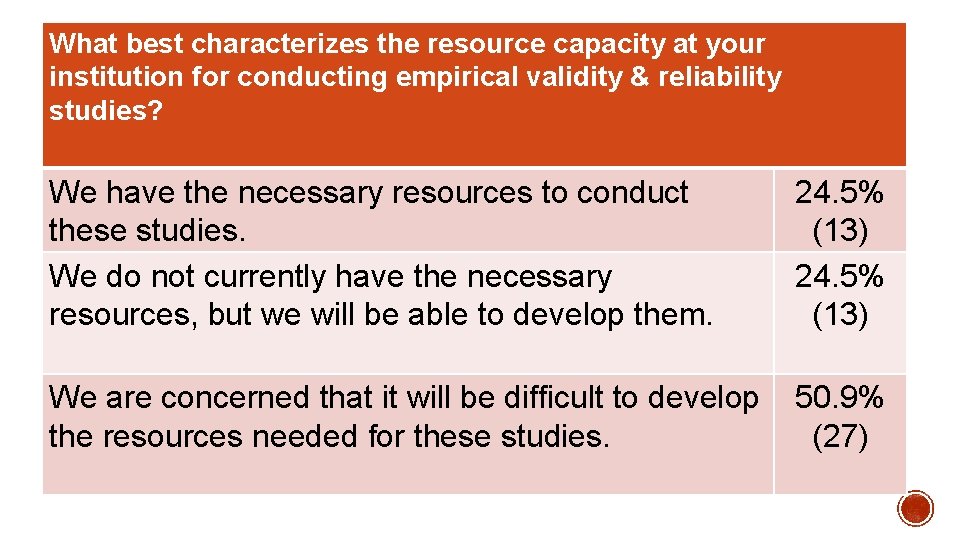

What best characterizes the resource capacity at your institution for conducting empirical validity & reliability studies?

| Response | Share (count) |
| --- | --- |
| We have the necessary resources to conduct these studies. | |
| We do not currently have the necessary resources, but we will be able to develop them. | 24.5% (13) |
| We are concerned that it will be difficult to develop the resources needed for these studies. | 50.9% (27) |

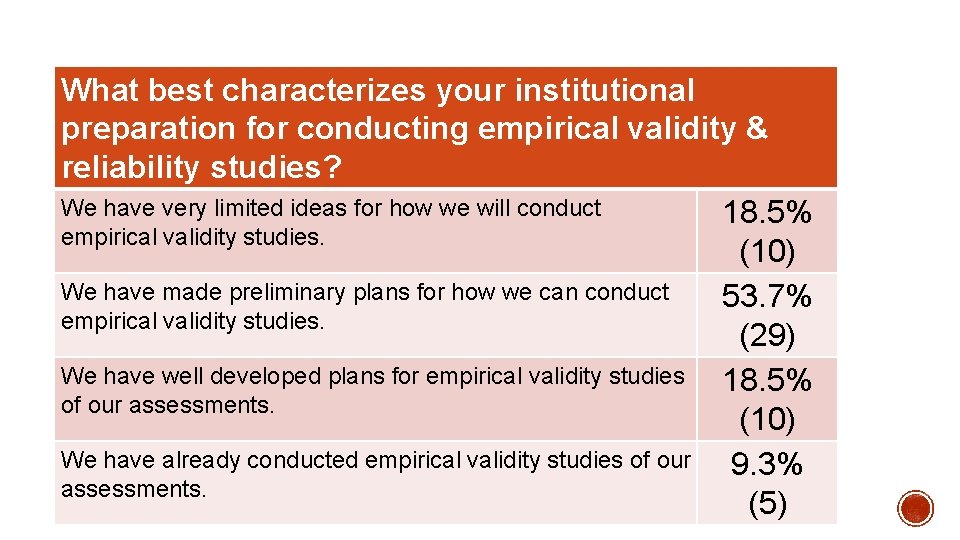

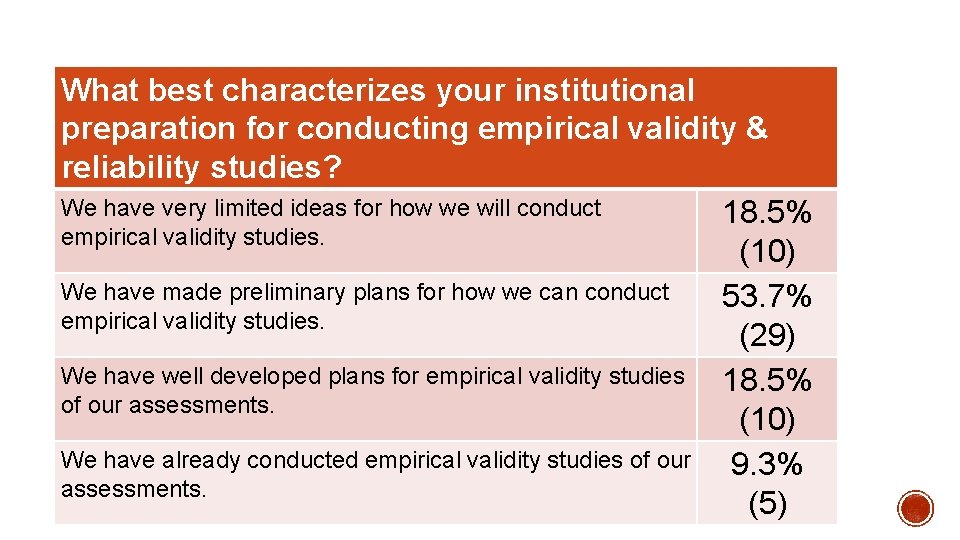

What best characterizes your institutional preparation for conducting empirical validity & reliability studies?

| Response | Share (count) |
| --- | --- |
| We have very limited ideas for how we will conduct empirical validity studies. | 18.5% (10) |
| We have made preliminary plans for how we can conduct empirical validity studies. | 53.7% (29) |
| We have well developed plans for empirical validity studies of our assessments. | 18.5% (10) |
| We have already conducted empirical validity studies of our assessments. | 9.3% (5) |

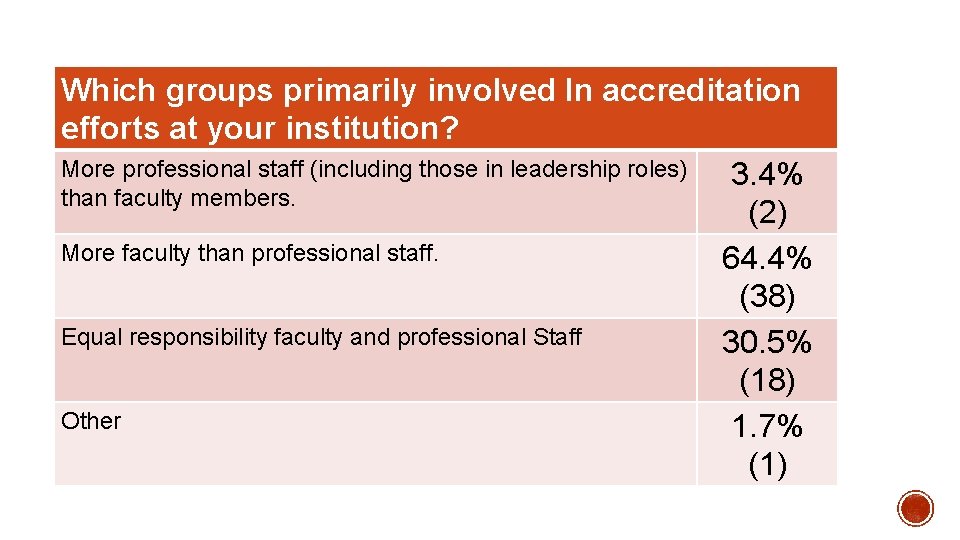

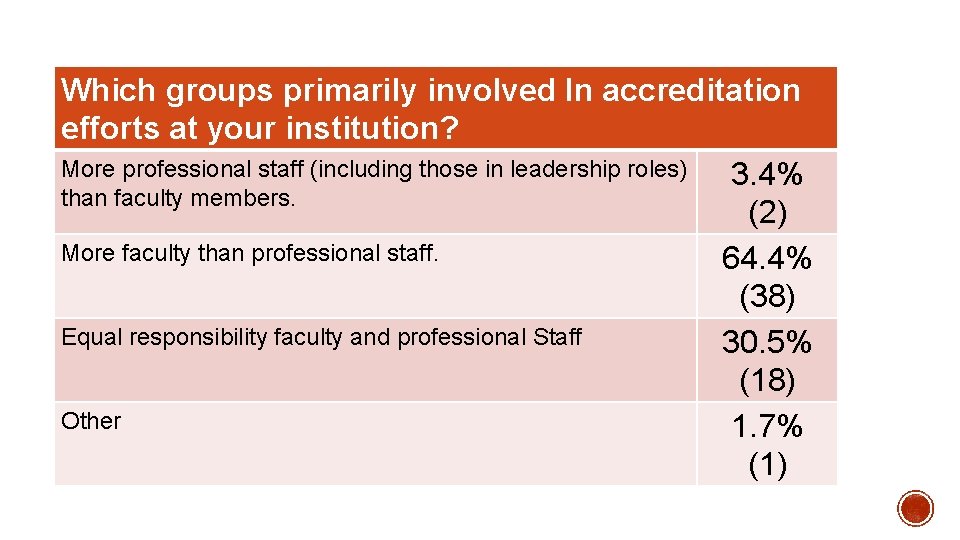

Which groups are primarily involved in accreditation efforts at your institution?

| Response | Share (count) |
| --- | --- |
| More professional staff (including those in leadership roles) than faculty members. | 3.4% (2) |
| More faculty than professional staff. | 64.4% (38) |
| Equal responsibility: faculty and professional staff. | 30.5% (18) |
| Other | 1.7% (1) |

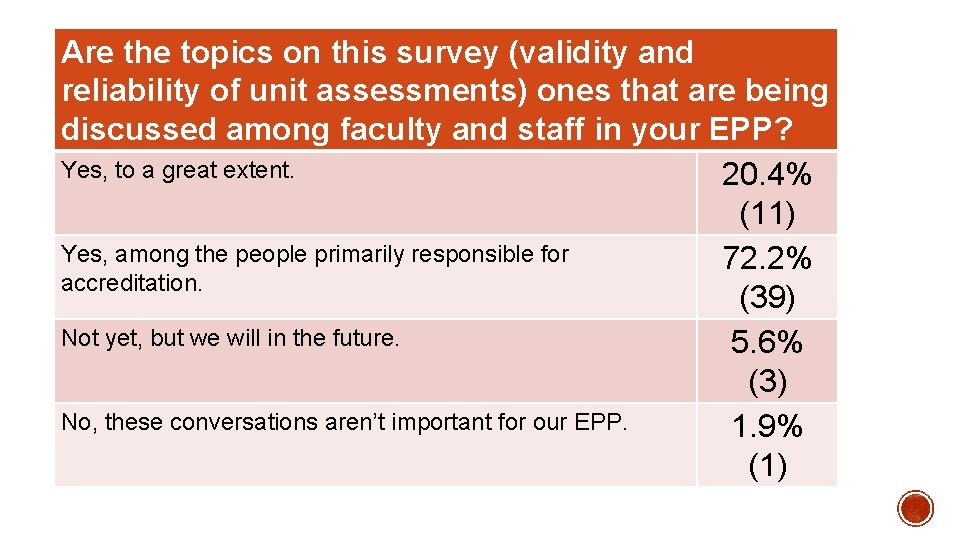

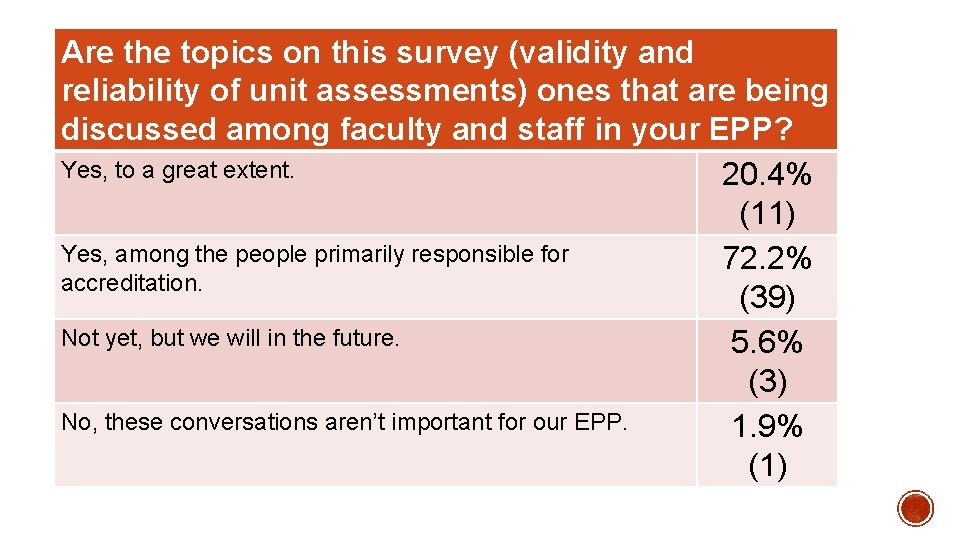

Are the topics on this survey (validity and reliability of unit assessments) ones that are being discussed among faculty and staff in your EPP?

| Response | Share (count) |
| --- | --- |
| Yes, to a great extent. | 20.4% (11) |
| Yes, among the people primarily responsible for accreditation. | 72.2% (39) |
| Not yet, but we will in the future. | 5.6% (3) |
| No, these conversations aren’t important for our EPP. | 1.9% (1) |

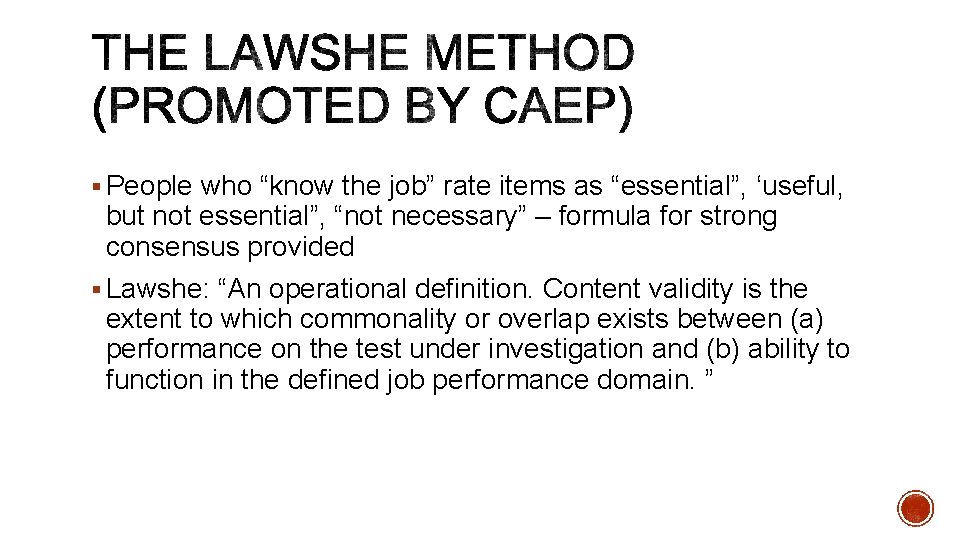

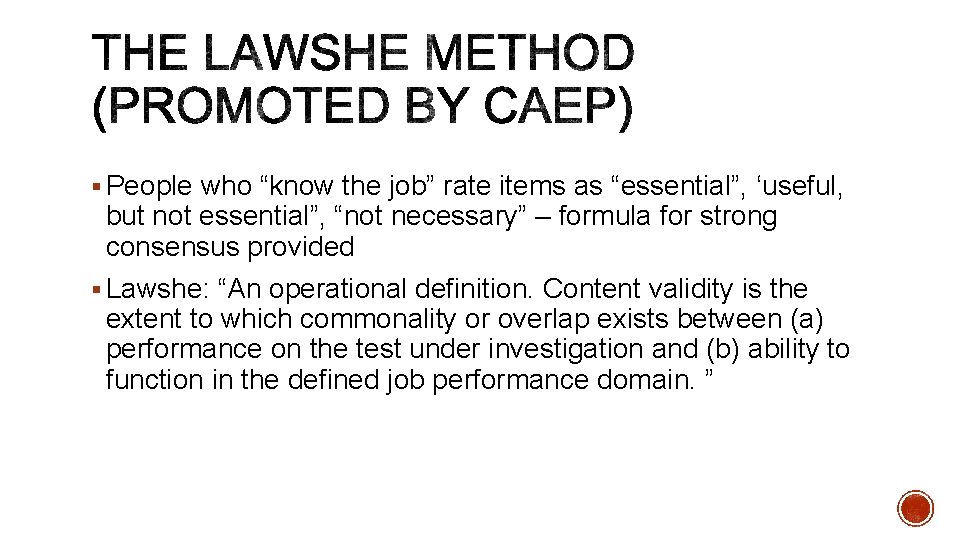

§ People who “know the job” rate items as “essential,” “useful, but not essential,” or “not necessary”; a formula for strong consensus is provided.
§ Lawshe: “An operational definition. Content validity is the extent to which commonality or overlap exists between (a) performance on the test under investigation and (b) ability to function in the defined job performance domain.”
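The consensus formula referred to here is Lawshe's content validity ratio (Lawshe, 1975): CVR = (n_e − N/2) / (N/2), where n_e is the number of panelists rating an item “essential” and N is the panel size. A minimal Python sketch follows. The rubric row names, the ratings, and the `MIN_CVR` cutoff are hypothetical illustrations and not data from the presentation; the cutoff that applies to a given panel size should be taken from Lawshe's published table.

```python
from collections import Counter


def content_validity_ratio(ratings, essential_label="essential"):
    """Lawshe's CVR = (n_e - N/2) / (N/2) for one item.

    ratings: one label per panelist, e.g. "essential",
    "useful, but not essential", or "not necessary".
    """
    n_total = len(ratings)
    if n_total == 0:
        raise ValueError("at least one panelist rating is required")
    n_essential = Counter(ratings)[essential_label]
    half = n_total / 2
    return (n_essential - half) / half


# Hypothetical ratings from a 30-person panel for two rubric rows.
rubric_rows = {
    "Learning objectives": ["essential"] * 27 + ["useful, but not essential"] * 3,
    "Assessment plan": ["essential"] * 12
    + ["useful, but not essential"] * 10
    + ["not necessary"] * 8,
}

# Assumed cutoff for illustration only; look up the value for your panel size.
MIN_CVR = 0.33

for row, ratings in rubric_rows.items():
    cvr = content_validity_ratio(ratings)
    decision = "retain" if cvr >= MIN_CVR else "review or drop"
    print(f"{row}: CVR = {cvr:+.2f} -> {decision}")
```

CVR ranges from −1 (no panelist says “essential”) to +1 (all do), and is 0 when exactly half the panel rates the item essential.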

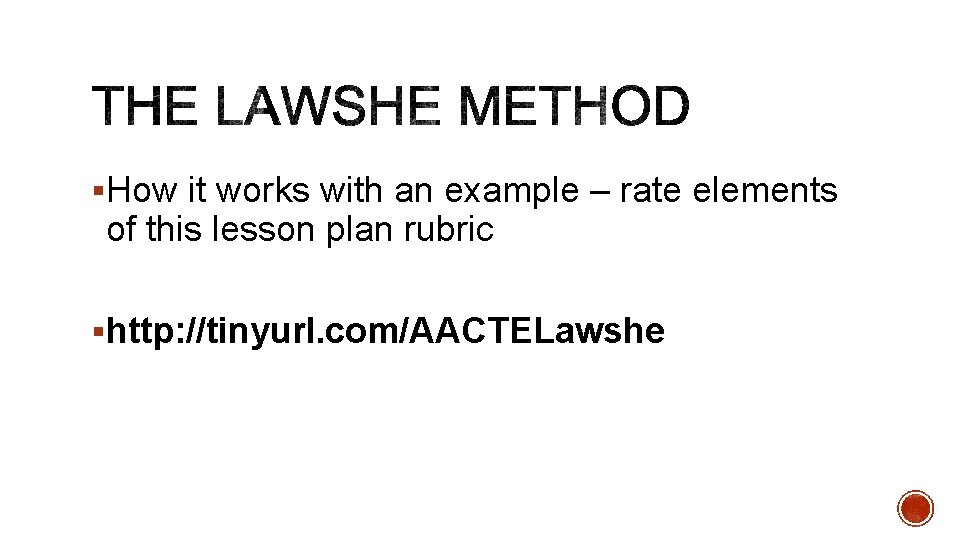

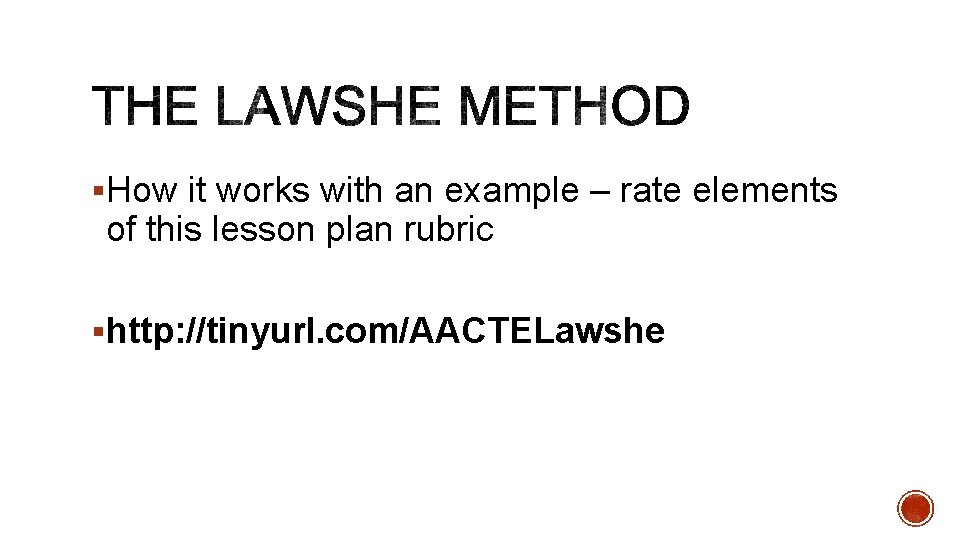

§ How it works with an example – rate elements of this lesson plan rubric
§ http://tinyurl.com/AACTELawshe

§ Thanks to Gwen Price, Unit Accreditation Officer, Edinboro University, PA.
§ John attended her full-day event, in which participants used the Lawshe method to validate unit instruments, and later interviewed her.

§ Strong mix of 30 participants: school administrators, general education teachers, special education teachers, university faculty, 1 undergraduate student, and 1 graduate student.
§ Defined content validity for participants as approval by external experts.
§ Provided participants with unit assessment rubrics and iPads that opened automatically to a screen with links to a Lawshe-style survey question for each row of each rubric, plus an open-ended response.
§ Table discussion followed by individual scoring.
§ Validated 7 assessments in a single-day event; the statistics could be run the next day.
§ Eliminated one element of the Teacher Work Sample rubric (context) and made minor adjustments based on results.

§ Very efficient process § Sense of “validation” that what they are doing is the right thing. § Enhancement of reputation of university for school partners. § Empowering – because we validated internally developed assessments.

§ Don’t be afraid of the process. She delayed doing it for a long time, but in the end it worked very well.
§ Biggest challenge is getting substitutes for teachers. Slight imbalance toward too many administrator participants.
§ Procedures at tables and the focus of conversation have to be decided in advance.
§ Think through all logistics – what to provide on paper and what electronically.
§ Feels reliability is the bigger challenge – advises developing validity evidence first.

§ What are your good ideas to share?

§ Darling-Hammond found very modest effect sizes for PACT (precursor to edTPA): comparing the lowest-scoring group of preservice teachers on PACT with the highest-scoring group, the effect sizes of test subscores on value-added assessments were between .13 and .18.
§ ETS published extensive validity evidence for edTPA, but did not include predictive validity evidence.
§ ETS: “credentialing examinations are not intended to predict individual performance in a specific job but rather to provide evidence that candidates have acquired the knowledge, skills, and judgment required for effective performance.”

§ Messick: “The last aspect of validity, the consequential aspect, includes evidence of implications of score interpretation, both intended and unintended as well as short- and long-term consequences.”
§ Used for inequitable impacts of assessment – for example on underrepresented candidates.
§ Or for investigation of educative consequences of assessment (Darling-Hammond made this argument for PACT).
§ Ask candidates: were the assessment and the feedback from the assessment useful for your growth as a professional?

§ Names type of reliability (test-retest, parallel forms, inter-rater, internal consistency).
§ Training of scorers and checking on inter-rater agreement and reliability are documented.
§ The described steps meet accepted research standards for establishing reliability.

§ Kappa – reported inter-rater reliability values for rubric assessments range from .2 to .63 (Jonsson & Svingby, 2007).
§ The majority of inter-rater agreement estimates fall in the range of 55–75% (Jonsson & Svingby, 2007).

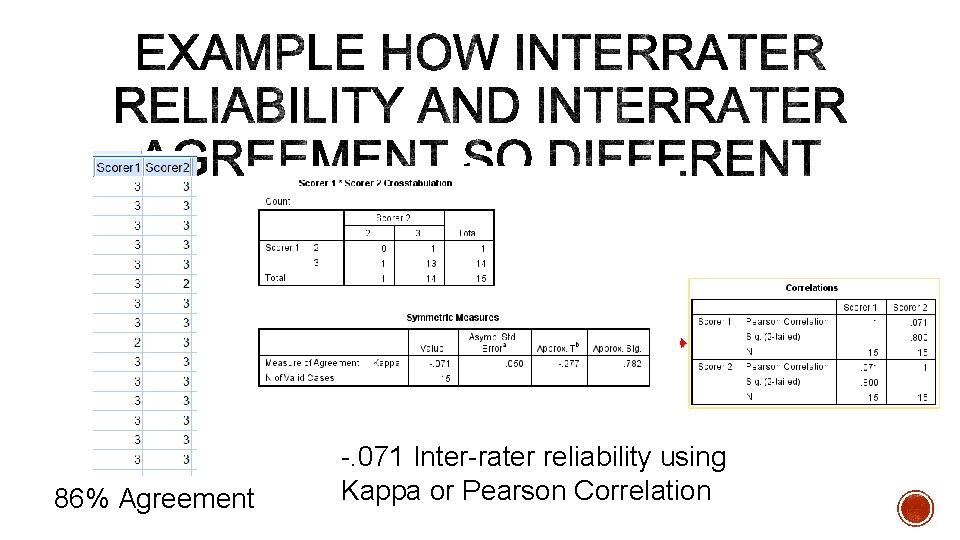

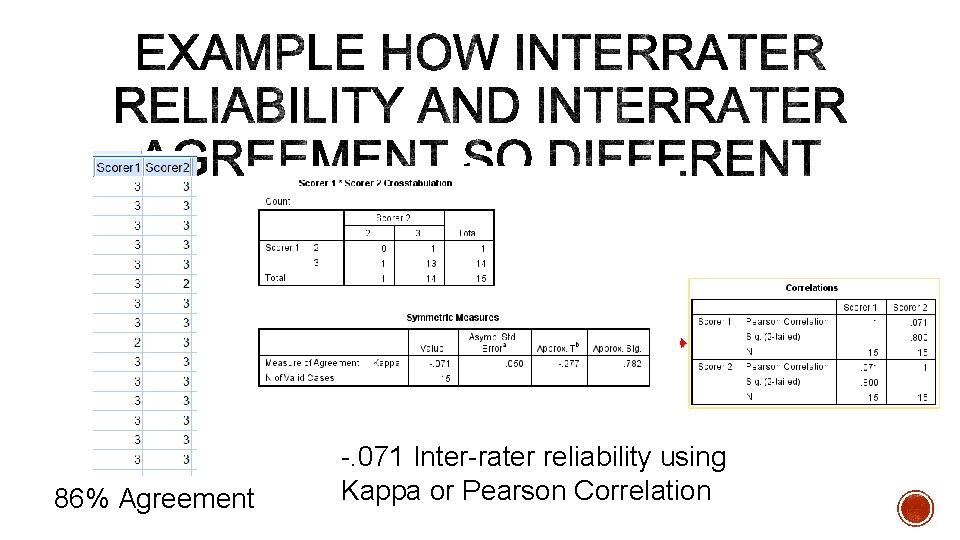

86% agreement; −.071 inter-rater reliability using Kappa or Pearson correlation
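High percent agreement can coexist with a near-zero or negative chance-corrected coefficient when almost all scores fall in one rubric category. The sketch below computes percent agreement and Cohen's kappa for two raters. The ratings are hypothetical and are not the data behind the figure above; the function names are illustrative.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of items on which the two raters gave the same score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must score the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa = (p_o - p_e) / (1 - p_e) for two raters."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Agreement expected by chance, from each rater's marginal distribution.
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when both raters use one category")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical rubric scores for 15 candidates: both raters give a 3 to
# 13 candidates and disagree (3 vs. 4) on the remaining two.
rater_a = [3] * 13 + [4, 3]
rater_b = [3] * 13 + [3, 4]

print(f"Percent agreement: {percent_agreement(rater_a, rater_b):.1%}")
print(f"Cohen's kappa:     {cohens_kappa(rater_a, rater_b):.3f}")
```

Because both raters assign a 3 to nearly every candidate, chance agreement is already about 88%, so the observed agreement of about 87% yields a slightly negative kappa.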

§ How do reliability studies contribute to or detract from institutional values? § Cost in terms of time, resources, money § Building relationships & community around teacher preparation

§ Standard reliability approaches commonly used with surveys / multiple-choice assessments do not apply:
§ Test-retest
§ Parallel forms
§ Internal consistency
§ Inter-rater reliability:
§ Used for 2 raters and a common set of items to be scored. Does not apply to Cooperating Teacher – University Supervisor.
§ Very difficult to do with student teaching – performance over an entire semester.

§ AERA, APA, & NCME. (2014). Standards for Educational and Psychological Testing. Washington, D.C.: American Educational Research Association.
§ Cochran-Smith, M., Stern, R., Gabriel, J., Sánchez, A. M., Keefe, E. S., Fernández, M. B., & Baker, M. (2016). Holding Teacher Preparation Accountable.
§ Darling-Hammond, L., Newton, S. P., & Wei, R. C. (2013). Developing and assessing beginning teacher effectiveness: The potential of performance assessments. Educational Assessment, Evaluation and Accountability, 25(3), 179–204.
§ Jonsson, A., & Svingby, G. (2007). The use of scoring rubrics: Reliability, validity and educational consequences. Educational Research Review, 2(2), 130–144.
§ Lawshe, C. H. (1975). A quantitative approach to content validity. Personnel Psychology, 28(4), 563–575.

Millersville bookstore

Millersville bookstore Millersville university weather forecast

Millersville university weather forecast Dean d dean

Dean d dean Lingual split bone technique

Lingual split bone technique Autolab millersville

Autolab millersville Kevin robinson millersville

Kevin robinson millersville Millersville autolab

Millersville autolab Millersville student lodging

Millersville student lodging Tax collector orlando university

Tax collector orlando university Dr john ward geriatrician newcastle

Dr john ward geriatrician newcastle Mbcs membership

Mbcs membership Promotion from associate professor to professor

Promotion from associate professor to professor Tecniche associate al pensiero computazionale

Tecniche associate al pensiero computazionale What does this drawing indicate about the inca civilization

What does this drawing indicate about the inca civilization Lone star college nursing acceptance

Lone star college nursing acceptance Incose asep certification

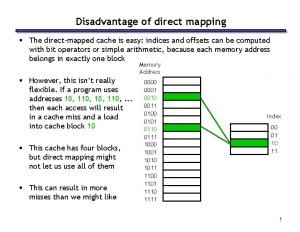

Incose asep certification Direct mapped cache

Direct mapped cache Cern hr

Cern hr Associate degree in the netherlands

Associate degree in the netherlands Berstoff gearbox repair

Berstoff gearbox repair Jeannie watkins

Jeannie watkins Rcog eportfolio

Rcog eportfolio Associate degree duo gift

Associate degree duo gift Analyst hierarchy

Analyst hierarchy Harper college

Harper college Iter project associate

Iter project associate Aad program

Aad program Los angeles harbor city college

Los angeles harbor city college Involves scrutinizing any information that you read or hear

Involves scrutinizing any information that you read or hear Delta chi flag

Delta chi flag Associate degree rmit

Associate degree rmit Adobe certified associate certification programs

Adobe certified associate certification programs Cincinnati state associate degrees

Cincinnati state associate degrees Safety associate

Safety associate