
ITALK: Tutoring Spotter

ITALK: Tutoring Spotter Challenges: This task focuses on analysing features that may help to detect tutoring behaviour, as proposed by Csibra & Gergely (2006), in parent-child interactions. The data underlying this project come from 65 parent-child dyads, in which the parents' task was to demonstrate 10 objects and their functions to their children. The analyses combine quantitative and qualitative methods, using the computational human-motion tracking systems developed by Fritsch et al. (2005) as well as speech-based analysis tools.

ITALK: Tutoring Spotter Goal: The goal is to derive feature sets that are suitable for detecting tutoring behaviour in an interaction partner and that can be used to implement a “tutoring spotter”. The project continues the multimodal analysis of the collected data at different levels of granularity in order to find out at which levels the variability occurs. Based on these results, further experiments gathering data from parent-infant or tutor-robot interactions may be needed. To evaluate the tutoring spotter, it will be integrated into an interactive robot system (implemented on the iCub), where the effect of the robot's reaction upon detecting tutoring behaviour (i.e., signalling attention) on the tutor's behaviour will be analysed in more detail.

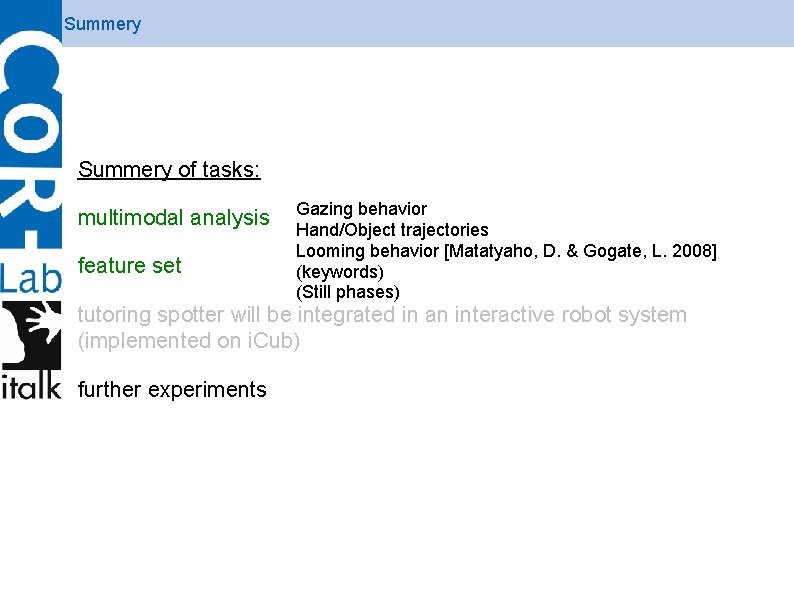

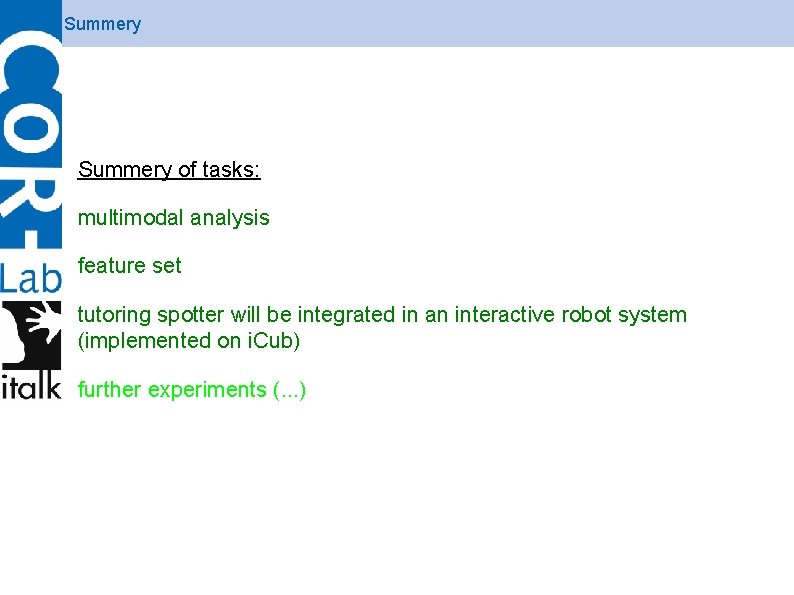

ITALK: Tutoring Spotter

Summary of tasks:
1. Multimodal analysis
2. Feature set
3. The tutoring spotter will be integrated into an interactive robot system (implemented on the iCub)
4. Further experiments

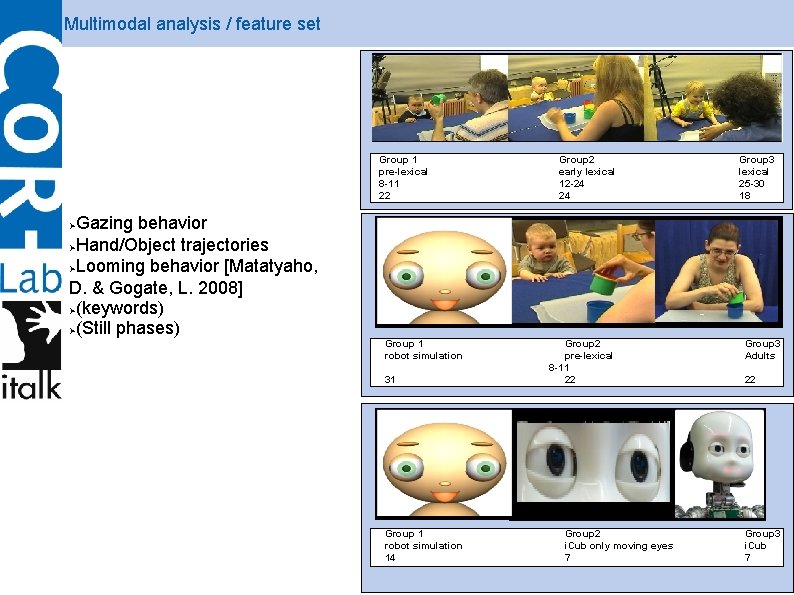

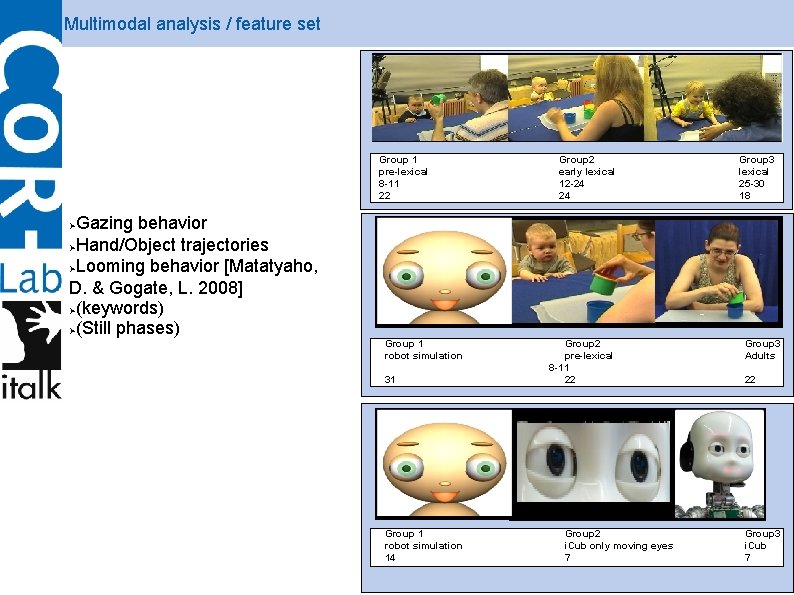

Multimodal analysis / feature set

Features: gazing behavior; hand/object trajectories; looming behavior [Matatyaho, D. & Gogate, L. 2008]; (keywords); (still phases)

| Group | Stage | Age (months) | N |
|---|---|---|---|
| Group 1 | pre-lexical | 8-11 | 22 |
| Group 2 | early lexical | 12-24 | 24 |
| Group 3 | lexical | 25-30 | 18 |

| Group | Condition | N |
|---|---|---|
| Group 1 | robot simulation | 31 |
| Group 1 | robot simulation | 14 |
| Group 2 | pre-lexical (8-11 months) | 22 |
| Group 2 | iCub only moving eyes | 7 |
| Group 3 | Adults | 22 |
| Group 3 | iCub | 7 |
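
As a rough illustration of how such a feature set could feed a tutoring spotter, the sketch below combines per-window features into a linear weighted score and thresholds it. The `WindowFeatures` type, its field names, the weights and the threshold are all hypothetical placeholders, not values derived from the data above; in practice they would have to be learned or tuned on the annotated dyads.

```python
from dataclasses import dataclass


@dataclass
class WindowFeatures:
    """Features of one time window of the tutor's behavior (hypothetical names)."""

    gaze_at_partner_ratio: float  # fraction of frames with gaze on the learner
    looming_events: int           # number of looming movements in the window
    keyword_count: int            # number of object keywords in the window
    still_phase_ratio: float      # fraction of frames in which the hand is still


# Placeholder weights and threshold: NOT derived from the ITALK data.
WEIGHTS = {
    "gaze_at_partner_ratio": 2.0,
    "looming_events": 0.5,
    "keyword_count": 0.5,
    "still_phase_ratio": 1.0,
}
THRESHOLD = 2.0


def tutoring_score(features: WindowFeatures) -> float:
    """Weighted sum of the window's features."""
    return sum(w * getattr(features, name) for name, w in WEIGHTS.items())


def is_tutoring(features: WindowFeatures, threshold: float = THRESHOLD) -> bool:
    """Label the window as tutoring when its score reaches the threshold."""
    return tutoring_score(features) >= threshold
```

For example, `WindowFeatures(0.6, 1, 2, 0.3)` (frequent gaze at the partner, one looming movement, two keywords) scores about 3.0 and would count as tutoring under these placeholder settings. A linear score is only the simplest choice; any classifier trained on the same features could take its place.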

Summary of tasks:
1. Multimodal analysis
2. Feature set: gazing behavior, hand/object trajectories, looming behavior [Matatyaho, D. & Gogate, L. 2008], (keywords), (still phases)
3. The tutoring spotter will be integrated into an interactive robot system (implemented on the iCub)
4. Further experiments

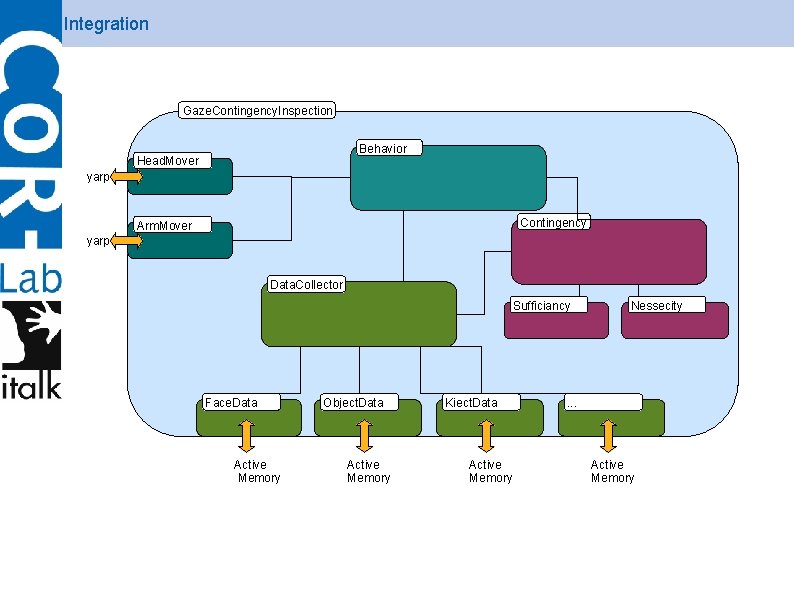

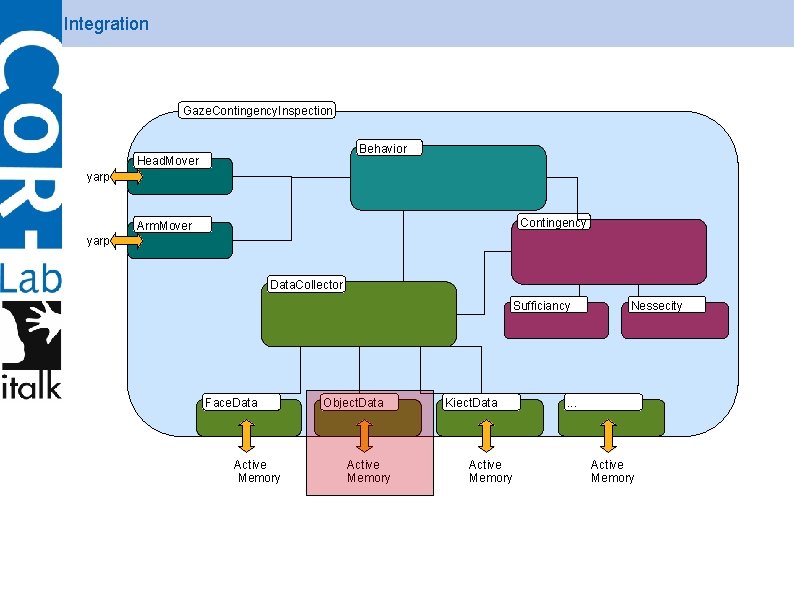

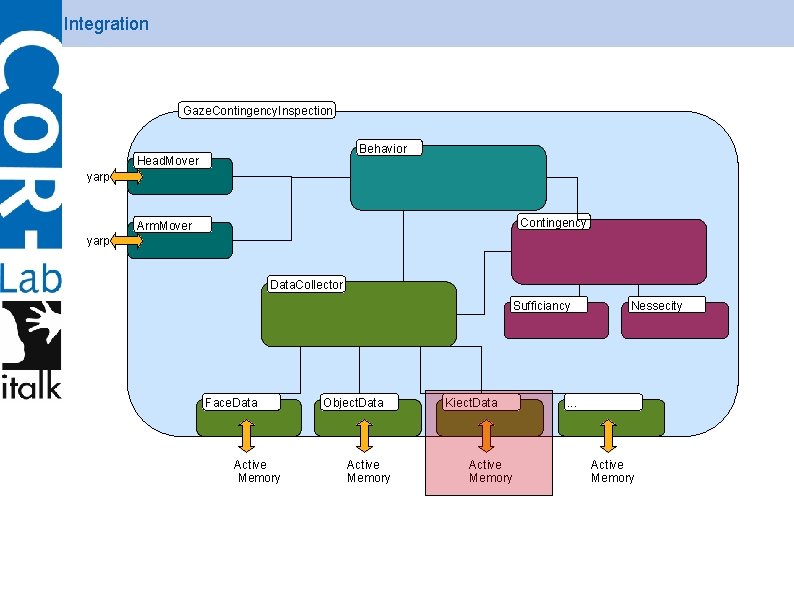

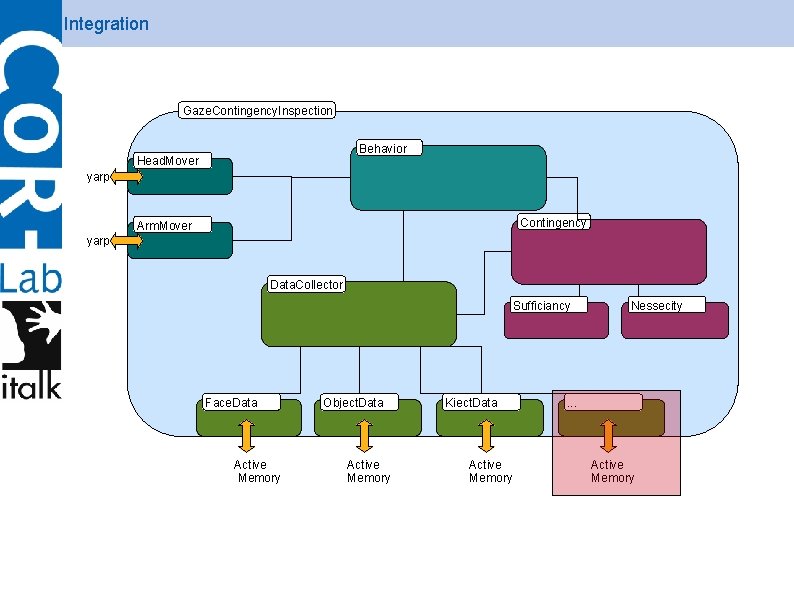

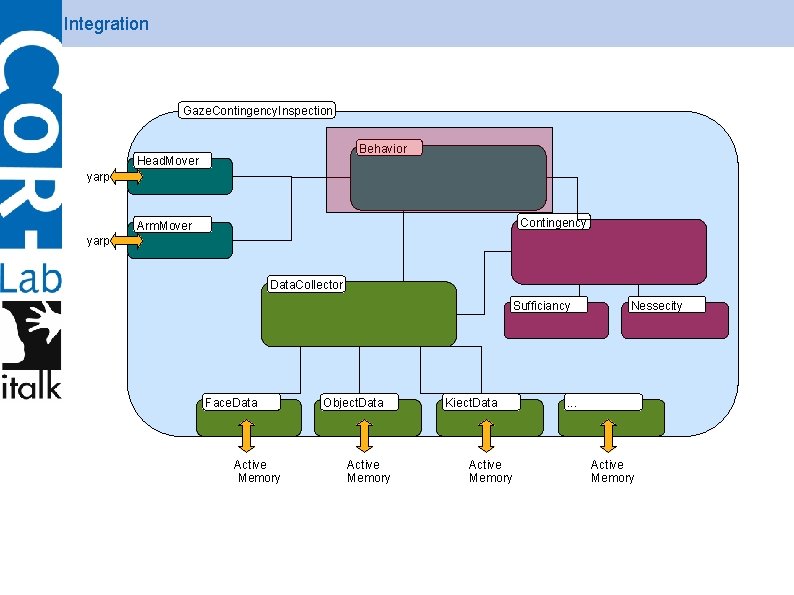

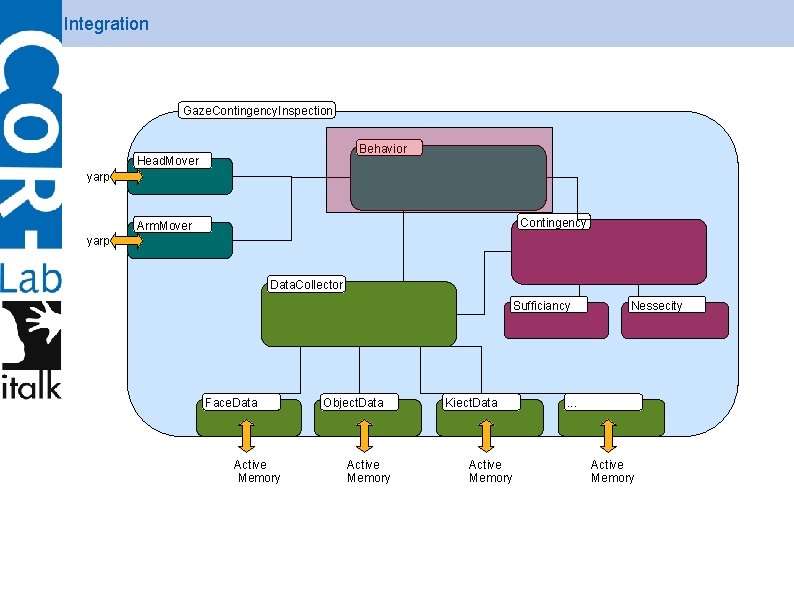

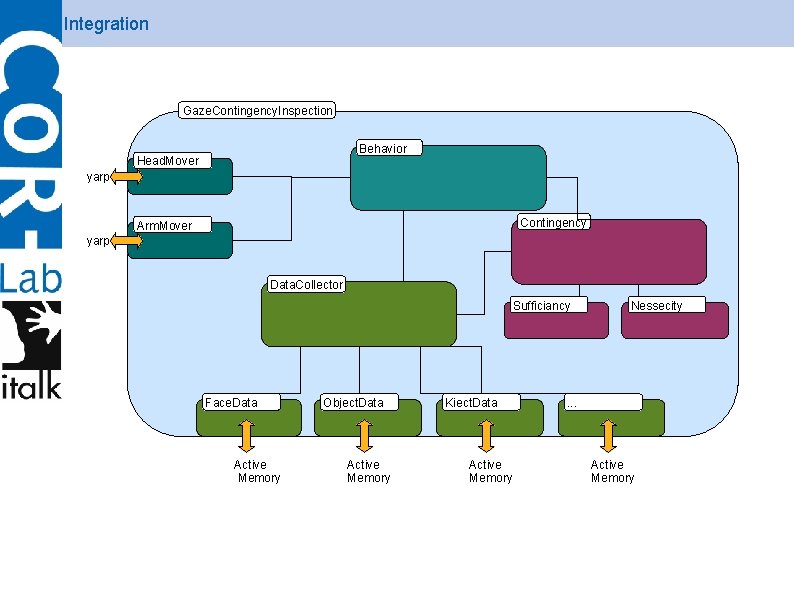

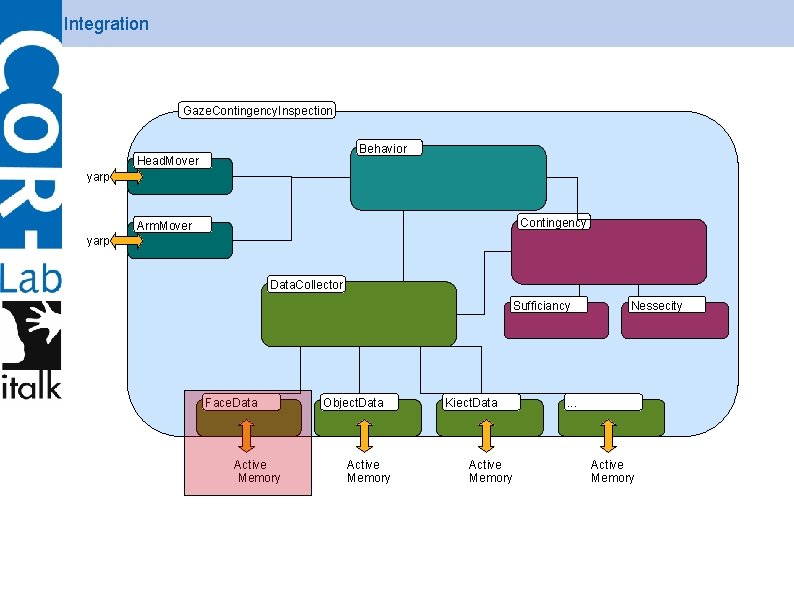

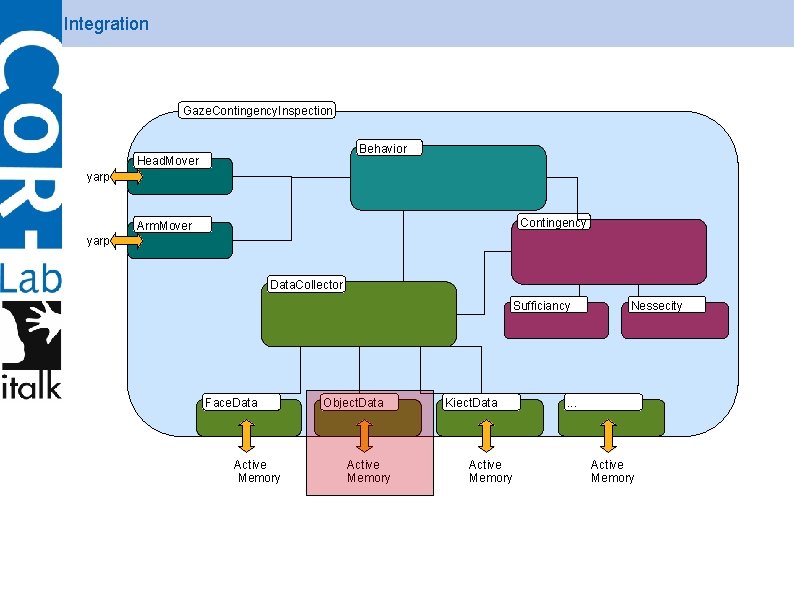

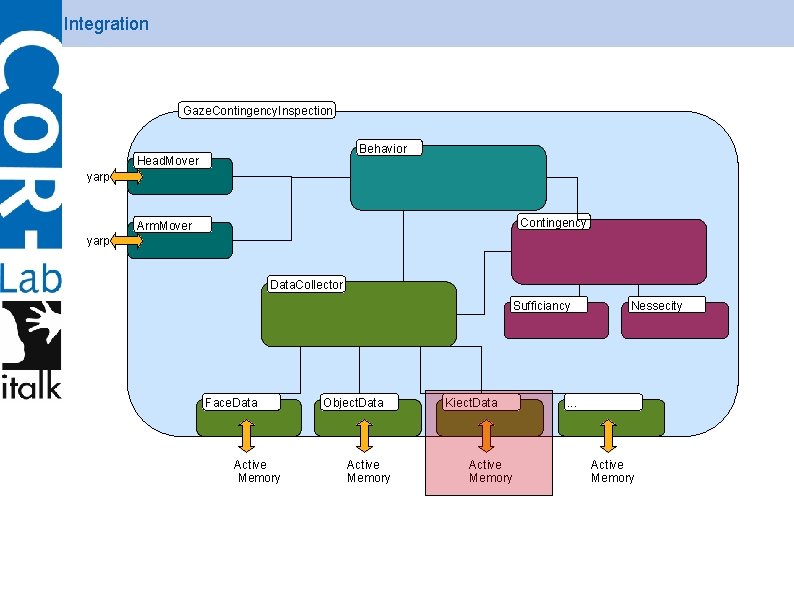

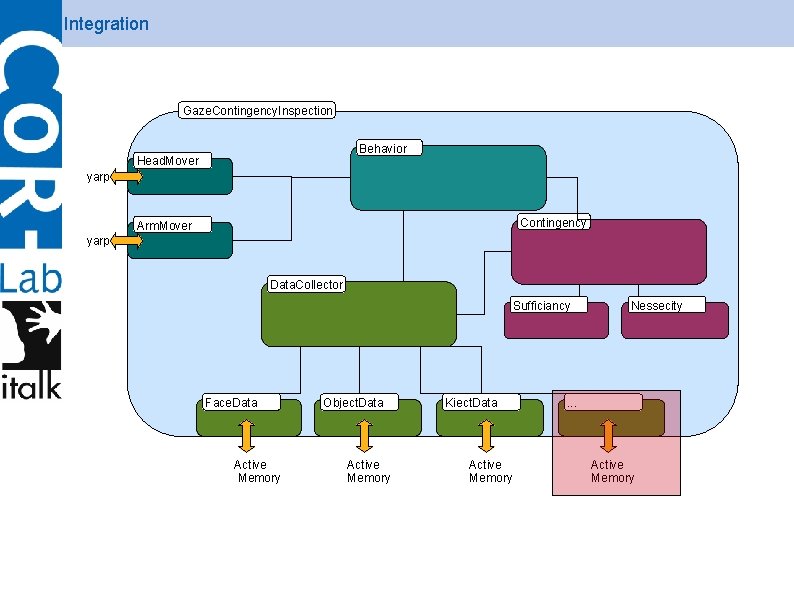

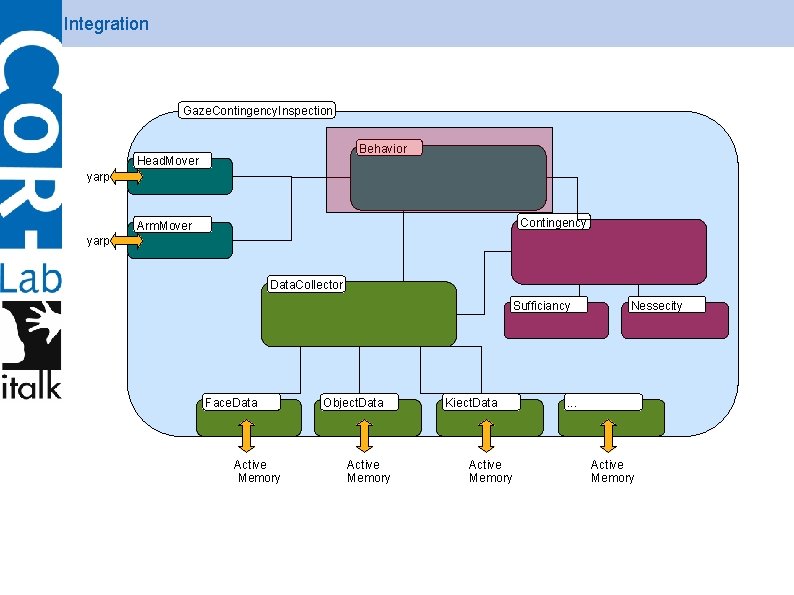

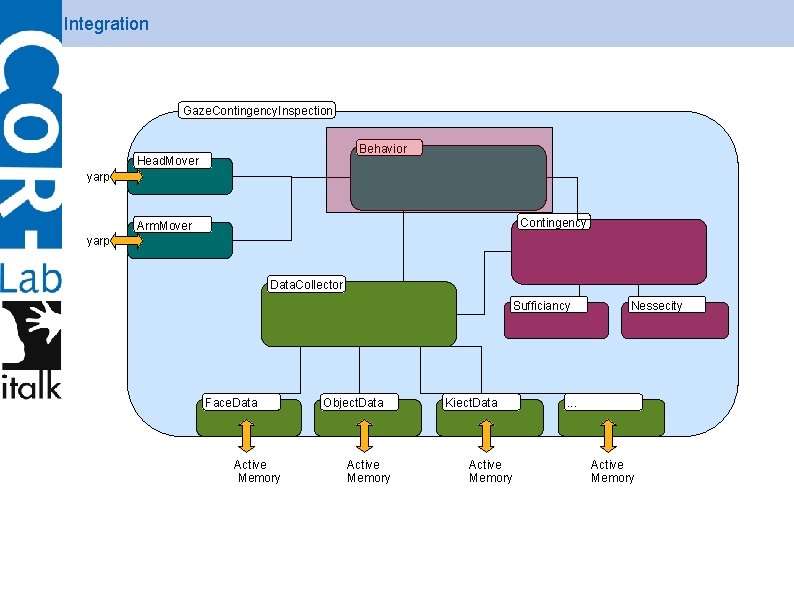

Integration (architecture diagram). Behavior: GazeContingencyInspection. YARP modules: HeadMover, ArmMover. Active Memory items: FaceData, ObjectData, KinectData, ... Further components: DataCollector, Contingency, Sufficiency, Necessity.
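
Assuming the components in the diagram exchange data through the Active Memory rather than calling each other directly, the pattern can be illustrated with a toy, in-process memory that stores typed items and notifies subscribers on insert. This is a hypothetical stand-in, not the Active Memory or YARP API; `ToyMemory`, `GazeBehaviorSketch` and the `position` field are invented for illustration.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Optional, Tuple

Item = Dict[str, Any]
Listener = Callable[[str, Item], None]


class ToyMemory:
    """Toy event-based memory (hypothetical, NOT the Active Memory API)."""

    def __init__(self) -> None:
        self._store: Dict[str, List[Item]] = defaultdict(list)
        self._listeners: Dict[str, List[Listener]] = defaultdict(list)

    def subscribe(self, kind: str, listener: Listener) -> None:
        """Call listener(kind, item) whenever an item of this kind is inserted."""
        self._listeners[kind].append(listener)

    def insert(self, kind: str, item: Item) -> None:
        """Store the item, then notify every listener registered for its kind."""
        self._store[kind].append(item)
        for listener in self._listeners[kind]:
            listener(kind, item)

    def latest(self, kind: str) -> Optional[Item]:
        """Most recently inserted item of this kind, or None."""
        items = self._store.get(kind)
        return items[-1] if items else None


class GazeBehaviorSketch:
    """Hypothetical behavior: turn the head toward whatever was perceived last."""

    def __init__(self, memory: ToyMemory) -> None:
        # Commands that a head-mover module would execute.
        self.head_commands: List[Tuple[str, Any]] = []
        memory.subscribe("FaceData", self.on_percept)
        memory.subscribe("ObjectData", self.on_percept)

    def on_percept(self, kind: str, item: Item) -> None:
        target = "partner" if kind == "FaceData" else "object"
        self.head_commands.append((target, item["position"]))
```

Decoupling perception (writers of FaceData, ObjectData, KinectData) from behaviors (subscribers) means new data sources can be added without touching the behavior code, which is the main appeal of a memory-centred design.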

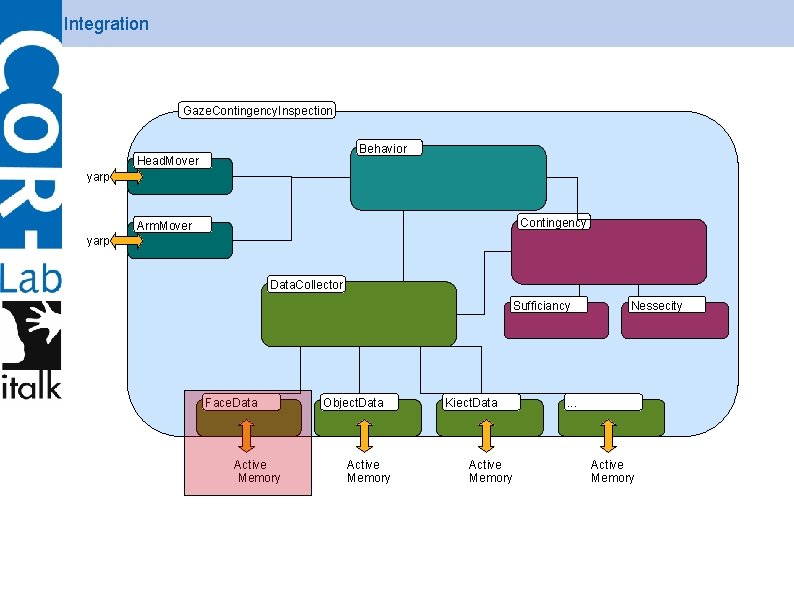


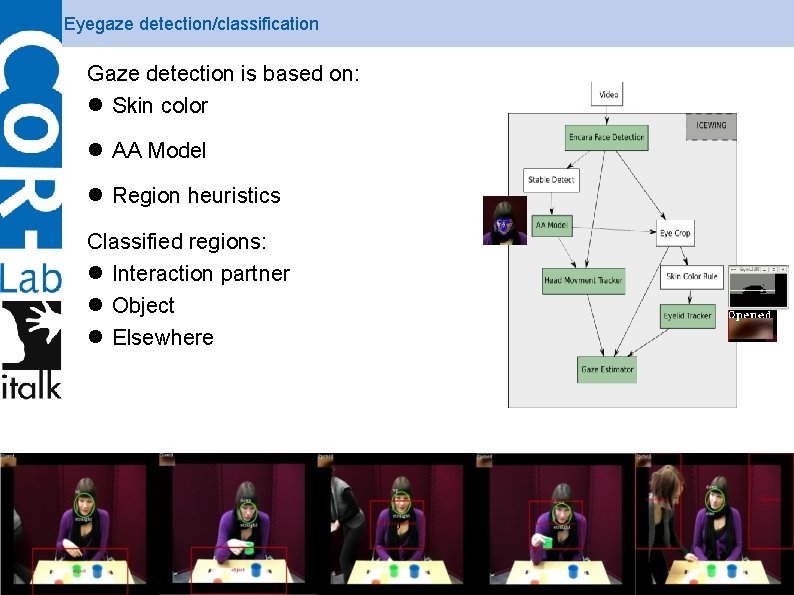

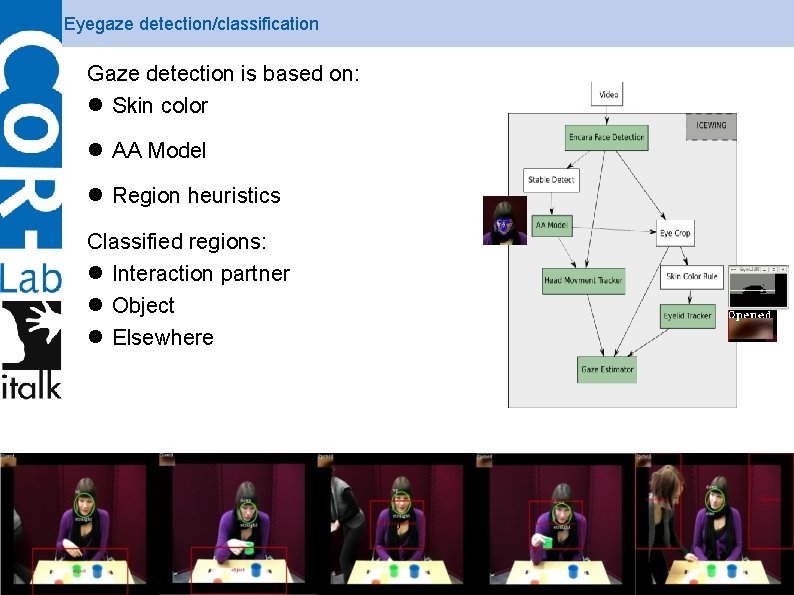

Eye-gaze detection/classification

Gaze detection is based on:
- skin color
- an active appearance model (AAM)
- region heuristics

Classified regions:
- interaction partner
- object
- elsewhere
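
The last step, mapping an estimated gaze direction onto the three classes, could look roughly like the sketch below. It assumes that a 3D head position and gaze direction have already been estimated (e.g. from the skin-color and AAM stages) and that the positions of the interaction partner and the object are known in the same coordinate frame. The angle heuristic and the 15-degree threshold are hypothetical stand-ins for the region heuristics named above.

```python
import math
from typing import Sequence

Vec3 = Sequence[float]


def angle_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two 3D vectors in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    cos = max(-1.0, min(1.0, dot / norm))  # clamp against rounding errors
    return math.degrees(math.acos(cos))


def classify_gaze(head: Vec3, gaze_dir: Vec3, partner: Vec3, obj: Vec3,
                  max_angle_deg: float = 15.0) -> str:
    """Return 'interaction partner', 'object' or 'elsewhere'."""
    to_partner = [p - h for p, h in zip(partner, head)]
    to_object = [o - h for o, h in zip(obj, head)]
    angles = {
        "interaction partner": angle_deg(gaze_dir, to_partner),
        "object": angle_deg(gaze_dir, to_object),
    }
    label = min(angles, key=angles.get)  # target closest to the gaze ray
    return label if angles[label] <= max_angle_deg else "elsewhere"
```

With the head at the origin, the partner (robot camera) at `(0, 0, 1)` and the object at `(1, 0, 1)`, a gaze along `(0, 0, 1)` is classified as the interaction partner, while a gaze along `(0, 1, 0)` points at neither and falls into "elsewhere".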


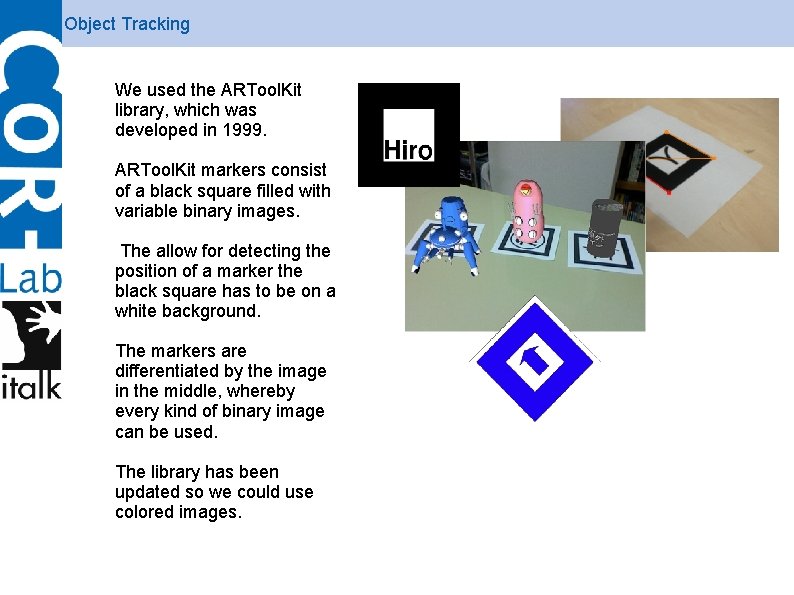

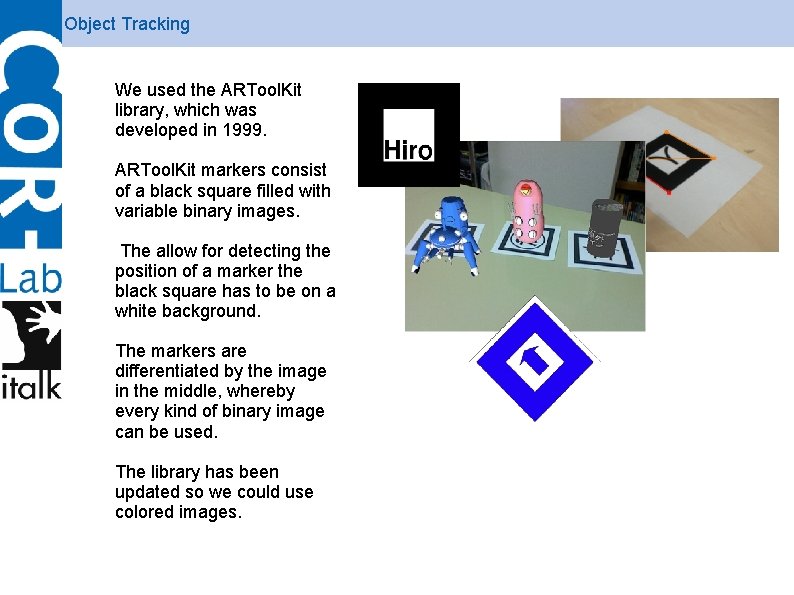

Object Tracking: We used the ARToolKit library, which was developed in 1999. ARToolKit markers consist of a black square filled with a variable binary image. To allow the position of a marker to be detected, the black square has to be on a white background. Markers are distinguished by the image in the middle, and any binary image can be used. The library has been updated so that we could also use colored images.
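
Identifying which marker is visible amounts to comparing the pattern inside the black square against the known patterns in all four orientations. The sketch below does this for small binary grids using the Hamming distance. It assumes the interior has already been rectified and sampled into a grid; it is a simplified illustration of the idea, not ARToolKit's own matching code or API, and `identify_marker`, the grid size and `max_errors` are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Grid = List[List[int]]  # binary pattern inside the black square, 1 = black cell


def rotate90(grid: Grid) -> Grid:
    """Rotate a square grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def hamming(a: Grid, b: Grid) -> int:
    """Number of cells in which two equally sized grids differ."""
    return sum(x != y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def identify_marker(observed: Grid, templates: Dict[str, Grid],
                    max_errors: int = 1) -> Optional[Tuple[str, int]]:
    """Return (marker_id, clockwise 90-degree rotations) of the best match,
    or None if no template matches within max_errors cells."""
    best: Optional[Tuple[int, str, int]] = None
    for marker_id, template in templates.items():
        rotated = template
        for k in range(4):
            d = hamming(observed, rotated)
            if best is None or d < best[0]:
                best = (d, marker_id, k)
            rotated = rotate90(rotated)
    if best is None or best[0] > max_errors:
        return None
    return best[1], best[2]
```

Patterns should look different under each 90-degree rotation; otherwise the returned rotation, and hence the marker's orientation, is ambiguous.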


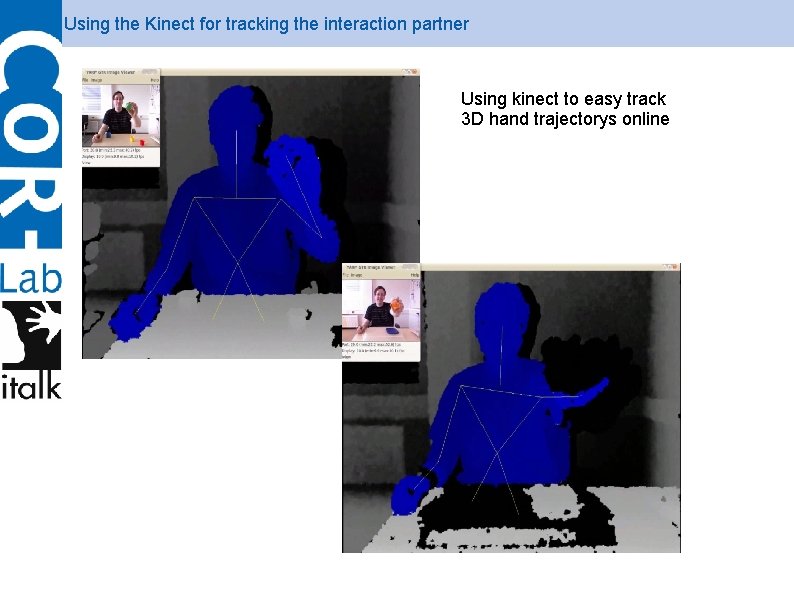

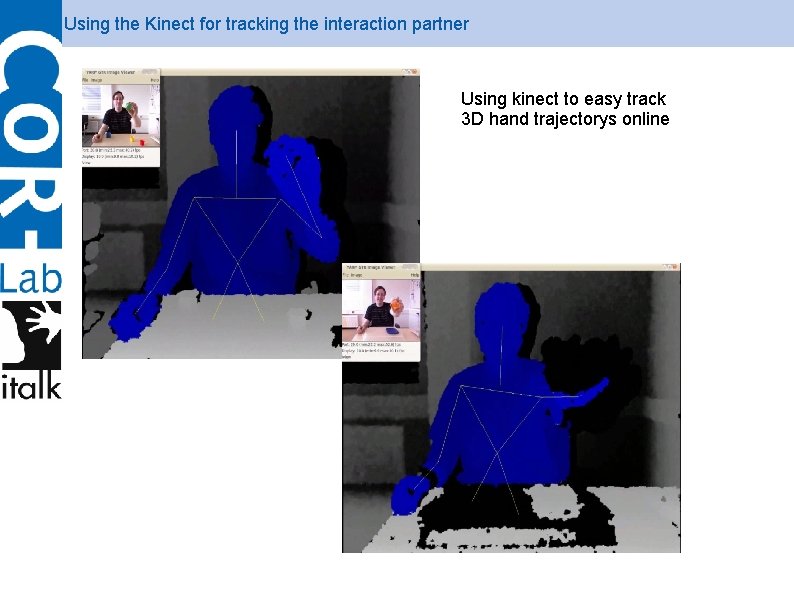

Using the Kinect to track the interaction partner: the Kinect makes it easy to track 3D hand trajectories online.
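
Given such 3D hand trajectories, a feature like the still phases listed in the feature set can be derived by thresholding hand speed. The sketch assumes samples as `(x, y, z)` positions in metres with strictly increasing timestamps in seconds; the thresholds `max_speed` and `min_duration` are hypothetical, and nothing here depends on a particular Kinect SDK.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]


def speeds(points: Sequence[Point3], times: Sequence[float]) -> List[float]:
    """Speed between consecutive samples (assumes strictly increasing times)."""
    return [
        math.dist(p, q) / (t1 - t0)
        for p, q, t0, t1 in zip(points, points[1:], times, times[1:])
    ]


def still_phases(points: Sequence[Point3], times: Sequence[float],
                 max_speed: float = 0.05, min_duration: float = 0.3
                 ) -> List[Tuple[float, float]]:
    """(start, end) times of intervals in which the hand speed stays below
    max_speed for at least min_duration seconds."""
    phases: List[Tuple[float, float]] = []
    start: Optional[float] = None
    end: float = 0.0
    for i, v in enumerate(speeds(points, times)):
        if v < max_speed:
            if start is None:
                start = times[i]      # a still interval begins
            end = times[i + 1]        # and extends to the next sample
        else:
            if start is not None and end - start >= min_duration:
                phases.append((start, end))
            start = None
    if start is not None and end - start >= min_duration:
        phases.append((start, end))   # still interval reaching the last sample
    return phases
```

For a hand sampled at 10 Hz that moves, rests from 0.2 s to 0.6 s and moves again, `still_phases` returns `[(0.2, 0.6)]`; raising `min_duration` to 0.5 s discards that pause.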


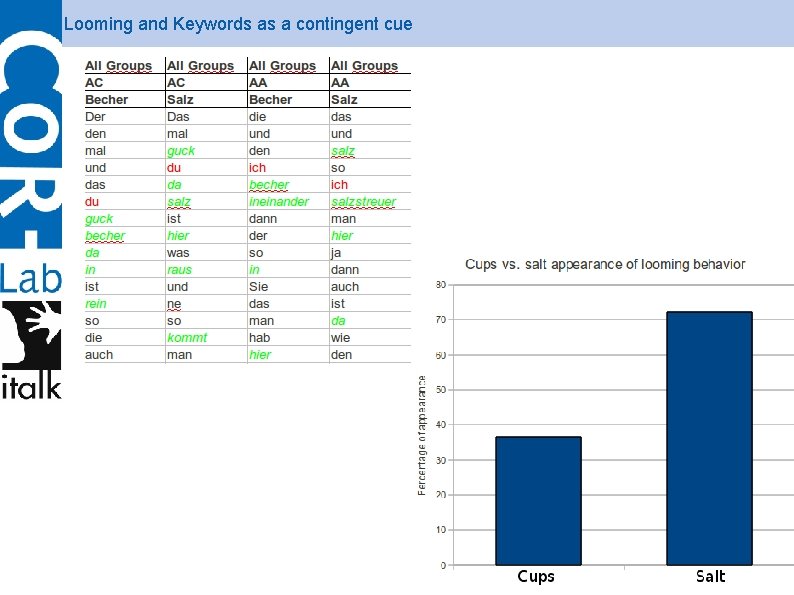

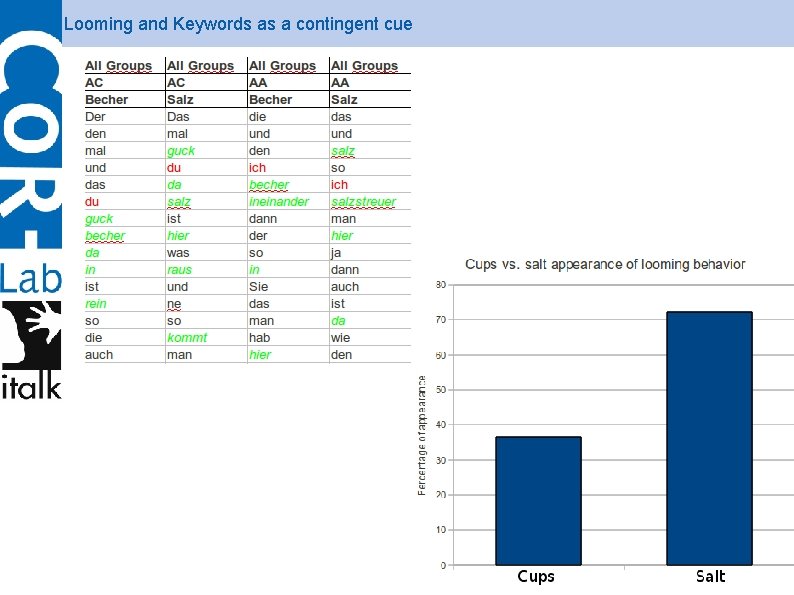

Looming and keywords as contingent cues
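
One way to operationalize looming and keywords as a combined cue, loosely following the idea of object motion synchronous with naming [Matatyaho, D. & Gogate, L. 2008], is to detect intervals in which the object approaches the learner and then check which keyword onsets fall inside them. The sketch assumes one object-to-learner distance per frame and keyword onset times from a speech recognizer; the function names and the `min_approach` threshold are hypothetical.

```python
from typing import List, Sequence, Tuple

Interval = Tuple[float, float]


def looming_segments(distances: Sequence[float], times: Sequence[float],
                     min_approach: float = 0.1) -> List[Interval]:
    """Intervals in which the object-to-learner distance decreases strictly
    from sample to sample, by at least min_approach in total."""
    segments: List[Interval] = []
    start = 0
    for i in range(1, len(distances) + 1):
        # A decreasing run ends at i - 1 if the data end or distance stops falling.
        if i == len(distances) or distances[i] >= distances[i - 1]:
            if distances[start] - distances[i - 1] >= min_approach:
                segments.append((times[start], times[i - 1]))
            start = i
    return segments


def keywords_during_looming(keyword_times: Sequence[float],
                            segments: Sequence[Interval]) -> List[float]:
    """Keyword onsets that fall inside a looming segment."""
    return [k for k in keyword_times if any(s <= k <= e for s, e in segments)]
```

If the object moves from 0.9 m to 0.5 m between 0.5 s and 1.5 s, only a keyword uttered at 1.2 s would count as synchronous with looming; keywords at 0.2 s or 2.2 s would not.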


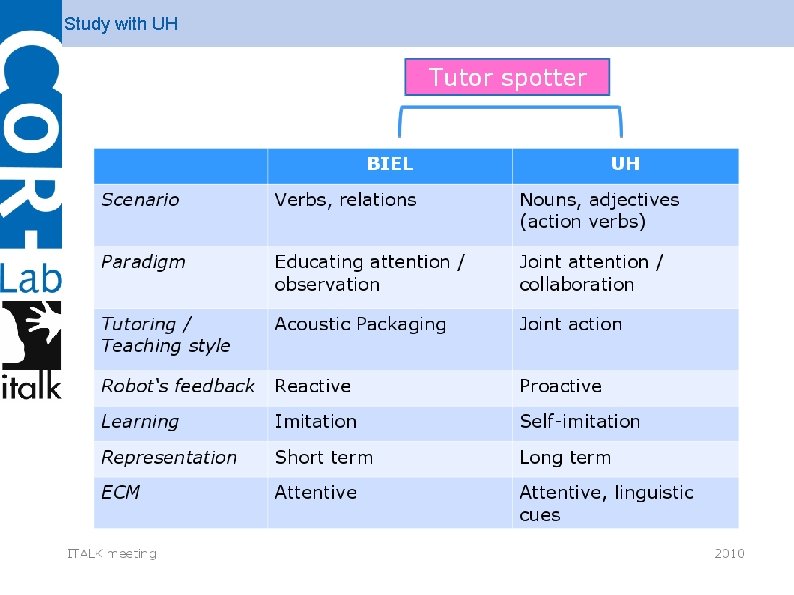

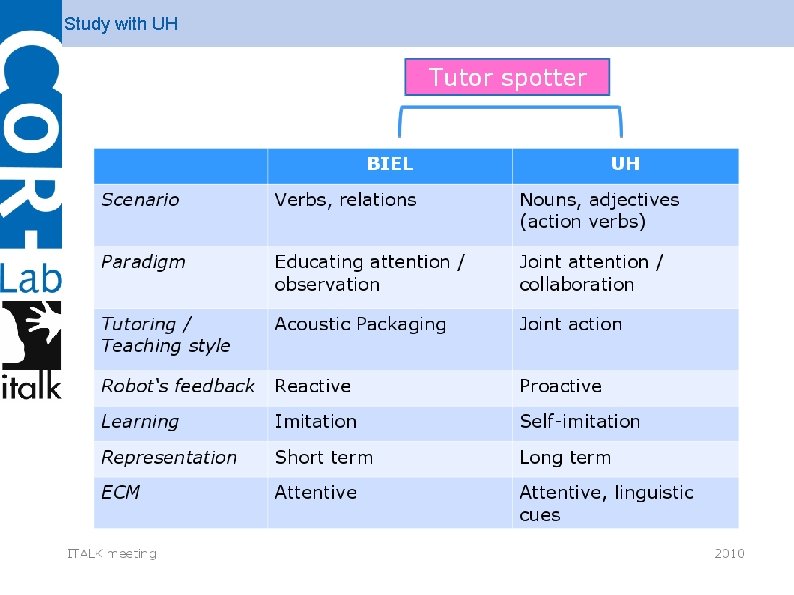

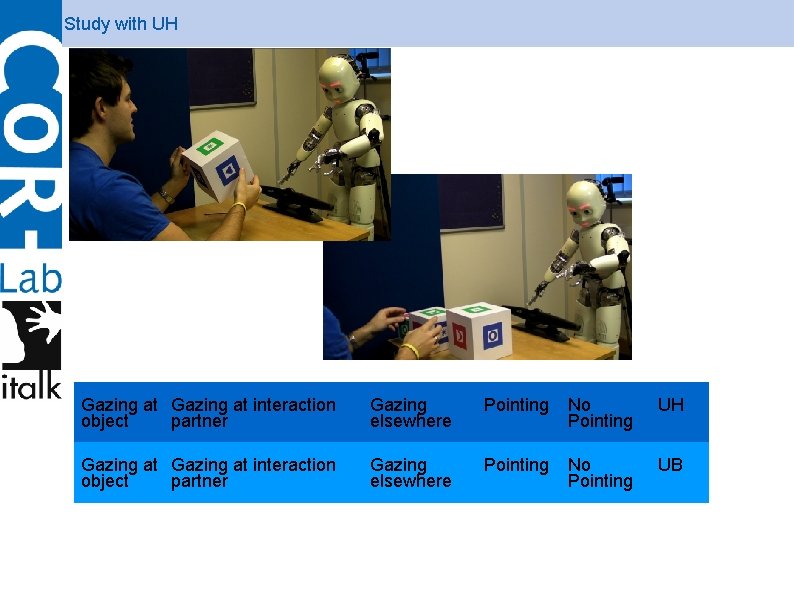

Study with UH

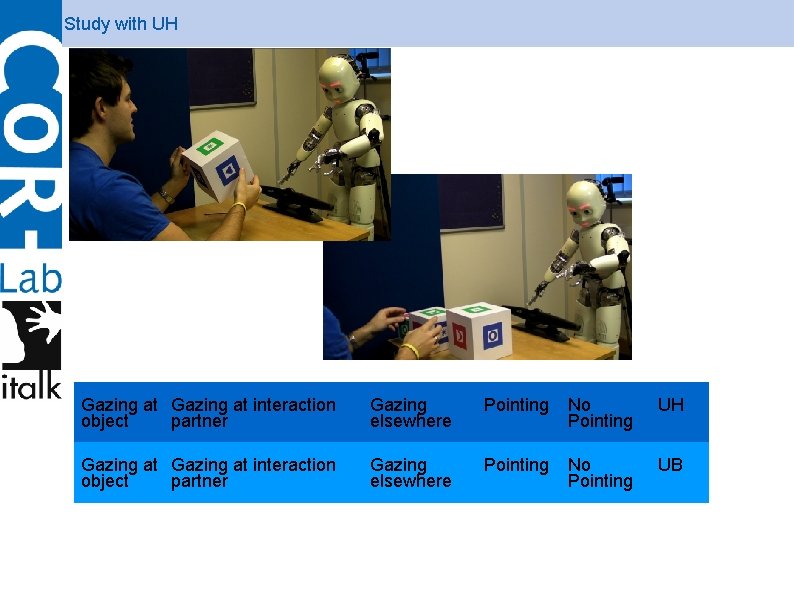

Study with UH (figure, UB): gazing at interaction object / partner vs. gazing elsewhere, in the Pointing and No Pointing conditions


Summary of tasks:
1. Multimodal analysis
2. Feature set
3. The tutoring spotter will be integrated into an interactive robot system (implemented on the iCub)
4. Further experiments


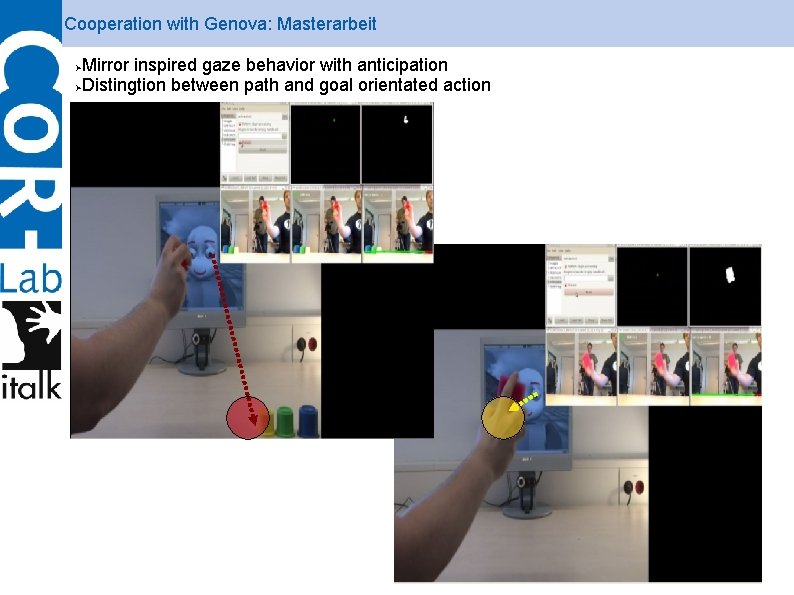

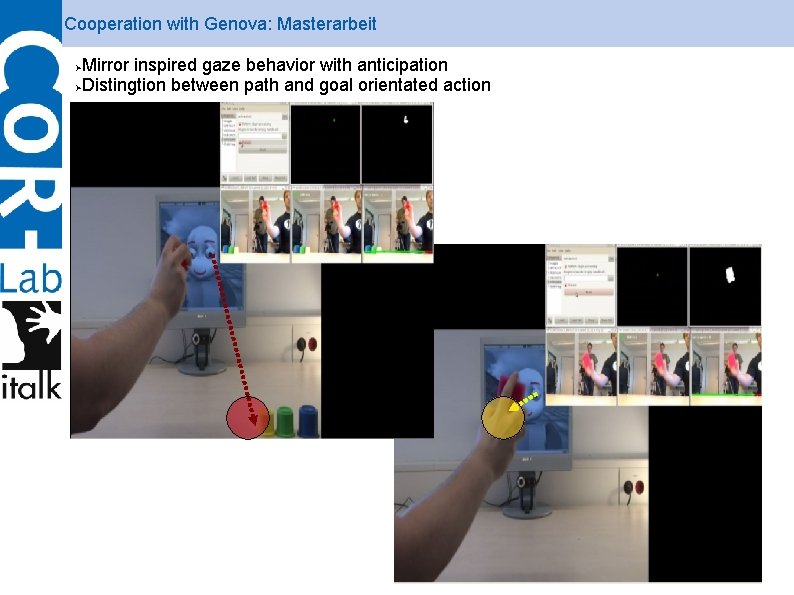

Cooperation with Genoa: Master's thesis
- Mirror-inspired gaze behavior with anticipation
- Distinction between path-oriented and goal-oriented actions

Cooperation with Genoa/Sweden: Study ITALK & RobotDoc
- So far we do not have detailed data on infant gazing behavior (eye tracking).
- How does speech affect infants' anticipation behavior?
- How do infants perceive the robot (as a tool or as human-like)?

Expertise from the cooperation partners:
- Genoa → gaze study with the robot concerning anticipation, mirror neurons
- Sweden → experts in gaze studies with children
- Bielefeld → speech, robot, gazing behavior

Summary of tasks:
1. Multimodal analysis
2. Feature set
3. The tutoring spotter will be integrated into an interactive robot system (implemented on the iCub)
4. Further experiments (...)
