
HPC in China
Xiaomei Zhang, IHEP, 2019-03-05

HPC in China
- Six National Supercomputing Centers (SC) in China, located in six cities
  - Tianjin, Changsha, Jinan, Guangzhou, Shenzhen, Wuxi
- Some cities and universities also have their own SCs, on a smaller scale
- CAS (Chinese Academy of Sciences) has its own supercomputing center, located in Beijing
  - Managed by the Computer Network Information Center (CNIC) of CAS

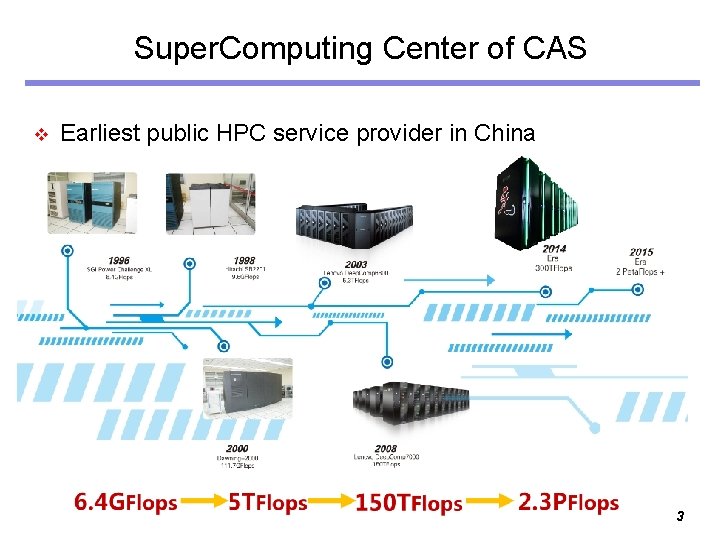

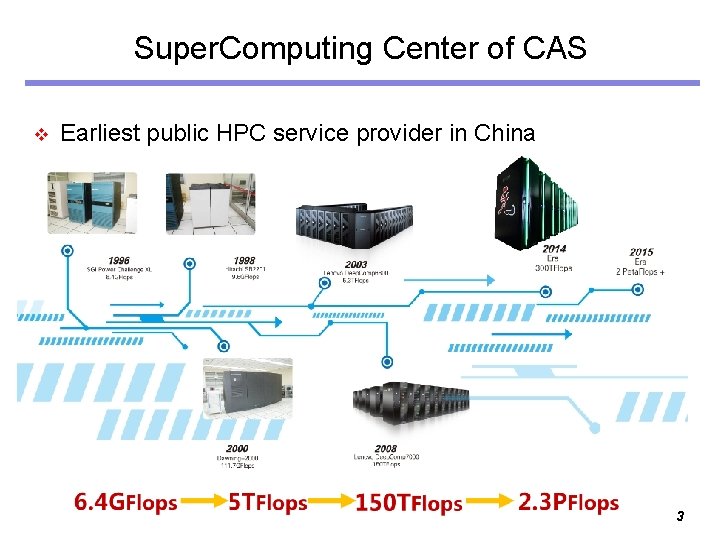

Supercomputing Center of CAS (SCCAS)
- Earliest public HPC service provider in China

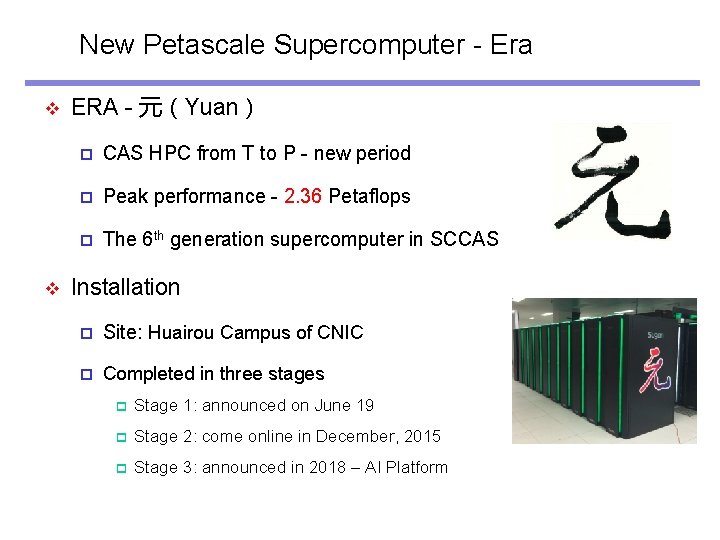

New Petascale Supercomputer: Era
- ERA: 元 (Yuan)
  - CAS HPC goes from T to P (terascale to petascale): a new period
  - Peak performance: 2.36 Petaflops
  - The 6th-generation supercomputer at SCCAS
- Installation
  - Site: Huairou Campus of CNIC
  - Completed in three stages
    - Stage 1: announced on June 19
    - Stage 2: came online in December 2015
    - Stage 3: announced in 2018 (AI Platform)

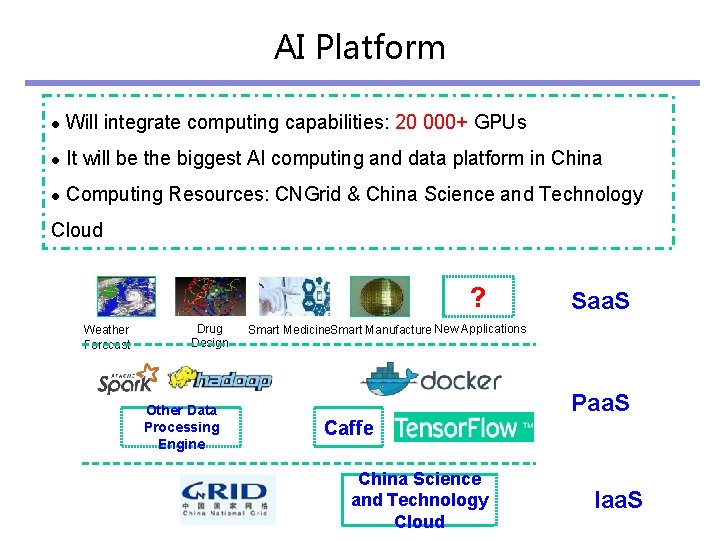

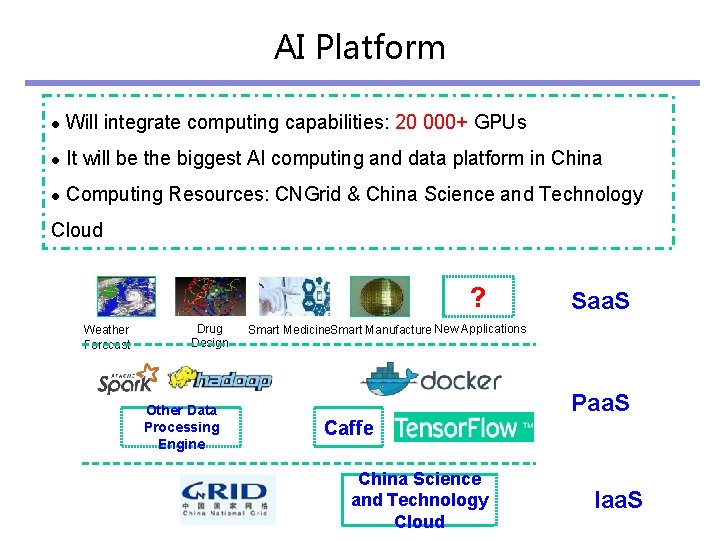

AI Platform
- Will integrate the computing capability of 20,000+ GPUs
- It will be the biggest AI computing and data platform in China
- Computing resources: CNGrid & China Science and Technology Cloud

Diagram labels: IaaS, PaaS, SaaS; China Science and Technology Cloud; Caffe; Data Processing Engine; Weather Forecast, Drug Design, Smart Medicine, Smart Manufacture, New Applications, Other

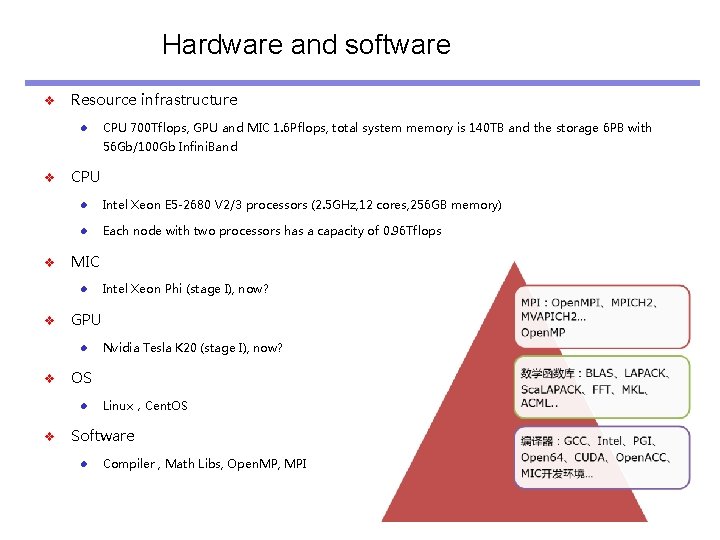

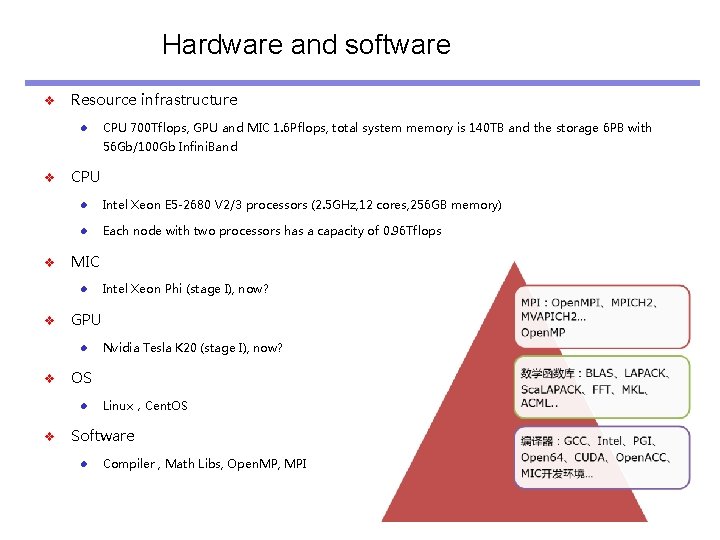

Hardware and software
- Resource infrastructure
  - CPU: 700 Tflops; GPU and MIC: 1.6 Pflops; total system memory: 140 TB; storage: 6 PB; 56 Gb/100 Gb InfiniBand
- CPU
  - Intel Xeon E5-2680 v2/v3 processors (2.5 GHz, 12 cores, 256 GB memory)
  - Each node with two processors has a capacity of 0.96 Tflops
- MIC
  - Intel Xeon Phi (stage I), now?
- GPU
  - Nvidia Tesla K20 (stage I), now?
- OS
  - Linux, CentOS
- Software
  - Compilers, math libraries, OpenMP, MPI
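The 0.96 Tflops per-node figure matches the usual theoretical-peak formula (sockets x cores x clock x FLOPs per cycle). A minimal sketch, assuming the 12-core, 2.5 GHz parts are Haswell-generation Xeon E5-2680 v3 cores that retire 16 double-precision FLOPs per cycle (two AVX2 FMA units); the per-cycle figure and the helper name `peak_gflops` are our assumptions, not taken from the slide:

```python
# Theoretical double-precision peak of one ERA CPU node.
# Assumption (not on the slide): Xeon E5-2680 v3 (Haswell) cores retire
# 16 DP FLOPs per cycle = 2 FMA units x 4 doubles (AVX2) x 2 ops per FMA.


def peak_gflops(sockets: int, cores_per_socket: int,
                clock_ghz: float, flops_per_cycle: int) -> float:
    """Peak GFLOPS = sockets x cores per socket x clock (GHz) x FLOPs per cycle."""
    return sockets * cores_per_socket * clock_ghz * flops_per_cycle


node_peak = peak_gflops(sockets=2, cores_per_socket=12,
                        clock_ghz=2.5, flops_per_cycle=16)
print(f"{node_peak / 1000:.2f} Tflops per node")  # prints "0.96 Tflops per node"
```

This is an upper bound; sustained application performance is typically well below it.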

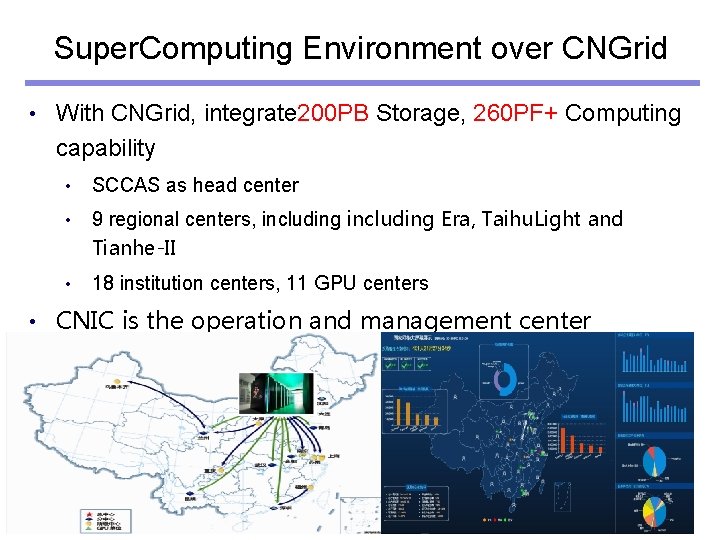

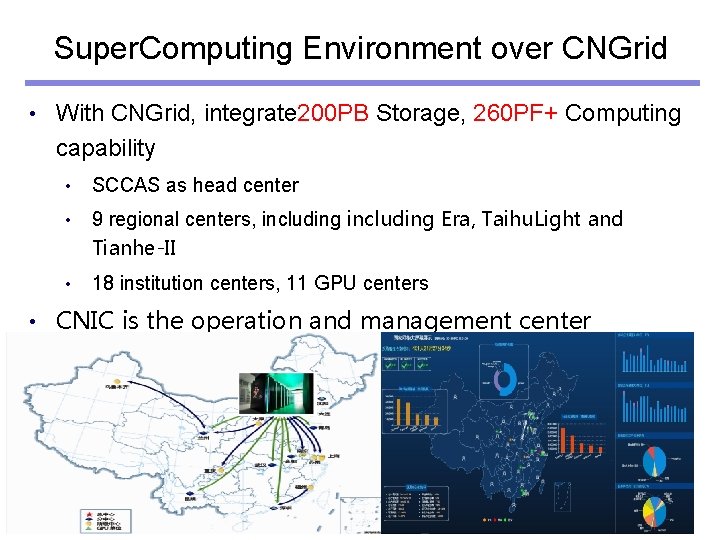

Supercomputing Environment over CNGrid
- CNGrid integrates 200 PB of storage and 260+ PF of computing capability
- SCCAS as the head center
- 9 regional centers, including Era, TaihuLight and Tianhe-II
- 18 institution centers, 11 GPU centers
- CNIC is the operation and management center

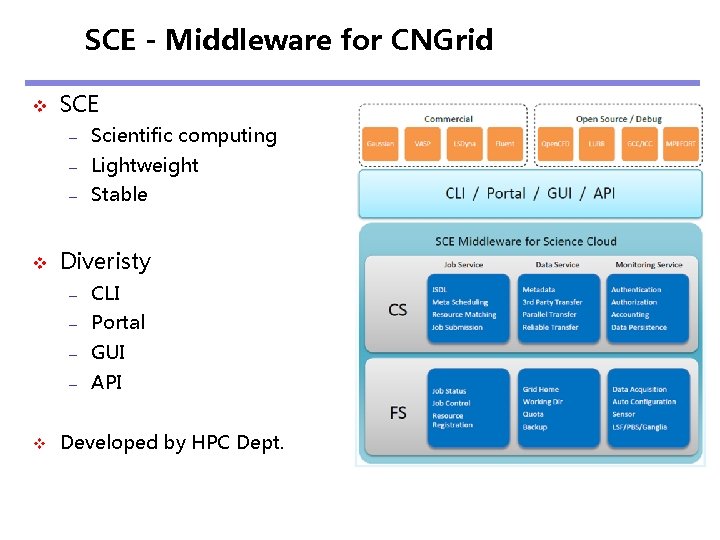

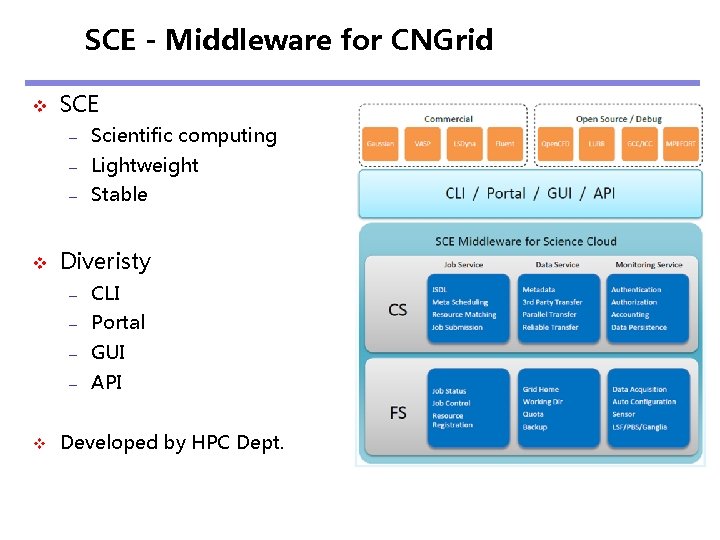

SCE: middleware for CNGrid
- SCE
  - Scientific computing
  - Lightweight
  - Stable
- Diversity
  - CLI
  - Portal
  - GUI
  - API
- Developed by the HPC Dept.

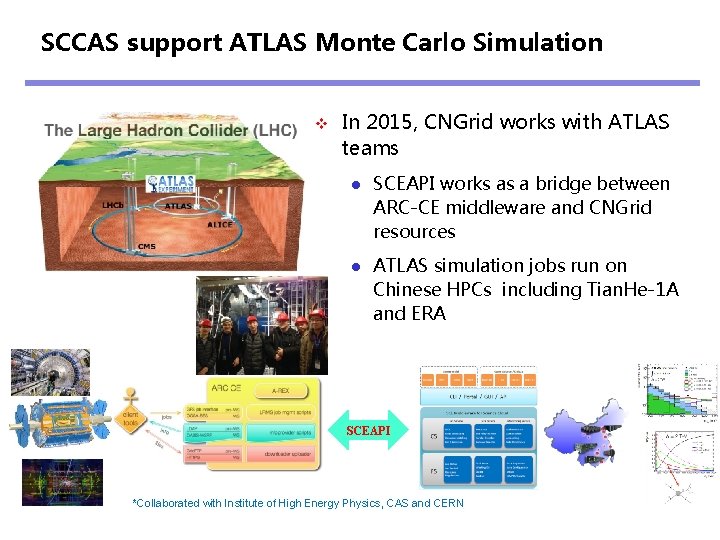

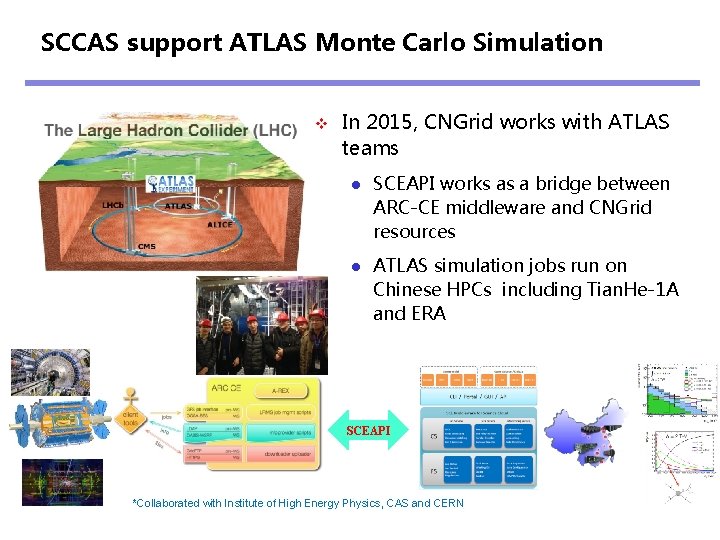

SCCAS supports ATLAS Monte Carlo simulation
- In 2015, CNGrid worked with ATLAS teams
  - SCEAPI works as a bridge between the ARC-CE middleware and CNGrid resources
  - ATLAS simulation jobs run on Chinese HPCs, including Tianhe-1A and ERA
- *In collaboration with the Institute of High Energy Physics (CAS) and CERN

The top 2 SC centers in China
- Sunway TaihuLight
  - Developed by China’s National Research Center of Parallel Computer Engineering & Technology (NRCPC)
  - At the National Supercomputing Center in Wuxi, Jiangsu province
  - 93 Pflop/s (LINPACK)
- Tianhe-2A (Milky Way-2A)
  - Developed by China’s National University of Defense Technology (NUDT)
  - At the National Supercomputer Center in Guangzhou
  - 61.4 Pflop/s (LINPACK)

Wuxi National Supercomputing Center
- Owns the Sunway TaihuLight system
- One thing to note:
  - Its CPU was designed by the Shanghai High-Performance Integrated Circuit Design Center
    - Sunway SW26010 260C
  - It is not a general-purpose x86 CPU architecture
- Migrating HEP software to run on it with good performance requires significant effort

Guangzhou National SC Center
- Owns the Tianhe-2A system
  - 16,000 nodes
  - Each node: 2 x 12-core Intel Xeon E5-2692 v2 + 2 Matrix-2000
  - Memory per node: 64 GB
  - Interconnect: proprietary high-speed internal interconnect TH Express-2, 14 Gbps x 8 lanes
  - Network interface: 2 Gigabit Ethernet interfaces
  - Storage: 19 PB, 1 TB/s
- Resources can be provided through a cloud platform
- Supports Big Data & Deep Learning
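The per-node Tianhe-2A figures above imply some simple system-wide totals. A minimal sketch that just multiplies the slide's numbers; it assumes the 64 GB per node is the only memory counted, that the Matrix-2000 cores are excluded from the core count, and that "14 Gbps x 8 lanes" describes one link. The results are illustrative products, not official totals:

```python
# System-wide totals implied by the slide's per-node Tianhe-2A specs.
# Assumptions: only the listed 64 GB/node is counted, Matrix-2000 cores are
# excluded, and 14 Gbps x 8 lanes describes a single interconnect link.

NODES = 16_000
XEON_CORES_PER_NODE = 2 * 12   # two 12-core Intel Xeon E5-2692 v2
MEMORY_GB_PER_NODE = 64
LANE_GBPS, LANES = 14, 8       # TH Express-2: 14 Gbps per lane, 8 lanes

total_xeon_cores = NODES * XEON_CORES_PER_NODE        # Xeon cores only
total_memory_tb = NODES * MEMORY_GB_PER_NODE / 1000   # decimal terabytes
link_gbps = LANE_GBPS * LANES                         # aggregate per link

print(f"Xeon cores:  {total_xeon_cores:,}")       # 384,000
print(f"Node memory: {total_memory_tb:,.0f} TB")  # 1,024 TB
print(f"Link speed:  {link_gbps} Gbps")           # 112 Gbps
```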

Tianjin National SC Center
- ~10 PFlops
- Tianhe-1 was deployed
  - 7168 nodes, 4.7 PFlops
  - Intel X5670, 2.93 GHz, 12 cores/node, 24/48 GB memory
- New system
  - Intel E5-2690 v4, 2.6 GHz, 28 cores/node, 128 GB
- Tianhe-3 is being deployed
- No direct access from the public network
  - Access through VPN
  - Worker nodes (WN) have no access to the outside network; neither do login nodes

Use and Charge
- None of the SC centers are free, even for science and education
- Normal prices are published (for example, Tianhe-II)
  - Normal nodes: 0.1 RMB/core-hour (2 x 12-core Intel Xeon E5-2692 v2, 64 GB memory)
  - Storage: 2000 yuan/TB/year
- Special prices for large-scale usage in science? Needs further confirmation
- In the SCCAS case:
  - “In case of large consumption of computing resources, it need to seek financial support from project cooperation”
  - “For special use of HPC resources, the charge policy can be negotiated case by case”
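At the published Tianhe-II list prices, a rough budget is core-hours x 0.1 RMB plus TB-years x 2000 yuan. A minimal sketch, assuming billing is strictly linear with no minimum charge, surcharge, or negotiated discount; the helper names and the example workload are hypothetical:

```python
# Rough cost estimate at the published Tianhe-II list prices.
# Assumption: billing is linear in core-hours and TB-years, with no minimum
# charge, surcharge or negotiated discount. Helper names are hypothetical.

PRICE_PER_CORE_HOUR_RMB = 0.1   # normal nodes
PRICE_PER_TB_YEAR_RMB = 2000.0  # storage
CORES_PER_NODE = 2 * 12         # 2 x 12-core Intel Xeon E5-2692 v2


def compute_cost(nodes: int, hours: float) -> float:
    """Cost in RMB of occupying `nodes` full nodes for `hours` wall-clock hours."""
    core_hours = nodes * CORES_PER_NODE * hours
    return core_hours * PRICE_PER_CORE_HOUR_RMB


def storage_cost(terabytes: float, years: float) -> float:
    """Cost in RMB of keeping `terabytes` of data for `years` years."""
    return terabytes * years * PRICE_PER_TB_YEAR_RMB


if __name__ == "__main__":
    # Hypothetical workload: 100 nodes for 10 hours, plus 10 TB kept for a year.
    print(f"compute: {compute_cost(100, 10):.2f} RMB")  # 24,000 core-hours
    print(f"storage: {storage_cost(10, 1):.2f} RMB")
```

For large-scale use, the actual charge may differ, since the slide notes special pricing still needs confirmation.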