CEPC Computing Xiaomei Zhang Nov 2018 Time Line

- Slides: 29

CEPC Computing Xiaomei Zhang Nov. 2018

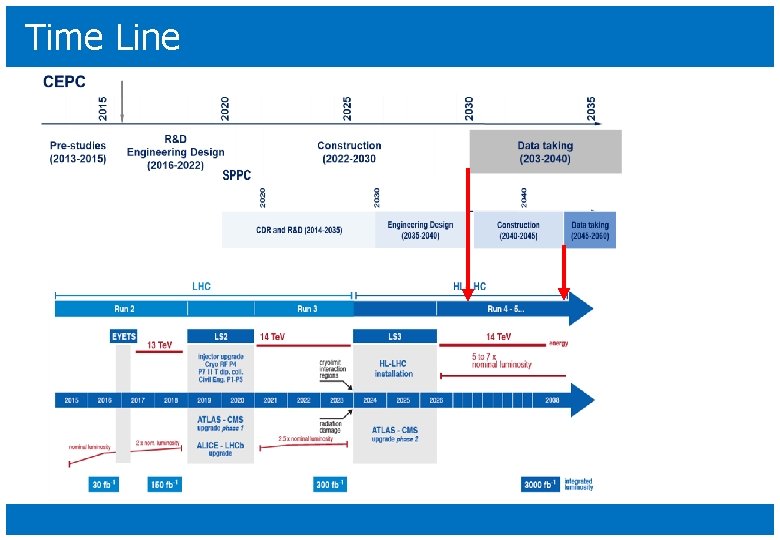

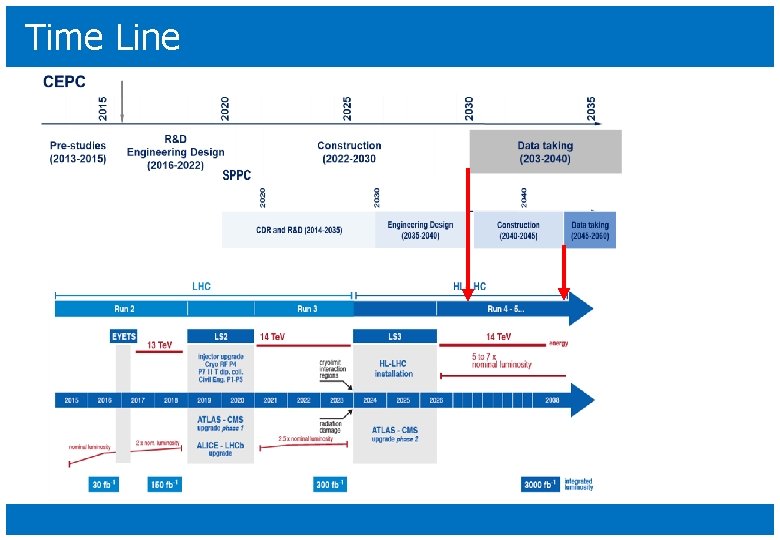

Time Line

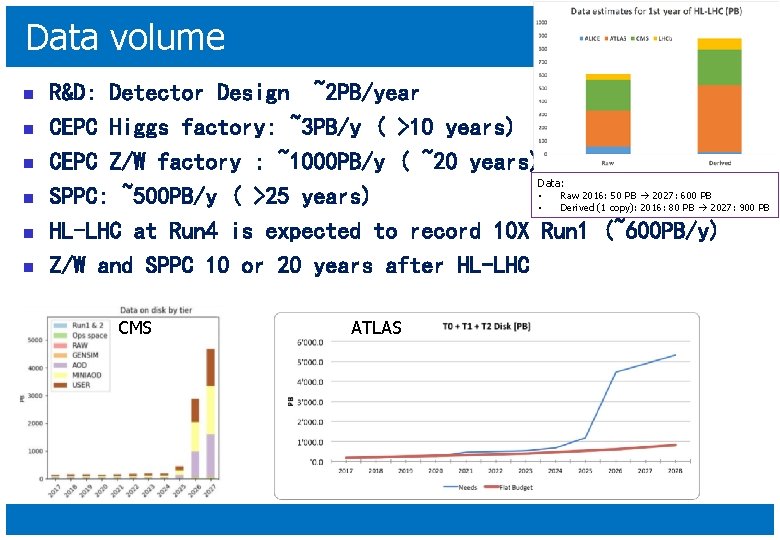

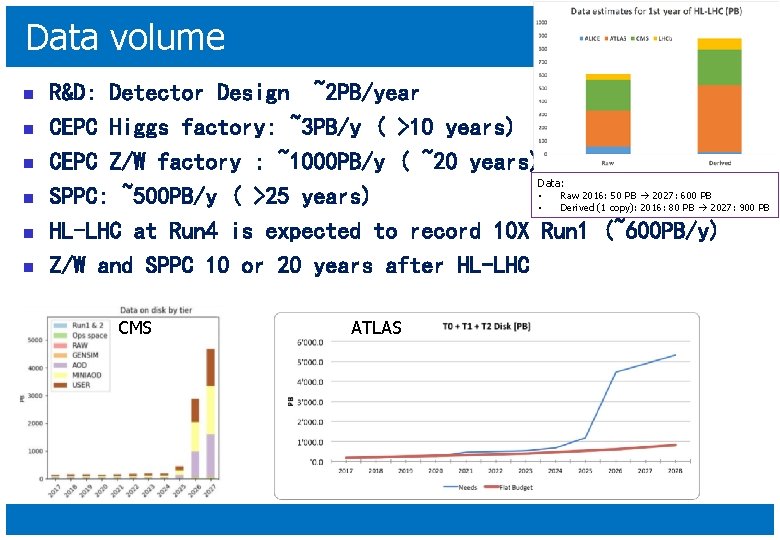

Data volume

- R&D (detector design): ~2 PB/year
- CEPC Higgs factory: ~3 PB/y (>10 years)
- CEPC Z/W factory: ~1000 PB/y (~20 years)
- SPPC: ~500 PB/y (>25 years)
- HL-LHC at Run 4 is expected to record 10x Run 1 (~600 PB/y)
- Z/W and SPPC come 10 or 20 years after HL-LHC

Data (figure labels: CMS, ATLAS):

- Raw: 50 PB in 2016, 600 PB in 2027
- Derived (1 copy): 80 PB in 2016, 900 PB in 2027

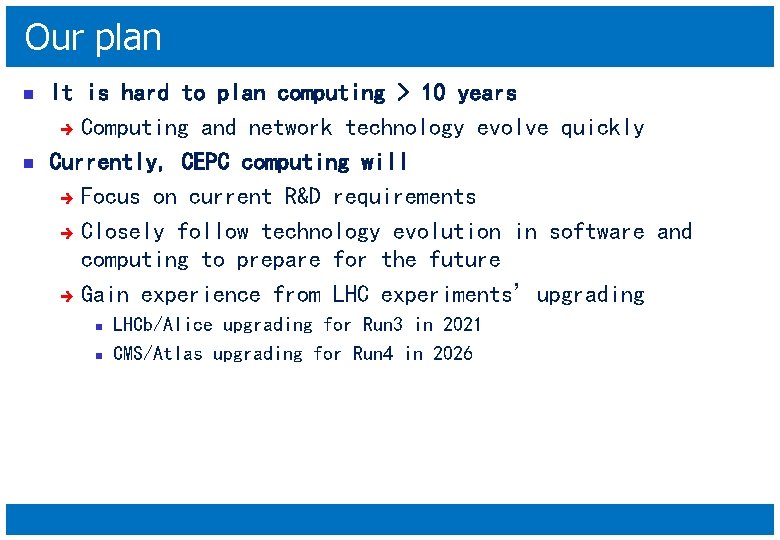

Our plan

- It is hard to plan computing more than 10 years ahead
  - Computing and network technology evolve quickly
- Currently, CEPC computing will
  - Focus on current R&D requirements
  - Closely follow technology evolution in software and computing to prepare for the future
  - Gain experience from the upgrades of the LHC experiments
    - LHCb/ALICE upgrades for Run 3 in 2021
    - CMS/ATLAS upgrades for Run 4 in 2026

Computing status in R&D

Computing requirements

- CEPC simulation for detector design needs at least 2K dedicated CPU cores and 2 PB each year
  - Currently there is no direct funding to meet these requirements and no dedicated computing resources
- Distributed computing has become the main way to collect free resources at this stage
  - Contributions from collaborations
  - Sharing IHEP resources from other experiments through HTCondor and IHEPCloud
  - BOINC volunteer computing (in progress, see Poster)
  - Commercial cloud (potential)

Distributed computing

- The distributed computing system for CEPC was built on DIRAC in 2015
- DIRAC provides a framework and solution for experiments to set up their own distributed computing systems
  - Originally from LHCb, now widely used by other communities, such as Belle II, ILC, etc.
- Good cooperation with the DIRAC community
  - Joined the DIRAC consortium in 2016
  - Joined the efforts on common needs
- The system takes into account current CEPC computing requirements, the resource situation and manpower
  - Use existing grid solutions from WLCG as much as possible
  - Keep the system as simple as possible for users and sites

Computing model

- IHEP as central site
  - Event Generation (EG), analysis, simulation
  - Holds central storage for all experiment data
  - Holds central database for detector geometry
- Remote sites
  - MC production including Mokka simulation + Marlin reconstruction
  - No storage requirements
- Data flow
  - IHEP -> Sites: stdhep files from EG are distributed to sites
  - Sites -> IHEP: output MC data are transferred directly back to IHEP from jobs
- To be improved
  - EG + simulation + reconstruction can run as a single workflow to reduce data movements
  - Geometry description moves to DD4hep and can be accessed through CVMFS in a more reliable way
  - With more resources involved, scaling will be considered, e.g. extending to a multi-tier infrastructure with more SEs available...

Network

- The IHEP international network provides a good basis for distributed computing
  - 20 Gbps outbound, 10 Gbps to Europe/USA
- In 2018 IHEP joined LHCONE, with CSTNet and CERNET
  - LHCONE is a virtual link dedicated to LHC
  - CEPC international cooperation can also benefit from LHCONE

Resources

- 6 active sites
  - From England, Taiwan, and Chinese universities (4)
  - QMUL from England and IPAS from Taiwan play a great role
- Resources: ~2500 CPU cores, shared with other experiments
- Resource types include cluster, grid and cloud
- More sites are encouraged to join us!

| Site Name | CPU Cores |
|---|---|
| CLOUD.IHEPOPENNEBULA.cn | 24 |
| CLUSTER.IHEP-Condor.cn | 48 |
| CLOUD.IHEPCLOUD.cn | 200 |
| GRID.QMUL.uk | 1600 |
| CLUSTER.IPAS.tw | 500 |
| CLUSTER.SJTU.cn | 100 |
| Total (Active) | 2472 |

QMUL: Queen Mary University of London. IPAS: Institute of Physics, Academia Sinica.
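As a quick check of the site table, the following minimal sketch sums the CPU cores per resource type. It assumes that the prefix of each site name (before the first dot) denotes the resource type, which matches the cluster/grid/cloud types listed on the slide but is not stated there explicitly. The names `SITES` and `cores_by_type` are illustrative only.

```python
# Site table from the slide: site name -> CPU cores.
SITES = {
    "CLOUD.IHEPOPENNEBULA.cn": 24,
    "CLUSTER.IHEP-Condor.cn": 48,
    "CLOUD.IHEPCLOUD.cn": 200,
    "GRID.QMUL.uk": 1600,
    "CLUSTER.IPAS.tw": 500,
    "CLUSTER.SJTU.cn": 100,
}


def cores_by_type(sites):
    """Sum CPU cores per resource type.

    Assumption: the resource type is the part of the site name
    before the first dot (CLOUD, CLUSTER, GRID).
    """
    totals = {}
    for name, cores in sites.items():
        resource_type = name.split(".", 1)[0]
        totals[resource_type] = totals.get(resource_type, 0) + cores
    return totals


totals = cores_by_type(SITES)
total_cores = sum(SITES.values())
print(totals)
print(total_cores)
```

The per-site numbers add up to the 2472 active cores quoted in the table, with the grid site contributing the largest share.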

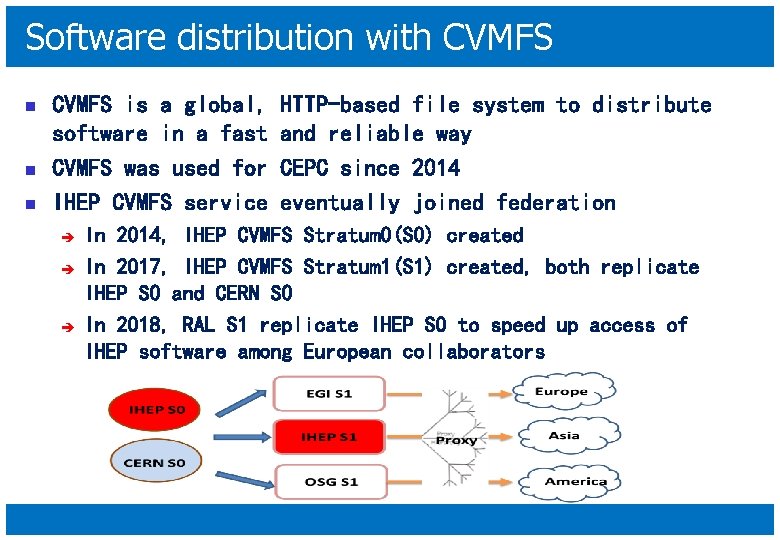

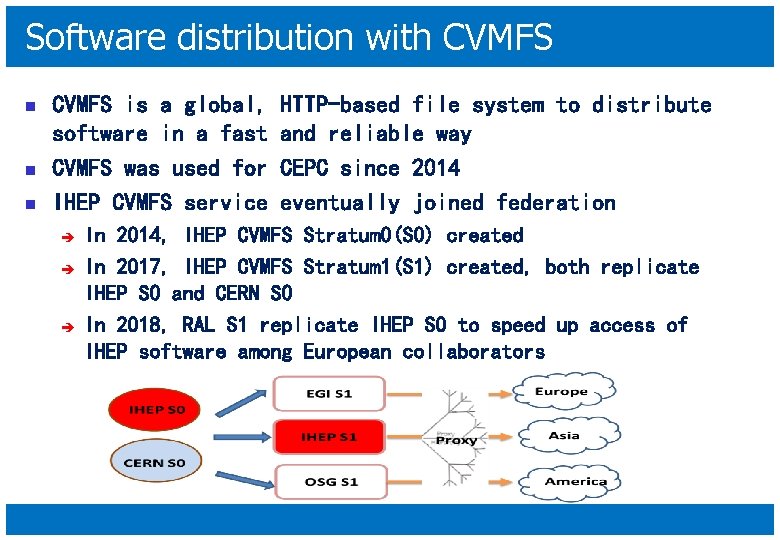

Software distribution with CVMFS

- CVMFS is a global, HTTP-based file system to distribute software in a fast and reliable way
- CVMFS has been used for CEPC since 2014
- The IHEP CVMFS service eventually joined the federation
  - In 2014, the IHEP CVMFS Stratum 0 (S0) was created
  - In 2017, the IHEP CVMFS Stratum 1 (S1) was created, replicating both the IHEP S0 and the CERN S0
  - In 2018, the RAL S1 started replicating the IHEP S0 to speed up access to IHEP software for European collaborators

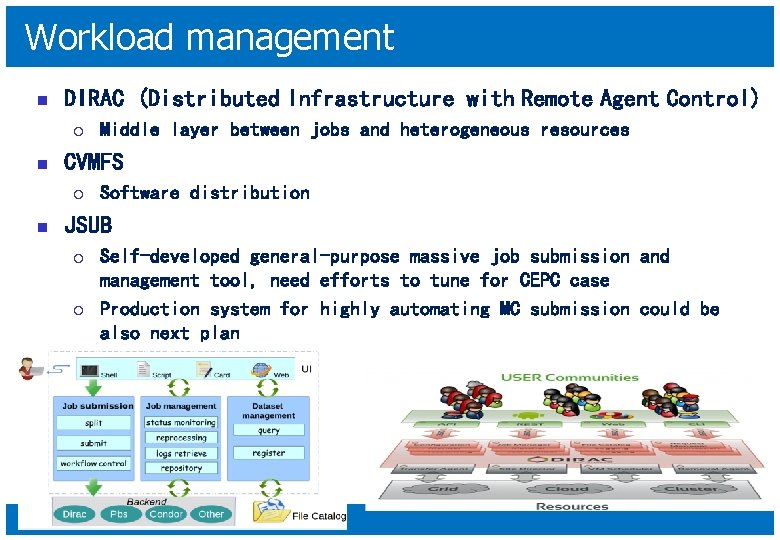

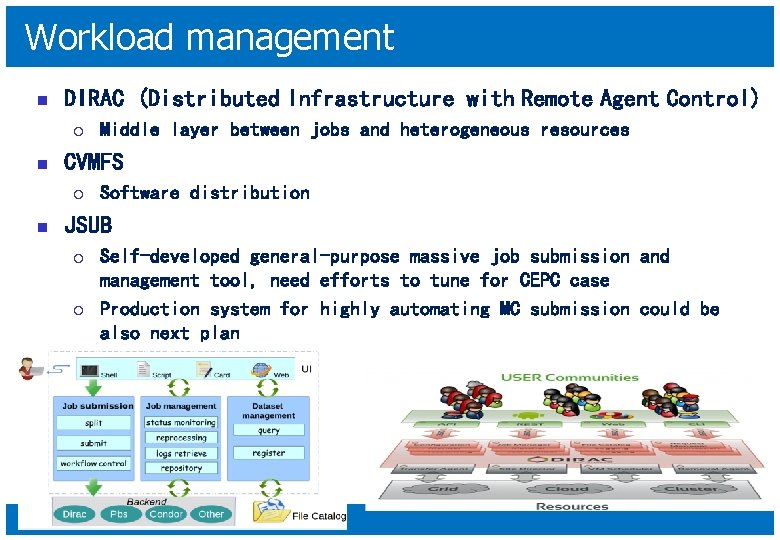

Workload management

- DIRAC (Distributed Infrastructure with Remote Agent Control)
  - Middle layer between jobs and heterogeneous resources
- CVMFS
  - Software distribution
- JSUB
  - Self-developed, general-purpose tool for massive job submission and management; needs effort to tune for the CEPC case
  - A production system for highly automated MC submission could also be the next plan

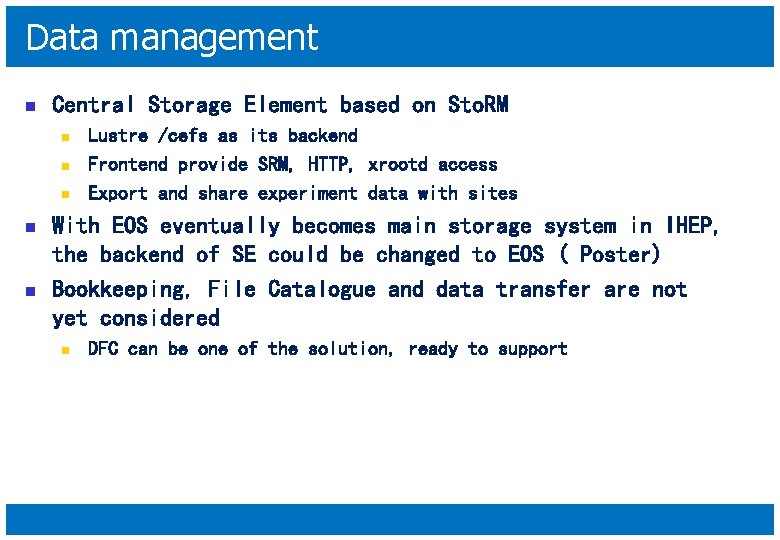

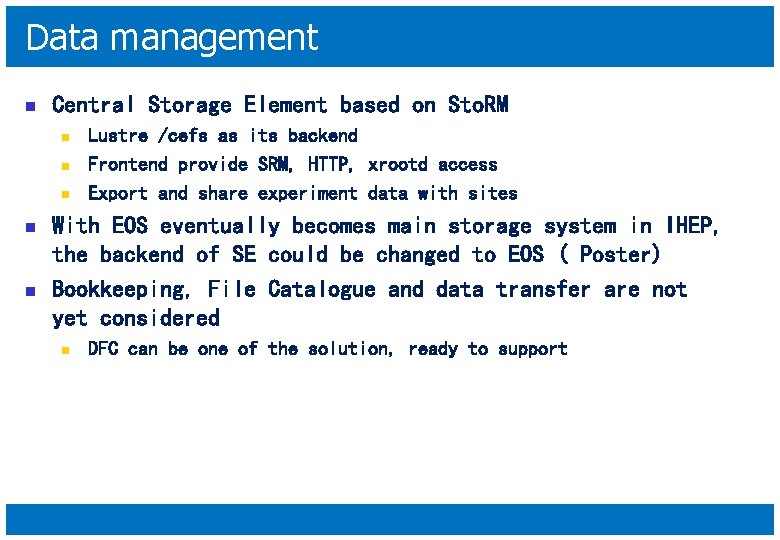

Data management

- Central Storage Element based on StoRM
  - Lustre /cefs as its backend
  - Frontend provides SRM, HTTP and xrootd access
  - Exports and shares experiment data with sites
- As EOS eventually becomes the main storage system at IHEP, the backend of the SE could be changed to EOS (see Poster)
- Bookkeeping, file catalogue and data transfer are not yet considered
  - DFC (DIRAC File Catalog) can be one of the solutions, ready to support

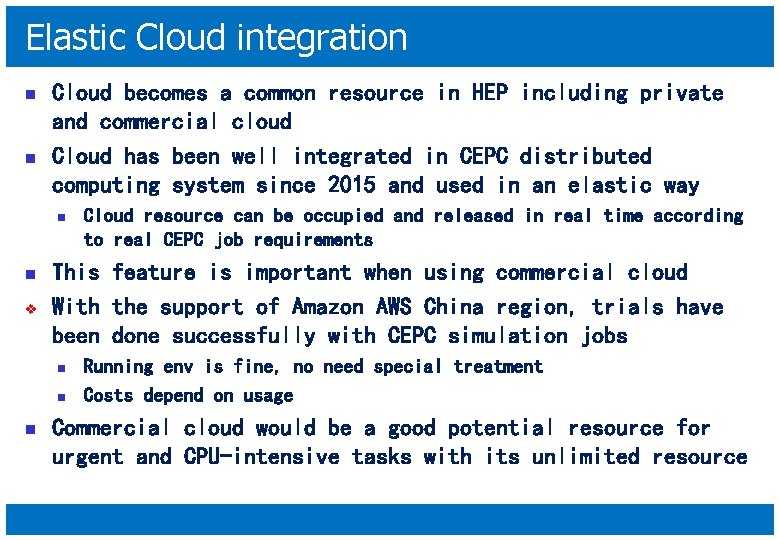

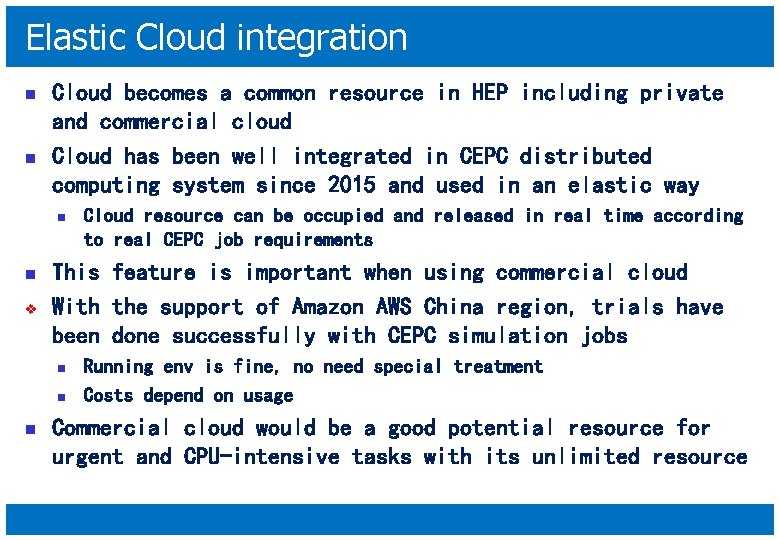

Elastic Cloud integration

- Cloud has become a common resource in HEP, including private and commercial cloud
- Cloud has been well integrated in the CEPC distributed computing system since 2015 and is used in an elastic way
  - Cloud resources can be occupied and released in real time according to real CEPC job requirements
  - This feature is important when using commercial cloud
- With the support of the Amazon AWS China region, trials have been done successfully with CEPC simulation jobs
  - The running environment is fine, with no need for special treatment
  - Costs depend on usage
- Commercial cloud would be a good potential resource for urgent and CPU-intensive tasks, given its unlimited resources
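The elastic behaviour described above (occupying and releasing cloud resources according to job demand) can be illustrated with a minimal sketch. This is not the CEPC or DIRAC implementation; the function name `vm_delta` and the parameters `jobs_per_vm` and `max_vms` are hypothetical and only show the kind of decision an elastic scheduler has to make.

```python
import math


def vm_delta(active_jobs, running_vms, jobs_per_vm=1, max_vms=50):
    """Return how many VMs to start (positive) or release (negative).

    active_jobs: jobs that are waiting or running (hypothetical input).
    running_vms: VMs currently occupied.
    jobs_per_vm, max_vms: hypothetical tuning parameters.
    """
    # VMs needed to serve all active jobs, capped by the quota.
    wanted = min(max_vms, math.ceil(active_jobs / jobs_per_vm))
    return wanted - running_vms


print(vm_delta(10, 4, jobs_per_vm=2))  # demand exceeds capacity: start VMs
print(vm_delta(0, 3))                  # no jobs left: release all VMs
print(vm_delta(1000, 10, max_vms=50))  # demand capped by the quota
```

With a commercial cloud, releasing idle VMs promptly matters because costs depend on usage.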

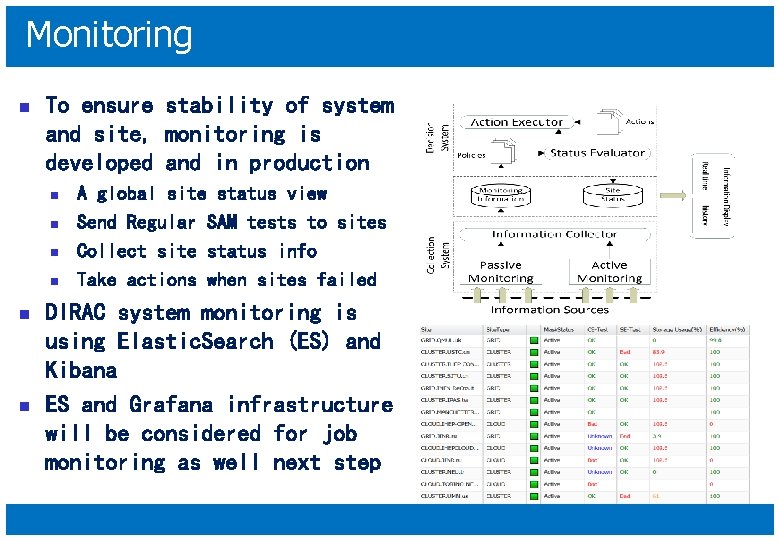

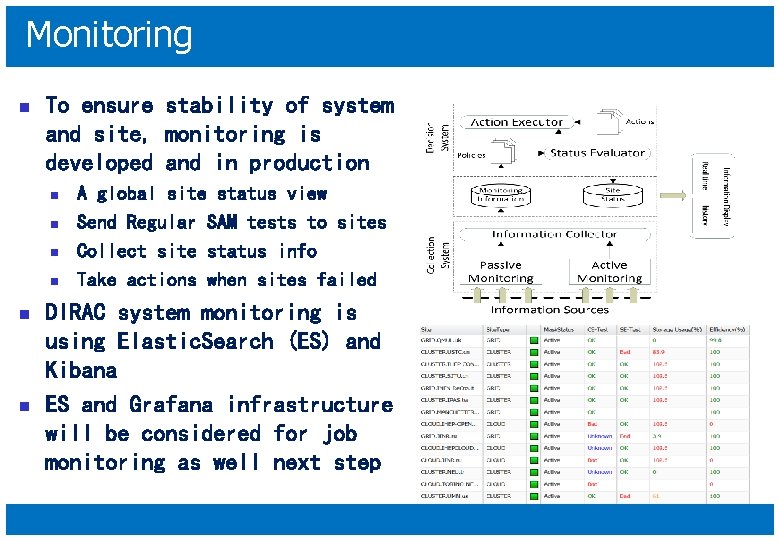

Monitoring

- To ensure the stability of the system and sites, monitoring has been developed and is in production
  - A global site status view
  - Sends regular SAM tests to sites
  - Collects site status info
  - Takes actions when sites fail
- DIRAC system monitoring uses Elasticsearch (ES) and Kibana
- An ES and Grafana infrastructure will be considered for job monitoring as the next step
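The slide does not say which actions are taken when sites fail. As a purely illustrative sketch, one possible policy is to ban a site after a number of consecutive failed tests. The function `site_status`, the threshold `max_failures` and the status names are assumptions, not the policy of the production system.

```python
def site_status(results, max_failures=3):
    """Derive a site status from test results (hypothetical policy).

    results: list of booleans, oldest first; True means the test passed.
    The site is banned only when the most recent `max_failures`
    tests have all failed.
    """
    recent = results[-max_failures:]
    if len(recent) == max_failures and not any(recent):
        return "Banned"
    return "Active"


print(site_status([True, False, False, False]))
print(site_status([False, False, True]))
```

Requiring several consecutive failures avoids banning a site because of a single transient error.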

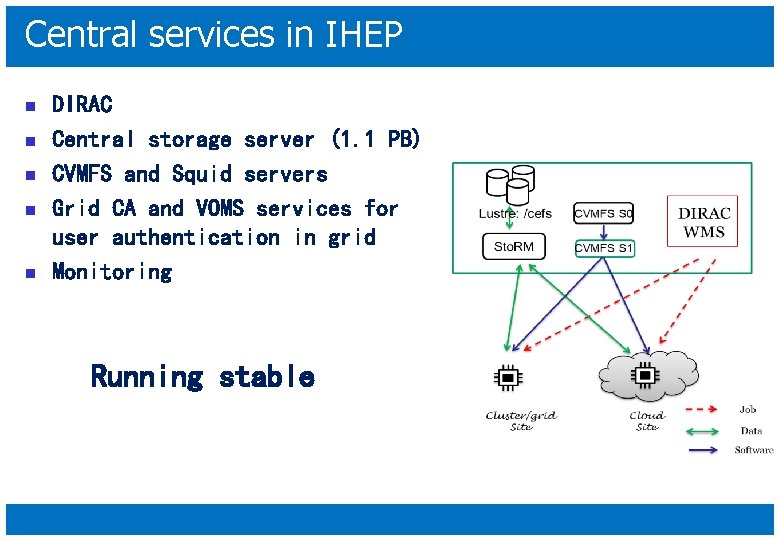

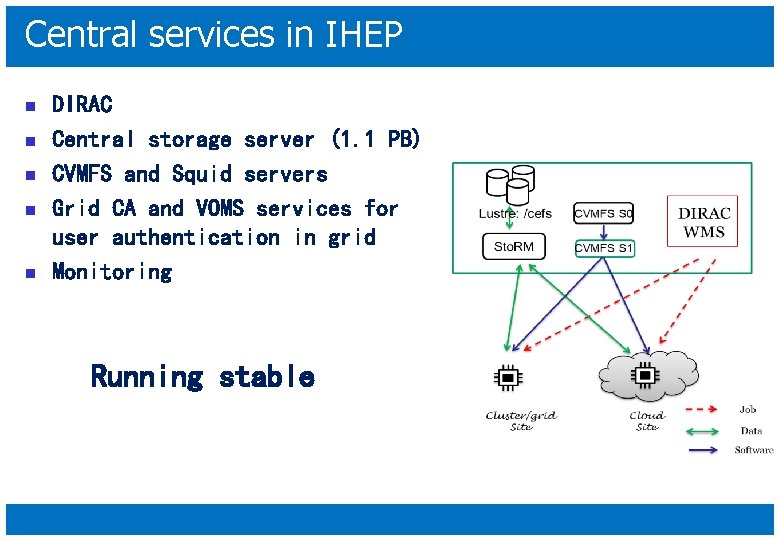

Central services in IHEP

- DIRAC
- Central storage server (1.1 PB)
- CVMFS and Squid servers
- Grid CA and VOMS services for user authentication in the grid
- Monitoring
- Running stably

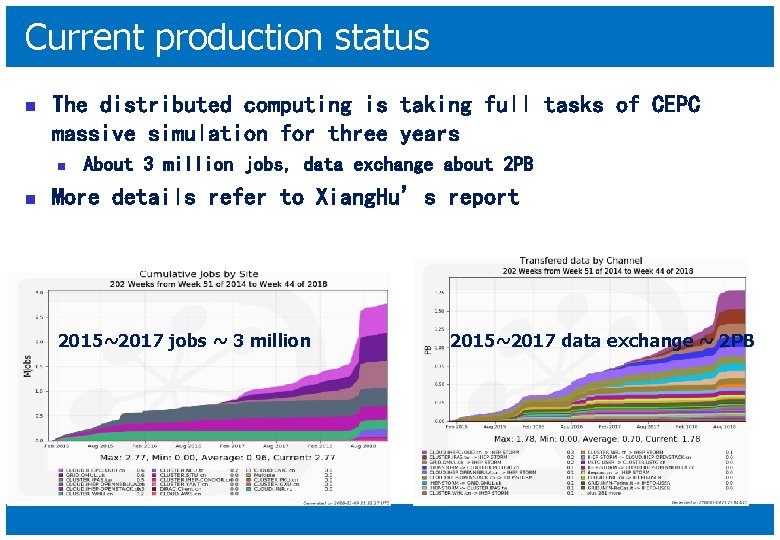

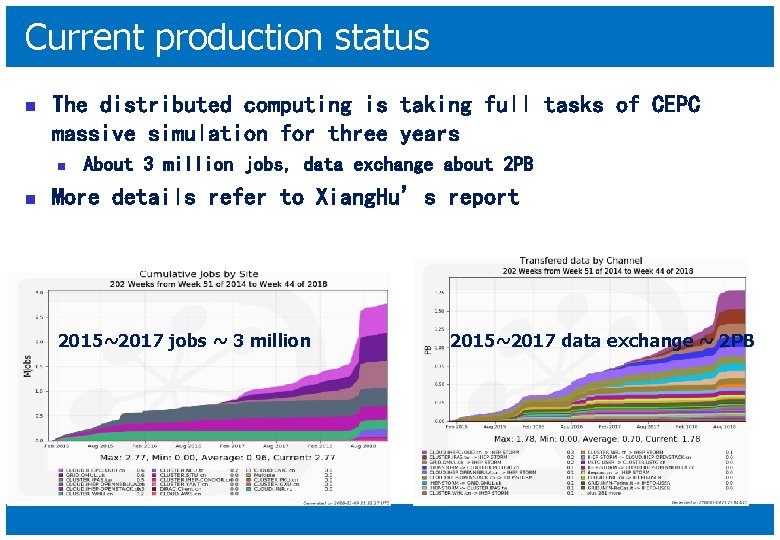

Current production status

- Distributed computing has carried the full load of CEPC massive simulation for three years (2015~2017)
  - About 3 million jobs and about 2 PB of data exchange
  - For more details, refer to XiangHu's report

On-going research and plan

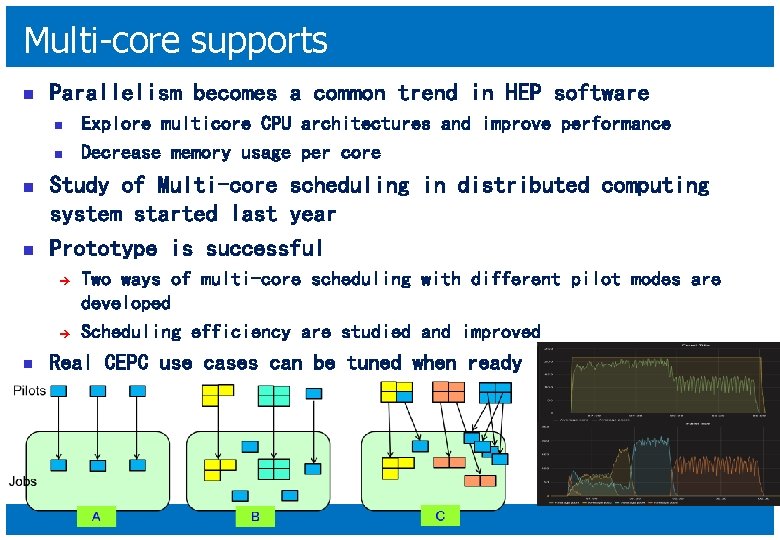

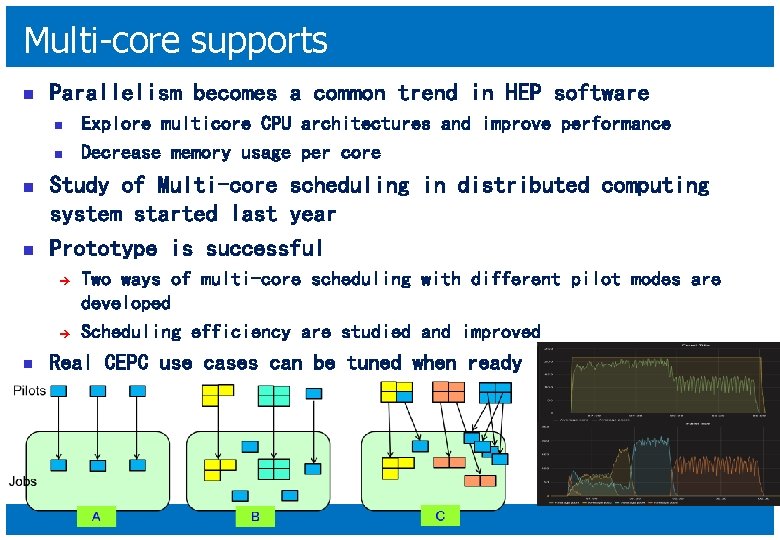

Multi-core support

- Parallelism has become a common trend in HEP software
  - Explore multicore CPU architectures and improve performance
  - Decrease memory usage per core
- A study of multi-core scheduling in the distributed computing system started last year
- The prototype is successful
  - Two ways of multi-core scheduling with different pilot modes have been developed
  - Scheduling efficiency has been studied and improved
  - Real CEPC use cases can be tuned when ready

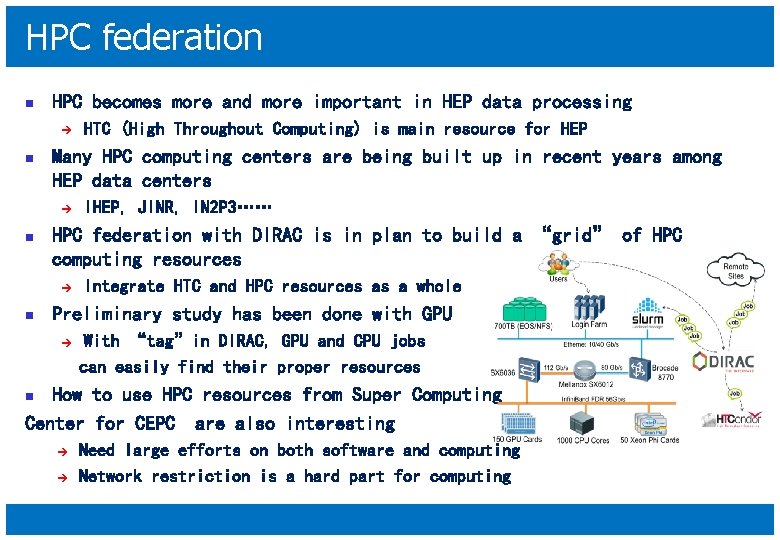

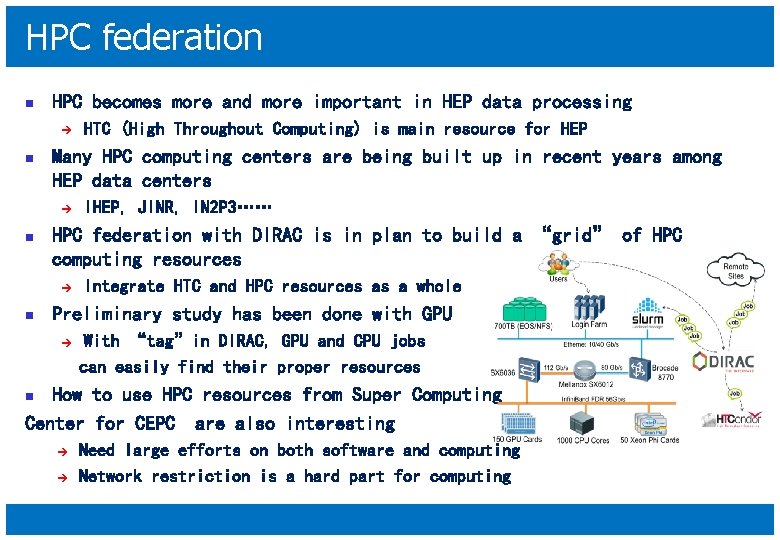

HPC federation

- HPC is becoming more and more important in HEP data processing
  - HTC (High Throughput Computing) is the main resource for HEP
- Many HPC computing centers have been built in recent years at HEP data centers
  - IHEP, JINR, IN2P3...
- An HPC federation with DIRAC is planned, to build a "grid" of HPC computing resources
  - Integrate HTC and HPC resources as a whole
- A preliminary study has been done with GPU
  - With the "tag" in DIRAC, GPU and CPU jobs can easily find their proper resources
- How to use HPC resources from supercomputing centers for CEPC is also of interest
  - Needs large efforts on both software and computing
  - Network restrictions are a hard part for computing
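The idea of tag-based matching can be sketched in a few lines. This is a simplified illustration in plain Python, not DIRAC's matcher or API: a job is matched to every site whose tags include all tags the job requires. The site names and the `match_sites` function are hypothetical.

```python
def match_sites(job_tags, site_tags):
    """Return the sorted names of sites that offer every tag the job requires."""
    required = set(job_tags)
    return sorted(
        name for name, tags in site_tags.items() if required <= set(tags)
    )


# Hypothetical sites: one offers GPUs, the other only CPUs.
SITE_TAGS = {
    "CLUSTER.EXAMPLE-GPU.cn": {"GPU"},
    "CLUSTER.EXAMPLE-CPU.cn": set(),
}

gpu_sites = match_sites({"GPU"}, SITE_TAGS)
cpu_sites = match_sites(set(), SITE_TAGS)
print(gpu_sites)
print(cpu_sites)
```

In this sketch a job without tags matches every site; a real system may additionally keep untagged jobs away from scarce GPU resources.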

Light-weight virtualization

- VMs implement virtualization, but are heavy and carry a performance penalty
- Singularity, based on container technology, provides OS portability
  - But very light, with no penalty
  - Also good isolation of jobs for security
- Singularity is now used in two ways
  - Sites can start Singularity from jobs in their batch system
  - Distributed computing can start Singularity from pilots according to the experiment's OS requirement
- Both ways have already been tried out and used in production
  - Only configuration by the site admin is needed
  - CLUSTER.IHEP-Condor.cn is one example
- A typical use case
  - A site wants to upgrade to SL7
  - But the experiment software wants to remain on < SL7
  - We can configure sites to run SL6 Singularity
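As a rough illustration of the SL6 use case, the sketch below assembles a `singularity exec` command line that a pilot or batch wrapper might run. It only builds the argument list and does not execute anything. The image path, the payload script name and the helper `singularity_command` are hypothetical; `singularity exec` and its `--bind` option are standard Singularity usage.

```python
import shlex


def singularity_command(image, payload, binds=("/cvmfs",)):
    """Build the argument list to run `payload` inside a Singularity image.

    image:   path to the container image (hypothetical in this example).
    payload: command and arguments to run inside the container.
    binds:   host paths made visible inside the container.
    """
    cmd = ["singularity", "exec"]
    for path in binds:
        cmd += ["--bind", path]
    cmd.append(image)
    cmd += list(payload)
    return cmd


# Hypothetical SL6 image distributed through CVMFS, and a hypothetical job script.
cmd = singularity_command("/cvmfs/example.org/images/sl6", ["sh", "run_job.sh"])
print(shlex.join(cmd))
```

Binding `/cvmfs` keeps the experiment software visible inside the container, so the same job can run on an SL7 host without changes.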

More study related to WLCG future evolutions

- WLCG AAI (Authorization and Authentication Infrastructure)
  - A next-generation, token-based, VO-aware AAI is being designed to replace X.509 certificates and VOMS and to ease federation
- DOMA (Data Organization, Management and Access)
  - Data federation protocol: gridftp to xrootd or HTTP
  - Efficient hierarchical access to data: Data Lake and Data Cache
  - Common data management and transfer system: Rucio
  - A new model is being proposed to adapt to needs driven by changing algorithms and data processing
    - Fast access to training datasets for machine learning, high-granularity access to event data, rapid high-throughput access for future analysis facilities...

Future: Everything is possible

Machine Learning

- ML is more and more widely used in experiment software
- It could be one of the biggest changes to the ways of doing simulation, reconstruction and analysis
- It will also greatly change the way computing is used in HEP
  - Could GPUs and quantum computing dominate the future?

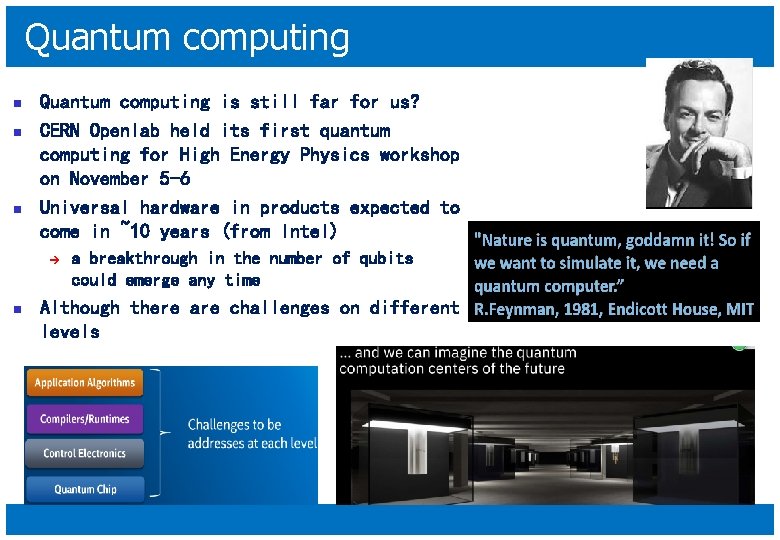

Quantum computing

- Is quantum computing still far away for us?
- CERN openlab held its first Quantum Computing for High Energy Physics workshop on November 5-6
- Universal hardware in products is expected in ~10 years (from Intel)
  - A breakthrough in the number of qubits could emerge at any time
- There are still challenges on different levels

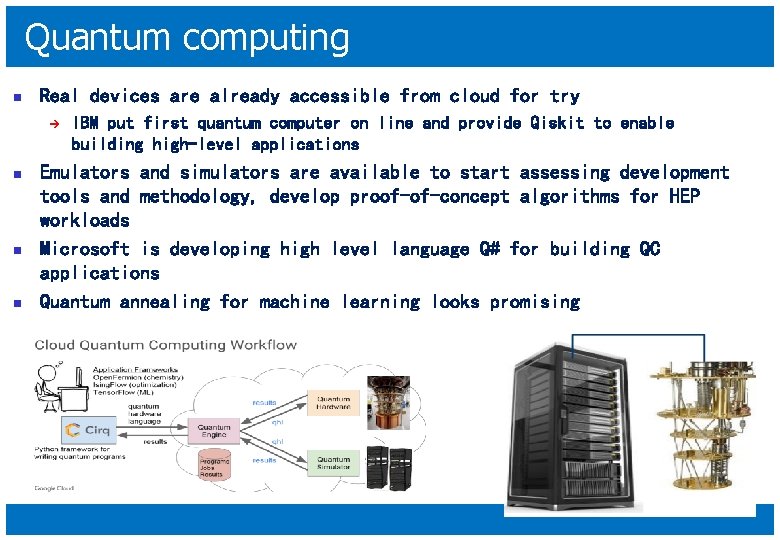

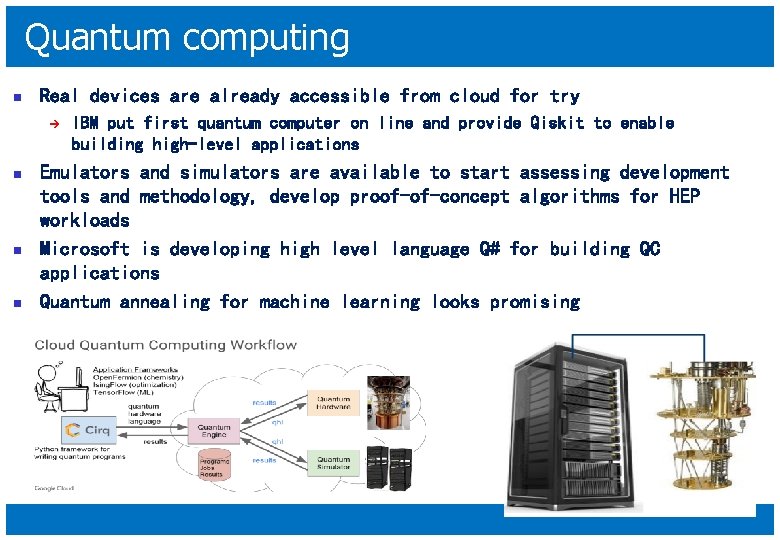

Quantum computing

- Real devices are already accessible from the cloud for trials
  - IBM put the first quantum computer online and provides Qiskit to enable building high-level applications
- Emulators and simulators are available to start assessing development tools and methodology, and to develop proof-of-concept algorithms for HEP workloads
- Microsoft is developing the high-level language Q# for building QC applications
- Quantum annealing for machine learning looks promising

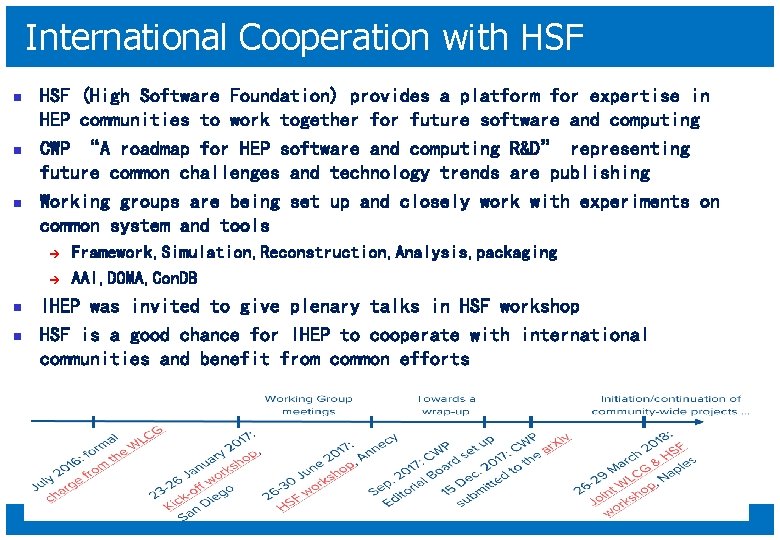

International Cooperation with HSF

- HSF (HEP Software Foundation) provides a platform for experts in the HEP communities to work together on future software and computing
- The CWP (Community White Paper) "A Roadmap for HEP Software and Computing R&D", representing future common challenges and technology trends, is being published
- Working groups are being set up and work closely with experiments on common systems and tools
  - Framework, Simulation, Reconstruction, Analysis, packaging
  - AAI, DOMA, ConDB
- IHEP was invited to give plenary talks at the HSF workshop
- HSF is a good chance for IHEP to cooperate with international communities and benefit from common efforts

Summary

- The distributed computing system is working well for the current CEPC R&D phase
- New technology studies are ongoing in the same directions as WLCG, preparing for the future CEPC
- International cooperation with the LHC community through HSF is being established
- Computing in 10 or 20 years is not clear; we closely follow technology evolution

Thank you!