High Performance Computing Course Notes 2006-2007



High Performance Computing Course Notes 2006-2007: Course Administration

Course Administration

- Course organiser: Dr. Ligang He, http://www.dcs.warwick.ac.uk/~liganghe
- Contact details: email liganghe@dcs.warwick.ac.uk
- Office hours: Monday 2 pm-3 pm, Wednesday 2 pm-3 pm

Computer Science, University of Warwick

Course Administration: Course Format

- 15 CATs
- 30 hours
- Assessment: 70% examined, 30% coursework
- Coursework details announced in week 5
- Coursework deadline in week 10

Learning Objectives

By the end of the course, you should understand:

- The role of HPC in science and engineering
- Commonly used HPC platforms and parallel programming models
- The means by which to measure, analyse and assess the performance of HPC applications and their supporting hardware
- Mechanisms for evaluating the suitability of different HPC solutions to common problems in computational science
- The role of administration, scheduling, code portability and data management in an HPC environment, with particular reference to Grid computing
- The potential benefits and pitfalls of Grid computing

Materials

- The slides will be made available on-line after each lecture.
- Relevant papers and on-line resources will be made available on-line throughout the course.
- Download and read the suggested papers. Questions in the exam will be based on the content of the papers as well as details from the notes.

Coursework will involve the development of a parallel and distributed application using the Message Passing Interface (MPI). It will also involve performance analysis and modelling. Your program will be assessed through a written report and a "demo session".

Please attend the C and MPI tutorials!

- Weeks 1-4, 9 am-10 am every Tuesday: introduction to the C language, in CS 101
- Weeks 5-6, 9 am-10 am every Tuesday: introduction to writing simple MPI programs, in the MSc lab

High Performance Computing Course Notes 2006-2007: HPC Fundamentals

Introduction

What is High Performance Computing (HPC)? It is difficult to define because it is a moving target.

- Late 1980s: a supercomputer performs 100 MFLOPS
- Today: a 2 GHz desktop or laptop performs a few GFLOPS
- Today: a supercomputer performs tens of TFLOPS (TOP500)
- High performance: roughly O(1000) times more powerful than the latest desktops

HPC is driven by the demands of computation-intensive applications from various areas:

- Medicine and biology (e.g. simulation of brains)
- Finance (e.g. modelling the world economy)
- Military and defence (e.g. modelling the explosion of nuclear weapons)
- Engineering (e.g. simulations of a car crash or a new airplane design)
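To make the "moving target" concrete, the following minimal sketch compares the orders of magnitude quoted above. The slide only says "a few" and "tens of", so the specific values of 4 GFLOPS and 20 TFLOPS are assumptions chosen for illustration, not measurements.

```python
# Orders of magnitude from the slide; exact values are illustrative assumptions.
MFLOPS, GFLOPS, TFLOPS = 1e6, 1e9, 1e12

supercomputer_late_1980s = 100 * MFLOPS  # "100 MFLOPS" (from the slide)
desktop_today = 4 * GFLOPS               # "a few GFLOPS" (assumed: 4)
supercomputer_today = 20 * TFLOPS        # "tens of TFLOPS" (assumed: 20)

# How far a desktop of the course's era outpaces a late-1980s supercomputer.
desktop_vs_old_super = desktop_today / supercomputer_late_1980s

# How far a supercomputer of the course's era outpaces that desktop.
super_vs_desktop = supercomputer_today / desktop_today

print(f"desktop vs late-1980s supercomputer: {desktop_vs_old_super:.0f}x")
print(f"supercomputer vs desktop: {super_vs_desktop:.0f}x")
```

Under these assumed figures the gap between a supercomputer and a desktop is in the thousands, which is consistent with the O(1000) rule of thumb.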

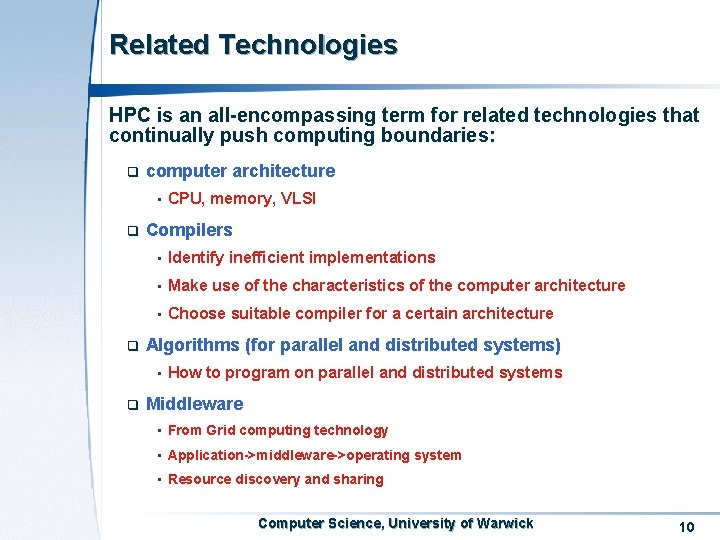

An Example of Demands in Computing Capability

Project: Blue Brain. Aim: construct a simulated brain.

- The building blocks of a brain are neocortical columns
- A column consists of about 60,000 neurons
- The human brain contains millions of such columns
- First stage: simulate a single column (each processor acting as one or two neurons)
- Then: simulate a small network of columns
- Ultimate goal: simulate the whole human brain
- IBM contributes a Blue Gene supercomputer
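A rough back-of-the-envelope sketch of the first stage, using only the figures on the slide (about 60,000 neurons per column, one or two neurons per processor). The function name is hypothetical, and the calculation ignores everything except the neuron-to-processor mapping.

```python
import math

NEURONS_PER_COLUMN = 60_000  # "about 60,000 neurons" (from the slide)

def processors_for_column(neurons_per_processor):
    """Processors needed to hold one column at a given mapping density."""
    return math.ceil(NEURONS_PER_COLUMN / neurons_per_processor)

one_neuron_each = processors_for_column(1)
two_neurons_each = processors_for_column(2)

print(f"one neuron per processor:  {one_neuron_each} processors")
print(f"two neurons per processor: {two_neurons_each} processors")
```

Even a single column therefore calls for tens of thousands of processors under this mapping, which is why a machine of Blue Gene's scale is involved.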

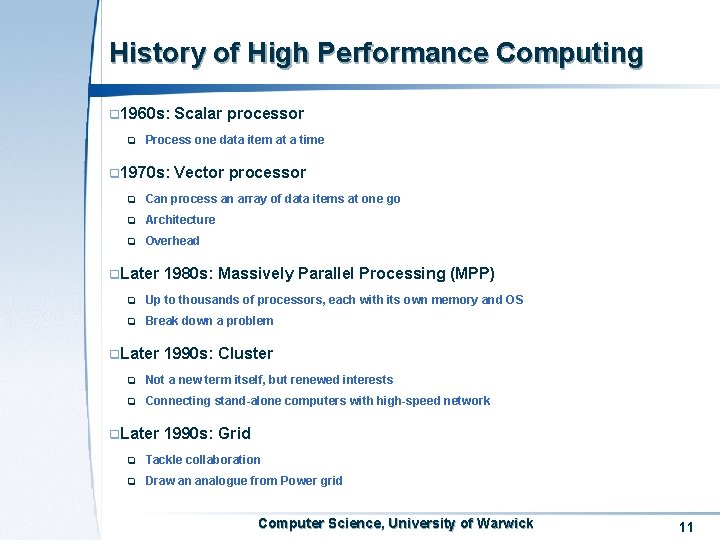

Related Technologies

HPC is an all-encompassing term for related technologies that continually push computing boundaries:

- Computer architecture
  - CPU, memory, VLSI
- Compilers
  - Identify inefficient implementations
  - Make use of the characteristics of the computer architecture
  - Choose a suitable compiler for a given architecture
- Algorithms (for parallel and distributed systems)
  - How to program on parallel and distributed systems
- Middleware
  - From Grid computing technology
  - Application -> middleware -> operating system
  - Resource discovery and sharing

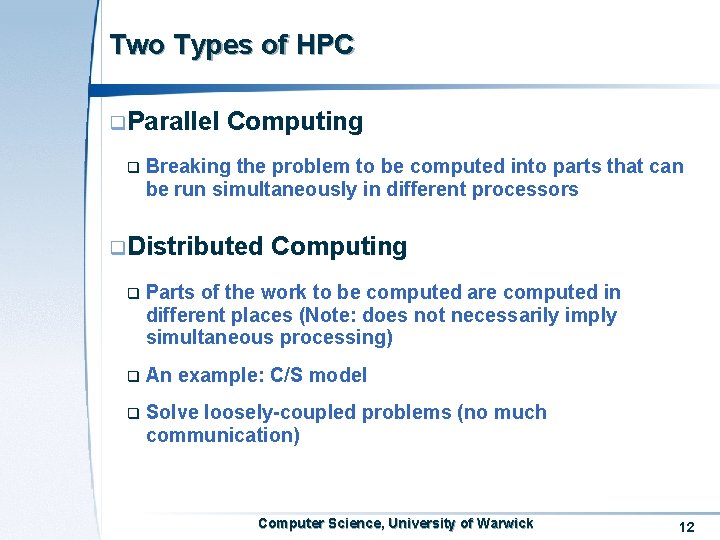

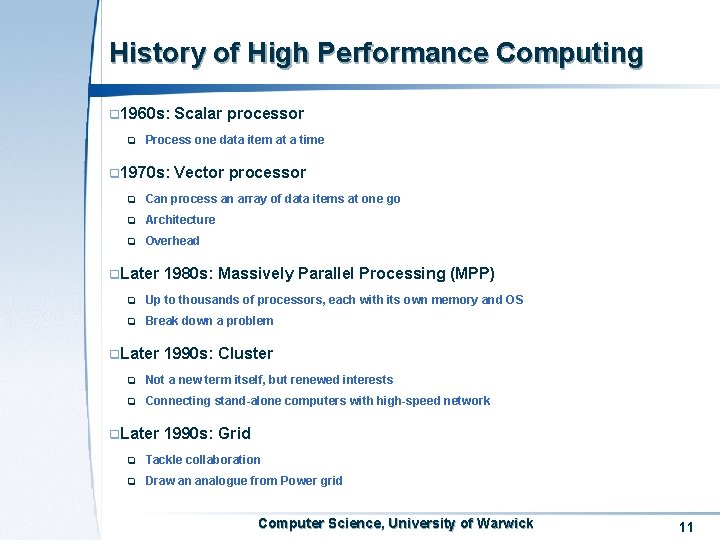

History of High Performance Computing

- 1960s: Scalar processor
  - Processes one data item at a time
- 1970s: Vector processor
  - Can process an array of data items in one go
  - Architecture; overhead
- Late 1980s: Massively Parallel Processing (MPP)
  - Up to thousands of processors, each with its own memory and OS
  - Breaks down a problem
- Late 1990s: Cluster
  - Not a new term in itself, but one attracting renewed interest
  - Connects stand-alone computers with a high-speed network
- Late 1990s: Grid
  - Tackles collaboration
  - Draws an analogy with the power grid

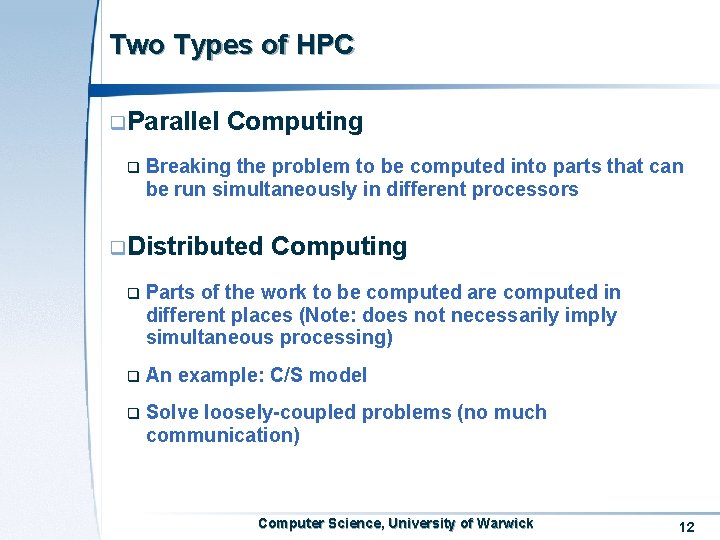

Two Types of HPC

- Parallel computing
  - Breaking the problem to be computed into parts that can be run simultaneously on different processors
- Distributed computing
  - Parts of the work are computed in different places (note: this does not necessarily imply simultaneous processing)
  - An example: the client/server (C/S) model
  - Solves loosely coupled problems (not much communication)
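The idea of breaking a problem into parts that run simultaneously can be sketched as follows. This is a minimal illustration, not the course's method: the function names are hypothetical, and it uses Python threads purely to show the decomposition pattern (split, compute partial results, combine). In CPython, threads do not speed up CPU-bound work; the coursework uses MPI for real parallel execution.

```python
from concurrent.futures import ThreadPoolExecutor

def split(data, parts):
    """Split data into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks take one additional element each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

def sum_of_squares(chunk):
    """The work done independently on each part."""
    return sum(x * x for x in chunk)

def parallel_sum_of_squares(data, workers=4):
    """Decompose, compute partial results concurrently, then combine."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)

data = list(range(1000))
result = parallel_sum_of_squares(data)
print(result)
```

The parts here need no communication until the final combination step, which is what makes the problem easy to decompose.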

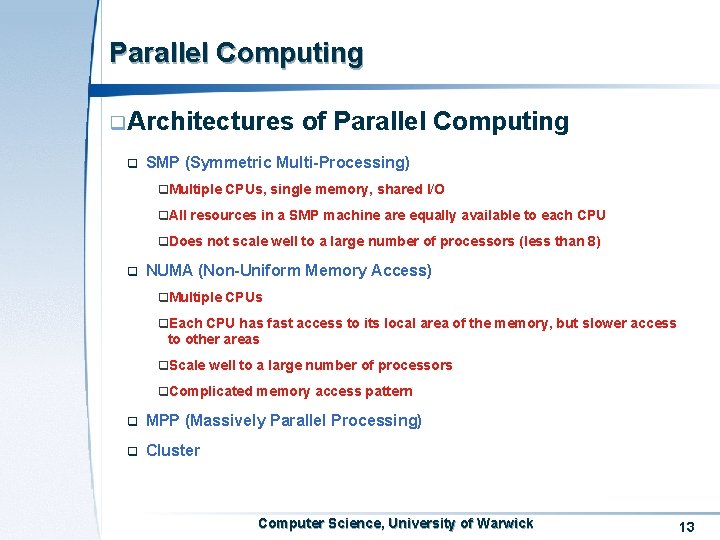

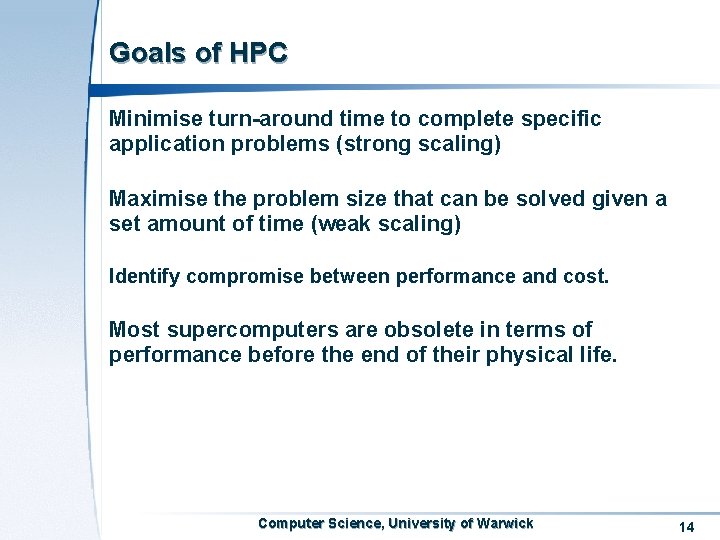

Parallel Computing: Architectures of Parallel Computing

- SMP (Symmetric Multi-Processing)
  - Multiple CPUs, single memory, shared I/O
  - All resources in an SMP machine are equally available to each CPU
  - Does not scale well to a large number of processors (typically fewer than 8)
- NUMA (Non-Uniform Memory Access)
  - Multiple CPUs
  - Each CPU has fast access to its local area of the memory, but slower access to other areas
  - Scales well to a large number of processors
  - Complicated memory access pattern
- MPP (Massively Parallel Processing)
- Cluster

Goals of HPC

- Minimise the turn-around time to complete specific application problems (strong scaling)
- Maximise the problem size that can be solved in a set amount of time (weak scaling)
- Identify a compromise between performance and cost. Most supercomputers are obsolete in terms of performance before the end of their physical life.
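Strong and weak scaling are commonly modelled with Amdahl's law and Gustafson's law respectively. These two formulas are standard textbook models and do not appear on the slide; the sketch below assumes a program in which a fraction `parallel_fraction` of the work can be spread perfectly over `n` processors.

```python
def amdahl_speedup(parallel_fraction, n):
    """Strong scaling: fixed problem size, n processors (Amdahl's law)."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n)

def gustafson_speedup(parallel_fraction, n):
    """Weak scaling: problem size grows with n (Gustafson's law)."""
    serial_fraction = 1.0 - parallel_fraction
    return serial_fraction + parallel_fraction * n

for n in (1, 10, 100, 1000):
    strong = amdahl_speedup(0.9, n)
    weak = gustafson_speedup(0.9, n)
    print(f"n={n:5d}  strong={strong:7.2f}  weak={weak:8.2f}")
```

With 90% of the work parallelisable, the strong-scaling speedup can never exceed 10 however many processors are added, whereas the weak-scaling speedup keeps growing because the parallel part of the problem grows with the machine.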

![Maximising Performance](https://slidetodoc.com/presentation_image/91e3f405c3b56980df74b60d0967ae32/image-15.jpg)

Maximising Performance

How is performance maximised?

- [1] Reduce the time per instruction (cycle time): clock rate.
- [2] Increase the number of instructions executed per cycle: pipelining.
- [3] Allow multiple processors to work on different parts of the same program at the same time: parallel execution.

When performance is gained from [1] and [2]:

- There is a limit to how quickly processors can operate: the speed of light and electricity, heat dissipation, and power consumption.
- An instruction processing procedure cannot be divided into an infinite number of stages.

When performance improvements come from [3]:

- Communication introduces overhead.
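Two of these limits can be illustrated with simple analytic models. Both are assumptions made for this sketch and are not taken from the slides: a pipeline whose every stage pays a fixed overhead `stage_overhead`, and a parallel run whose communication cost grows linearly with the number of processors. All function and parameter names are hypothetical.

```python
def pipeline_speedup(n_instructions, stages, instr_time, stage_overhead):
    """Speedup of a `stages`-deep pipeline over unpipelined execution.

    Assumes the instruction splits evenly into stages and that each
    stage pays a fixed overhead (e.g. for latching its result).
    """
    unpipelined = n_instructions * instr_time
    cycle = instr_time / stages + stage_overhead
    # The first instruction takes `stages` cycles; each later one adds a cycle.
    pipelined = (stages + n_instructions - 1) * cycle
    return unpipelined / pipelined

def parallel_time(serial_time, processors, comm_cost):
    """Run time with perfectly divided work plus linear communication cost."""
    return serial_time / processors + comm_cost * (processors - 1)

def best_processor_count(serial_time, comm_cost, max_processors):
    """Processor count in 1..max_processors that minimises run time."""
    return min(range(1, max_processors + 1),
               key=lambda p: parallel_time(serial_time, p, comm_cost))

for k in (5, 20, 100):
    print(f"{k:3d} stages: speedup {pipeline_speedup(1000, k, 1.0, 0.05):.2f}")

best = best_processor_count(serial_time=100.0, comm_cost=1.0, max_processors=100)
print(f"best processor count: {best}")
```

In the pipeline model the speedup stays below `instr_time / stage_overhead` no matter how many stages are used, which reflects the point that subdividing an instruction further eventually stops paying off. In the parallel model, adding processors beyond the best count makes the run slower, because the communication term outgrows the saving in computation.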