GPU Accelerated Free Surface Flows Using Smoothed Particle Hydrodynamics

- Slides: 14

GPU Accelerated Free Surface Flows Using Smoothed Particle Hydrodynamics

Christopher McCabe, Derek Causon and Clive Mingham

Centre for Mathematical Modelling & Flow Analysis, Manchester Metropolitan University, MANCHESTER M1 5GD, United Kingdom

Smoothed Particle Hydrodynamics

- Highly parallel algorithm
- Tracks particle movement rather than calculating physical properties at fixed points in space
- Ideal for modelling free surface flows
  - Wave interactions with floating structures
  - Environmental scenarios
    - Dambreaks
    - Flooding and coastal inundation

Problem Specification and Particle Configuration

- 2D dambreak of 8 m x 8 m body of water.
- 4096 (64 x 64) real particles, with 1024 virtual particles to describe the fixed solid boundaries.
- Obstacle in path of dam break flow.
- Algorithm based on "Simulating Free Surface Flows with SPH" by J J Monaghan, Journal of Computational Physics 110, 399-406 (1994)
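The particle counts above imply a lattice spacing of 8 m / 64 = 0.125 m. A minimal C sketch of laying out the 4096 real particles could look like the following. The cell-centred placement, the reference density of 1000 kg/m^3, the `Particle` struct and the function name `init_water_block` are illustrative assumptions, not details taken from the slides.

```c
#include <stddef.h>

#define NX 64            /* real particles per row (from the slide) */
#define NY 64            /* real particles per column (from the slide) */
#define BLOCK_SIZE 8.0   /* side of the water body in metres (from the slide) */
#define RHO0 1000.0      /* assumed reference density of water, kg/m^3 */

typedef struct {
    double x, y;    /* position, m */
    double vx, vy;  /* velocity, m/s */
    double mass;    /* mass per unit depth, kg/m */
    double rho;     /* density, kg/m^3 */
} Particle;

/* Fill p[0 .. NX*NY-1] with a regular lattice at rest; returns the count. */
size_t init_water_block(Particle *p)
{
    const double dx = BLOCK_SIZE / NX;   /* 0.125 m spacing */
    size_t n = 0;
    for (int j = 0; j < NY; ++j) {
        for (int i = 0; i < NX; ++i) {
            p[n].x = (i + 0.5) * dx;     /* assumed cell-centred placement */
            p[n].y = (j + 0.5) * dx;
            p[n].vx = 0.0;
            p[n].vy = 0.0;
            p[n].rho = RHO0;
            p[n].mass = RHO0 * dx * dx;  /* each particle carries one cell of water */
            ++n;
        }
    }
    return n;
}
```

With these assumptions each particle carries 15.625 kg per metre of depth. The 1024 virtual boundary particles would be placed separately along the walls and the obstacle, whose layout the slide text does not give.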

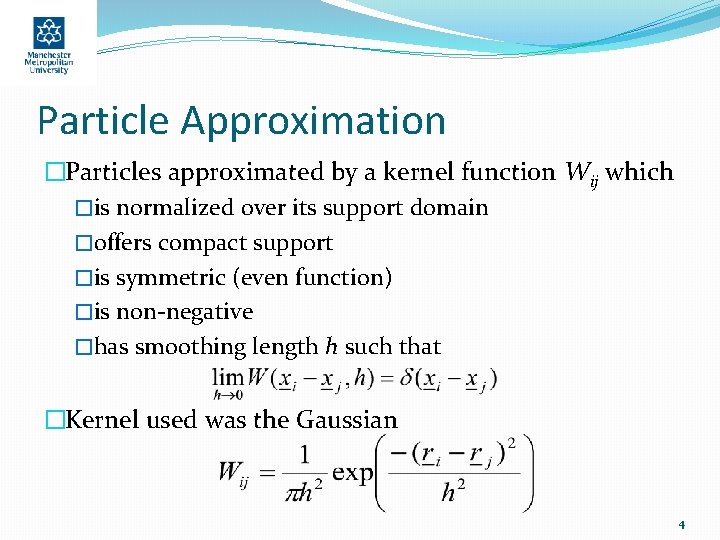

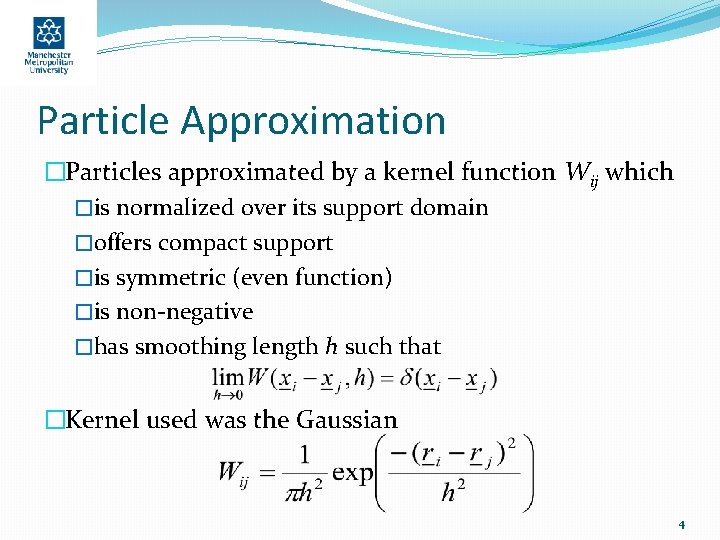

Particle Approximation

- Particles are approximated by a kernel function $W_{ij}$ which
  - is normalized over its support domain
  - offers compact support
  - is symmetric (an even function)
  - is non-negative
  - has a smoothing length $h$
- The kernel used was the Gaussian
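The slide names the Gaussian kernel, but its formula did not survive in the text. For reference, the standard two-dimensional Gaussian kernel is sketched below, assuming $r = |\mathbf{r}_i - \mathbf{r}_j|$ and the common convention in which $h$ scales the exponent directly. The authors may have used a different scaling.

$$
W_{ij} = W(r, h) = \frac{1}{\pi h^{2}} \exp\!\left(-\frac{r^{2}}{h^{2}}\right),
\qquad
\int_{\mathbb{R}^{2}} W \, dA
  = \int_{0}^{\infty} \frac{1}{\pi h^{2}} \, e^{-r^{2}/h^{2}} \, 2\pi r \, dr = 1 .
$$

Strictly, the Gaussian is positive everywhere, so compact support holds only approximately. Implementations usually truncate it at a few smoothing lengths; a cutoff of about $3h$ is a common choice, assumed here rather than taken from the slides.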

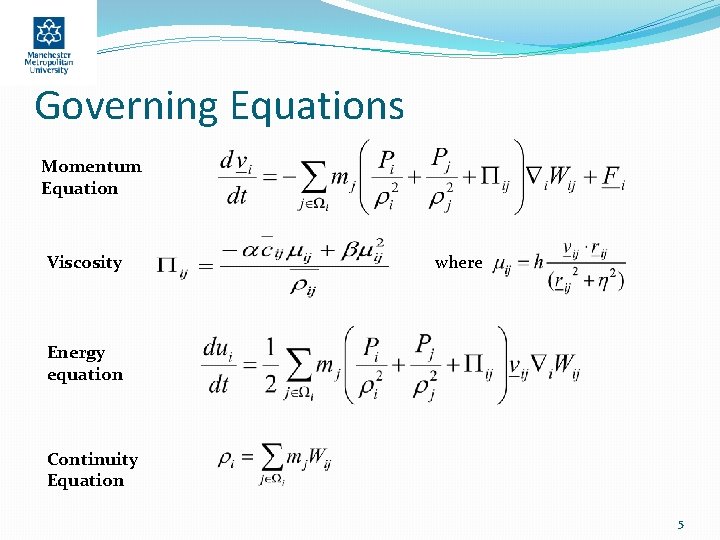

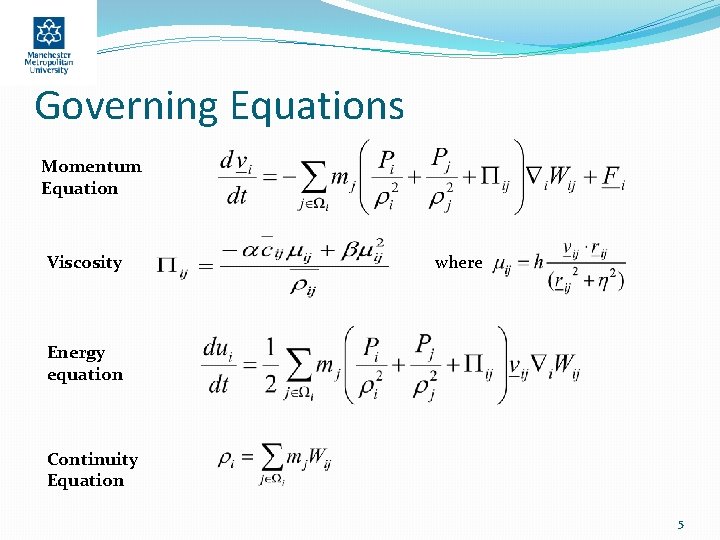

Governing Equations

- Momentum equation
- Viscosity
- Energy equation
- Continuity equation
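The equations themselves are not recoverable from the slide text. As a reference point only, the forms usually quoted from Monaghan (1994), the paper the algorithm is based on, are sketched here in the $i, j$ index notation of the kernel $W_{ij}$. The symbols $\alpha$ (viscosity coefficient), $\bar{c}_{ij}$ (mean sound speed), $\bar{\rho}_{ij}$ (mean density), $\mathbf{g}$ (gravity) and the small regularising term $0.01h^{2}$ are assumptions drawn from that standard formulation and may differ from what the authors showed.

$$
\begin{aligned}
\frac{d\mathbf{v}_i}{dt} &= -\sum_j m_j \left( \frac{P_i}{\rho_i^{2}} + \frac{P_j}{\rho_j^{2}} + \Pi_{ij} \right) \nabla_i W_{ij} + \mathbf{g}
&& \text{(momentum)} \\
\Pi_{ij} &=
\begin{cases}
-\dfrac{\alpha \, \bar{c}_{ij} \, \mu_{ij}}{\bar{\rho}_{ij}}, & \mathbf{v}_{ij} \cdot \mathbf{r}_{ij} < 0 \\
0, & \text{otherwise}
\end{cases}
\quad \text{where} \quad
\mu_{ij} = \frac{h \, \mathbf{v}_{ij} \cdot \mathbf{r}_{ij}}{r_{ij}^{2} + 0.01 h^{2}}
&& \text{(viscosity)} \\
\frac{de_i}{dt} &= \frac{1}{2} \sum_j m_j \left( \frac{P_i}{\rho_i^{2}} + \frac{P_j}{\rho_j^{2}} + \Pi_{ij} \right) \mathbf{v}_{ij} \cdot \nabla_i W_{ij}
&& \text{(energy)} \\
\frac{d\rho_i}{dt} &= \sum_j m_j \, \mathbf{v}_{ij} \cdot \nabla_i W_{ij}
&& \text{(continuity)}
\end{aligned}
$$

Here $\mathbf{v}_{ij} = \mathbf{v}_i - \mathbf{v}_j$, $\mathbf{r}_{ij} = \mathbf{r}_i - \mathbf{r}_j$ and $r_{ij} = |\mathbf{r}_{ij}|$.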

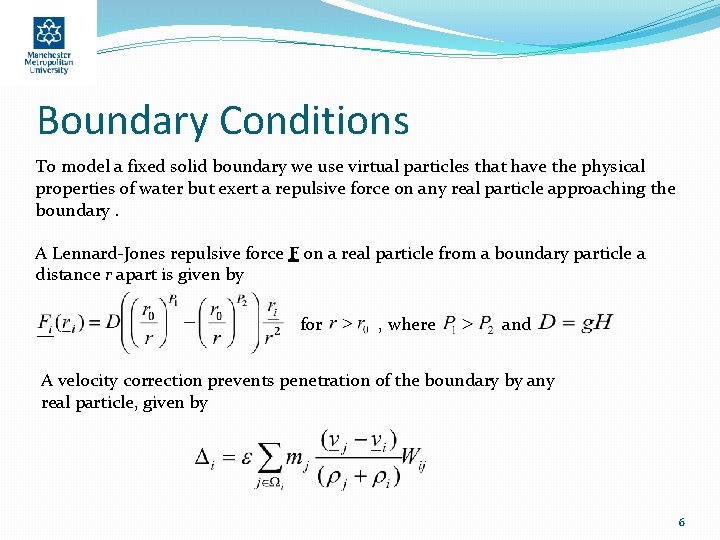

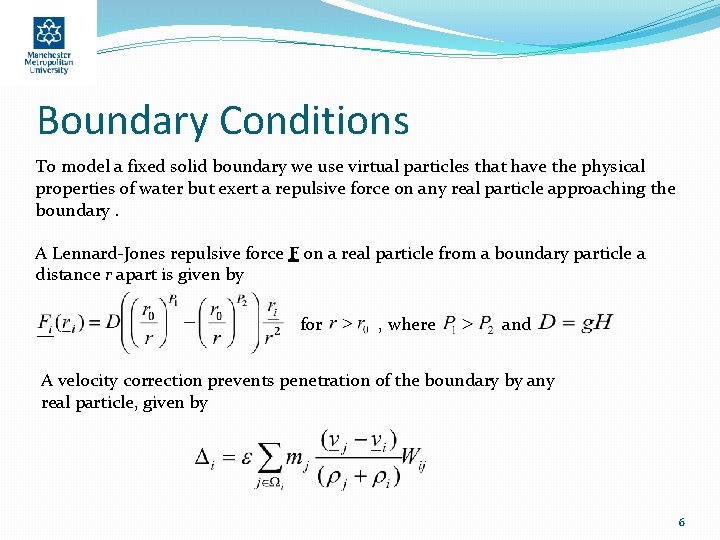

Boundary Conditions

To model a fixed solid boundary we use virtual particles that have the physical properties of water but exert a repulsive force on any real particle approaching the boundary. The force $F$ on a real particle from a boundary particle a distance $r$ away takes the Lennard-Jones form. A velocity correction prevents any real particle from penetrating the boundary.
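A Lennard-Jones style boundary force can be sketched in C as follows. This assumes the form used by Monaghan (1994), $\mathbf{f} = D\,[(r_0/r)^{p_1} - (r_0/r)^{p_2}]\,\mathbf{r}/r^{2}$ for $r < r_0$ and zero otherwise, with the exponents $p_1 = 4$ and $p_2 = 2$. The exponents, the cutoff `r0`, the strength `D` and the names `Vec2` and `lj_boundary_force` are assumptions for illustration; the values on the original slide are not recoverable.

```c
typedef struct { double x, y; } Vec2;

/*
 * Repulsive force on a real particle from one boundary particle.
 * d  : real particle position minus boundary particle position
 * r0 : cutoff distance; the force is zero at or beyond r0 (assumed parameter)
 * D  : force strength (assumed parameter)
 * Exponents p1 = 4 and p2 = 2 are assumed, so only r^2 is needed.
 */
Vec2 lj_boundary_force(Vec2 d, double r0, double D)
{
    Vec2 f = {0.0, 0.0};
    double r2 = d.x * d.x + d.y * d.y;

    /* Outside the cutoff, or exactly coincident: no force is applied. */
    if (r2 >= r0 * r0 || r2 == 0.0) {
        return f;
    }

    double q2 = (r0 * r0) / r2;              /* (r0/r)^2 */
    double scale = D * (q2 * q2 - q2) / r2;  /* D[(r0/r)^4 - (r0/r)^2] / r^2 */

    f.x = scale * d.x;   /* directed away from the boundary particle */
    f.y = scale * d.y;
    return f;
}
```

Because the force switches off at `r0`, it is purely repulsive: a real particle only feels the boundary once it comes closer than the cutoff.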

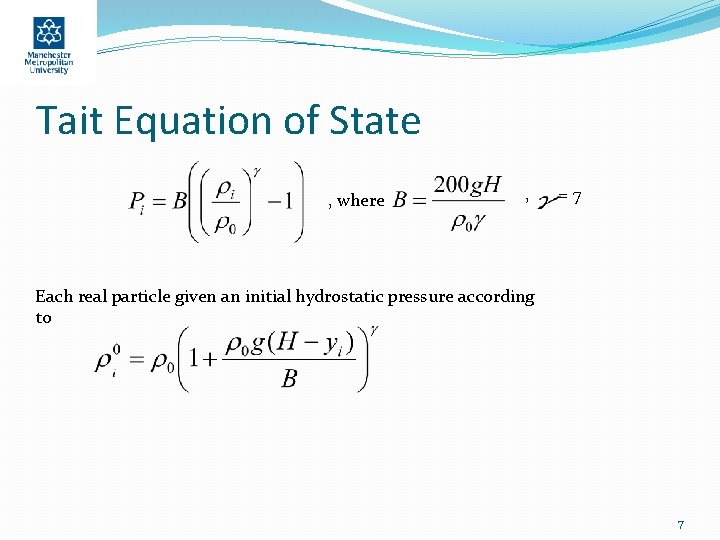

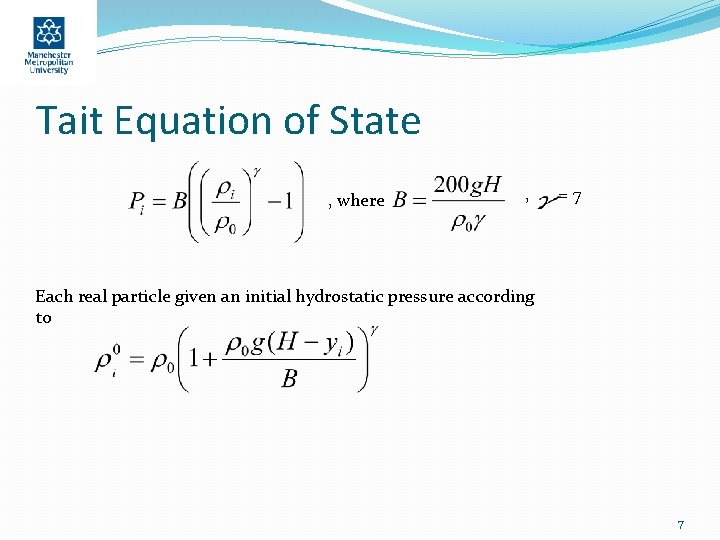

Tait Equation of State

- Pressure is computed from density with the Tait equation of state, with exponent $\gamma = 7$
- Each real particle is given an initial hydrostatic pressure
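A short C sketch of the equation of state follows, assuming the usual weakly compressible form $P = B\,[(\rho/\rho_0)^{\gamma} - 1]$ with $\gamma = 7$. The stiffness choice $B = \rho_0 c_0^{2}/\gamma$, the hydrostatic expression $\rho_0 g (H - y)$ and all function names are assumptions for illustration, since the slide's own expressions are missing from the text.

```c
#define GAMMA_EXP 7   /* Tait exponent (from the slide) */

/* Tait equation of state: P = B * ((rho / rho0)^7 - 1). */
double tait_pressure(double rho, double rho0, double B)
{
    double q = rho / rho0;
    double q2 = q * q;
    double q7 = q2 * q2 * q2 * q;   /* q^7 by repeated multiplication */
    return B * (q7 - 1.0);
}

/* Assumed stiffness B = rho0 * c0^2 / gamma, with c0 a numerical sound speed. */
double tait_stiffness(double rho0, double c0)
{
    return rho0 * c0 * c0 / GAMMA_EXP;
}

/* Assumed hydrostatic pressure at height y under a free surface at height H. */
double hydrostatic_pressure(double rho0, double g, double H, double y)
{
    return rho0 * g * (H - y);
}
```

At the reference density the pressure is zero, and the seventh power makes the pressure respond steeply to small density changes, which is what keeps the fluid nearly incompressible.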

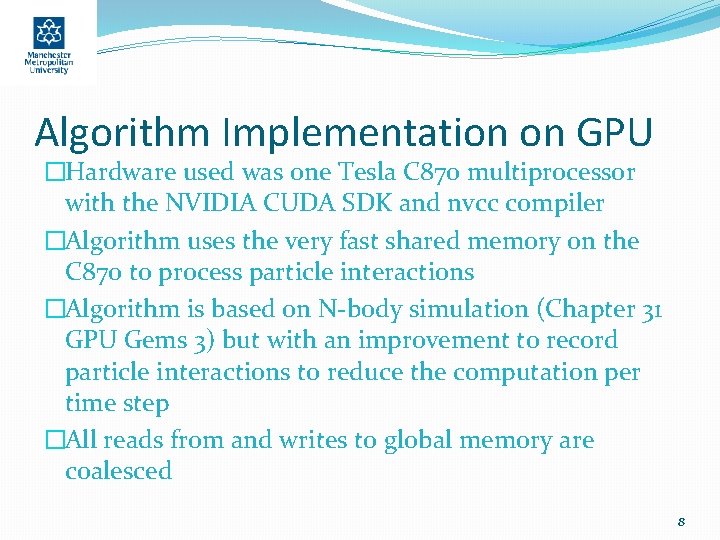

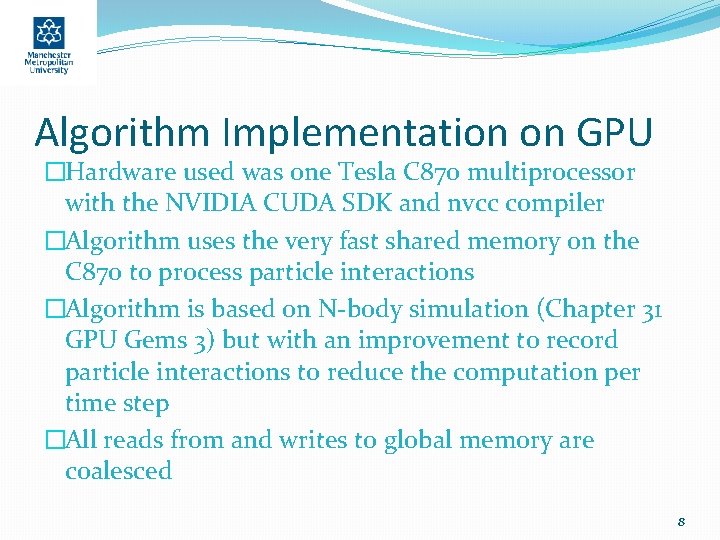

Algorithm Implementation on GPU

- Hardware used was one Tesla C870 multiprocessor with the NVIDIA CUDA SDK and nvcc compiler
- The algorithm uses the very fast shared memory on the C870 to process particle interactions
- The algorithm is based on the N-body simulation in Chapter 31 of GPU Gems 3, with an improvement that records particle interactions to reduce the computation per time step
- All reads from and writes to global memory are coalesced
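The slides do not say how particle interactions are recorded. One plausible reading, sketched here in serial C rather than CUDA, is to find each interacting pair once with an all-pairs sweep and store it, so that later passes in the same time step loop over the stored pairs instead of repeating the distance test. The `Pair` struct, the function `build_pair_list` and the `cutoff` parameter are hypothetical names, and this is not presented as the authors' GPU implementation.

```c
#include <stddef.h>

typedef struct { int i, j; } Pair;

/*
 * All-pairs sweep, as in an N-body calculation, that records every pair
 * (i < j) closer than `cutoff`. Writes at most max_pairs entries and
 * returns the number written.
 */
size_t build_pair_list(const double *x, const double *y, int n,
                       double cutoff, Pair *pairs, size_t max_pairs)
{
    const double cutoff2 = cutoff * cutoff;   /* compare squared distances */
    size_t count = 0;

    for (int i = 0; i < n; ++i) {
        for (int j = i + 1; j < n; ++j) {
            double dx = x[i] - x[j];
            double dy = y[i] - y[j];
            if (dx * dx + dy * dy < cutoff2) {
                if (count == max_pairs) {
                    return count;             /* list is full: stop early */
                }
                pairs[count].i = i;
                pairs[count].j = j;
                ++count;
            }
        }
    }
    return count;
}
```

On the GPU the sweep would be tiled through shared memory in the manner of the GPU Gems 3 N-body kernel; the serial version only shows the bookkeeping.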

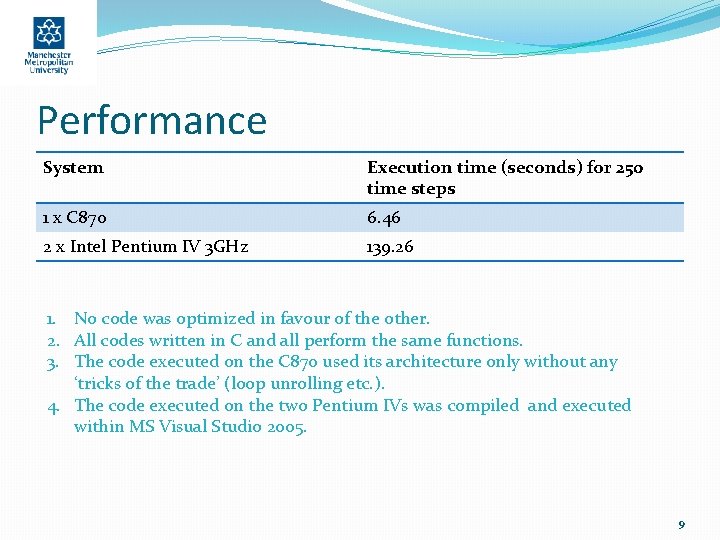

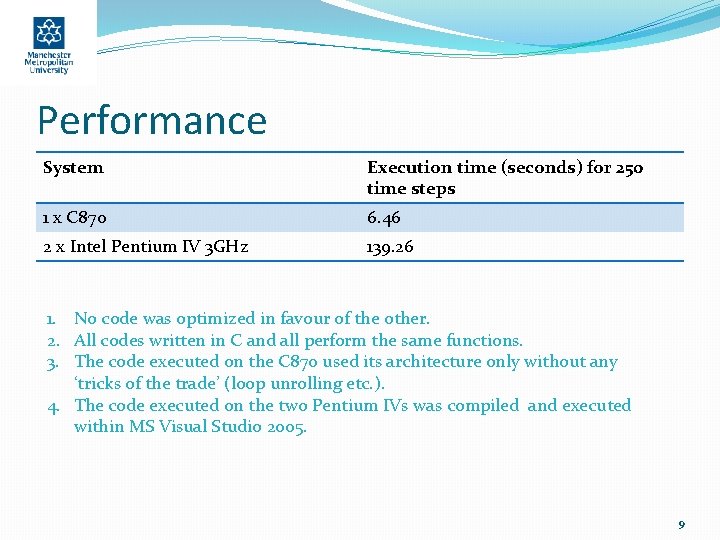

Performance

| System | Execution time for 250 time steps (seconds) |
|---|---|
| 1 x C870 | 6.46 |
| 2 x Intel Pentium IV 3 GHz | 139.26 |

1. No code was optimized in favour of the other.
2. All codes were written in C and all perform the same functions.
3. The code executed on the C870 used its architecture only, without any 'tricks of the trade' (loop unrolling etc.).
4. The code executed on the two Pentium IVs was compiled and executed within MS Visual Studio 2005.

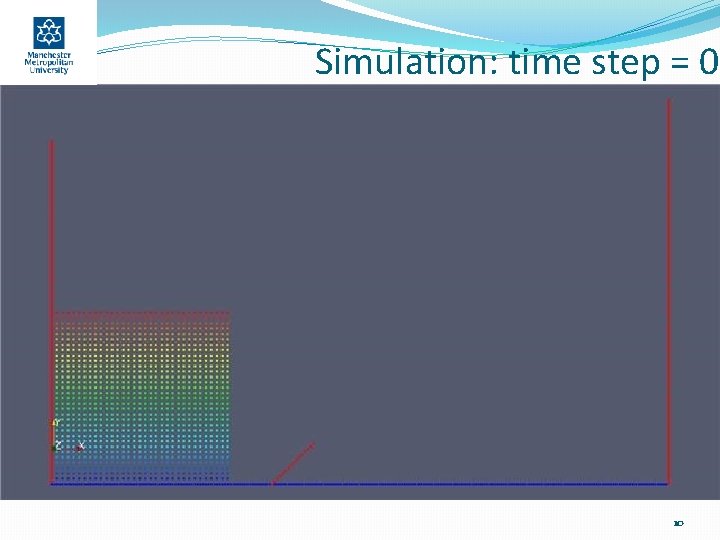

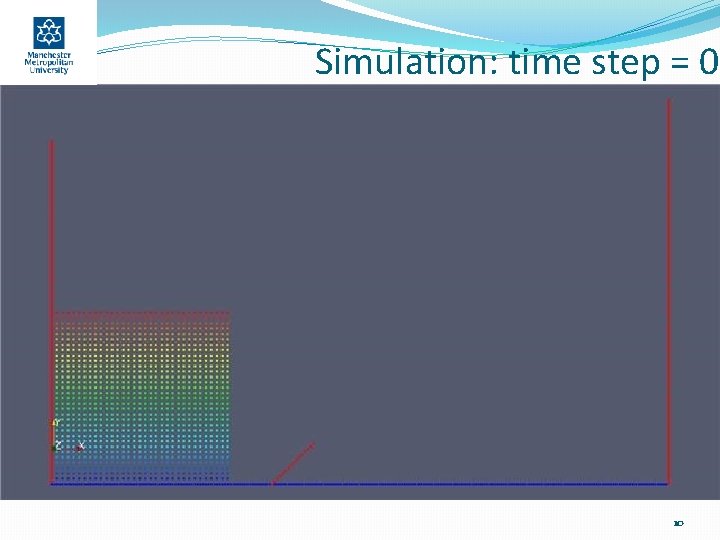

Simulation: time step = 0

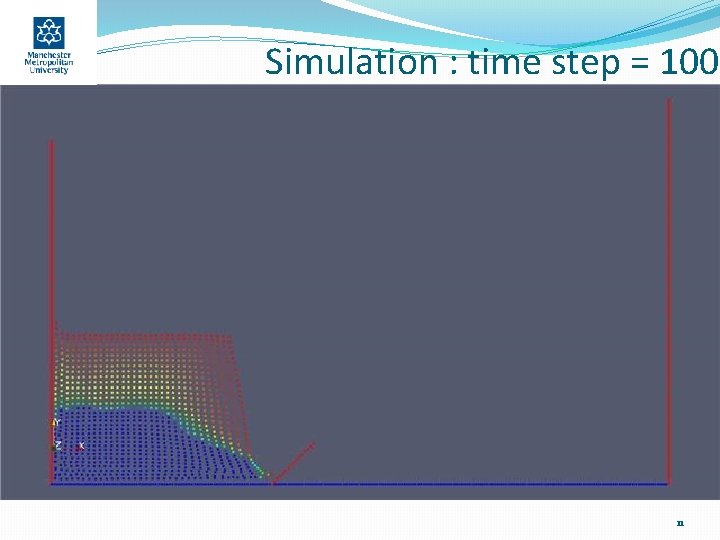

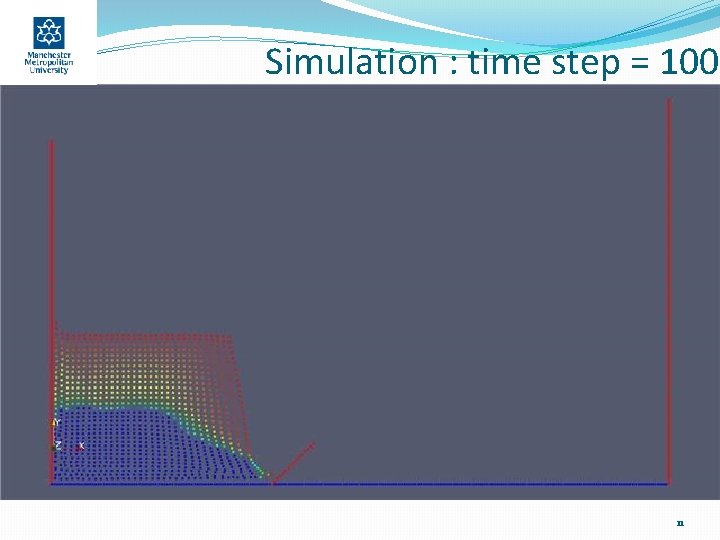

Simulation: time step = 100

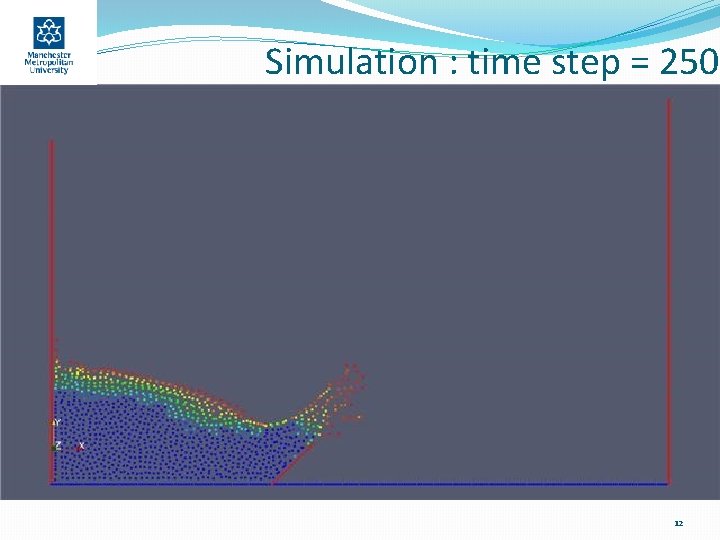

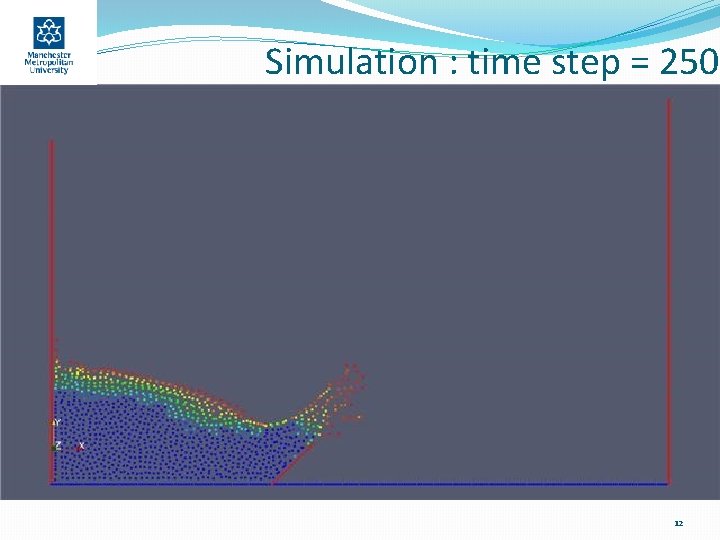

Simulation: time step = 250

Conclusions

- The NVIDIA C870 ran the 250-step benchmark about 21.6 times faster than the two Pentium IVs (6.46 s against 139.26 s).
- The algorithm is based on a mathematical formulation.
- Results are very realistic.

Future Work

- Implement more accurate and faster SPH algorithms
- Consider larger and different benchmark problems
- Use multiple GPUs