Final Exam Review: Page Replacement, CPU Scheduling, IPC

- Slides: 38

Paging Revisited
• If a page is not in physical memory
  – find the page on disk
  – find a free frame
  – bring the page into memory
• What if there is no free frame in memory?

Page Replacement
• Basic idea
  – if there is a free frame in memory, use it
  – if not, select a victim frame
  – write the victim out to disk
  – read the desired page into the now free frame
  – update page tables
  – restart the process

Page Replacement
• Main objective of a good replacement algorithm is to achieve a low page fault rate
  – ensure that heavily used pages stay in memory
  – the replaced page should not be needed for some time
• Secondary objective is to reduce latency of a page fault
  – efficient code
  – replace pages that do not need to be written out

First-In, First-Out (FIFO)
• The oldest page in physical memory is the one selected for replacement
• Very simple to implement
  – keep a list
    • victims are chosen from the tail
    • new pages are placed at the head

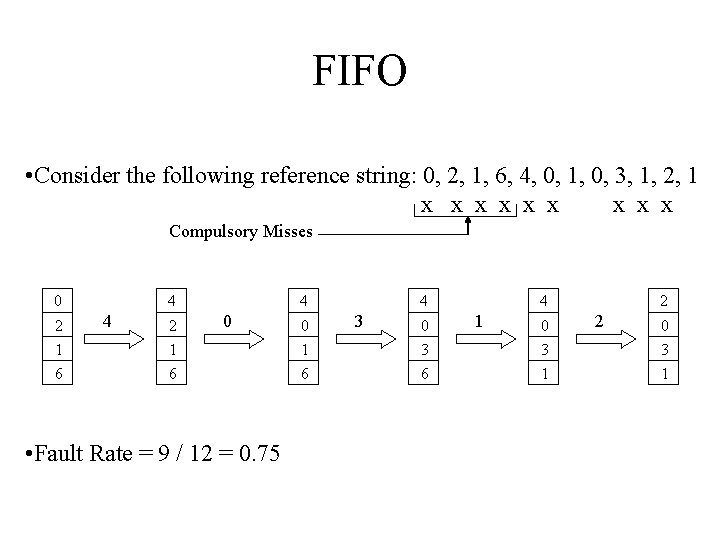

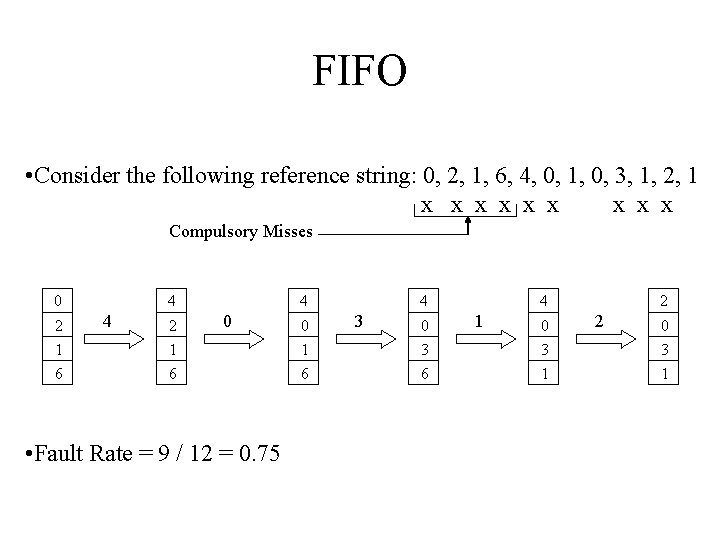

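FIFO
• Consider the following reference string: 0, 2, 1, 6, 4, 0, 1, 0, 3, 1, 2, 1
• Frame contents after each fault (4 frames; the first four references fill the empty frames and are compulsory misses)
  – refs 0, 2, 1, 6: 0 2 1 6
  – ref 4: 4 2 1 6
  – ref 0: 4 0 1 6
  – ref 3: 4 0 3 6
  – ref 1: 4 0 3 1
  – ref 2: 2 0 3 1
• Fault Rate = 9 / 12 = 0.75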
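The FIFO trace above can be checked with a short simulation. This is a minimal sketch rather than anything from the slides: the function name `fifo_faults` is hypothetical, and the frame count of 4 is an assumption inferred from the frame columns in the trace.

```python
from collections import deque

def fifo_faults(refs, num_frames):
    """Count page faults under FIFO replacement."""
    frames = deque()              # oldest resident page sits at the left
    faults = 0
    for page in refs:
        if page in frames:
            continue              # hit: FIFO order is not touched on a hit
        faults += 1
        if len(frames) == num_frames:
            frames.popleft()      # evict the page that has been resident longest
        frames.append(page)
    return faults

REFS = [0, 2, 1, 6, 4, 0, 1, 0, 3, 1, 2, 1]
NUM_FRAMES = 4                    # assumption: inferred from the slide's trace

faults = fifo_faults(REFS, NUM_FRAMES)
print(f"{faults} faults / {len(REFS)} references = {faults / len(REFS):.2f}")
```

Note that a hit never reorders the queue, which is exactly why FIFO can evict a heavily used page.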

FIFO Issues
• Poor replacement policy
• Evicts the oldest page in the system
  – usually a heavily used variable should be around for a long time
  – FIFO replaces the oldest page, perhaps the one with the heavily used variable
• FIFO does not consider page usage

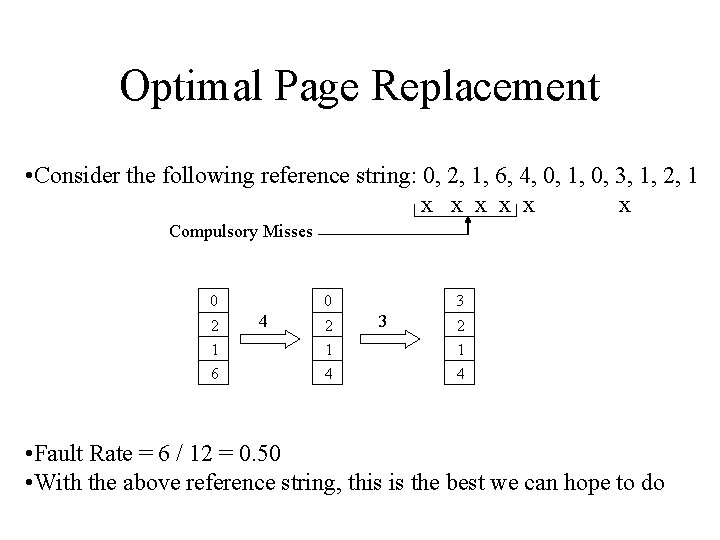

Optimal Page Replacement
• Often called Belady's MIN
• Basic idea
  – replace the page that will not be referenced for the longest time
• This gives the lowest possible fault rate
• Impossible to implement, because it requires knowledge of future references
• Does provide a good measure for other techniques

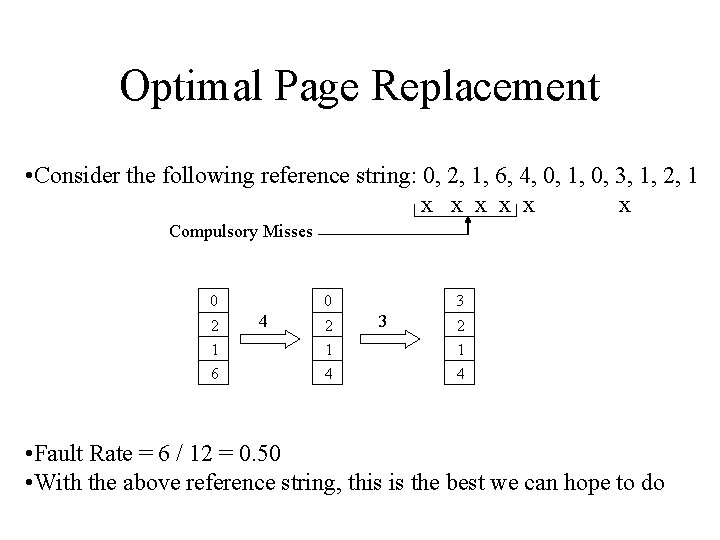

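Optimal Page Replacement
• Consider the following reference string: 0, 2, 1, 6, 4, 0, 1, 0, 3, 1, 2, 1
• Frame contents after each fault (4 frames; the first four references fill the empty frames and are compulsory misses)
  – refs 0, 2, 1, 6: 0 2 1 6
  – ref 4: 0 2 1 4
  – ref 3: 3 2 1 4
• Fault Rate = 6 / 12 = 0.50
• With the above reference string, this is the best we can hope to do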
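The optimal policy can only be simulated offline, where the whole reference string is known in advance. The sketch below is illustrative only: `opt_faults` is a hypothetical name, 4 frames is an assumption inferred from the trace, and ties between pages that are never used again are broken by frame order.

```python
def opt_faults(refs, num_frames):
    """Count page faults under the optimal (farthest-future-use) policy."""
    frames = []
    faults = 0
    for i, page in enumerate(refs):
        if page in frames:
            continue
        faults += 1
        if len(frames) < num_frames:
            frames.append(page)       # free frame available, no eviction
            continue
        future = refs[i + 1:]

        def next_use(p):
            # Distance to the next reference; never used again counts as infinity.
            return future.index(p) if p in future else float("inf")

        victim = max(frames, key=next_use)
        frames[frames.index(victim)] = page
    return faults

REFS = [0, 2, 1, 6, 4, 0, 1, 0, 3, 1, 2, 1]
print(opt_faults(REFS, 4), "faults out of", len(REFS))
```

The string contains six distinct pages, so no policy can do better than six faults.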

Least Recently Used (LRU)
• Basic idea
  – replace the page in memory that has not been accessed for the longest time
• Optimal policy looking back in time
  – as opposed to forward in time
  – fortunately, a program's recent past behavior tends to predict its near-future behavior

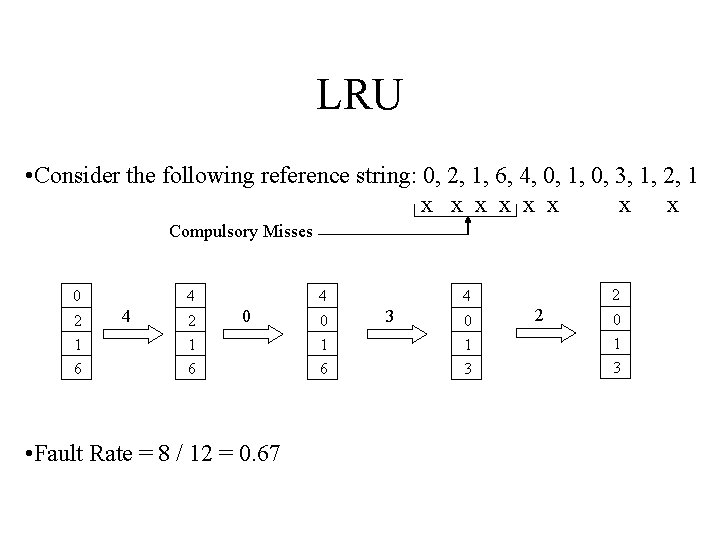

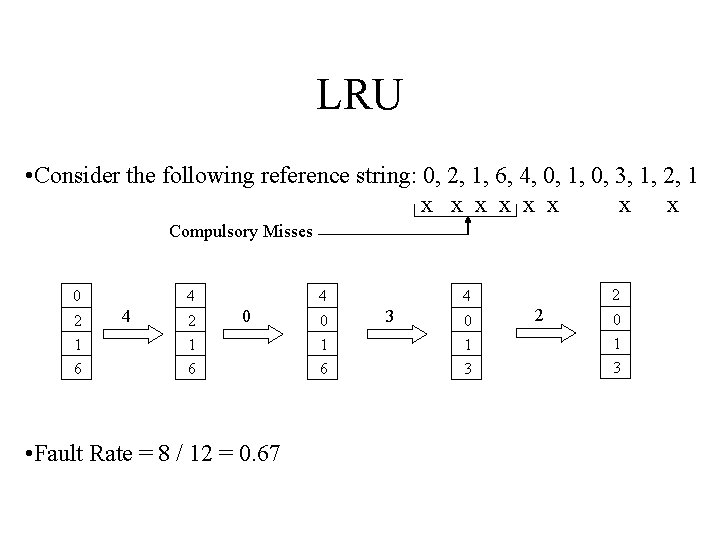

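LRU
• Consider the following reference string: 0, 2, 1, 6, 4, 0, 1, 0, 3, 1, 2, 1
• Frame contents after each fault (4 frames; the first four references fill the empty frames and are compulsory misses)
  – refs 0, 2, 1, 6: 0 2 1 6
  – ref 4: 4 2 1 6
  – ref 0: 4 0 1 6
  – ref 3: 4 0 1 3
  – ref 2: 2 0 1 3
• Fault Rate = 8 / 12 = 0.67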
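A software model of LRU makes the fault count easy to verify. The sketch below uses an ordered dictionary as the recency list; `lru_faults` is a hypothetical name and 4 frames is an assumption inferred from the trace. A real system cannot afford to run code like this on every memory reference.

```python
from collections import OrderedDict

def lru_faults(refs, num_frames):
    """Count page faults under LRU replacement."""
    frames = OrderedDict()                # least recently used page comes first
    faults = 0
    for page in refs:
        if page in frames:
            frames.move_to_end(page)      # hit: page becomes most recently used
            continue
        faults += 1
        if len(frames) == num_frames:
            frames.popitem(last=False)    # evict the least recently used page
        frames[page] = True
    return faults

REFS = [0, 2, 1, 6, 4, 0, 1, 0, 3, 1, 2, 1]
faults = lru_faults(REFS, 4)
print(f"{faults} faults / {len(REFS)} references = {faults / len(REFS):.2f}")
```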

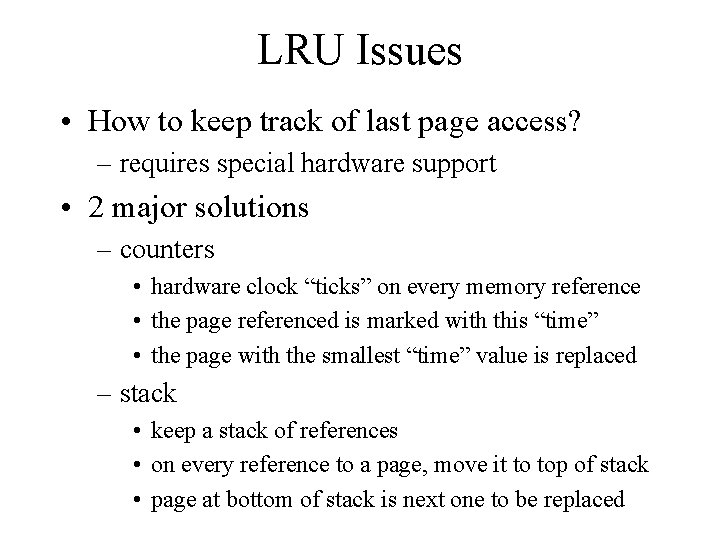

LRU Issues
• How to keep track of last page access?
  – requires special hardware support
• 2 major solutions
  – counters
    • hardware clock “ticks” on every memory reference
    • the page referenced is marked with this “time”
    • the page with the smallest “time” value is replaced
  – stack
    • keep a stack of references
    • on every reference to a page, move it to top of stack
    • page at bottom of stack is next one to be replaced

LRU Issues
• Both techniques just listed require additional hardware
  – remember, memory references are very common
  – impractical to invoke software on every memory reference
• LRU is not used very often
• Instead, we will try to approximate LRU

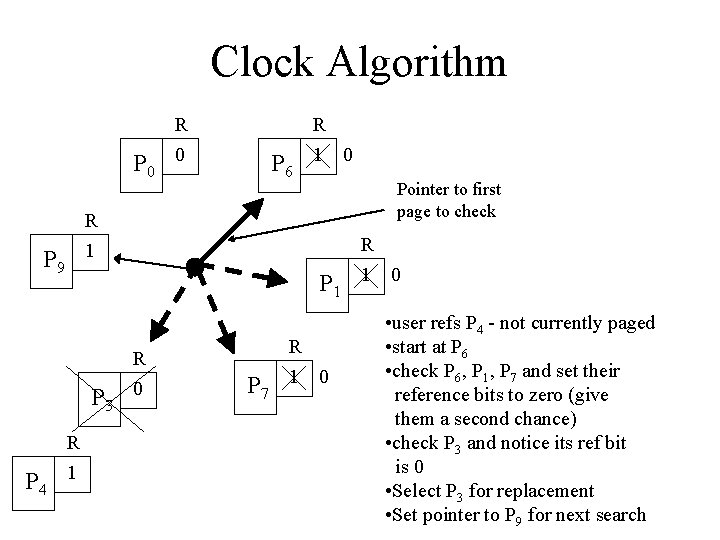

Clock Algorithm (Second Chance)
• On page fault, search through pages
• If a page’s reference bit is set to 1
  – set its reference bit to zero and skip it (give it a second chance)
• If a page’s reference bit is set to 0
  – select this page for replacement
• Always start the search from where the last search left off

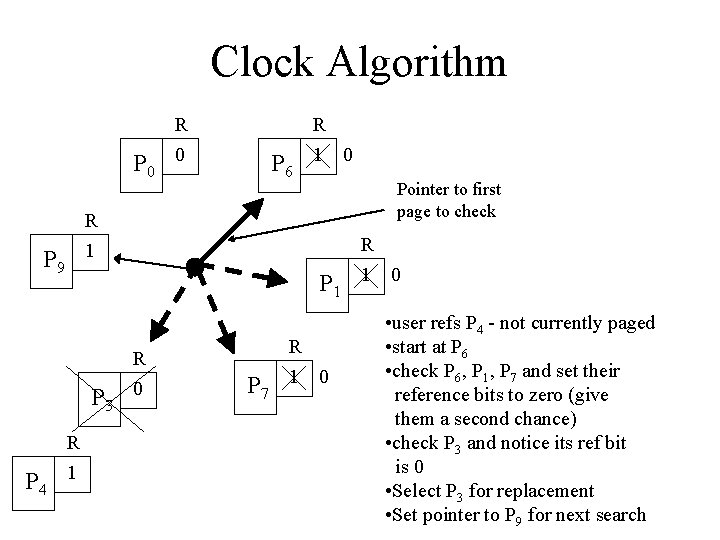

Clock Algorithm
[Figure: resident pages arranged in a circle, each with a reference bit R, and a pointer to the first page to check]
• User references P4, which is not currently in memory
• Start at P6
• Check P6, P1, P7 and set their reference bits to zero (give them a second chance)
• Check P3 and notice its ref bit is 0
• Select P3 for replacement
• Set pointer to P9 for next search
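The walkthrough above can be replayed in a few lines. This is a sketch under stated assumptions: the circular order P6, P1, P7, P3, P9 is taken from the order the bullets visit the pages, P9's reference bit is assumed to be 1, the incoming page's bit is assumed to start at 1, and `clock_select` is a hypothetical name.

```python
def clock_select(ref_bits, hand):
    """Advance the clock hand until a page with reference bit 0 is found.

    Pages passed over have their reference bit cleared (second chance).
    Returns (victim_index, next_hand_position).
    """
    n = len(ref_bits)
    while ref_bits[hand] == 1:
        ref_bits[hand] = 0            # second chance: clear the bit and move on
        hand = (hand + 1) % n
    return hand, (hand + 1) % n       # next search starts just past the victim

pages = ["P6", "P1", "P7", "P3", "P9"]   # assumed circular order
ref_bits = [1, 1, 1, 0, 1]               # P9's bit is an assumption

victim, hand = clock_select(ref_bits, hand=0)
evicted = pages[victim]
pages[victim] = "P4"                     # load the faulting page into the freed frame
ref_bits[victim] = 1                     # assumption: a freshly loaded page counts as referenced
print("evicted", evicted, "next search starts at", pages[hand])
```

The loop always terminates: if every bit is 1, one full lap clears them all and the starting page is selected.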

CPU SCHEDULING: Scheduling Algorithms
FIRST-COME, FIRST-SERVED (FCFS):
· Same as FIFO.
· Simple, fair, but poor performance. Average queueing time may be long.
· What are the average queueing and residence times for this scenario?
· How do average queueing and residence times depend on the ordering of these processes in the queue?

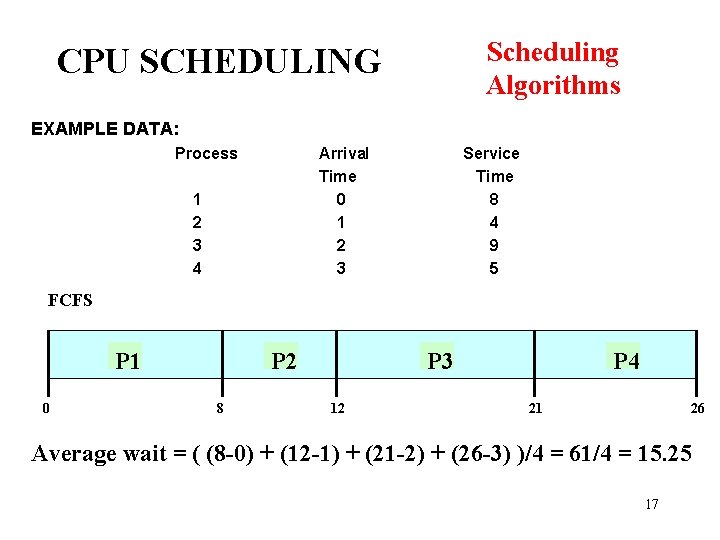

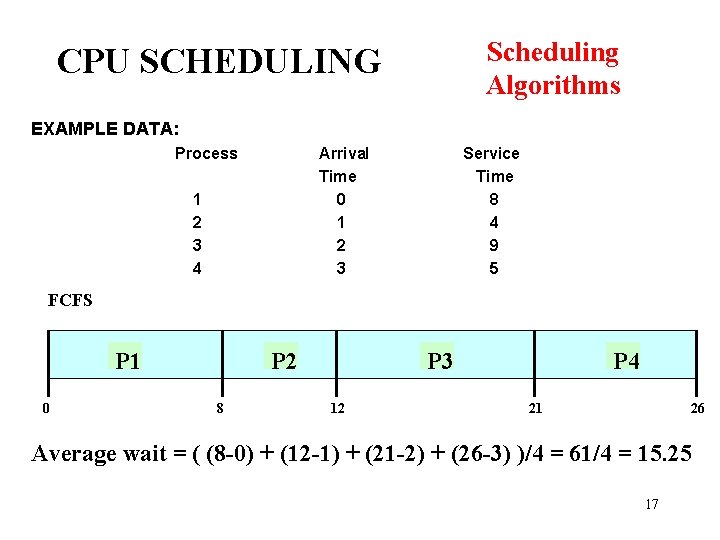

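CPU SCHEDULING: Scheduling Algorithms
EXAMPLE DATA:

| Process | Arrival Time | Service Time |
|---------|--------------|--------------|
| 1       | 0            | 8            |
| 2       | 1            | 4            |
| 3       | 2            | 9            |
| 4       | 3            | 5            |

FCFS schedule: P1 (0 to 8), P2 (8 to 12), P3 (12 to 21), P4 (21 to 26)

Average wait = ( (8 - 0) + (12 - 1) + (21 - 2) + (26 - 3) ) / 4 = 61 / 4 = 15.25

(Each term is completion time minus arrival time, so this figure is the average residence time; it includes service time as well as time spent queueing.)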
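The FCFS figures can be reproduced with a short calculation that separates queueing time (start minus arrival) from residence time (completion minus arrival). This is a minimal sketch; the function name `fcfs` and the tuple layout are illustrative choices, not part of the slides.

```python
def fcfs(processes):
    """processes: list of (name, arrival, service), sorted by arrival time."""
    clock = 0
    results = {}
    for name, arrival, service in processes:
        start = max(clock, arrival)       # wait for the CPU, or for arrival if idle
        finish = start + service
        results[name] = {
            "queueing": start - arrival,   # time spent waiting in the ready queue
            "residence": finish - arrival, # queueing time plus service time
        }
        clock = finish
    return results

PROCS = [("P1", 0, 8), ("P2", 1, 4), ("P3", 2, 9), ("P4", 3, 5)]
r = fcfs(PROCS)
avg_residence = sum(v["residence"] for v in r.values()) / len(r)
avg_queueing = sum(v["queueing"] for v in r.values()) / len(r)
print("average residence:", avg_residence)
print("average queueing:", avg_queueing)
```

Reordering `PROCS` so that short jobs come first (and adjusting arrivals accordingly) is a quick way to explore how the averages depend on queue order.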

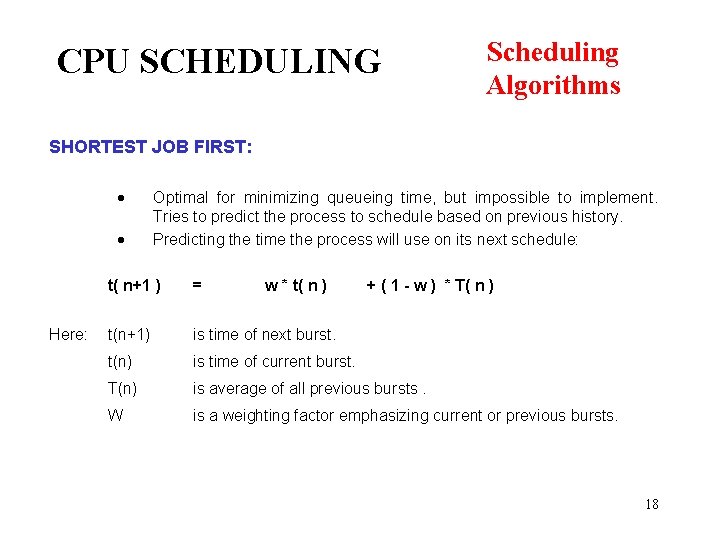

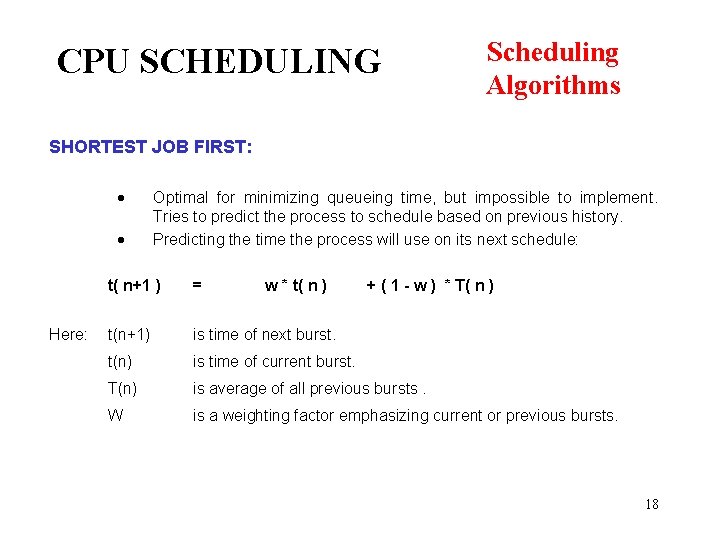

CPU SCHEDULING: Scheduling Algorithms
SHORTEST JOB FIRST:
· Optimal for minimizing queueing time, but impossible to implement exactly, because the length of a process's next CPU burst is not known in advance.
· Tries to predict the process to schedule based on previous history.
· Predicting the time the process will use on its next schedule:
  t(n+1) = w * t(n) + (1 - w) * T(n)
  Here:
  – t(n+1) is time of next burst.
  – t(n) is time of current burst.
  – T(n) is average of all previous bursts.
  – w is a weighting factor emphasizing current or previous bursts.
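A small worked example of the prediction formula may help. The sketch below assumes that T(n) averages the bursts before the current one, and that with a single observed burst the prediction is simply that burst; both choices, the function name `predict_next_burst`, and the burst values are illustrative.

```python
def predict_next_burst(bursts, w):
    """Apply t(n+1) = w * t(n) + (1 - w) * T(n) to a burst history."""
    t_n = bursts[-1]                       # current (most recent) burst
    previous = bursts[:-1]                 # assumption: T(n) excludes the current burst
    T_n = sum(previous) / len(previous) if previous else t_n
    return w * t_n + (1 - w) * T_n

history = [6, 4, 6, 4, 13]                 # hypothetical burst lengths
# T(n) = (6 + 4 + 6 + 4) / 4 = 5, t(n) = 13
print(predict_next_burst(history, w=0.5))  # 0.5 * 13 + 0.5 * 5
print(predict_next_burst(history, w=1.0))  # only the current burst counts
print(predict_next_burst(history, w=0.0))  # only the earlier history counts
```

A value of w near 1 reacts quickly to a change in behavior; a value near 0 smooths out a single unusual burst.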

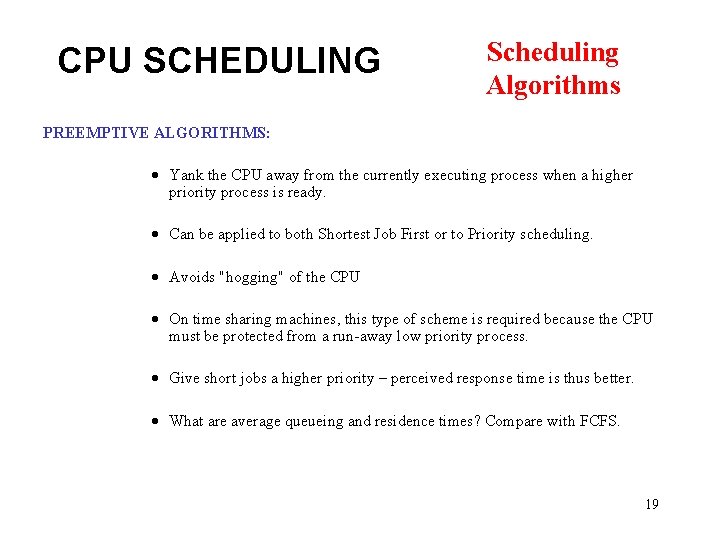

CPU SCHEDULING: Scheduling Algorithms
PREEMPTIVE ALGORITHMS:
· Yank the CPU away from the currently executing process when a higher priority process is ready.
· Can be applied to either Shortest Job First or Priority scheduling.
· Avoids "hogging" of the CPU.
· On time sharing machines, this type of scheme is required because the CPU must be protected from a run-away low priority process.
· Give short jobs a higher priority; perceived response time is thus better.
· What are average queueing and residence times? Compare with FCFS.

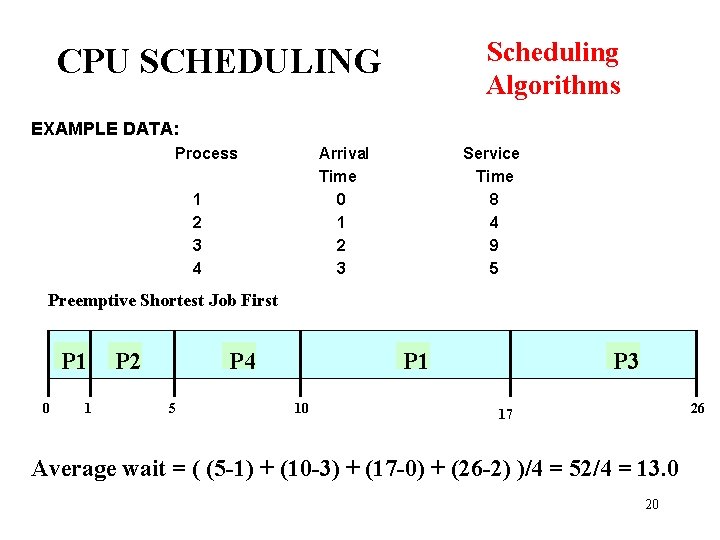

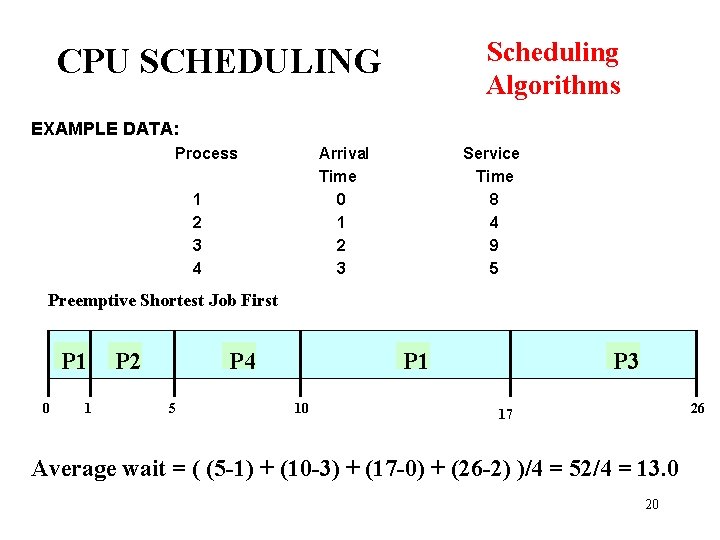

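CPU SCHEDULING: Scheduling Algorithms
EXAMPLE DATA:

| Process | Arrival Time | Service Time |
|---------|--------------|--------------|
| 1       | 0            | 8            |
| 2       | 1            | 4            |
| 3       | 2            | 9            |
| 4       | 3            | 5            |

Preemptive Shortest Job First schedule: P1 (0 to 1), P2 (1 to 5), P4 (5 to 10), P1 (10 to 17), P3 (17 to 26)

Average wait = ( (5 - 1) + (10 - 3) + (17 - 0) + (26 - 2) ) / 4 = 52 / 4 = 13.0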
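The preemptive schedule above can be derived by simulating one time unit at a time and always running the ready process with the least remaining service time. This is a sketch only: `srtf` is a hypothetical name, and ties are broken by the order in which processes are listed.

```python
def srtf(processes):
    """Preemptive shortest job first; returns completion time per process."""
    remaining = {name: service for name, _, service in processes}
    arrival = {name: arr for name, arr, _ in processes}
    finish = {}
    clock = 0
    while remaining:
        ready = [n for n in remaining if arrival[n] <= clock]
        if not ready:
            clock += 1                     # CPU idle until something arrives
            continue
        current = min(ready, key=lambda n: remaining[n])
        remaining[current] -= 1            # run the chosen process for one time unit
        clock += 1
        if remaining[current] == 0:
            del remaining[current]
            finish[current] = clock
    return finish

PROCS = [("P1", 0, 8), ("P2", 1, 4), ("P3", 2, 9), ("P4", 3, 5)]
finish = srtf(PROCS)
avg = sum(finish[name] - arr for name, arr, _ in PROCS) / len(PROCS)
print(finish)
print("average (completion - arrival):", avg)
```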

CPU SCHEDULING: Scheduling Algorithms
PRIORITY BASED SCHEDULING:
· Assign each process a priority. Schedule highest priority first. All processes within same priority are FCFS.
· Priority may be determined by user or by some default mechanism. The system may determine the priority based on memory requirements, time limits, or other resource usage.
· Starvation occurs if a low priority process never runs. Solution: build aging into a variable priority.
· Delicate balance between giving favorable response for interactive jobs, but not starving batch jobs.

CPU SCHEDULING: Scheduling Algorithms
ROUND ROBIN:
· Use a timer to cause an interrupt after a predetermined time. Preempts if task exceeds its quantum.
· Train of events
  – Dispatch
  – Time slice occurs OR process suspends on event
  – Put process on some queue and dispatch next
· Use numbers in last example to find queueing and residence times. (Use quantum = 4 sec.)
· Definitions:
  – Context Switch: Changing the processor from running one task (or process) to another. Implies changing memory.
  – Processor Sharing: Use of a small quantum such that each process runs frequently at speed 1/n.
  – Reschedule latency: How long it takes from when a process requests to run, until it finally gets control of the CPU.

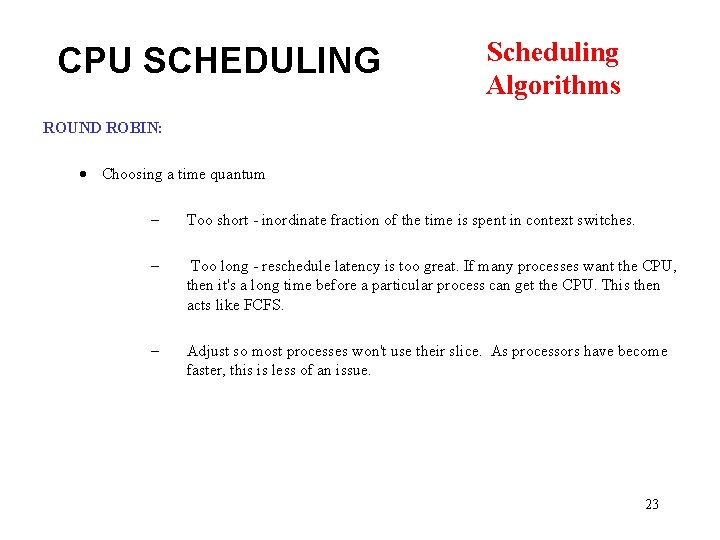

CPU SCHEDULING: Scheduling Algorithms
ROUND ROBIN:
· Choosing a time quantum
  – Too short: inordinate fraction of the time is spent in context switches.
  – Too long: reschedule latency is too great. If many processes want the CPU, then it's a long time before a particular process can get the CPU. This then acts like FCFS.
  – Adjust so most processes won't use their slice. As processors have become faster, this is less of an issue.

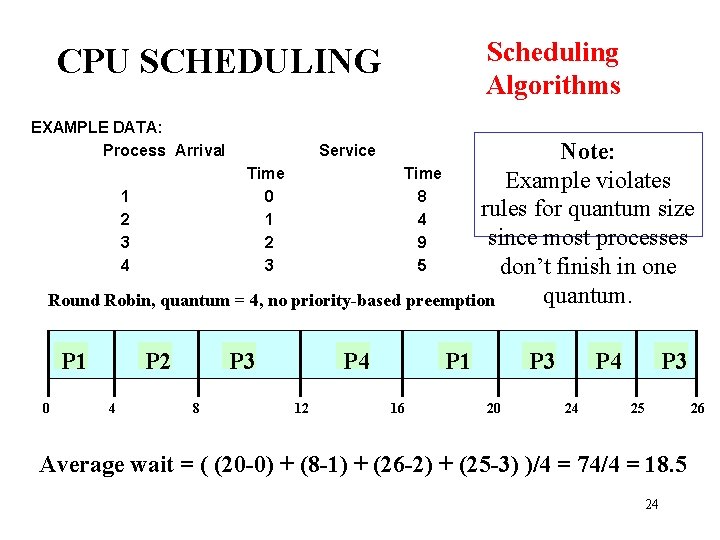

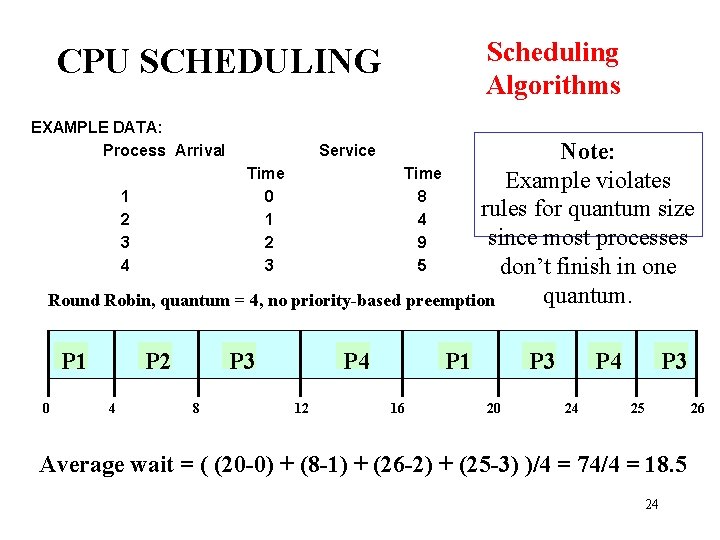

CPU SCHEDULING: Scheduling Algorithms
EXAMPLE DATA:

| Process | Arrival Time | Service Time |
|---------|--------------|--------------|
| 1       | 0            | 8            |
| 2       | 1            | 4            |
| 3       | 2            | 9            |
| 4       | 3            | 5            |

Note: Example violates rules for quantum size since most processes don’t finish in one quantum.

Round Robin, quantum = 4, no priority-based preemption: P1 (0 to 4), P2 (4 to 8), P3 (8 to 12), P4 (12 to 16), P1 (16 to 20), P3 (20 to 24), P4 (24 to 25), P3 (25 to 26)

Average wait = ( (20 - 0) + (8 - 1) + (26 - 2) + (25 - 3) ) / 4 = 73 / 4 = 18.25
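The round robin timeline can be checked with a queue-based simulation. The four terms (completion minus arrival) are 20, 7, 24 and 22, which sum to 73, so the average is 18.25. The sketch assumes that a process arriving during a time slice joins the ready queue ahead of the process being preempted, and `round_robin` is a hypothetical name.

```python
from collections import deque

def round_robin(processes, quantum):
    """processes: list of (name, arrival, service), sorted by arrival time."""
    pending = deque(processes)          # processes that have not arrived yet
    ready = deque()                     # ready queue of process names
    remaining, finish = {}, {}
    clock = 0

    def admit(now):
        # Move every process that has arrived by `now` into the ready queue.
        while pending and pending[0][1] <= now:
            name, _, service = pending.popleft()
            remaining[name] = service
            ready.append(name)

    while pending or ready:
        admit(clock)
        if not ready:
            clock = pending[0][1]       # CPU idle until the next arrival
            continue
        name = ready.popleft()
        run = min(quantum, remaining[name])
        clock += run
        remaining[name] -= run
        admit(clock)                    # assumption: new arrivals queue before the preempted process
        if remaining[name] == 0:
            finish[name] = clock
        else:
            ready.append(name)
    return finish

PROCS = [("P1", 0, 8), ("P2", 1, 4), ("P3", 2, 9), ("P4", 3, 5)]
finish = round_robin(PROCS, quantum=4)
avg = sum(finish[name] - arr for name, arr, _ in PROCS) / len(PROCS)
print(finish)
print("average (completion - arrival):", avg)
```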

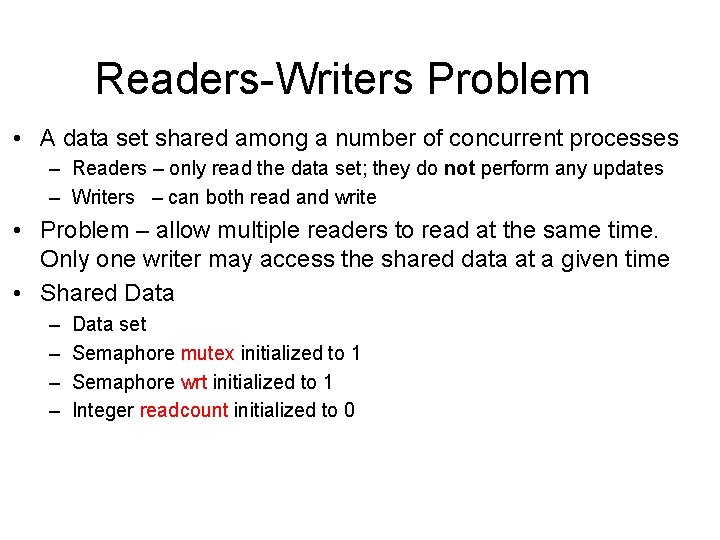

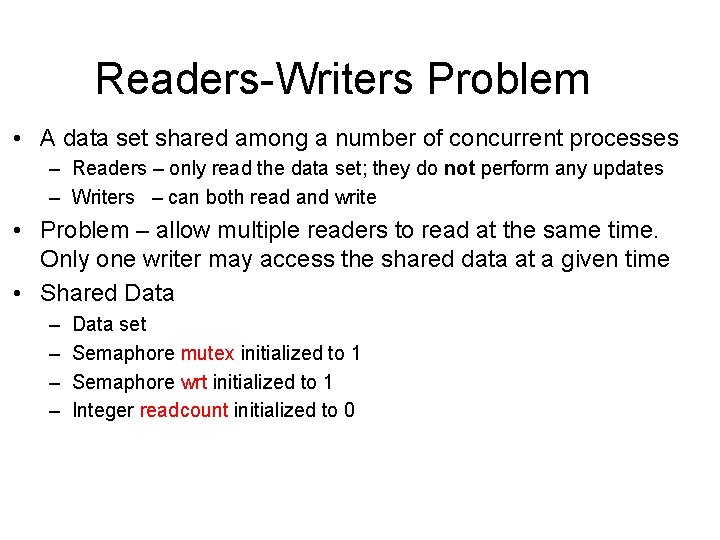

Readers-Writers Problem
• A data set shared among a number of concurrent processes
  – Readers: only read the data set; they do not perform any updates
  – Writers: can both read and write
• Problem: allow multiple readers to read at the same time. Only one writer may access the shared data at a given time
• Shared Data
  – Data set
  – Semaphore mutex initialized to 1
  – Semaphore wrt initialized to 1
  – Integer readcount initialized to 0

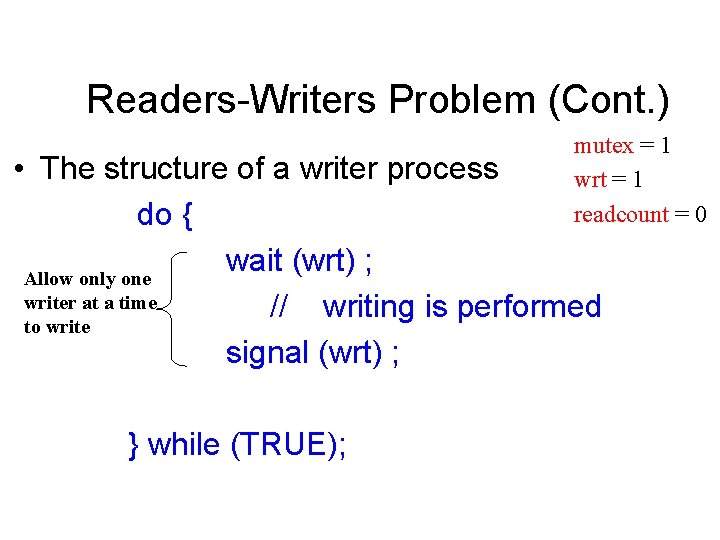

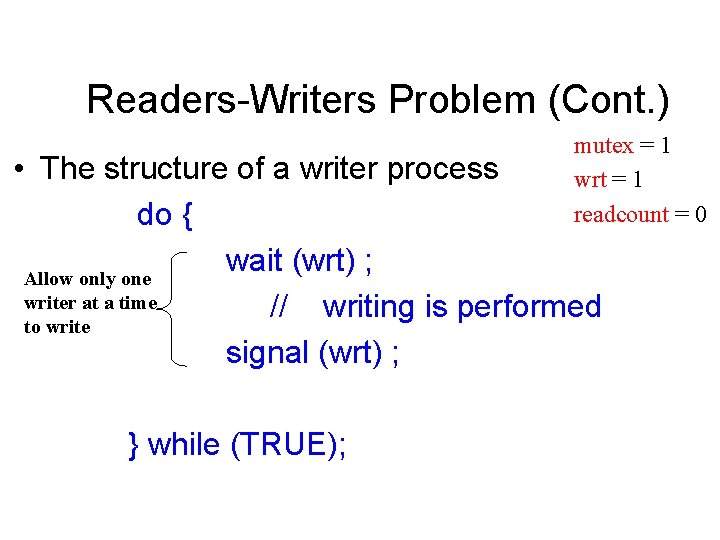

Readers-Writers Problem (Cont.)
• Initial values: mutex = 1, wrt = 1, readcount = 0
• The structure of a writer process

    do {
        wait (wrt);      // allow only one writer at a time to write
        // writing is performed
        signal (wrt);
    } while (TRUE);

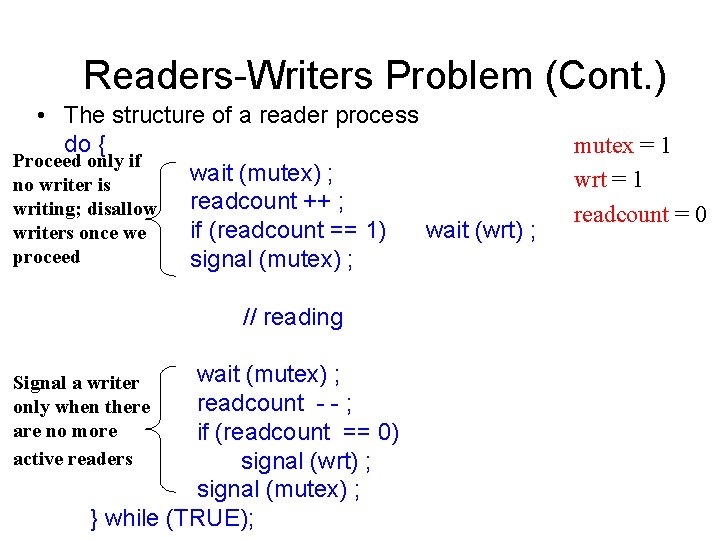

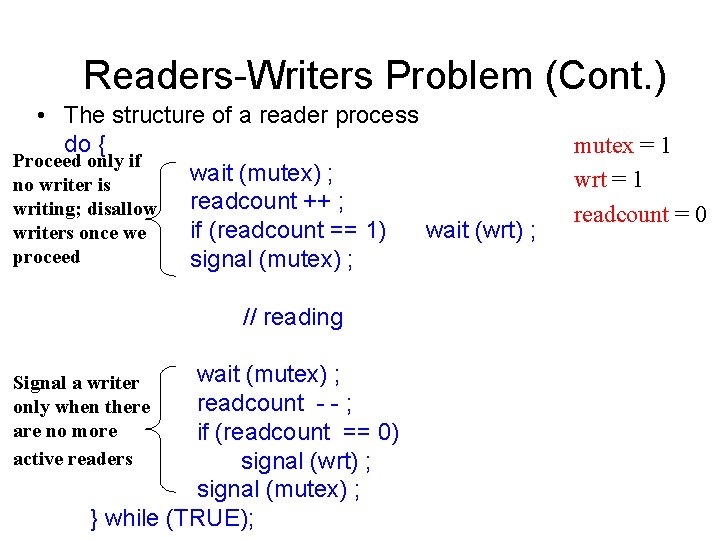

Readers-Writers Problem (Cont.)
• Initial values: mutex = 1, wrt = 1, readcount = 0
• The structure of a reader process

    do {
        wait (mutex);
        readcount++;
        if (readcount == 1)
            wait (wrt);      // proceed only if no writer is writing; disallow writers once we proceed
        signal (mutex);
        // reading is performed
        wait (mutex);
        readcount--;
        if (readcount == 0)
            signal (wrt);    // signal a writer only when there are no more active readers
        signal (mutex);
    } while (TRUE);
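The reader and writer structures above translate almost line for line into Python's `threading.Semaphore`, with `acquire` for wait and `release` for signal. This is a minimal sketch: the shared counter, the `observed` list, the thread counts and the iteration counts are all illustrative assumptions.

```python
import threading

mutex = threading.Semaphore(1)    # protects readcount
wrt = threading.Semaphore(1)      # held by one writer, or by the readers as a group
state = {"readcount": 0}
shared = {"value": 0}             # hypothetical shared data set
observed = []                     # values seen by readers

def writer(rounds):
    for _ in range(rounds):
        wrt.acquire()             # allow only one writer at a time
        shared["value"] += 1      # writing is performed
        wrt.release()

def reader(rounds):
    for _ in range(rounds):
        mutex.acquire()
        state["readcount"] += 1
        if state["readcount"] == 1:
            wrt.acquire()         # first reader locks writers out
        mutex.release()

        observed.append(shared["value"])   # reading is performed

        mutex.acquire()
        state["readcount"] -= 1
        if state["readcount"] == 0:
            wrt.release()         # last reader lets writers back in
        mutex.release()

threads = [threading.Thread(target=writer, args=(100,)) for _ in range(2)]
threads += [threading.Thread(target=reader, args=(100,)) for _ in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(shared["value"], len(observed))
```

Because a steady stream of readers can keep `readcount` above zero indefinitely, a writer may wait forever in this version.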

Readers/Writers
• Note that starvation is said to occur only when a process, despite getting CPU, does not get to make use of it
  – Only writers are starved, not readers
• Can we eliminate starvation for writers?

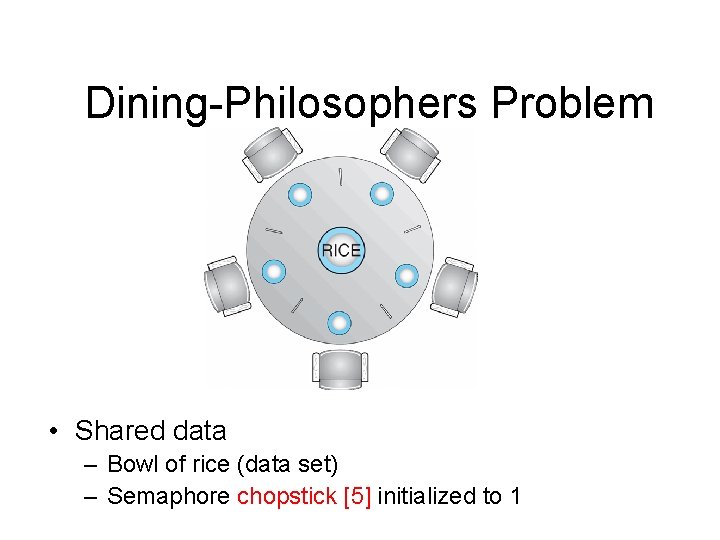

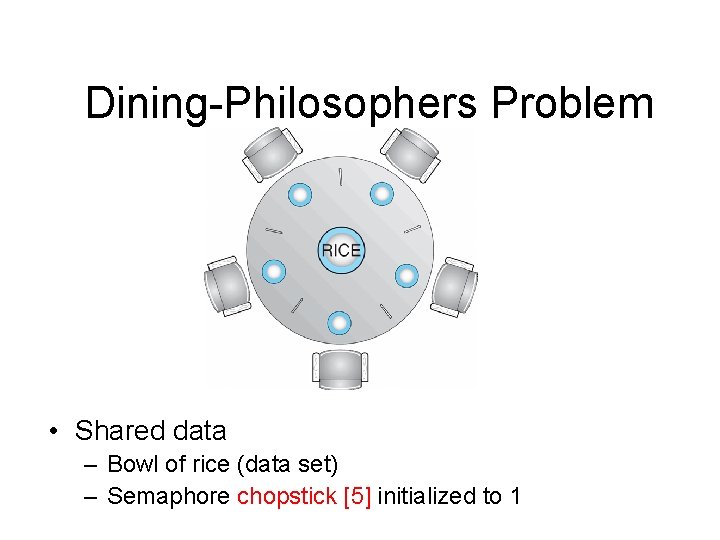

Dining-Philosophers Problem • Shared data – Bowl of rice (data set) – Semaphore chopstick [5] initialized to 1

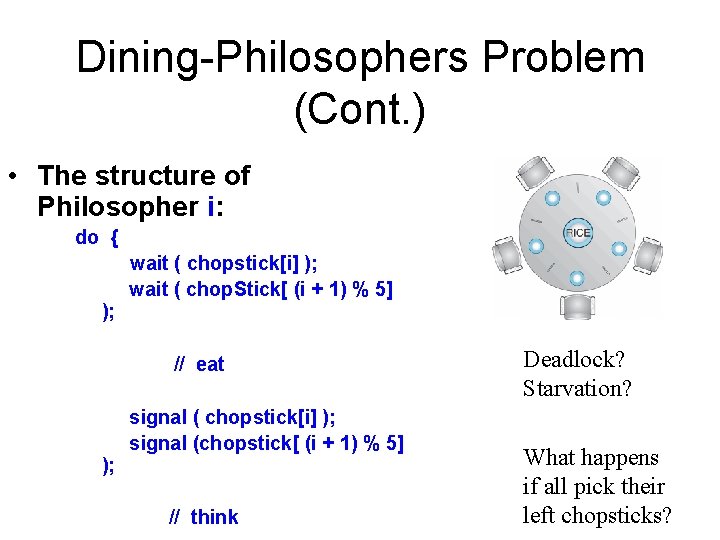

Dining-Philosophers Problem (Cont.)
• The structure of Philosopher i:

    do {
        wait ( chopstick[i] );
        wait ( chopstick[ (i + 1) % 5] );
        // eat
        signal ( chopstick[i] );
        signal ( chopstick[ (i + 1) % 5] );
        // think
    } while (TRUE);

• Deadlock? Starvation?
• What happens if all pick their left chopsticks?

Dining-Philosophers Problem (Cont.)
• Idea #1: Make the two wait operations occur together as an atomic unit (and the two signal operations)
  – Use another binary semaphore for this
  – Any problems with this?

Dining-Philosophers Problem (Cont.)
• Idea #2: Does it help if one of the neighbors picks their left chopstick first and the other picks their right chopstick first?
• What is the largest number of philosophers that can eat simultaneously?
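One way to experiment with Idea #2 is to let even-numbered philosophers pick up their left chopstick first and odd-numbered philosophers their right. The sketch below is one possible reading of the idea, not the slides' own solution; the parity rule, the `meals` counter and the round count are assumptions. With this ordering, chopsticks 1 and 3 are never anyone's first pick, so no circular wait can form.

```python
import threading

N = 5
ROUNDS = 50                                   # hypothetical number of meals each
chopstick = [threading.Semaphore(1) for _ in range(N)]
meals = [0] * N

def philosopher(i):
    left, right = i, (i + 1) % N
    # Assumption: even philosophers go left first, odd philosophers right first.
    first, second = (left, right) if i % 2 == 0 else (right, left)
    for _ in range(ROUNDS):
        chopstick[first].acquire()
        chopstick[second].acquire()
        meals[i] += 1                         # eat
        chopstick[second].release()
        chopstick[first].release()
        # think

threads = [threading.Thread(target=philosopher, args=(i,)) for i in range(N)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(meals)
```

Swapping the parity rule for "everyone picks left first" reproduces the original structure, which can deadlock when all five hold their left chopstick.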

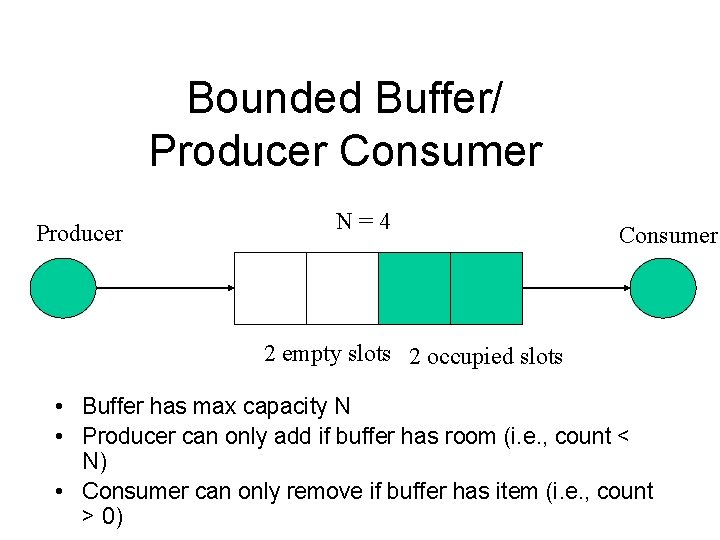

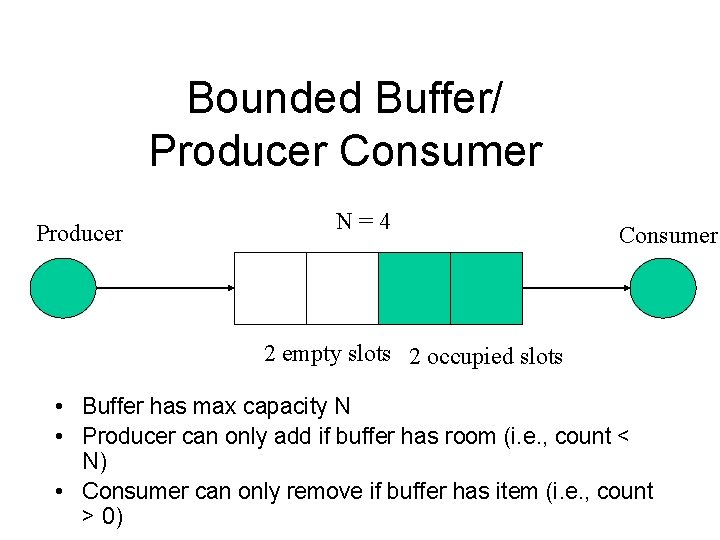

Bounded Buffer / Producer Consumer
[Figure: a producer and a consumer sharing a buffer with N = 4, showing 2 empty slots and 2 occupied slots]
• Buffer has max capacity N
• Producer can only add if buffer has room (i.e., count < N)
• Consumer can only remove if buffer has item (i.e., count > 0)

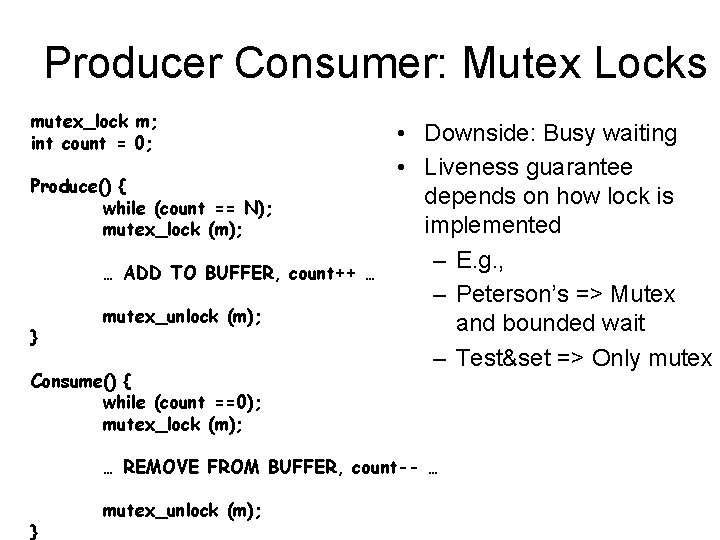

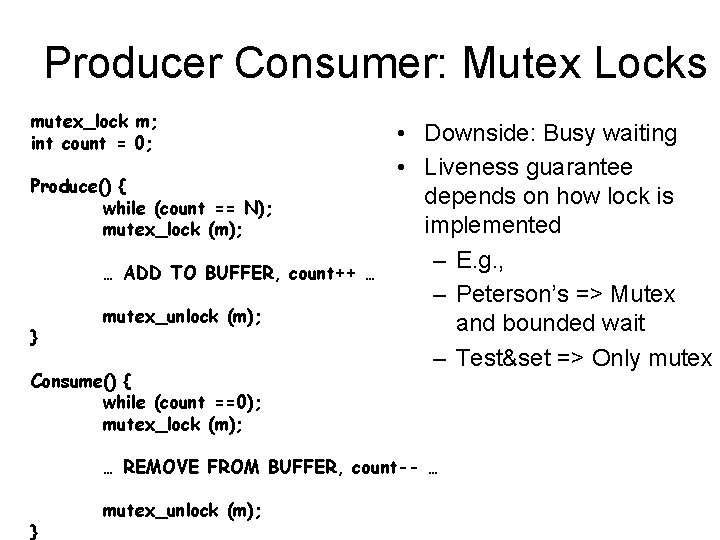

Producer Consumer: Mutex Locks

    mutex_lock m;
    int count = 0;

    Produce() {
        while (count == N);
        mutex_lock (m);
        … ADD TO BUFFER, count++ …
        mutex_unlock (m);
    }

    Consume() {
        while (count == 0);
        mutex_lock (m);
        … REMOVE FROM BUFFER, count-- …
        mutex_unlock (m);
    }

• Downside: Busy waiting
• Liveness guarantee depends on how lock is implemented, e.g.,
  – Peterson’s => mutex and bounded wait
  – Test&set => only mutex

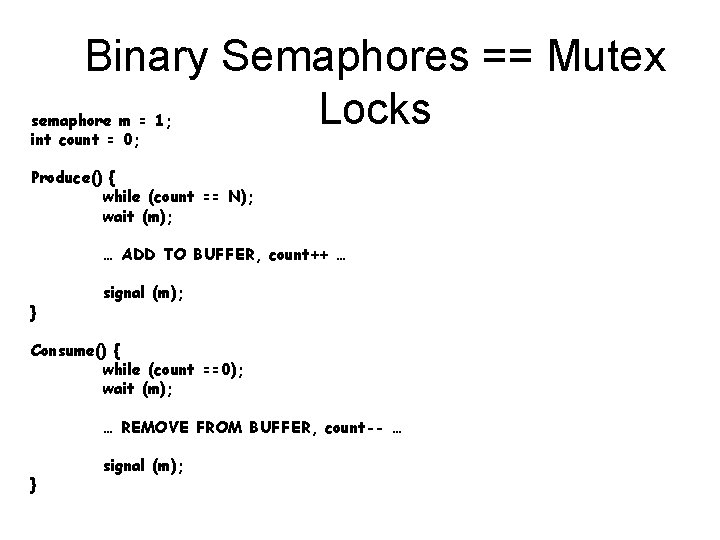

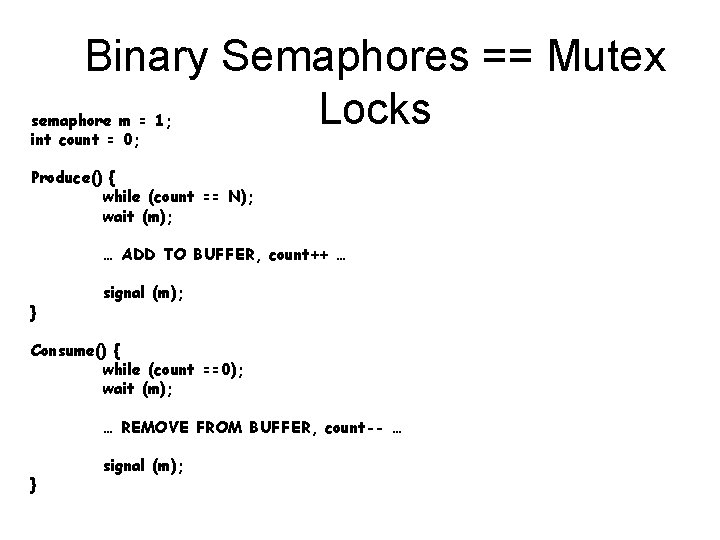

Binary Semaphores == Mutex Locks

    semaphore m = 1;
    int count = 0;

    Produce() {
        while (count == N);
        wait (m);
        … ADD TO BUFFER, count++ …
        signal (m);
    }

    Consume() {
        while (count == 0);
        wait (m);
        … REMOVE FROM BUFFER, count-- …
        signal (m);
    }

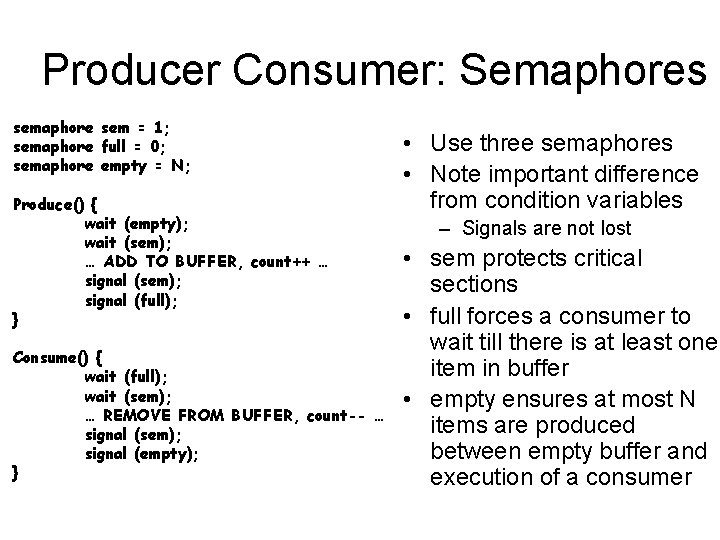

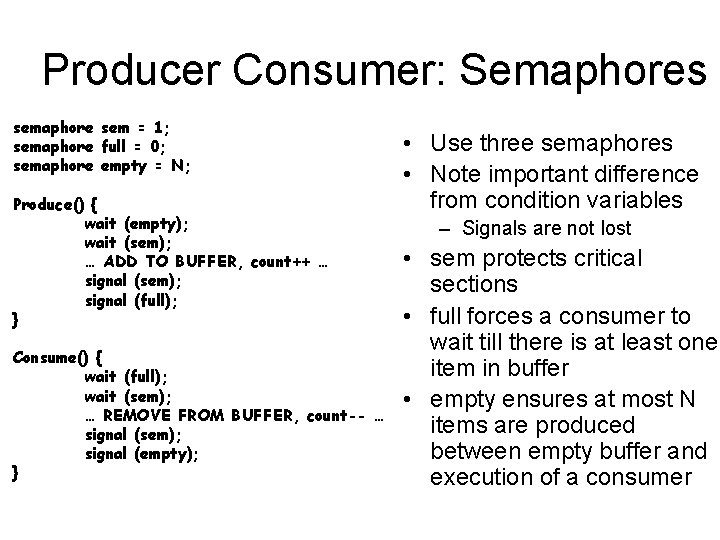

Producer Consumer: Semaphores

    semaphore sem = 1;
    semaphore full = 0;
    semaphore empty = N;

    Produce() {
        wait (empty);
        wait (sem);
        … ADD TO BUFFER, count++ …
        signal (sem);
        signal (full);
    }

    Consume() {
        wait (full);
        wait (sem);
        … REMOVE FROM BUFFER, count-- …
        signal (sem);
        signal (empty);
    }

• Use three semaphores
• Note important difference from condition variables
  – Signals are not lost
• sem protects critical sections
• full forces a consumer to wait till there is at least one item in buffer
• empty ensures at most N items are produced between empty buffer and execution of a consumer
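The three-semaphore structure above maps directly onto `threading.Semaphore`. This is a minimal sketch with one producer and one consumer; the buffer size N = 4 follows the earlier figure, while the item count, the `stats` dictionary and the thread setup are illustrative assumptions.

```python
import threading
from collections import deque

N = 4
buffer = deque()
sem = threading.Semaphore(1)      # protects the buffer (critical section)
full = threading.Semaphore(0)     # counts occupied slots
empty = threading.Semaphore(N)    # counts free slots
stats = {"max_occupancy": 0}
consumed = []

def produce(item):
    empty.acquire()               # wait for a free slot
    sem.acquire()
    buffer.append(item)           # ADD TO BUFFER
    stats["max_occupancy"] = max(stats["max_occupancy"], len(buffer))
    sem.release()
    full.release()                # announce one more occupied slot

def consume():
    full.acquire()                # wait for at least one item
    sem.acquire()
    item = buffer.popleft()       # REMOVE FROM BUFFER
    sem.release()
    empty.release()               # announce one more free slot
    return item

ITEMS = 20                        # hypothetical workload size

def producer():
    for x in range(ITEMS):
        produce(x)

def consumer():
    for _ in range(ITEMS):
        consumed.append(consume())

threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(consumed, stats["max_occupancy"])
```

Neither function busy-waits: a thread that cannot proceed blocks inside `acquire` until the matching `release` arrives, and a `release` issued before anyone waits is remembered in the semaphore's count.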

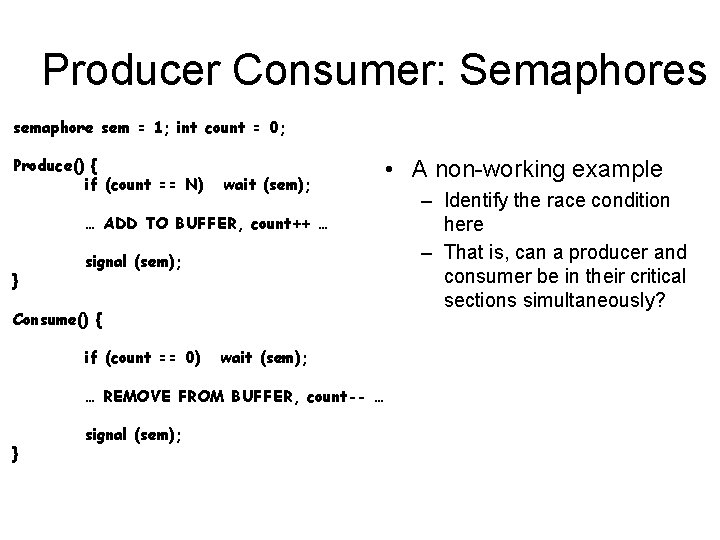

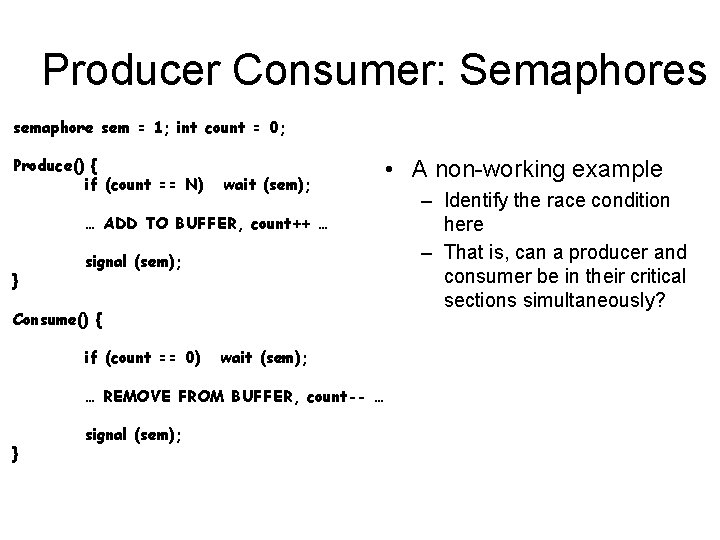

Producer Consumer: Semaphores

    semaphore sem = 1;
    int count = 0;

    Produce() {
        if (count == N)
            wait (sem);
        … ADD TO BUFFER, count++ …
        signal (sem);
    }

    Consume() {
        if (count == 0)
            wait (sem);
        … REMOVE FROM BUFFER, count-- …
        signal (sem);
    }

• A non-working example
  – Identify the race condition here
  – That is, can a producer and consumer be in their critical sections simultaneously?

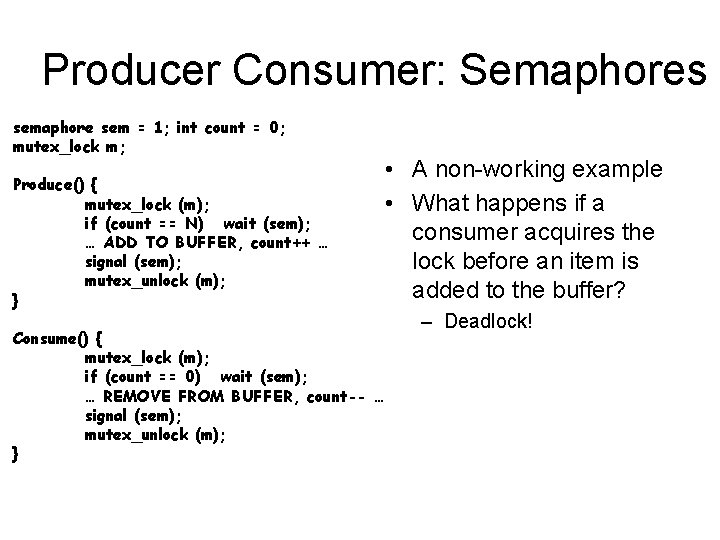

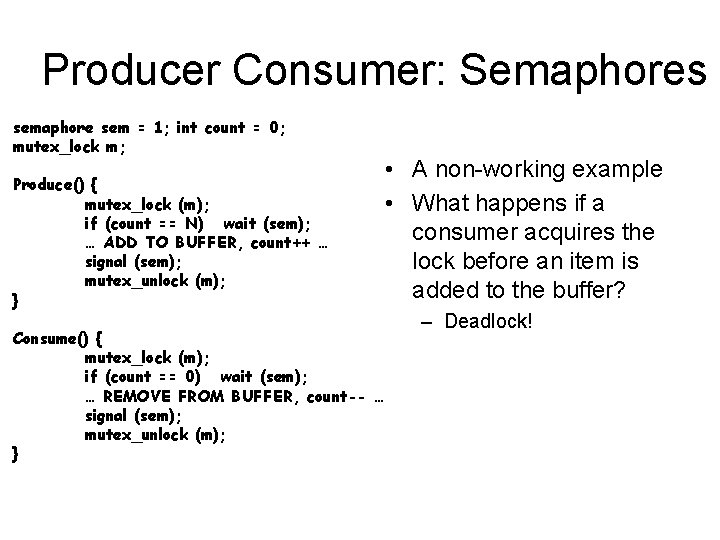

Producer Consumer: Semaphores

    semaphore sem = 1;
    int count = 0;
    mutex_lock m;

    Produce() {
        mutex_lock (m);
        if (count == N)
            wait (sem);
        … ADD TO BUFFER, count++ …
        signal (sem);
        mutex_unlock (m);
    }

    Consume() {
        mutex_lock (m);
        if (count == 0)
            wait (sem);
        … REMOVE FROM BUFFER, count-- …
        signal (sem);
        mutex_unlock (m);
    }

• A non-working example
• What happens if a consumer acquires the lock before an item is added to the buffer?
  – Deadlock!