Exploiting Load Latency Tolerance for Relaxing Cache Design Constraints

Exploiting Load Latency Tolerance for Relaxing Cache Design Constraints

Ramu Pyreddy, Gary Tyson

Advanced Computer Architecture Laboratory, University of Michigan

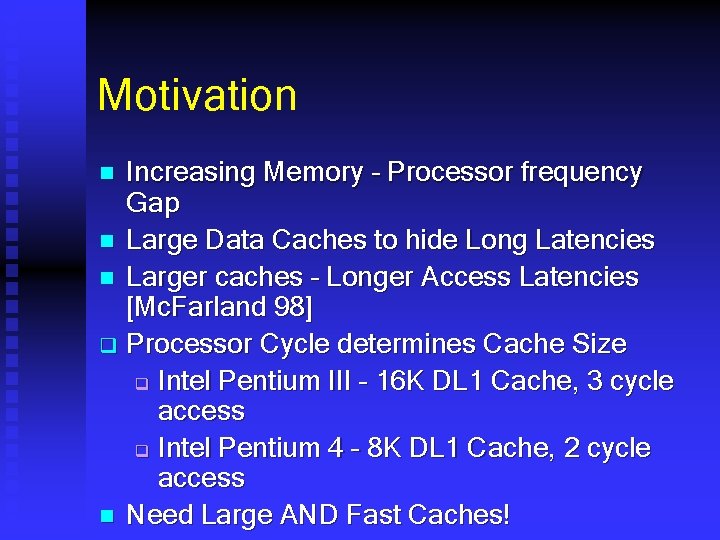

Motivation

- Increasing memory-processor frequency gap
- Large data caches to hide long latencies
- Larger caches mean longer access latencies [McFarland 98]
  - Processor cycle time determines cache size
  - Intel Pentium III: 16 KB DL1 cache, 3-cycle access
  - Intel Pentium 4: 8 KB DL1 cache, 2-cycle access
- Need large AND fast caches!

![Related Work](https://slidetodoc.com/presentation_image_h2/ed097688f7efd49e55eb5505a0a9fbed/image-3.jpg)

Related Work

- Load Latency Tolerance [Srinivasan & Lebeck, MICRO 98]
  - All loads are NOT equal
  - Determining criticality is very complex
  - Requires a sophisticated simulator with rollback
- Non-Critical Buffer [Fisk & Bahar, ICCD 99]
  - Determines criticality from performance degradation and dependency chains
  - Non-critical buffer: a victim cache for non-critical loads
  - Small performance improvements (up to 4%)

![Related Work (contd.)](https://slidetodoc.com/presentation_image_h2/ed097688f7efd49e55eb5505a0a9fbed/image-4.jpg)

Related Work (contd.)

- Locality vs. Criticality [Srinivasan et al., ISCA 01]
  - Determines criticality with practical heuristics
  - Potential for improvement: 40%
  - Locality is better than criticality
- Non-Vital Loads [Rakvic et al., HPCA 02]
  - Determines criticality with run-time heuristics
  - Small, fast vital cache for vital loads
  - 17% performance improvement

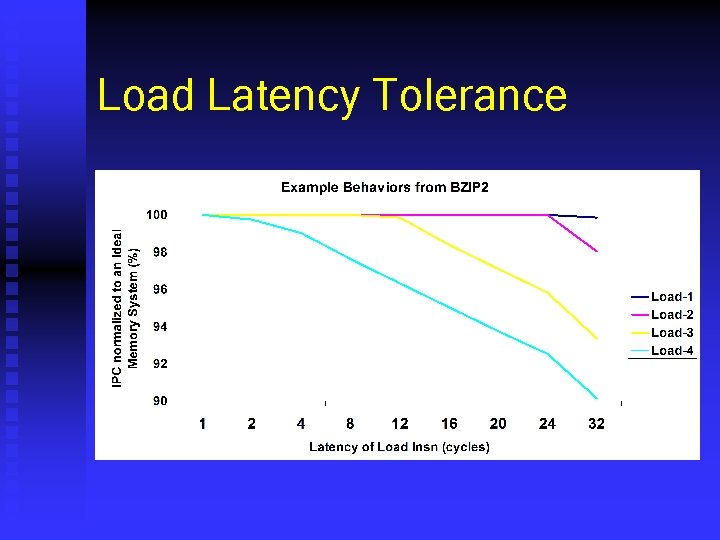

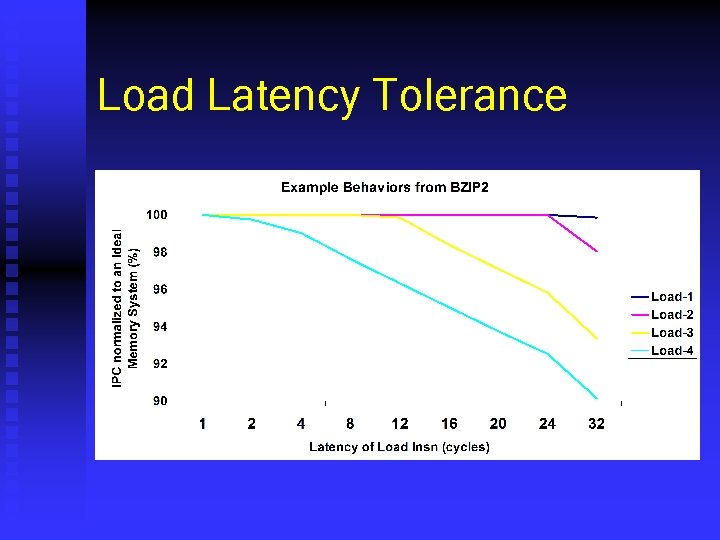

Load Latency Tolerance

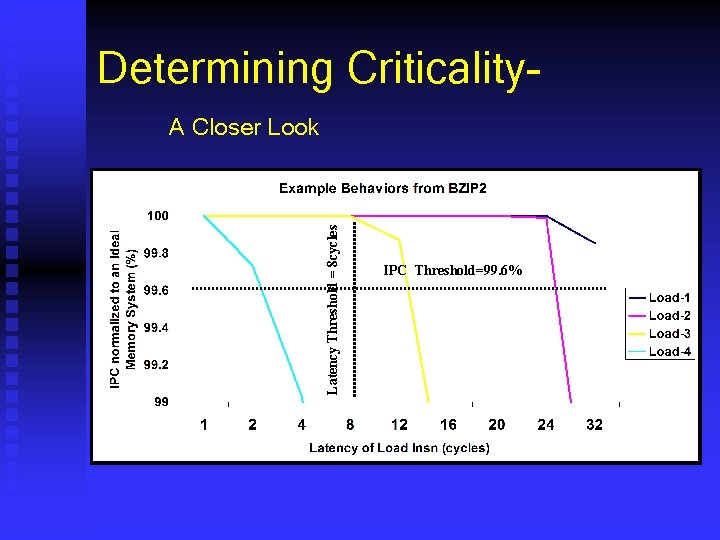

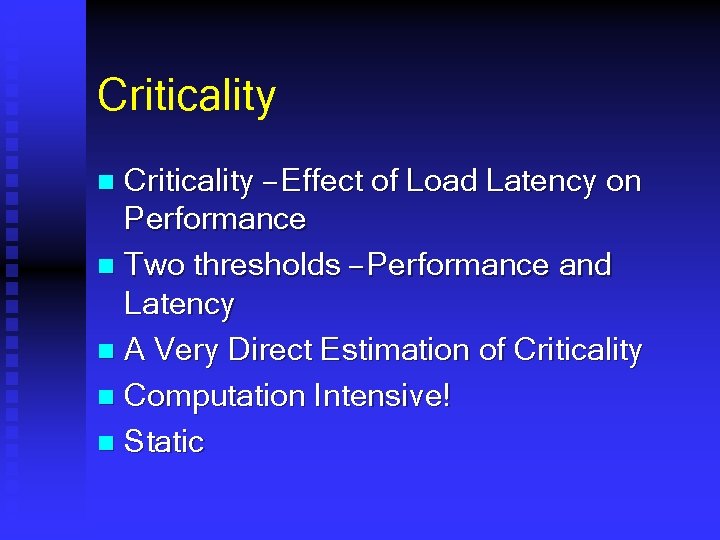

Criticality: Effect of Load Latency on Performance

- Two thresholds: performance and latency
- A very direct estimation of criticality
- Computation intensive!
- Static

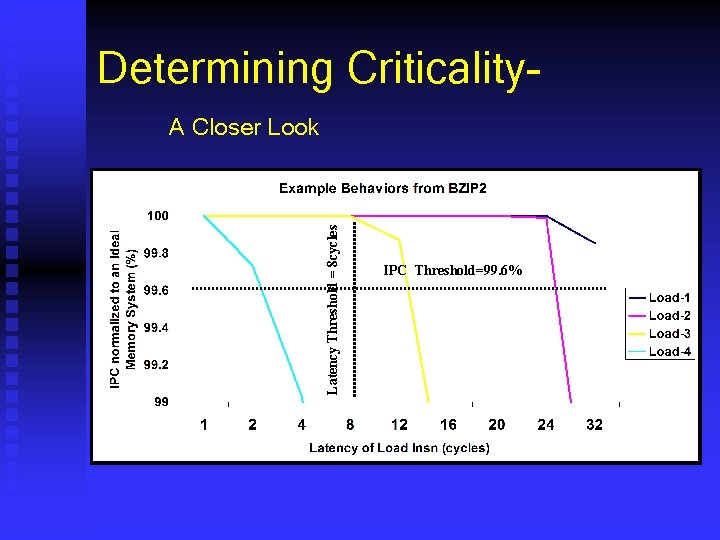

Determining Criticality: A Closer Look

- Latency threshold = 8 cycles
- IPC threshold = 99.6%
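One plausible reading of the two-threshold test, sketched under assumptions: a static load counts as critical if raising its latency to the latency threshold (8 cycles) drops IPC below the IPC threshold (99.6% of the baseline IPC). The per-load IPC values would come from one simulation per load, which is why the method is computation intensive; here they are a hypothetical input dict, and `classify_critical` is an illustrative name, not the authors' tool.

```python
# Thresholds from the slide. The rule below is one plausible reading of
# how they combine, not necessarily the authors' exact procedure.
LATENCY_THRESHOLD_CYCLES = 8  # latency applied to one load per simulation run
IPC_THRESHOLD = 0.996         # minimum fraction of baseline IPC to stay non-critical


def classify_critical(baseline_ipc, slowed_ipc):
    """Return the set of static loads classified as critical.

    baseline_ipc: IPC of the unmodified run.
    slowed_ipc: hypothetical dict mapping load PC -> IPC measured when only
        that load's latency is set to LATENCY_THRESHOLD_CYCLES.
    """
    critical = set()
    for pc, ipc in slowed_ipc.items():
        # Critical if slowing this one load costs more than 0.4% of IPC.
        if ipc / baseline_ipc < IPC_THRESHOLD:
            critical.add(pc)
    return critical


# Hypothetical measurements for two loads: only 0x20 loses more than 0.4%.
print(classify_critical(2.0, {0x10: 1.995, 0x20: 1.98}))  # {32}
```

Because the test is applied per static load and offline, the resulting classification is static, matching the slide's note.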

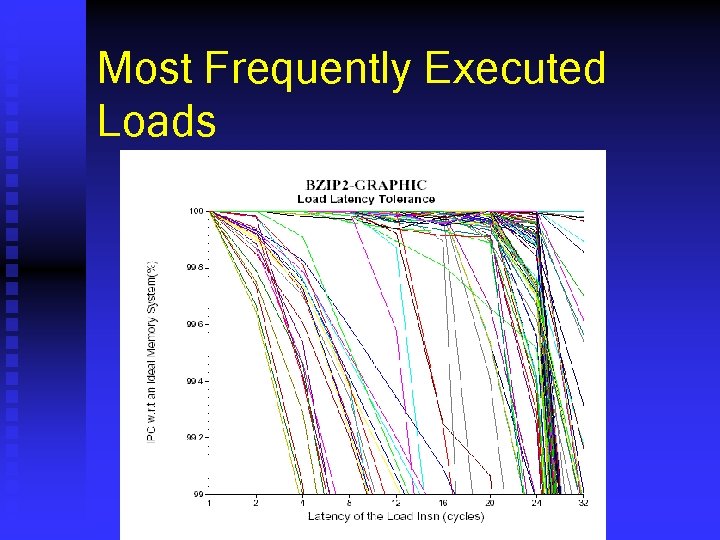

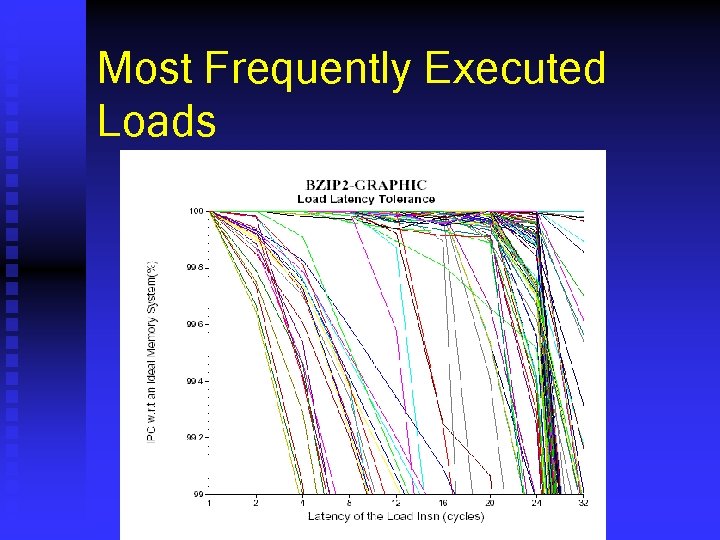

Most Frequently Executed Loads

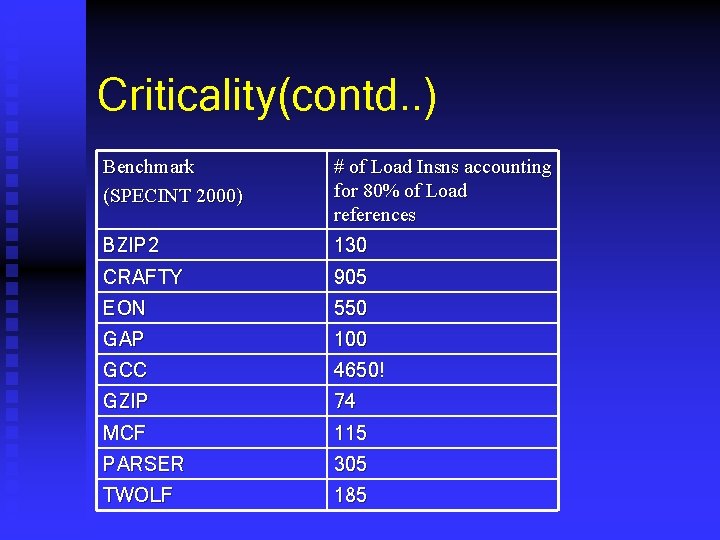

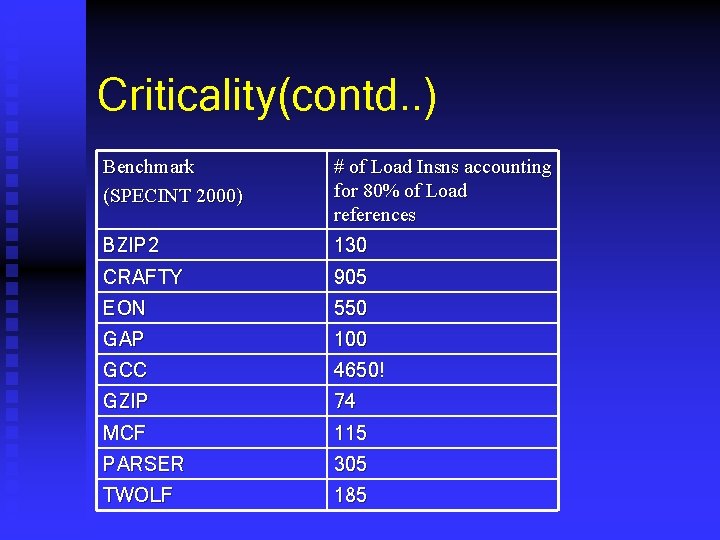

Criticality (contd.)

| Benchmark (SPECINT 2000) | # of load instructions accounting for 80% of load references |
|---|---|
| BZIP2 | 130 |
| CRAFTY | 905 |
| EON | 550 |
| GAP | 100 |
| GCC | 4650! |
| GZIP | 74 |
| MCF | 115 |
| PARSER | 305 |
| TWOLF | 185 |
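A count like "load instructions accounting for 80% of load references" can be derived from a per-load execution profile. A minimal sketch, assuming a hypothetical profile dict that maps each static load PC to its dynamic reference count: sort the loads hottest first and keep the fewest that reach the coverage target. The function name `loads_covering` is illustrative only.

```python
def loads_covering(ref_counts, coverage=0.80):
    """Return the fewest static loads (hottest first) whose dynamic
    references reach `coverage` of all load references.

    ref_counts: hypothetical dict mapping load PC -> dynamic reference count.
    """
    total = sum(ref_counts.values())
    chosen, covered = [], 0
    hottest_first = sorted(ref_counts.items(), key=lambda kv: kv[1], reverse=True)
    for pc, count in hottest_first:
        if covered >= coverage * total:
            break  # target reached; remaining loads are colder
        chosen.append(pc)
        covered += count
    return chosen


# Hypothetical profile: two loads already cover 80 of 100 references.
profile = {0xA: 50, 0xB: 30, 0xC: 15, 0xD: 5}
print(len(loads_covering(profile)))  # 2
```

A large count such as GCC's means the reference stream is spread over many static loads, so no small set of hot loads dominates.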

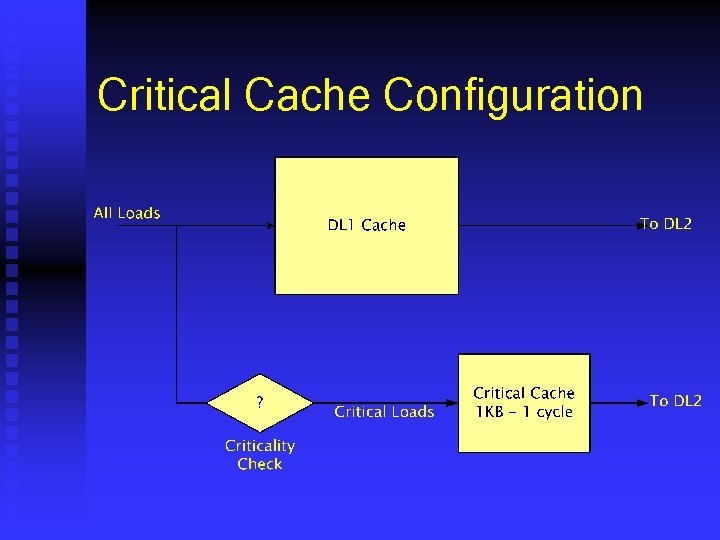

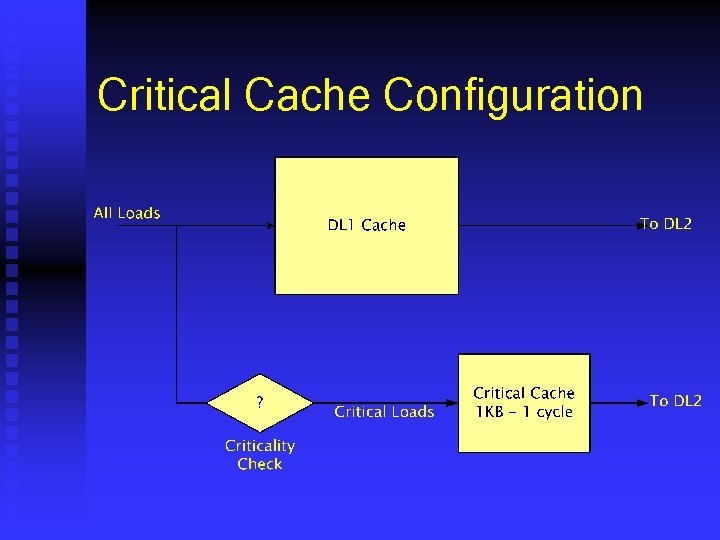

Critical Cache Configuration

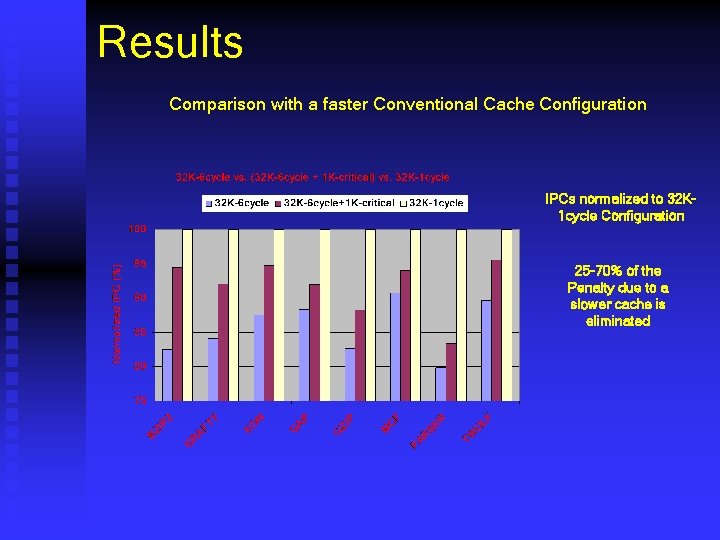

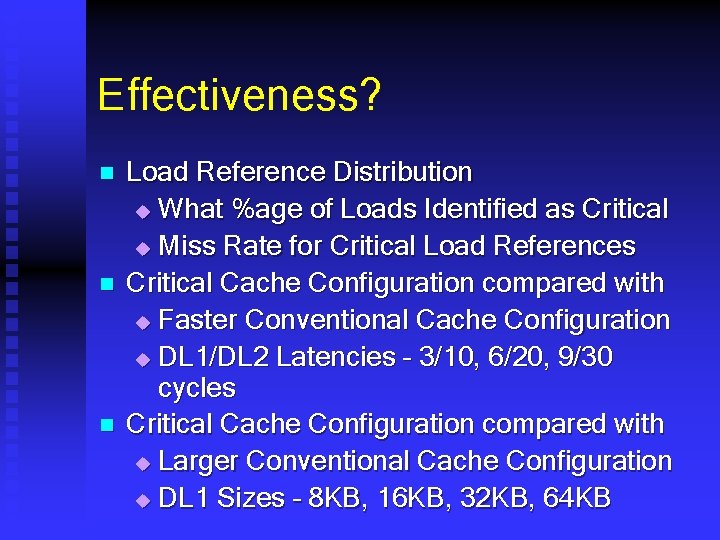

Effectiveness?

- Load reference distribution
  - What percentage of loads are identified as critical
  - Miss rate for critical load references
- Critical cache configuration compared with a faster conventional cache configuration
  - DL1/DL2 latencies: 3/10, 6/20, 9/30 cycles
- Critical cache configuration compared with a larger conventional cache configuration
  - DL1 sizes: 8 KB, 16 KB, 32 KB, 64 KB
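The critical cache organization is shown only as a figure, so its details are not recoverable from the text. To illustrate the "miss rate for critical load references" metric, here is a minimal sketch assuming a direct-mapped 1 KB critical cache with 32-byte lines (line size and associativity are assumptions) that only loads classified as critical access. Non-critical loads are skipped, standing in for accesses to the conventional DL1. The trace format and `critical_miss_rate` are hypothetical.

```python
# Assumed parameters: the results mention a 1K critical cache; the line size
# and direct-mapped organization here are illustrative assumptions.
CACHE_BYTES = 1024
LINE_BYTES = 32
NUM_LINES = CACHE_BYTES // LINE_BYTES  # 32 lines, direct-mapped


def critical_miss_rate(trace, critical_pcs):
    """Return the miss rate (%) of critical load references.

    trace: iterable of (pc, address) pairs for dynamic loads.
    critical_pcs: set of static load PCs classified as critical.
    """
    tags = [None] * NUM_LINES  # one tag per direct-mapped line
    refs = misses = 0
    for pc, addr in trace:
        if pc not in critical_pcs:
            continue  # non-critical loads use the conventional DL1 instead
        refs += 1
        block = addr // LINE_BYTES
        index = block % NUM_LINES
        tag = block // NUM_LINES
        if tags[index] != tag:
            misses += 1
            tags[index] = tag  # allocate the line on a miss
    return 100.0 * misses / refs if refs else 0.0


# Addresses 0 and 1024 map to the same line and evict each other.
trace = [(0x400, 0), (0x400, 4), (0x500, 64), (0x400, 1024), (0x400, 0)]
print(critical_miss_rate(trace, {0x400}))  # 75.0
```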

![Processor Configuration](https://slidetodoc.com/presentation_image_h2/ed097688f7efd49e55eb5505a0a9fbed/image-12.jpg)

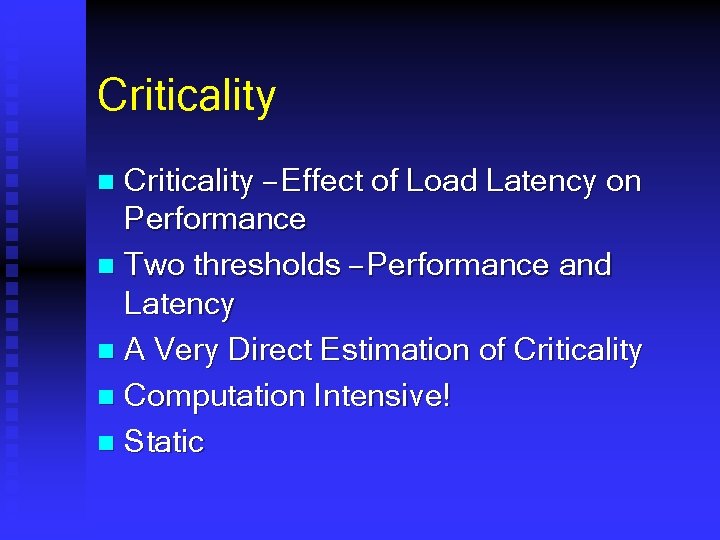

Processor Configuration

Similar to the Alpha 21264, simulated with SimpleScalar-3.0 [Austin, Burger 97].

| Parameter | Value |
|---|---|
| Fetch Width | 8 instructions per cycle |
| Fetch Queue Size | 64 |
| Branch Predictor | 2-level, 4K entries in level 2 |
| Branch Target Buffer | 2K entries, 8-way associative |
| Issue Width | 4 instructions per cycle |
| Decode Width | 4 instructions per cycle |
| RUU Size | 128 |
| Load/Store Queue Size | 32 |
| Instruction Cache | 64 KB, 2-way, 64-byte lines |
| L2 Cache | 1 MB, 2-way, 128-byte lines |
| Memory Latency | 64 cycles |

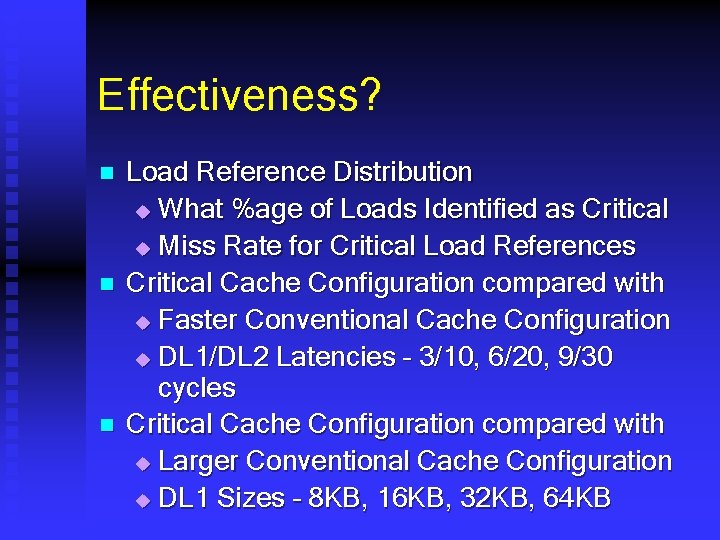

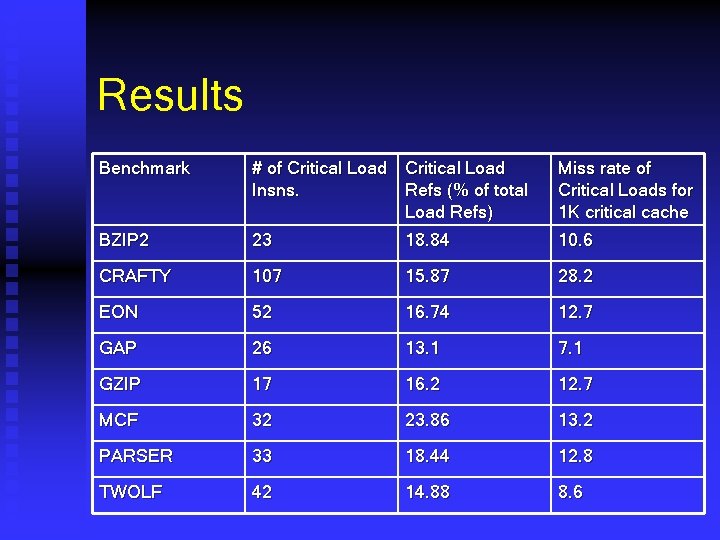

Results

| Benchmark | # of critical load insns | Critical refs (% of total load refs) | Miss rate of critical loads, 1 KB critical cache (%) |
|---|---|---|---|
| BZIP2 | 23 | 18.84 | 10.6 |
| CRAFTY | 107 | 15.87 | 28.2 |
| EON | 52 | 16.74 | 12.7 |
| GAP | 26 | 13.1 | 7.1 |
| GZIP | 17 | 16.2 | 12.7 |
| MCF | 32 | 23.86 | 13.2 |
| PARSER | 33 | 18.44 | 12.8 |
| TWOLF | 42 | 14.88 | 8.6 |
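To summarize the results table, the sketch below computes unweighted means across the eight benchmarks (each benchmark counts equally, which is an assumption; a reference-weighted mean would need per-benchmark reference counts the slides do not give). The rows are copied from the table; `RESULTS` is an illustrative name.

```python
from statistics import mean

# Rows from the results table:
# (benchmark, critical load insns, % of load refs, critical-cache miss rate %)
RESULTS = [
    ("BZIP2", 23, 18.84, 10.6),
    ("CRAFTY", 107, 15.87, 28.2),
    ("EON", 52, 16.74, 12.7),
    ("GAP", 26, 13.1, 7.1),
    ("GZIP", 17, 16.2, 12.7),
    ("MCF", 32, 23.86, 13.2),
    ("PARSER", 33, 18.44, 12.8),
    ("TWOLF", 42, 14.88, 8.6),
]

# Unweighted means: every benchmark contributes equally.
avg_ref_share = mean(row[2] for row in RESULTS)
avg_miss_rate = mean(row[3] for row in RESULTS)

print(f"Critical loads: {avg_ref_share:.1f}% of load refs on average")
print(f"Critical-cache miss rate: {avg_miss_rate:.1f}% on average")
```

On these rows the means come to about 17.2% of load references and a 13.2% critical-cache miss rate.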

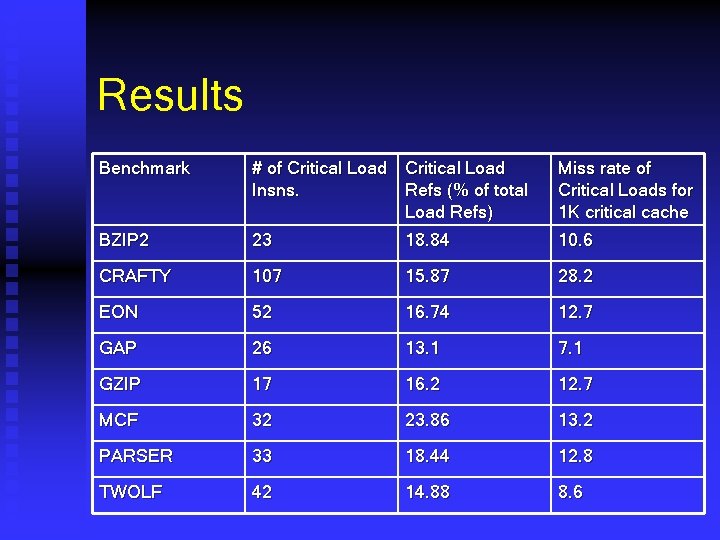

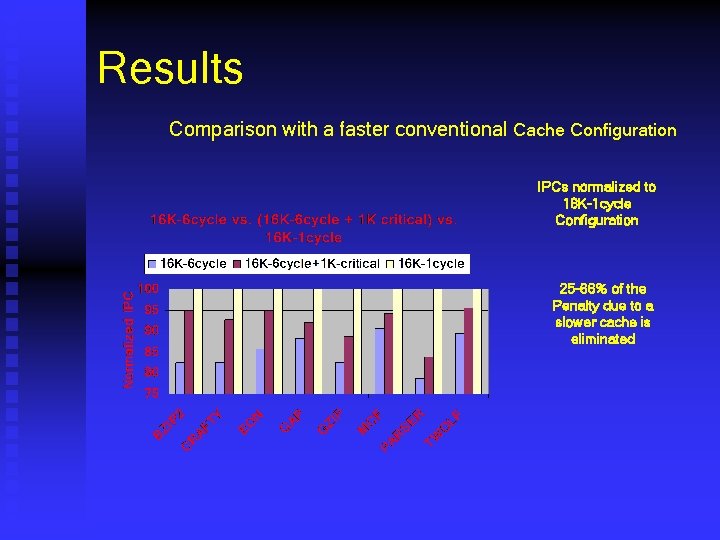

Results: Comparison with a Faster Conventional Cache Configuration

IPCs are normalized to the 16 KB, 1-cycle configuration. 25% to 66% of the penalty due to a slower cache is eliminated.
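The slides do not define "penalty eliminated". A natural reading, stated here as an assumption, is the share of the IPC lost to the slower DL1 that the critical cache recovers: `(IPC_critical - IPC_slow) / (IPC_fast - IPC_slow)`, where the fast configuration is the 1-cycle baseline. A minimal sketch with hypothetical normalized IPCs:

```python
def penalty_eliminated(ipc_fast, ipc_slow, ipc_critical):
    """Fraction of the slow-cache IPC penalty recovered by the critical cache.

    ipc_fast: IPC of the fast baseline (e.g. 16 KB, 1-cycle DL1)
    ipc_slow: IPC of the slower conventional configuration
    ipc_critical: IPC of the slower configuration plus a critical cache
    """
    penalty = ipc_fast - ipc_slow
    if penalty <= 0:
        raise ValueError("slower configuration shows no IPC penalty")
    return (ipc_critical - ipc_slow) / penalty


# Hypothetical normalized IPCs: 0.06 recovered out of a 0.15 penalty.
print(round(penalty_eliminated(1.0, 0.85, 0.91), 2))  # 0.4
```

Under this definition, 0 means the critical cache recovers nothing and 1 means it fully matches the fast configuration.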

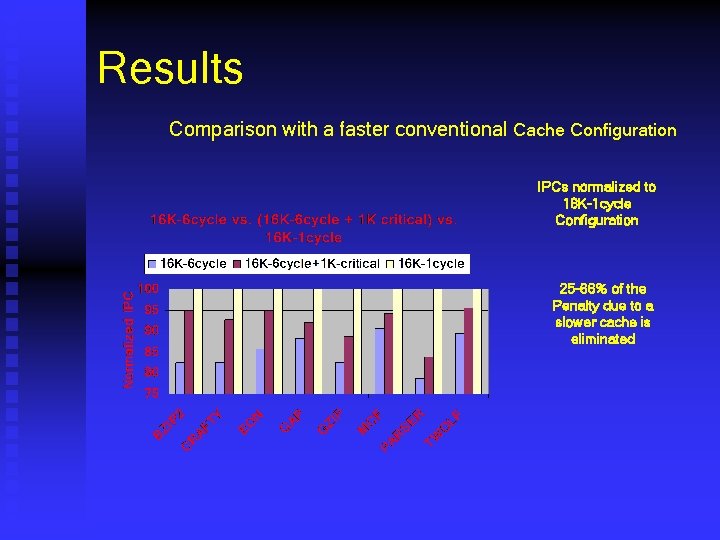

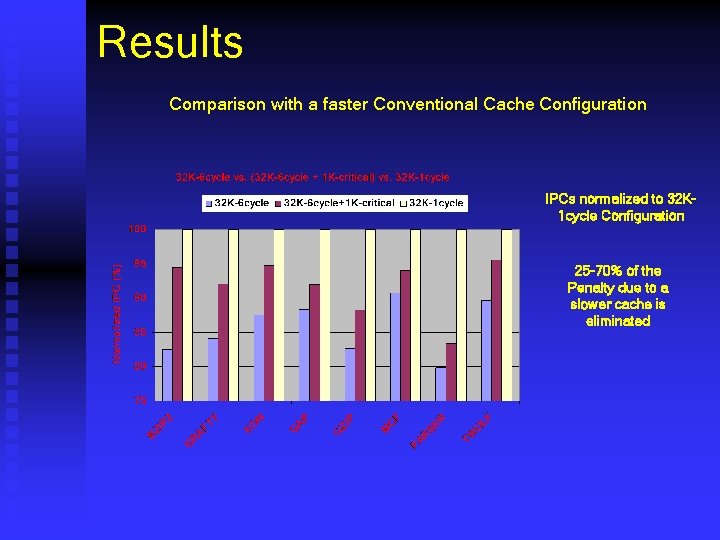

Results: Comparison with a Faster Conventional Cache Configuration

IPCs are normalized to the 32 KB, 1-cycle configuration. 25% to 70% of the penalty due to a slower cache is eliminated.

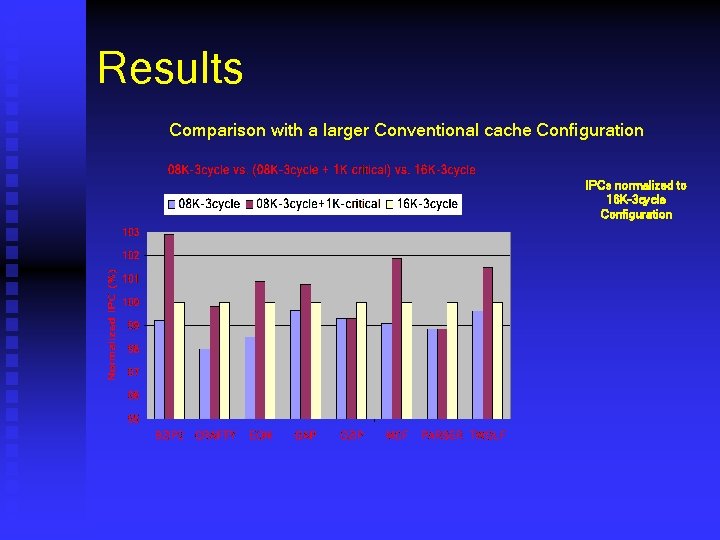

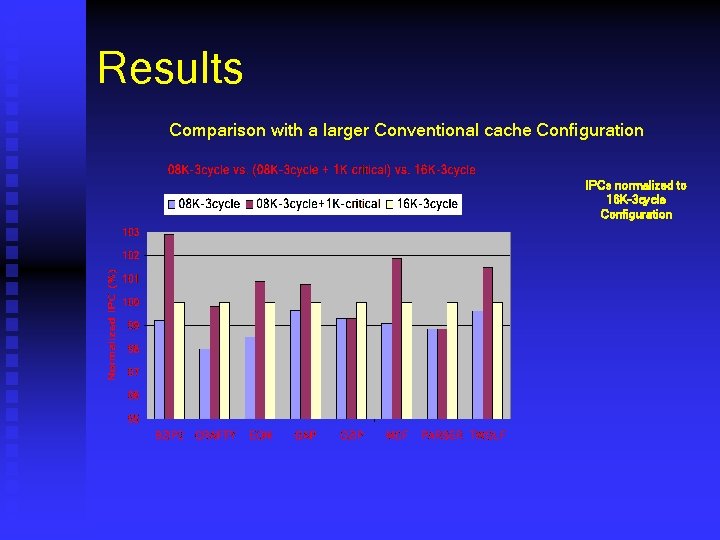

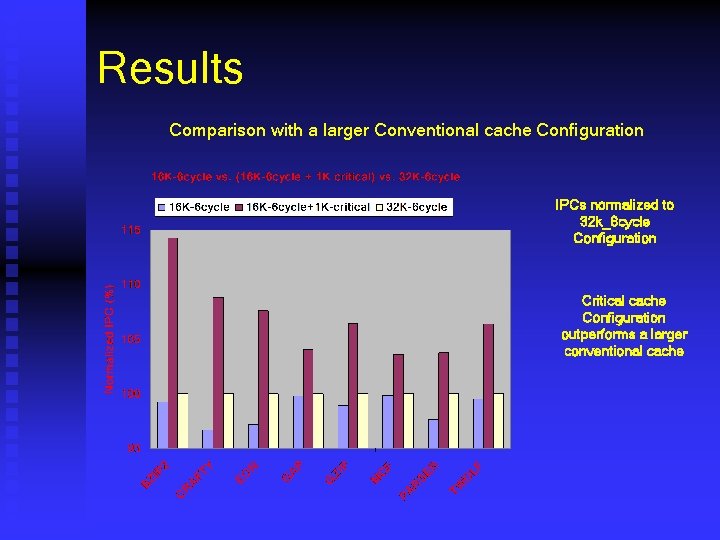

Results: Comparison with a Larger Conventional Cache Configuration

IPCs are normalized to the 16 KB, 3-cycle configuration.

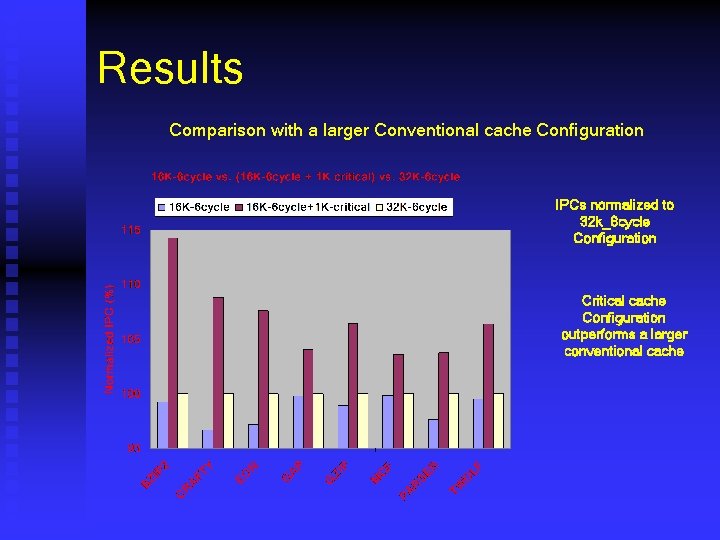

Results: Comparison with a Larger Conventional Cache Configuration

IPCs are normalized to the 32 KB, 6-cycle configuration. The critical cache configuration outperforms a larger conventional cache.

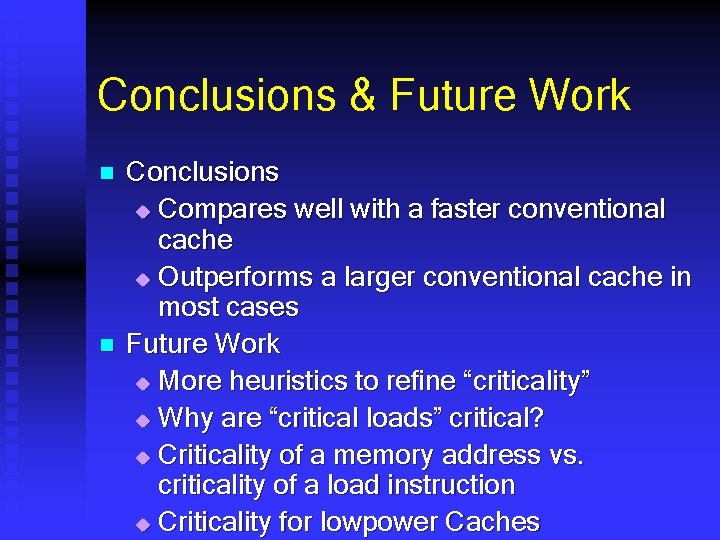

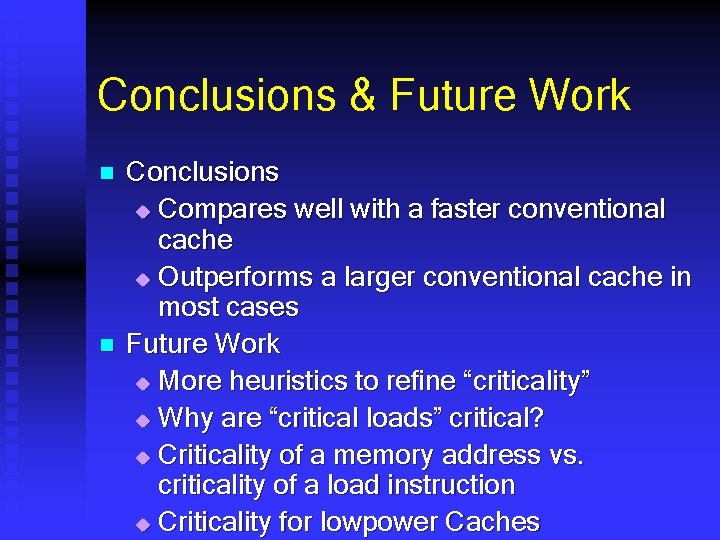

Conclusions & Future Work

- Conclusions
  - Compares well with a faster conventional cache
  - Outperforms a larger conventional cache in most cases
- Future work
  - More heuristics to refine "criticality"
  - Why are "critical loads" critical?
  - Criticality of a memory address vs. criticality of a load instruction
  - Criticality for low-power caches