

evaluating annotation

Seminar „Resources for computational linguistics“, WS 05/06
Tutors: Ivana Kruijff-Korbayová & Magdalena Wolska

Michaela Regneri, Nov. 14th 2005

overview

- evaluating systems
  - precision and recall
  - F-value
- annotation reliability
- kappa: a reliability metric
  - kappa and its motivation
  - computing kappa (esp. „p(E)“)
  - problems & open questions

evaluating systems

Two intuitively transparent measures for the reliability of a system:

- the system should recognize as much as possible: the measure of recall

$$\text{recall} = \frac{\text{number of found (good) candidates}}{\text{number of terms in the reference list}}$$

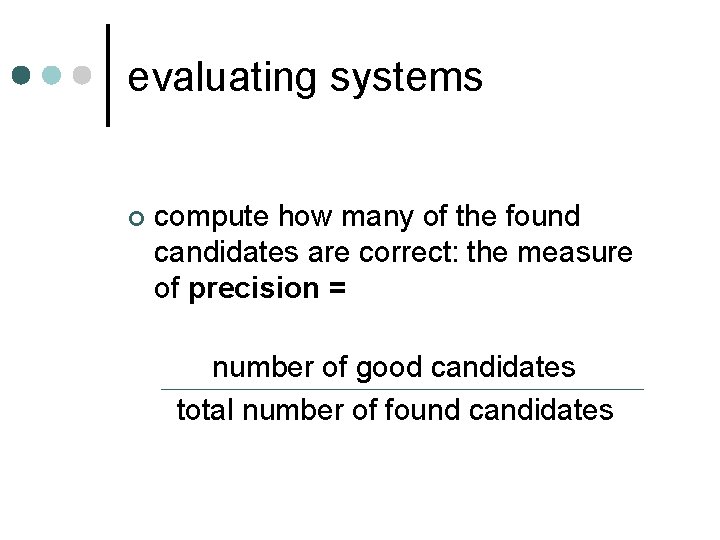

evaluating systems

- compute how many of the found candidates are correct: the measure of precision

$$\text{precision} = \frac{\text{number of good candidates}}{\text{total number of found candidates}}$$

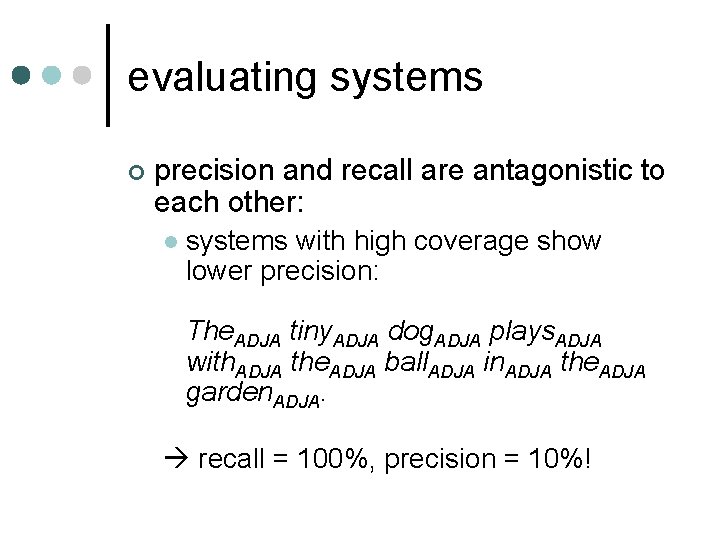

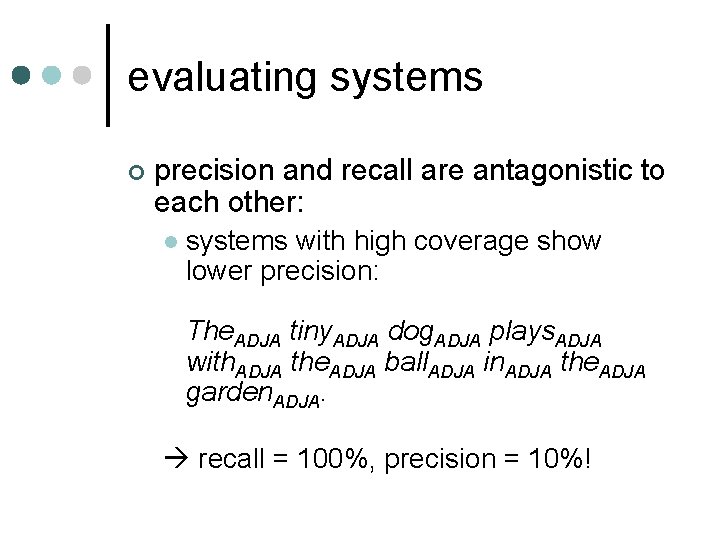
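As a minimal Python sketch, assuming the system output and the reference list are both available as sets of candidates, the two measures can be computed as follows (the function names and the candidate lists are hypothetical):

```python
def recall(found: set, reference: set) -> float:
    """Share of the reference list that the system found.

    Raises ZeroDivisionError if the reference list is empty (the 0/0 case).
    """
    return len(found & reference) / len(reference)


def precision(found: set, reference: set) -> float:
    """Share of the found candidates that are correct.

    Raises ZeroDivisionError if the system found nothing (the 0/0 case).
    """
    return len(found & reference) / len(found)


# Hypothetical candidate lists, for illustration only.
reference = {"kappa", "corpus", "annotator", "coder"}
found = {"kappa", "corpus", "the"}
print(recall(found, reference))     # 2 of 4 reference terms were found
print(precision(found, reference))  # 2 of 3 found candidates are correct
```

Both measures count the same correctly found candidates; they differ only in what they divide by.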

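evaluating systems

- precision and recall are antagonistic to each other:
  - systems with high coverage show lower precision, e.g. a tagger that labels every token as an attributive adjective (ADJA):

The.ADJA tiny.ADJA dog.ADJA plays.ADJA with.ADJA the.ADJA ball.ADJA in.ADJA the.ADJA garden.ADJA.

Only *tiny* really is ADJA, so 1 of the 10 tags is correct and nothing is missed: recall = 100%, precision = 10%!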

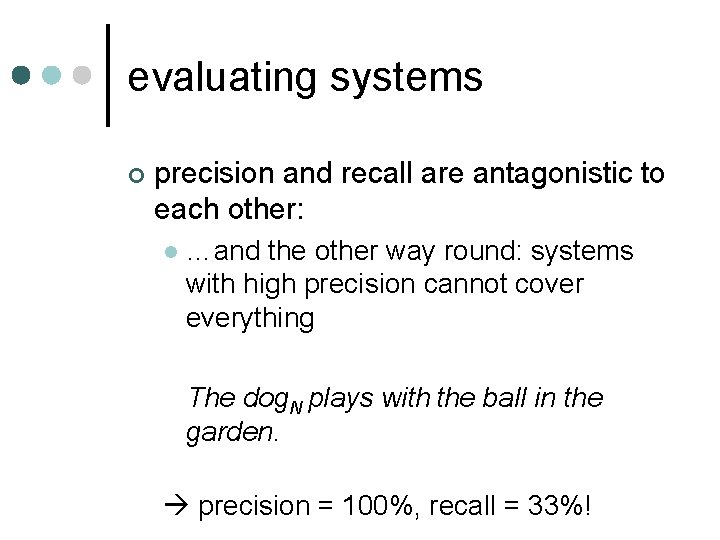

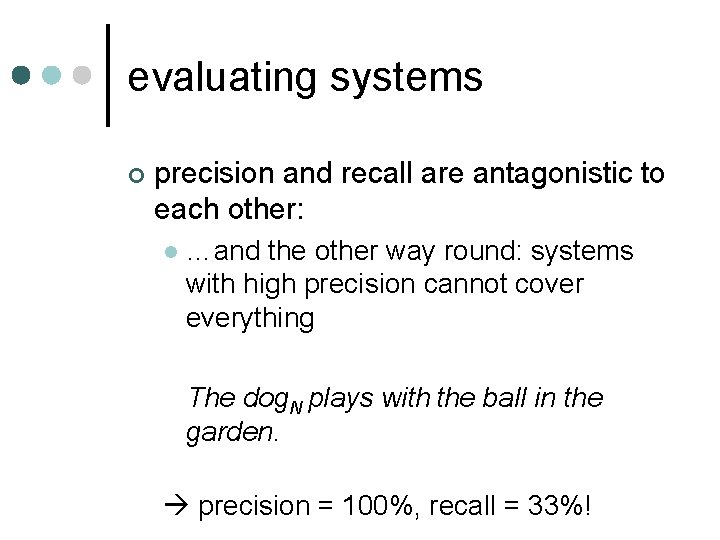

evaluating systems

- precision and recall are antagonistic to each other:
  - …and the other way round: systems with high precision tend to miss candidates, e.g. a tagger that labels only one noun (N):

The dog.N plays with the ball in the garden.

The one tag is correct, but *ball* and *garden* are missed: precision = 100%, recall = 33%!

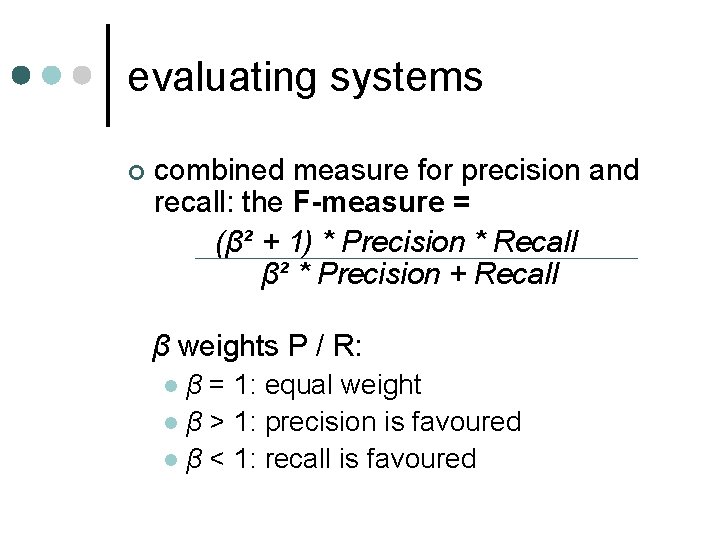

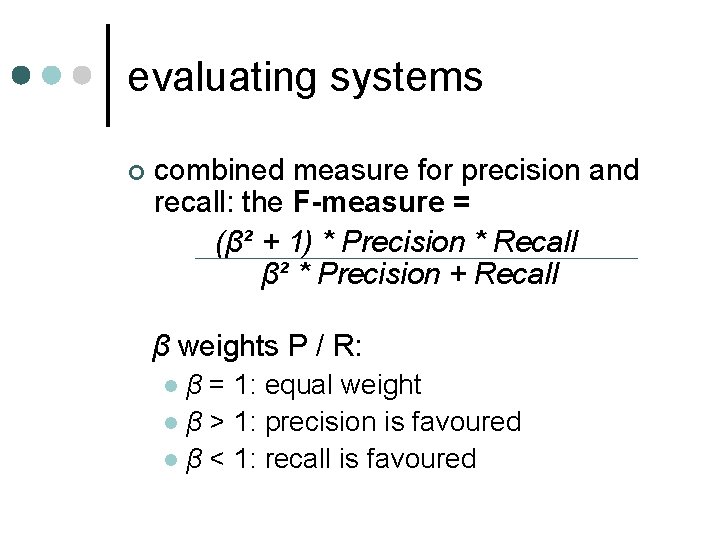
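The two examples can be reproduced with a small sketch that represents each tag as a (token position, tag) pair; this encoding and the helper `precision_recall` are our own illustration, not part of the slides, and the reference nouns in the second example are assumed to be *dog*, *ball* and *garden*:

```python
def precision_recall(found, reference):
    """Return (precision, recall) for two sets of (position, tag) pairs."""
    correct = len(found & reference)
    return correct / len(found), correct / len(reference)


# Example 1: ADJA tagging; every token is tagged ADJA, only "tiny" really is one.
tokens = "The tiny dog plays with the ball in the garden".split()
ref_adja = {(1, "ADJA")}
sys_adja = {(i, "ADJA") for i in range(len(tokens))}
p1, r1 = precision_recall(sys_adja, ref_adja)
print(f"ADJA: precision = {p1:.0%}, recall = {r1:.0%}")

# Example 2: noun tagging; only "dog" is tagged N.
# Assumption: the reference nouns are dog, ball and garden.
tokens2 = "The dog plays with the ball in the garden".split()
ref_n = {(i, "N") for i, w in enumerate(tokens2) if w in {"dog", "ball", "garden"}}
sys_n = {(tokens2.index("dog"), "N")}
p2, r2 = precision_recall(sys_n, ref_n)
print(f"N: precision = {p2:.0%}, recall = {r2:.0%}")
```

This prints `ADJA: precision = 10%, recall = 100%` and `N: precision = 100%, recall = 33%`, matching the two slides.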

evaluating systems

- combined measure for precision and recall: the F-measure

$$F = \frac{(\beta^2 + 1) \cdot \text{Precision} \cdot \text{Recall}}{\beta^2 \cdot \text{Precision} + \text{Recall}}$$

- β weights P / R:
  - β = 1: equal weight
  - β > 1: recall is favoured
  - β < 1: precision is favoured

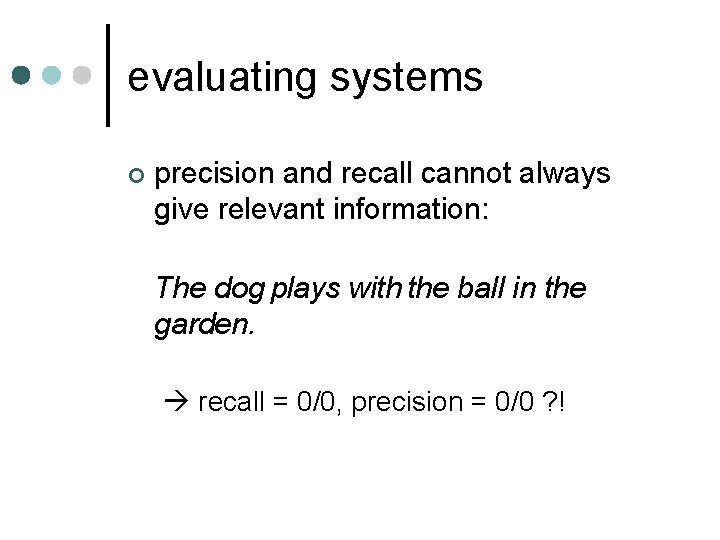
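A direct transcription of the formula into Python (the name `f_measure` and the choice to return 0 when P = R = 0 are assumptions), evaluated for the high-recall ADJA tagger (P = 0.1, R = 1.0) to show how β shifts the weight:

```python
def f_measure(precision: float, recall: float, beta: float = 1.0) -> float:
    """F-measure: (beta^2 + 1) * P * R / (beta^2 * P + R)."""
    b2 = beta ** 2
    denominator = b2 * precision + recall
    if denominator == 0:
        return 0.0  # assumption: define F = 0 when P = R = 0
    return (b2 + 1) * precision * recall / denominator


# High-recall, low-precision tagger: P = 0.1, R = 1.0
for beta in (0.5, 1.0, 2.0):
    print(beta, round(f_measure(0.1, 1.0, beta), 3))
```

With the formula as written, F moves toward recall as β grows (about 0.122, 0.182 and 0.357 for β = 0.5, 1 and 2) and toward precision as β shrinks.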

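evaluating systems

- precision and recall do not always give relevant information, e.g. when tagging adjectives (ADJA) in

The dog plays with the ball in the garden.

There is no adjective to find and the system tags nothing, so recall = 0/0 and precision = 0/0 are both undefined?!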
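One way to make the undefined case explicit in code, assuming a `None` result is preferred over an exception (the helper `safe_ratio` is hypothetical):

```python
def safe_ratio(numerator, denominator):
    """numerator / denominator, or None if the ratio is undefined (denominator 0)."""
    if denominator == 0:
        return None
    return numerator / denominator


# ADJA tagging of "The dog plays with the ball in the garden."
reference = set()  # the sentence contains no adjective
found = set()      # the system tags nothing
correct = len(found & reference)
print("recall:", safe_ratio(correct, len(reference)))
print("precision:", safe_ratio(correct, len(found)))
```

Both lines print `None`; an evaluation script then has to decide explicitly how to treat such sentences instead of silently scoring them.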

evaluating systems

- precision / recall
  - generally used for evaluating IR / IE tasks
  - several other applications, e.g. machine translation, coreference annotation

annotation reliability

„every annotation task is subject to unintended errors“

- evaluating annotation to measure the reliability of annotated corpora (or of the annotators)
- intra-annotator agreement: measures an annotator's consistency over time

annotation reliability

- inter-annotator agreement: two (or more) annotators get the same annotation task
  - possibly compared with an expert annotator

kappa coefficient

- kappa coefficient (κ): measures the agreement between two annotators
  - introduced for students of medicine to evaluate their diagnoses
  - based on the difference between the observed agreement („P(A)“) and the agreement expected by chance („P(E)“)

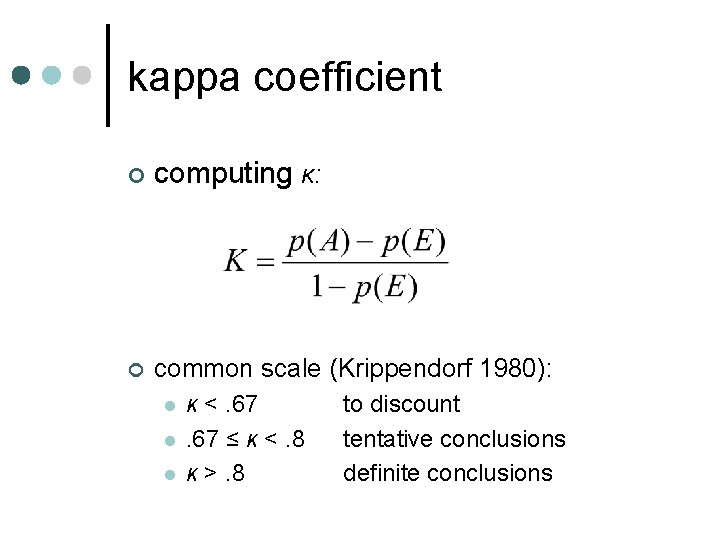

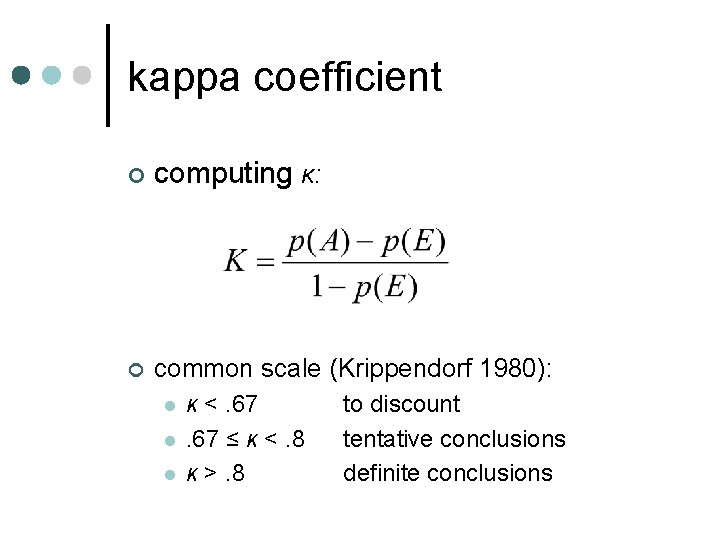
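A minimal sketch of the coefficient, assuming the usual chance-corrected form κ = (P(A) − P(E)) / (1 − P(E)): κ is 1 for perfect agreement and 0 when the coders agree only as often as chance predicts. The function name and the example values are illustrative.

```python
def kappa(p_a: float, p_e: float) -> float:
    """Chance-corrected agreement: (P(A) - P(E)) / (1 - P(E))."""
    if p_e == 1:
        raise ValueError("kappa is undefined when P(E) = 1")
    return (p_a - p_e) / (1 - p_e)


# Hypothetical values: the coders agree on 90% of the items, 50% expected by chance.
print(kappa(0.9, 0.5))
```

The numerator is the agreement beyond chance; the denominator is the maximum agreement beyond chance that was possible.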

kappa coefficient

- computing κ:

$$\kappa = \frac{P(A) - P(E)}{1 - P(E)}$$

- common scale (Krippendorff 1980):
  - κ < .67: discount
  - .67 ≤ κ < .8: tentative conclusions
  - κ > .8: definite conclusions
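The scale can be written as a simple lookup. The slide leaves κ = .8 itself unassigned, so treating it as "definite" is our assumption; the function name is hypothetical.

```python
def interpret_kappa(k: float) -> str:
    """Map a kappa value to the Krippendorff (1980) scale given on the slide."""
    if k < 0.67:
        return "discount"
    if k < 0.8:
        return "tentative conclusions"
    # Assumption: the slide leaves k == 0.8 unassigned; it is treated as definite here.
    return "definite conclusions"


for k in (0.5, 0.7, 0.9):
    print(k, interpret_kappa(k))
```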

kappa coefficient

- κ depends on the computation of P(E); relevant are:
  1. the number of categories
  2. their distribution
- there are different methods for computing P(E) (mainly two)

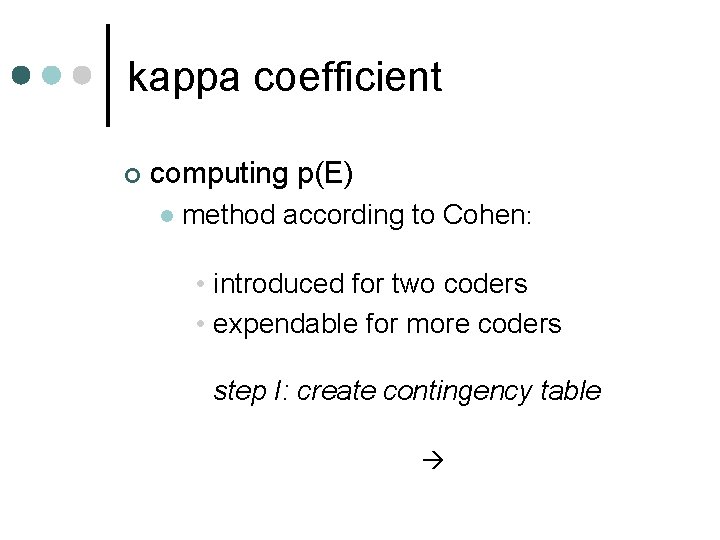
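To see why both factors matter, a simplified sketch: if both coders independently pick category k with the same probability p_k, chance agreement is P(E) = Σ p_k². This shared-distribution assumption and the function name are ours; the methods for computing P(E) estimate the distributions from the annotated data.

```python
def chance_agreement(distribution):
    """P(E) if both coders independently choose category k with probability p_k."""
    if abs(sum(distribution) - 1) > 1e-9:
        raise ValueError("category probabilities must sum to 1")
    # Agreement by chance on category k requires both coders to pick k: p_k * p_k.
    return sum(p * p for p in distribution)


for dist in ([0.5, 0.5], [0.25] * 4, [0.9, 0.1]):
    print(dist, round(chance_agreement(dist), 2))
```

Two balanced categories give P(E) = 0.5 and four give 0.25, while a 90/10 split raises it to 0.82.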

kappa coefficient

- computing P(E)
  - method according to Cohen:
    - introduced for two coders
    - extendable to more coders
  - step I: create a contingency table

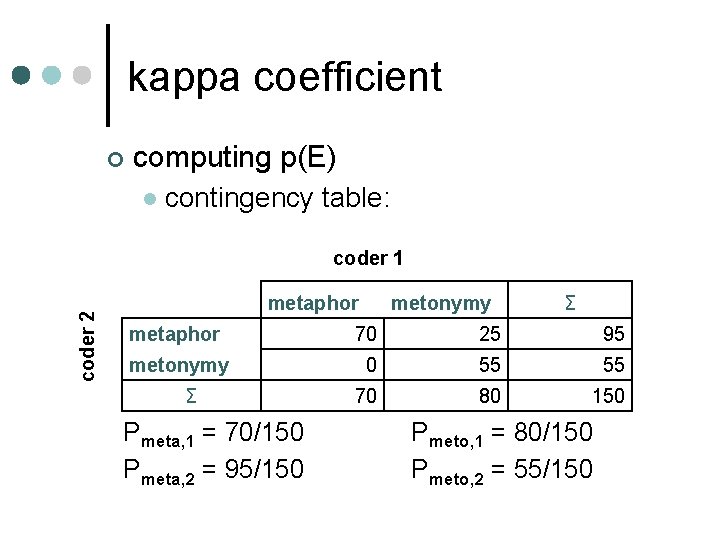

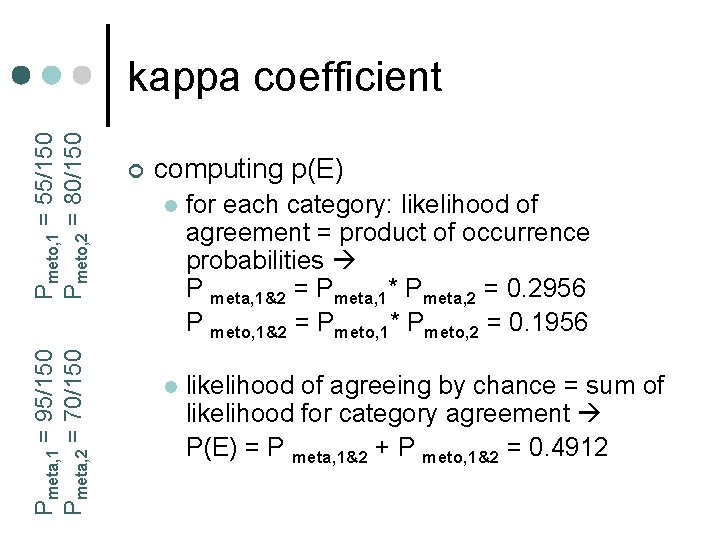

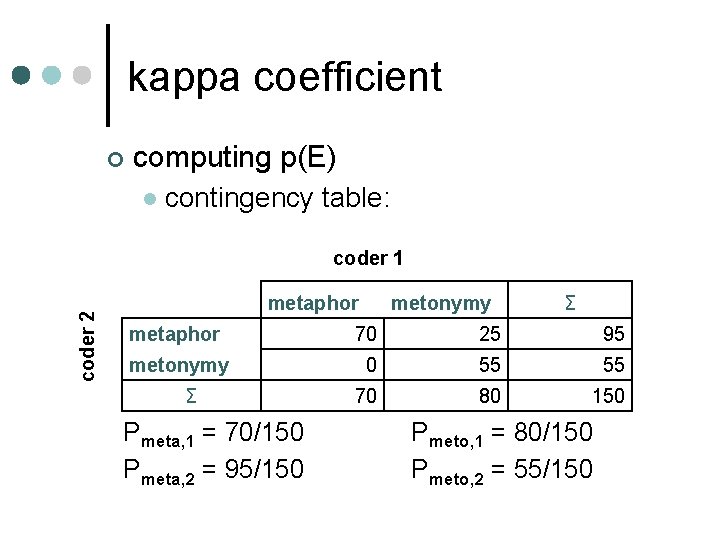
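Step I could look like this in Python, assuming each coder's annotation is a list of labels for the same items in the same order (function name and data are hypothetical):

```python
from collections import Counter


def contingency_table(labels_1, labels_2, categories):
    """Rows: coder 1's labels, columns: coder 2's labels; cell = number of items."""
    if len(labels_1) != len(labels_2):
        raise ValueError("both coders must label the same items")
    # Count how often each (coder 1 label, coder 2 label) pair occurs.
    pairs = Counter(zip(labels_1, labels_2))
    return [[pairs[(c1, c2)] for c2 in categories] for c1 in categories]


# Hypothetical annotations of five items.
coder_1 = ["metaphor", "metaphor", "metonymy", "metaphor", "metonymy"]
coder_2 = ["metaphor", "metonymy", "metonymy", "metaphor", "metonymy"]
table = contingency_table(coder_1, coder_2, ["metaphor", "metonymy"])
for row in table:
    print(row)
```

For these five items the table is `[[2, 1], [0, 2]]`: the diagonal holds the agreements, the off-diagonal cells the disagreements.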

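kappa coefficient

- computing P(E)
  - contingency table (rows: coder 1, columns: coder 2):

| coder 1 \ coder 2 | metaphor | metonymy | Σ |
|---|---|---|---|
| metaphor | 70 | 25 | 95 |
| metonymy | 0 | 55 | 55 |
| Σ | 70 | 80 | 150 |

$P_{\text{meta},1} = 95/150$, $P_{\text{meta},2} = 70/150$, $P_{\text{meto},1} = 55/150$, $P_{\text{meto},2} = 80/150$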

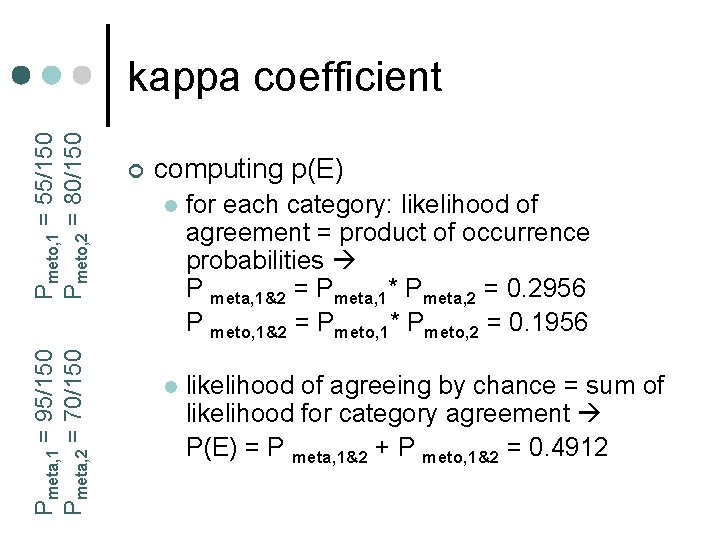

kappa coefficient

- computing P(E)
  - for each category, the probability that both coders choose it by chance is the product of the two coders' occurrence probabilities:
    - $P_{\text{meta},1\&2} = P_{\text{meta},1} \cdot P_{\text{meta},2} = \frac{95}{150} \cdot \frac{70}{150} \approx 0.2956$
    - $P_{\text{meto},1\&2} = P_{\text{meto},1} \cdot P_{\text{meto},2} = \frac{55}{150} \cdot \frac{80}{150} \approx 0.1956$
  - the probability of agreeing by chance is the sum of these per-category probabilities:
    - $P(E) = P_{\text{meta},1\&2} + P_{\text{meto},1\&2} = \frac{11050}{22500} \approx 0.4911$
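The computation above, sketched in Python with exact fractions to avoid rounding (the function name is ours; rows are coder 1 and columns coder 2, as in the contingency table):

```python
from fractions import Fraction


def cohen_kappa(table):
    """Cohen's kappa for a square contingency table (rows: coder 1, columns: coder 2)."""
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement P(A): items on the diagonal.
    p_a = Fraction(sum(table[i][i] for i in range(k)), n)
    row_totals = [sum(row) for row in table]                             # coder 1
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]  # coder 2
    # P(E): per category, multiply both coders' occurrence probabilities, then sum.
    p_e = sum(Fraction(row_totals[c], n) * Fraction(col_totals[c], n) for c in range(k))
    return p_a, p_e, (p_a - p_e) / (1 - p_e)


table = [[70, 25],  # coder 1: metaphor
         [0, 55]]   # coder 1: metonymy
p_a, p_e, kappa = cohen_kappa(table)
print(float(p_a), float(p_e), float(kappa))
```

For the metaphor/metonymy table this yields P(A) = 125/150, P(E) = 221/450 ≈ 0.4911 and κ = 154/229 ≈ 0.672.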

kappa coefficient

- computing P(E)
  - other method (Siegel & Castellan 1988): only considers the category proportions summed up over both coders
  - very similar results, especially for higher κ values
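A sketch of this method, assuming it pools both coders' labels per category and sets P(E) = Σ p_k², with p_k the pooled proportion of category k (our reading of the slide; function name hypothetical):

```python
from fractions import Fraction


def siegel_castellan_kappa(table):
    """Kappa with P(E) from category proportions pooled over both coders."""
    k = len(table)
    n = sum(sum(row) for row in table)
    p_a = Fraction(sum(table[i][i] for i in range(k)), n)
    # Pooled proportion of category c: its count over both coders / all 2n labels.
    pooled = [
        Fraction(sum(table[c]) + sum(table[i][c] for i in range(k)), 2 * n)
        for c in range(k)
    ]
    p_e = sum(p * p for p in pooled)
    return p_a, p_e, (p_a - p_e) / (1 - p_e)


table = [[70, 25], [0, 55]]  # rows: coder 1, columns: coder 2
p_a, p_e, kappa = siegel_castellan_kappa(table)
print(float(p_e), float(kappa))
```

On the metaphor/metonymy table the pooled proportions are 165/300 and 135/300, so P(E) = 0.505 and κ = 197/297 ≈ 0.663, close to the ≈ 0.672 obtained with Cohen's P(E).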

kappa coefficient

- problems
  - prevalence problem: κ decreases when the category distribution is skewed (one category dominates)
  - bias problem (only for Cohen's computation of P(E)): κ depends on how much the coders' category distributions differ; the more they differ, the lower P(E) and the higher κ
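The effect of a skewed category distribution can be illustrated with two hypothetical tables that have the same observed agreement (90%) but different category distributions; the sketch computes P(E) in Cohen's way:

```python
def cohen_kappa(table):
    """Cohen's kappa for a square contingency table (rows: coder 1, columns: coder 2)."""
    k = len(table)
    n = sum(sum(row) for row in table)
    p_a = sum(table[i][i] for i in range(k)) / n
    rows = [sum(row) / n for row in table]                             # coder 1
    cols = [sum(table[i][j] for i in range(k)) / n for j in range(k)]  # coder 2
    p_e = sum(r * c for r, c in zip(rows, cols))
    return (p_a - p_e) / (1 - p_e)


balanced = [[45, 5], [5, 45]]  # both coders: 50% / 50%
skewed = [[85, 5], [5, 5]]     # both coders: 90% / 10%
# Observed agreement is 90% in both tables.
print(round(cohen_kappa(balanced), 3))
print(round(cohen_kappa(skewed), 3))
```

The balanced table gives κ = 0.8, the skewed one only about 0.444, although the coders agree on 90% of the items in both: the skew raises P(E) from 0.5 to 0.82.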

kappa coefficient

- open questions
  - usage for system evaluation?
  - which scale to use for κ values?
  - how to prevent bias/prevalence problems?
  - (when) to introduce an „expert coder“?
  - how to compute P(E)?

questions?