Educational Research Chapter 5 Selecting Measuring Instruments Gay

- Slides: 37

Educational Research Chapter 5 Selecting Measuring Instruments Gay, Mills, and Airasian

Topics Discussed in this Chapter

- Data collection
- Measuring instruments
  - Terminology
  - Interpreting data
  - Types of instruments
- Technical issues
  - Validity
  - Reliability
- Selection of a test

Data Collection

- Scientific inquiry requires the collection, analysis, and interpretation of data
  - Data – the pieces of information that are collected to examine the research topic
  - Issues related to the collection of this information are the focus of this chapter

Data Collection

- Terminology related to data
  - Constructs – abstractions that cannot be observed directly but are helpful when trying to explain behavior
    - Intelligence
    - Teacher effectiveness
    - Self-concept

Obj. 1.1 & 1.2

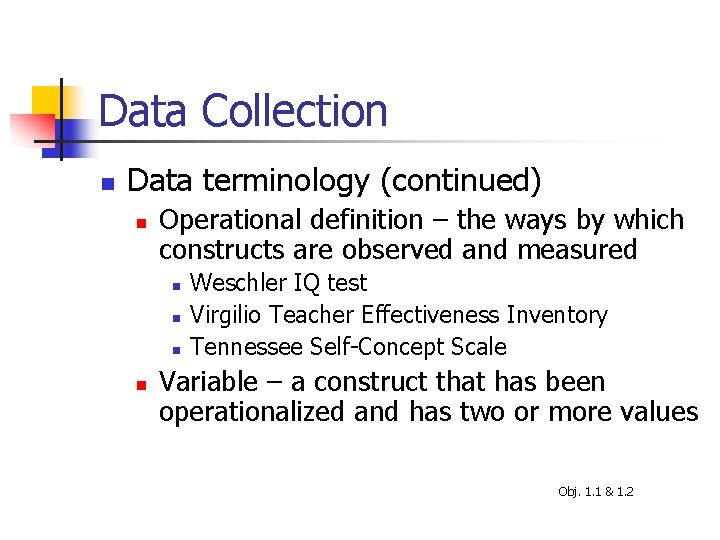

Data Collection

- Data terminology (continued)
  - Operational definition – the ways by which constructs are observed and measured
    - Wechsler IQ test
    - Virgilio Teacher Effectiveness Inventory
    - Tennessee Self-Concept Scale
  - Variable – a construct that has been operationalized and has two or more values

Obj. 1.1 & 1.2

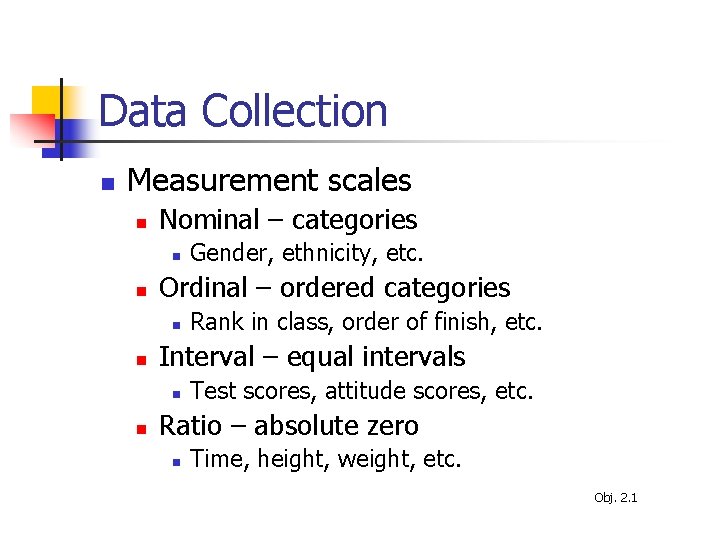

Data Collection

- Measurement scales
  - Nominal – categories
    - Gender, ethnicity, etc.
  - Ordinal – ordered categories
    - Rank in class, order of finish, etc.
  - Interval – equal intervals
    - Test scores, attitude scores, etc.
  - Ratio – absolute zero
    - Time, height, weight, etc.

Obj. 2.1

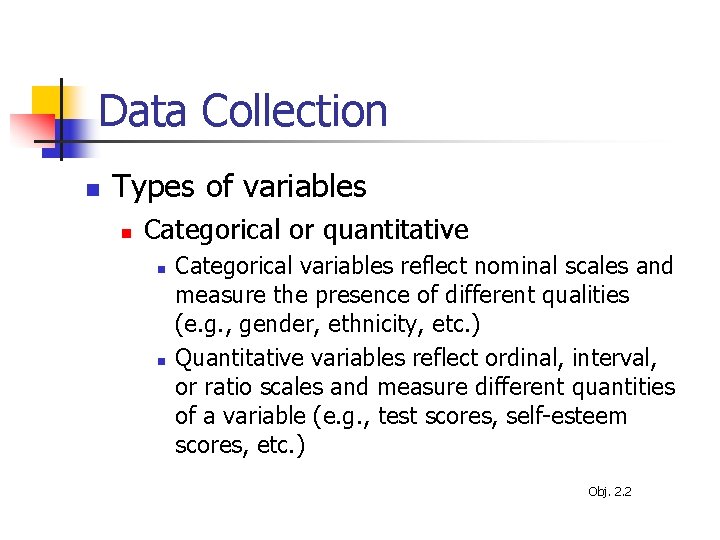

Data Collection

- Types of variables
  - Categorical or quantitative
    - Categorical variables reflect nominal scales and measure the presence of different qualities (e.g., gender, ethnicity, etc.)
    - Quantitative variables reflect ordinal, interval, or ratio scales and measure different quantities of a variable (e.g., test scores, self-esteem scores, etc.)

Obj. 2.2

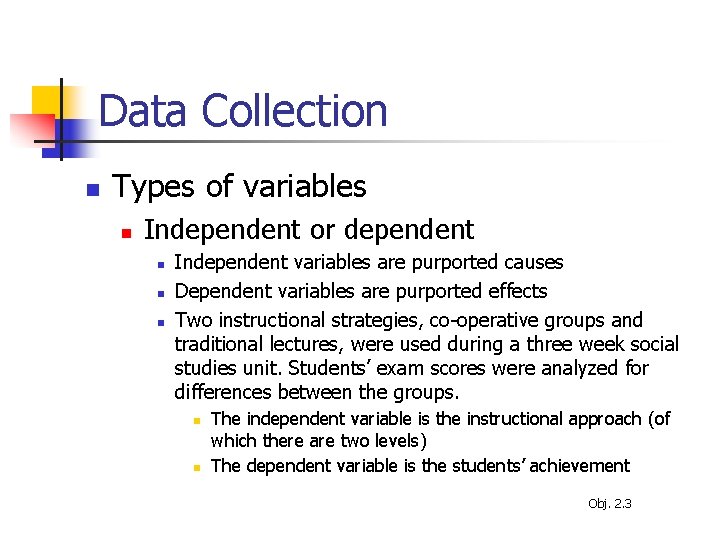

Data Collection

- Types of variables
  - Independent or dependent
    - Independent variables are purported causes
    - Dependent variables are purported effects
    - Two instructional strategies, co-operative groups and traditional lectures, were used during a three-week social studies unit. Students’ exam scores were analyzed for differences between the groups.
      - The independent variable is the instructional approach (of which there are two levels)
      - The dependent variable is the students’ achievement

Obj. 2.3

Measurement Instruments

- Important terms
  - Instrument – a tool used to collect data
  - Test – a formal, systematic procedure for gathering information
  - Assessment – the general process of collecting, synthesizing, and interpreting information
  - Measurement – the process of quantifying or scoring a subject’s performance

Obj. 3.1 & 3.2

Measurement Instruments

- Important terms (continued)
  - Cognitive tests – examining subjects’ thoughts and thought processes
  - Affective tests – examining subjects’ feelings, interests, attitudes, beliefs, etc.
  - Standardized tests – tests that are administered, scored, and interpreted in a consistent manner

Obj. 3.1

Measurement Instruments

- Important terms (continued)
  - Selected response item format – respondents select answers from a set of alternatives
    - Multiple choice
    - True-false
    - Matching
  - Supply response item format – respondents construct answers
    - Short answer
    - Completion
    - Essay

Obj. 3.3 & 11.3

Measurement Instruments

- Important terms (continued)
  - Individual tests – tests administered on an individual basis
  - Group tests – tests administered to a group of subjects at the same time
  - Performance assessments – assessments that focus on processes or products that have been created

Obj. 3.6

Measurement Instruments

- Interpreting data
  - Raw scores – the actual score made on a test
  - Standard scores – statistical transformations of raw scores
    - Percentiles (0.00 – 99.9)
    - Stanines (1 – 9)
    - Normal Curve Equivalents (0.00 – 99.99)

Obj. 3.4
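The transformations named on this slide can be sketched in a few lines of Python. This is a minimal illustration, not the textbook's procedure: the function name `standard_scores` and the sample data are hypothetical, and the percentile is read from a normal curve, which assumes the group's scores are roughly normally distributed.

```python
from math import floor
from statistics import NormalDist, mean, pstdev


def standard_scores(raw, group_scores):
    """Convert one raw score into common standard scores.

    Assumes the group's scores are roughly normal and not all identical.
    """
    z = (raw - mean(group_scores)) / pstdev(group_scores)
    return {
        "z": z,
        # Percentile rank read from the normal curve (an assumption).
        "percentile": 100 * NormalDist().cdf(z),
        # Stanines are half-SD-wide bands centred on 5, clipped to 1-9.
        "stanine": min(9, max(1, floor(2 * z + 5.5))),
        # NCEs rescale z to a mean of 50 and an SD of 21.06.
        "nce": 50 + 21.06 * z,
    }


group = [40, 50, 60]  # made-up raw scores for illustration
print(standard_scores(50, group))
```

A raw score equal to the group mean lands at the middle of every scale, which is a quick sanity check on any conversion table.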

Measurement Instruments

- Interpreting data (continued)
  - Norm-referenced – scores are interpreted relative to the scores of others taking the test
  - Criterion-referenced – scores are interpreted relative to a predetermined level of performance
  - Self-referenced – scores are interpreted relative to changes over time

Obj. 3.5
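To make the contrast concrete, the sketch below interprets the same score in each of the three ways. The function names, the cut score, and the data are all hypothetical, and the norm-referenced version uses a simple "percent scoring below" definition, which is only one of several conventions.

```python
def norm_referenced(score, norm_group):
    """Percent of the norm group scoring below this score."""
    below = sum(1 for s in norm_group if s < score)
    return 100 * below / len(norm_group)


def criterion_referenced(score, cut_score):
    """True if the score meets the predetermined performance level."""
    return score >= cut_score


def self_referenced(earlier_score, later_score):
    """Change in the same person's score over time."""
    return later_score - earlier_score


norm_group = [50, 60, 70, 80, 90]  # made-up scores of other test takers
print(norm_referenced(70, norm_group))   # relative to others
print(criterion_referenced(70, 75))      # relative to a cut score of 75
print(self_referenced(60, 70))           # relative to an earlier score
```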

Measurement Instruments

- Types of instruments
  - Cognitive – measuring intellectual processes such as thinking, memorizing, problem solving, analyzing, or reasoning
  - Achievement – measuring what students already know
  - Aptitude – measuring general mental ability, usually for predicting future performance

Obj. 4.1 & 4.2

Measurement Instruments

- Types of instruments (continued)
  - Affective – assessing individuals’ feelings, values, attitudes, beliefs, etc.
    - Typical affective characteristics of interest
      - Values – deeply held beliefs about ideas, persons, or objects
      - Attitudes – dispositions that are favorable or unfavorable toward things
      - Interests – inclinations to seek out or participate in particular activities, objects, ideas, etc.
      - Personality – characteristics that represent a person’s typical behaviors

Obj. 4.1 & 4.5

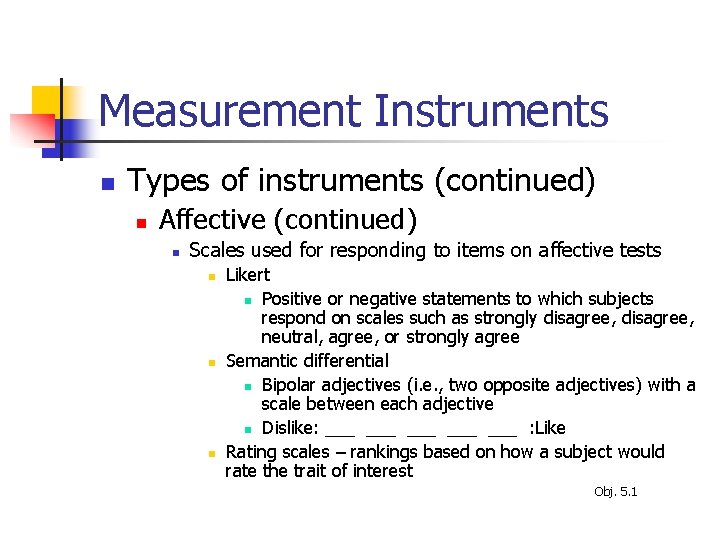

Measurement Instruments

- Types of instruments (continued)
  - Affective (continued)
    - Scales used for responding to items on affective tests
      - Likert
        - Positive or negative statements to which subjects respond on scales such as strongly disagree, disagree, neutral, agree, or strongly agree
      - Semantic differential
        - Bipolar adjectives (i.e., two opposite adjectives) with a scale between the two adjectives
        - Dislike: ___ ___ ___ : Like
      - Rating scales – rankings based on how a subject would rate the trait of interest

Obj. 5.1

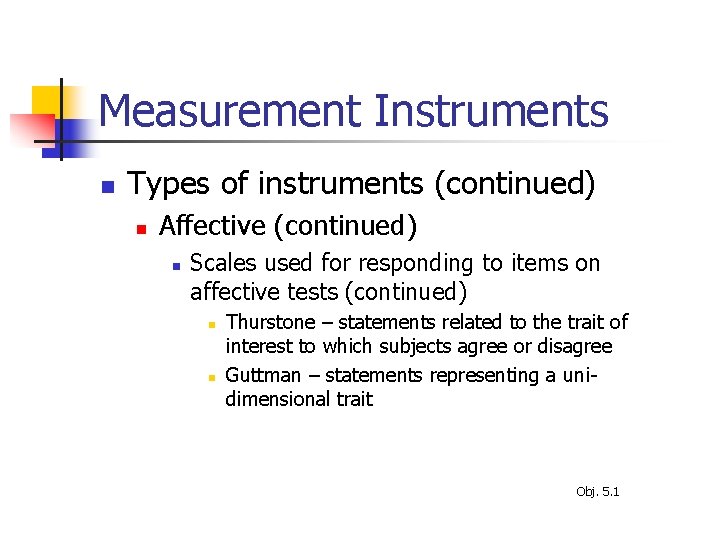

Measurement Instruments

- Types of instruments (continued)
  - Affective (continued)
    - Scales used for responding to items on affective tests (continued)
      - Thurstone – statements related to the trait of interest to which subjects agree or disagree
      - Guttman – statements representing a unidimensional trait

Obj. 5.1

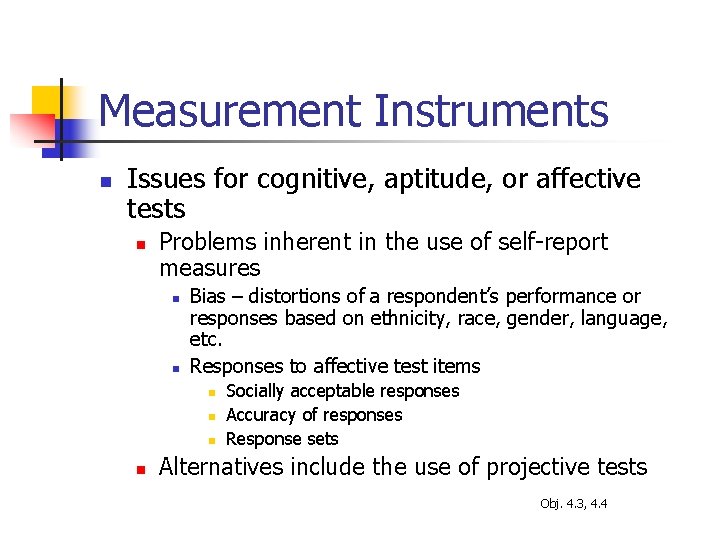

Measurement Instruments

- Issues for cognitive, aptitude, or affective tests
  - Problems inherent in the use of self-report measures
    - Bias – distortions of a respondent’s performance or responses based on ethnicity, race, gender, language, etc.
    - Responses to affective test items
      - Socially acceptable responses
      - Accuracy of responses
      - Response sets
  - Alternatives include the use of projective tests

Obj. 4.3, 4.4

Technical Issues

- Two concerns
  - Validity
  - Reliability

Technical Issues

- Validity – the extent to which interpretations made from a test score are appropriate
  - Characteristics
    - The most important technical characteristic
    - Situation specific
    - Does not refer to the instrument but to the interpretations of scores on the instrument
    - Best thought of in terms of degree

Obj. 6.1 & 7.1

Technical Issues

- Validity (continued)
  - Four types
    - Content – to what extent does the test measure what it is supposed to measure
      - Item validity
      - Sampling validity
      - Determined by expert judgment

Obj. 7.1 & 7.2

Technical Issues

- Validity (continued)
  - Criterion-related
    - Predictive – to what extent does the test predict a future performance
    - Concurrent – to what extent does the test predict a performance measured at the same time
    - Estimated by correlations between two tests
  - Construct – the extent to which a test measures the construct it represents
    - Underlying difficulty defining constructs
    - Estimated in many ways

Obj. 7.1, 7.3, & 7.4

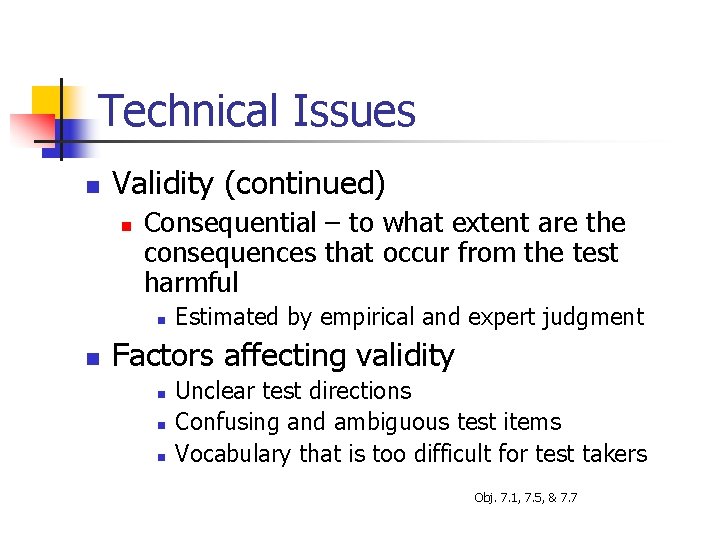

Technical Issues

- Validity (continued)
  - Consequential – to what extent are the consequences that occur from the test harmful
    - Estimated by empirical and expert judgment
  - Factors affecting validity
    - Unclear test directions
    - Confusing and ambiguous test items
    - Vocabulary that is too difficult for test takers

Obj. 7.1, 7.5, & 7.7

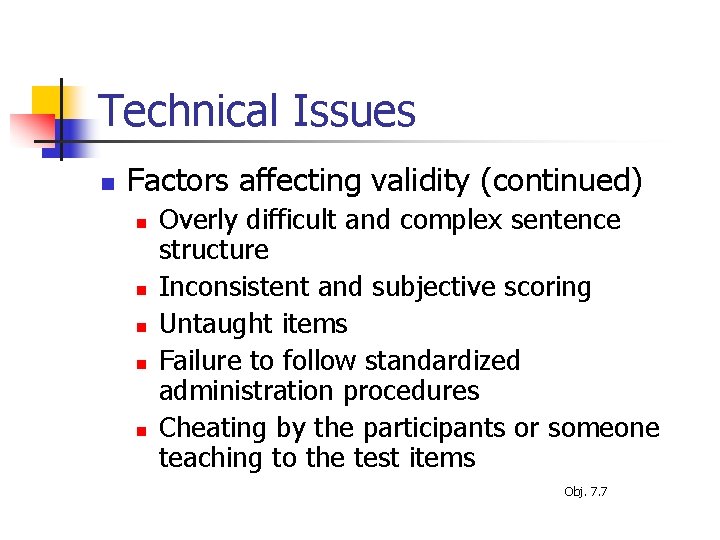

Technical Issues

- Factors affecting validity (continued)
  - Overly difficult and complex sentence structure
  - Inconsistent and subjective scoring
  - Untaught items
  - Failure to follow standardized administration procedures
  - Cheating by the participants or someone teaching to the test items

Obj. 7.7

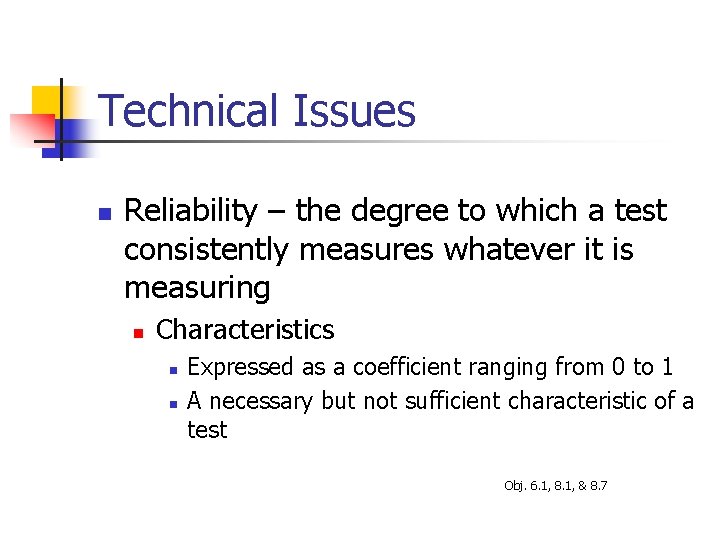

Technical Issues

- Reliability – the degree to which a test consistently measures whatever it is measuring
  - Characteristics
    - Expressed as a coefficient ranging from 0 to 1
    - A necessary but not sufficient characteristic of a test

Obj. 6.1, 8.1, & 8.7

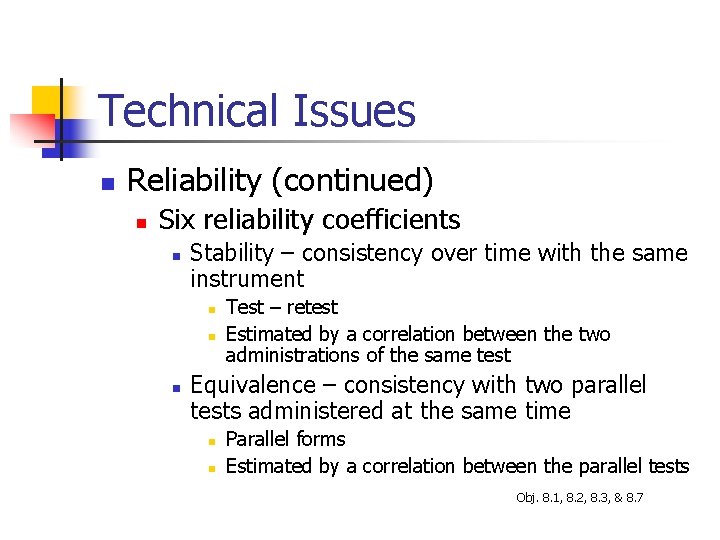

Technical Issues

- Reliability (continued)
  - Six reliability coefficients
    - Stability – consistency over time with the same instrument
      - Test-retest
      - Estimated by a correlation between the two administrations of the same test
    - Equivalence – consistency with two parallel tests administered at the same time
      - Parallel forms
      - Estimated by a correlation between the parallel tests

Obj. 8.1, 8.2, 8.3, & 8.7
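Both stability and equivalence are estimated with a correlation between two sets of scores from the same people. A minimal sketch, assuming the Pearson product-moment correlation is the coefficient intended (the slide says only "a correlation"); the helper name `pearson_r` and the scores are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two score lists of equal length (2 or more)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Made-up scores for five students on two administrations of one test.
first_administration = [12, 15, 9, 20, 17]
second_administration = [13, 14, 10, 19, 18]
print(round(pearson_r(first_administration, second_administration), 2))
```

For parallel forms, the same function applies with Form A scores in one list and Form B scores in the other.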

Technical Issues

- Reliability (continued)
  - Six reliability coefficients (continued)
    - Equivalence and stability – consistency over time with parallel forms of the test
      - Combines attributes of stability and equivalence
      - Estimated by a correlation between the parallel forms
    - Internal consistency – artificially splitting the test into halves
      - Several coefficients – split halves, KR-20, KR-21, Cronbach's alpha
      - All coefficients provide estimates ranging from 0 to 1

Obj. 8.1, 8.4, 8.5, & 8.7
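The internal consistency coefficients can be sketched from their standard textbook formulas. The function names and the tiny data set are hypothetical, and population variances are used throughout, which is one common convention rather than the only one.

```python
from statistics import pvariance


def cronbach_alpha(rows):
    """rows: one list of item scores per respondent."""
    k = len(rows[0])  # number of items
    item_variances = sum(pvariance(col) for col in zip(*rows))
    total_variance = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variances / total_variance)


def kr20(rows):
    """KR-20 for items scored 0 (wrong) or 1 (right)."""
    k = len(rows[0])
    n = len(rows)
    # p is the proportion answering an item correctly; q is 1 - p.
    sum_pq = sum((sum(col) / n) * (1 - sum(col) / n) for col in zip(*rows))
    total_variance = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum_pq / total_variance)


def spearman_brown(half_test_r):
    """Step a split-half correlation up to full-test length."""
    return 2 * half_test_r / (1 + half_test_r)


# Made-up right/wrong data: four respondents, three items.
answers = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(kr20(answers), cronbach_alpha(answers))
```

With right/wrong items and the same variance convention, KR-20 and Cronbach's alpha give the same value, which is why KR-20 is often described as a special case of alpha.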

Technical Issues

- Reliability (continued)
  - Six reliability coefficients (continued)
    - Scorer/rater – consistency of observations between raters
      - Inter-judge – two observers
      - Intra-judge – one judge over two occasions
      - Estimated by percent agreement between observations

Obj. 8.1, 8.6, & 8.7
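Percent agreement is simple enough to compute by hand, but a short sketch makes the definition explicit. The function name and the ratings are hypothetical; the same calculation serves inter-judge agreement (two raters) and intra-judge agreement (one rater on two occasions).

```python
def percent_agreement(ratings_a, ratings_b):
    """Percent of observations on which two sets of ratings match."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of equal length")
    matches = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return 100 * matches / len(ratings_a)


# Made-up grades assigned by two judges to the same four essays.
judge_1 = ["A", "B", "B", "C"]
judge_2 = ["A", "B", "C", "C"]
print(percent_agreement(judge_1, judge_2))
```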

Technical Issues

- Reliability (continued)
  - Six reliability coefficients (continued)
    - Standard error of measurement (SEM) – an estimate of how much difference there is between a person’s obtained score and his or her true score
      - Function of the variation of the test and the reliability coefficient (e.g., KR-20, Cronbach's alpha, etc.)
      - Estimated by specifying an interval rather than a point estimate of a person’s score

Obj. 8.1, 8.7, & 9.1
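The slide does not give the formula, so the sketch below assumes the standard one, SEM = SD × √(1 − reliability), and builds the interval as the obtained score plus or minus a number of SEMs. The function names and the numbers are hypothetical.

```python
from math import sqrt


def standard_error_of_measurement(test_sd, reliability):
    """SEM from the test's standard deviation and a reliability coefficient."""
    if not 0 <= reliability <= 1:
        raise ValueError("reliability must be between 0 and 1")
    return test_sd * sqrt(1 - reliability)


def score_band(obtained_score, sem, n_sems=1):
    """Interval around an obtained score; 1 SEM covers roughly 68%."""
    return (obtained_score - n_sems * sem, obtained_score + n_sems * sem)


# Made-up test: SD of 10 points, reliability of 0.91.
sem = standard_error_of_measurement(10, 0.91)
print(score_band(100, sem))
```

Higher reliability shrinks the SEM, so the band around a person's obtained score narrows as the test becomes more consistent.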

Selection of a Test

- Sources of test information
  - Mental Measurements Yearbook (MMY)
    - The reviews in MMY are most easily accessed through your university library and the services to which it subscribes (e.g., EBSCO)
    - Provides factual information on all known tests
    - Provides objective test reviews
    - Comprehensive bibliography for specific tests
    - Indices: titles, acronyms, subject, publishers, developers
    - Buros Institute

Obj. 10.1 & 12.1

Selection of a Test

- Sources (continued)
  - Tests in Print
    - Tests in Print is a subsidiary of the Buros Institute
    - The reviews in it are most easily accessed through your university library and the services to which it subscribes (e.g., EBSCO)
    - Bibliography of all known commercially produced tests currently available
    - Very useful to determine availability
    - Tests in Print

Obj. 10.1 & 12.1

Selection of a Test

- Sources (continued)
  - ETS Test Collection
    - Published and unpublished tests
    - Includes test title, author, publication date, target population, publisher, and description of purpose
    - Annotated bibliographies on achievement, aptitude, attitude and interests, personality, sensory motor, special populations, vocational/occupational, and miscellaneous
    - ETS Test Collection

Obj. 10.1 & 12.1

Selection of a Test

- Sources (continued)
  - Professional journals
  - Test publishers and distributors
- Issues to consider when selecting tests
  - Psychometric properties
    - Validity
    - Reliability
    - Length of test
    - Scoring and score interpretation

Obj. 10.1, 11.1, & 12.1

Selection of a Test

- Issues to consider when selecting tests
  - Non-psychometric issues
    - Cost
    - Administrative time
    - Objections to content by parents or others
    - Duplication of testing

Obj. 11.1

Selection of a Test

- Designing your own tests
  - Get help from others with experience in developing tests
  - Item writing guidelines
    - Avoid ambiguous and confusing wording and sentence structure
    - Use appropriate vocabulary
    - Write items that have only one correct answer
    - Give information about the nature of the desired answer
    - Do not provide clues to the correct answer
  - See Writing Multiple Choice Items

Obj. 11.2

Selection of a Test

- Test administration guidelines
  - Plan ahead
  - Be certain that there is consistency across testing sessions
  - Be familiar with any and all procedures necessary to administer a test

Obj. 11.4