Dynamic Warp Formation and Scheduling for Efficient GPU Control Flow

- Slides: 23

Dynamic Warp Formation and Scheduling for Efficient GPU Control Flow

Wilson W. L. Fung, Ivan Sham, George Yuan, Tor M. Aamodt
Electrical and Computer Engineering, University of British Columbia
Micro-40, Dec 5, 2007

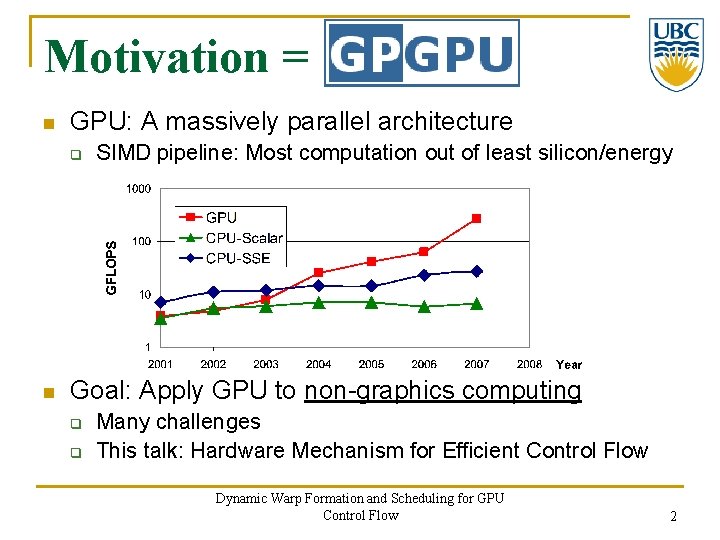

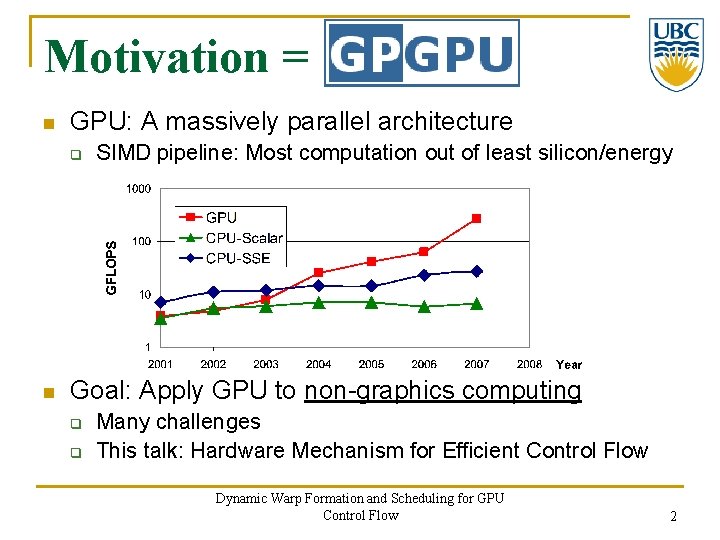

Motivation

- GPU: A massively parallel architecture
  - SIMD pipeline: Most computation out of least silicon/energy
- Goal: Apply GPU to non-graphics computing
  - Many challenges
  - This talk: Hardware Mechanism for Efficient Control Flow

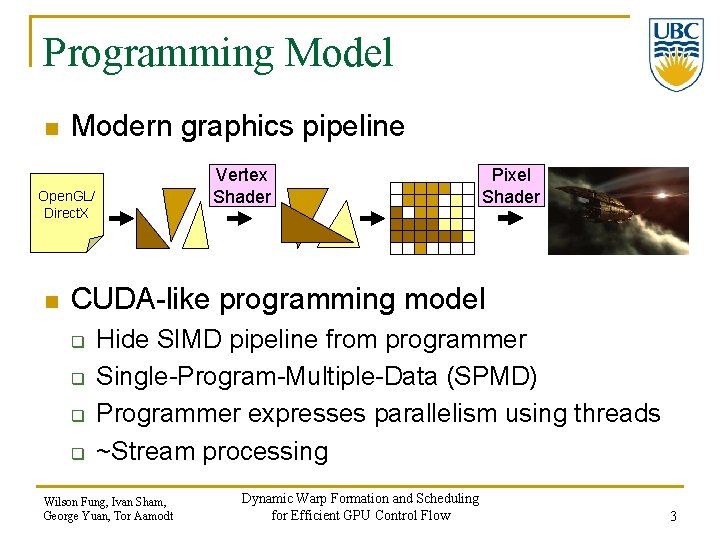

Programming Model

- Modern graphics pipeline (OpenGL/DirectX)
  - Vertex Shader
  - Pixel Shader
- CUDA-like programming model
  - Hide SIMD pipeline from programmer
  - Single-Program-Multiple-Data (SPMD)
  - Programmer expresses parallelism using threads
  - ~Stream processing

Programming Model

- Warp = Threads grouped into a SIMD instruction
- From Oxford Dictionary:
  - Warp: In the textile industry, the term “warp” refers to “the threads stretched lengthwise in a loom to be crossed by the weft”.

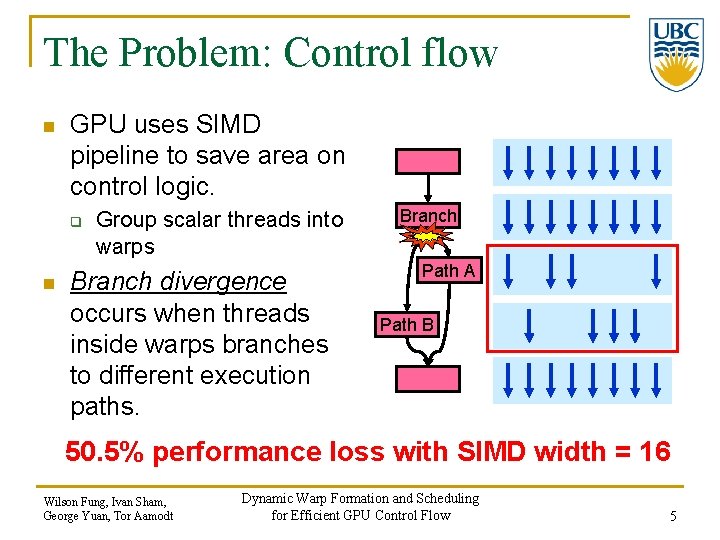

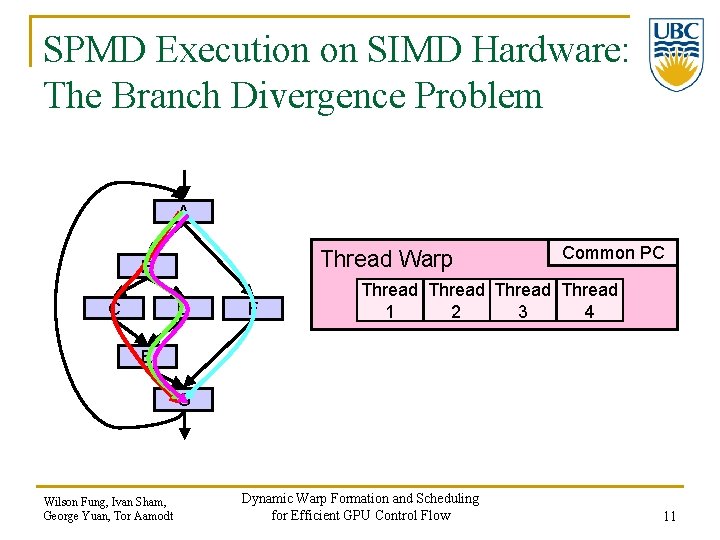

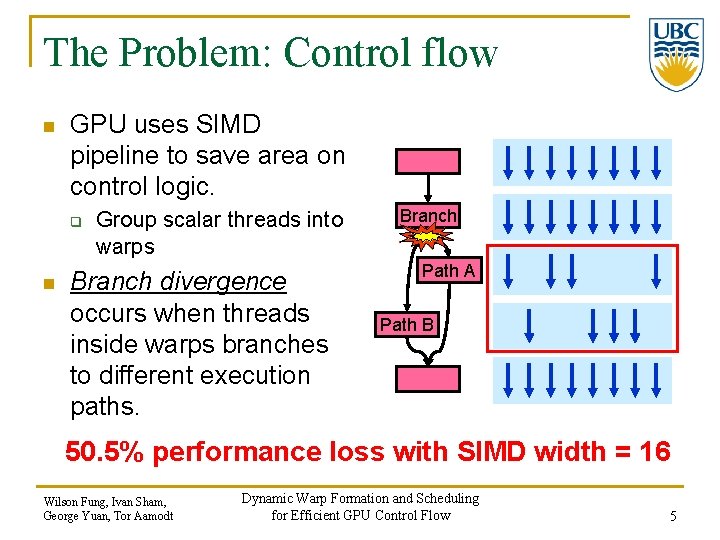

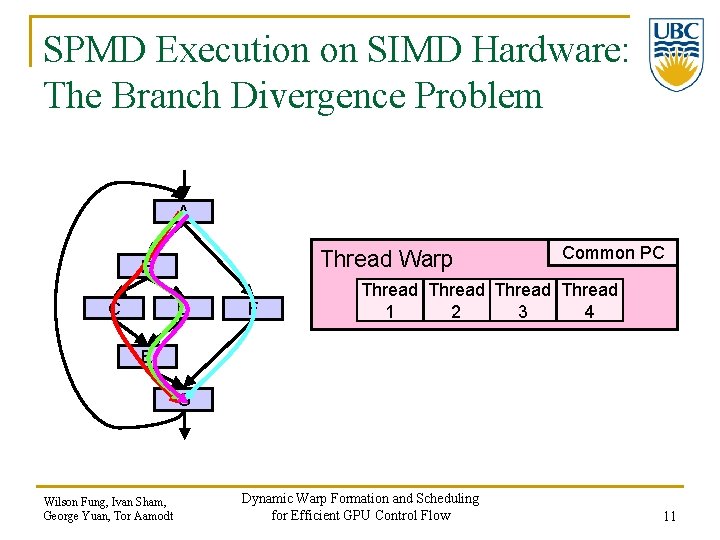

The Problem: Control flow

- GPU uses SIMD pipeline to save area on control logic.
  - Group scalar threads into warps
- Branch divergence occurs when threads inside a warp branch to different execution paths.
- 50.5% performance loss with SIMD width = 16

[Figure: a warp reaching a branch and splitting between Path A and Path B]
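To make the cost of divergence concrete, here is a minimal sketch that measures how many SIMD lanes do useful work when a warp is issued once per path. The function name `simd_utilization` and the 4-wide masks are illustrative assumptions, not taken from the slides; the 50.5% figure above is a measured result and is not reproduced by this toy calculation.

```python
def simd_utilization(active_masks):
    """Fraction of lane slots doing useful work over a list of issued warps.

    Each mask is a string such as "1100": one character per SIMD lane,
    "1" meaning the lane's thread is active for that issue.
    """
    width = len(active_masks[0])
    busy = sum(mask.count("1") for mask in active_masks)
    return busy / (width * len(active_masks))


# Hypothetical 4-wide warp: without divergence it issues once, fully occupied.
converged = ["1111"]
# With divergence it issues once per path, each time with half the lanes idle.
diverged = ["1100", "0011"]

print(simd_utilization(converged))
print(simd_utilization(diverged))
```

The same work takes two issue slots instead of one after the split, which is the loss the rest of the talk tries to recover.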

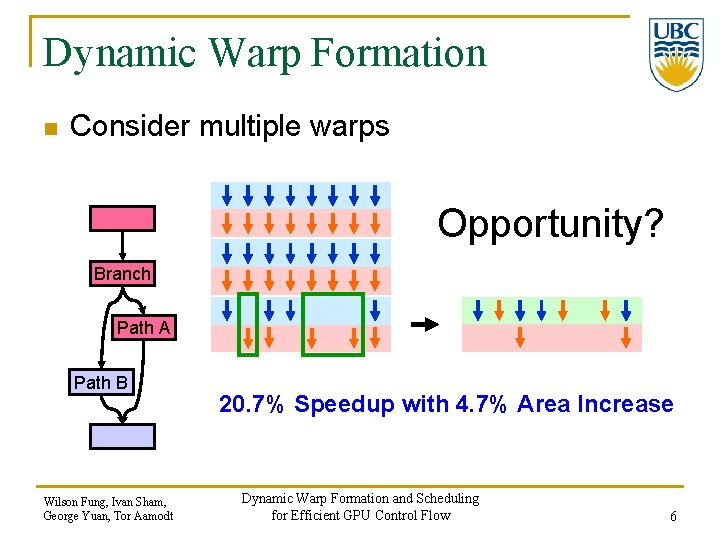

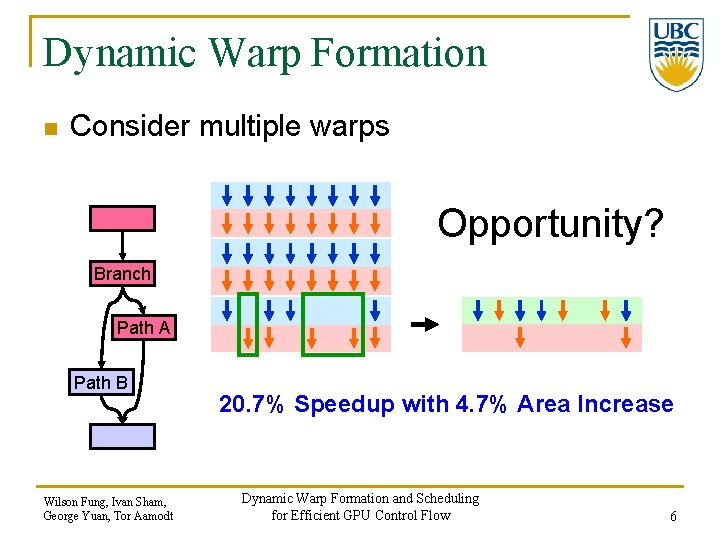

Dynamic Warp Formation

- Consider multiple warps: Opportunity?
- 20.7% Speedup with 4.7% Area Increase

[Figure: several warps reaching the same branch and splitting between Path A and Path B]

Outline

- Introduction
- Baseline Architecture
- Branch Divergence
- Dynamic Warp Formation and Scheduling
- Experimental Result
- Related Work
- Conclusion

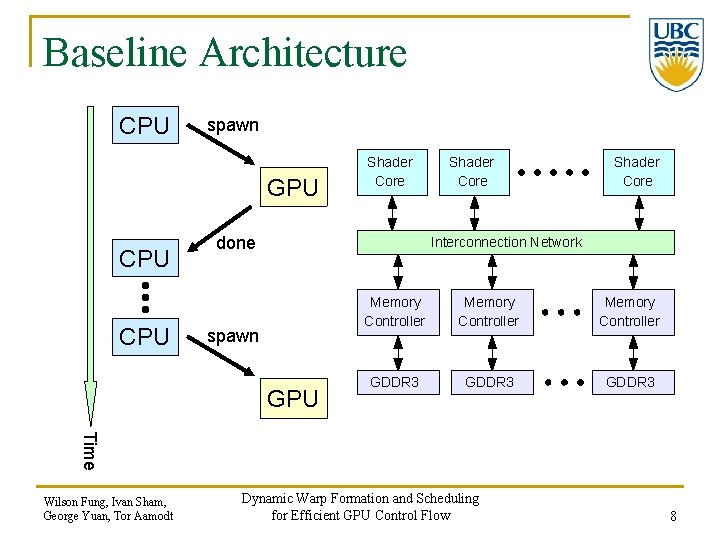

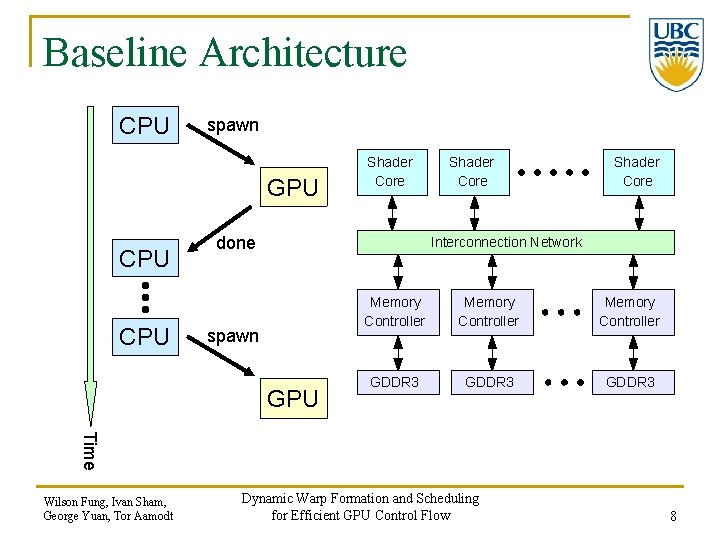

Baseline Architecture

[Figure: timeline in which the CPU spawns work on the GPU and continues once the GPU signals done; the GPU is drawn as shader cores connected through an interconnection network to memory controllers and GDDR3]

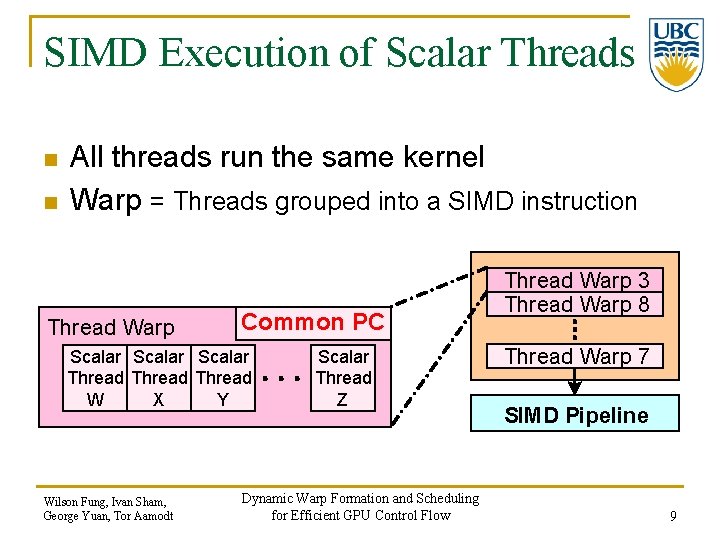

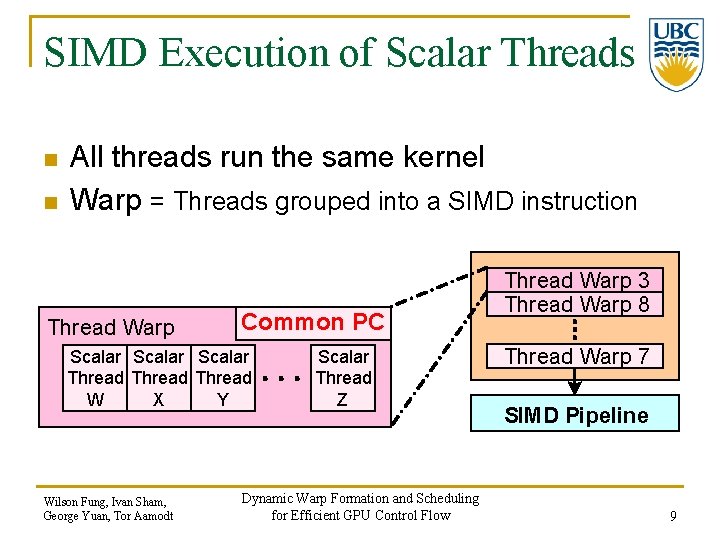

SIMD Execution of Scalar Threads

- All threads run the same kernel
- Warp = Threads grouped into a SIMD instruction

[Figure: scalar threads W, X, Y, Z grouped into one thread warp with a common PC; thread warps 3, 7, and 8 feed the SIMD pipeline]

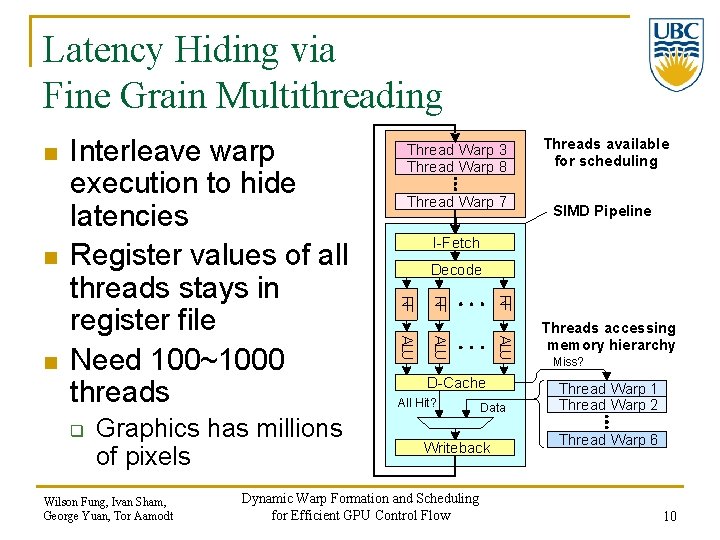

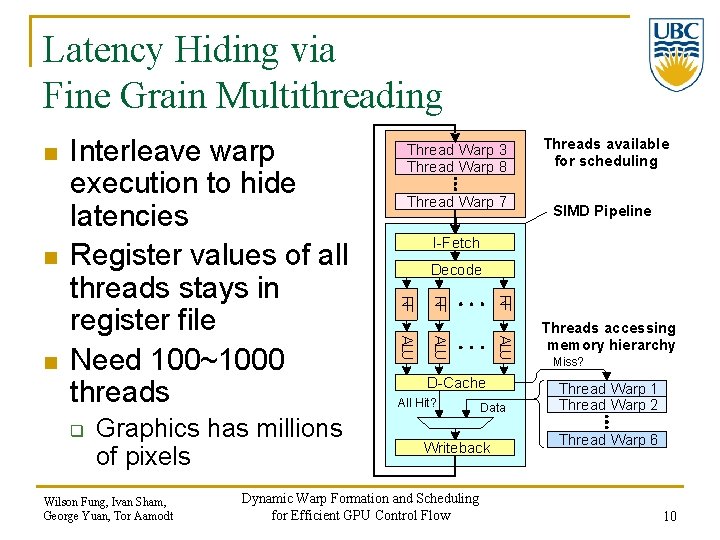

Latency Hiding via Fine Grain Multithreading

- Interleave warp execution to hide latencies
- Register values of all threads stay in the register file
- Need 100~1000 threads
  - Graphics has millions of pixels

[Figure: SIMD pipeline with I-Fetch, Decode, RF, ALU, D-Cache, and Writeback stages. Thread warps 3, 7, and 8 are available for scheduling. After the D-Cache, a warp whose accesses all hit proceeds to writeback; on a miss it joins the warps accessing the memory hierarchy (thread warps 1, 2, and 6).]
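The interleaving idea can be illustrated with a toy issue model. This is only a sketch under strong assumptions that are not in the slides: one warp issues per cycle, every instruction stalls its warp for a fixed `miss_latency`, and ready warps are picked round-robin. The name `idle_cycles` is hypothetical.

```python
def idle_cycles(num_warps, instrs_per_warp, miss_latency):
    """Count cycles in which no warp is ready to issue (toy model)."""
    ready_at = [0] * num_warps            # cycle at which each warp may issue again
    remaining = [instrs_per_warp] * num_warps
    cycle = idle = start = 0
    while any(remaining):
        for k in range(num_warps):        # round-robin search for a ready warp
            w = (start + k) % num_warps
            if remaining[w] and ready_at[w] <= cycle:
                remaining[w] -= 1
                ready_at[w] = cycle + 1 + miss_latency
                start = (w + 1) % num_warps
                break
        else:                             # nobody was ready: the pipeline idles
            idle += 1
        cycle += 1
    return idle


# One warp exposes the full latency after every instruction...
print(idle_cycles(num_warps=1, instrs_per_warp=4, miss_latency=3))
# ...while enough interleaved warps cover it.
print(idle_cycles(num_warps=4, instrs_per_warp=4, miss_latency=3))
```

In this model the stalls disappear once the number of warps exceeds the latency in cycles, which is the intuition behind needing hundreds to thousands of threads when real memory latencies are far longer.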

SPMD Execution on SIMD Hardware: The Branch Divergence Problem

[Figure: control flow graph with basic blocks A, B, C, D, E, F, G, executed by one thread warp (threads 1 to 4) sharing a common PC]

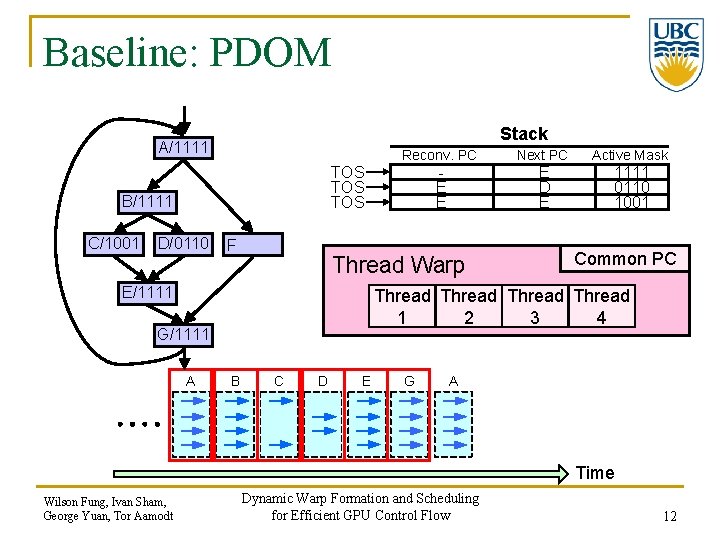

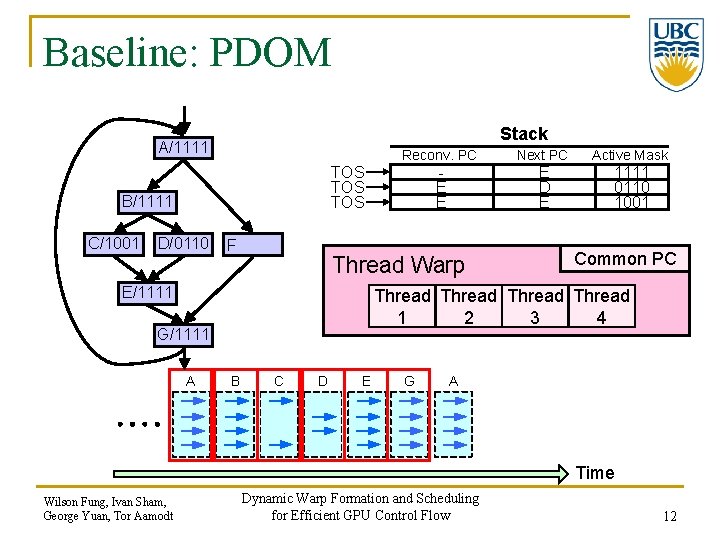

Baseline: PDOM Stack

[Figure: control flow graph annotated with active masks A/1111, B/1111, C/1001, D/0110, E/1111, G/1111 for one thread warp (threads 1 to 4, common PC). A per-warp stack with columns Reconv. PC, Next PC, and Active Mask, with the top of stack (TOS) marked, steps through the divergence at B and the reconvergence at E. The timeline shows the warp executing A, B, C, D, E, G, and then A again.]
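The stack mechanism on this slide can be sketched as a small simulation. This is an illustrative model, not the hardware described in the talk: the function `run_pdom`, the per-thread branch table `TAKEN`, and the assumption that the leftmost mask character is thread 1 are all mine. The masks (C/1001, D/0110) and the reconvergence point E come from the slide.

```python
def run_pdom(entry, exit_block, full_mask, next_block, ipdom):
    """Execute one warp with a reconvergence stack; return (block, mask) trace."""
    stack = [[entry, None, full_mask]]     # entries: [next PC, reconv. PC, mask]
    trace = []
    while stack:
        pc, rpc, mask = stack[-1]
        if pc == rpc:                      # reached the reconvergence point
            stack.pop()
            continue
        trace.append((pc, mask))
        if pc == exit_block:
            stack.pop()
            continue
        targets = {}                       # group active threads by branch target
        for t, bit in enumerate(mask):
            if bit == "1":
                targets.setdefault(next_block(pc, t), set()).add(t)
        if len(targets) == 1:              # no divergence: just advance the PC
            stack[-1][0] = next(iter(targets))
            continue
        rec = ipdom[pc]                    # immediate post-dominator of the branch
        stack[-1][0] = rec                 # this entry resumes after reconvergence
        for tgt in sorted(targets, reverse=True):
            sub = "".join("1" if i in targets[tgt] else "0" for i in range(len(mask)))
            stack.append([tgt, rec, sub])  # one entry per divergent path
    return trace


# Hypothetical per-thread successors matching the masks on the slide.
TAKEN = {"A": "BBBB", "B": "CDDC", "C": "EEEE", "D": "EEEE", "E": "GGGG"}
IPDOM = {"B": "E"}
trace = run_pdom("A", "G", "1111", lambda pc, t: TAKEN[pc][t], IPDOM)
print(trace)
```

The trace serializes C and D, each with half the lanes idle, before the full warp resumes at E.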

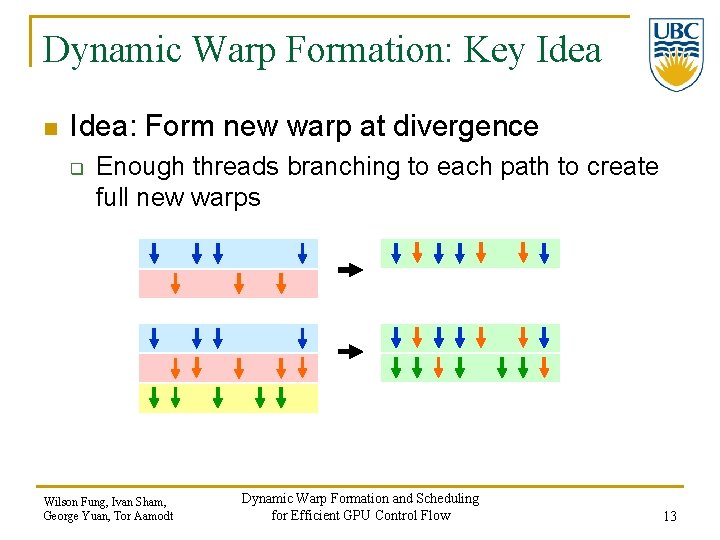

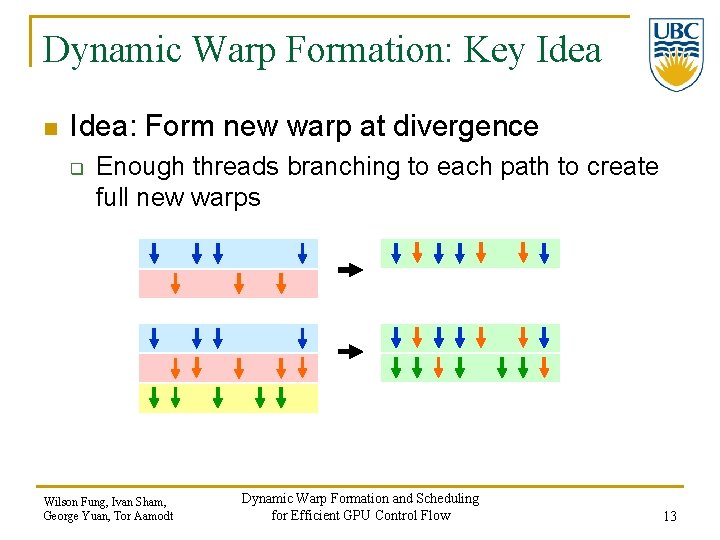

Dynamic Warp Formation: Key Idea

- Idea: Form new warp at divergence
  - Enough threads branching to each path to create full new warps

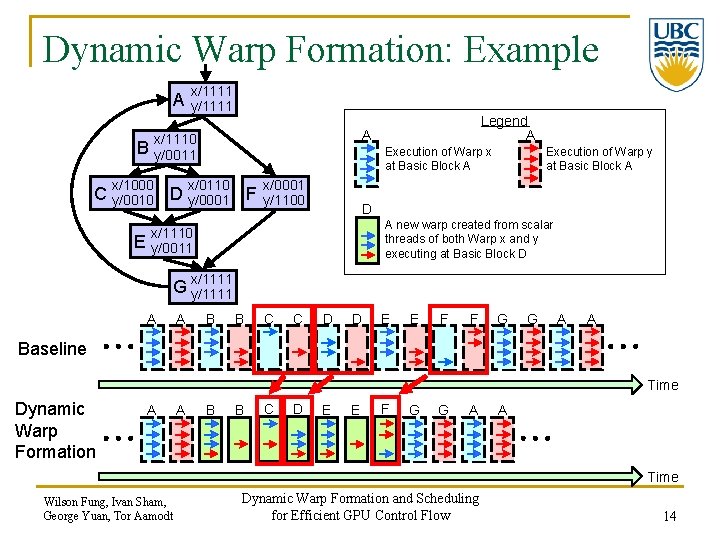

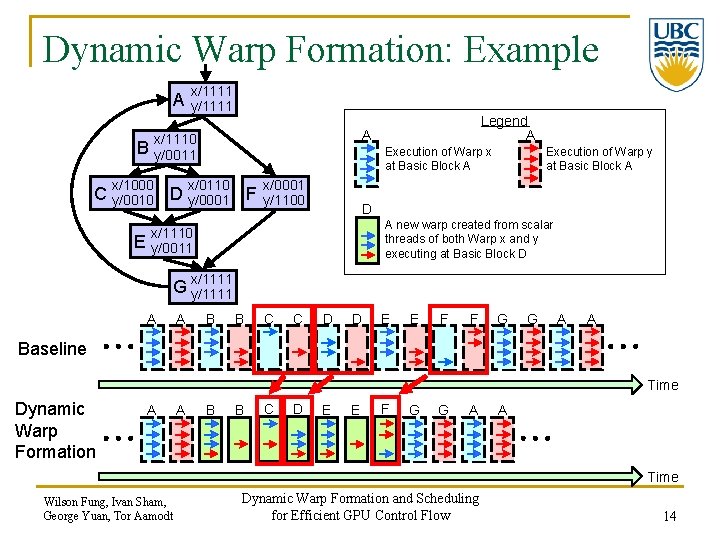

Dynamic Warp Formation: Example

[Figure: control flow graph with the active masks of two warps, x and y, at each basic block: A x/1111 y/1111, B x/1110 y/0011, C x/1000 y/0010, D x/0110 y/0001, E x/1110 y/0011, F x/0001 y/1100, G x/1111 y/1111. Legend: "Execution of Warp x at Basic Block A", "Execution of Warp y at Basic Block A", and "A new warp created from scalar threads of both Warp x and y executing at Basic Block D".]

- Baseline timeline: A A B B C C D D E E F F G G A A
- Dynamic Warp Formation timeline: A A B B C D E E F G G A A
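The two timelines can be checked with a short count of issue slots per basic block. This is a sketch under two assumptions of mine: the mask-to-block mapping is reconstructed from the slide text, and each thread must stay in its home SIMD lane, so a block needs as many warps as the largest number of threads competing for one lane. The helper names are hypothetical.

```python
# Active masks of warps x and y at each basic block, as read from the slide.
MASKS = {
    "A": ("1111", "1111"),
    "B": ("1110", "0011"),
    "C": ("1000", "0010"),
    "D": ("0110", "0001"),
    "E": ("1110", "0011"),
    "F": ("0001", "1100"),
    "G": ("1111", "1111"),
}


def baseline_issues(masks):
    """Every warp with at least one active thread issues separately."""
    return {blk: sum("1" in m for m in ms) for blk, ms in masks.items()}


def dwf_issues(masks):
    """Threads at the same block merge unless they need the same lane."""
    return {
        blk: max(sum(m[lane] == "1" for m in ms) for lane in range(len(ms[0])))
        for blk, ms in masks.items()
    }


base = baseline_issues(MASKS)
dwf = dwf_issues(MASKS)
print(sum(base.values()), sum(dwf.values()))
```

Blocks C, D, and F each collapse from two issues to one, while B and E still need two because both warps have an active thread in the third lane.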

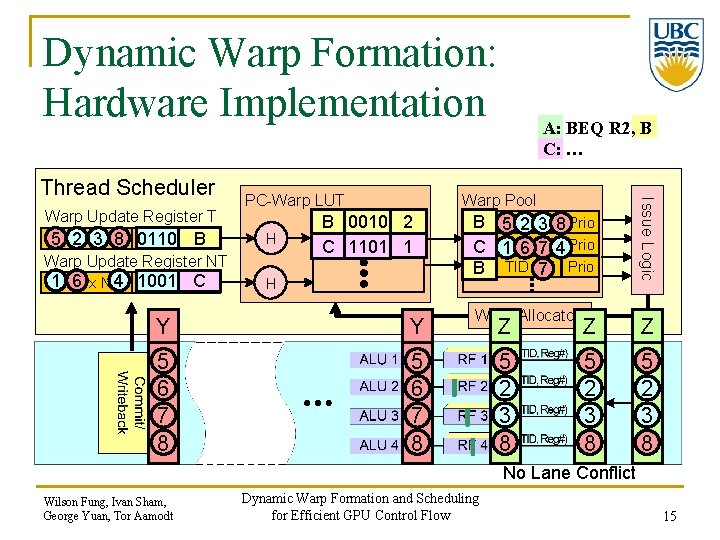

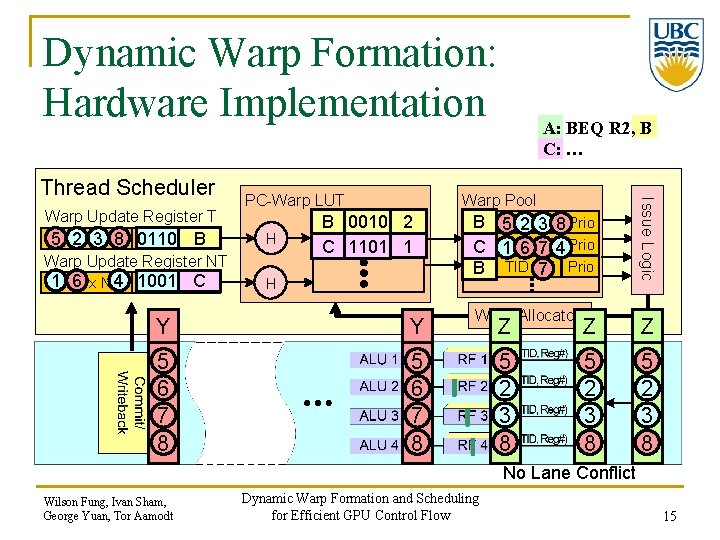

Dynamic Warp Formation: Hardware Implementation

[Figure: thread scheduler built from two Warp Update Registers (T for the taken path and NT for the not-taken path, each holding TID x N, REQ, and PC), a PC-Warp LUT (fields PC, OCC, IDX), a Warp Pool (fields TID x N, Prio, PC), a Warp Allocator, and Issue Logic. The animated example runs two warps, threads 1 to 4 and threads 5 to 8, through the branch "A: BEQ R2, B" with fall-through block C. The REQ vectors shown are 0110 and 1011 for target B, and 1001 and 0100 for target C; the LUT ends with OCC 0010 for B and OCC 1101 for C. The final frame is labelled "No Lane Conflict".]
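The lookup and merge step can be sketched in software as follows. This is my reading of the slide residue, not a confirmed description of the hardware: I assume that threads whose lanes are free merge into the warp the LUT points at, and that threads with a lane conflict are placed in a newly allocated warp, which the LUT entry then tracks. The class `ThreadScheduler` and its method names are hypothetical; the REQ and OCC values are the ones visible on the slide.

```python
class ThreadScheduler:
    """Toy PC-warp LUT plus warp pool; lane 0 is the leftmost mask bit."""

    def __init__(self, width=4):
        self.width = width
        self.lut = {}    # pc -> [occ, idx]: lanes taken in warp pool entry idx
        self.pool = []   # warp pool entries: {"pc": ..., "tids": [...]}

    def _allocate(self, pc):
        # Stand-in for the warp allocator: hand out an empty warp slot.
        self.pool.append({"pc": pc, "tids": [None] * self.width})
        return len(self.pool) - 1

    def _place(self, idx, lanes, tids):
        for lane in range(self.width):
            if (lanes >> (self.width - 1 - lane)) & 1:
                self.pool[idx]["tids"][lane] = tids[lane]

    def update(self, pc, req, tids):
        """Merge the threads selected by bit vector req into warps for pc."""
        if pc not in self.lut:
            self.lut[pc] = [0, self._allocate(pc)]
        occ, idx = self.lut[pc]
        free = req & ~occ                 # lanes that fit in the current warp
        clash = req & occ                 # lanes already occupied: lane conflict
        self._place(idx, free, tids)
        self.lut[pc][0] = occ | free
        if clash:                         # spill conflicting threads to a new warp
            new_idx = self._allocate(pc)
            self._place(new_idx, clash, tids)
            self.lut[pc] = [clash, new_idx]


sched = ThreadScheduler()
sched.update("B", 0b0110, [1, 2, 3, 4])   # first warp, taken path
sched.update("C", 0b1001, [1, 2, 3, 4])   # first warp, not-taken path
sched.update("B", 0b1011, [5, 6, 7, 8])   # second warp, taken path
sched.update("C", 0b0100, [5, 6, 7, 8])   # second warp, not-taken path
for warp in sched.pool:
    print(warp)
```

Under these assumptions thread 7 collides with thread 3 in the third lane and ends up alone in a new warp, which is consistent with the OCC values 0010 (block B) and 1101 (block C) visible in the slide.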

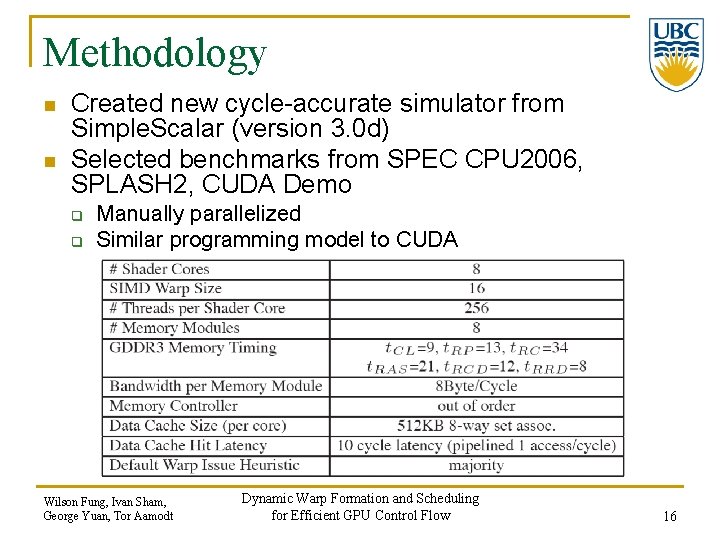

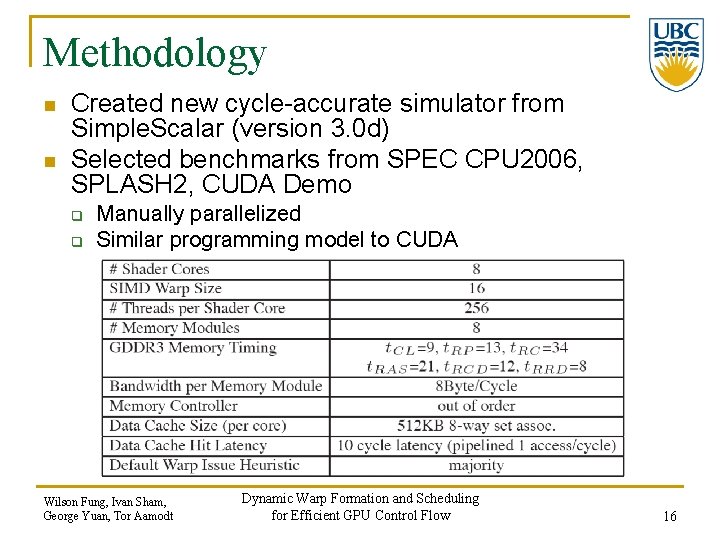

Methodology

- Created new cycle-accurate simulator from SimpleScalar (version 3.0d)
- Selected benchmarks from SPEC CPU2006, SPLASH2, CUDA Demo
  - Manually parallelized
  - Similar programming model to CUDA

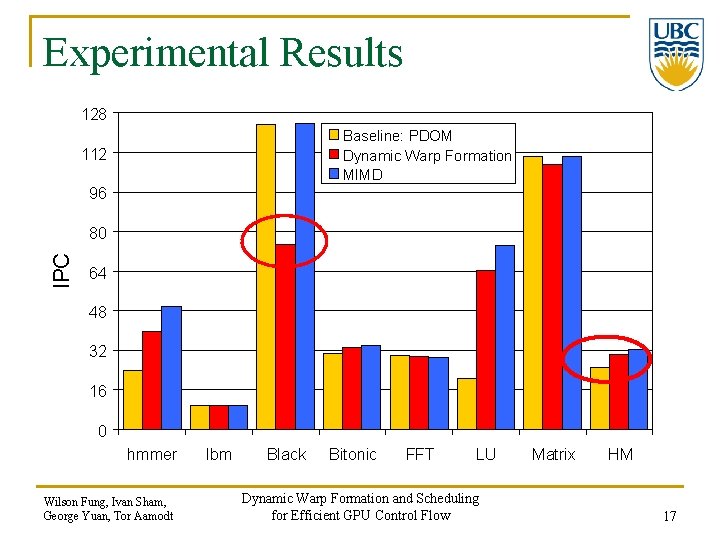

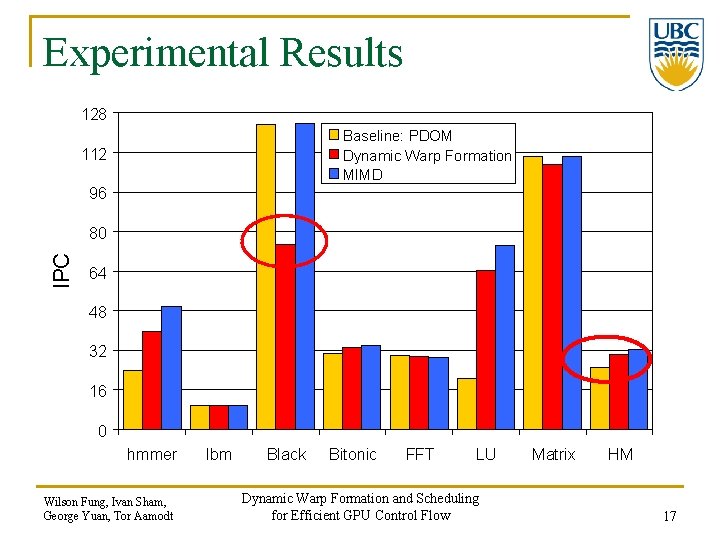

Experimental Results

[Figure: bar chart of IPC (axis from 0 to 128) comparing Baseline: PDOM, Dynamic Warp Formation, and MIMD on hmmer, lbm, Black, Bitonic, FFT, LU, and Matrix, plus HM]

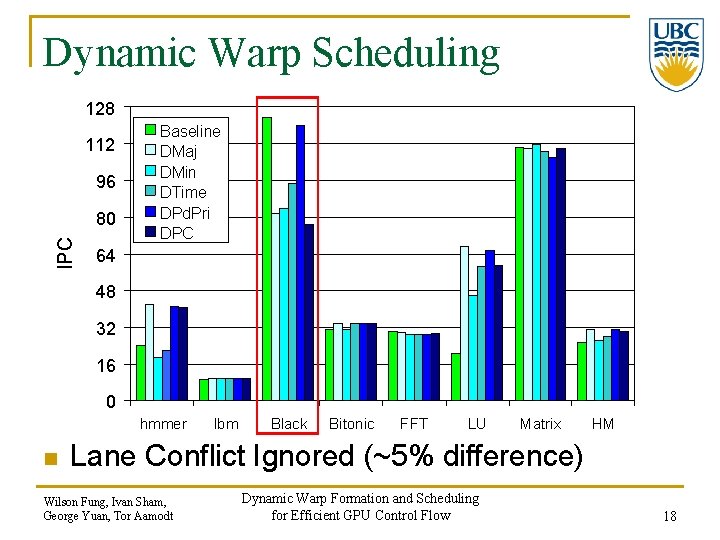

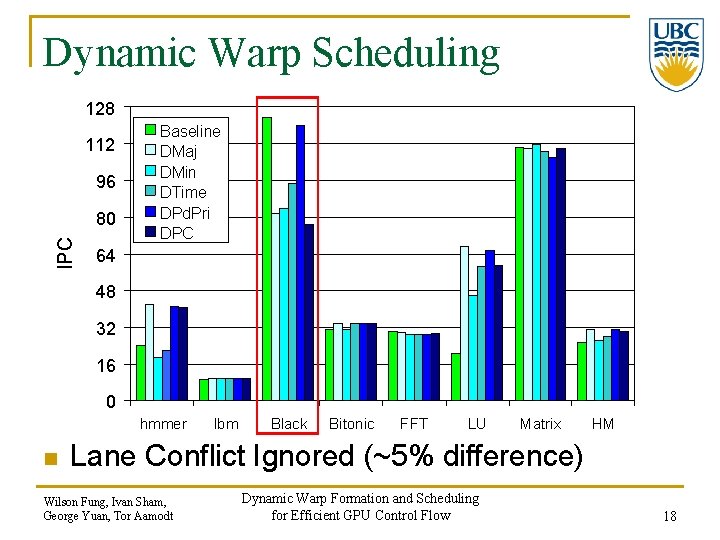

Dynamic Warp Scheduling

[Figure: bar chart of IPC (axis from 0 to 128) comparing Baseline, DMaj, DMin, DTime, DPdPri, and DPC on hmmer, lbm, Black, Bitonic, FFT, LU, and Matrix, plus HM]

- Lane Conflict Ignored (~5% difference)

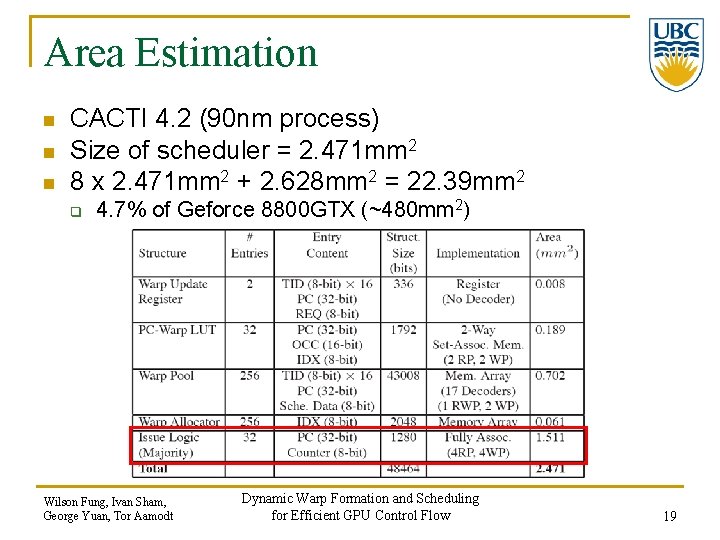

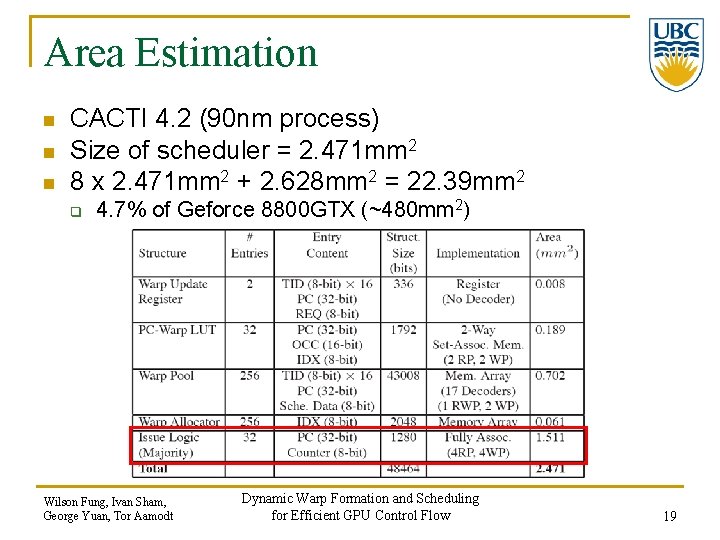

Area Estimation

- CACTI 4.2 (90 nm process)
- Size of scheduler = 2.471 mm²
- 8 x 2.471 mm² + 2.628 mm² = 22.39 mm²
  - 4.7% of GeForce 8800 GTX (~480 mm²)
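The arithmetic on this slide can be reproduced directly. The variable names below are mine; the slide does not say what the 2.628 mm² term covers, so it is carried through unlabeled.

```python
scheduler_mm2 = 2.471      # size of one scheduler, from the slide
copies = 8                 # multiplier used on the slide
extra_mm2 = 2.628          # second term on the slide (not labelled there)
die_mm2 = 480.0            # approximate GeForce 8800 GTX die area, from the slide

total_mm2 = copies * scheduler_mm2 + extra_mm2
share = 100.0 * total_mm2 / die_mm2

print(f"total = {total_mm2:.3f} mm^2")   # the slide truncates this to 22.39
print(f"share = {share:.1f}% of the die")
```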

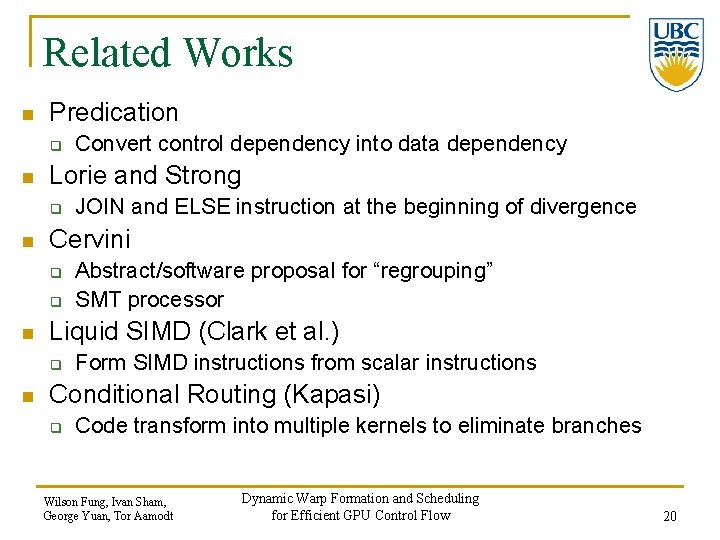

Related Works

- Predication
  - Convert control dependency into data dependency
- Lorie and Strong
  - JOIN and ELSE instruction at the beginning of divergence
- Cervini
  - Abstract/software proposal for “regrouping”
  - SMT processor
- Liquid SIMD (Clark et al.)
  - Form SIMD instructions from scalar instructions
- Conditional Routing (Kapasi)
  - Code transform into multiple kernels to eliminate branches

Conclusion

- Branch divergence can significantly degrade a GPU’s performance.
  - 50.5% performance loss with SIMD width = 16
- Dynamic Warp Formation & Scheduling
  - 20.7% on average better than reconvergence
  - 4.7% area cost
- Future Work
  - Warp scheduling: Area and Performance Tradeoff

Thank You. Questions?

Shared Memory

- Banked local memory accessible by all threads within a shader core (a block)
- Idea: Break Ld/St into 2 micro-code:
  - Address Calculation
  - Memory Access
- After address calculation, use bit vector to track bank access just like lane conflict in the scheduler
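The bit-vector idea for bank tracking can be sketched like this. It is a minimal illustration, not the proposed hardware: the word-interleaved bank mapping, the 4-byte word size, and the function name `schedule_bank_accesses` are assumptions of mine.

```python
def schedule_bank_accesses(addresses, num_banks, word_bytes=4):
    """Split one warp's memory accesses into bank-conflict-free passes.

    addresses holds one byte address per lane (None for an inactive lane).
    Each pass keeps a bit vector of the banks it already uses, in the same
    way the scheduler tracks occupied lanes.
    """
    passes = []                                  # entries: [bank_bits, lanes]
    for lane, addr in enumerate(addresses):
        if addr is None:
            continue
        bank = (addr // word_bytes) % num_banks  # assumed word-interleaved banks
        for entry in passes:
            if not (entry[0] >> bank) & 1:       # bank still free in this pass
                entry[0] |= 1 << bank
                entry[1].append(lane)
                break
        else:                                    # conflict in every pass so far
            passes.append([1 << bank, [lane]])
    return [lanes for _, lanes in passes]


# Consecutive words hit four different banks: a single pass suffices.
print(schedule_bank_accesses([0, 4, 8, 12], num_banks=4))
# Lanes 0 and 1 share bank 0, lanes 2 and 3 share bank 1: two passes.
print(schedule_bank_accesses([0, 16, 4, 20], num_banks=4))
```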