Data-Centric System Design CS 6501: Fundamental Concepts: Computing Models

- Slides: 37

Data-Centric System Design, CS 6501. Fundamental Concepts: Computing Models. Samira Khan, University of Virginia, Sep 4, 2019. The content and concept of this course are adapted from CMU ECE 740.

Review Set 2

- Due Sep 11
- Choose 2 from a set of four:
  - Dennis and Misunas, “A Preliminary Architecture for a Basic Data Flow Processor,” ISCA 1974.
  - Arvind and Nikhil, “Executing a Program on the MIT Tagged-Token Dataflow Architecture,” IEEE TC 1990.
  - H. T. Kung, “Why Systolic Architectures?” IEEE Computer 1982.
  - Annaratone et al., “Warp Architecture and Implementation,” ISCA 1986.

AGENDA

- Logistics
- Review from last lecture
- Fundamental concepts
  - Computing models

THE VON NEUMANN MODEL/ARCHITECTURE

- Also called the stored program computer (instructions in memory). Two key properties:
- Stored program
  - Instructions are stored in a linear memory array
  - Memory is unified between instructions and data
    - The interpretation of a stored value depends on the control signals. When is a value interpreted as an instruction?
- Sequential instruction processing
  - One instruction is processed (fetched, executed, and completed) at a time
  - The program counter (instruction pointer) identifies the current instruction
  - The program counter is advanced sequentially except for control transfer instructions
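A minimal sketch of the two von Neumann properties, assuming a hypothetical toy ISA (`LOAD`, `ADD`, `STORE`, `JUMP`, `HALT` acting on a single accumulator) that is not from the slides. Instructions and data live in the same Python list, a cell is treated as an instruction only when the program counter fetches it, and the PC advances sequentially unless a `JUMP` transfers control.

```python
def run(memory, pc=0, max_steps=1000):
    """Fetch-execute loop for a hypothetical toy stored-program machine.

    `memory` is one list holding both instructions, as (opcode, operand)
    tuples, and plain integer data. A cell is treated as an instruction only
    because the program counter points at it when it is fetched.
    """
    acc = 0                                  # single accumulator register
    for _ in range(max_steps):
        op, arg = memory[pc]                 # fetch: interpret memory[pc] as an instruction
        pc += 1                              # default: advance sequentially
        if op == "LOAD":
            acc = memory[arg]                # the addressed cell is interpreted as data
        elif op == "ADD":
            acc += memory[arg]
        elif op == "STORE":
            memory[arg] = acc
        elif op == "JUMP":
            pc = arg                         # control transfer overrides the sequential PC
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r}")
    raise RuntimeError("step limit reached")


# Instructions (cells 0-5) and data (cells 6-8) share the same memory array.
program = [
    ("LOAD", 6),    # 0: acc = mem[6]
    ("JUMP", 3),    # 1: skip cell 2
    ("HALT", 0),    # 2: never reached
    ("ADD", 7),     # 3: acc += mem[7]
    ("STORE", 8),   # 4: mem[8] = acc
    ("HALT", 0),    # 5
    3, 4, 0,        # 6-8: data
]
print(run(program)[8])  # 7
```

If the PC ever reached a data cell, the machine would try to decode an integer as an instruction (here it raises a `TypeError`), which is the flip side of a unified memory.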

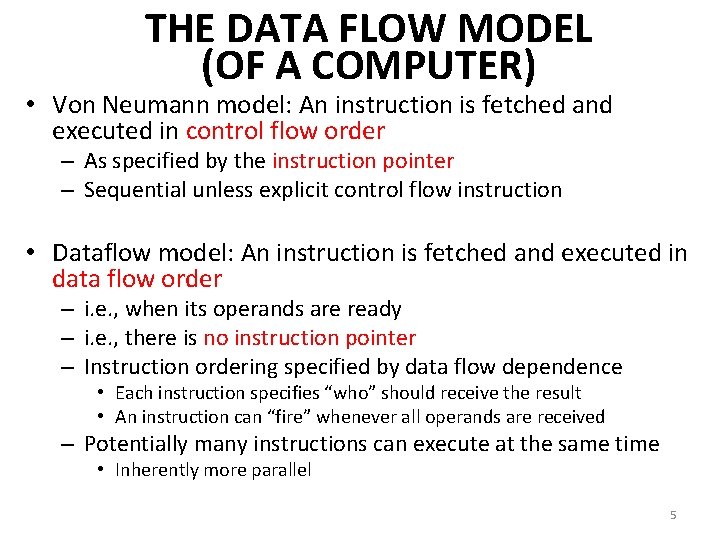

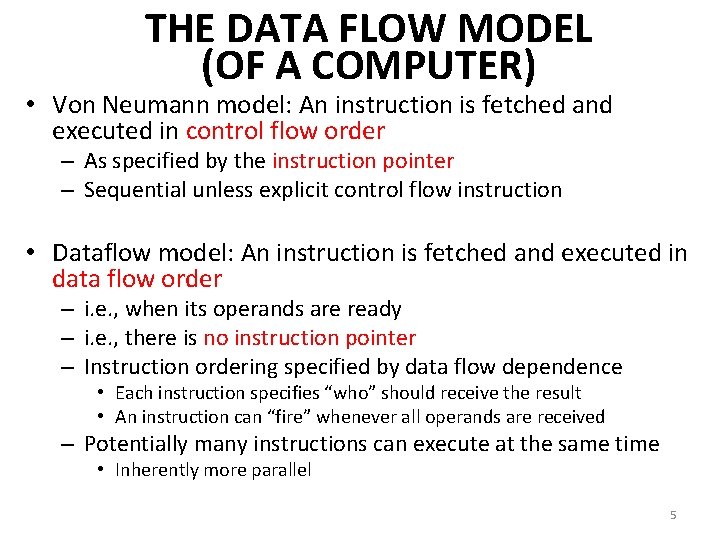

THE DATA FLOW MODEL (OF A COMPUTER)

- Von Neumann model: an instruction is fetched and executed in control flow order
  - As specified by the instruction pointer
  - Sequential unless there is an explicit control flow instruction
- Dataflow model: an instruction is fetched and executed in data flow order
  - i.e., when its operands are ready
  - i.e., there is no instruction pointer
  - Instruction ordering is specified by data flow dependence
    - Each instruction specifies “who” should receive the result
    - An instruction can “fire” whenever all its operands are received
  - Potentially many instructions can execute at the same time
    - Inherently more parallel

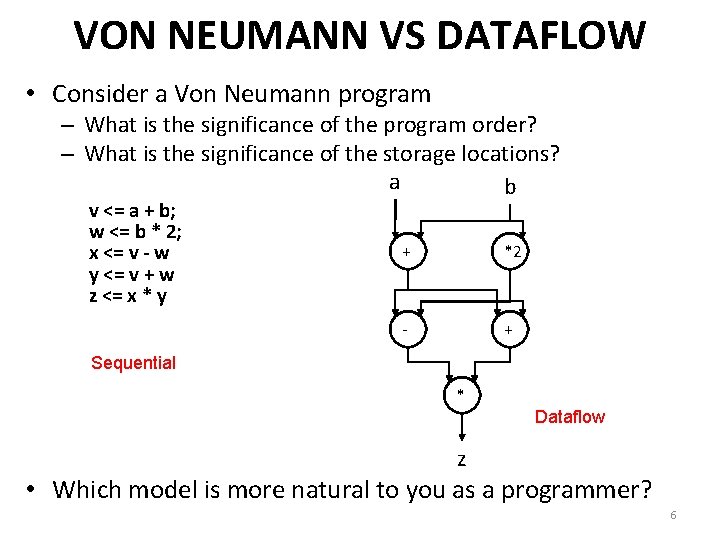

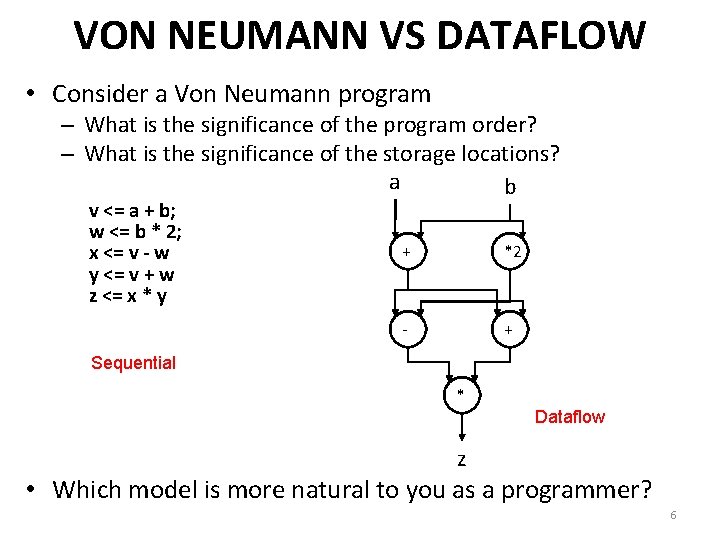

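VON NEUMANN VS DATAFLOW

- Consider a Von Neumann program
  - What is the significance of the program order?
  - What is the significance of the storage locations?

Sequential program: `v <= a + b;  w <= b * 2;  x <= v - w;  y <= v + w;  z <= x * y;`

[Figure: the sequential program next to its dataflow graph: inputs a and b feed a “+” node (v) and a “*2” node (w); v and w feed a “-” node (x) and a “+” node (y); x and y feed a “*” node that produces z.]

- Which model is more natural to you as a programmer?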
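To make the contrast concrete, here is a small Python sketch (the names `GRAPH`, `run_sequential`, and `run_dataflow` are illustrative, not from the course) that evaluates the slide's program twice: once in program order, and once by firing each node as soon as all of its operands exist. Both give the same `z`; the dataflow version also reports which nodes were ready together.

```python
import operator

# The slide's five statements as dataflow nodes: result name -> (operation, input names).
# The inputs a and b are supplied from outside the graph.
GRAPH = {
    "v": (operator.add, ("a", "b")),
    "w": (lambda b: b * 2, ("b",)),
    "x": (operator.sub, ("v", "w")),
    "y": (operator.add, ("v", "w")),
    "z": (operator.mul, ("x", "y")),
}


def run_sequential(a, b):
    """Von Neumann order: each statement runs after the previous one."""
    v = a + b
    w = b * 2
    x = v - w
    y = v + w
    z = x * y
    return z


def run_dataflow(a, b):
    """Fire every node whose operands are all available, one "wave" at a time.

    Returns z and the list of waves; nodes in the same wave had all their
    operands at the same moment, so they could execute in parallel.
    """
    values = {"a": a, "b": b}
    pending = dict(GRAPH)
    waves = []
    while pending:
        ready = [name for name, (_, ins) in pending.items()
                 if all(i in values for i in ins)]
        if not ready:
            raise RuntimeError("no node has all of its operands")
        for name in ready:
            fn, ins = pending.pop(name)
            values[name] = fn(*(values[i] for i in ins))
        waves.append(sorted(ready))
    return values["z"], waves


print(run_sequential(3, 1))  # 12
print(run_dataflow(3, 1))    # (12, [['v', 'w'], ['x', 'y'], ['z']])
```

Program order fixes a single sequence of five steps, while the dataflow graph needs only three waves, because `v`/`w` and `x`/`y` are independent of each other.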

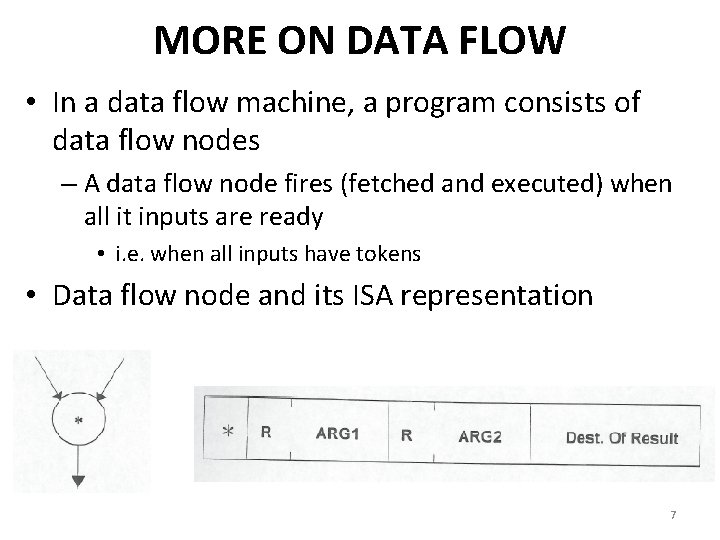

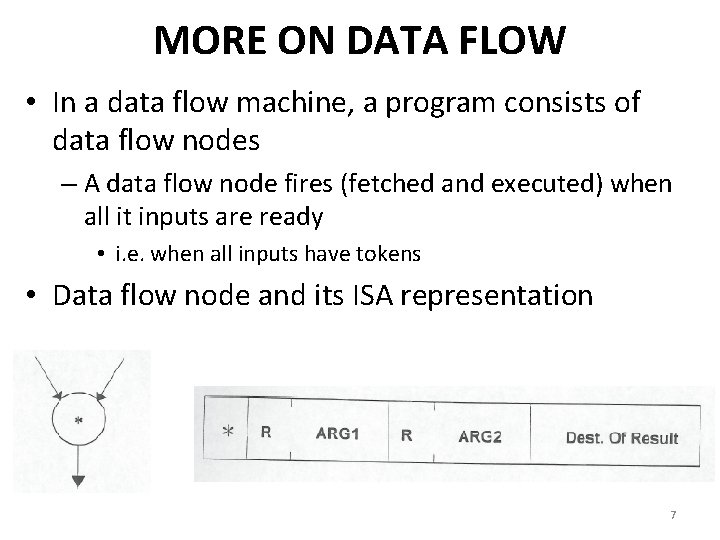

MORE ON DATA FLOW

- In a data flow machine, a program consists of data flow nodes
  - A data flow node fires (is fetched and executed) when all its inputs are ready
    - i.e., when all inputs have tokens
- Data flow node and its ISA representation

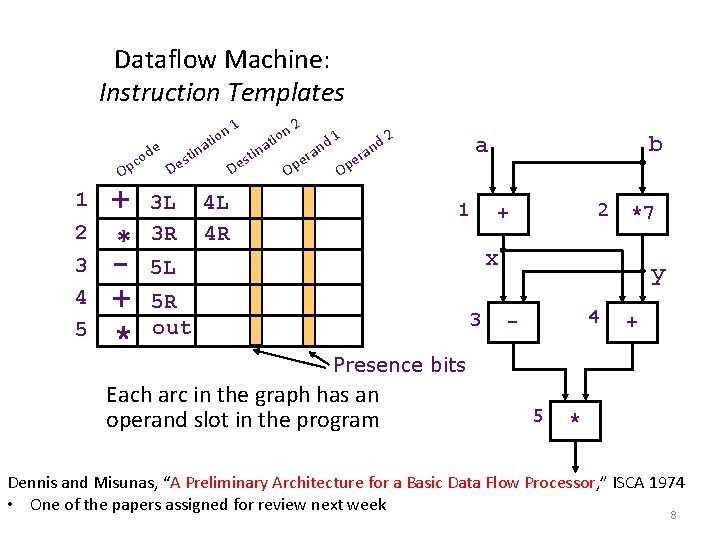

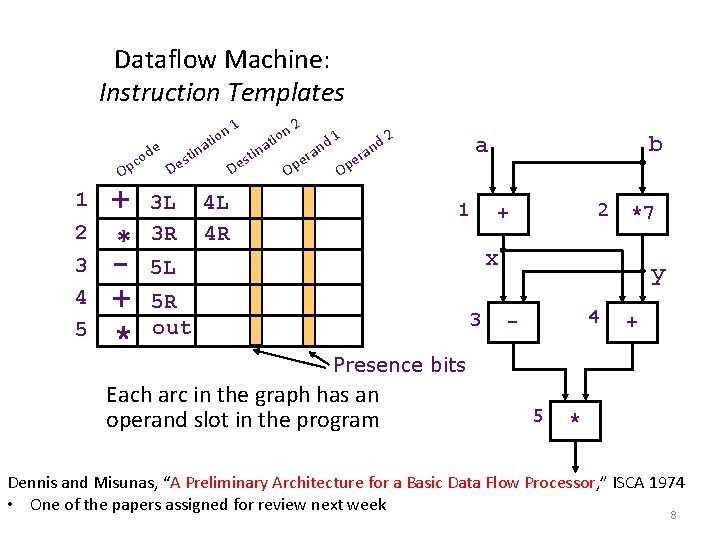

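Dataflow Machine: Instruction Templates

[Figure: dataflow graph: inputs a and b feed node 1 (+) and node 2 (*7), whose results (labeled x and y) feed node 3 (-) and node 4 (+); nodes 3 and 4 feed node 5 (*), which produces out.]

| Template | Opcode | Destination 1 | Destination 2 | Operand 1 | Operand 2 |
|---|---|---|---|---|---|
| 1 | + | 3L | 4L | | |
| 2 | * | 3R | 4R | | |
| 3 | - | 5L | | | |
| 4 | + | 5R | | | |
| 5 | * | out | | | |

Each operand slot has a presence bit. Each arc in the graph has an operand slot in the program.

Dennis and Misunas, “A Preliminary Architecture for a Basic Data Flow Processor,” ISCA 1974.

- One of the papers assigned for review next week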
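The following sketch mimics the instruction templates on this slide under stated assumptions: node 2 multiplies its input by the constant 7 (as its `*7` label suggests), tokens are `(template id, port, value)` triples, and the `Template` class and the `make_program` and `run` functions are hypothetical. A filled entry in `operands` stands in for a set presence bit; a template fires when all of its presence bits are set, and a second token arriving at an occupied slot is reported as an error, reflecting the one-token-per-arc storage of the static machine.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Template:
    """One instruction template: an operation, its destinations, and operand slots.

    A key present in `operands` plays the role of a set presence bit.
    """
    op: object
    dests: list
    arity: int = 2
    operands: dict = field(default_factory=dict)


def make_program():
    # Templates 1-5; node 2 is assumed to multiply by the constant 7.
    return {
        1: Template(lambda lhs, rhs: lhs + rhs, [(3, "L"), (4, "L")]),
        2: Template(lambda v: v * 7, [(3, "R"), (4, "R")], arity=1),
        3: Template(lambda lhs, rhs: lhs - rhs, [(5, "L")]),
        4: Template(lambda lhs, rhs: lhs + rhs, [(5, "R")]),
        5: Template(lambda lhs, rhs: lhs * rhs, ["out"]),
    }


def run(program, tokens):
    """Deliver tokens (template id, port, value); fire templates whose slots are full."""
    queue = deque(tokens)
    outputs, fired = [], []
    while queue:
        tid, port, value = queue.popleft()
        t = program[tid]
        if port in t.operands:
            # One token per arc: a second token would overwrite the first.
            raise RuntimeError(f"operand slot {tid}{port} is already full")
        t.operands[port] = value                       # set the presence bit
        if len(t.operands) < t.arity:
            continue                                   # still waiting for an operand
        args = [t.operands[p] for p in ("L", "R")[:t.arity]]
        t.operands.clear()                             # clear presence bits for reuse
        result = t.op(*args)
        fired.append(tid)
        for dest in t.dests:
            if dest == "out":
                outputs.append(result)
            else:
                queue.append((dest[0], dest[1], result))
    return outputs, fired


# a = 3 goes to 1L; b = 1 goes to 1R and 2L.
print(run(make_program(), [(1, "L", 3), (1, "R", 1), (2, "L", 1)]))
# ([-33], [1, 2, 3, 4, 5])
```

With a = 3 and b = 1 the graph computes (3 + 1 - 7) * (3 + 1 + 7) = -33.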

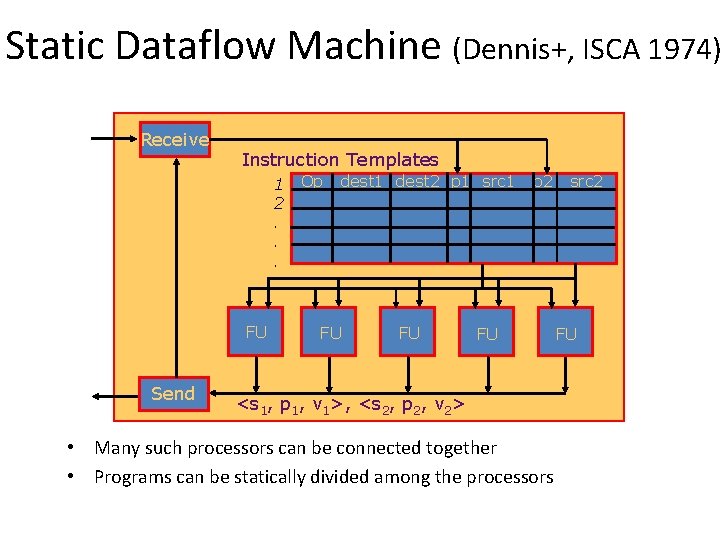

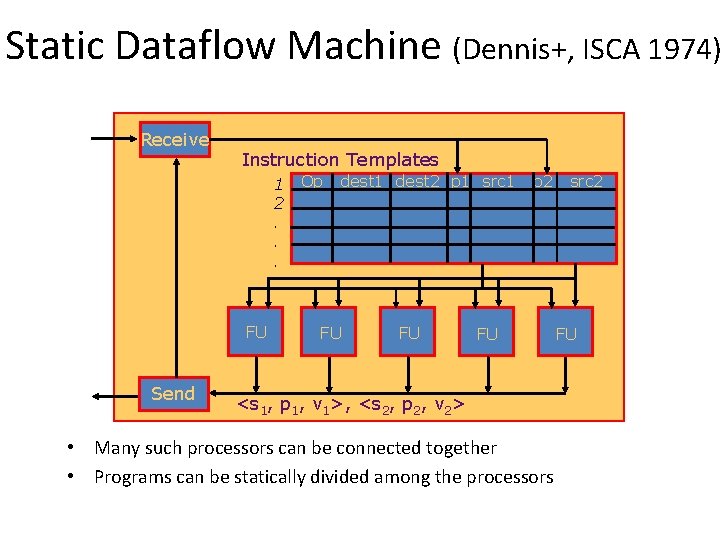

Static Dataflow Machine (Dennis+, ISCA 1974)

[Figure: Receive, Instruction Templates (1, 2, …; fields Op, dest 1, dest 2, p1, src 1, p2, src 2), functional units (FU), and Send; result tokens are labeled <s1, p1, v1>, <s2, p2, v2>.]

- Many such processors can be connected together
- Programs can be statically divided among the processors

Static Data Flow Machines

- Mismatch between the model and the implementation
  - The model requires unbounded FIFO token queues per arc, but the architecture provides storage for one token per arc
  - The architecture does not ensure FIFO order in the reuse of an operand slot
- The static model does not support
  - Reentrant code
    - Function calls
    - Loops
  - Data structures

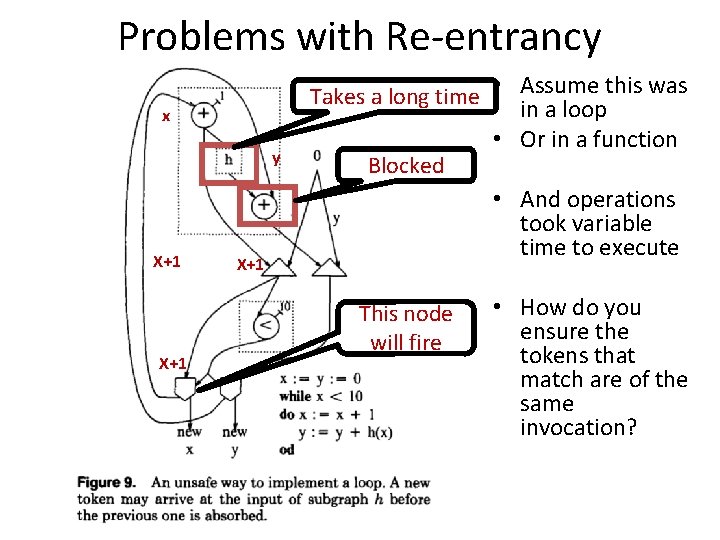

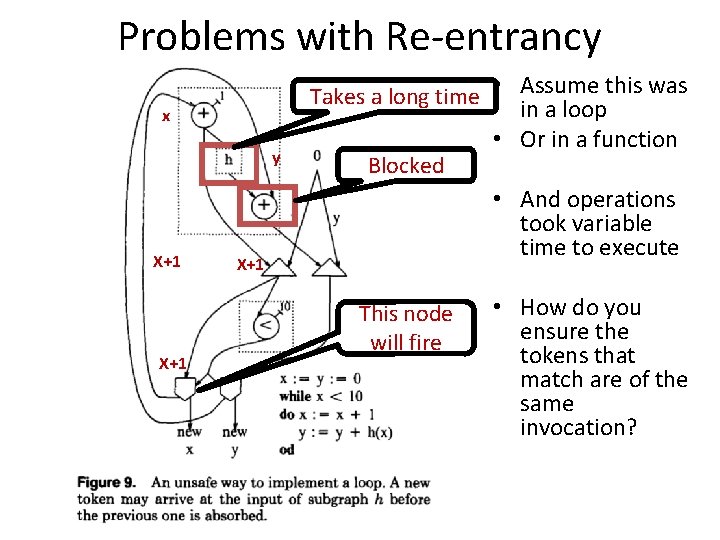

Problems with Re-entrancy

[Figure: tokens x and y pass through two “X+1” nodes; labels: “Takes a long time”, “Blocked”, “This node will fire”.]

- Assume this was in a loop
- Or in a function
- And operations took variable time to execute
- How do you ensure the tokens that match are of the same invocation?

Monsoon Dataflow Processor 1990

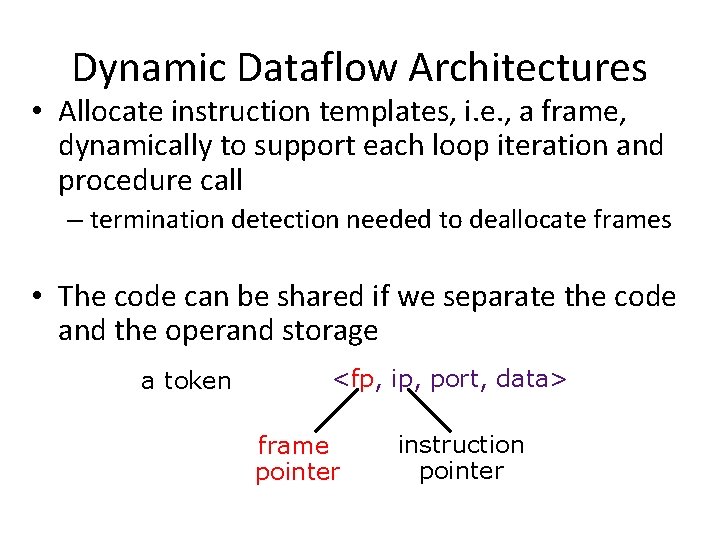

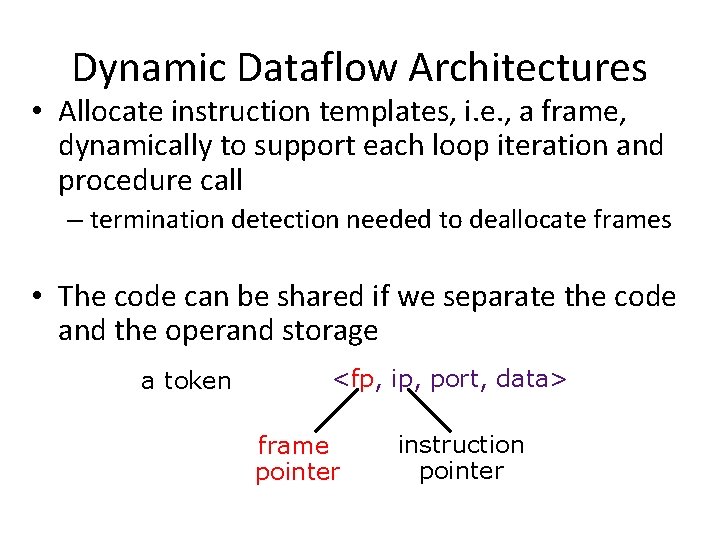

Dynamic Dataflow Architectures

- Allocate instruction templates, i.e., a frame, dynamically to support each loop iteration and procedure call
  - Termination detection is needed to deallocate frames
- The code can be shared if we separate the code and the operand storage

A token is <fp, ip, port, data>, where fp is the frame pointer and ip is the instruction pointer.

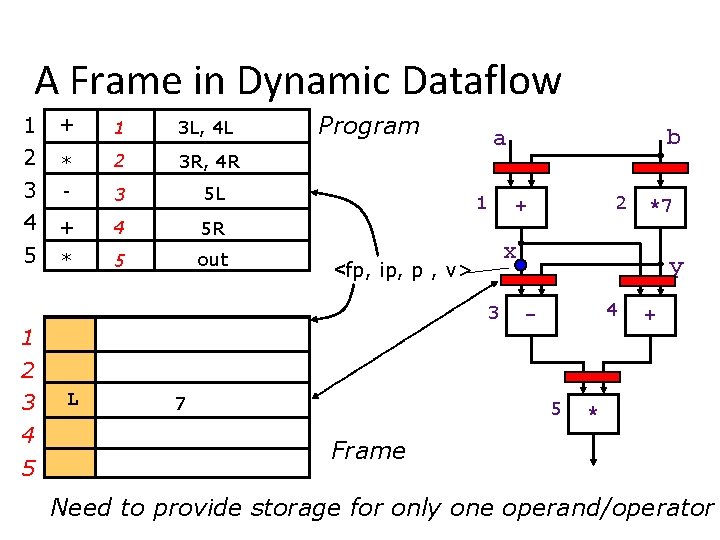

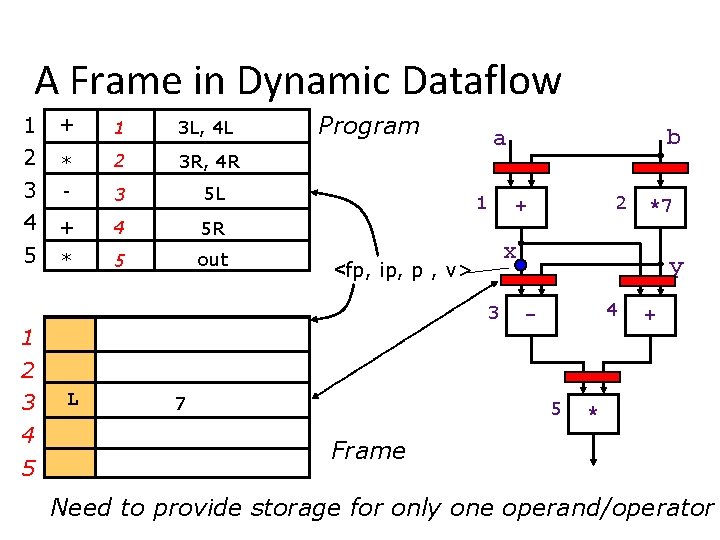

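A Frame in Dynamic Dataflow

[Figure: the example graph (inputs a and b; nodes 1 +, 2 *7, 3 -, 4 +, 5 *) beside its program and a frame; tokens have the form <fp, ip, p, v>.]

| ip | Opcode | Destinations |
|---|---|---|
| 1 | + | 3L, 4L |
| 2 | * | 3R, 4R |
| 3 | - | 5L |
| 4 | + | 5R |
| 5 | * | out |

- Need to provide storage for only one operand per operator (in the frame)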
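A sketch of how frames make re-entrant execution possible, assuming the same five-node graph (node 2 again taken to multiply by 7), tokens of the form `(fp, ip, port, data)`, and hypothetical names `CODE`, `ARITY`, and `run`. All invocations share one copy of the code; operands wait in a slot keyed by `(fp, ip)`, so only tokens from the same frame can match, and each two-input instruction needs storage for just one waiting operand.

```python
from collections import deque
import operator

# Shared code, indexed by ip: (operation, destinations). Node 2 is assumed
# to multiply by the constant 7.
CODE = {
    1: (operator.add, [(3, "L"), (4, "L")]),
    2: (lambda v: v * 7, [(3, "R"), (4, "R")]),
    3: (operator.sub, [(5, "L")]),
    4: (operator.add, [(5, "R")]),
    5: (operator.mul, [None]),        # None: the result leaves the graph
}
ARITY = {1: 2, 2: 1, 3: 2, 4: 2, 5: 2}


def run(tokens):
    """Process tokens (fp, ip, port, data); return {fp: final result}.

    Operand storage lives in frames keyed by (fp, ip), so invocations that
    share CODE never match each other's tokens.
    """
    frames = {}            # (fp, ip) -> (port, value) of the first operand to arrive
    results = {}
    queue = deque(tokens)
    while queue:
        fp, ip, port, data = queue.popleft()
        op, dests = CODE[ip]
        if ARITY[ip] == 1:
            args = [data]
        elif (fp, ip) not in frames:
            frames[(fp, ip)] = (port, data)   # wait in this frame's slot
            continue
        else:
            _, other = frames.pop((fp, ip))   # partner from the same frame
            args = [data, other] if port == "L" else [other, data]
        result = op(*args)
        for dest in dests:
            if dest is None:
                results[fp] = result
            else:
                queue.append((fp, dest[0], dest[1], result))
    return results


# Two invocations (frames 100 and 200) with interleaved input tokens.
tokens = [(100, 1, "L", 3), (200, 1, "L", 5), (200, 1, "R", 2),
          (100, 1, "R", 1), (100, 2, "L", 1), (200, 2, "L", 2)]
print(run(tokens))  # {100: -33, 200: -147}
```

Even though the tokens of frames 100 and 200 are interleaved, each invocation produces its own result: (3 + 1 - 7) * (3 + 1 + 7) = -33 and (5 + 2 - 14) * (5 + 2 + 14) = -147.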

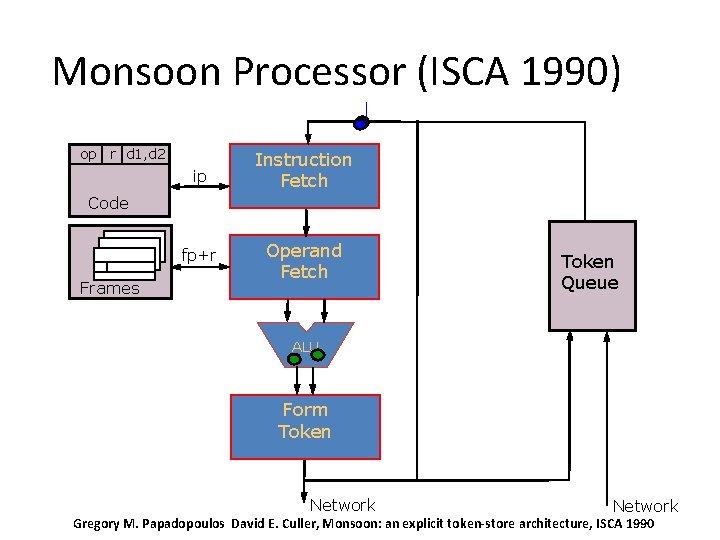

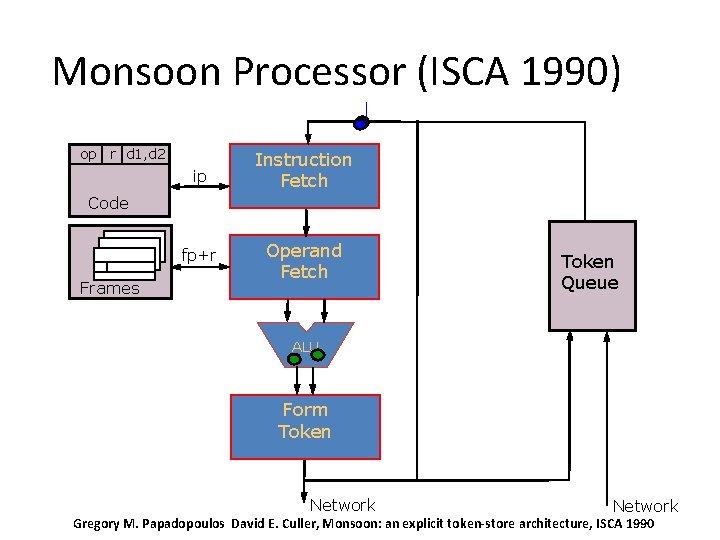

Monsoon Processor (ISCA 1990)

[Figure: Monsoon pipeline: Token Queue, Instruction Fetch (ip indexes Code; instruction fields op, r, d1, d2), Operand Fetch (fp+r indexes Frames), ALU, Form Token, Network.]

Gregory M. Papadopoulos and David E. Culler, “Monsoon: An Explicit Token-Store Architecture,” ISCA 1990.

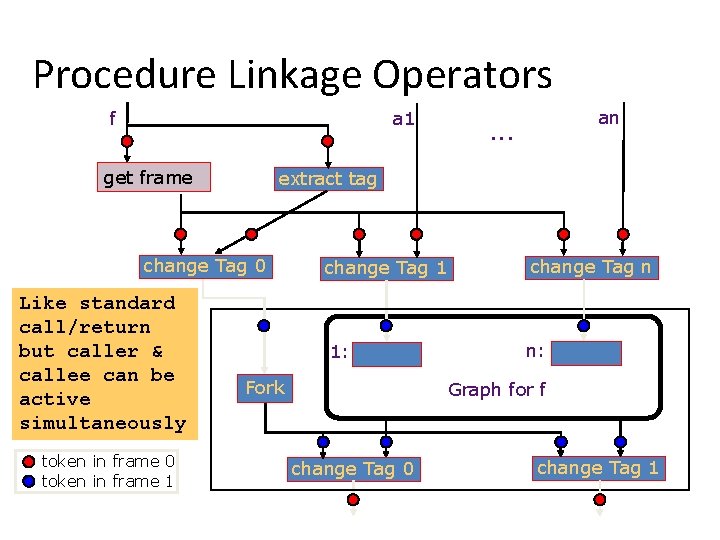

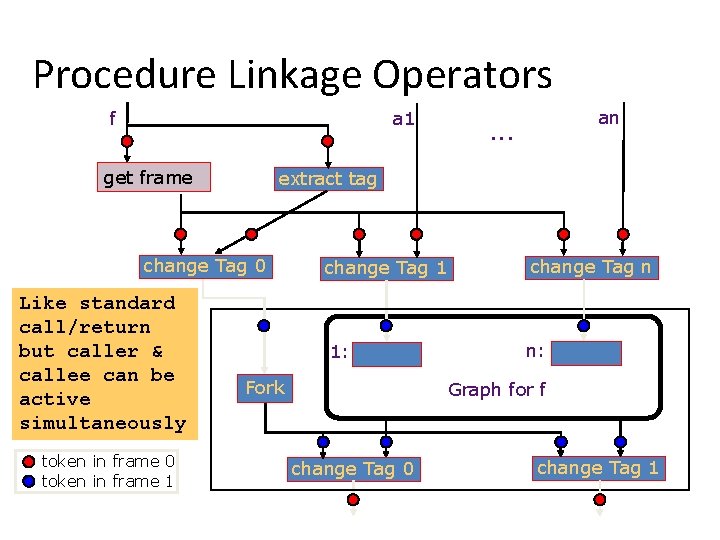

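Procedure Linkage Operators

[Figure: calling f with arguments a1 … an: get frame, extract tag, and change Tag 0, change Tag 1, …, change Tag n operators move tokens from the caller's frame (token in frame 0) into the callee's frame (token in frame 1); the callee side shows entry points 1: … n:, a Fork, the Graph for f, and change Tag 0 / change Tag 1.]

- Like standard call/return, but caller and callee can be active simultaneously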

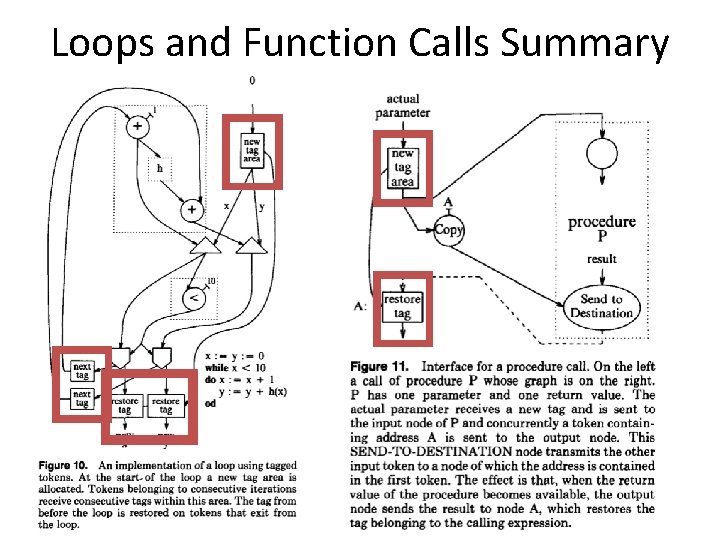

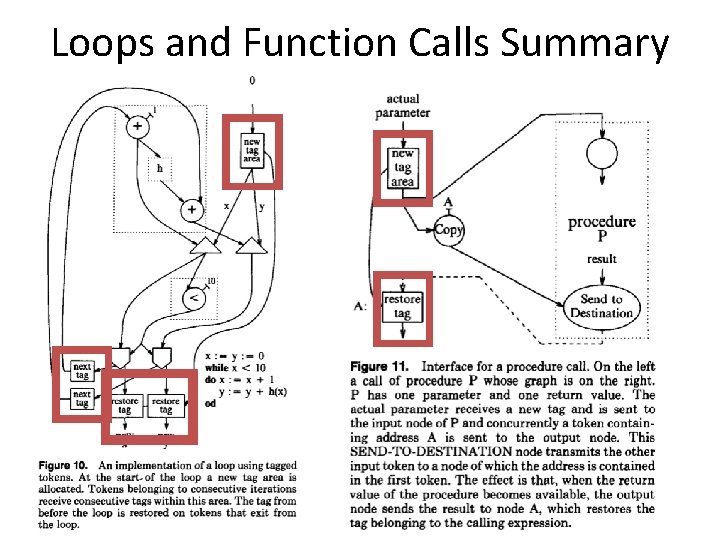

Loops and Function Calls Summary

DATA FLOW CHARACTERISTICS

- Data-driven execution of instruction-level graphical code
  - Nodes are operators
  - Arcs are data (I/O)
  - As opposed to control-driven execution
- Only real dependencies constrain processing
- No sequential I-stream
  - No program counter
- Operations execute asynchronously
- Execution is triggered by the presence of data

DATA FLOW ADVANTAGES/DISADVANTAGES

- Advantages
  - Very good at exploiting irregular parallelism
  - Only real dependencies constrain processing
- Disadvantages
  - Debugging is difficult (no precise state)
    - Interrupt/exception handling is difficult (what are precise state semantics?)
  - Too much parallelism? (Parallelism control is needed)
  - High bookkeeping overhead (tag matching, data storage)
  - The instruction cycle is inefficient (delay between dependent instructions), and memory locality is not exploited

DATA FLOW SUMMARY

- Availability of data determines the order of execution
- A data flow node fires when its sources are ready
- Programs are represented as data flow graphs (of nodes)
- Data flow at the ISA level has not been (as) successful
- Data flow implementations under the hood (while preserving sequential ISA semantics) have been successful
  - Out-of-order execution
  - Hwu and Patt, “HPSm, a high performance restricted data flow architecture having minimal functionality,” ISCA 1986.

OOO EXECUTION: RESTRICTED DATAFLOW

- An out-of-order engine dynamically builds the dataflow graph of a piece of the program
  - Which piece?
- The dataflow graph is limited to the instruction window
  - Instruction window: all decoded but not yet retired instructions
- Can we do it for the whole program?
- Why would we like to?
- In other words, how can we have a large instruction window?
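The effect of the window size can be sketched with a toy scheduler. The function `cycles_to_execute` is hypothetical and deliberately simplified (unit latency, unlimited functional units, in-order retirement): each cycle it fires any instruction inside the window whose producers have already finished, which is the restricted dataflow behavior described on this slide.

```python
def cycles_to_execute(deps, window):
    """Cycles needed to run a straight-line program under a toy OoO model.

    deps[i] lists the earlier instructions whose results instruction i needs.
    Assumptions (simplifications, not a real core): every instruction takes
    one cycle, there are unlimited functional units, the scheduler sees only
    the `window` oldest not-yet-retired instructions, and retirement is in order.
    """
    if window < 1:
        raise ValueError("window must hold at least one instruction")
    n = len(deps)
    done = [None] * n          # cycle in which each instruction finished
    head = 0                   # oldest instruction not yet retired
    cycle = 0
    while head < n:
        cycle += 1
        for i in range(head, min(head + window, n)):
            if done[i] is None and all(
                done[d] is not None and done[d] < cycle for d in deps[i]
            ):
                done[i] = cycle            # operands ready: the node fires
        while head < n and done[head] is not None:
            head += 1                      # in-order retirement frees window slots
    return cycle


# Two independent 4-instruction dependence chains, one after the other.
deps = [[], [0], [1], [2], [], [4], [5], [6]]
for w in (1, 4, 8):
    print(w, cycles_to_execute(deps, w))   # prints 1 8, then 4 5, then 8 4
```

With a one-entry window everything is serialized (8 cycles), while a window covering the whole program exposes both chains at once (4 cycles): the larger the window, the more of the program's dataflow graph the engine can exploit.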

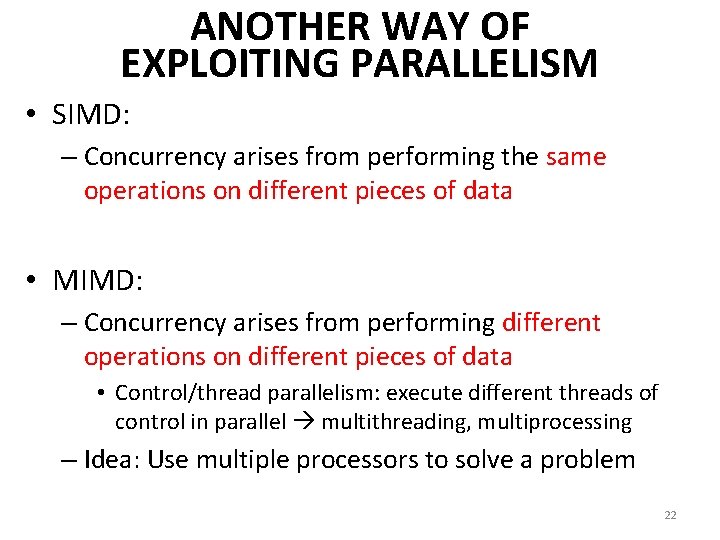

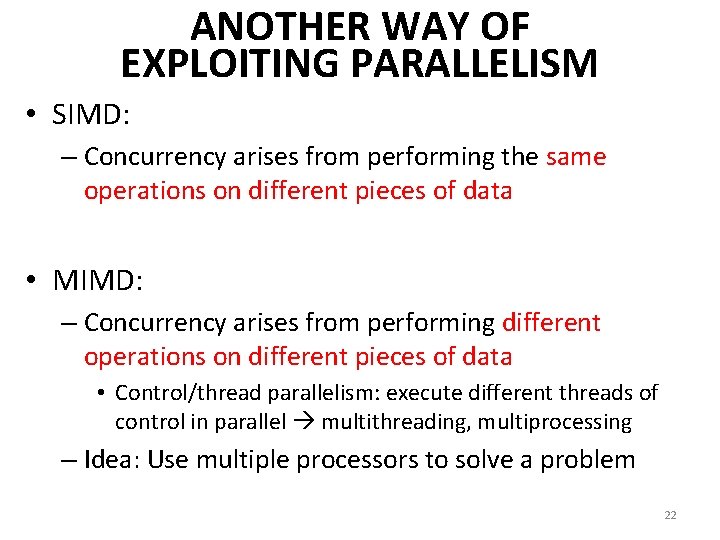

ANOTHER WAY OF EXPLOITING PARALLELISM

- SIMD:
  - Concurrency arises from performing the same operations on different pieces of data
- MIMD:
  - Concurrency arises from performing different operations on different pieces of data
    - Control/thread parallelism: execute different threads of control in parallel (multithreading, multiprocessing)
- Idea: use multiple processors to solve a problem

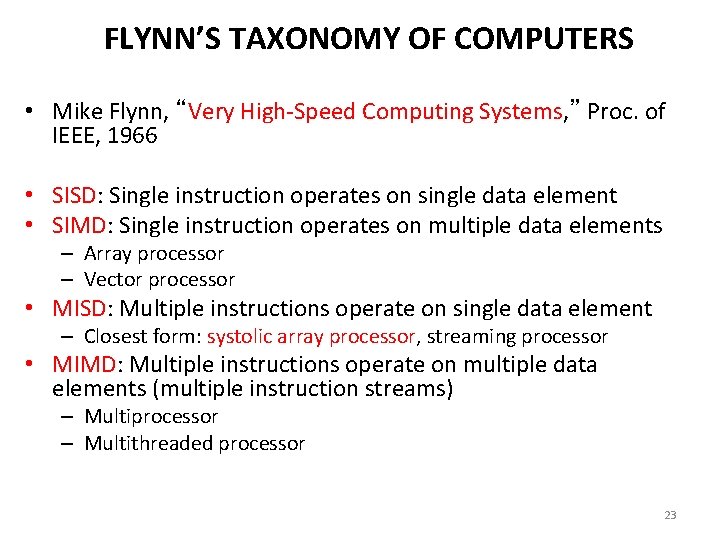

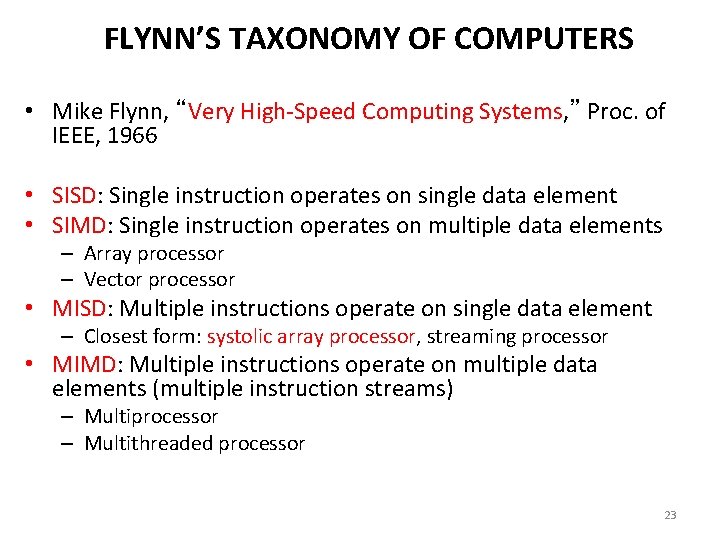

FLYNN’S TAXONOMY OF COMPUTERS

- Mike Flynn, “Very High-Speed Computing Systems,” Proc. of IEEE, 1966
- SISD: Single instruction operates on a single data element
- SIMD: Single instruction operates on multiple data elements
  - Array processor
  - Vector processor
- MISD: Multiple instructions operate on a single data element
  - Closest form: systolic array processor, streaming processor
- MIMD: Multiple instructions operate on multiple data elements (multiple instruction streams)
  - Multiprocessor
  - Multithreaded processor

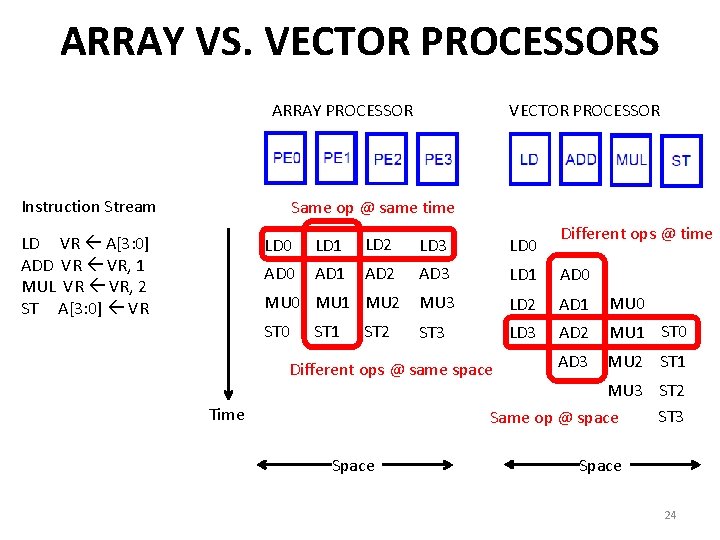

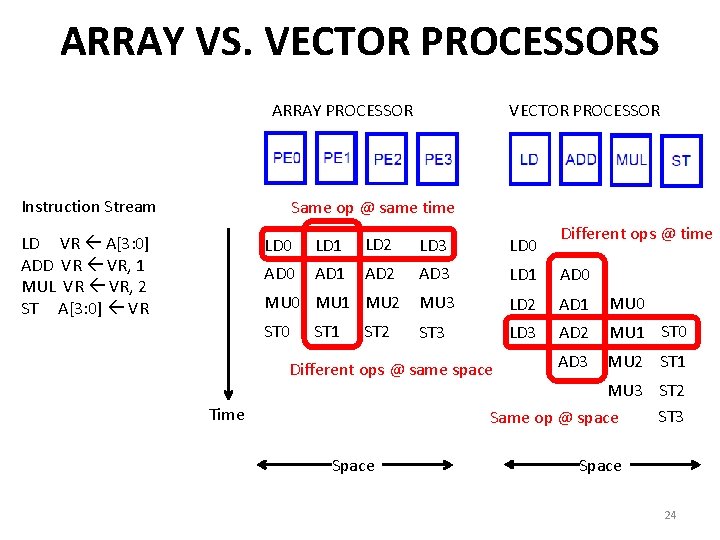

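ARRAY VS. VECTOR PROCESSORS

Instruction stream:

- `LD VR ← A[3:0]`
- `ADD VR ← VR, 1`
- `MUL VR ← VR, 2`
- `ST A[3:0] ← VR`

Array processor (same op @ same time; different ops @ same space):

| Time | PE 0 | PE 1 | PE 2 | PE 3 |
|---|---|---|---|---|
| 0 | LD0 | LD1 | LD2 | LD3 |
| 1 | AD0 | AD1 | AD2 | AD3 |
| 2 | MU0 | MU1 | MU2 | MU3 |
| 3 | ST0 | ST1 | ST2 | ST3 |

Vector processor (different ops @ same time; same op @ same space):

| Time | LD unit | ADD unit | MUL unit | ST unit |
|---|---|---|---|---|
| 0 | LD0 | | | |
| 1 | LD1 | AD0 | | |
| 2 | LD2 | AD1 | MU0 | |
| 3 | LD3 | AD2 | MU1 | ST0 |
| 4 | | AD3 | MU2 | ST1 |
| 5 | | | MU3 | ST2 |
| 6 | | | | ST3 |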
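The two schedules on this slide can be regenerated with a short sketch. It assumes four elements, the slide's four instructions, and, for the vector processor, one functional unit per operation that hands each element to the next unit one cycle later (unit latency with chaining); the function names are illustrative.

```python
OPS = ["LD", "AD", "MU", "ST"]  # LD, ADD, MUL, ST from the instruction stream


def array_schedule(n):
    """Array processor: n PEs; in each cycle every PE performs the same op
    on its own element (same op at the same time)."""
    return [[f"{op}{e}" for e in range(n)] for op in OPS]


def vector_schedule(n):
    """Vector processor: one functional unit per op, one element per cycle.

    Element e reaches unit k at cycle e + k (assumes each unit has a
    one-cycle latency and passes results straight to the next unit).
    """
    table = [["--"] * len(OPS) for _ in range(n + len(OPS) - 1)]
    for k, op in enumerate(OPS):
        for e in range(n):
            table[e + k][k] = f"{op}{e}"
    return table


for name, sched in (("array", array_schedule(4)), ("vector", vector_schedule(4))):
    print(name)
    for t, row in enumerate(sched):
        print(t, " ".join(row))
```

The array version finishes in 4 cycles using 4 PEs that can each perform every operation; the vector version takes 7 cycles with one specialized unit per operation.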

SCALAR PROCESSING

- Conventional form of processing (von Neumann model)
  - `add r1, r2, r3`

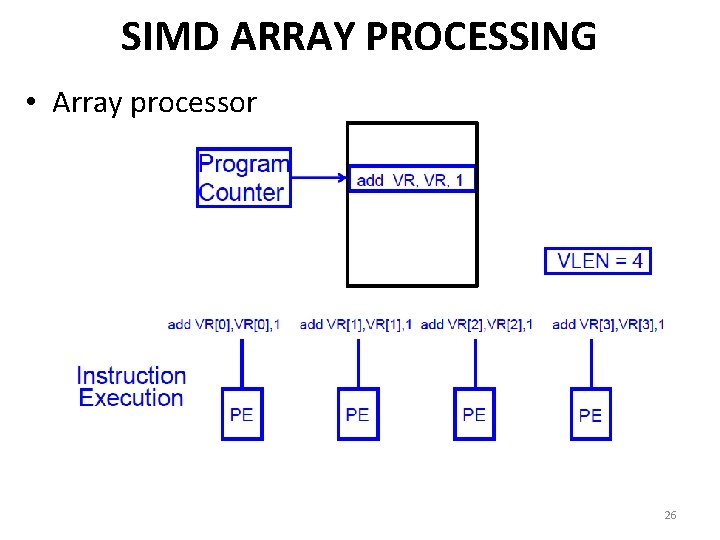

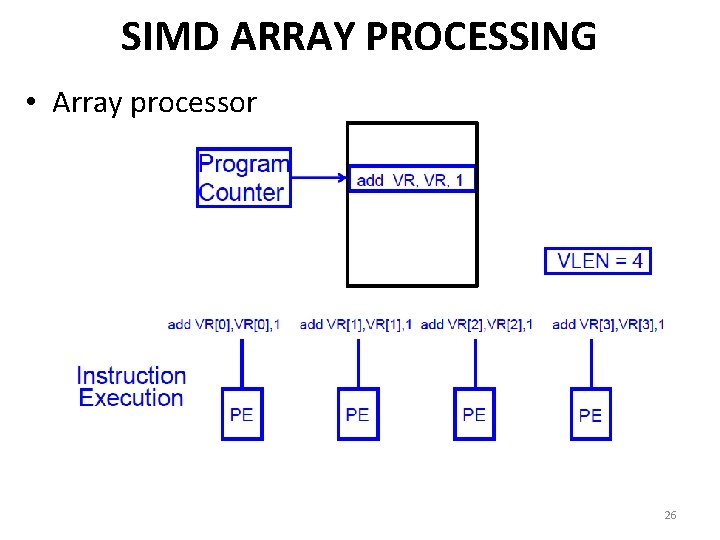

SIMD ARRAY PROCESSING

- Array processor

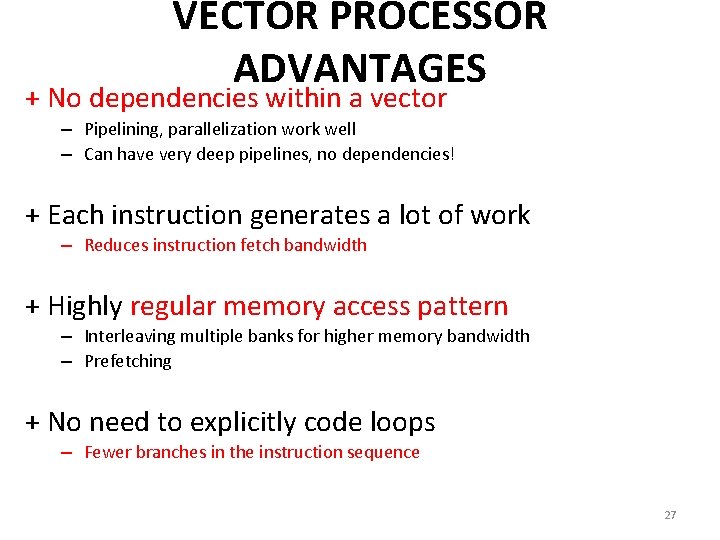

VECTOR PROCESSOR ADVANTAGES

+ No dependencies within a vector
  - Pipelining and parallelization work well
  - Can have very deep pipelines, no dependencies!
+ Each instruction generates a lot of work
  - Reduces instruction fetch bandwidth
+ Highly regular memory access pattern
  - Interleaving multiple banks for higher memory bandwidth
  - Prefetching
+ No need to explicitly code loops
  - Fewer branches in the instruction sequence

VECTOR PROCESSOR DISADVANTAGES

- Works (only) if parallelism is regular (data/SIMD parallelism)
  - Works well for vector operations
- Very inefficient if parallelism is irregular
  - How about searching for a key in a linked list?

Fisher, “Very Long Instruction Word Architectures and the ELI-512,” ISCA 1983.

VECTOR PROCESSOR LIMITATIONS

- Memory (bandwidth) can easily become a bottleneck, especially if
  1. compute/memory operation balance is not maintained
  2. data is not mapped appropriately to memory banks
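The second point, mapping data to banks, can be illustrated with a sketch that assumes simple low-order interleaving (word address `a` lives in bank `a % 16`, with 16 banks as on the CRAY-1) and 64-element vectors. The helper `bank_usage` is hypothetical; it reports how many accesses the busiest bank must serve and how many distinct banks a strided access touches.

```python
from collections import Counter


def bank_usage(stride, n_elems=64, n_banks=16, base=0):
    """Return (busiest bank's access count, number of distinct banks touched).

    Assumes simple low-order interleaving: word address a lives in bank
    a % n_banks. Real address mappings may differ.
    """
    banks = Counter((base + i * stride) % n_banks for i in range(n_elems))
    return max(banks.values()), len(banks)


for stride in (1, 8, 16, 17):
    print(stride, bank_usage(stride))
```

A unit stride (or any odd stride) spreads the 64 accesses evenly over all 16 banks, 4 per bank, while a stride of 16 sends every access to the same bank, so that one bank becomes the bottleneck no matter how many banks exist.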

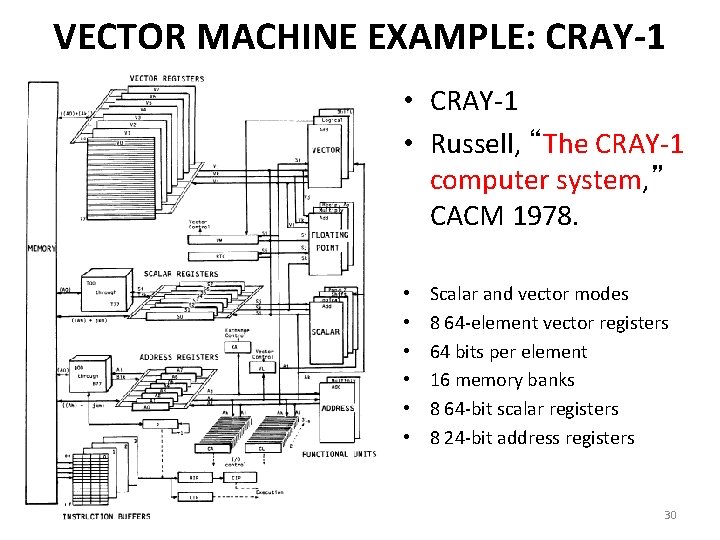

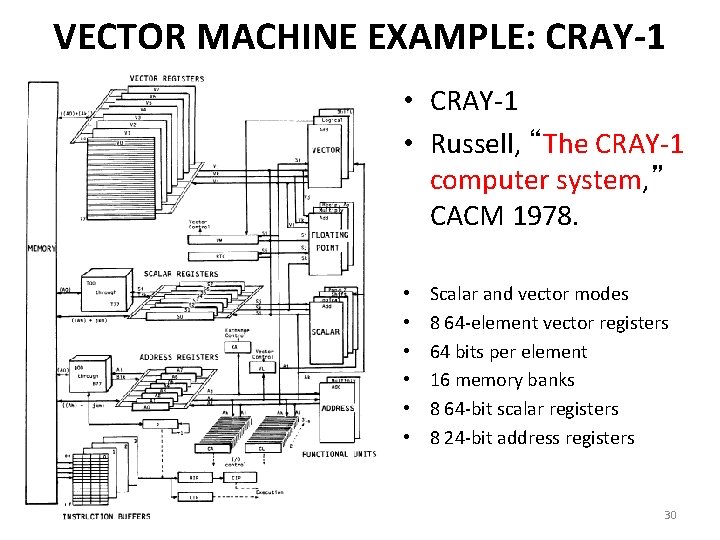

VECTOR MACHINE EXAMPLE: CRAY-1

- Russell, “The CRAY-1 computer system,” CACM 1978.
- Scalar and vector modes
- 8 64-element vector registers
- 64 bits per element
- 16 memory banks
- 8 64-bit scalar registers
- 8 24-bit address registers

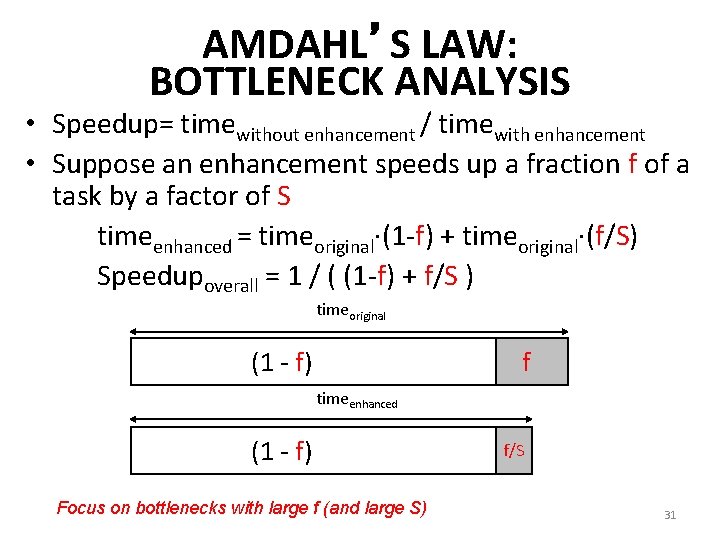

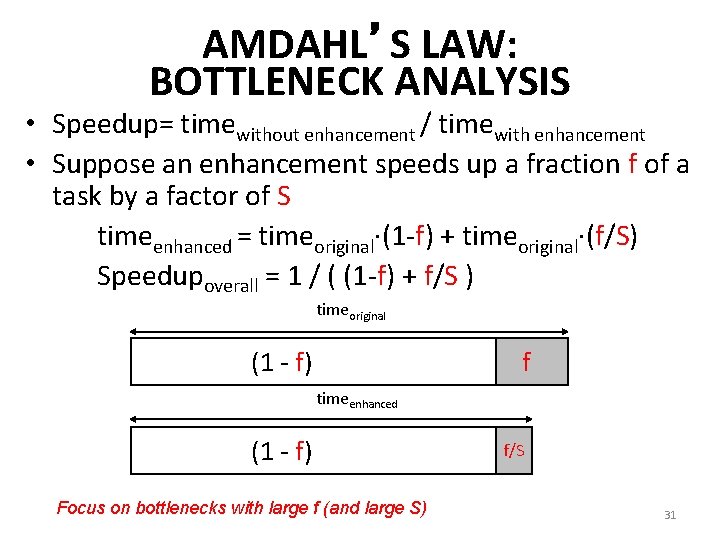

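AMDAHL’S LAW: BOTTLENECK ANALYSIS

- Speedup = time without enhancement / time with enhancement
- Suppose an enhancement speeds up a fraction f of a task by a factor of S:

$$t_{\text{enhanced}} = t_{\text{original}} \cdot (1 - f) + t_{\text{original}} \cdot \frac{f}{S}$$

$$\text{Speedup}_{\text{overall}} = \frac{t_{\text{original}}}{t_{\text{enhanced}}} = \frac{1}{(1 - f) + \frac{f}{S}}$$

[Figure: t_original drawn as a bar split into (1 - f) and f; t_enhanced keeps the (1 - f) part and shrinks f to f/S.]

- Focus on bottlenecks with large f (and large S)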
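A few lines of Python make the bottleneck argument numeric. `amdahl_speedup` is simply the overall-speedup formula on this slide with input checks added, and the sample values are arbitrary.

```python
def amdahl_speedup(f, s):
    """Overall speedup when a fraction f of the original time is sped up by a factor s."""
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must be between 0 and 1")
    if s <= 0:
        raise ValueError("s must be positive")
    return 1.0 / ((1.0 - f) + f / s)


print(amdahl_speedup(0.9, 2))     # large f, modest S: about 1.82
print(amdahl_speedup(0.1, 1000))  # small f, huge S: about 1.11
print(amdahl_speedup(0.9, 1e12))  # as S grows, this approaches 1 / (1 - f) = 10
```

Doubling the speed of 90% of the task beats a 1000x speedup of 10% of it, and no value of S can push the overall speedup past 1 / (1 - f).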


SYSTOLIC ARRAYS

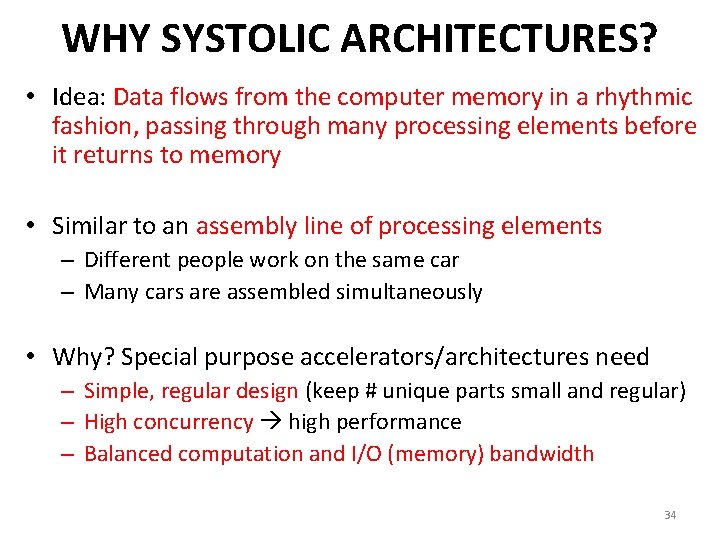

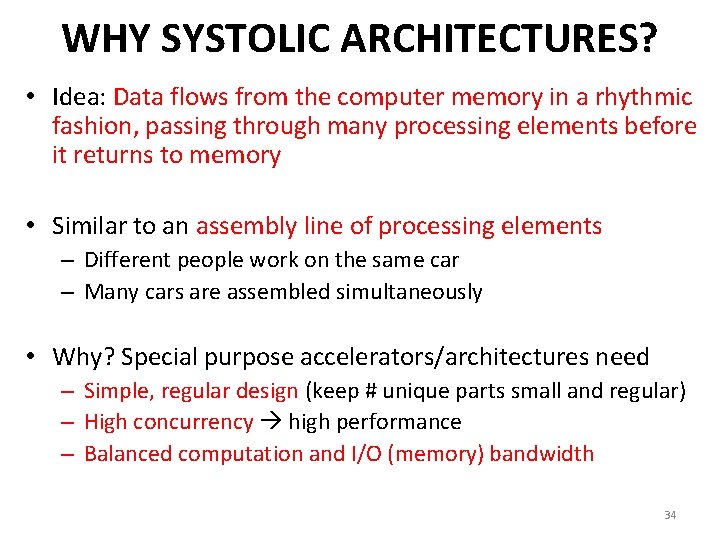

WHY SYSTOLIC ARCHITECTURES?

- Idea: Data flows from the computer memory in a rhythmic fashion, passing through many processing elements before it returns to memory
- Similar to an assembly line of processing elements
  - Different people work on the same car
  - Many cars are assembled simultaneously
- Why? Special purpose accelerators/architectures need
  - Simple, regular design (keep # unique parts small and regular)
  - High concurrency → high performance
  - Balanced computation and I/O (memory) bandwidth

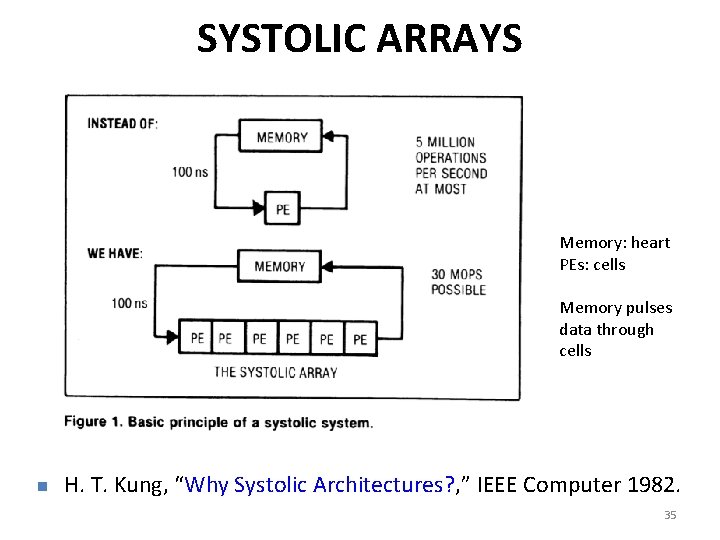

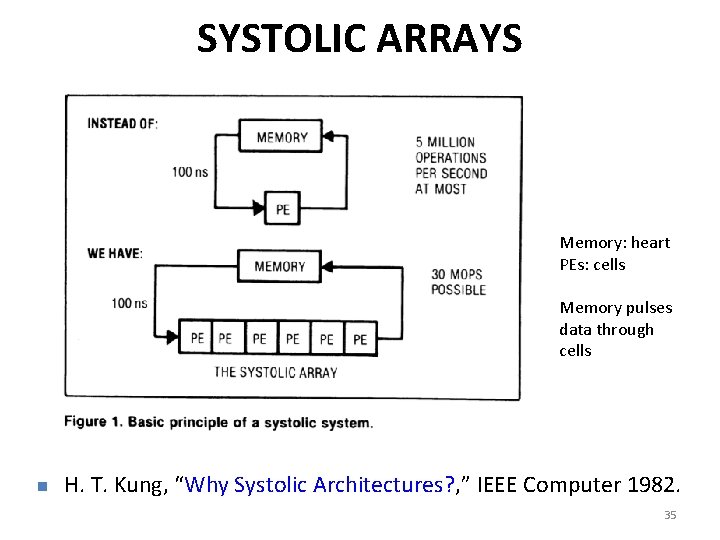

SYSTOLIC ARRAYS

[Figure: memory is the heart and the PEs are the cells; memory pulses data through the cells.]

H. T. Kung, “Why Systolic Architectures?” IEEE Computer 1982.

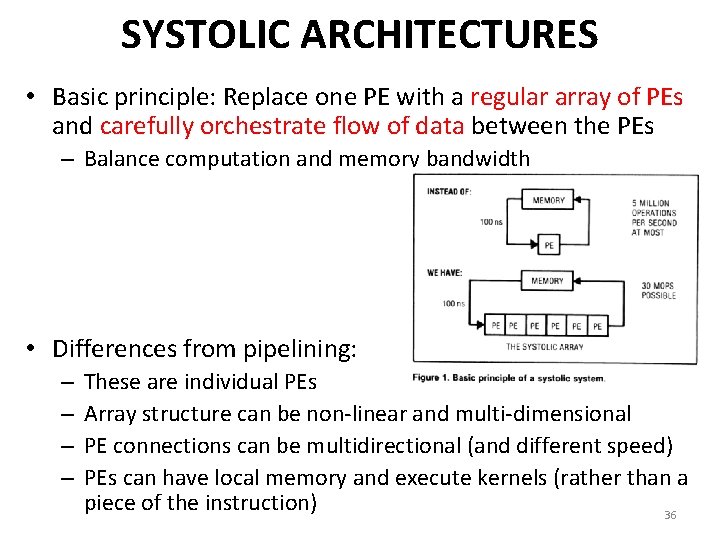

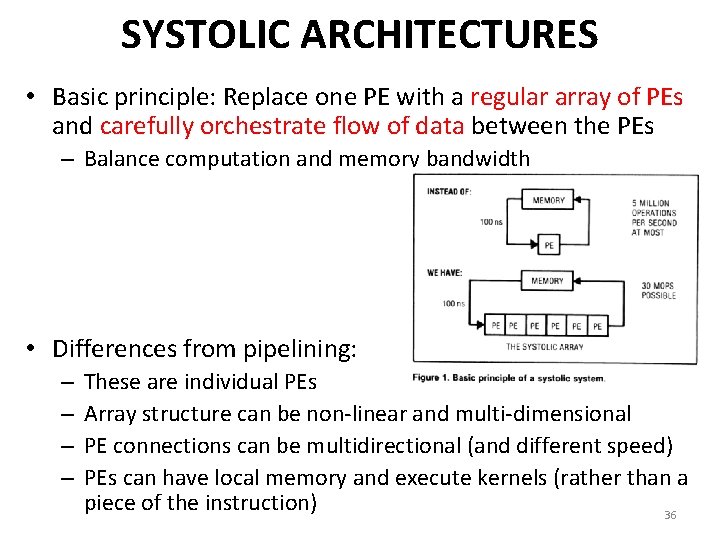

SYSTOLIC ARCHITECTURES

- Basic principle: Replace one PE with a regular array of PEs and carefully orchestrate the flow of data between the PEs
  - Balance computation and memory bandwidth
- Differences from pipelining:
  - These are individual PEs
  - Array structure can be non-linear and multi-dimensional
  - PE connections can be multidirectional (and of different speeds)
  - PEs can have local memory and execute kernels (rather than a piece of the instruction)
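As one concrete instance of these principles, the sketch below simulates an output-stationary systolic array that multiplies two square n-by-n matrices. This is a common textbook design, not necessarily the one in the slides or in Kung's paper, and `systolic_matmul` is a hypothetical name. Each PE keeps one element of C in local storage, A values move right and B values move down one PE per cycle, and the inputs are skewed so that matching operands meet in the right PE. Every element fetched from memory is reused by n PEs, which is how the array balances computation against memory bandwidth.

```python
def systolic_matmul(A, B):
    """Output-stationary n x n systolic array computing C = A @ B (square matrices).

    Each PE (i, j) keeps C[i][j] locally. Every cycle it multiplies the value
    arriving from its left neighbor (an A element) by the value arriving from
    above (a B element), accumulates the product, and passes A right and B down.
    Row i of A enters from the left delayed by i cycles; column j of B enters
    from the top delayed by j cycles, so A[i][k] and B[k][j] meet in PE (i, j).
    """
    n = len(A)
    C = [[0] * n for _ in range(n)]
    a_out = [[None] * n for _ in range(n)]   # value each PE passes right (registered)
    b_out = [[None] * n for _ in range(n)]   # value each PE passes down (registered)
    for t in range(3 * n - 2):               # last operands meet in PE (n-1, n-1) at t = 3n-3
        new_a = [[None] * n for _ in range(n)]
        new_b = [[None] * n for _ in range(n)]
        for i in range(n):
            for j in range(n):
                # Left input: from memory at column 0, else the left neighbor's register.
                if j == 0:
                    k = t - i
                    a_in = A[i][k] if 0 <= k < n else None
                else:
                    a_in = a_out[i][j - 1]
                # Top input: from memory at row 0, else the upper neighbor's register.
                if i == 0:
                    k = t - j
                    b_in = B[k][j] if 0 <= k < n else None
                else:
                    b_in = b_out[i - 1][j]
                if a_in is not None and b_in is not None:
                    C[i][j] += a_in * b_in       # local accumulation in the PE
                new_a[i][j], new_b[i][j] = a_in, b_in
        a_out, b_out = new_a, new_b              # all PEs update in lockstep
    return C


print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```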
