COT 4600 Operating Systems Spring 2011 Dan C

- Slides: 22

COT 4600 Operating Systems, Spring 2011. Dan C. Marinescu. Office: HEC 304. Office hours: Tu-Th 5:00-6:00 PM

Lecture 21 - Thursday April 7, 2011

- Last time:
  - Scheduling
- Today:
  - The scheduler
  - Multi-level memories
- Next time:
  - Memory characterization
  - Multilevel memories management using virtual memory
  - Adding multi-level memory management to virtual memory
  - Page replacement algorithms

The scheduler

- The system component which manages the allocation of the processor/core.
- It runs inside the processor thread and implements the scheduling policies.
- Other functions:
  - Determines the burst
  - Manages multiple queues of threads

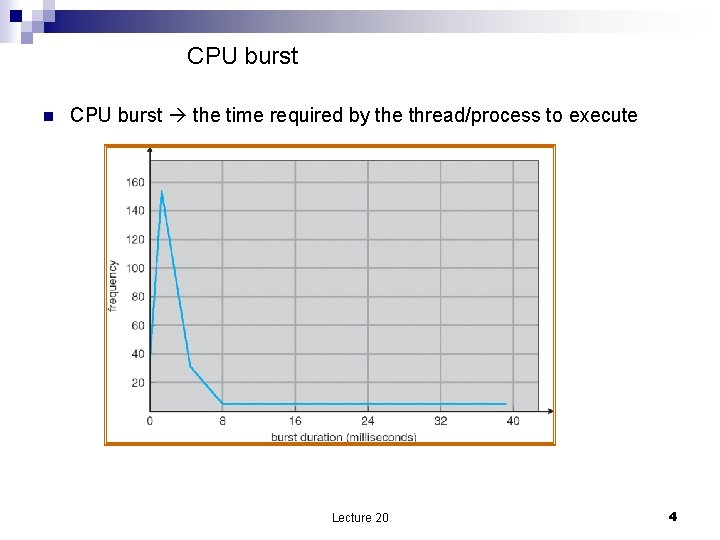

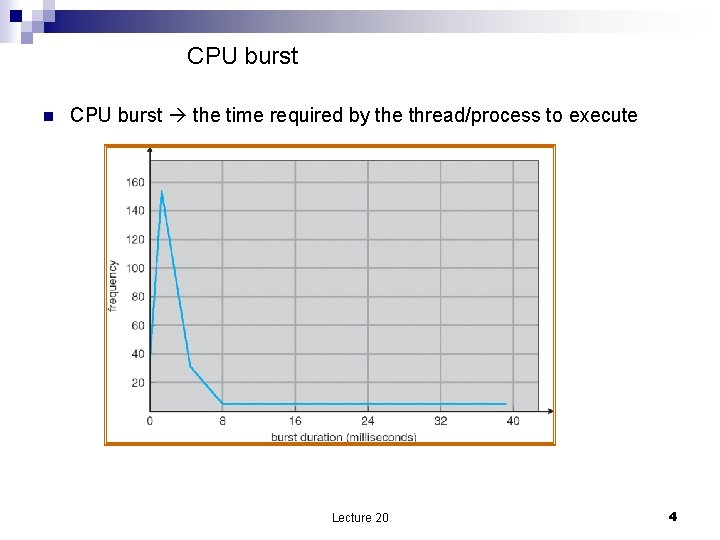

CPU burst

- CPU burst: the time required by the thread/process to execute.

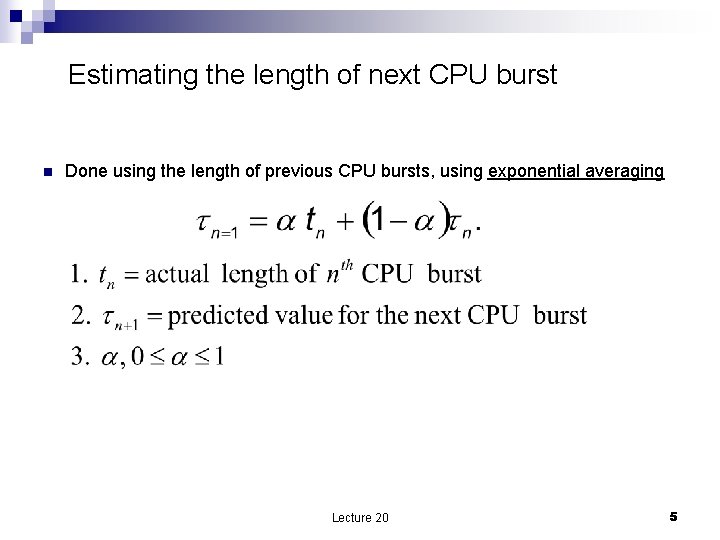

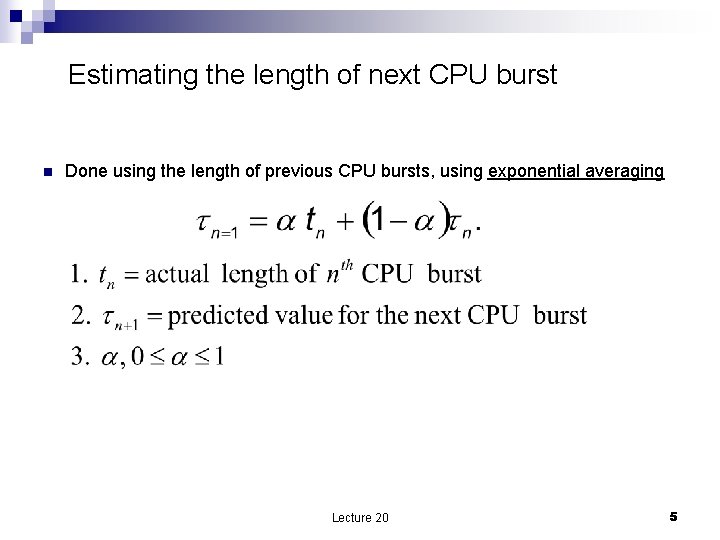

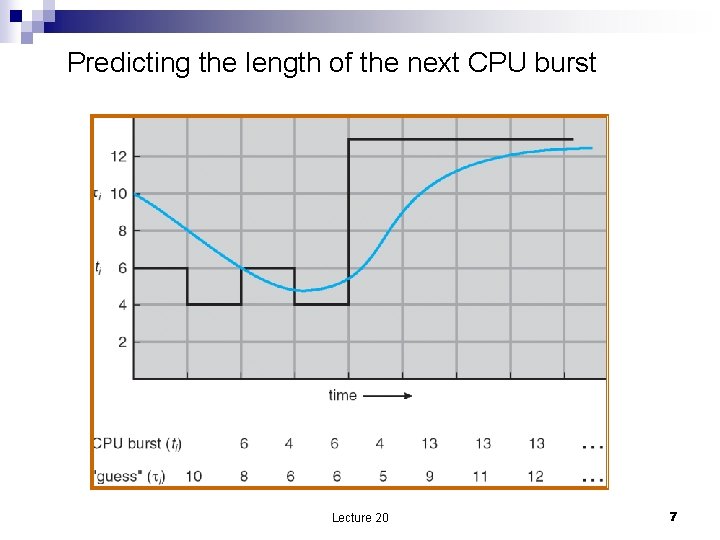

Estimating the length of next CPU burst

- Estimated from the lengths of previous CPU bursts, using exponential averaging.

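Exponential averaging

- Notation: $t_n$ is the actual length of the $n$-th CPU burst, $\tau_{n+1}$ is the predicted length of the next burst, and $\tau_{n+1} = \alpha t_n + (1-\alpha)\tau_n$ with $0 \le \alpha \le 1$.
- $\alpha = 0$
  - $\tau_{n+1} = \tau_n$
  - Recent history does not count
- $\alpha = 1$
  - $\tau_{n+1} = t_n$
  - Only the actual last CPU burst counts
- If we expand the formula, we get:

  $\tau_{n+1} = \alpha t_n + (1-\alpha)\alpha t_{n-1} + \dots + (1-\alpha)^j \alpha t_{n-j} + \dots + (1-\alpha)^{n+1}\tau_0$
- Since both $\alpha$ and $(1-\alpha)$ are less than or equal to 1, each successive term has less weight than its predecessor.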
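The weighting described above comes from applying the recurrence $\tau_{n+1} = \alpha t_n + (1-\alpha)\tau_n$ once per observed burst. A minimal sketch follows; the function name `predict_next_burst`, the initial guess `tau0 = 10`, the choice `alpha = 0.5`, and the sample burst lengths are all assumptions made for illustration, not values from the lecture.

```python
def predict_next_burst(bursts, alpha=0.5, tau0=10.0):
    """Return [tau_0, tau_1, ..., tau_n+1] for the observed bursts t_0..t_n."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    predictions = [tau0]  # tau_0 is an arbitrary initial guess
    for t in bursts:
        # tau_{n+1} = alpha * t_n + (1 - alpha) * tau_n
        predictions.append(alpha * t + (1.0 - alpha) * predictions[-1])
    return predictions


observed = [6, 4, 6, 4, 13, 13, 13]  # hypothetical burst lengths
print(predict_next_burst(observed))
# [10.0, 8.0, 6.0, 6.0, 5.0, 9.0, 11.0, 12.0]
```

With `alpha=0` every prediction stays at `tau0`, and with `alpha=1` each prediction simply repeats the last observed burst, which matches the two limiting cases on the slide.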

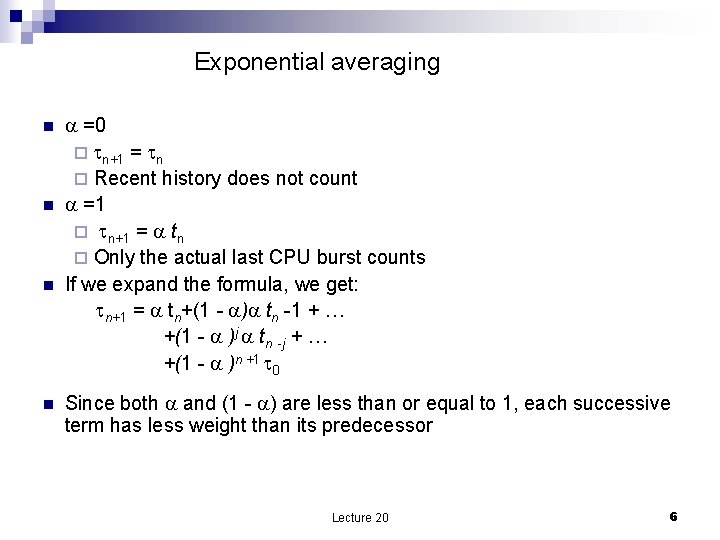

Predicting the length of the next CPU burst

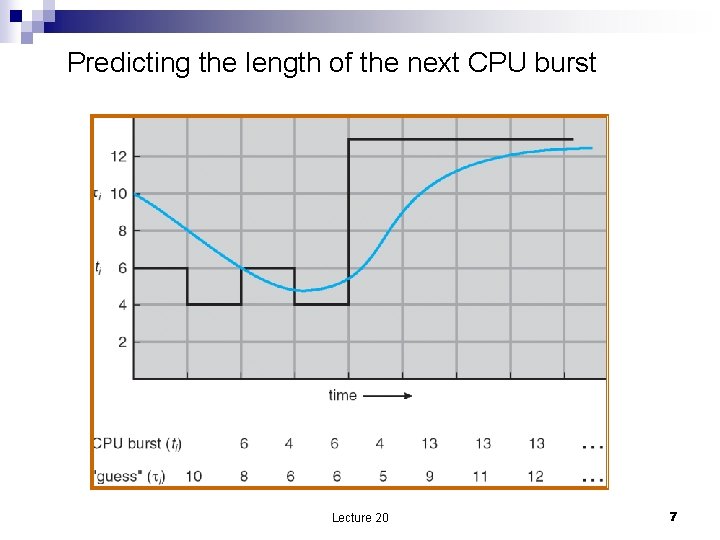

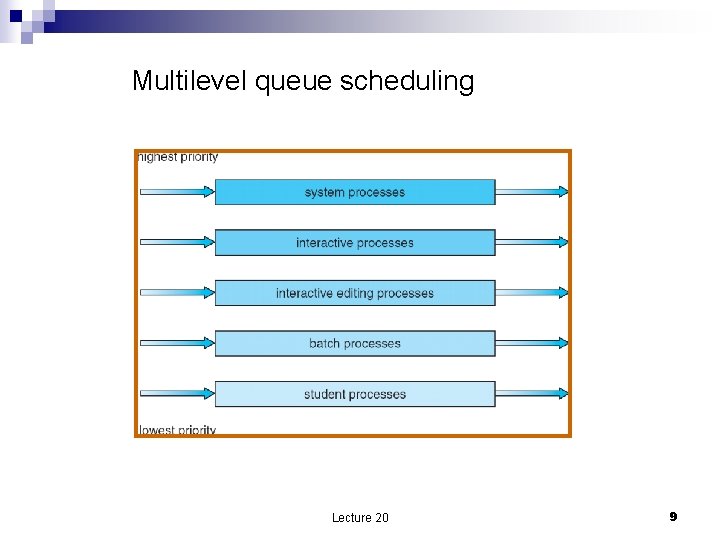

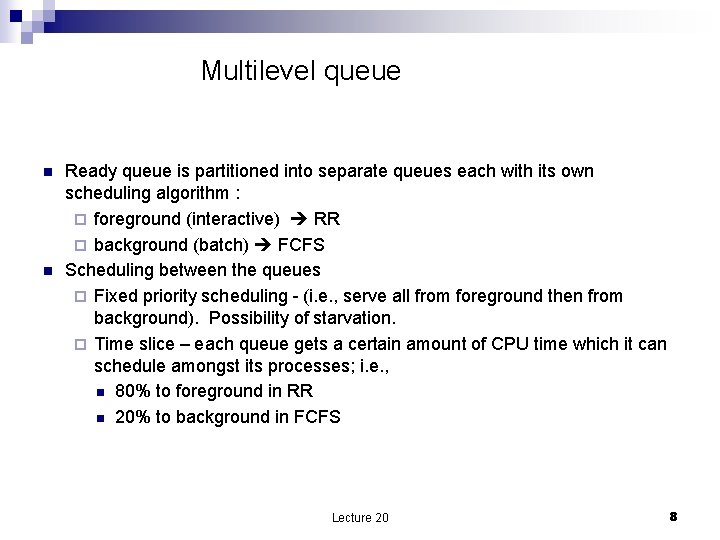

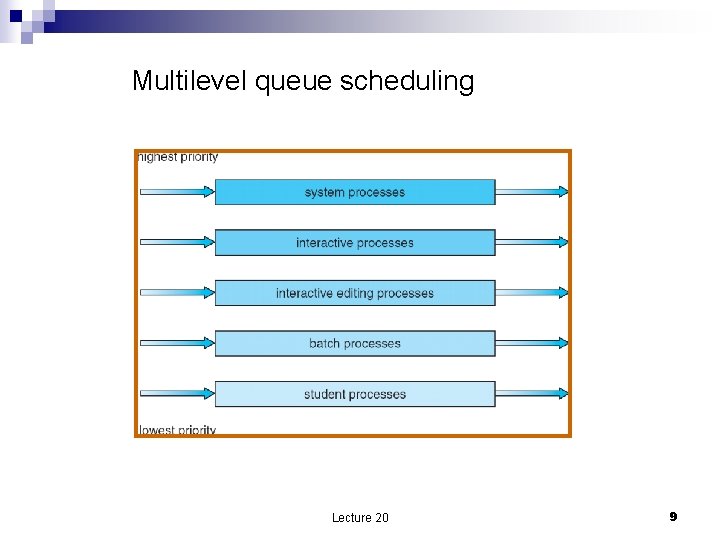

Multilevel queue

- Ready queue is partitioned into separate queues, each with its own scheduling algorithm:
  - foreground (interactive): RR
  - background (batch): FCFS
- Scheduling between the queues:
  - Fixed priority scheduling (i.e., serve all from foreground, then from background). Possibility of starvation.
  - Time slice: each queue gets a certain amount of CPU time which it can schedule amongst its processes, e.g.,
    - 80% to foreground in RR
    - 20% to background in FCFS
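Fixed priority scheduling between the two queues can be sketched as a selection function that looks at the background queue only when the foreground queue is empty. This is an illustrative sketch: the name `pick_next` and the job names are hypothetical, and the round-robin rotation inside the foreground queue is deliberately not modeled.

```python
from collections import deque


def pick_next(foreground, background):
    """Fixed priority: a background job runs only if no foreground job is ready."""
    if foreground:
        return "foreground", foreground.popleft()
    if background:
        return "background", background.popleft()
    return None  # nothing is ready


fg = deque(["edit", "shell"])  # hypothetical interactive jobs
bg = deque(["batch1"])         # hypothetical batch job

order = []
choice = pick_next(fg, bg)
while choice is not None:
    order.append(choice)
    choice = pick_next(fg, bg)

print(order)
# [('foreground', 'edit'), ('foreground', 'shell'), ('background', 'batch1')]
```

If foreground jobs kept arriving, `batch1` would never be selected, which is the starvation risk noted on the slide.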

Multilevel queue scheduling

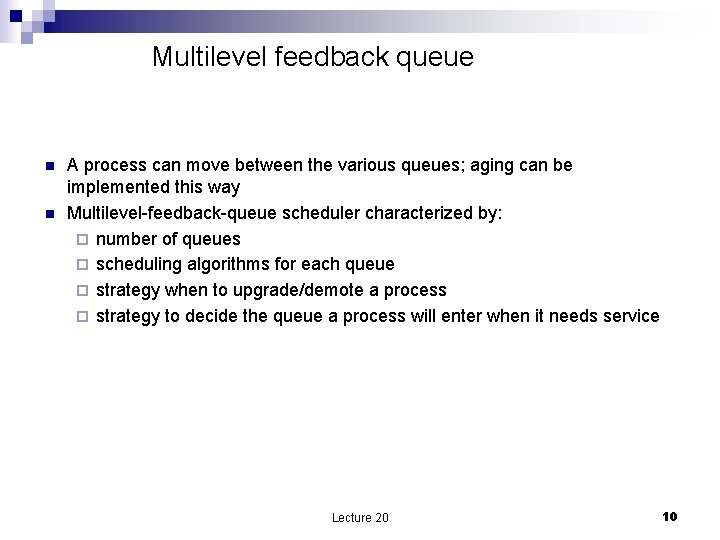

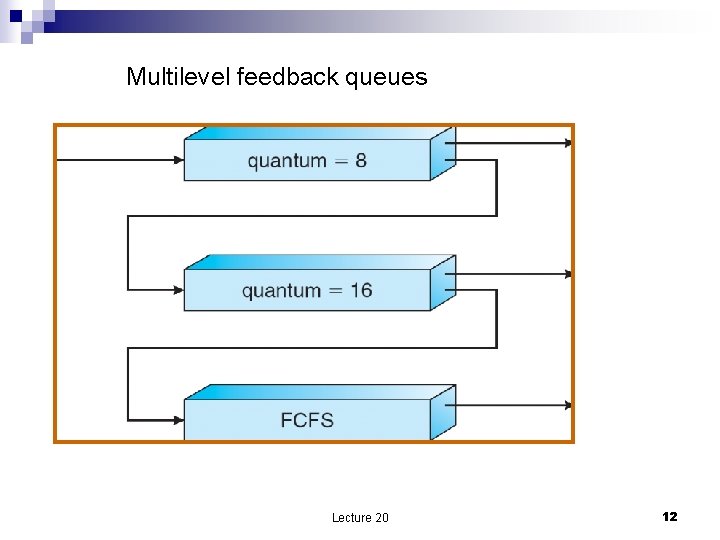

Multilevel feedback queue

- A process can move between the various queues; aging can be implemented this way.
- Multilevel-feedback-queue scheduler characterized by:
  - number of queues
  - scheduling algorithms for each queue
  - strategy when to upgrade/demote a process
  - strategy to decide the queue a process will enter when it needs service

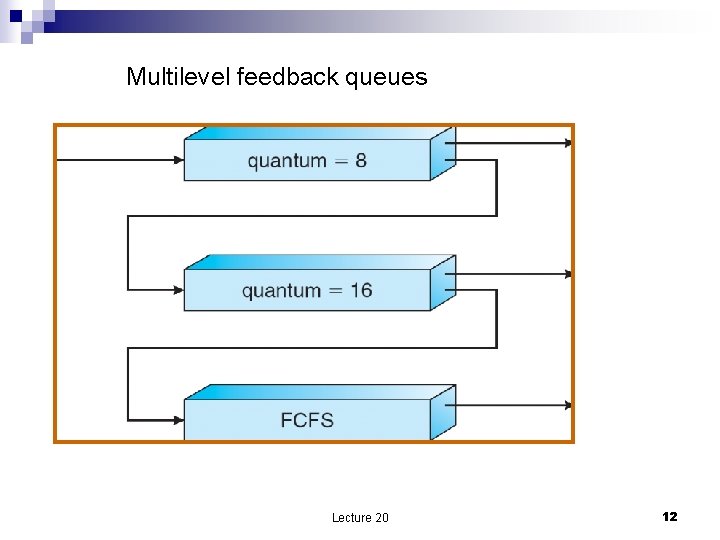

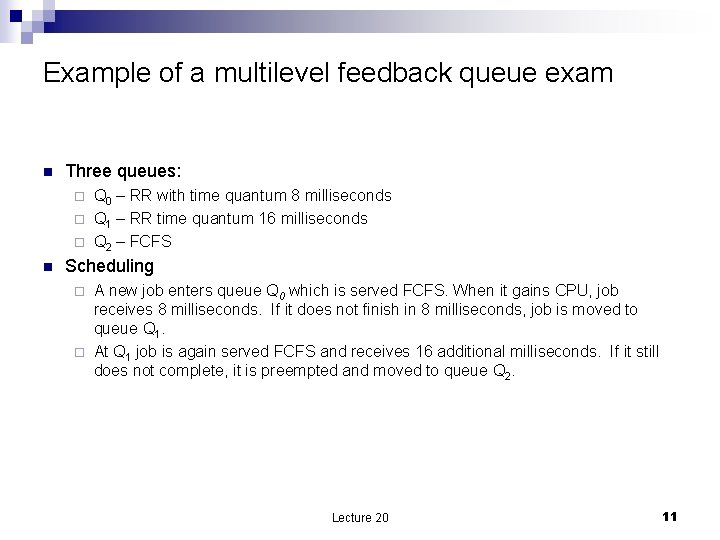

Example of a multilevel feedback queue

- Three queues:
  - Q0: RR with time quantum 8 milliseconds
  - Q1: RR with time quantum 16 milliseconds
  - Q2: FCFS
- Scheduling:
  - A new job enters queue Q0, which is served FCFS. When it gains the CPU, the job receives 8 milliseconds. If it does not finish in 8 milliseconds, the job is moved to queue Q1.
  - At Q1 the job is again served FCFS and receives 16 additional milliseconds. If it still does not complete, it is preempted and moved to queue Q2.
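The three-queue example can be traced with a small simulation. The sketch below makes simplifying assumptions that are not in the slides: all jobs arrive at time 0, no job blocks for I/O, and the job names and CPU demands are hypothetical. The scheduler always serves the highest non-empty queue and demotes a job that exhausts its quantum.

```python
from collections import deque

QUANTA = [8, 16, None]  # ms for Q0 and Q1; None means FCFS, run to completion


def mlfq(jobs):
    """jobs: dict name -> CPU time needed (ms).

    Returns a trace of (name, queue_level, start_ms, end_ms) tuples.
    """
    queues = [deque(), deque(), deque()]
    for name, need in jobs.items():
        queues[0].append((name, need))  # every new job enters Q0
    clock = 0
    trace = []
    while any(queues):
        # serve the highest-priority non-empty queue
        level = next(i for i, q in enumerate(queues) if q)
        name, remaining = queues[level].popleft()
        quantum = QUANTA[level]
        run = remaining if quantum is None else min(quantum, remaining)
        trace.append((name, level, clock, clock + run))
        clock += run
        remaining -= run
        if remaining > 0:
            queues[level + 1].append((name, remaining))  # demote unfinished job
    return trace


for step in mlfq({"A": 5, "B": 20, "C": 40}):
    print(step)
# ('A', 0, 0, 5)
# ('B', 0, 5, 13)
# ('C', 0, 13, 21)
# ('B', 1, 21, 33)
# ('C', 1, 33, 49)
# ('C', 2, 49, 65)
```

The short job A finishes within its first quantum, while the long job C drifts down to the FCFS queue, which is the behavior the example is meant to show.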

Multilevel feedback queues

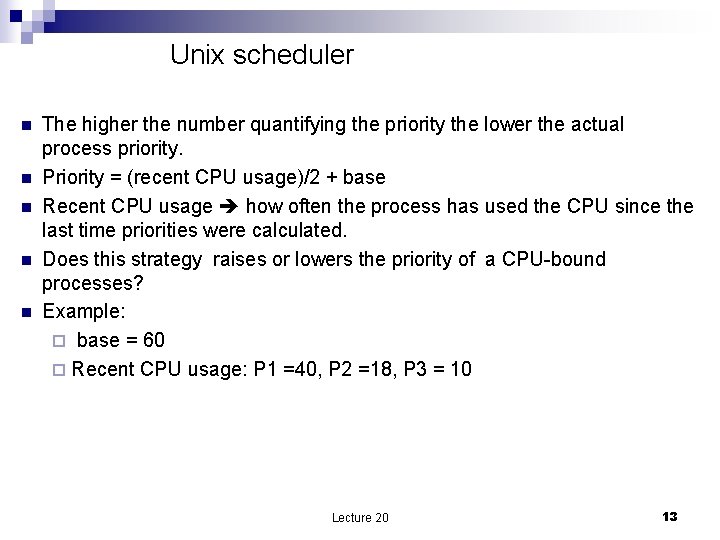

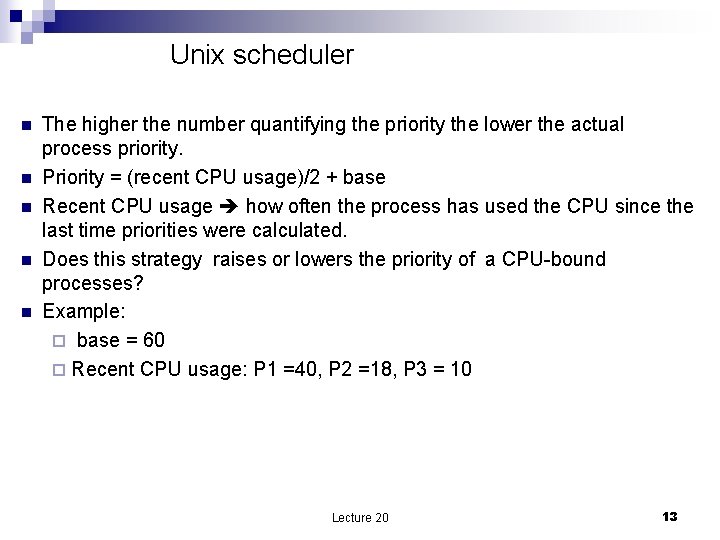

Unix scheduler

- The higher the number quantifying the priority, the lower the actual process priority.
- Priority = (recent CPU usage)/2 + base
- Recent CPU usage: how often the process has used the CPU since the last time priorities were calculated.
- Does this strategy raise or lower the priority of a CPU-bound process?
- Example:
  - base = 60
  - Recent CPU usage: P1 = 40, P2 = 18, P3 = 10
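The example numbers can be worked through directly from the formula. In the sketch below, the function name `unix_priority` and the use of floating-point division are assumptions; the base and usage values are the ones given on the slide.

```python
def unix_priority(recent_cpu_usage, base=60):
    """Priority = (recent CPU usage) / 2 + base; a larger number is a LOWER priority."""
    return recent_cpu_usage / 2 + base


usage = {"P1": 40, "P2": 18, "P3": 10}
priorities = {name: unix_priority(u) for name, u in usage.items()}
print(priorities)
# {'P1': 80.0, 'P2': 69.0, 'P3': 65.0}

# The process with the smallest number is scheduled first.
run_order = sorted(priorities, key=priorities.get)
print(run_order)
# ['P3', 'P2', 'P1']
```

P1, which used the CPU the most, ends up with the largest number and therefore the lowest priority, so under this formula heavy recent CPU use lowers the priority of a CPU-bound process.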

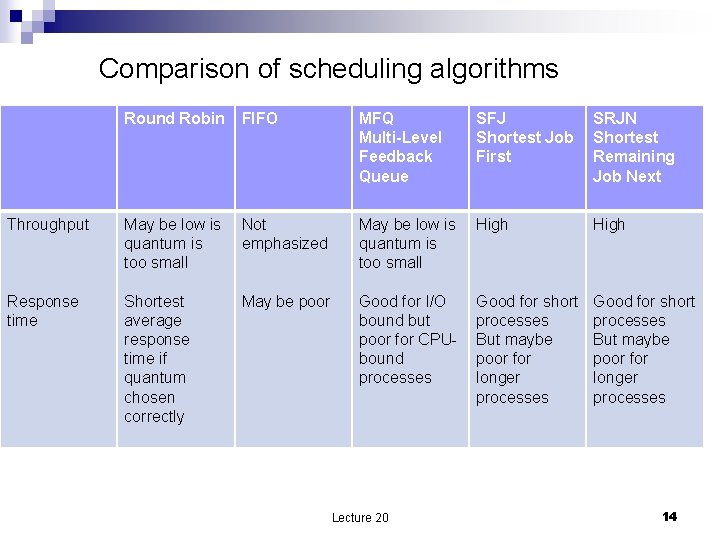

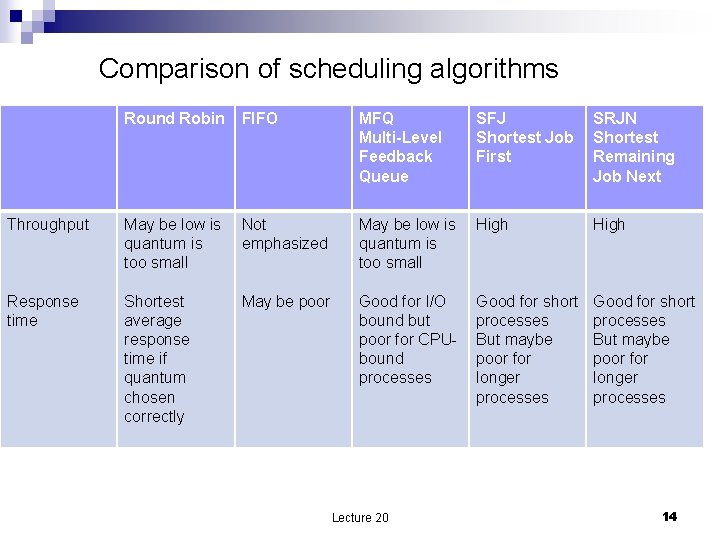

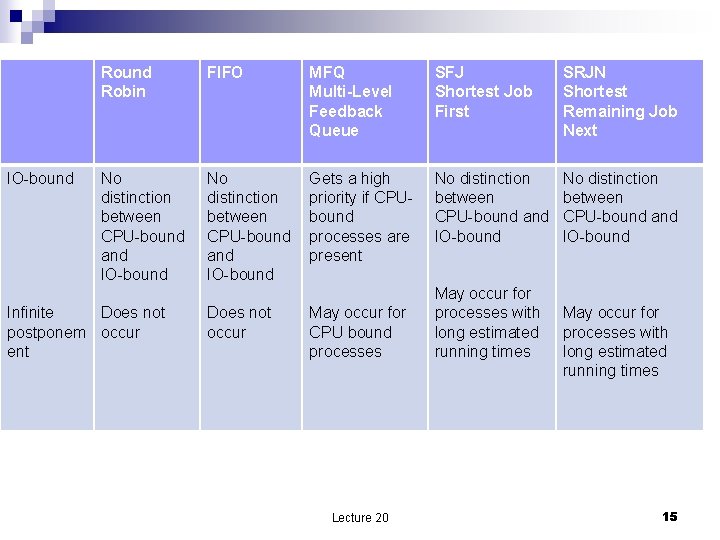

Comparison of scheduling algorithms

| | Round Robin | FIFO | MFQ (Multi-Level Feedback Queue) | SJF (Shortest Job First) | SRJN (Shortest Remaining Job Next) |
|---|---|---|---|---|---|
| Throughput | May be low if quantum is too small | Not emphasized | May be low if quantum is too small | High | High |
| Response time | Shortest average response time if quantum chosen correctly | May be poor | Good for I/O-bound but poor for CPU-bound processes | Good for short processes, but may be poor for longer processes | Good for short processes, but may be poor for longer processes |

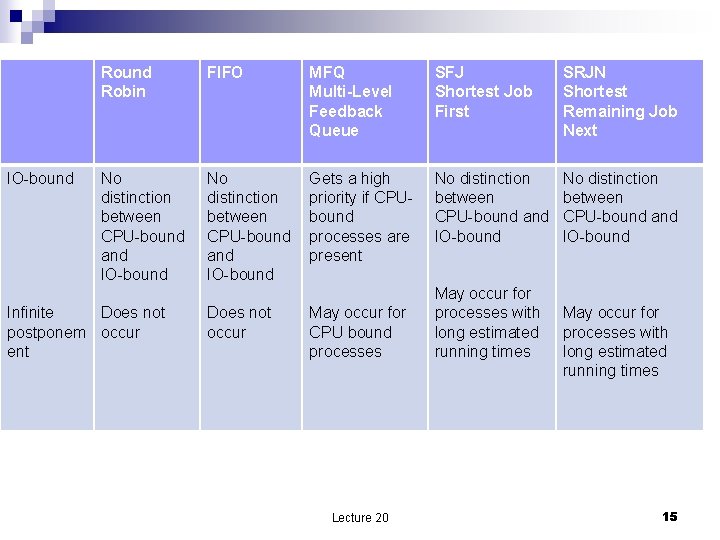

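| | Round Robin | FIFO | MFQ (Multi-Level Feedback Queue) | SJF (Shortest Job First) | SRJN (Shortest Remaining Job Next) |
|---|---|---|---|---|---|
| IO-bound | No distinction between CPU-bound and IO-bound | No distinction between CPU-bound and IO-bound | Gets a high priority if CPU-bound processes are present | No distinction between CPU-bound and IO-bound | No distinction between CPU-bound and IO-bound |
| Infinite postponement | Does not occur | Does not occur | May occur for CPU-bound processes | May occur for processes with long estimated running times | May occur for processes with long estimated running times |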

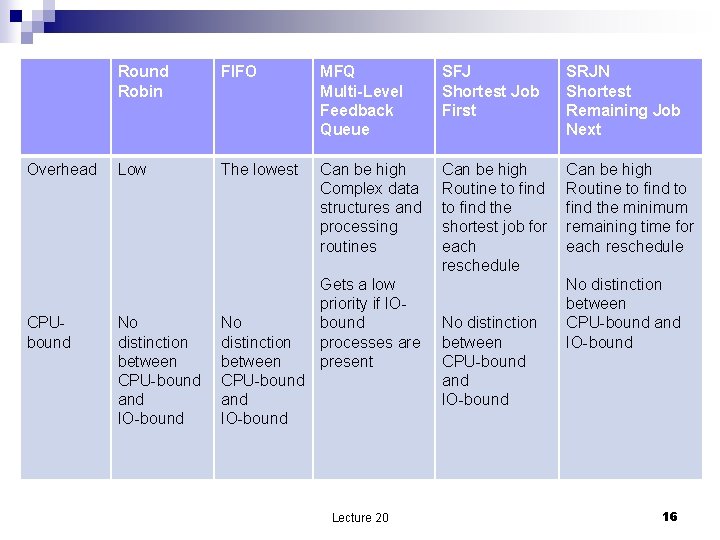

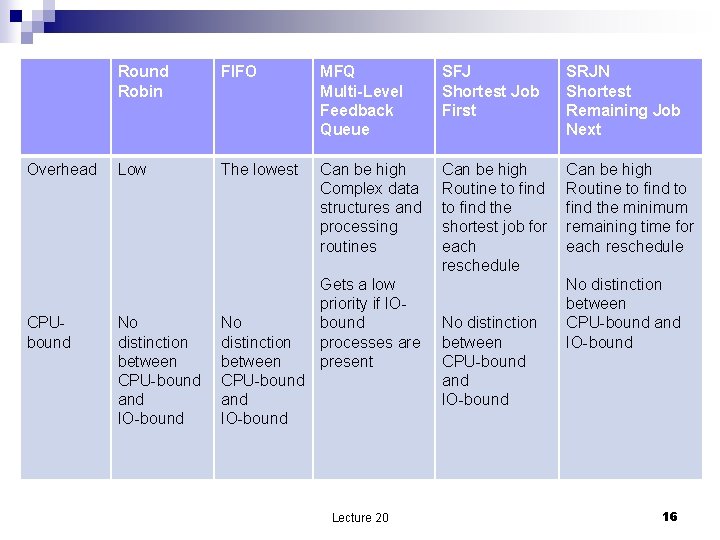

| | Round Robin | FIFO | MFQ (Multi-Level Feedback Queue) | SJF (Shortest Job First) | SRJN (Shortest Remaining Job Next) |
|---|---|---|---|---|---|
| Overhead | Low | The lowest | Can be high: complex data structures and processing routines | Can be high: routine to find the shortest job for each reschedule | Can be high: routine to find the minimum remaining time for each reschedule |
| CPU-bound | No distinction between CPU-bound and IO-bound | No distinction between CPU-bound and IO-bound | Gets a low priority if IO-bound processes are present | No distinction between CPU-bound and IO-bound | No distinction between CPU-bound and IO-bound |

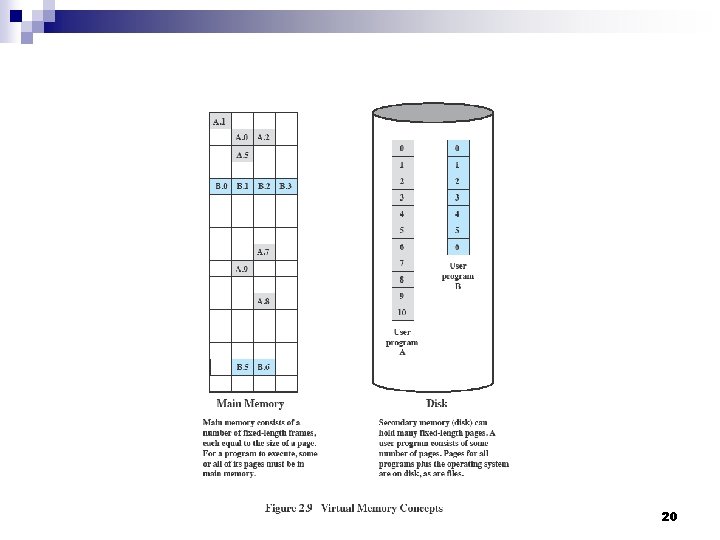

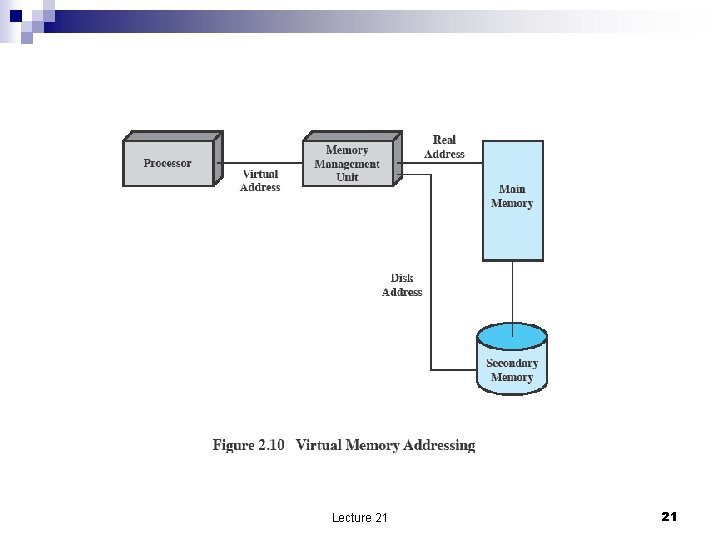

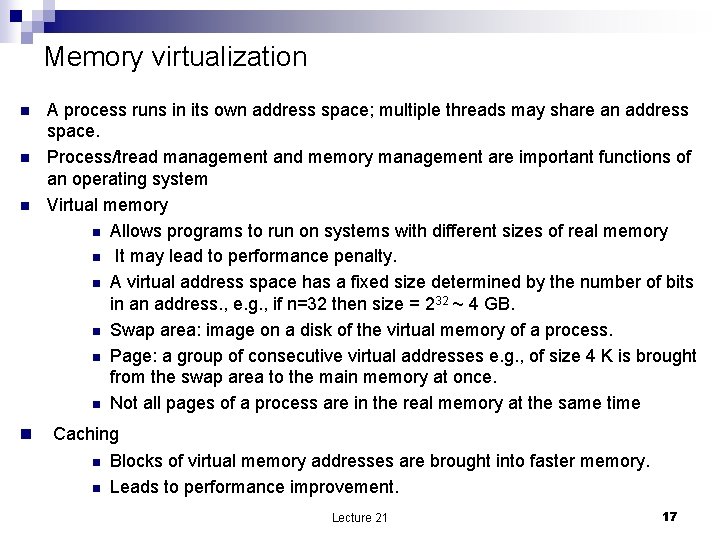

Memory virtualization

- A process runs in its own address space; multiple threads may share an address space.
- Process/thread management and memory management are important functions of an operating system.

Virtual memory

- Allows programs to run on systems with different sizes of real memory.
- It may lead to a performance penalty.
- A virtual address space has a fixed size determined by the number of bits in an address; e.g., if n = 32 then size = $2^{32}$ bytes, i.e., 4 GB.
- Swap area: image on a disk of the virtual memory of a process.
- Page: a group of consecutive virtual addresses, e.g., of size 4 KB, brought from the swap area to the main memory at once.
- Not all pages of a process are in the real memory at the same time.

Caching

- Blocks of virtual memory addresses are brought into faster memory.
- Leads to performance improvement.
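The arithmetic behind the address-space size and the page grouping can be checked with a short sketch. It assumes the 32-bit address and 4 KB page size used as examples above; the helper name `split` and the sample address are hypothetical.

```python
ADDRESS_BITS = 32          # n, the number of bits in a virtual address
PAGE_SIZE = 4 * 1024       # 4 KB page

address_space_size = 2 ** ADDRESS_BITS
offset_bits = PAGE_SIZE.bit_length() - 1   # 12 bits address a byte within a page
num_pages = address_space_size // PAGE_SIZE

print(address_space_size)  # 4294967296 bytes
print(num_pages)           # 1048576 pages


def split(vaddr):
    """Split a virtual address into (page number, offset within the page)."""
    return vaddr >> offset_bits, vaddr & (PAGE_SIZE - 1)


page, offset = split(0x12345678)
print(hex(page), hex(offset))  # 0x12345 0x678
```

All addresses that share the same page number are moved between the swap area and main memory together.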

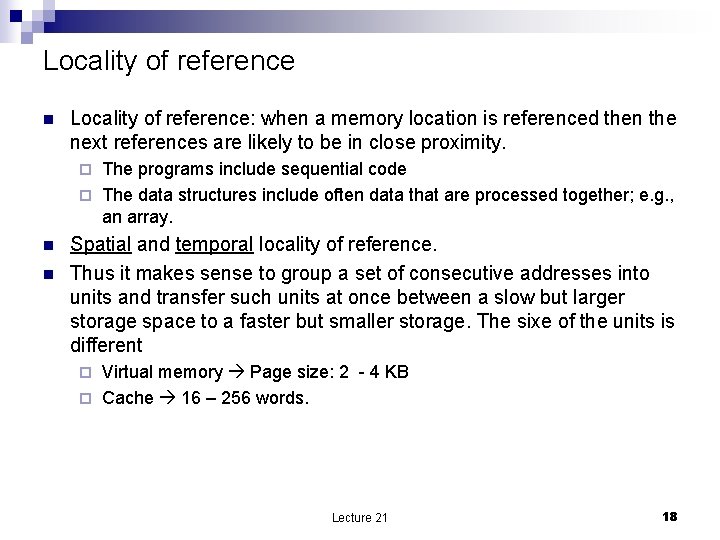

Locality of reference

- Locality of reference: when a memory location is referenced, the next references are likely to be in close proximity.
  - Programs include sequential code.
  - Data structures often include data that are processed together, e.g., an array.
- Spatial and temporal locality of reference.
- Thus it makes sense to group a set of consecutive addresses into units and transfer such units at once between a slower but larger storage space and a faster but smaller one.
- The size of the units differs:
  - Virtual memory: page size 2 - 4 KB
  - Cache: 16 - 256 words

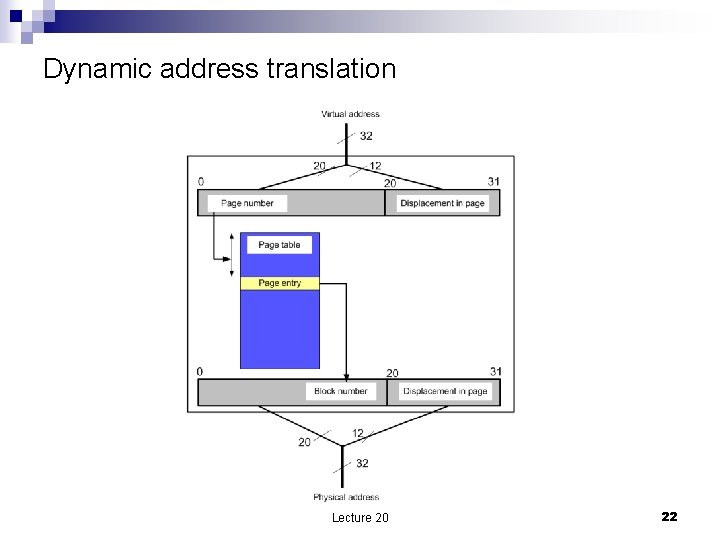

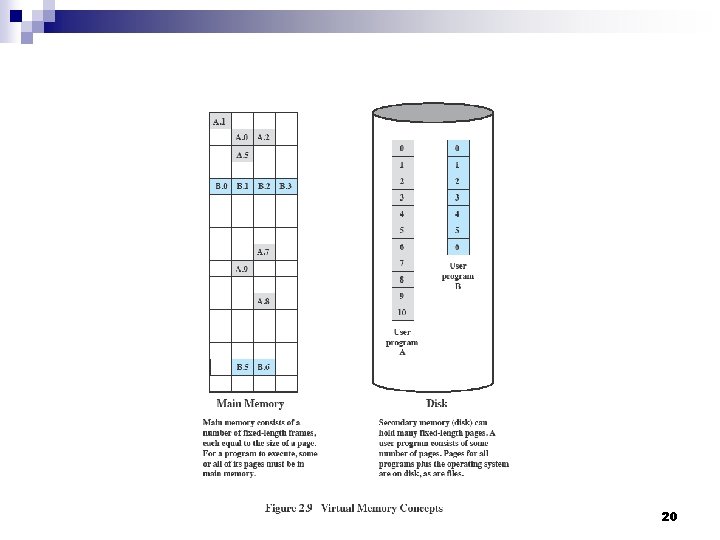

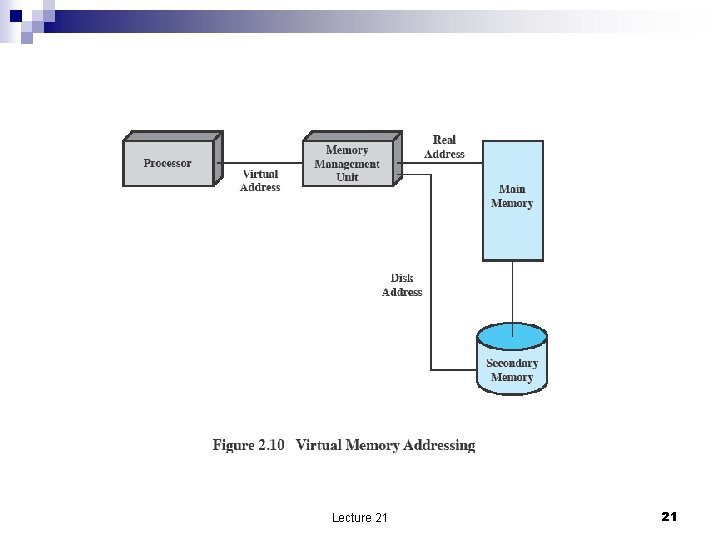

Virtual memory

- Several strategies:
  - Paging
  - Segmentation
  - Paging + segmentation
- At the time a process/thread is created, the system creates a page table for it; an entry in the page table contains:
  - The location in the swap area of the page
  - The address in main memory where the page resides, if the page has been brought in from the disk
  - Other information, e.g., dirty bit
- Page fault: a process/thread references an address in a page which is not in main memory.
- On-demand paging: a page is brought into main memory from the swap area on the disk when the process/thread references an address in that page.
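The page table entry fields and the on-demand behavior described above can be sketched as a toy model. Everything named here (`PageTableEntry`, `PageTable`, `translate`, the 4 KB page size, and the rule that page i lives at swap block i) is a hypothetical illustration; the sketch also assumes a free frame is always available, so page replacement is not modeled.

```python
from dataclasses import dataclass
from typing import Optional

PAGE_SIZE = 4096  # assumed page size in bytes


@dataclass
class PageTableEntry:
    swap_location: int             # where the page lives in the swap area
    frame: Optional[int] = None    # main-memory frame; None if not resident
    dirty: bool = False            # set when the page is written


class PageTable:
    def __init__(self, num_pages):
        # Built when the process is created; nothing is resident yet.
        self.entries = [PageTableEntry(swap_location=i) for i in range(num_pages)]
        self.next_free_frame = 0
        self.page_faults = 0

    def translate(self, vaddr, write=False):
        """Return the physical address, bringing the page in on a page fault."""
        page, offset = divmod(vaddr, PAGE_SIZE)
        entry = self.entries[page]
        if entry.frame is None:                   # page fault
            self.page_faults += 1
            entry.frame = self.next_free_frame    # stands in for a read from swap
            self.next_free_frame += 1
        if write:
            entry.dirty = True
        return entry.frame * PAGE_SIZE + offset


pt = PageTable(num_pages=4)
print(pt.translate(5000))             # 904: page 1 faults and lands in frame 0
print(pt.translate(5004))             # 908: same page, no new fault
print(pt.translate(100, write=True))  # 4196: page 0 faults and lands in frame 1
print(pt.page_faults)                 # 2
```

Only the pages that are actually referenced get a frame, which is why not all pages of a process need to be in real memory at the same time.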



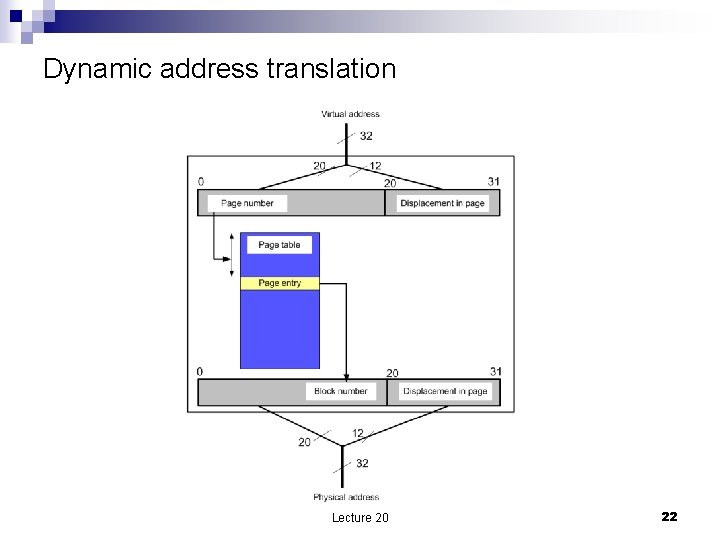

Dynamic address translation