COT 4600 Operating Systems, Fall 2009

Dan C. Marinescu
Office: HEC 439 B
Office hours: Tu-Th 3:00-4:00 PM

Lecture 27

- Schedule
  - Tuesday, November 24: Project phase 4 and HW 6 are due
  - Tuesday, December 1: Research project presentations instead of final exam
  - Thursday, December 3: Class review
- Last time:
  - More on scheduling
  - Network properties (Chapter 7), available online from the publisher of the textbook
  - Layers
  - Data Link Layer
- Today:
  - Transport protocols; end-to-end problems
- Next time:
  - Project discussion

Requirements of application-level processes

- The transport protocols are expected to:
  - Guarantee message delivery
  - Deliver messages in the same order they are sent
  - Deliver at most one copy of each message
  - Support arbitrarily large messages
  - Support synchronization between sender and receiver
  - Allow the receiver to apply flow control to the sender
  - Support multiple application processes on each host

Best-effort networks

- "Services" provided by best-effort networks. They may:
  - Drop messages
  - Re-order messages
  - Deliver duplicate copies of a given message
  - Limit messages to some finite size
  - Deliver messages after an arbitrarily long delay
- Challenge: develop algorithms that turn the less-than-desirable properties of the underlying network into the high-level services required by application programs.

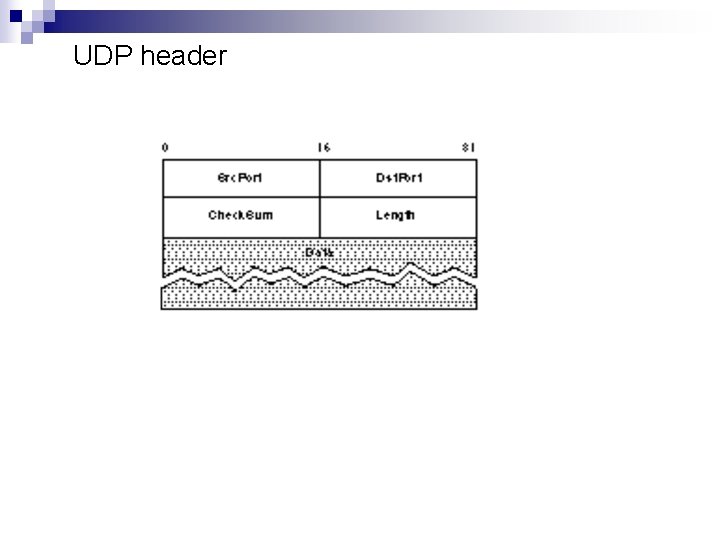

UDP

- Provides a process-to-process transport service.
- Unreliable and unordered datagram service
- No flow control
- Multiplexing
- Pseudo-header: fields from the IP header, (1)+(2)+(3), plus the UDP length field
  - (1) protocol number
  - (2) source IP address
  - (3) destination IP address
  - UDP length field
- UDP checksum: optional in IPv4 but mandatory in IPv6
  - computed over pseudo header + UDP header + data
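The checksum coverage listed above (pseudo header + UDP header + data) can be illustrated with a minimal Python sketch for IPv4. The function names `internet_checksum` and `udp_checksum` are hypothetical, and the pseudo-header layout (source address, destination address, a zero byte, the protocol number, the UDP length) is the standard IPv4 one rather than something spelled out on the slide.

```python
import socket
import struct

def internet_checksum(data: bytes) -> int:
    """One's-complement sum of 16-bit words, then complemented."""
    if len(data) % 2:
        data += b"\x00"  # pad to a whole number of 16-bit words
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # fold the carry back in
    return ~total & 0xFFFF

def udp_checksum(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                 payload: bytes) -> int:
    """Checksum over pseudo header + UDP header + data (IPv4 only)."""
    length = 8 + len(payload)  # the UDP header is 8 bytes long
    pseudo = (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
              + struct.pack("!BBH", 0, socket.IPPROTO_UDP, length))
    header = struct.pack("!HHHH", src_port, dst_port, length, 0)  # checksum field zeroed
    checksum = internet_checksum(pseudo + header + payload)
    # In IPv4 a transmitted 0 means "no checksum", so a computed 0 is sent as 0xFFFF.
    return checksum or 0xFFFF
```

A receiver can verify a datagram by running the same sum over the pseudo header and the received datagram with the checksum field left in place; a result of 0 means no error was detected.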

UDP ports

- Endpoints are identified by ports.
  - Servers run at well-known ports (e.g., Web server at port 80, Domain Server at port 53)
  - See /etc/services on Unix

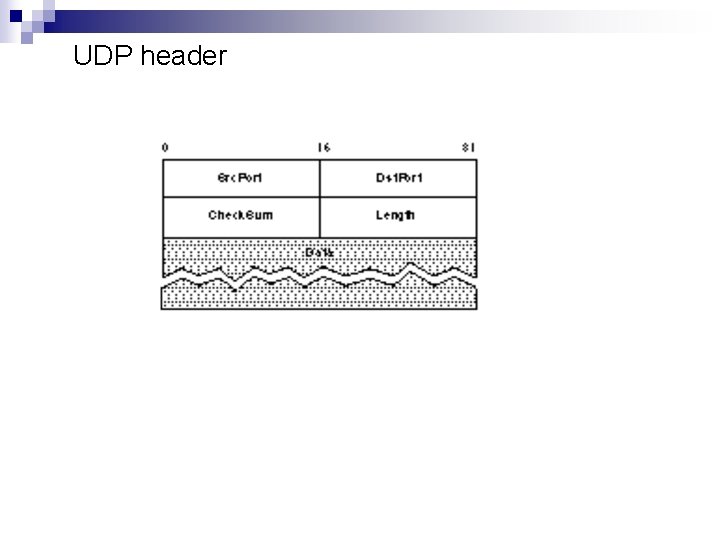

UDP header

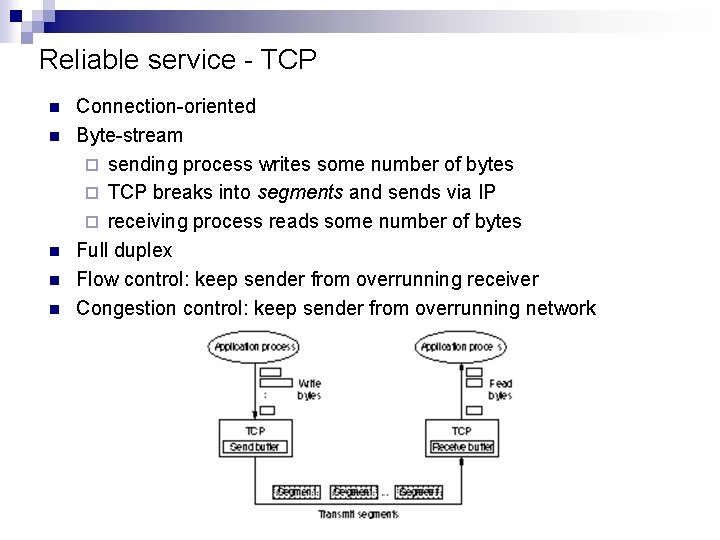

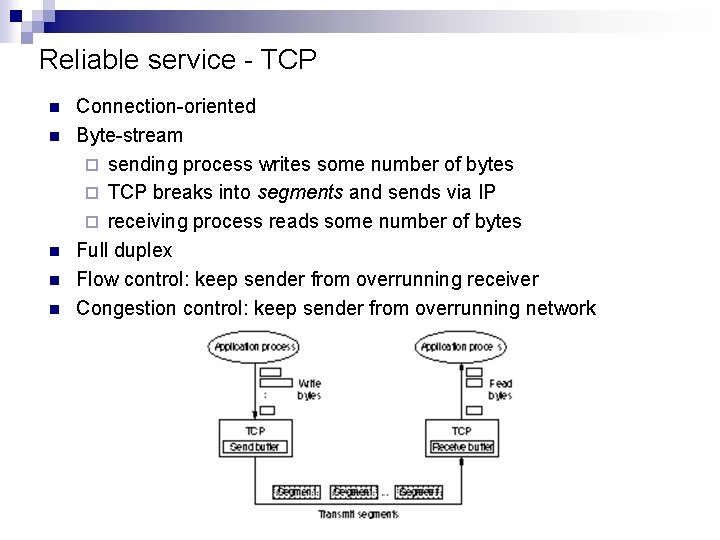

Reliable service - TCP

- Connection-oriented
- Byte-stream
  - sending process writes some number of bytes
  - TCP breaks the stream into segments and sends them via IP
  - receiving process reads some number of bytes
- Full duplex
- Flow control: keep the sender from overrunning the receiver
- Congestion control: keep the sender from overrunning the network

End-to-end issues

- TCP is based on the sliding window protocol used at the data link level, but the situation is very different:
  - Potentially connects many different hosts: need explicit connection establishment and termination
  - Potentially different RTT: need an adaptive timeout mechanism
  - Potentially long delay in the network: need to be prepared for the arrival of very old packets
  - Potentially different capacity at the destination: need to accommodate different amounts of buffering
  - Potentially different network capacity: need to be prepared for network congestion

End-to-end issues (cont’d)

- At the link level, congestion is visible in the queue of packets at the sender. Network congestion is much tougher to cope with, detect, and then prevent.
- TCP uses the sliding window algorithm on an end-to-end basis to provide reliable, ordered delivery. X.25 uses the sliding window protocol on a hop-by-hop basis. A sequence of hop-by-hop guarantees does not add up to an end-to-end guarantee.
- End-to-end argument: a function should not be provided at a lower level of a system unless it can be completely and correctly implemented at that level. Yet for performance optimization a function may be incompletely provided at a low level. E.g., error detection is provided on a hop-by-hop basis: why send a corrupted packet all the way?

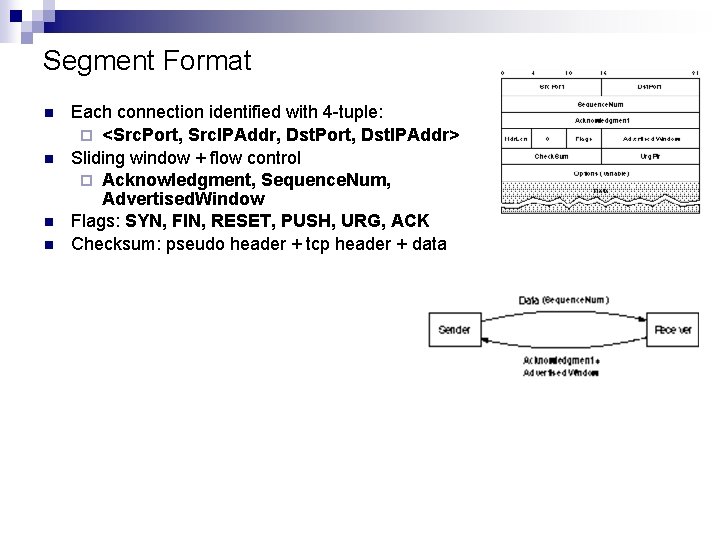

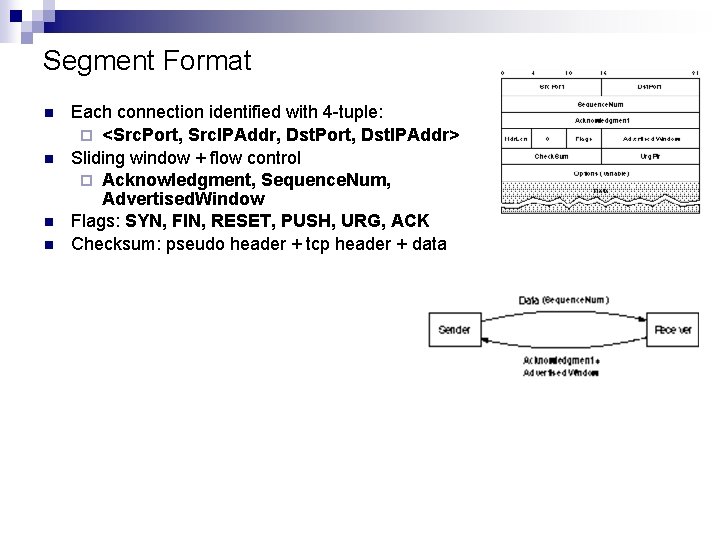

Segment Format

- Each connection is identified by a 4-tuple: <SrcPort, SrcIPAddr, DstPort, DstIPAddr>
- Sliding window + flow control: Acknowledgment, SequenceNum, AdvertisedWindow
- Flags: SYN, FIN, RESET, PUSH, URG, ACK
- Checksum: pseudo header + TCP header + data
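As an illustration of the segment fields named above, the following Python sketch unpacks the fixed 20-byte part of a TCP header. The bit positions follow the standard TCP header layout, which the slide text itself does not spell out; `TcpHeader` and `parse_tcp_header` are hypothetical names, and TCP options are ignored.

```python
import struct
from typing import NamedTuple

# Flag bit masks in the low bits of the 16-bit offset/flags word.
FLAG_BITS = {"FIN": 0x01, "SYN": 0x02, "RESET": 0x04,
             "PUSH": 0x08, "ACK": 0x10, "URG": 0x20}

class TcpHeader(NamedTuple):
    src_port: int
    dst_port: int
    sequence_num: int
    acknowledgment: int
    header_len: int          # header length in bytes
    flags: frozenset
    advertised_window: int
    checksum: int
    urgent_ptr: int

def parse_tcp_header(segment: bytes) -> TcpHeader:
    """Decode the first 20 bytes of a TCP segment (network byte order)."""
    (src, dst, seq, ack, off_flags,
     window, checksum, urgent) = struct.unpack("!HHIIHHHH", segment[:20])
    header_len = (off_flags >> 12) * 4  # data offset is counted in 32-bit words
    flags = frozenset(name for name, bit in FLAG_BITS.items() if off_flags & bit)
    return TcpHeader(src, dst, seq, ack, header_len, flags, window, checksum, urgent)
```

The source and destination ports come from this header; the two IP addresses that complete the 4-tuple come from the enclosing IP header.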

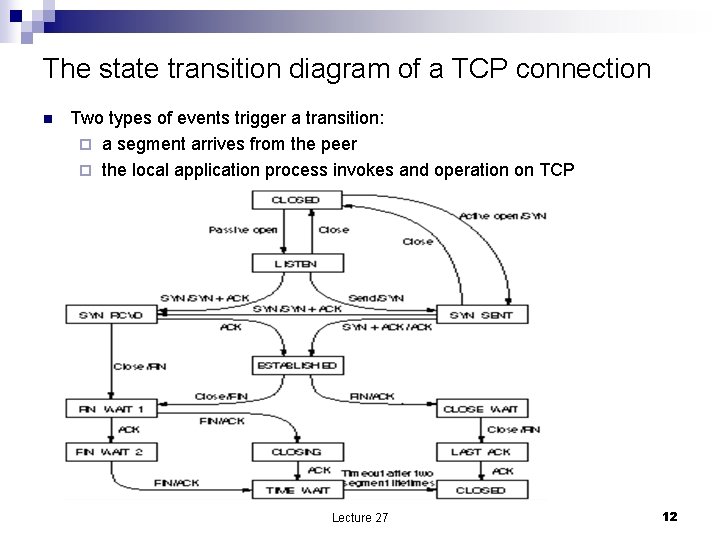

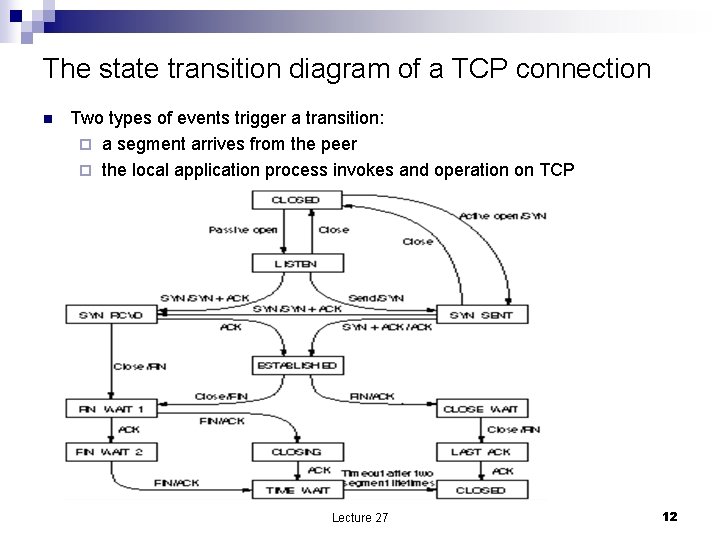

The state transition diagram of a TCP connection

- Two types of events trigger a transition:
  - a segment arrives from the peer
  - the local application process invokes an operation on TCP

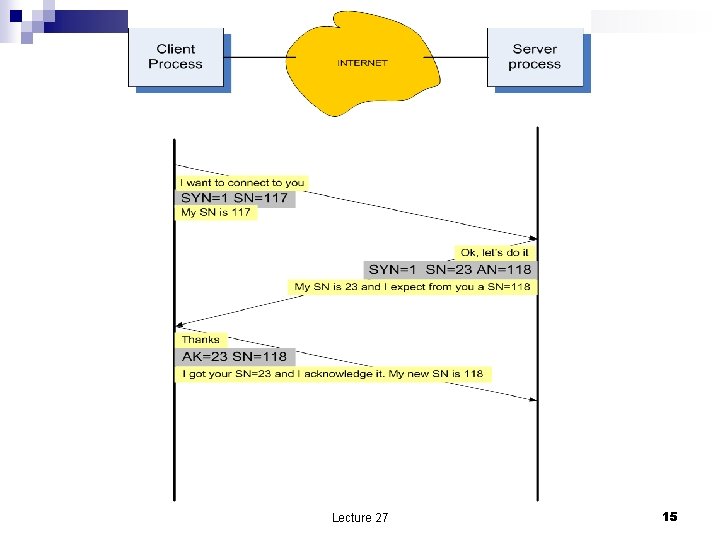

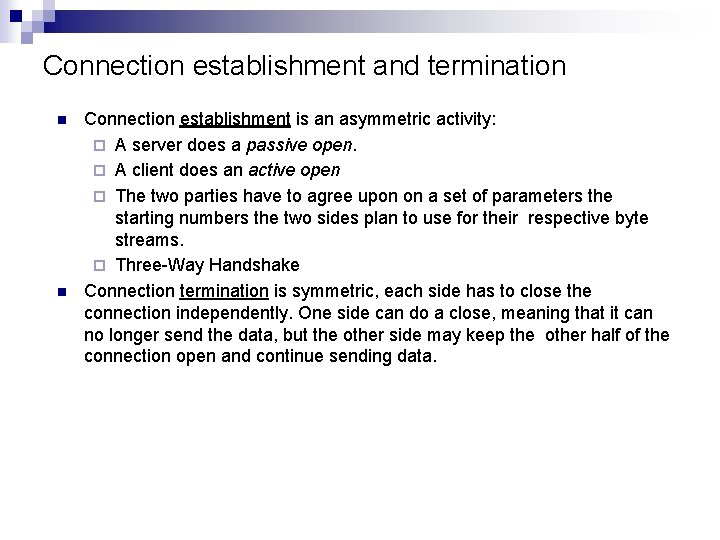

Connection establishment and termination

- Connection establishment is an asymmetric activity:
  - A server does a passive open.
  - A client does an active open.
  - The two parties have to agree on a set of parameters, such as the starting sequence numbers the two sides plan to use for their respective byte streams.
  - Three-way handshake
- Connection termination is symmetric: each side has to close the connection independently. One side can do a close, meaning that it can no longer send data, but the other side may keep the other half of the connection open and continue sending data.

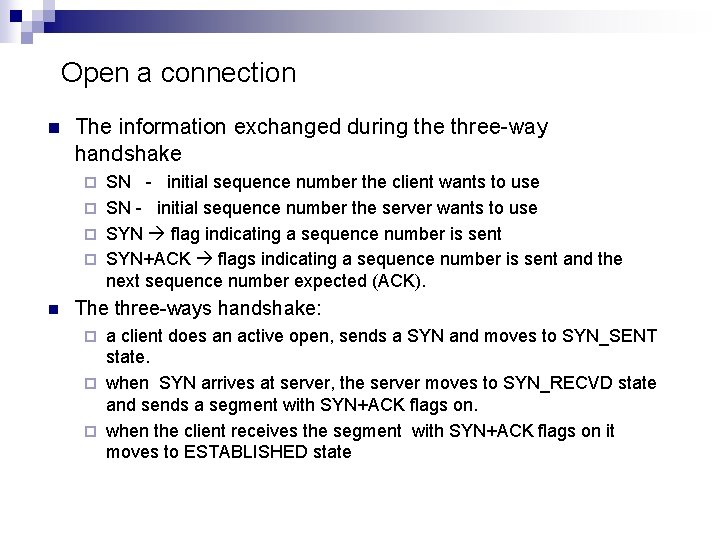

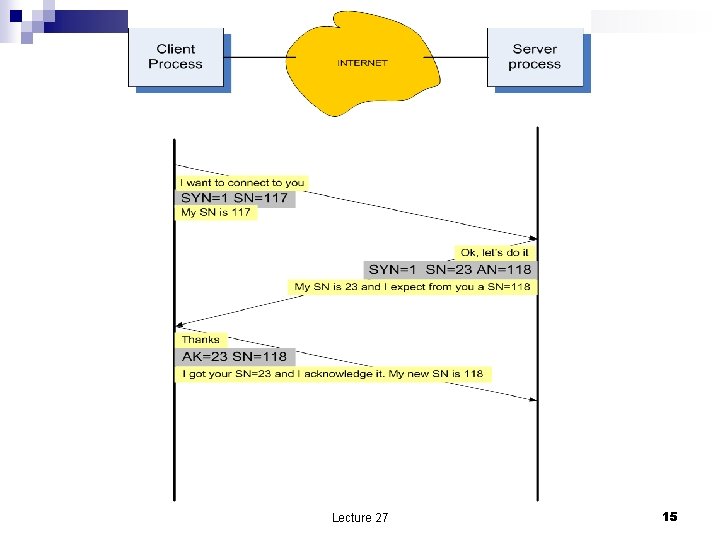

Open a connection

- The information exchanged during the three-way handshake:
  - SN (client): initial sequence number the client wants to use
  - SN (server): initial sequence number the server wants to use
  - SYN flag: indicates that a sequence number is sent
  - SYN+ACK flags: indicate that a sequence number is sent together with the next sequence number expected (ACK)
- The three-way handshake:
  - A client does an active open, sends a SYN, and moves to the SYN_SENT state.
  - When the SYN arrives at the server, the server moves to the SYN_RECVD state and sends a segment with the SYN+ACK flags on.
  - When the client receives the segment with the SYN+ACK flags on, it moves to the ESTABLISHED state and replies with an ACK; when that ACK arrives, the server also moves to ESTABLISHED.
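The exchange can be traced with a small Python sketch. It is a simplified model with no loss or timeouts; the function name and the tuple layout are hypothetical. The final ACK from the client, after which the server also becomes ESTABLISHED, is standard TCP behavior and is included to complete the three segments.

```python
def three_way_handshake(client_isn: int, server_isn: int):
    """Return (trace, client_state, server_state) for a loss-free handshake.

    Each trace entry is (direction, flags, sequence_num, acknowledgment).
    """
    client, server = "CLOSED", "LISTEN"  # the server has done a passive open
    trace = []

    # 1. Active open: the client sends SYN with its initial sequence number.
    trace.append(("client->server", {"SYN"}, client_isn, None))
    client = "SYN_SENT"

    # 2. The server answers SYN+ACK: its own sequence number plus the
    #    next sequence number it expects from the client.
    server = "SYN_RECVD"
    trace.append(("server->client", {"SYN", "ACK"}, server_isn, client_isn + 1))

    # 3. The client acknowledges the server's SYN and is established.
    client = "ESTABLISHED"
    trace.append(("client->server", {"ACK"}, client_isn + 1, server_isn + 1))
    server = "ESTABLISHED"  # on arrival of the final ACK
    return trace, client, server
```

Each acknowledgment carries the other side's initial sequence number plus one, because the SYN itself consumes one sequence number.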


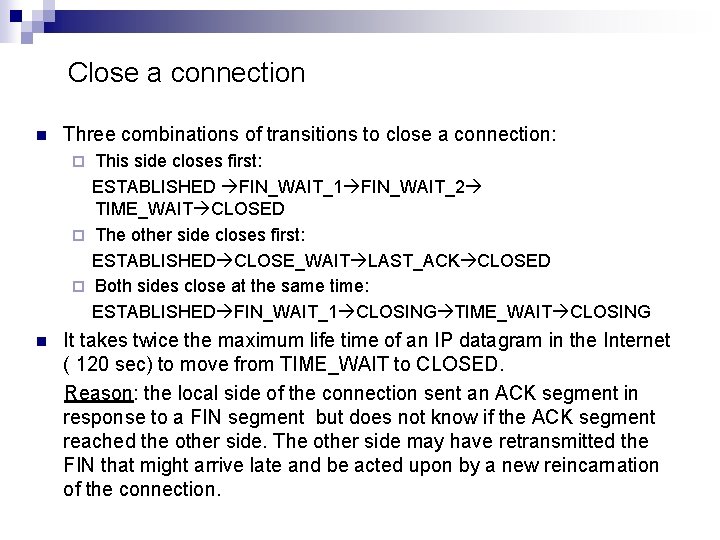

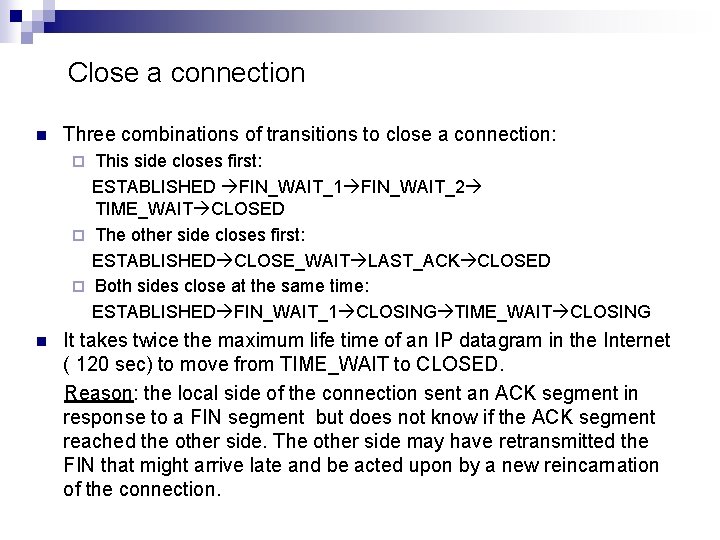

Close a connection

- Three combinations of transitions to close a connection:
  - This side closes first: ESTABLISHED → FIN_WAIT_1 → FIN_WAIT_2 → TIME_WAIT → CLOSED
  - The other side closes first: ESTABLISHED → CLOSE_WAIT → LAST_ACK → CLOSED
  - Both sides close at the same time: ESTABLISHED → FIN_WAIT_1 → CLOSING → TIME_WAIT → CLOSED
- It takes twice the maximum lifetime of an IP datagram in the Internet (120 sec) to move from TIME_WAIT to CLOSED. Reason: the local side of the connection sent an ACK segment in response to a FIN segment but does not know whether the ACK reached the other side. The other side may have retransmitted the FIN, which might arrive late and be acted upon by a new incarnation of the connection.
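The three closing paths can be checked against a small transition table. This is a sketch only: the event labels ("close", "recv FIN", "recv ACK", "2MSL timeout") are names chosen here for illustration, and only the transitions needed for the three paths are listed. Every path ends in CLOSED.

```python
# (current state, event) -> next state, restricted to connection teardown.
CLOSE_TRANSITIONS = {
    ("ESTABLISHED", "close"): "FIN_WAIT_1",      # this side sends FIN first
    ("ESTABLISHED", "recv FIN"): "CLOSE_WAIT",   # the other side closed first
    ("FIN_WAIT_1", "recv ACK"): "FIN_WAIT_2",
    ("FIN_WAIT_1", "recv FIN"): "CLOSING",       # simultaneous close
    ("FIN_WAIT_2", "recv FIN"): "TIME_WAIT",
    ("CLOSING", "recv ACK"): "TIME_WAIT",
    ("TIME_WAIT", "2MSL timeout"): "CLOSED",
    ("CLOSE_WAIT", "close"): "LAST_ACK",
    ("LAST_ACK", "recv ACK"): "CLOSED",
}

def run(events, state="ESTABLISHED"):
    """Apply events in order and return the list of states visited."""
    path = [state]
    for event in events:
        state = CLOSE_TRANSITIONS[(state, event)]
        path.append(state)
    return path
```

For example, `run(["recv FIN", "close", "recv ACK"])` walks the path taken when the other side closes first.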

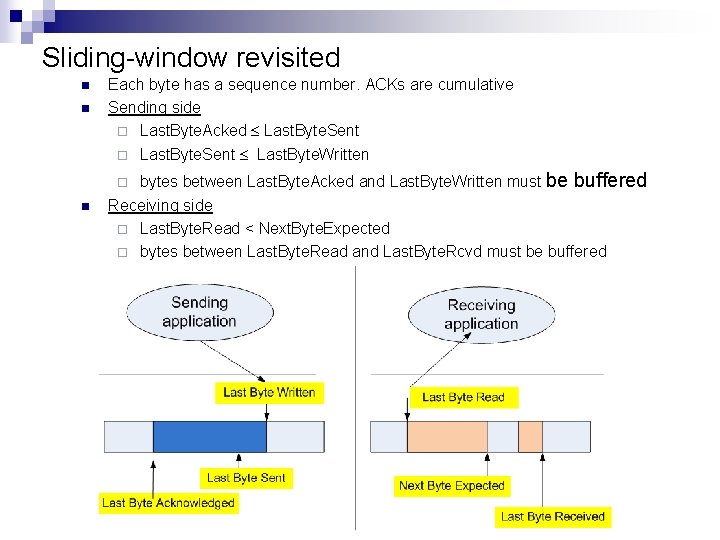

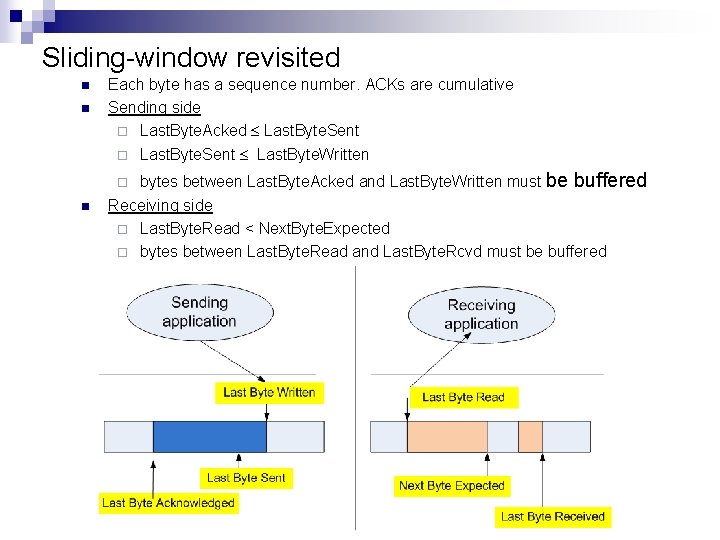

Sliding-window revisited

- Each byte has a sequence number. ACKs are cumulative.
- Sending side
  - LastByteAcked ≤ LastByteSent
  - LastByteSent ≤ LastByteWritten
  - bytes between LastByteAcked and LastByteWritten must be buffered
- Receiving side
  - LastByteRead < NextByteExpected
  - bytes between LastByteRead and LastByteRcvd must be buffered

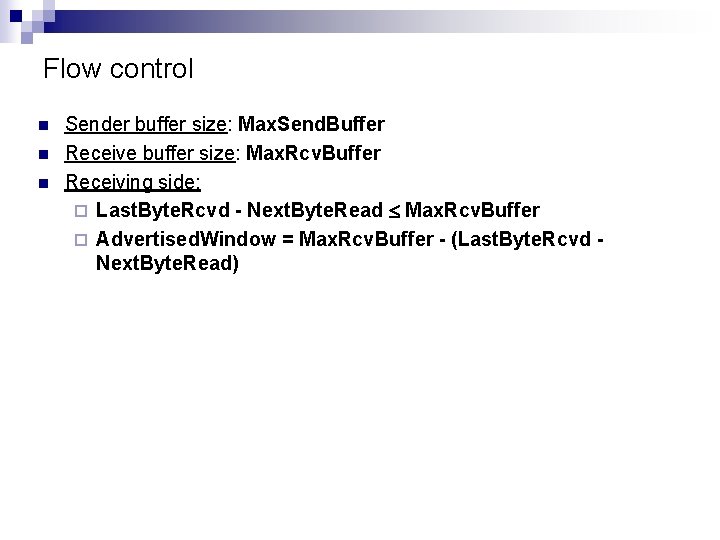

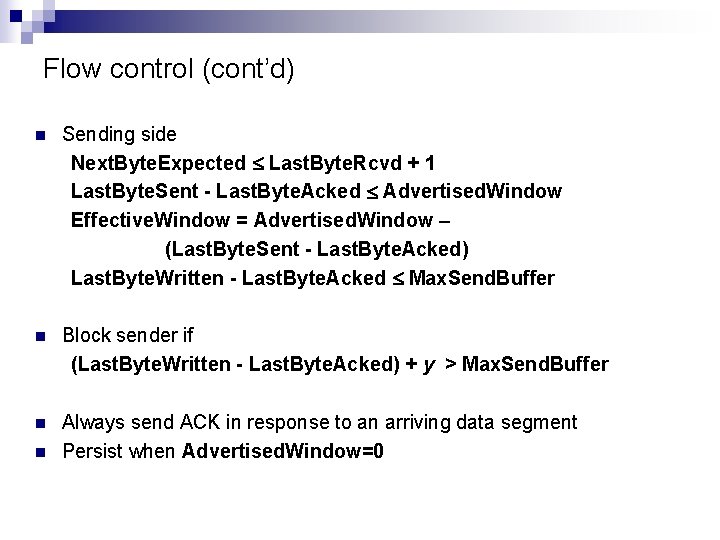

Flow control

- Sender buffer size: MaxSendBuffer
- Receive buffer size: MaxRcvBuffer
- Receiving side:
  - LastByteRcvd - NextByteRead ≤ MaxRcvBuffer
  - AdvertisedWindow = MaxRcvBuffer - (LastByteRcvd - NextByteRead)

Flow control (cont’d)

- Receiving side invariant: NextByteExpected ≤ LastByteRcvd + 1
- Sending side
  - LastByteSent - LastByteAcked ≤ AdvertisedWindow
  - EffectiveWindow = AdvertisedWindow - (LastByteSent - LastByteAcked)
  - LastByteWritten - LastByteAcked ≤ MaxSendBuffer
- Block the sender if (LastByteWritten - LastByteAcked) + y > MaxSendBuffer, where y is the number of bytes the application is trying to write
- Always send an ACK in response to an arriving data segment
- Persist when AdvertisedWindow = 0
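The window arithmetic of the two flow control slides can be written as a short Python sketch. The class and method names are hypothetical; the field names mirror the slide variables, and `y` is taken to be the number of bytes the application wants to write.

```python
from dataclasses import dataclass

@dataclass
class Receiver:
    max_rcv_buffer: int
    last_byte_rcvd: int = 0
    next_byte_read: int = 0

    def advertised_window(self) -> int:
        # AdvertisedWindow = MaxRcvBuffer - (LastByteRcvd - NextByteRead)
        return self.max_rcv_buffer - (self.last_byte_rcvd - self.next_byte_read)

@dataclass
class Sender:
    max_send_buffer: int
    last_byte_acked: int = 0
    last_byte_sent: int = 0
    last_byte_written: int = 0

    def effective_window(self, advertised_window: int) -> int:
        # EffectiveWindow = AdvertisedWindow - (LastByteSent - LastByteAcked)
        return advertised_window - (self.last_byte_sent - self.last_byte_acked)

    def would_block(self, y: int) -> bool:
        # Block if (LastByteWritten - LastByteAcked) + y > MaxSendBuffer
        return (self.last_byte_written - self.last_byte_acked) + y > self.max_send_buffer
```

With a 4096-byte receive buffer holding 2000 unread bytes, the receiver advertises 2096 bytes; a sender with 1500 bytes already in flight may then send only 596 more.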

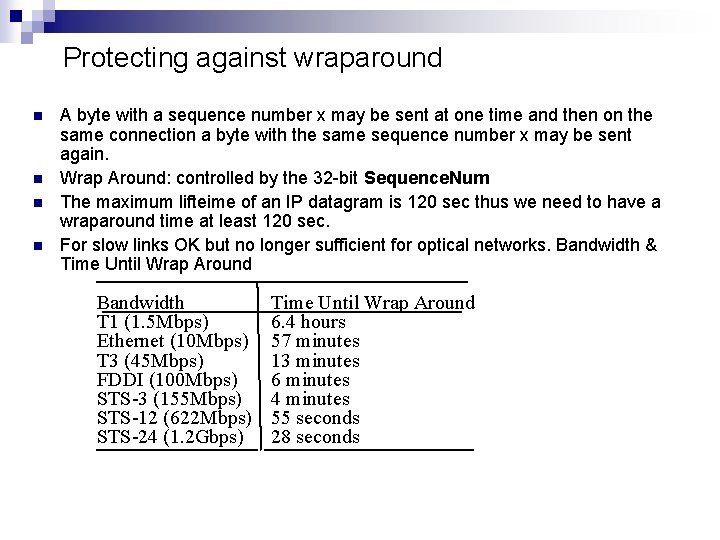

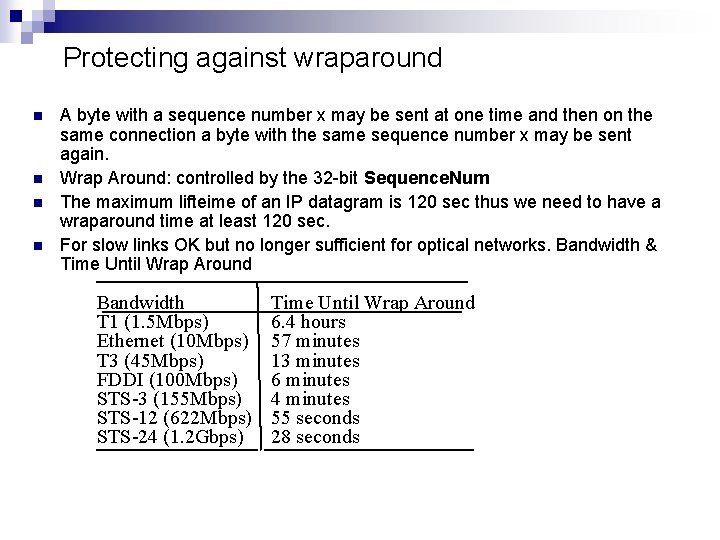

Protecting against wraparound

- A byte with a sequence number x may be sent at one time, and then, on the same connection, a byte with the same sequence number x may be sent again.
- Wraparound is controlled by the 32-bit SequenceNum field.
- The maximum lifetime of an IP datagram is 120 sec, thus we need a wraparound time of at least 120 sec. This is fine for slow links but no longer sufficient for optical networks.

| Bandwidth | Time Until Wraparound |
| --- | --- |
| T1 (1.5 Mbps) | 6.4 hours |
| Ethernet (10 Mbps) | 57 minutes |
| T3 (45 Mbps) | 13 minutes |
| FDDI (100 Mbps) | 6 minutes |
| STS-3 (155 Mbps) | 4 minutes |
| STS-12 (622 Mbps) | 55 seconds |
| STS-24 (1.2 Gbps) | 28 seconds |
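The wraparound times follow from dividing the size of the 32-bit sequence space (one sequence number per byte) by the link rate. A minimal sketch, assuming the sender transmits continuously at the full link bandwidth; the function name is hypothetical:

```python
SEQUENCE_SPACE_BYTES = 2 ** 32  # one sequence number per byte

def wraparound_seconds(bandwidth_bps: float) -> float:
    """Time to send 2^32 bytes at the given rate in bits per second."""
    return SEQUENCE_SPACE_BYTES * 8 / bandwidth_bps

for name, bps in [("T1", 1.5e6), ("Ethernet", 10e6), ("STS-12", 622e6)]:
    print(f"{name}: {wraparound_seconds(bps):.0f} s")
```

At 622 Mbps the sequence space is exhausted in under 120 sec, which is why the 32-bit field is no longer sufficient on fast links.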

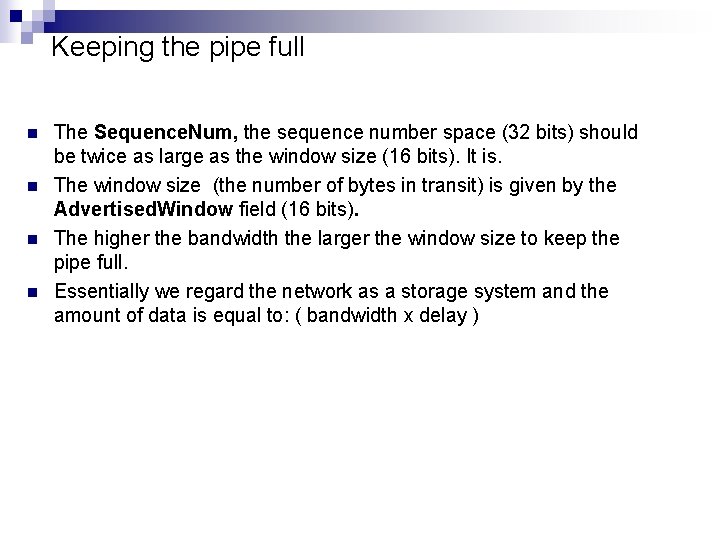

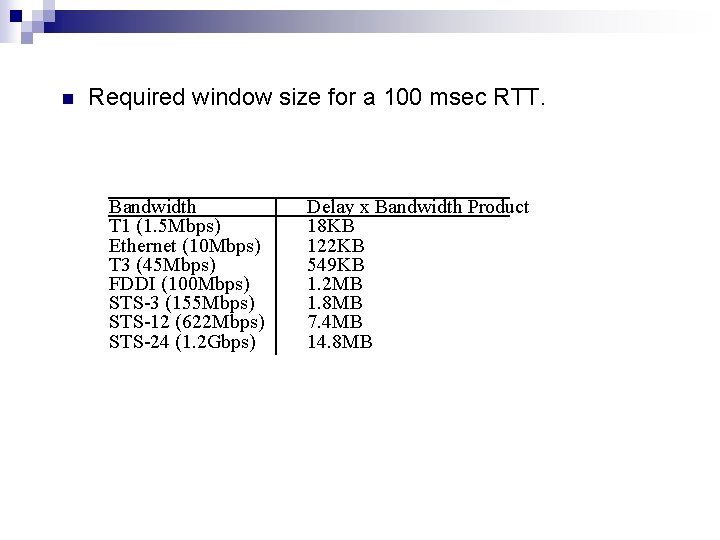

Keeping the pipe full

- The sequence number space (SequenceNum, 32 bits) should be at least twice as large as the window size (16 bits). It is.
- The window size (the number of bytes in transit) is given by the AdvertisedWindow field (16 bits).
- The higher the bandwidth, the larger the window size needed to keep the pipe full.
- Essentially, we regard the network as a storage system, and the amount of data it holds is equal to bandwidth × delay.

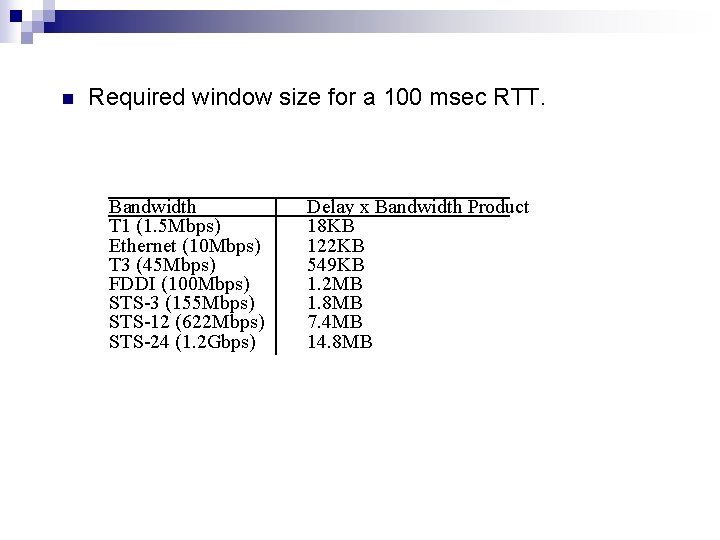

Required window size for a 100 msec RTT:

| Bandwidth | Delay × Bandwidth Product |
| --- | --- |
| T1 (1.5 Mbps) | 18 KB |
| Ethernet (10 Mbps) | 122 KB |
| T3 (45 Mbps) | 549 KB |
| FDDI (100 Mbps) | 1.2 MB |
| STS-3 (155 Mbps) | 1.8 MB |
| STS-12 (622 Mbps) | 7.4 MB |
| STS-24 (1.2 Gbps) | 14.8 MB |
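The required window is the delay × bandwidth product expressed in bytes. A minimal sketch, using integer arithmetic and the nominal link rates (the table entries appear to be given in units of 1024 bytes, which is an assumption here); the function name is hypothetical:

```python
MAX_16_BIT_WINDOW = 2 ** 16 - 1  # largest value a 16-bit AdvertisedWindow can carry

def window_bytes(bandwidth_bps: int, rtt_ms: int = 100) -> int:
    """Bytes in flight needed to keep the pipe full for the given RTT."""
    return bandwidth_bps * rtt_ms // 8000  # bits/s * ms / (8 bits * 1000 ms/s)

t1 = window_bytes(1_500_000)        # 18750 bytes, about 18 KB
ethernet = window_bytes(10_000_000) # 125000 bytes, about 122 KB
```

Already at 10 Mbps the required window exceeds what a 16-bit AdvertisedWindow field can express.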

Adaptive retransmission: original algorithm

- Measure SampleRTT for each segment/ACK pair
- Compute weighted average of RTT
  - EstimatedRTT = a × EstimatedRTT + b × SampleRTT
  - where a + b = 1
  - a between 0.8 and 0.9
  - b between 0.1 and 0.2
- Set timeout based on EstimatedRTT
  - TimeOut = 2 × EstimatedRTT
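The running average can be sketched in a few lines of Python. The value a = 0.875 is an assumption chosen from the stated 0.8 to 0.9 range, and the function names are hypothetical.

```python
def update_estimated_rtt(estimated_rtt: float, sample_rtt: float,
                         a: float = 0.875) -> float:
    """EstimatedRTT = a * EstimatedRTT + b * SampleRTT, with a + b = 1."""
    b = 1 - a
    return a * estimated_rtt + b * sample_rtt

def timeout(estimated_rtt: float) -> float:
    """TimeOut = 2 * EstimatedRTT."""
    return 2 * estimated_rtt

est = update_estimated_rtt(100.0, 200.0)  # one slow sample nudges the estimate up
```

A large a makes the estimate stable but slow to follow real changes in RTT; a small a makes it track quickly but react to every fluctuation.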

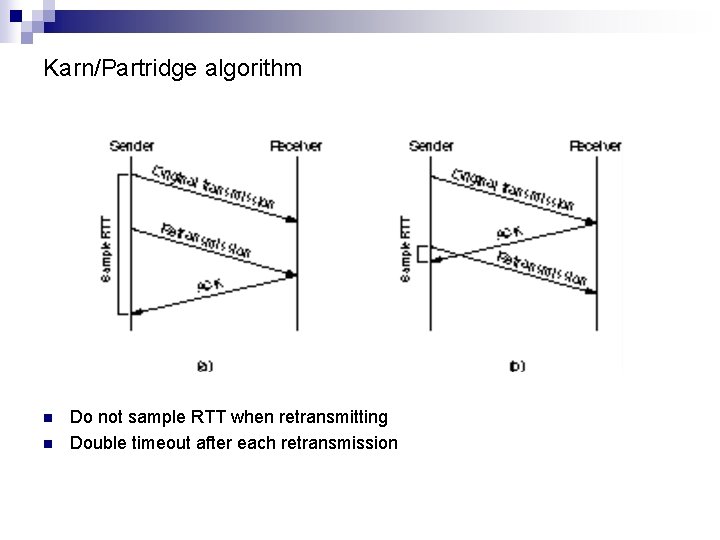

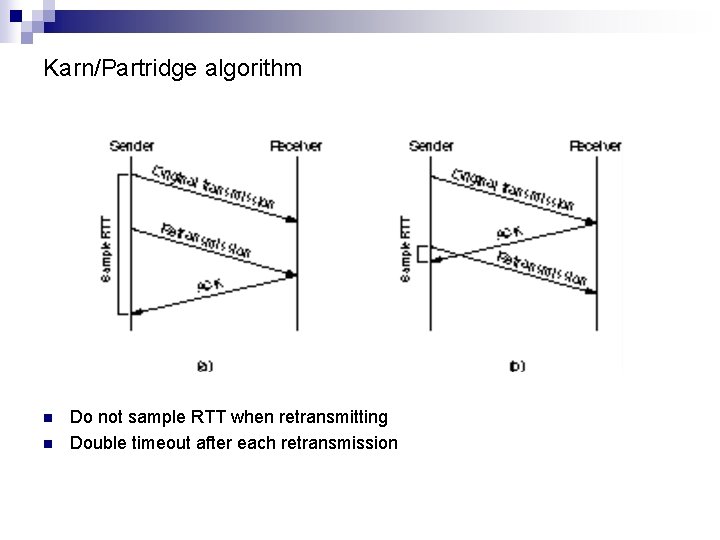

Karn/Partridge algorithm

- Do not sample RTT when retransmitting
- Double timeout after each retransmission
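Both rules fit in a few lines. The sketch below is illustrative only: the class name `KarnTimer` and its methods are hypothetical, and a real implementation would feed the accepted samples into an RTT estimator.

```python
class KarnTimer:
    """Tracks the retransmission timeout and the RTT samples worth keeping."""

    def __init__(self, initial_timeout: float):
        self.timeout = initial_timeout
        self.samples = []

    def on_timeout(self) -> None:
        # Rule 2: double the timeout after each retransmission.
        self.timeout *= 2

    def on_ack(self, measured_rtt: float, was_retransmitted: bool) -> None:
        # Rule 1: an ACK for a retransmitted segment is ambiguous (it may
        # answer the original or the retransmission), so skip the sample.
        if not was_retransmitted:
            self.samples.append(measured_rtt)
```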

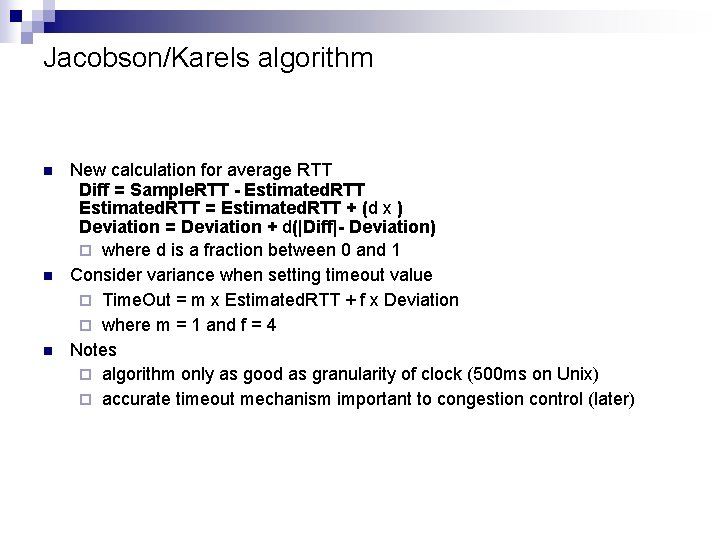

Jacobson/Karels algorithm

- New calculation for average RTT
  - Diff = SampleRTT - EstimatedRTT
  - EstimatedRTT = EstimatedRTT + (d × Diff)
  - Deviation = Deviation + d × (|Diff| - Deviation)
  - where d is a fraction between 0 and 1
- Consider variance when setting the timeout value
  - TimeOut = m × EstimatedRTT + f × Deviation
  - where m = 1 and f = 4
- Notes
  - the algorithm is only as good as the granularity of the clock (500 ms on Unix)
  - an accurate timeout mechanism is important to congestion control (later)
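One update step of this calculation, as a Python sketch. The value d = 0.125 is an assumed choice (the slide only requires a fraction between 0 and 1); m = 1 and f = 4 are the values given above. The function name is hypothetical.

```python
def jacobson_karels(estimated_rtt: float, deviation: float, sample_rtt: float,
                    d: float = 0.125, m: float = 1, f: float = 4):
    """Return the updated (EstimatedRTT, Deviation, TimeOut)."""
    diff = sample_rtt - estimated_rtt
    estimated_rtt = estimated_rtt + d * diff
    deviation = deviation + d * (abs(diff) - deviation)
    time_out = m * estimated_rtt + f * deviation
    return estimated_rtt, deviation, time_out

# A sample far from the estimate raises the deviation, and with it the timeout.
est, dev, rto = jacobson_karels(100.0, 10.0, 180.0)
```

When samples vary little, the deviation term shrinks and the timeout stays close to EstimatedRTT; when they vary a lot, the deviation term dominates.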

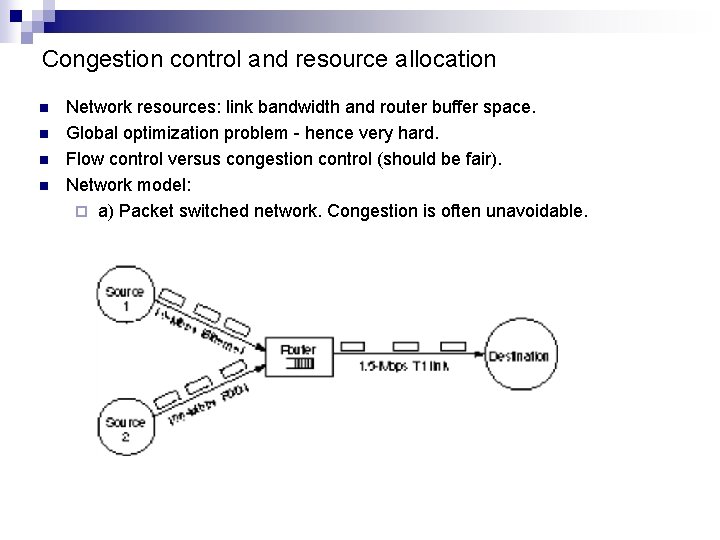

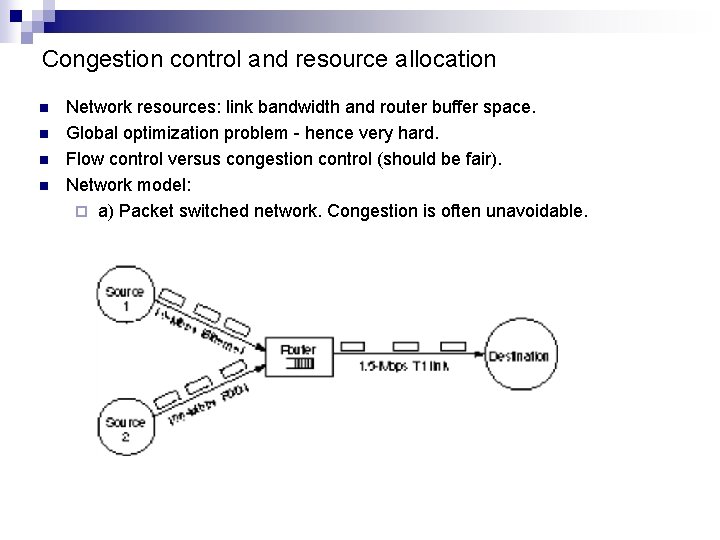

Congestion control and resource allocation

- Network resources: link bandwidth and router buffer space.
- Global optimization problem, hence very hard.
- Flow control versus congestion control (should be fair).
- Network model:
  - a) Packet-switched network. Congestion is often unavoidable.

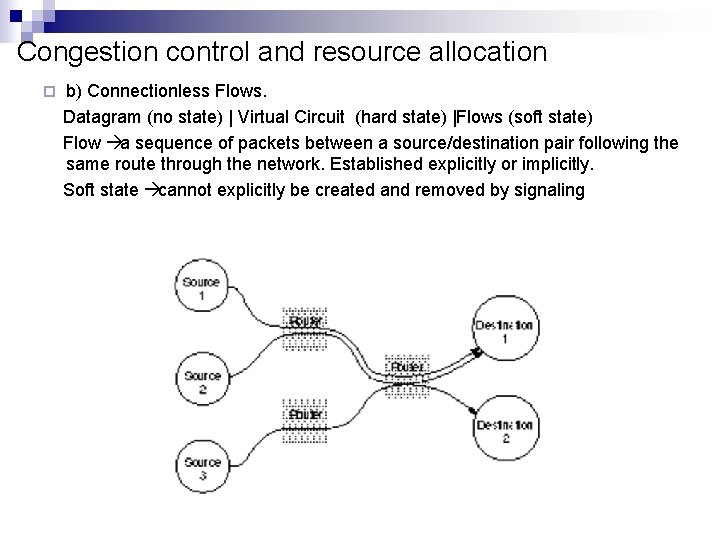

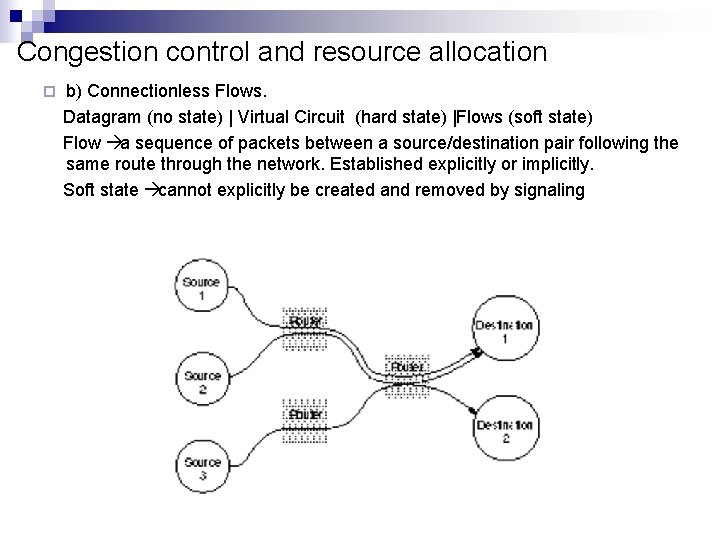

Congestion control and resource allocation

- b) Connectionless flows.
  - Datagram (no state) | Virtual circuit (hard state) | Flows (soft state)
  - Flow: a sequence of packets between a source/destination pair, following the same route through the network. Established explicitly or implicitly.
  - Soft state need not be explicitly created and removed by signaling.

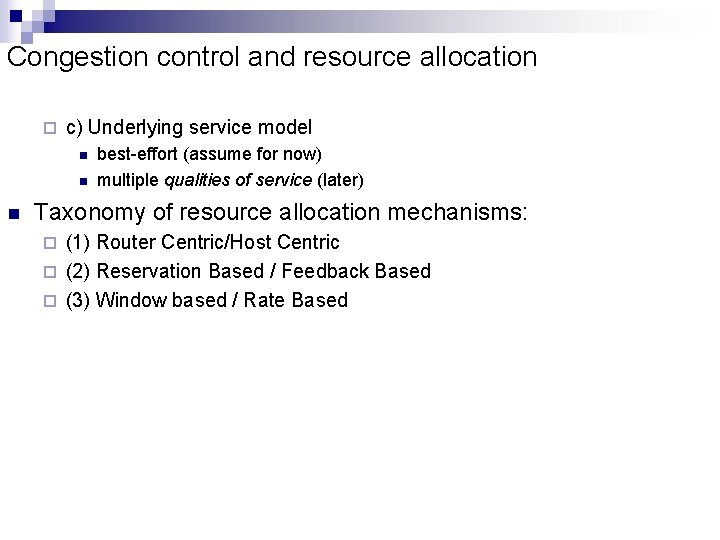

Congestion control and resource allocation

- c) Underlying service model
  - best-effort (assume for now)
  - multiple qualities of service (later)
- Taxonomy of resource allocation mechanisms:
  - (1) Router-centric / host-centric
  - (2) Reservation-based / feedback-based
  - (3) Window-based / rate-based

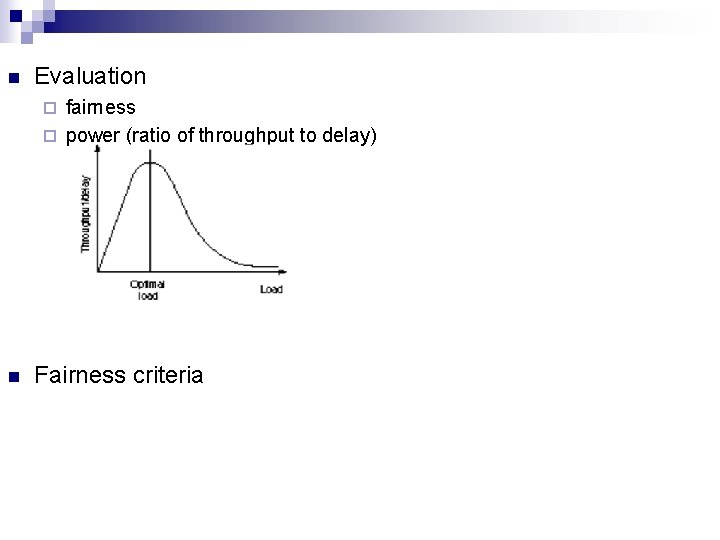

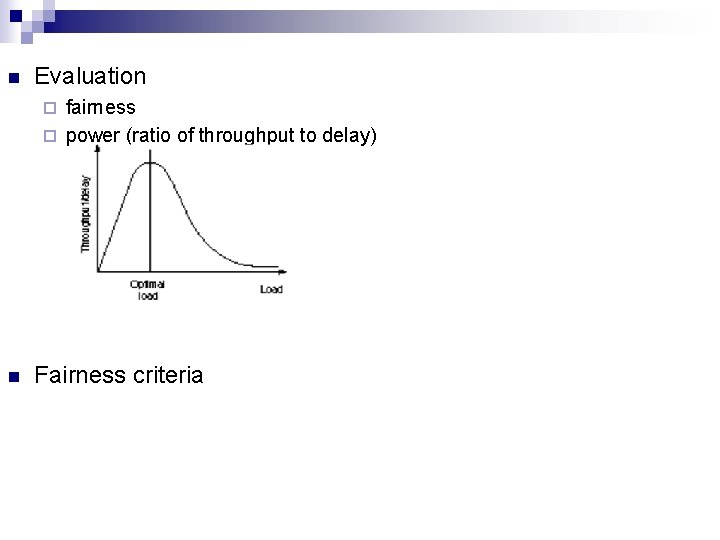

- Evaluation
  - fairness
  - power (ratio of throughput to delay)
- Fairness criteria

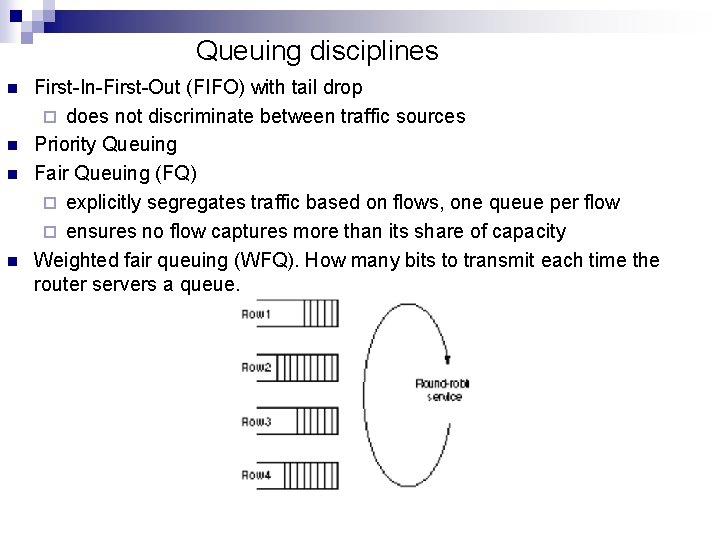

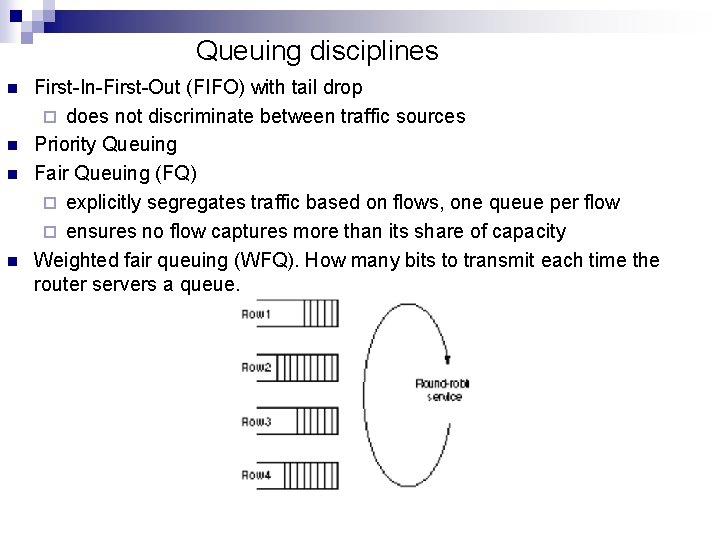

Queuing disciplines

- First-In-First-Out (FIFO) with tail drop
  - does not discriminate between traffic sources
- Priority queuing
- Fair Queuing (FQ)
  - explicitly segregates traffic based on flows, one queue per flow
  - ensures no flow captures more than its share of capacity
- Weighted fair queuing (WFQ): a weight determines how many bits to transmit each time the router serves a queue.

- Problem: packets are not all the same length
  - really want bit-by-bit round robin
  - not feasible to interleave bits (schedule on a packet basis)
  - simulate by determining when a packet would finish
- For a single flow
  - suppose the clock ticks each time a bit is transmitted
  - let P_i denote the length of packet i
  - let S_i denote the time when the router starts to transmit packet i
  - let F_i denote the time when the router finishes transmitting packet i
  - F_i = S_i + P_i

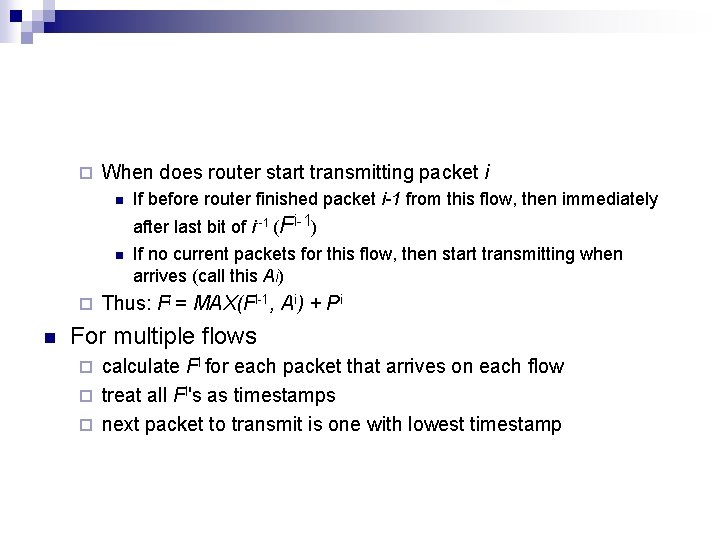

- When does the router start transmitting packet i?
  - If packet i arrives before the router has finished packet i-1 from this flow, then immediately after the last bit of packet i-1 (F_{i-1})
  - If there are no current packets for this flow, then start transmitting when packet i arrives (call this time A_i)
  - Thus: F_i = MAX(F_{i-1}, A_i) + P_i
- For multiple flows
  - calculate F_i for each packet that arrives on each flow
  - treat all F_i's as timestamps
  - the next packet to transmit is the one with the lowest timestamp

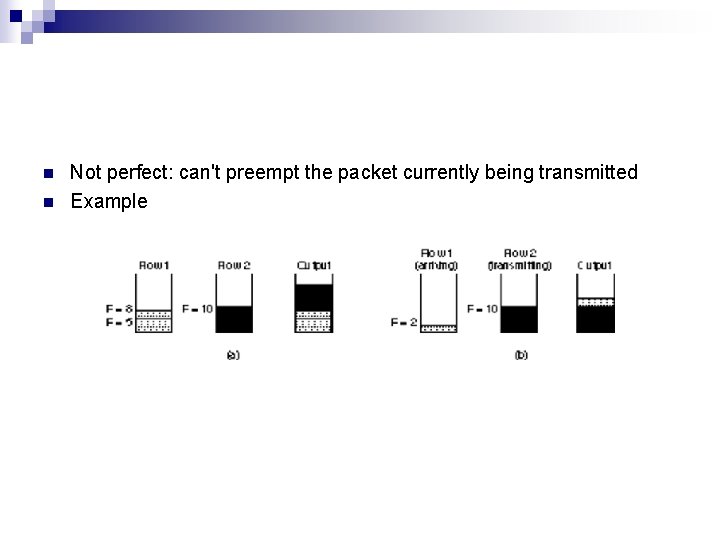

- Not perfect: can't preempt the packet currently being transmitted
- Example
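The timestamp rule for fair queuing can be sketched as follows. This is a simplified model that assumes all packets are already queued when the order is computed and that the clock counts bits as described for a single flow; a real router tracks a virtual clock shared by the active flows. The function name and the tuple layout are hypothetical.

```python
def fair_queue_order(arrivals):
    """Order packets by finish time F_i = max(F_{i-1}, A_i) + P_i.

    arrivals: list of (flow, arrival_time, length_bits) in arrival order.
    Returns a list of (flow, finish_time) in transmission order.
    """
    last_finish = {}  # flow -> finish time of its previous packet
    stamped = []
    for seq, (flow, arrival, length) in enumerate(arrivals):
        finish = max(last_finish.get(flow, 0), arrival) + length
        last_finish[flow] = finish
        stamped.append((finish, seq, flow))  # seq breaks ties by arrival order
    return [(flow, finish) for finish, seq, flow in sorted(stamped)]

order = fair_queue_order([("A", 0, 100), ("A", 0, 100), ("B", 0, 50), ("B", 0, 300)])
```

In this example the short packet of flow B goes first, both packets of flow A follow, and the long packet of flow B goes last, so neither flow monopolizes the link.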

TCP congestion control

- In the late 1980s Van Jacobson proposed to augment TCP with a congestion control mechanism.
- Each source attempts to estimate how much capacity is available in the network. The source uses:
  - (a) the implicit feedback provided by the acknowledgments to determine when it is safe to insert a new packet into the network (self-clocking), and
  - (b) timeouts to detect congestion.
- Three inter-related mechanisms:
  - Additive Increase/Multiplicative Decrease: a new state variable, a window that combines flow control and congestion control.
  - Slow Start: a mechanism to allow a connection to achieve its window fast.
  - Fast Retransmit: a heuristic to deal with timeouts.

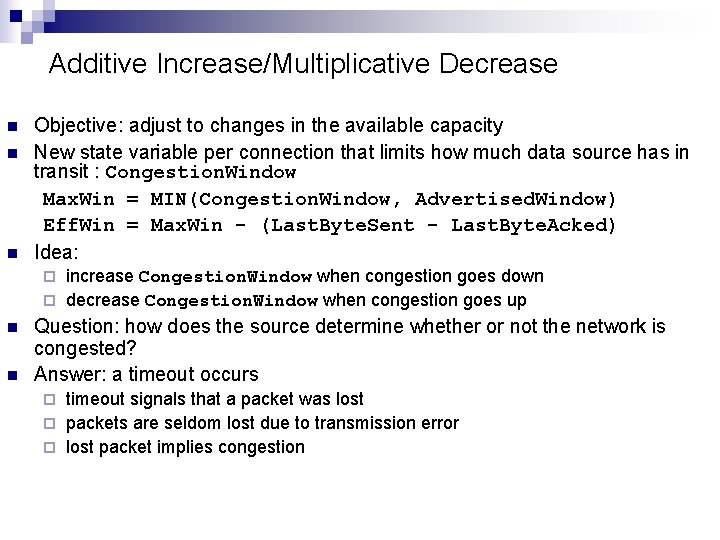

Additive Increase/Multiplicative Decrease

- Objective: adjust to changes in the available capacity
- New state variable per connection that limits how much data the source has in transit: CongestionWindow
  - MaxWin = MIN(CongestionWindow, AdvertisedWindow)
  - EffWin = MaxWin - (LastByteSent - LastByteAcked)
- Idea:
  - increase CongestionWindow when congestion goes down
  - decrease CongestionWindow when congestion goes up
- Question: how does the source determine whether or not the network is congested?
- Answer: a timeout occurs
  - a timeout signals that a packet was lost
  - packets are seldom lost due to transmission error
  - a lost packet implies congestion

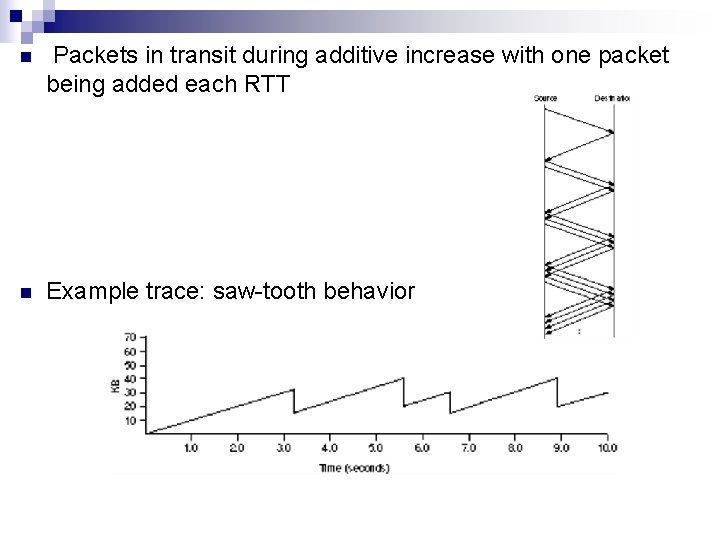

Additive Increase/Multiplicative Decrease

- Algorithm:
  - increment CongestionWindow by one packet per RTT (linear increase)
  - divide CongestionWindow by two whenever a timeout occurs (multiplicative decrease)
- In practice: increment a little for each ACK (MSS = maximum segment size)
  - Increment = MSS × (MSS / CongestionWindow)
  - CongestionWindow += Increment
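The per-ACK increment and the halving on timeout can be sketched in Python. The MSS value of 1460 bytes and the floor of one MSS on the decrease are assumptions made for illustration, as are the function names.

```python
MSS = 1460  # maximum segment size in bytes (assumed value)

def on_ack(congestion_window: float) -> float:
    """Additive increase: Increment = MSS * (MSS / CongestionWindow)."""
    return congestion_window + MSS * (MSS / congestion_window)

def on_timeout(congestion_window: float) -> float:
    """Multiplicative decrease: halve the window, but keep at least one MSS."""
    return max(congestion_window / 2, MSS)

# One window's worth of ACKs (about one RTT) adds roughly one MSS in total.
cwnd = 10.0 * MSS
for _ in range(10):
    cwnd = on_ack(cwnd)
```

Spreading the increase across the ACKs of one window gives the same linear growth as adding one packet per RTT, without waiting for the whole window to be acknowledged.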

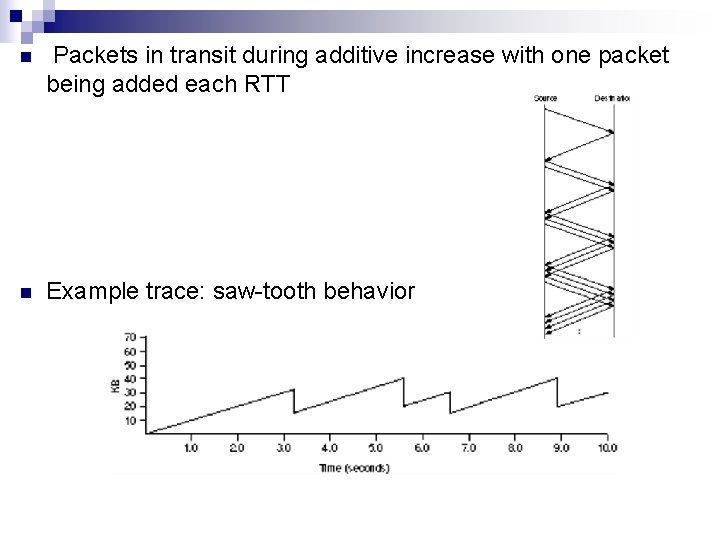

- Packets in transit during additive increase, with one packet being added each RTT
- Example trace: saw-tooth behavior

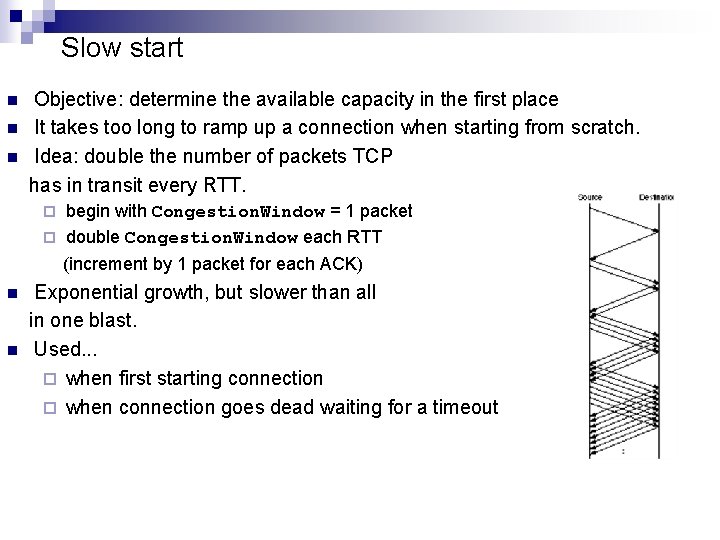

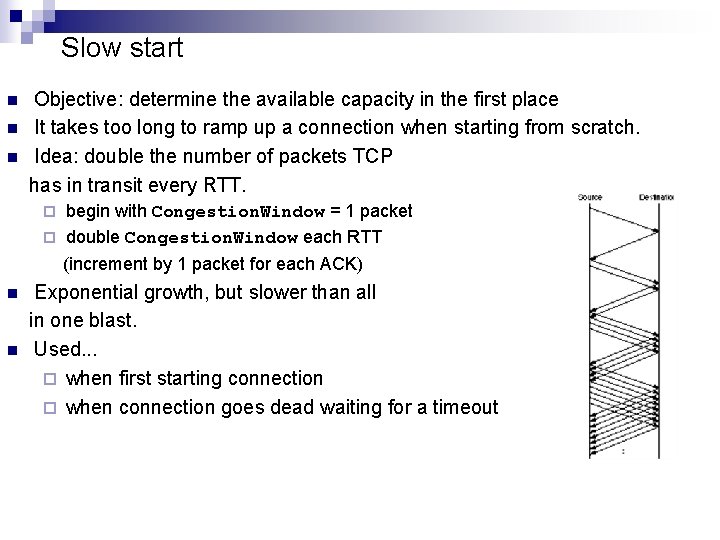

Slow start

- Objective: determine the available capacity in the first place
- It takes too long to ramp up a connection when starting from scratch.
- Idea: double the number of packets TCP has in transit every RTT.
  - begin with CongestionWindow = 1 packet
  - double CongestionWindow each RTT (increment by 1 packet for each ACK)
- Exponential growth, but slower than all in one blast.
- Used
  - when first starting a connection
  - when a connection goes dead waiting for a timeout
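Incrementing the window by one packet per ACK doubles it every RTT, as the following sketch shows. It counts the window in packets and assumes no losses and one ACK per packet; the function name is hypothetical.

```python
def slow_start(rtts: int, congestion_window: int = 1):
    """Return the window size (in packets) at the start of each RTT."""
    history = [congestion_window]
    for _ in range(rtts):
        acks = congestion_window   # one ACK comes back per packet in flight
        congestion_window += acks  # +1 packet per ACK doubles the window
        history.append(congestion_window)
    return history

print(slow_start(4))  # [1, 2, 4, 8, 16]
```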

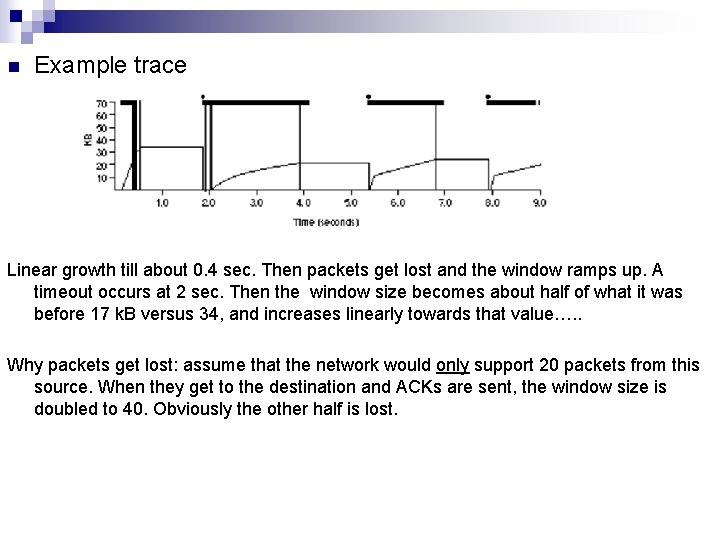

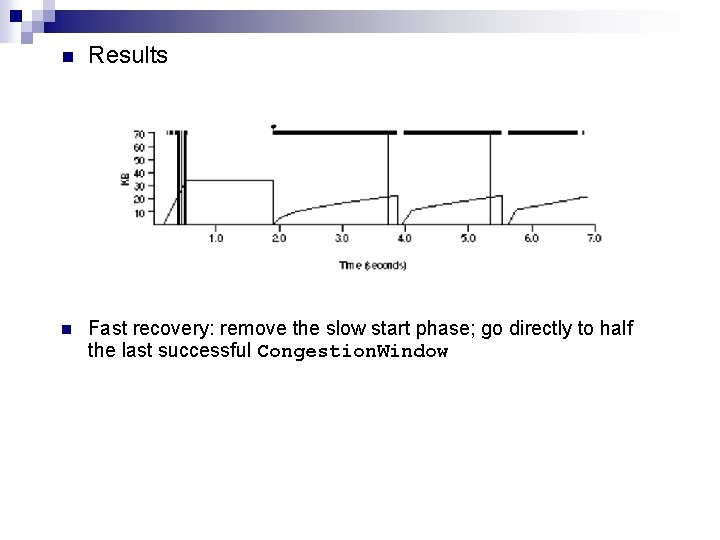

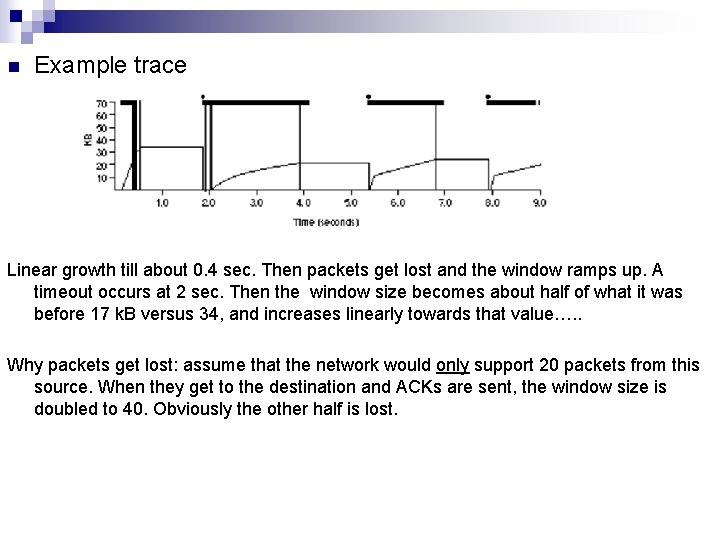

Example trace

- Linear growth till about 0.4 sec. Then packets get lost and the window ramps up. A timeout occurs at 2 sec. Then the window size becomes about half of what it was before (17 KB versus 34 KB) and increases linearly towards that value.
- Why packets get lost: assume that the network can only support 20 packets from this source. When they get to the destination and the ACKs are sent, the window size is doubled to 40. Obviously, the other half is lost.

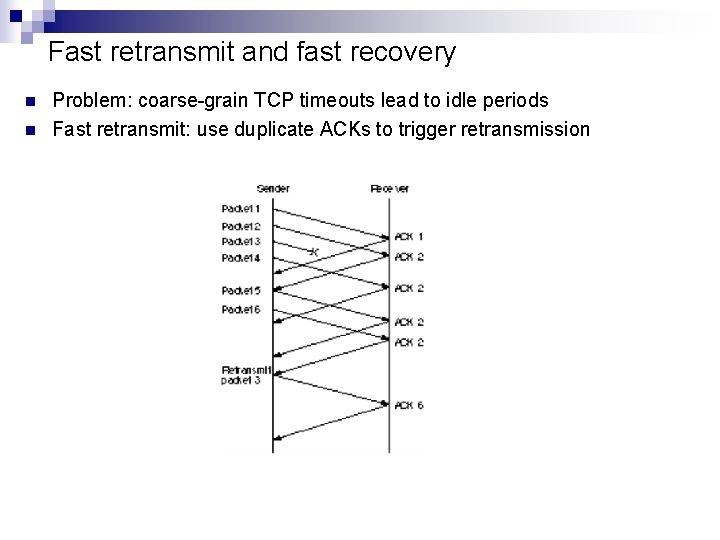

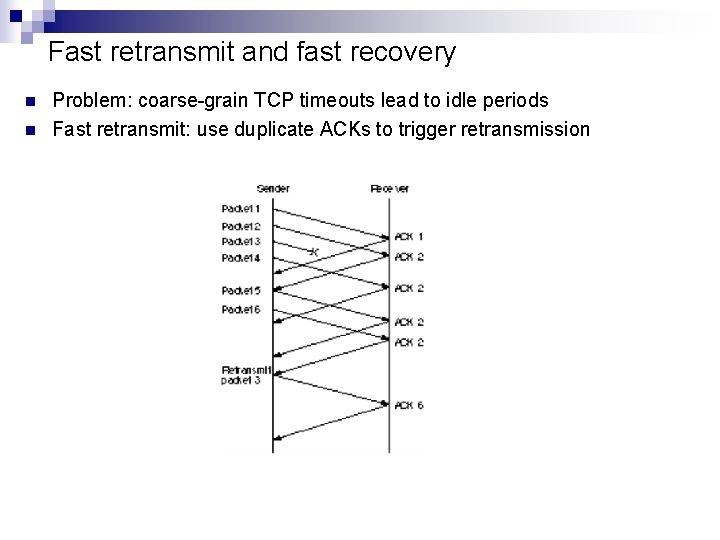

Fast retransmit and fast recovery

- Problem: coarse-grain TCP timeouts lead to idle periods
- Fast retransmit: use duplicate ACKs to trigger retransmission

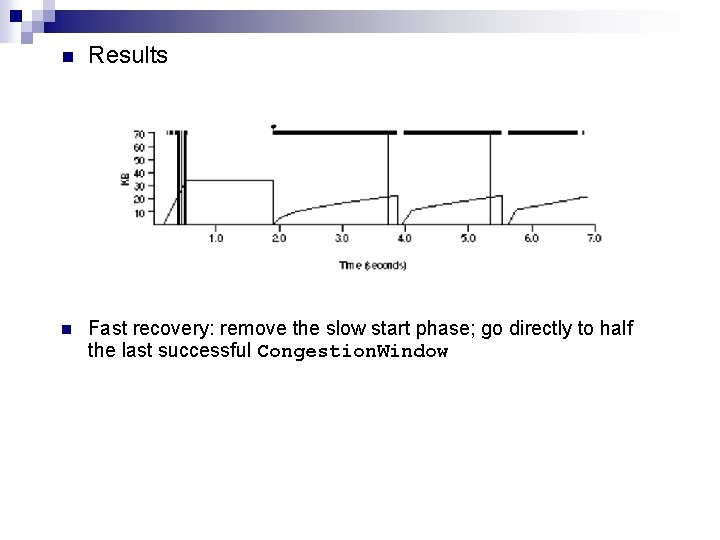

- Results
- Fast recovery: remove the slow start phase; go directly to half the last successful CongestionWindow
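A minimal sketch of the duplicate-ACK trigger combined with the fast recovery rule. The threshold of three duplicate ACKs is the value commonly used by TCP implementations and is an assumption here, since the slides do not state it; the window is counted in packets and the class name is hypothetical.

```python
DUP_ACK_THRESHOLD = 3  # assumption: common TCP choice, not stated in the slides

class FastRetransmit:
    def __init__(self, congestion_window: float):
        self.congestion_window = congestion_window
        self.last_ack = None
        self.dup_count = 0
        self.retransmitted = []  # ACK numbers that triggered a retransmission

    def on_ack(self, ack: int) -> None:
        if ack == self.last_ack:
            self.dup_count += 1
            if self.dup_count == DUP_ACK_THRESHOLD:
                # Fast retransmit: resend the segment the receiver is still
                # waiting for, without waiting for the coarse timeout.
                self.retransmitted.append(ack)
                # Fast recovery: halve the window instead of going back to
                # slow start.
                self.congestion_window = max(self.congestion_window / 2, 1)
        else:
            self.last_ack = ack
            self.dup_count = 0
```

A duplicate ACK means a later segment arrived out of order, so several in a row suggest that the expected segment was lost rather than merely delayed.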

Congestion avoidance mechanisms

- TCP's strategy
  - to control congestion once it happens
  - to repeatedly increase load in an effort to find the point at which congestion occurs, and then back off
- Alternative strategy
  - predict when congestion is about to happen, and reduce the rate at which hosts send data just before packets start being discarded
  - we call this congestion avoidance, to distinguish it from congestion control
- Two possibilities
  - router-centric: DECbit and RED gateways
  - host-centric: TCP Vegas