
- Slides: 38

Condor Philosophy Greg Thain

Agenda
- The other talks are about the hows of HTCondor
- This talk is about the why

First Principles: Who
- 1) Owner: $$$ (€€€, £££ ???)
- 2) Job Submitter
- 3) Administrator

The Philosophy on 1 slide: To reliably run as much work as possible on as many machines as possible (in order of precedence)

The other side: administrator’s view
- To maximize machine utilization
- ABCs: Always Be Computing
- “No Cycle Left Behind”

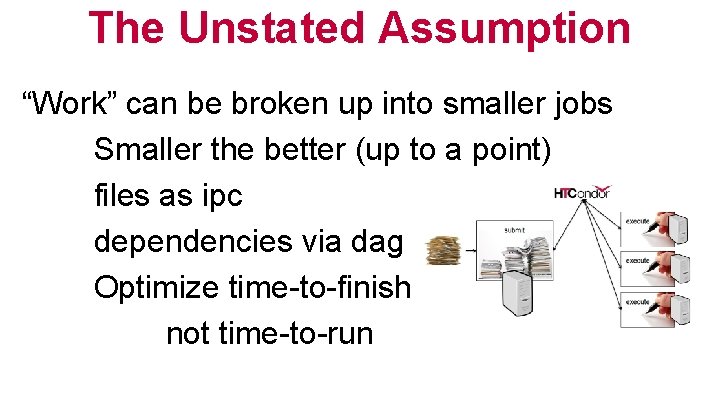

The Unstated Assumption
- “Work” can be broken up into smaller jobs
- Smaller the better (up to a point)
- Files as IPC
- Dependencies via DAG
- Optimize time-to-finish, not time-to-run
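The "dependencies via DAG" point can be made concrete with a small sketch. It assumes a hypothetical four-job workflow (the job names are invented) and uses Python's standard `graphlib` to group jobs into waves that could run at the same time. It illustrates the idea only and is not how HTCondor's own DAG tooling is implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each key is a job, each value is the set of jobs
# whose output files it needs before it can start.
dependencies = {
    "split": set(),
    "analyze_a": {"split"},
    "analyze_b": {"split"},
    "merge": {"analyze_a", "analyze_b"},
}

def run_in_waves(deps):
    """Return lists of jobs that could run concurrently, in dependency order."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every job whose parents are done
        waves.append(ready)
        sorter.done(*ready)                 # pretend the whole wave finished
    return waves

waves = run_in_waves(dependencies)
print(waves)  # [['split'], ['analyze_a', 'analyze_b'], ['merge']]
```

Splitting the analysis step into two jobs shortens time-to-finish when two machines are free, even though each individual job takes just as long to run.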

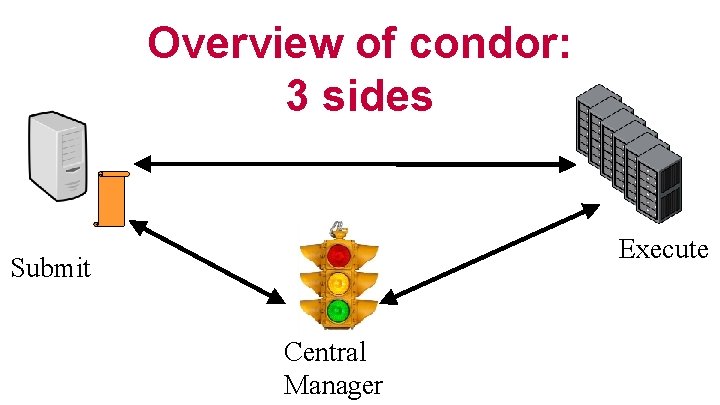

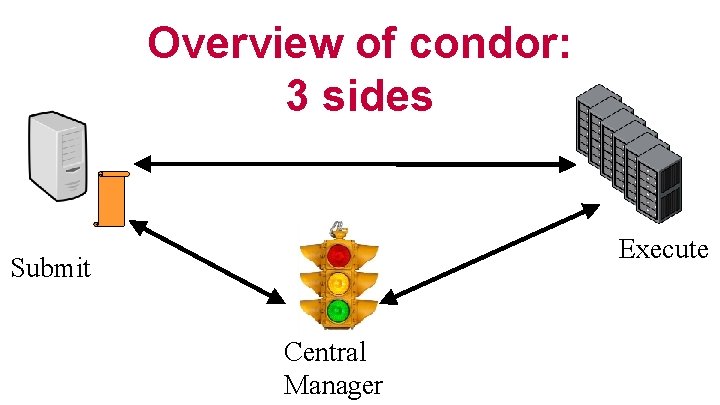

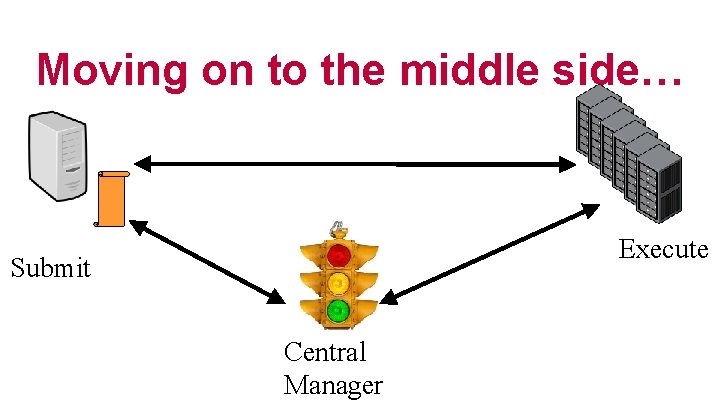

Overview of condor: 3 sides (Execute, Submit, Central Manager)

To reliably run…
- Reliability is the 1st priority
- We can make HTCondor fast enough without sacrificing any reliability: no screw polishing

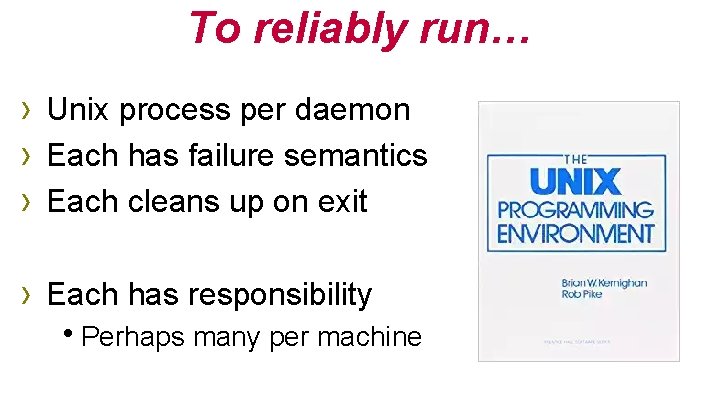

To reliably run…
- Unix process per daemon
- Each has failure semantics
- Each cleans up on exit
- Each has responsibility
  - Perhaps many per machine

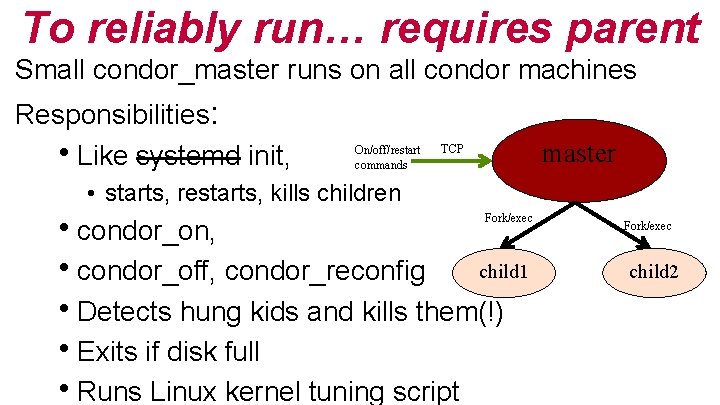

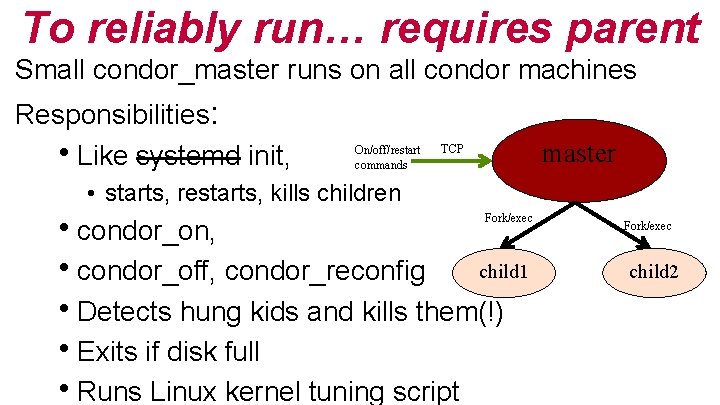

To reliably run… requires parent
- Small condor_master runs on all condor machines
- Responsibilities:
  - Like systemd, init: starts, restarts, kills children
  - condor_on, condor_off, condor_reconfig
  - Detects hung kids and kills them(!)
  - Exits if disk full
  - Runs Linux kernel tuning script
- (Diagram labels: On/off/restart commands, TCP, master, fork/exec child 1, fork/exec child 2)

master manages process
- Manage:
  - Remove what you create
    - And what they created…
  - Measure what you create
    - And report it
  - Limit what you create
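The supervision duties above (start children, restart them, kill hung ones, remove what you create) can be sketched in a few lines. This is a toy illustration, not the condor_master implementation: the `supervise` function, its `max_restarts` limit, and the 10-second hang timeout are all assumptions made for the example.

```python
import subprocess
import sys

def supervise(argv, max_restarts=3):
    """Run argv, restarting it when it exits non-zero; return the exit codes seen."""
    exit_codes = []
    for _ in range(max_restarts + 1):
        child = subprocess.Popen(argv)       # fork/exec the child
        try:
            code = child.wait(timeout=10)    # a child that never returns counts as hung
        except subprocess.TimeoutExpired:
            child.kill()                     # remove what you create
            code = child.wait()
        exit_codes.append(code)              # measure and report
        if code == 0:
            break                            # clean exit: nothing to restart
    return exit_codes

# Hypothetical child that always fails, so the supervisor gives up at the limit.
failing = [sys.executable, "-c", "import sys; sys.exit(1)"]
codes = supervise(failing, max_restarts=2)
print(codes)  # [1, 1, 1]
```

Bounding the number of restarts is one simple way to "limit what you create"; a real supervisor would more likely back off between attempts than stop outright.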

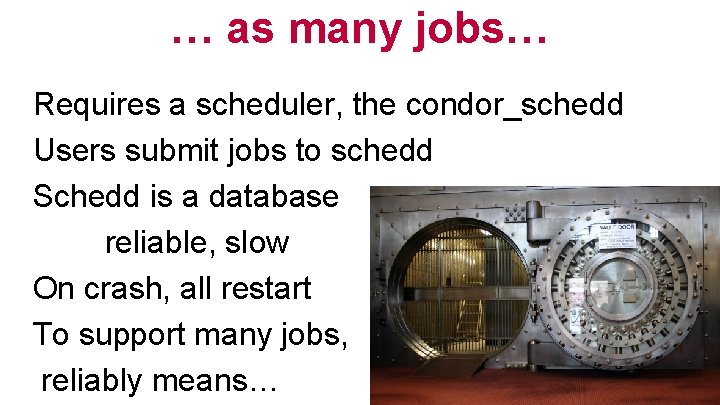

… as many jobs…
- Requires a scheduler, the condor_schedd
- Users submit jobs to schedd
- Schedd is a database: reliable, slow
- On crash, all restart
- To support many jobs, reliably means…

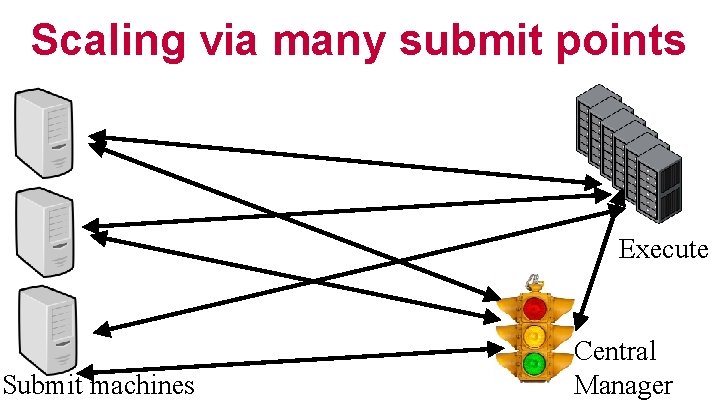

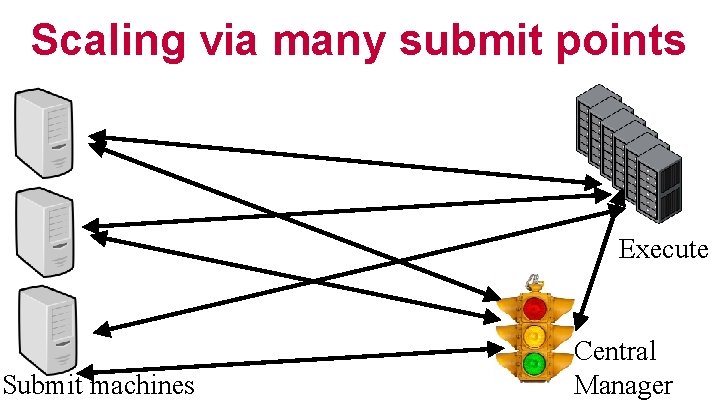

Scaling via many submit points (diagram labels: Execute, Submit machines, Central Manager)

Scaling via many submit points
- Adding submit points just helps scaling
- Allows submit near the user
- “Submit locally, run globally”

But the schedd doesn’t schedule
- It does a little
- Schedd has jobs, can request machines
- But only uses the machines given to it
- Scheduling, not planning

The shadow manages running, remote jobs
- One process per running job on the submit machine
- Responsible for job’s policy remotely
  - Tells the worker node what to do
- Expensive? Yes, but worth it

…on as many machines
- Implies machines are heterogeneous
- Could be foreign pools
- Could be same pool with different config
- Could be places without shared filesystem

Two-faced nature of HTCondor
- Split responsibility:
  - Worker side
  - Submit side
- We encourage different config on both sides
- Always focusing on responsibility of the side
- Always consider where responsibility goes

The startd
- Startd represents the policy of the machine
- Creates “slots”, places for jobs to run
- Could conflict with job’s policy?
  - Who wins?
- Always the machine: the job is a guest

Startd Mission Statement
- Near sighted
- 3 inputs only:
  - Machine
  - Running Jobs
  - Candidate Running Job
- Knows nothing about the rest of the system!

Things the startd can do
- Only run some kinds of jobs
- Preempt one job for another
- Only run 1 job of some type
- Expose and match custom resource
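The "three inputs only" rule and "the machine always wins" can be sketched as a single decision function. Everything here is hypothetical: the `startd_decide` name, the `start` and `rank` keys, and the sample jobs are invented for illustration, and real HTCondor expresses such policy as configuration expressions rather than Python callables.

```python
def startd_decide(machine, running, candidate):
    """Decide using only the machine, its running job (or None), and the candidate."""
    if not machine["start"](candidate):
        return "reject"                      # machine policy always wins
    if running is None:
        return "start"
    if machine["rank"](candidate) > machine["rank"](running):
        return "preempt"                     # machine prefers the candidate
    return "reject"

machine = {
    # Hypothetical owner policy: only jobs up to 4 GB, prefer the "physics" group.
    "start": lambda job: job["memory_mb"] <= 4096,
    "rank": lambda job: 1 if job["group"] == "physics" else 0,
}
small_chem = {"memory_mb": 1024, "group": "chemistry"}
small_phys = {"memory_mb": 2048, "group": "physics"}
huge_phys = {"memory_mb": 8192, "group": "physics"}

print(startd_decide(machine, None, small_chem))        # start
print(startd_decide(machine, small_chem, small_phys))  # preempt
print(startd_decide(machine, small_phys, small_chem))  # reject
print(startd_decide(machine, None, huge_phys))         # reject
```

Note that the function never looks at the queue, other machines, or user priorities: it is near sighted by construction.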

But the startd doesn’t run jobs
- Doesn’t run jobs directly
- Creates (and manages!) a child process, the starter

The Starter
- Startd manages the machine, starter manages the job
- When job starts, startd spawns starter
- One starter per job, thus one per slot

Starter Responsibilities
- Starter manages running job on machine:
  - Create environment for job
  - Monitor, report job resource usage home
  - Creates “Universe” metaphor
  - Clean up after job
    - Condor Philosophy: renters clean up after use
    - (Startd cleans up after starter…)
- File Transfer

A few words on file transfer…
- We can use shared FS or File Transfer
- Prefer File Transfer:
  - Managed
  - Portable
  - Declarative

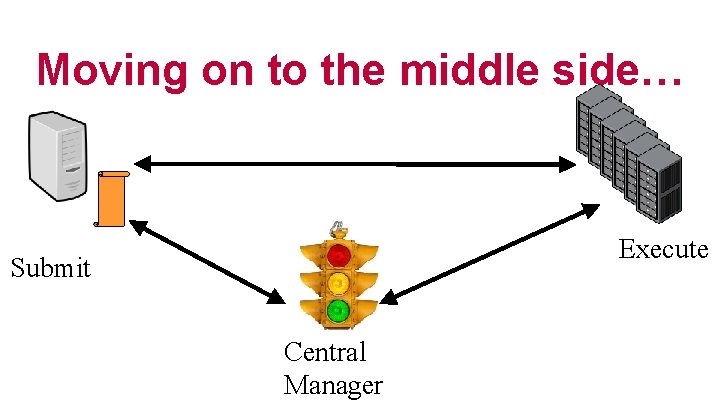

Moving on to the middle side… (Execute, Submit, Central Manager)

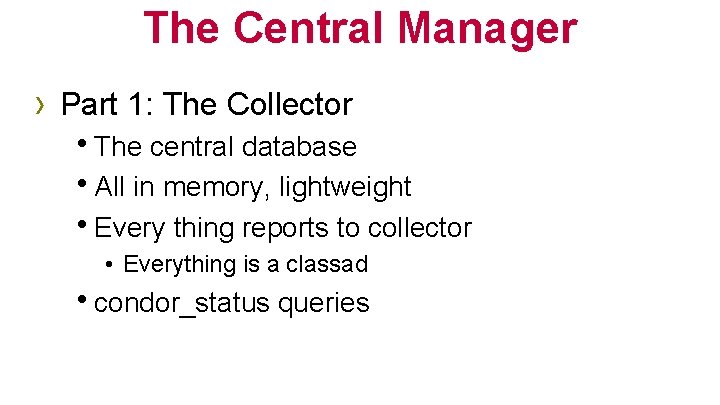

The Central Manager
- Part 1: The Collector
  - The central database
  - All in memory, lightweight
  - Everything reports to collector
    - Everything is a classad
  - condor_status queries

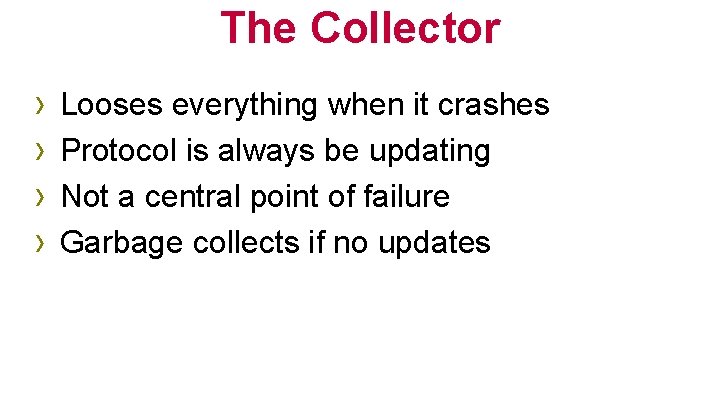

The Collector
- Loses everything when it crashes
- Protocol is “always be updating”
- Not a central point of failure
- Garbage collects if no updates
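Why losing everything on a crash is tolerable follows from the "always be updating" protocol, and a small in-memory table shows it. This is a sketch under assumptions: the `Collector` class, its `max_age` parameter, the slot names, and the explicit `now` timestamps are all invented for the example and do not reflect the real collector's interface.

```python
class Collector:
    """In-memory table of ads; an ad is dropped when its updates stop."""

    def __init__(self, max_age):
        self.max_age = max_age
        self.ads = {}  # name -> (ad dict, time of last update)

    def update(self, name, ad, now):
        self.ads[name] = (ad, now)

    def garbage_collect(self, now):
        stale = [n for n, (_, seen) in self.ads.items() if now - seen > self.max_age]
        for name in stale:
            del self.ads[name]
        return stale

    def query(self, predicate=lambda ad: True):
        return sorted(n for n, (ad, _) in self.ads.items() if predicate(ad))

collector = Collector(max_age=15)
collector.update("slot1@node-a", {"State": "Unclaimed"}, now=0)
collector.update("slot1@node-b", {"State": "Claimed"}, now=0)
collector.update("slot1@node-a", {"State": "Claimed"}, now=10)  # node-a keeps updating
expired = collector.garbage_collect(now=20)
print(expired)            # ['slot1@node-b']
print(collector.query())  # ['slot1@node-a']

# A restarted collector starts empty and is repopulated by the next round of updates.
restarted = Collector(max_age=15)
restarted.update("slot1@node-a", {"State": "Claimed"}, now=25)
print(restarted.query())  # ['slot1@node-a']
```

Because every reporter resends its state periodically, nothing needs to be persisted: the table rebuilds itself within one update interval.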

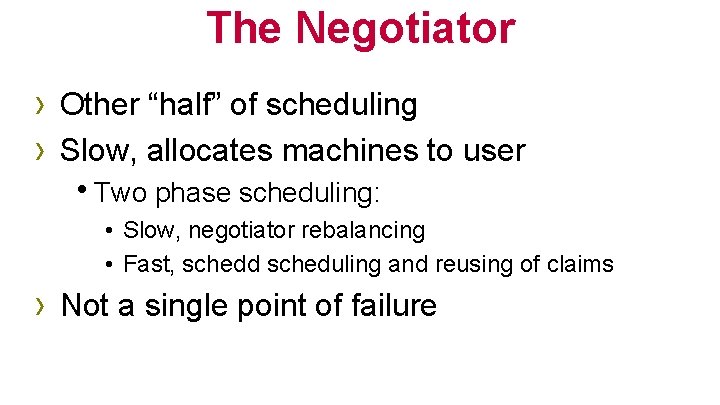

The Negotiator
- Other “half” of scheduling
- Slow, allocates machines to user
  - Two phase scheduling:
    - Slow, negotiator rebalancing
    - Fast, schedd scheduling and reusing of claims
- Not a single point of failure
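The two phases can be sketched as two separate functions. This is a simplification under stated assumptions: plain round-robin stands in for whatever fair-share policy the negotiator actually applies, cycling through claimed machines stands in for reusing a claim as each job finishes, and the function, user, and machine names are hypothetical.

```python
from collections import deque

def negotiate(machines, demand):
    """Slow phase: hand out machines round-robin to users who still want more."""
    claims = {user: [] for user in demand}
    free = deque(machines)
    while free and any(len(claims[u]) < demand[u] for u in demand):
        for user in demand:
            if free and len(claims[user]) < demand[user]:
                claims[user].append(free.popleft())
    return claims

def schedd_run(claimed, jobs):
    """Fast phase: reuse the claimed machines for job after job, no renegotiation."""
    if not claimed:
        return []
    return [(job, claimed[i % len(claimed)]) for i, job in enumerate(jobs)]

claims = negotiate(["m1", "m2", "m3"], {"alice": 3, "bob": 1})
print(claims)  # {'alice': ['m1', 'm3'], 'bob': ['m2']}
print(schedd_run(claims["alice"], ["a1", "a2", "a3"]))
# [('a1', 'm1'), ('a2', 'm3'), ('a3', 'm1')]
```

The third job runs on an already-claimed machine without another pass through the slow phase, which is the point of keeping the two phases separate.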

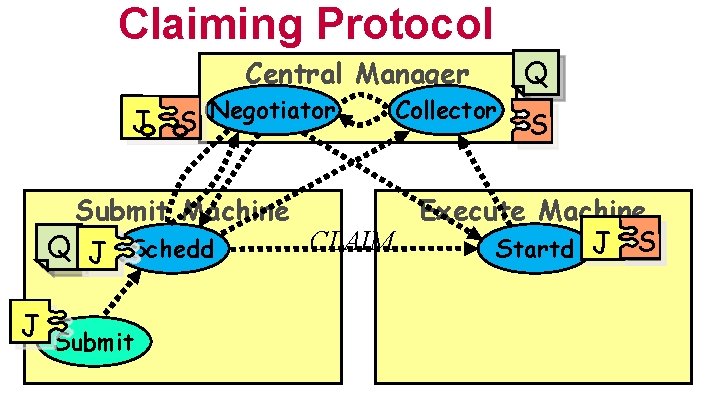

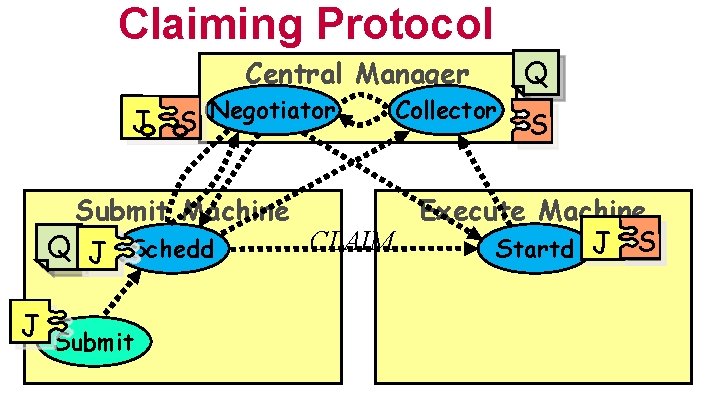

Claiming Protocol (diagram labels: Central Manager with Negotiator and Collector; Submit Machine with Schedd; Execute Machine with Startd; Submit; CLAIM)

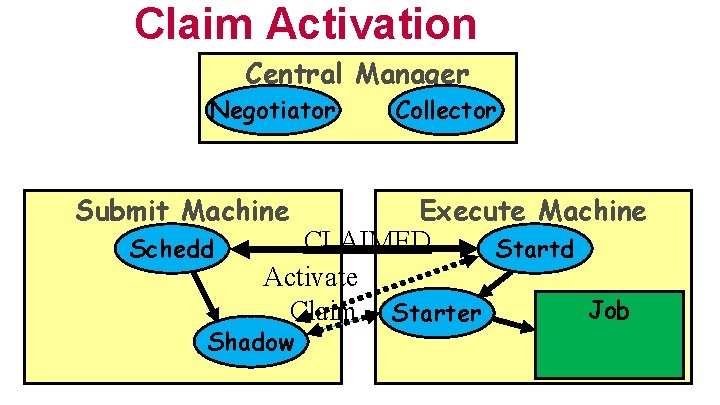

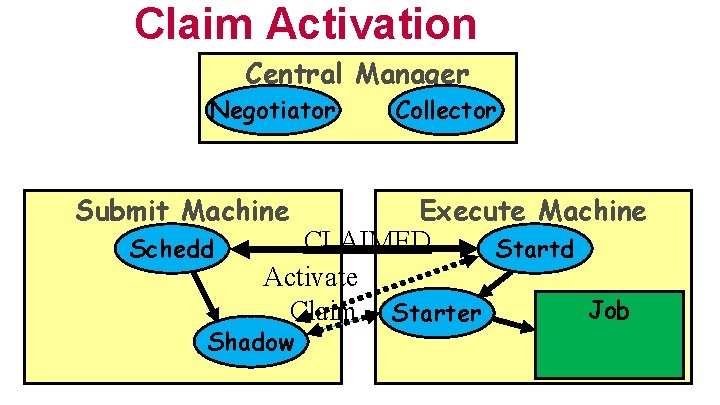

Claim Activation (diagram labels: Central Manager with Negotiator and Collector; Submit Machine with Schedd and Shadow; Execute Machine with Startd and Starter; CLAIMED; Activate Claim; Job)

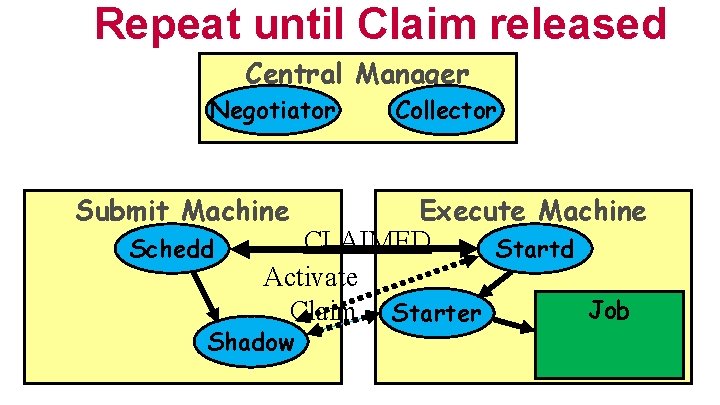

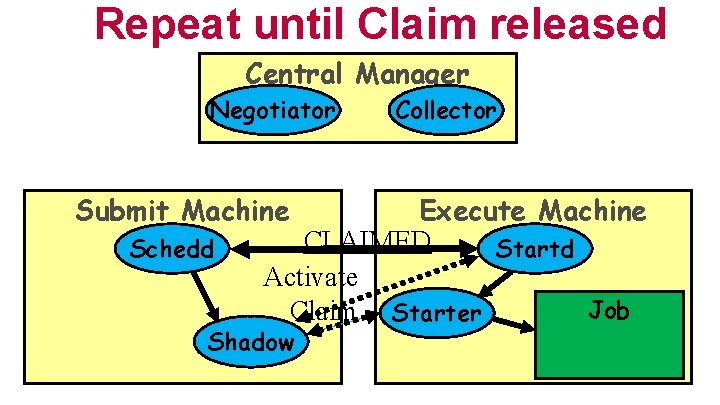

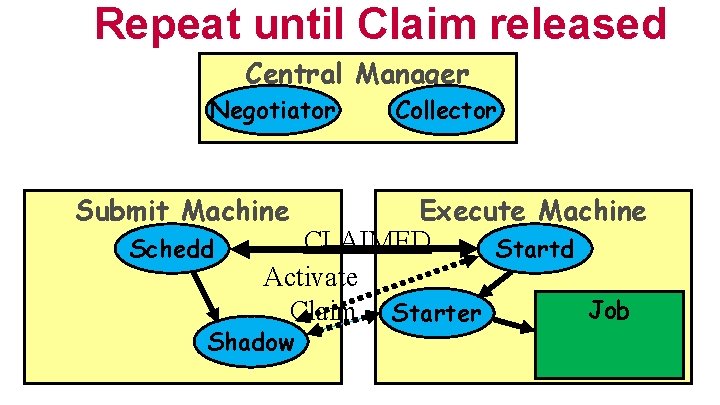

Repeat until Claim released (diagram labels: Central Manager with Negotiator and Collector; Submit Machine with Schedd and Shadow; Execute Machine with Startd and Starter; CLAIMED; Activate Claim; Job)

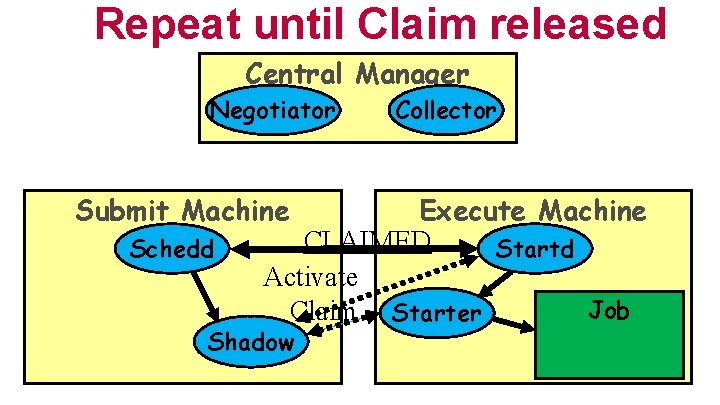


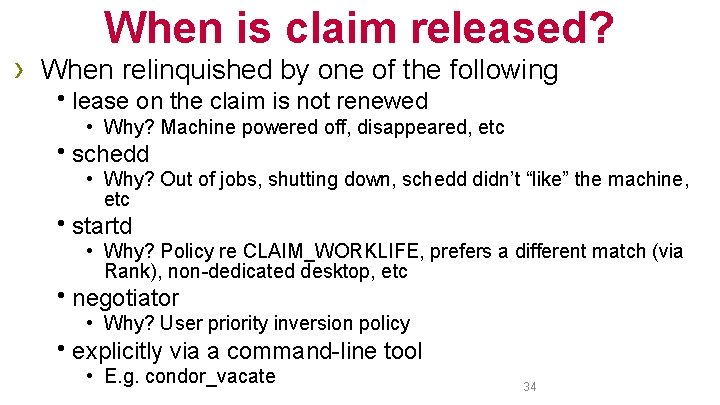

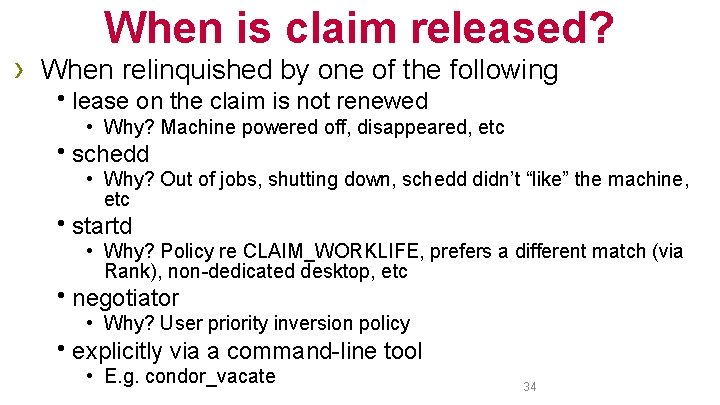

When is claim released?
- When relinquished by one of the following:
  - lease on the claim is not renewed
    - Why? Machine powered off, disappeared, etc.
  - schedd
    - Why? Out of jobs, shutting down, schedd didn’t “like” the machine, etc.
  - startd
    - Why? Policy re CLAIM_WORKLIFE, prefers a different match (via Rank), non-dedicated desktop, etc.
  - negotiator
    - Why? User priority inversion policy
  - explicitly via a command-line tool
    - E.g. condor_vacate

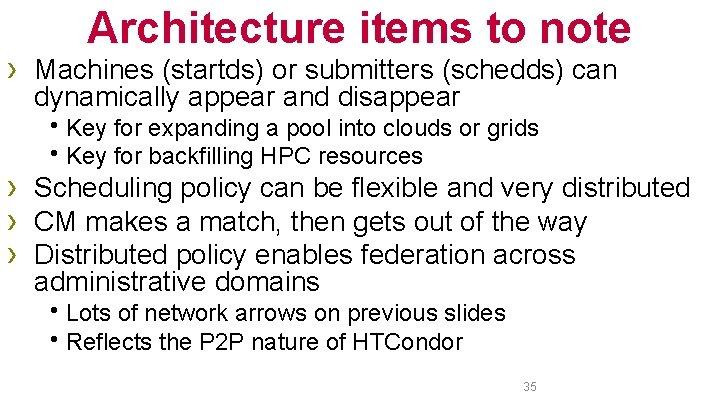

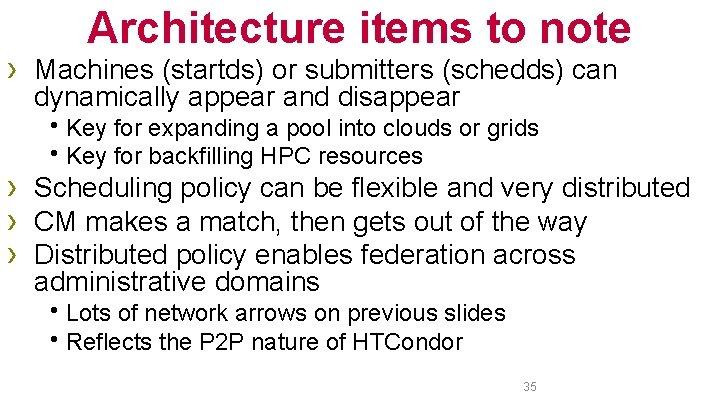

Architecture items to note
- Machines (startds) or submitters (schedds) can dynamically appear and disappear
  - Key for expanding a pool into clouds or grids
  - Key for backfilling HPC resources
- Scheduling policy can be flexible and very distributed
- CM makes a match, then gets out of the way
- Distributed policy enables federation across administrative domains
  - Lots of network arrows on previous slides
  - Reflects the P2P nature of HTCondor

Quiz Time
- How to hold a job that runs > 24 hours
  - Or rather, where?
- On the submit machine?
- Or the execute machine?
- Discuss!

Quiz Answer
- It depends!
  - Property of job or property of machine?

Conclusion
- Thank you, and let’s continue discussing…
