Introduction Condor Software Forum OGF 19 Condor Project

- Slides: 34

Introduction
Condor Software Forum, OGF 19
Condor Project
Computer Sciences Department
University of Wisconsin-Madison
condor-admin@cs.wisc.edu
http://www.cs.wisc.edu/condor

Outline
- What do YOU want to talk about?
- Proposed Agenda:
  - Introduction
  - Condor-G
  - APIs
  - << BREAK >>
  - Grid Job Router
  - GCB
  - Roadmap

The Condor Project (Established '85)
Distributed High Throughput Computing research performed by a team of ~35 faculty, full-time staff and students who:
- face software engineering challenges in a distributed UNIX/Linux/NT environment,
- are involved in national and international grid collaborations,
- actively interact with academic and commercial users,
- maintain and support large distributed production environments,
- and educate and train students.

Funding: US Govt. (DoD, DoE, NASA, NSF, NIH), AT&T, IBM, INTEL, Microsoft, UW-Madison, …

Main Threads of Activities
- Distributed Computing Research: develop and evaluate new concepts, frameworks and technologies
- The Open Science Grid (OSG): build and operate a national distributed computing and storage infrastructure
- Keep Condor "flight worthy" and support our users
- The NSF Middleware Initiative (NMI): develop, build and operate a national Build and Test facility
- The Grid Laboratory Of Wisconsin (GLOW): build, maintain and operate a distributed computing and storage infrastructure on the UW campus

A Multifaceted Project
- Harnessing the power of clusters, opportunistic and/or dedicated (Condor)
- Job management services for Grid applications (Condor-G, Stork)
- Fabric management services for Grid resources (Condor, GlideIns, NeST)
- Distributed I/O technology (Parrot, Kangaroo, NeST)
- Job-flow management (DAGMan, Condor, Hawk)
- Distributed monitoring and management (HawkEye)
- Technology for Distributed Systems (ClassAd, MW)
- Packaging and Integration (NMI, VDT)

Some software produced by the Condor Project
- Condor System
- ClassAd Library
- DAGMan
- GAHP
- Hawkeye
- GCB
- MW
- NeST
- Stork
- Parrot
- Condor-G
- And others… all as open source

What is Condor?
- Condor converts collections of distributively owned workstations and dedicated clusters into a distributed high-throughput computing (HTC) facility.
- Condor manages both resources (machines) and resource requests (jobs).
- Condor has several unique mechanisms:
  - Transparent checkpoint/restart
  - Transparent process migration
  - I/O redirection
  - ClassAd matchmaking technology
  - Grid metascheduling

Condor can manage a large number of jobs
- You specify the jobs in a file and submit them to Condor, which runs them all and keeps you notified of their progress.
- Mechanisms help you manage huge numbers of jobs (thousands), all their data, etc.
- Condor can handle inter-job dependencies (DAGMan).
- Condor users can set job priorities.
- Condor administrators can set user priorities.

Condor can manage dedicated resources…
- Dedicated resources:
  - Compute clusters
- Grid resources
- Manage:
  - Node monitoring, scheduling
  - Job launch, monitor & cleanup

…and Condor can manage non-dedicated resources
- Examples of non-dedicated resources:
  - Desktop workstations in offices
  - Workstations in student labs
- Non-dedicated resources are often idle: ~70% of the time!
- Condor can effectively harness the otherwise wasted compute cycles from non-dedicated resources.

Condor ClassAds
- Capture and communicate attributes of objects (resources, work units, connections, claims, …)
- Define policies/conditions/triggers via Boolean expressions
- ClassAd Collections provide persistent storage
- Facilitate matchmaking and gangmatching
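The two-way matching idea behind ClassAd matchmaking can be sketched in Python. This is a toy model, not the ClassAd language or library: each ad is a dict, and `Requirements` and `Rank` are hypothetical Python callables of `(my, target)` standing in for ClassAd expressions. A job matches a machine only if both ads' Requirements hold, and the job's Rank then picks among the matches.

```python
from typing import Any, Dict, List, Optional

# Toy ad: attribute values plus Requirements/Rank as callables of (my, target).
# Real ClassAds use their own expression language; this only mimics matching.
Ad = Dict[str, Any]


def matches(a: Ad, b: Ad) -> bool:
    """Two-way match: each ad's Requirements must hold against the other ad."""
    return bool(a["Requirements"](a, b)) and bool(b["Requirements"](b, a))


def best_match(job: Ad, machines: List[Ad]) -> Optional[Ad]:
    """Return the matching machine with the highest job Rank, or None."""
    candidates = [m for m in machines if matches(job, m)]
    if not candidates:
        return None
    return max(candidates, key=lambda m: job["Rank"](job, m))


def machine(name: str, opsys: str, memory: int, requirements=lambda my, t: True) -> Ad:
    """Build a hypothetical machine ad; machines here do not rank jobs."""
    return {"Name": name, "OpSys": opsys, "Memory": memory,
            "Requirements": requirements, "Rank": lambda my, t: 0}


job: Ad = {
    "Owner": "alice",
    "ImageSize": 512,  # hypothetical memory need, same units as Memory
    "Requirements": lambda my, t: t["OpSys"] == "LINUX" and t["Memory"] >= my["ImageSize"],
    "Rank": lambda my, t: t["Memory"],  # prefer machines with more memory
}

machines: List[Ad] = [
    machine("m1", "LINUX", 256),  # too little memory for the job
    machine("m2", "LINUX", 1024,
            requirements=lambda my, t: t["Owner"] != "mallory"),  # owner's policy
    machine("m3", "WINNT", 2048),  # wrong OpSys for the job
    machine("m4", "LINUX", 768),
]

print(best_match(job, machines)["Name"])  # m2
```

Because the machine's Requirements also get a vote, the owner of m2 can refuse a user even when that user's job would happily accept the machine; this symmetry is what distinguishes matchmaking from one-sided scheduling.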

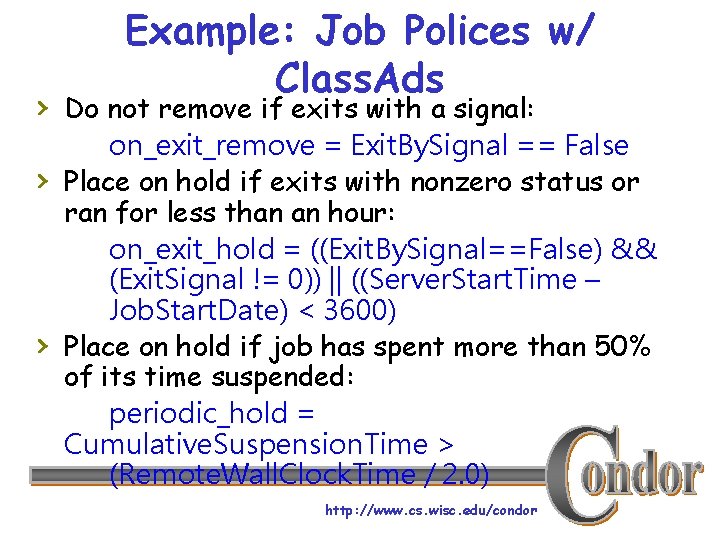

Example: Job Policies with ClassAds
- Do not remove if the job exits with a signal:
  on_exit_remove = ExitBySignal == False
- Place on hold if the job exits with a nonzero status or ran for less than an hour:
  on_exit_hold = ((ExitBySignal == False) && (ExitCode != 0)) || ((ServerStartTime - JobStartDate) < 3600)
- Place on hold if the job has spent more than 50% of its time suspended:
  periodic_hold = CumulativeSuspensionTime > (RemoteWallClockTime / 2.0)
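To make the three policies concrete, here is a Python sketch that evaluates them against a job's attributes. It is not Condor's evaluator: the attributes are plain fields of a hypothetical `JobAd` dataclass, the exit status is modeled as `ExitCode`, all times are in seconds, and ClassAd UNDEFINED semantics are ignored.

```python
from dataclasses import dataclass


@dataclass
class JobAd:
    """The job attributes the three example policies refer to (times in seconds)."""
    ExitBySignal: bool
    ExitCode: int
    ServerStartTime: int
    JobStartDate: int
    CumulativeSuspensionTime: float
    RemoteWallClockTime: float


def on_exit_remove(j: JobAd) -> bool:
    # on_exit_remove = ExitBySignal == False
    return not j.ExitBySignal


def on_exit_hold(j: JobAd) -> bool:
    # Hold on a nonzero exit status, or if the job ran for less than an hour.
    failed = not j.ExitBySignal and j.ExitCode != 0
    too_short = (j.ServerStartTime - j.JobStartDate) < 3600
    return failed or too_short


def periodic_hold(j: JobAd) -> bool:
    # Hold if more than half of the wall-clock time was spent suspended.
    return j.CumulativeSuspensionTime > j.RemoteWallClockTime / 2.0


start = 1_000_000  # arbitrary timestamp for the examples
examples = {
    "clean exit, 2 h": JobAd(False, 0, start + 7200, start, 0.0, 7200.0),
    "exit code 1, 2 h": JobAd(False, 1, start + 7200, start, 0.0, 7200.0),
    "clean exit, 30 min": JobAd(False, 0, start + 1800, start, 0.0, 1800.0),
    "killed by signal, 2 h": JobAd(True, 0, start + 7200, start, 0.0, 7200.0),
    "mostly suspended": JobAd(False, 0, start + 7200, start, 4000.0, 7200.0),
}
for name, j in examples.items():
    print(f"{name}: remove={on_exit_remove(j)} hold={on_exit_hold(j)} "
          f"periodic_hold={periodic_hold(j)}")
```

For the job killed by a signal, both on_exit_remove and on_exit_hold are false in this model, so under these policies it would neither leave the queue nor be held.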

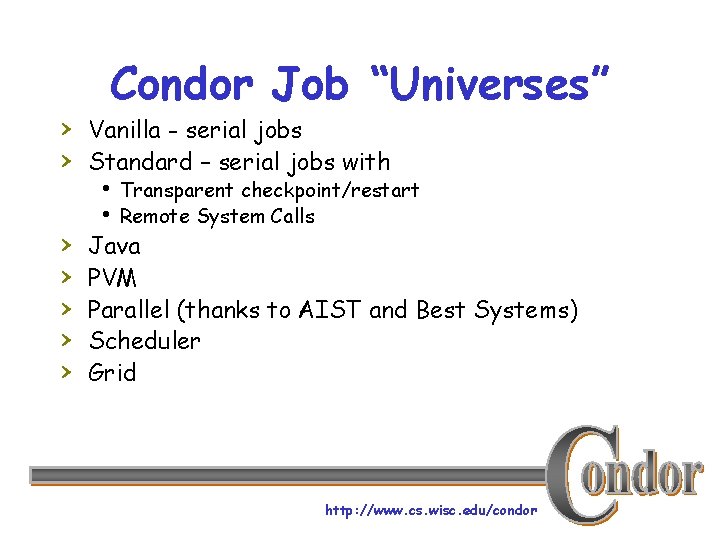

Condor Job “Universes”
- Vanilla: serial jobs
- Standard: serial jobs with
  - Transparent checkpoint/restart
  - Remote System Calls
- Java
- PVM
- Parallel (thanks to AIST and Best Systems)
- Scheduler
- Grid

Condor Job “Universes”, cont.
- Scheduler
- Grid

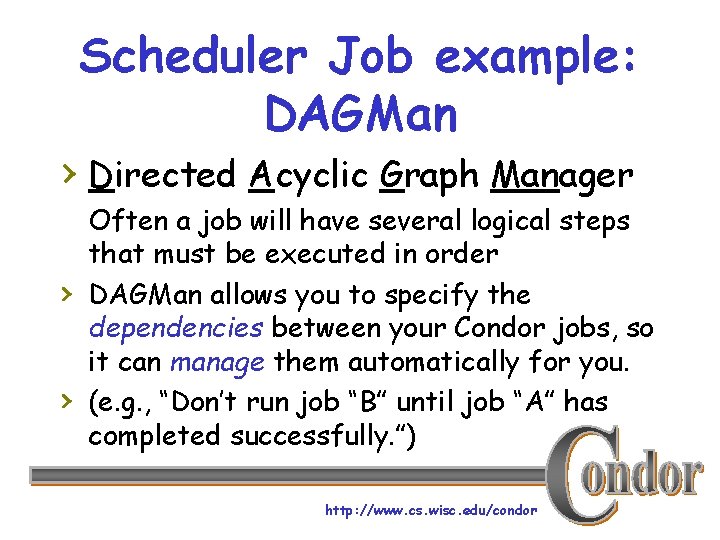

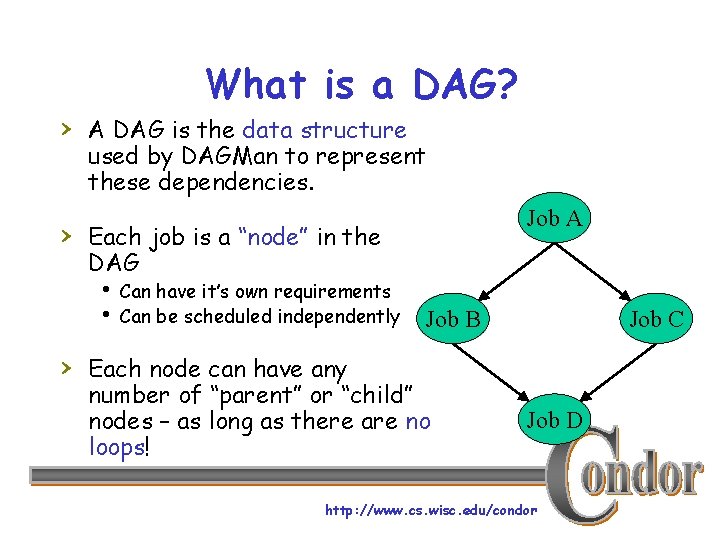

Scheduler Job example: DAGMan
- Directed Acyclic Graph Manager
- Often a job will have several logical steps that must be executed in order.
- DAGMan allows you to specify the dependencies between your Condor jobs, so it can manage them automatically for you (e.g., "Don't run job B until job A has completed successfully.").

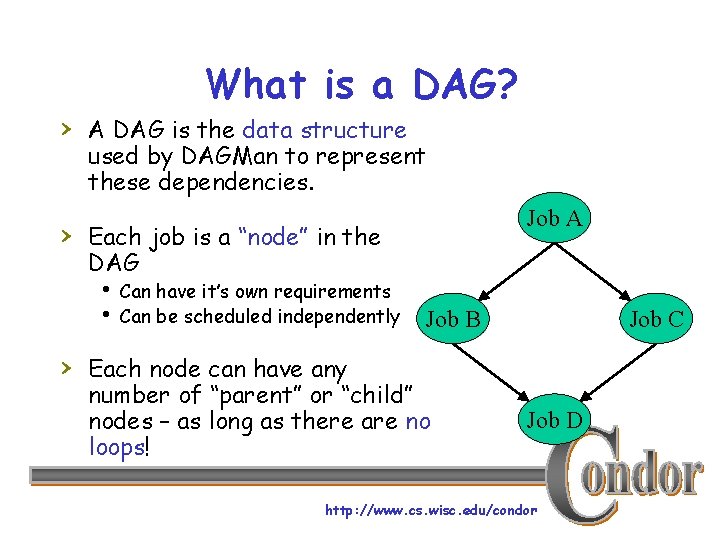

What is a DAG?
- A DAG is the data structure used by DAGMan to represent these dependencies.
- Each job is a "node" in the DAG:
  - Each node can have its own requirements
  - Each node can be scheduled independently
- Each node can have any number of "parent" or "child" nodes, as long as there are no loops!

(Figure: an example DAG with nodes Job A, Job B, Job C and Job D.)
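The parent/child rules above amount to a topological ordering. The sketch below uses Python's standard `graphlib` module (Python 3.9+), not DAGMan itself, to group the nodes of a DAG into waves: a node appears only after all of its parents, and nodes in the same wave could run concurrently. The diamond edges (A is the parent of B and C, which are both parents of D) are an assumed example.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Assumed diamond DAG: each node maps to the set of its parents.
dag: Dict[str, Set[str]] = {"A": set(), "B": {"A"}, "C": {"A"}, "D": {"B", "C"}}


def waves(parents: Dict[str, Set[str]]) -> List[List[str]]:
    """Group nodes into batches; every node's parents are in an earlier batch."""
    ts = TopologicalSorter(parents)
    ts.prepare()  # raises CycleError if the graph has a loop
    batches: List[List[str]] = []
    while ts.is_active():
        ready = sorted(ts.get_ready())
        batches.append(ready)
        ts.done(*ready)  # pretend every node in the batch completed successfully
    return batches


print(waves(dag))  # [['A'], ['B', 'C'], ['D']]

try:
    waves({"A": {"B"}, "B": {"A"}})  # a loop: not a valid DAG
except CycleError as err:
    print("rejected:", err.args[0])
```

Rejecting cycles up front mirrors the "no loops" rule: with a loop, no node in it could ever have all of its parents finished.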

Additional DAGMan Features
- DAGMan provides other handy features for job management:
  - Nodes can have PRE and POST scripts
  - Failed nodes can be automatically retried a configurable number of times
  - Job submission can be "throttled"
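Retries and throttling can be illustrated with a small simulation. This is a hypothetical sketch, not DAGMan's implementation: `run_dag`, the per-node `retries` map and the `max_jobs` cap are names chosen for illustration, the throttle is modeled as "at most `max_jobs` nodes submitted per round", and `run(node, attempt)` stands in for submitting a node's job and waiting for it. PRE/POST scripts are left out.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_dag(parents: Dict[str, Set[str]],
            run: Callable[[str, int], bool],
            retries: Dict[str, int],
            max_jobs: int) -> List[List[str]]:
    """Run nodes in dependency order, at most max_jobs per round, retrying failures.

    Returns the rounds of nodes that were submitted together.
    """
    ts = TopologicalSorter(parents)
    ts.prepare()
    pending: List[str] = []  # ready nodes held back by the throttle
    rounds: List[List[str]] = []
    while ts.is_active():
        pending.extend(sorted(ts.get_ready()))
        batch, pending = pending[:max_jobs], pending[max_jobs:]
        rounds.append(batch)
        for node in batch:
            for attempt in range(1 + retries.get(node, 0)):
                if run(node, attempt):
                    break
            else:
                # Retries exhausted: stop instead of running the node's descendants.
                raise RuntimeError(f"node {node} failed after {attempt + 1} attempts")
            ts.done(node)
    return rounds


dag = {"A": set(), "B": {"A"}, "C": {"A"}, "E": {"A"}, "D": {"B", "C", "E"}}
attempts: Dict[str, int] = {}


def flaky(node: str, attempt: int) -> bool:
    """Stand-in job runner: node C fails on its first attempt only."""
    attempts[node] = attempt + 1
    return not (node == "C" and attempt == 0)


print(run_dag(dag, flaky, retries={"C": 1}, max_jobs=2))
print(attempts)
```

With `max_jobs=2`, the three children of A cannot all be submitted at once, so E waits for the next round; with no retry allowance for C, the same run stops with an error before D is attempted.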

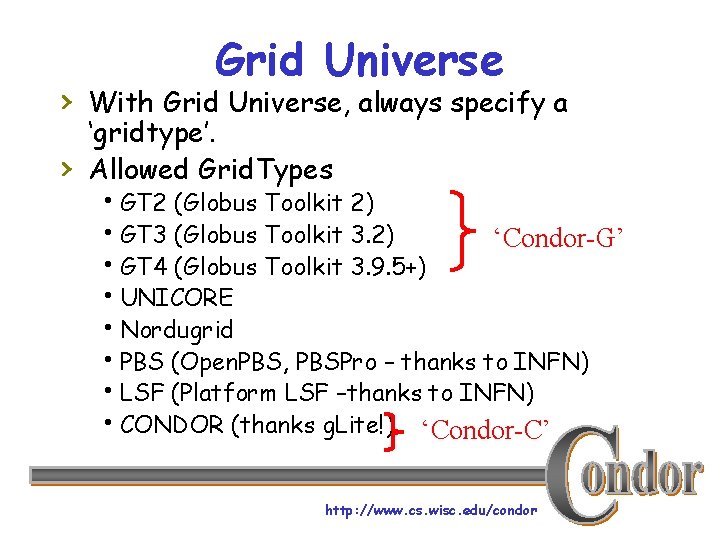

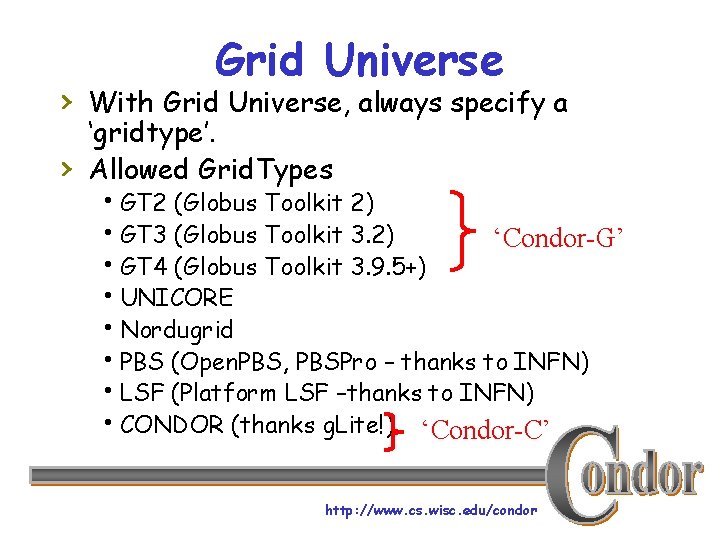

Grid Universe
- With the Grid universe, always specify a 'gridtype'.
- Allowed GridTypes:
  - 'Condor-G':
    - GT2 (Globus Toolkit 2)
    - GT3 (Globus Toolkit 3.2)
    - GT4 (Globus Toolkit 3.9.5+)
  - UNICORE
  - NorduGrid
  - PBS (OpenPBS, PBSPro; thanks to INFN)
  - LSF (Platform LSF; thanks to INFN)
  - 'Condor-C':
    - CONDOR (thanks gLite!)

A Grid MetaScheduler: Grid Universe + ClassAd Matchmaking

COD: Computing On Demand

What Problem Does COD Solve?
- Some people want to run interactive, yet compute-intensive applications:
  - Jobs that take lots of compute power over a relatively short period of time
- They want to use batch computing resources, but need them right away.
- Ideally, when they're not in use, resources would go back to the batch system.

COD is not just high-priority jobs
- "Checkpoint to Swap Space":
  - When a high-priority COD job appears, the lower-priority batch job is suspended.
  - The COD job can run right away, while the batch job is suspended.
  - Batch jobs (even those that can't checkpoint) can resume instantly once there are no more active COD jobs.
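The suspend/resume rule above is essentially a small state machine. The Python sketch below models it for a single machine; `CodSlot`, `cod_start` and `cod_finish` are hypothetical names, not Condor's COD interface. The key point it shows is that the batch job resumes only when the last active COD job finishes.

```python
from typing import Set


class CodSlot:
    """Toy model of one machine shared by a batch job and COD claims (hypothetical API)."""

    def __init__(self, batch_job: str) -> None:
        self.batch_job = batch_job
        self.batch_state = "running"
        self.active_cod: Set[str] = set()

    def cod_start(self, claim: str) -> None:
        # The batch job is suspended in place rather than checkpointed or killed,
        # so it loses no work even if it cannot checkpoint.
        self.active_cod.add(claim)
        self.batch_state = "suspended"

    def cod_finish(self, claim: str) -> None:
        self.active_cod.discard(claim)
        if not self.active_cod:
            # No more active COD jobs: the batch job resumes immediately.
            self.batch_state = "running"


slot = CodSlot("batch-42")
slot.cod_start("viz-1")
slot.cod_start("viz-2")
slot.cod_finish("viz-1")
print(slot.batch_state)  # suspended
slot.cod_finish("viz-2")
print(slot.batch_state)  # running
```

Tracking a set of active claims, rather than a single flag, is what keeps the batch job suspended while any COD job is still running.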

Stork: Data Placement Agent
- Need for data placement on the Grid:
  - Locate the data
  - Send data to processing sites
  - Share the results with other sites
  - Allocate and de-allocate storage
  - Clean up everything
- Do these reliably and efficiently.
- "Make data placement a first class citizen in the Grid."

Stork
- A scheduler for data placement activities in the Grid
- What Condor is for computational jobs, Stork is for data placement.
- Stork understands the characteristics and semantics of data placement jobs.
- It can make smart scheduling decisions for reliable and efficient data placement.

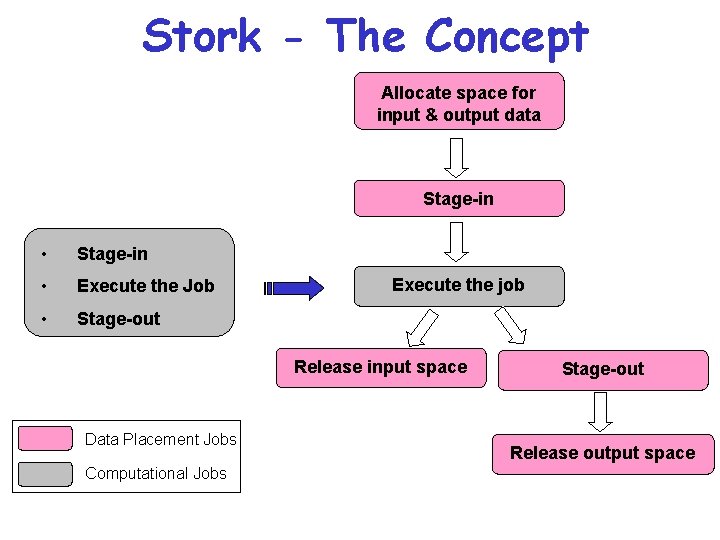

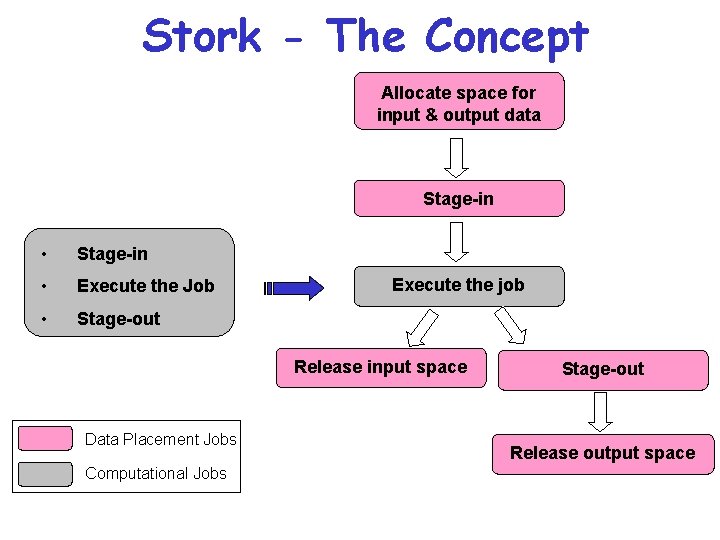

Stork: The Concept
(Figure: the steps Stage-in • Execute the Job • Stage-out, expanded into Data Placement Jobs and Computational Jobs.)
1. Allocate space for input & output data
2. Stage-in
3. Execute the job (computational job)
4. Release input space
5. Stage-out
6. Release output space

All steps except "Execute the job" are data placement jobs.

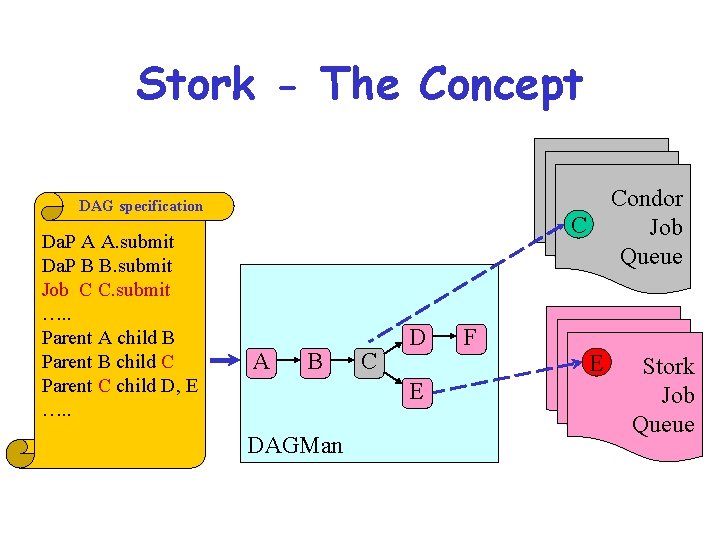

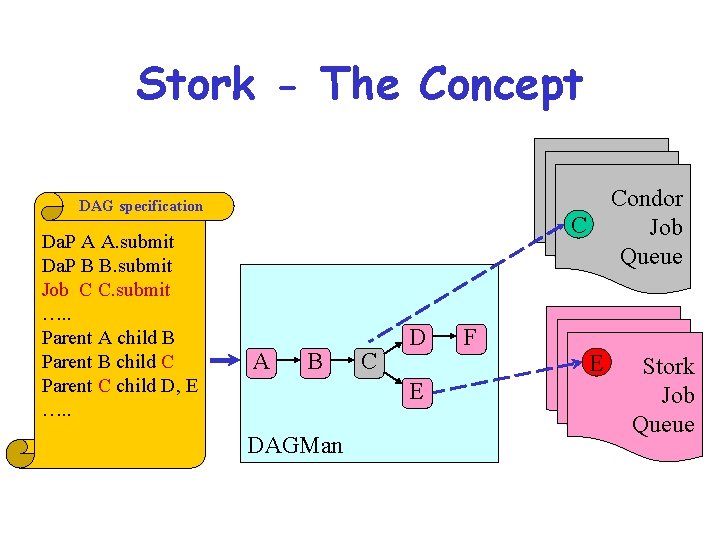

Stork: The Concept
DAG specification:

    DaP A A.submit
    DaP B B.submit
    Job C C.submit
    …
    Parent A child B
    Parent B child C
    Parent C child D, E
    …

(Figure: DAGMan reads this DAG and feeds Job nodes to the Condor Job Queue and DaP nodes to the Stork Job Queue.)
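The split between the two queues can be sketched in Python. This is a simplified, hypothetical parser, not DAGMan's: it accepts only the `DaP`/`Job`/`Parent … child …` line shapes shown above (case-sensitive, commas or spaces between children), and the node names and submit files are invented to follow the six-step pipeline of the concept slide. It only reports which queue each node would go to, in dependency order; it does not wait for nodes to finish.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

# Hypothetical DAG following the allocate / stage-in / execute / release /
# stage-out / release pipeline; only "Execute" is a computational job.
SPEC = """
DaP Allocate allocate.submit
DaP StageIn stage_in.submit
Job Execute execute.submit
DaP ReleaseIn release_in.submit
DaP StageOut stage_out.submit
DaP ReleaseOut release_out.submit
Parent Allocate child StageIn
Parent StageIn child Execute
Parent Execute child ReleaseIn
Parent ReleaseIn child StageOut
Parent StageOut child ReleaseOut
"""


def parse(spec: str) -> Tuple[Dict[str, str], Dict[str, Set[str]]]:
    """Return (node -> kind, node -> parents) from the simplified DAG syntax."""
    kinds: Dict[str, str] = {}
    parents: Dict[str, Set[str]] = {}
    for line in spec.strip().splitlines():
        words = line.replace(",", " ").split()
        if not words:
            continue
        if words[0] in ("DaP", "Job"):
            kinds[words[1]] = words[0]
            parents.setdefault(words[1], set())
        elif words[0] == "Parent":
            i = words.index("child")
            for child in words[i + 1:]:
                parents.setdefault(child, set()).update(words[1:i])
    return kinds, parents


def dispatch(spec: str) -> Dict[str, List[str]]:
    """Send DaP nodes to the Stork queue and Job nodes to the Condor queue."""
    kinds, parents = parse(spec)
    queues: Dict[str, List[str]] = {"condor": [], "stork": []}
    for node in TopologicalSorter(parents).static_order():
        queues["stork" if kinds[node] == "DaP" else "condor"].append(node)
    return queues


print(dispatch(SPEC))
```

Keeping one DAG but two queues is the design point: DAGMan still enforces the ordering, while each scheduler only sees the kind of work it understands.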

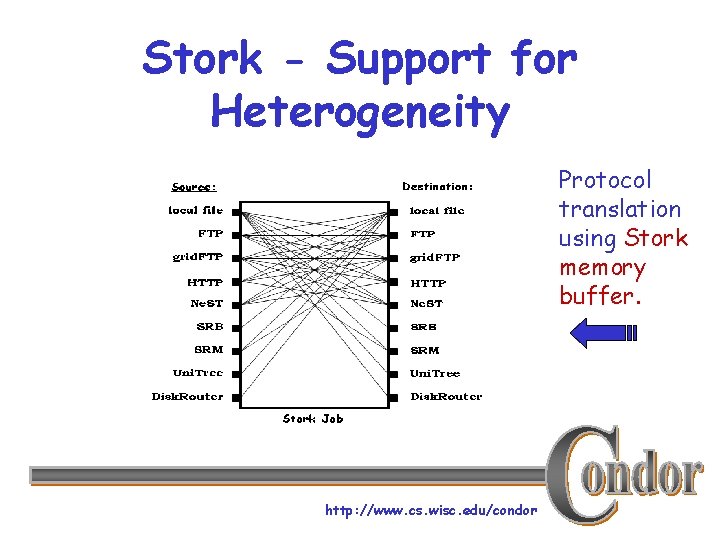

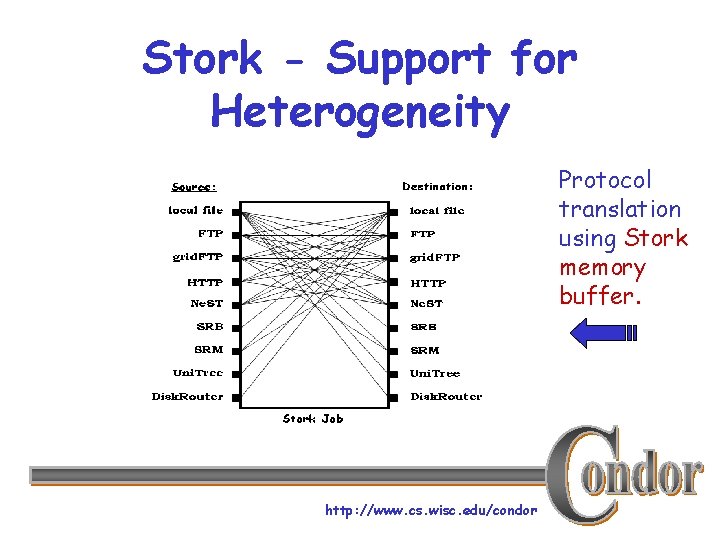

Stork: Support for Heterogeneity
- Protocol translation using the Stork memory buffer

GCB: Generic Connection Broker
- Build grids despite the reality of:
  - Firewalls
  - Private networks
  - NATs

Condor Usage

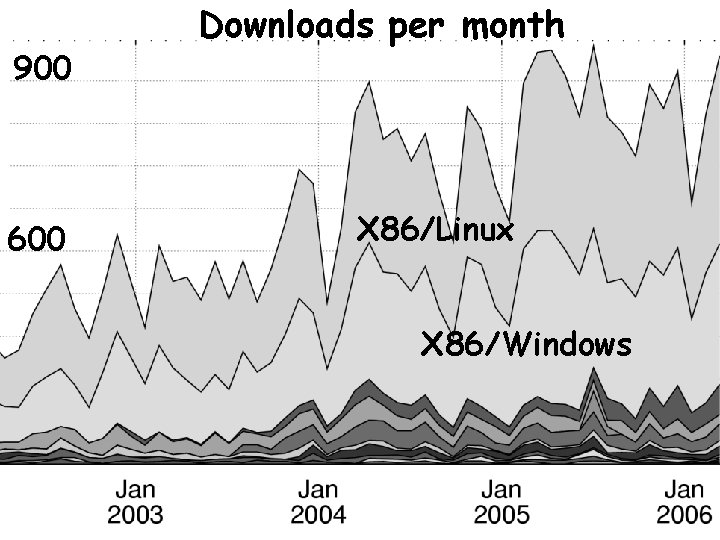

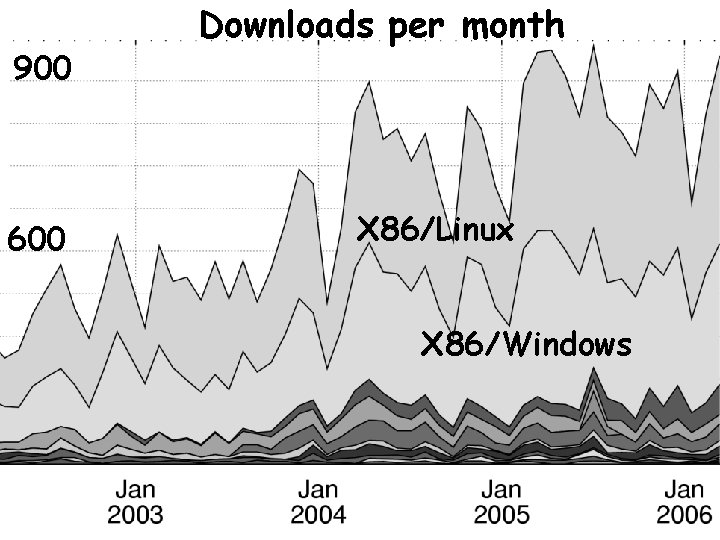

(Chart: Downloads per month, x86/Linux and x86/Windows.)

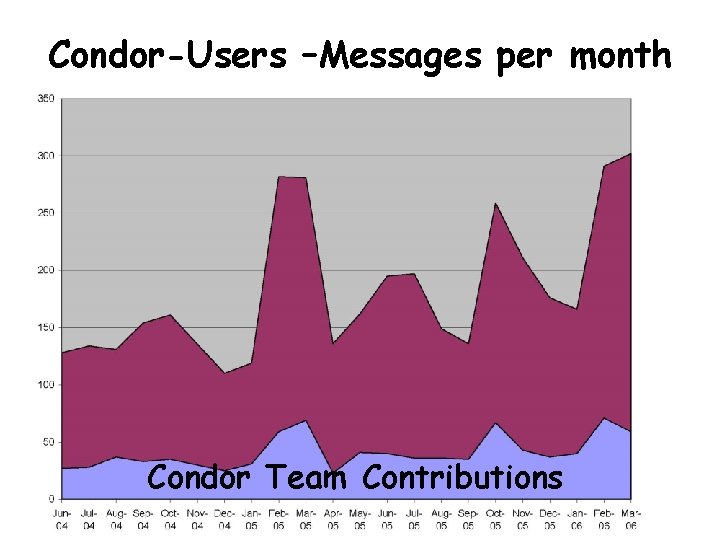

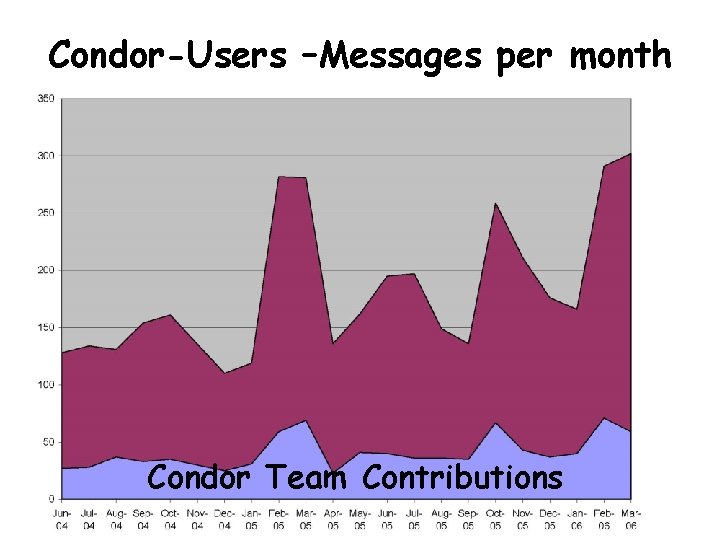

(Chart: Condor-Users messages per month, with Condor Team contributions.)

Questions?