
- Slides: 19

Chapter 4 Performance

Times
• User CPU time: time the CPU spends executing the program
• System CPU time: time the CPU spends executing OS routines for the program
• Waiting time: time spent waiting for I/O to complete, or waiting for the CPU because of time sharing
• Wall clock time: sum of all of the above, including time spent waiting to start execution
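The distinction between these times can be observed from inside a program. The following is a minimal sketch, assuming Python's standard `os.times()` and `time.perf_counter()`; the helper names `measure` and `busy_sum` are made up for illustration. It cannot see time spent waiting before the process started, so the wall clock value here covers only the measured call.

```python
import os
import time

def measure(fn, *args):
    """Run fn(*args); return (result, user, system, wall) times in seconds."""
    cpu_start = os.times()             # process CPU times so far
    wall_start = time.perf_counter()   # high-resolution wall clock
    result = fn(*args)
    wall = time.perf_counter() - wall_start
    cpu_end = os.times()
    user = cpu_end.user - cpu_start.user        # CPU time in the program itself
    system = cpu_end.system - cpu_start.system  # CPU time in OS routines
    return result, user, system, wall

def busy_sum(n):
    # Hypothetical CPU-bound workload used only for this illustration.
    return sum(i * i for i in range(n))

result, user, system, wall = measure(busy_sum, 100_000)
# Whatever is left over is, roughly, waiting time (I/O, time sharing).
waiting = max(0.0, wall - user - system)
print(f"user={user:.4f}s system={system:.4f}s wall={wall:.4f}s waiting~{waiting:.4f}s")
```

Timer resolution differs between platforms, so very short runs may report zero user or system time.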

MIPS and MFLOPS
• MIPS: millions of instructions per second
• MFLOPS: millions of floating-point operations per second
  – Floating-point operations generally take longer than integer instructions
  – Most scientific codes are dominated by floating-point operations

CPI and Memory Hierarchy
• These topics are discussed in 311
• Usually important for evaluating single-processor performance, not parallel performance

Benchmark Programs
• Linpack benchmark
  – http://www.netlib.org/utk/people/Jack.Dongarra/faq-linpack.html
  – A standard program that solves a system of equations
  – Has grown into a widely used standard for benchmarking systems
• Real applications
  – SPEC benchmarks use real programs, such as compression and compiling
  – May or may not be applicable to parallel systems

Parallel Performance
• Parallel runtime
  – Time from the start of the program until the last processor has finished
  – Usually considered a function of n and p
  – Composed of the times for
    • Local computations
    • Exchange of data
    • Synchronization
    • Wait time (unequal distribution of work, mutual exclusion)

Parallel Program Cost
• The “cost” of a parallel program with input size n and p processors is
  – C(n, p) = p * T(n, p)
  – where T(n, p) is the time it takes to run the program with input size n on p processors
• Cost-optimal
  – A parallel implementation is cost-optimal if C(n, p) = T(n), where T(n) is the best sequential time

Speedup
• The speedup is the ratio of the best sequential time to the parallel time
  – S(n, p) = T(n) / T(n, p)
• Theoretically, S(n, p) <= p
  – In practice this bound can be exceeded, because p processors have more total cache
  – A speedup above p can also mean that a better sequential algorithm has been found, which contradicts the requirement that T(n) come from the “best” sequential algorithm

Best Sequential Algorithm
• May not be known
• Different data distributions may have different best sequential algorithms
• Implementing the best algorithm may take too much effort
• So many people use a sequential version of the parallel algorithm, rather than the best sequential algorithm, as the base for speedup

Efficiency
• Captures the utilization of the processors
• E(n, p) = S(n, p) / p
• The ideal is 1; typically it is less than 1
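The three measures defined so far (cost, speedup, efficiency) are simple ratios, so a small sketch may help keep them apart. The timings below are hypothetical numbers chosen for illustration, not measurements from the slides.

```python
def cost(t_par, p):
    """C(n, p) = p * T(n, p)."""
    return p * t_par

def speedup(t_seq, t_par):
    """S(n, p) = T(n) / T(n, p)."""
    return t_seq / t_par

def efficiency(t_seq, t_par, p):
    """E(n, p) = S(n, p) / p."""
    return speedup(t_seq, t_par) / p

# Hypothetical timings: 100 s sequentially, 16 s on 8 processors.
t_seq, t_par, p = 100.0, 16.0, 8
print("cost       =", cost(t_par, p))              # 128.0 processor-seconds
print("speedup    =", speedup(t_seq, t_par))       # 6.25
print("efficiency =", efficiency(t_seq, t_par, p)) # 0.78125
```

In this made-up case the cost (128) exceeds the sequential time (100), so the run is not cost-optimal, and the efficiency is below 1.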

Amdahl’s Law
• Let f be the fraction of time that must be done sequentially
• Then 1 - f is the fraction of time that can be parallelized
• If the time for sequential execution is 1 time unit, then the time for the parallel version is f + (1 - f)/p
• The speedup is 1 / [f + (1 - f)/p]
• Even with infinitely many processors, the best speedup is 1/f
  – For example, if 5% of the time must be sequential, the speedup can never exceed 20, even with 1000 processors
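The formula is easy to evaluate directly. The sketch below simply codes 1 / [f + (1 - f)/p]; the function name `amdahl_speedup` is chosen here for illustration.

```python
def amdahl_speedup(f, p):
    """Speedup predicted by Amdahl's law for sequential fraction f on p processors."""
    return 1.0 / (f + (1.0 - f) / p)

# With f = 0.05 the speedup approaches, but never reaches, 1/f = 20.
for p in (10, 100, 1000, 1_000_000):
    print(p, round(amdahl_speedup(0.05, p), 3))
```

With f = 0.05 and p = 1000 the predicted speedup is about 19.6, already very close to the limit of 20.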

Scalability
• How performance changes as the number of processors increases
  – As the number of processors increases, communication time may increase
• A limitation of Amdahl’s law is that, as n increases, f probably decreases
• So consider increasing n as the number of processors increases

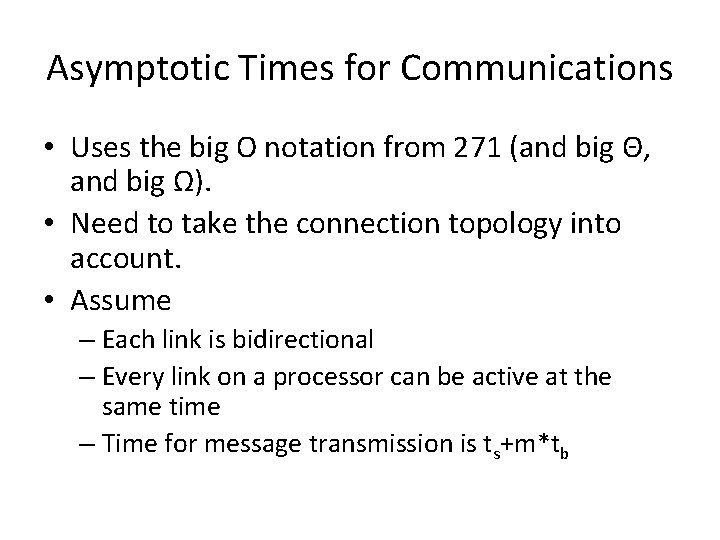

Asymptotic Times for Communications
• Uses the big O notation from 271 (and big Θ and big Ω)
• The connection topology must be taken into account
• Assume
  – Each link is bidirectional
  – Every link on a processor can be active at the same time
  – The time for a message transmission is ts + m*tb
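The transmission-time model can be written as a one-line function. The slides do not define the symbols; this sketch assumes the common reading in which ts is a fixed startup time, tb is the time per byte, and m is the message size in bytes. The numeric values are hypothetical.

```python
def message_time(m, ts, tb):
    """Time to send one m-byte message over one link: ts + m * tb.

    Assumed meanings (not stated in the slides): ts = startup time,
    tb = transfer time per byte, m = message size in bytes.
    """
    return ts + m * tb

# Hypothetical link: 10 microsecond startup, 1 nanosecond per byte.
ts, tb = 1e-5, 1e-9
for m in (8, 1_000, 1_000_000):
    print(m, "bytes ->", message_time(m, ts, tb), "s")
```

Under these assumed values the startup term dominates small messages, and the per-byte term dominates large ones.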

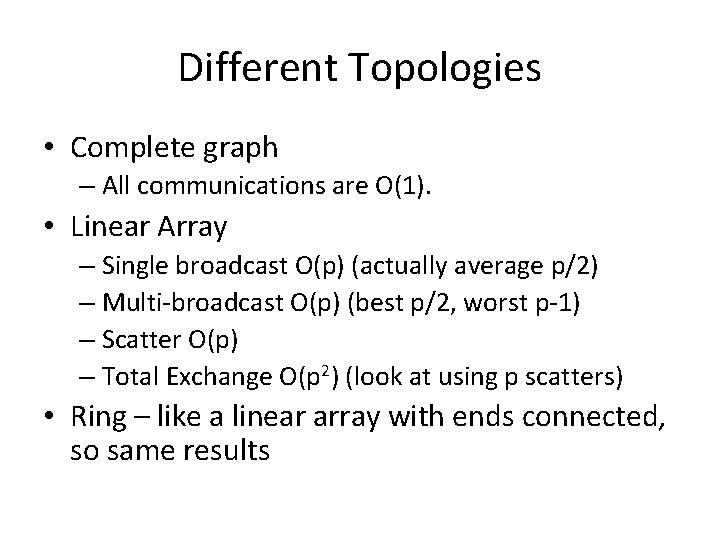

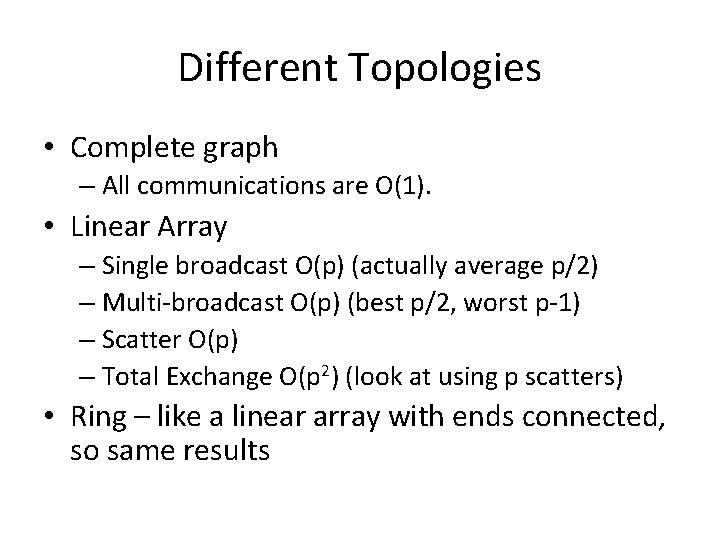

Different Topologies
• Complete graph
  – All communications are O(1)
• Linear array
  – Single broadcast: O(p) (actually p/2 on average)
  – Multi-broadcast: O(p) (best p/2, worst p-1)
  – Scatter: O(p)
  – Total exchange: $O(p^2)$ (think of it as p scatters)
• Ring
  – Like a linear array with its ends connected, so the results are the same
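The O(p) figures for the linear array and ring can be checked with a simple hop-count model. This sketch assumes that a message moves one link per step and that the root can send in both directions at once, in line with the bidirectional-link assumption; the function names are made up for illustration.

```python
def linear_broadcast_steps(p, root):
    """Steps until a message from `root` reaches every node of a p-node linear array.

    Nodes are numbered 0..p-1; the message spreads left and right simultaneously,
    so the farther end of the array determines the time.
    """
    return max(root, p - 1 - root)

def ring_broadcast_steps(p):
    """Steps for a broadcast on a p-node ring: the farthest node is p // 2 hops away."""
    return p // 2

p = 9
steps = [linear_broadcast_steps(p, root) for root in range(p)]
print("steps per root:", steps)       # [8, 7, 6, 5, 4, 5, 6, 7, 8]
print("best :", min(steps))           # 4, root in the middle (about p/2)
print("worst:", max(steps))           # 8, root at an end (p - 1)
print("ring :", ring_broadcast_steps(p))
```

Either way the number of steps grows linearly with p, which is why the asymptotic results for the linear array and the ring agree.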

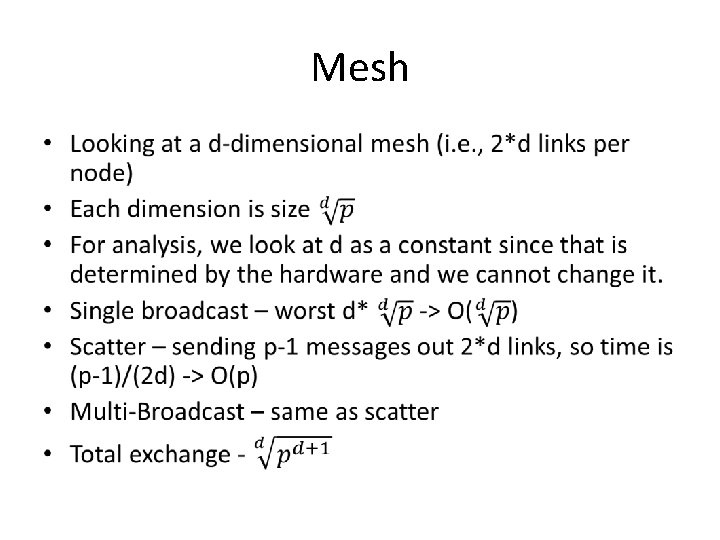

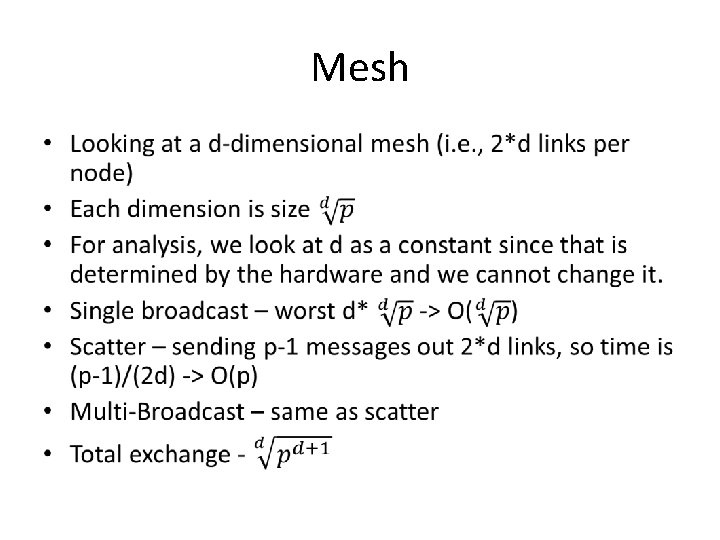

Mesh

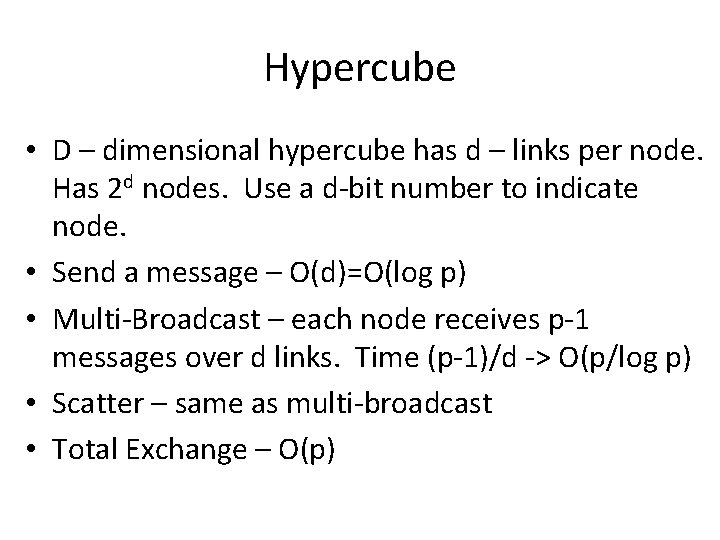

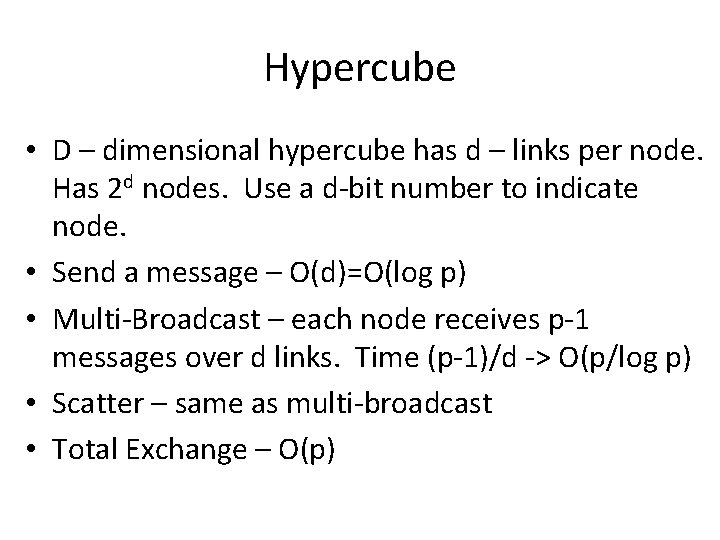

Hypercube
• A d-dimensional hypercube has d links per node and $2^d$ nodes; a d-bit number identifies each node
• Send a message: O(d) = O(log p)
• Multi-broadcast: each node receives p-1 messages over d links, so the time is (p-1)/d, which is O(p/log p)
• Scatter: same as multi-broadcast
• Total exchange: O(p)
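The d-bit node numbering makes the O(d) message bound easy to see: two nodes are neighbors when their numbers differ in exactly one bit, so a message needs at most d hops. The sketch below uses dimension-order routing (fix the differing bits from lowest to highest), which is one common choice and not necessarily the scheme the slides have in mind; the function names are illustrative.

```python
def neighbors(node, d):
    """The d neighbors of `node` in a d-dimensional hypercube (flip one bit each)."""
    return [node ^ (1 << k) for k in range(d)]

def route(src, dst, d):
    """Nodes visited from src to dst, correcting differing bits from bit 0 upward."""
    path = [src]
    cur = src
    for k in range(d):
        bit = 1 << k
        if (cur ^ dst) & bit:   # bit k still differs from the destination
            cur ^= bit          # cross the link in dimension k
            path.append(cur)
    return path

d = 3  # 2**3 = 8 nodes, numbered 0b000 .. 0b111
print(neighbors(0b000, d))                              # [1, 2, 4]
print([format(x, "03b") for x in route(0b000, 0b101, d)])  # ['000', '001', '101']
```

The number of hops equals the number of bits in which the two node numbers differ, which is at most d = log2(p).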

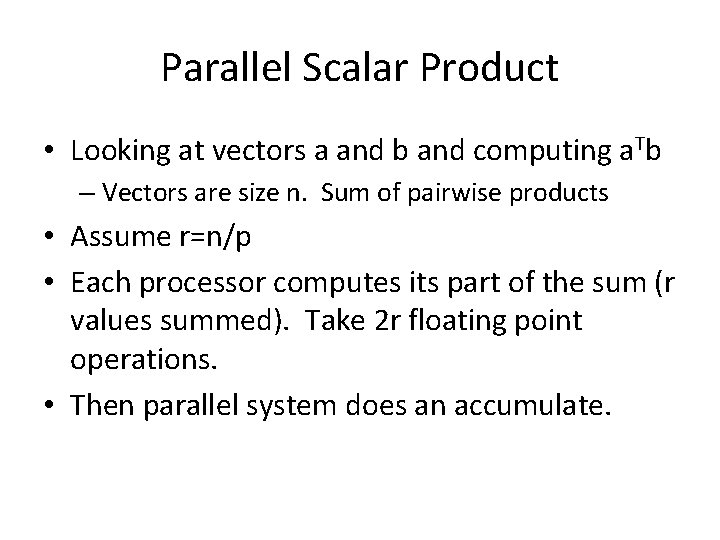

Parallel Scalar Product
• Given vectors a and b, compute $a^T b$
  – The vectors have size n; the result is the sum of the pairwise products
• Assume r = n/p
• Each processor computes its part of the sum (r values summed), which takes 2r floating-point operations
• The parallel system then does an accumulate
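The two phases (local partial sums, then an accumulate) can be illustrated with a sequential simulation. This is only a sketch: the "processors" are loop iterations rather than real parallel workers, the function name is made up, and it assumes p divides n evenly so that r = n/p is an integer.

```python
def parallel_scalar_product(a, b, p):
    """Simulate a block-distributed scalar product on p processors.

    Returns (total, partial_sums). Assumes len(a) == len(b) and p divides n.
    """
    n = len(a)
    if n != len(b) or n % p != 0:
        raise ValueError("vectors must have equal length divisible by p")
    r = n // p
    # Phase 1: processor k multiplies and sums its own block of r elements.
    partial = [
        sum(a[i] * b[i] for i in range(k * r, (k + 1) * r))
        for k in range(p)
    ]
    # Phase 2: the accumulate combines the p partial sums into one value.
    return sum(partial), partial

a = [1, 2, 3, 4, 5, 6, 7, 8]
b = [8, 7, 6, 5, 4, 3, 2, 1]
total, partial = parallel_scalar_product(a, b, p=4)
print(partial)  # [22, 38, 38, 22]
print(total)    # 120
```

On a real machine, the cost of phase 2 depends on the topology, which is what the analyses for the individual networks address.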

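Linear Array
• The best processor for the root is the middle processor
• The accumulate takes p/2 communications and floating-point additions
• If a floating-point operation takes time α and a communication takes time β, the total time is
  – $T(p) = 2r\alpha + \frac{p}{2}(\alpha+\beta) = \frac{2n}{p}\alpha + \frac{p}{2}(\alpha+\beta)$
• As p increases, the first term goes down but the second term goes up
• What is the best p? Find the minimum by setting the derivative to zero
  – $T'(p) = -\frac{2n\alpha}{p^2} + \frac{\alpha+\beta}{2} = 0$
  – $(\alpha+\beta)\,p^2 = 4n\alpha$
  – $p^2 = \frac{4n\alpha}{\alpha+\beta}$, so $p = 2\sqrt{\frac{n\alpha}{\alpha+\beta}}$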
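The trade-off between the two terms can be checked numerically. The sketch below codes the total time 2(n/p)α + (p/2)(α+β) and the minimizer obtained by taking the square root of p² = 4nα/(α+β); the values of n, α and β are hypothetical and the function names are illustrative.

```python
import math

def linear_array_time(n, p, alpha, beta):
    """Total time for the scalar product on a p-processor linear array."""
    return 2 * (n / p) * alpha + (p / 2) * (alpha + beta)

def best_p(n, alpha, beta):
    """Real-valued p that minimizes the total time: 2 * sqrt(n*alpha / (alpha+beta))."""
    return 2 * math.sqrt(n * alpha / (alpha + beta))

# Hypothetical parameters: communication three times as costly as a flop.
n, alpha, beta = 10_000, 1.0, 3.0
p_star = best_p(n, alpha, beta)
print("best p:", p_star)                                   # 100.0
for p in (50, 100, 200):
    print(p, linear_array_time(n, p, alpha, beta))         # 500.0, 400.0, 500.0
```

The formula gives a real number, so in practice the nearest feasible processor counts would need to be compared.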

Hypercube