- Slides: 21

Cache - Optimization

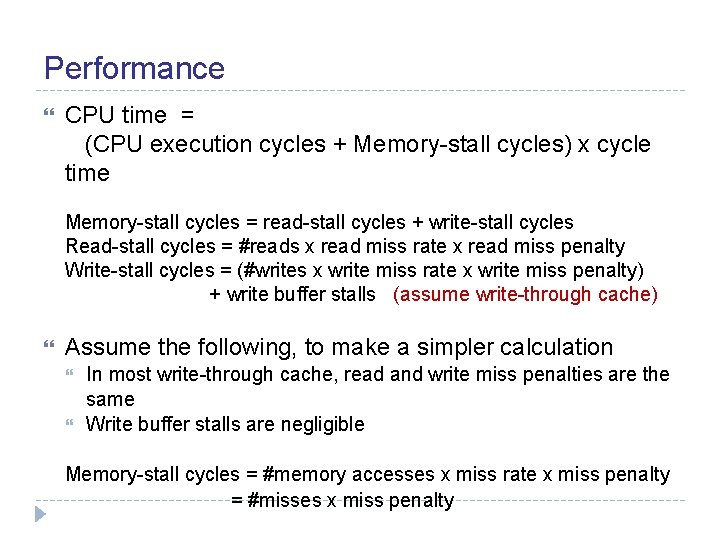

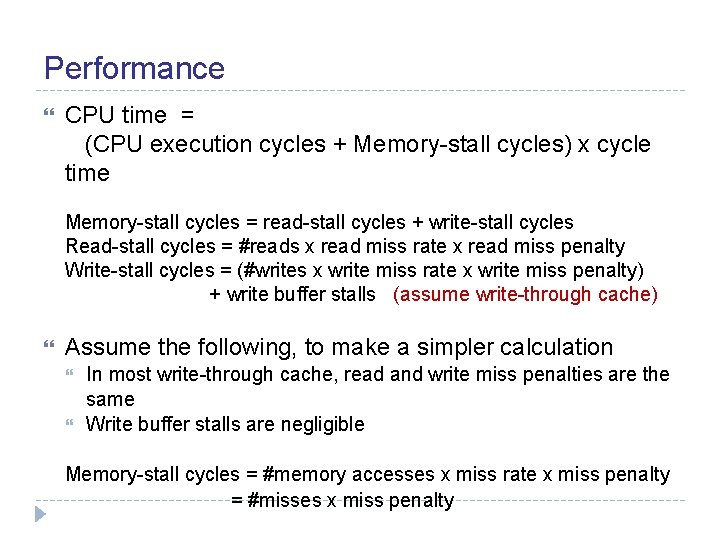

Performance

- CPU time = (CPU execution cycles + Memory-stall cycles) x cycle time
- Memory-stall cycles = read-stall cycles + write-stall cycles
  - Read-stall cycles = #reads x read miss rate x read miss penalty
  - Write-stall cycles = (#writes x write miss rate x write miss penalty) + write buffer stalls (assume write-through cache)
- Assume the following, to make a simpler calculation
  - In most write-through caches, read and write miss penalties are the same
  - Write buffer stalls are negligible
- Memory-stall cycles = #memory accesses x miss rate x miss penalty = #misses x miss penalty

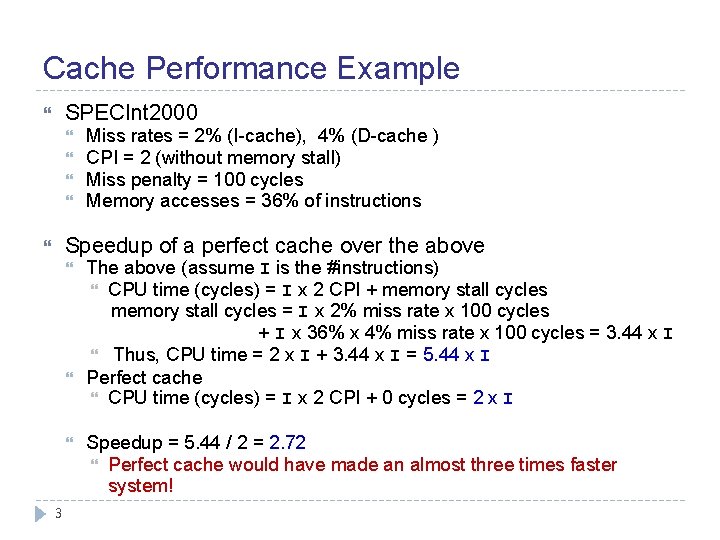

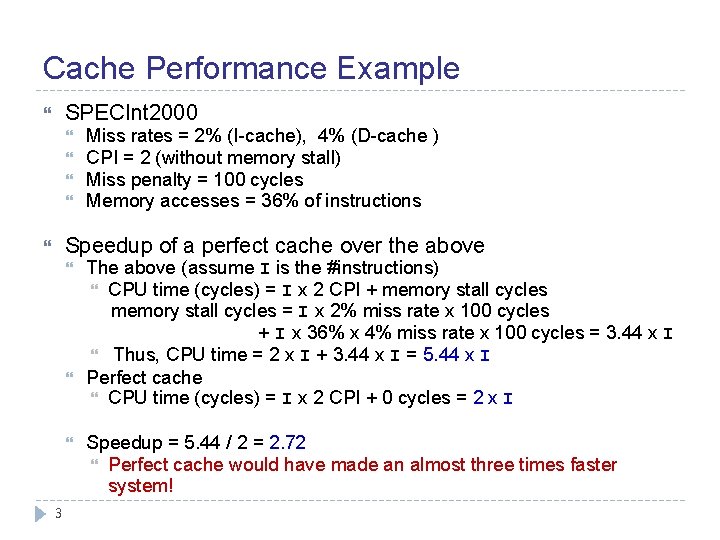

Cache Performance Example

- SPECInt 2000
  - Miss rates = 2% (I-cache), 4% (D-cache)
  - CPI = 2 (without memory stall)
  - Miss penalty = 100 cycles
  - Data memory accesses (loads and stores) = 36% of instructions
- Speedup of a perfect cache over the above
- The above (assume I is the #instructions)
  - CPU time (cycles) = I x 2 CPI + memory stall cycles
  - Memory stall cycles = I x 2% miss rate x 100 cycles + I x 36% x 4% miss rate x 100 cycles = 3.44 x I
  - Thus, CPU time = 2 x I + 3.44 x I = 5.44 x I
- Perfect cache
  - CPU time (cycles) = I x 2 CPI + 0 cycles = 2 x I
- Speedup = 5.44 / 2 = 2.72
  - A perfect cache would have made the system almost three times faster!
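The arithmetic of this example can be checked with a short script. This is a minimal sketch; the function and parameter names are illustrative, not from the slides, and all values are per instruction so that I cancels out.

```python
def cycles_per_instruction(base_cpi, icache_miss_rate, dcache_miss_rate,
                           data_access_fraction, miss_penalty):
    """Return (total CPI, memory-stall cycles per instruction)."""
    # Every instruction is fetched once, so I-cache stalls apply to all of them.
    instr_stalls = icache_miss_rate * miss_penalty
    # Only the load/store fraction of instructions accesses the D-cache.
    data_stalls = data_access_fraction * dcache_miss_rate * miss_penalty
    stalls = instr_stalls + data_stalls
    return base_cpi + stalls, stalls


# Numbers from the slide: CPI 2, 2% / 4% miss rates, 36% data accesses, 100 cycles.
real_cpi, stall_cpi = cycles_per_instruction(2.0, 0.02, 0.04, 0.36, 100)
perfect_cpi = 2.0  # a perfect cache never stalls

print(f"memory-stall cycles per instruction: {stall_cpi:.2f}")
print(f"CPI with the real cache: {real_cpi:.2f}")
print(f"speedup of a perfect cache: {real_cpi / perfect_cpi:.2f}")
```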

Performance on Increased Clock Rate

- Doubling the clock rate in the previous example
  - Cycle time decreases to a half
  - But miss penalty increases from 100 to 200 cycles (the memory latency in seconds is unchanged)
- Speedup of the fast clock system
- CPU time of the previous (slow) system
  - Assume C is the cycle time of the slow clock system
  - CPU time slow clock = 5.44 x I x C (seconds)
- CPU time of the faster clock system
  - CPU time (cycles) = I x 2 CPI + memory stall cycles
  - Memory stall cycles = I x 2% miss rate x 200 cycles + I x 36% x 4% miss rate x 200 cycles = 6.88 x I
  - CPU time fast clock = (2 x I + 6.88 x I) x (C x ½) = 4.44 x I x C (seconds)
- Speedup = 5.44 / 4.44 = 1.23
  - Not twice as fast as the slow clock system!
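The same calculation can be parameterized by the clock multiplier. The sketch below assumes, as the slide does, that the memory latency in seconds stays fixed, so the miss penalty in cycles scales with the clock rate. The 4x case is an extrapolation added for illustration and is not on the slide.

```python
BASE_CPI = 2.0          # CPI without memory stalls
ICACHE_MISS = 0.02
DCACHE_MISS = 0.04
DATA_FRACTION = 0.36    # loads and stores per instruction
SLOW_PENALTY = 100      # miss penalty in cycles at the original clock


def relative_cpu_time(clock_multiplier):
    """CPU time per instruction, in units of the slow clock's cycle time C."""
    # Same latency in seconds means proportionally more (shorter) cycles.
    miss_penalty = SLOW_PENALTY * clock_multiplier
    stall_cpi = (ICACHE_MISS + DATA_FRACTION * DCACHE_MISS) * miss_penalty
    return (BASE_CPI + stall_cpi) / clock_multiplier


baseline = relative_cpu_time(1)
for multiplier in (1, 2, 4):
    t = relative_cpu_time(multiplier)
    print(f"{multiplier}x clock: time = {t:.2f} x I x C, speedup = {baseline / t:.2f}")
```

Only the execution part of the CPU time shrinks with the cycle time; the stall part stays constant in seconds, which is why the speedup falls short of the clock ratio.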

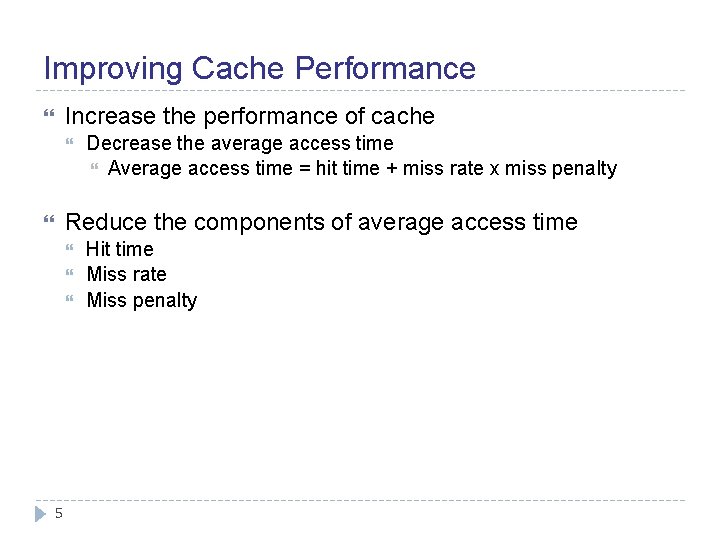

Improving Cache Performance

- Increase the performance of cache
  - Decrease the average access time
  - Average access time = hit time + miss rate x miss penalty
- Reduce the components of average access time
  - Hit time
  - Miss rate
  - Miss penalty
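A small sketch of the average access time formula shows how each component contributes. The numbers (1-cycle hit, 4% miss rate, 100-cycle penalty) are assumptions chosen for illustration only.

```python
def average_access_time(hit_time, miss_rate, miss_penalty):
    """Average access time = hit time + miss rate x miss penalty (in cycles)."""
    return hit_time + miss_rate * miss_penalty


# Hypothetical baseline, not taken from the slides.
baseline = average_access_time(hit_time=1, miss_rate=0.04, miss_penalty=100)
half_miss_rate = average_access_time(hit_time=1, miss_rate=0.02, miss_penalty=100)
half_penalty = average_access_time(hit_time=1, miss_rate=0.04, miss_penalty=50)

print(f"baseline:          {baseline:.1f} cycles")
print(f"half miss rate:    {half_miss_rate:.1f} cycles")
print(f"half miss penalty: {half_penalty:.1f} cycles")
```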

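Associativity

- Flexible block placement: where memory block 12 may be placed in a cache of eight blocks (block# 0 to 7)
  - Direct mapped: (12 mod 8) = 4, so only cache block 4
  - 2-way set associative: (12 mod 4) = 0, so either block of set 0
  - Fully associative: any block
- Figure: the tag and data entries searched for memory address 12 in each organization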

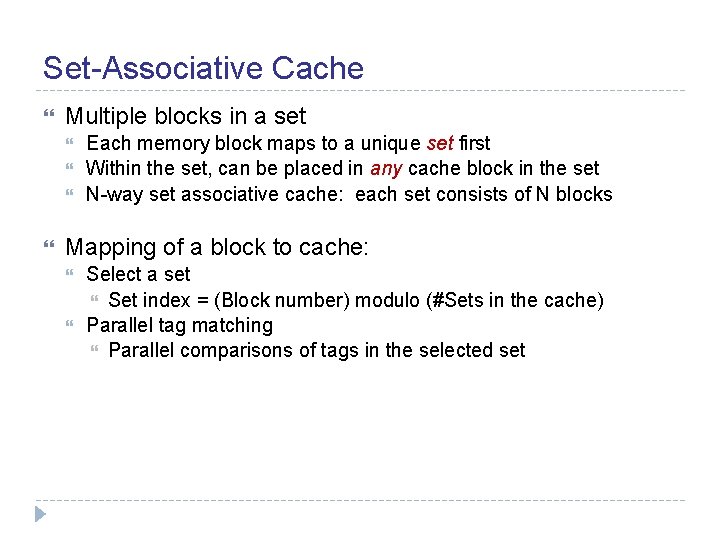

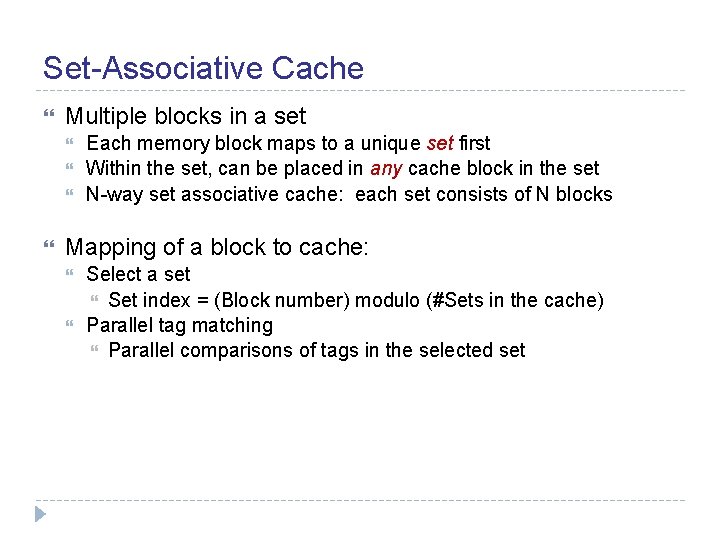

Set-Associative Cache

- Multiple blocks in a set
  - Each memory block maps to a unique set first
  - Within the set, it can be placed in any cache block in the set
  - N-way set associative cache: each set consists of N blocks
- Mapping of a block to cache
  - Select a set: Set index = (Block number) modulo (#Sets in the cache)
  - Parallel tag matching: parallel comparisons of tags in the selected set
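The set-index rule can be sketched as a small function. It assumes that consecutive cache blocks form a set (blocks 0 and 1 are set 0, and so on), which is a numbering convention chosen here for illustration; the function name is hypothetical.

```python
def candidate_blocks(block_number, num_blocks, ways):
    """Return (set index, cache blocks that may hold the given memory block)."""
    num_sets = num_blocks // ways
    set_index = block_number % num_sets       # (Block number) modulo (#Sets)
    first = set_index * ways                  # assumed layout: sets are contiguous
    return set_index, list(range(first, first + ways))


# Memory block 12 in a cache of eight blocks.
for name, ways in [("direct mapped", 1), ("2-way set associative", 2),
                   ("fully associative", 8)]:
    set_index, blocks = candidate_blocks(12, num_blocks=8, ways=ways)
    print(f"{name}: set {set_index}, candidate blocks {blocks}")
```

Direct mapped and fully associative caches fall out as the two extremes: one block per set, and one set holding every block.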

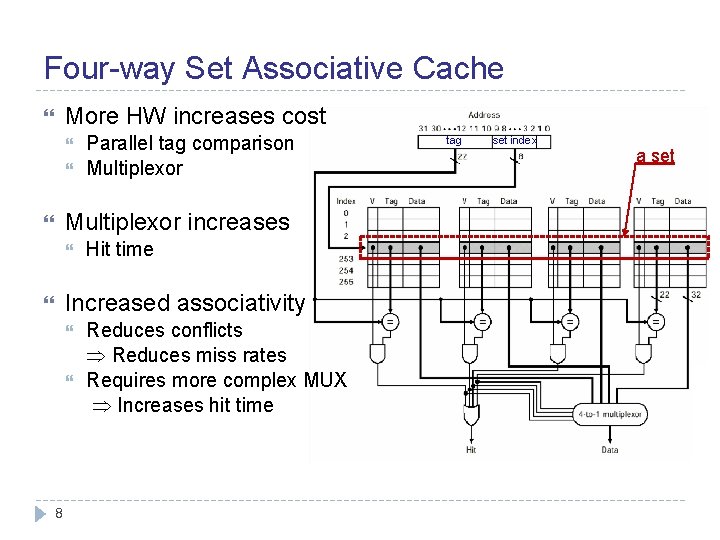

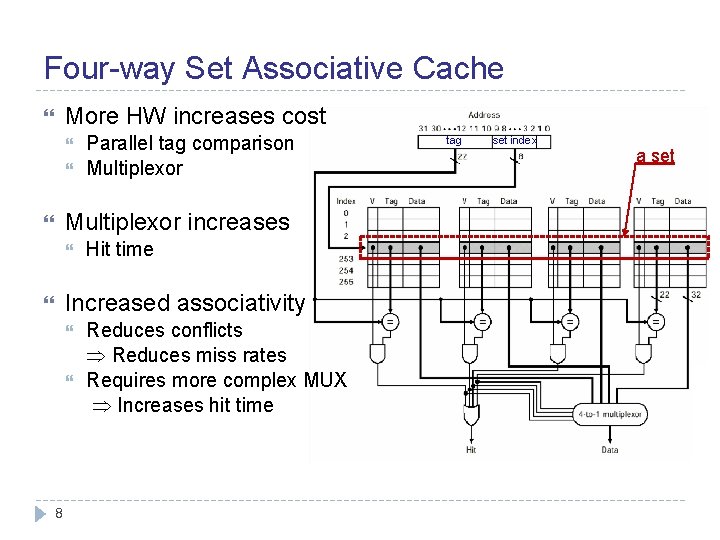

Four-way Set Associative Cache

- More HW increases cost
  - Parallel tag comparison
  - Multiplexor increases hit time
- Increased associativity
  - Reduces conflicts
  - Reduces miss rates
  - Requires more complex MUX
  - Increases hit time
- Figure labels: tag, set index, a set

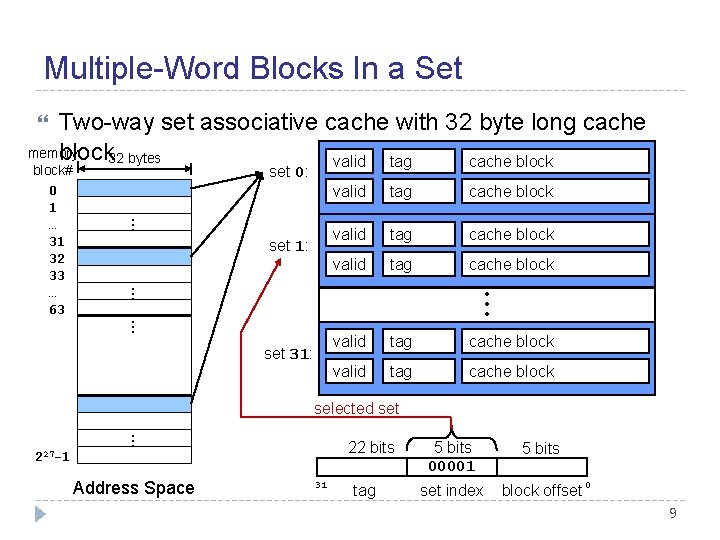

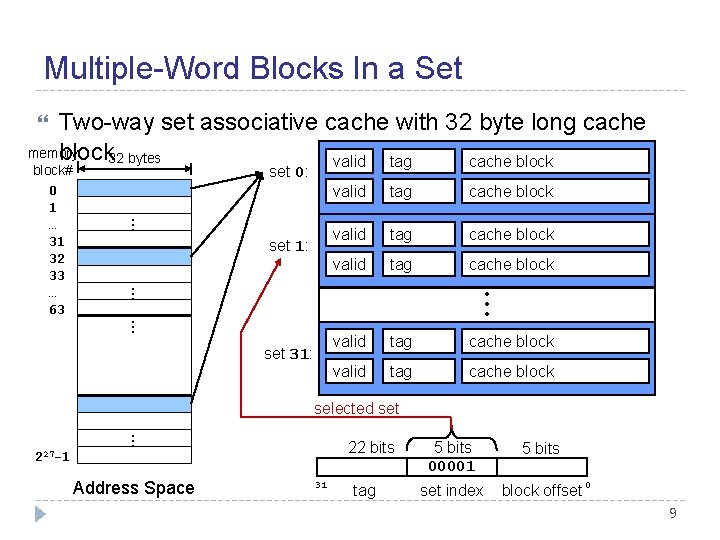

Multiple-Word Blocks In a Set

- Two-way set associative cache with 32-byte cache blocks
- 32 sets (set 0 to set 31); each set holds two entries of valid bit, tag, and cache block
- Address space: memory block# 0 to 2^27 - 1
- 32-bit address fields
  - tag: 22 bits (from bit 31)
  - set index: 5 bits (e.g. 00001 selects set 1)
  - block offset: 5 bits (down to bit 0)
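The address layout above (22-bit tag, 5-bit set index, 5-bit block offset) can be sketched with shifts and masks. The function name and the sample address are illustrative assumptions.

```python
OFFSET_BITS = 5   # 32-byte blocks
INDEX_BITS = 5    # 32 sets


def split_address(addr):
    """Split a 32-bit byte address into (tag, set index, block offset)."""
    offset = addr & ((1 << OFFSET_BITS) - 1)
    index = (addr >> OFFSET_BITS) & ((1 << INDEX_BITS) - 1)
    tag = addr >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, offset


# Hypothetical address: tag 3, set index 0b00001, offset 0.
addr = (3 << 10) | (0b00001 << 5) | 0
print(hex(addr), split_address(addr))
```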

Accessing a Word

- Tag matching and word selection
  - Must compare the tag in each valid block in the selected set (a), (b)
  - Select a word by using a multiplexer (c)
- (a) The valid bit must be set for the matching block.
- (b) The tag bits in one of the cache blocks must match the tag bits in the address.
- (c) If (a) and (b), then cache hit; the block offset selects a word.
- Figure: an address with 22-bit tag X, 5-bit set index I = 10000, and 5-bit block offset; the selected set (I) holds two valid entries with tags Y and X, the tags are compared (=?) in parallel, and a MUX selects one of the words w0 to w7
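Steps (a) to (c) can be modeled in software as follows. This is a sketch under stated assumptions: the `Line` class, the `lookup` function, and the stored values are hypothetical, words are taken to be 4 bytes (eight words per 32-byte block), and the sequential loop stands in for comparators that work in parallel in hardware.

```python
from dataclasses import dataclass, field

WORD_BYTES = 4
WORDS_PER_BLOCK = 8   # 32-byte block / 4-byte words (w0 .. w7)


@dataclass
class Line:
    valid: bool = False
    tag: int = 0
    words: list = field(default_factory=lambda: [0] * WORDS_PER_BLOCK)


def lookup(cache_sets, addr):
    """Return the addressed word on a hit, or None on a miss."""
    offset = addr & 0x1F            # 5-bit block offset
    index = (addr >> 5) & 0x1F      # 5-bit set index
    tag = addr >> 10                # 22-bit tag
    for line in cache_sets[index]:
        if line.valid and line.tag == tag:             # (a) valid and (b) tag match
            return line.words[offset // WORD_BYTES]    # (c) offset selects the word
    return None


# 32 sets of two lines each; fill one line of set 0b10000 with sample data.
cache_sets = [[Line(), Line()] for _ in range(32)]
cache_sets[0b10000][1] = Line(valid=True, tag=0x2A, words=list(range(100, 108)))

hit_addr = (0x2A << 10) | (0b10000 << 5) | 12    # byte offset 12 is word w3
miss_addr = (0x2B << 10) | (0b10000 << 5) | 12   # same set, different tag
print(lookup(cache_sets, hit_addr), lookup(cache_sets, miss_addr))
```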

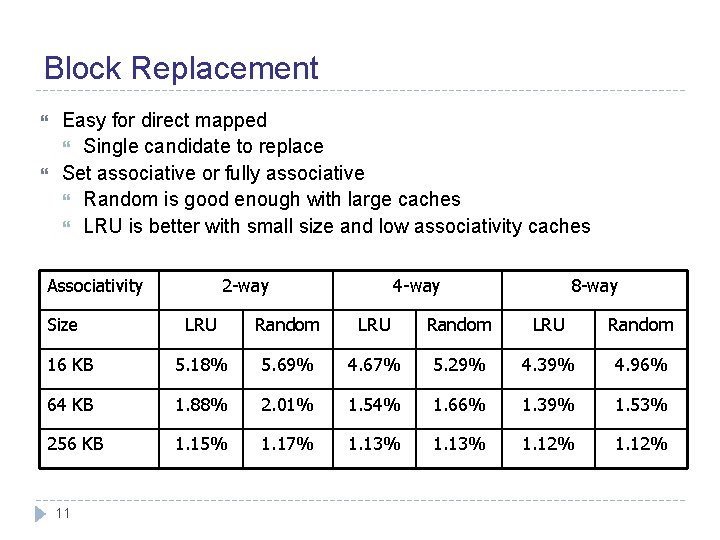

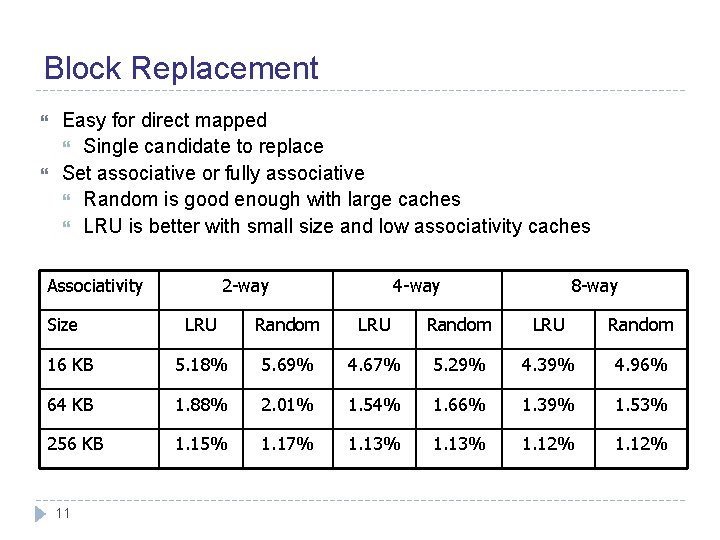

Block Replacement

- Easy for direct mapped
  - Single candidate to replace
- Set associative or fully associative
  - Random is good enough with large caches
  - LRU is better with small size and low associativity caches

| Size | 2-way LRU | 2-way Random | 4-way LRU | 4-way Random | 8-way LRU | 8-way Random |
|---|---|---|---|---|---|---|
| 16 KB | 5.18% | 5.69% | 4.67% | 5.29% | 4.39% | 4.96% |
| 64 KB | 1.88% | 2.01% | 1.54% | 1.66% | 1.39% | 1.53% |
| 256 KB | 1.15% | 1.17% | 1.13% | 1.13% | 1.12% | 1.12% |

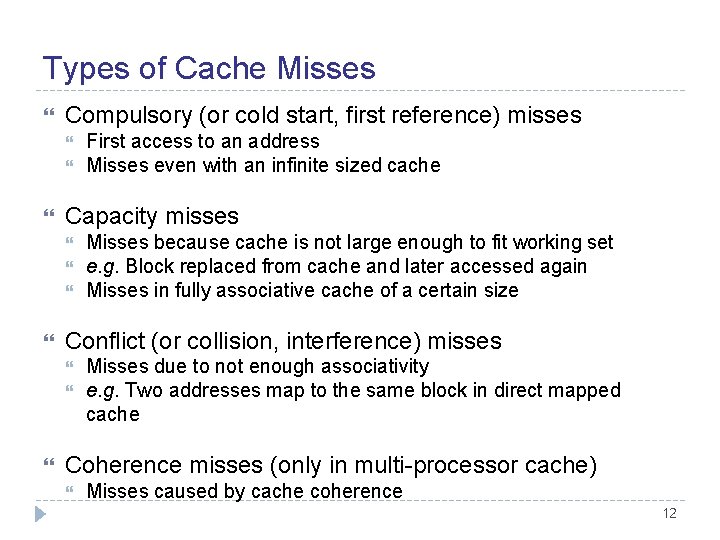

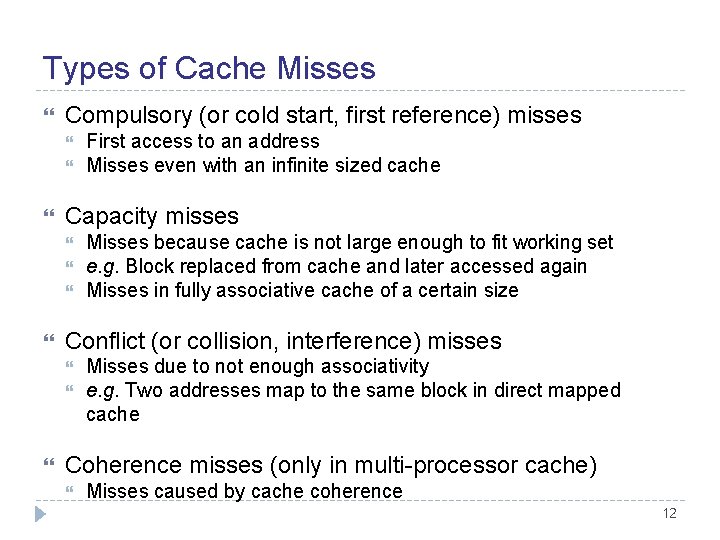

Types of Cache Misses

- Compulsory (or cold start, first reference) misses
  - First access to an address
  - Misses even with an infinite sized cache
- Capacity misses
  - Misses because cache is not large enough to fit working set
  - e.g. Block replaced from cache and later accessed again
  - Misses in fully associative cache of a certain size
- Conflict (or collision, interference) misses
  - Misses due to not enough associativity
  - e.g. Two addresses map to the same block in direct mapped cache
- Coherence misses (only in multi-processor cache)
  - Misses caused by cache coherence
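The first three definitions suggest a way to classify each miss in a trace: a miss is compulsory if the block was never referenced before, capacity if a fully associative cache of the same size would also miss, and conflict otherwise. The sketch below assumes LRU replacement and single-processor traces (no coherence misses); the class and function names and both traces are illustrative.

```python
from collections import OrderedDict


class LRUSet:
    """One cache set with LRU replacement."""

    def __init__(self, ways):
        self.ways = ways
        self.blocks = OrderedDict()          # ordered from LRU to MRU

    def access(self, block):
        """Return True on a hit; update recency and evict on a miss if full."""
        if block in self.blocks:
            self.blocks.move_to_end(block)
            return True
        if len(self.blocks) == self.ways:
            self.blocks.popitem(last=False)  # evict the least recently used
        self.blocks[block] = None
        return False


def classify_misses(trace, num_blocks, ways):
    num_sets = num_blocks // ways
    target = [LRUSet(ways) for _ in range(num_sets)]
    fully_assoc = LRUSet(num_blocks)         # same size, no placement limits
    seen = set()                             # models an infinite cache
    counts = {"compulsory": 0, "capacity": 0, "conflict": 0}
    for block in trace:
        hit = target[block % num_sets].access(block)
        fa_hit = fully_assoc.access(block)
        if not hit:
            if block not in seen:
                counts["compulsory"] += 1
            elif not fa_hit:
                counts["capacity"] += 1
            else:
                counts["conflict"] += 1
        seen.add(block)
    return counts


# Direct-mapped, four blocks: blocks 0 and 8 collide in the same cache block.
print(classify_misses([0, 8, 0, 6, 8], num_blocks=4, ways=1))
# Fully associative, four blocks: five distinct blocks do not fit.
print(classify_misses([0, 1, 2, 3, 4, 0], num_blocks=4, ways=4))
```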

Example: Cache Associativity

- Four one-word blocks in caches
  - The size of cache is 16 bytes (= 4 x 4 bytes)
- Compare three caches
  - A direct-mapped cache
  - A 2-way set associative cache
  - A fully associative cache
- Find the number of misses for the following accesses
  - Reference sequence (block addresses): 0, 8, 0, 6, 8

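Example: Direct Mapped Cache

- Block placement: direct mapping
  - 0 mod 4 = 0
  - 6 mod 4 = 2
  - 8 mod 4 = 0
- Cache simulation

| Access | Hit/miss | block# 0 | block# 1 | block# 2 | block# 3 |
|---|---|---|---|---|---|
| 0 | miss | M[0] | | | |
| 8 | miss | M[8] | | | |
| 0 | miss | M[0] | | | |
| 6 | miss | M[0] | | M[6] | |
| 8 | miss | M[8] | | M[6] | |

- # of cache misses = 5 (3 compulsory and 2 conflict misses)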

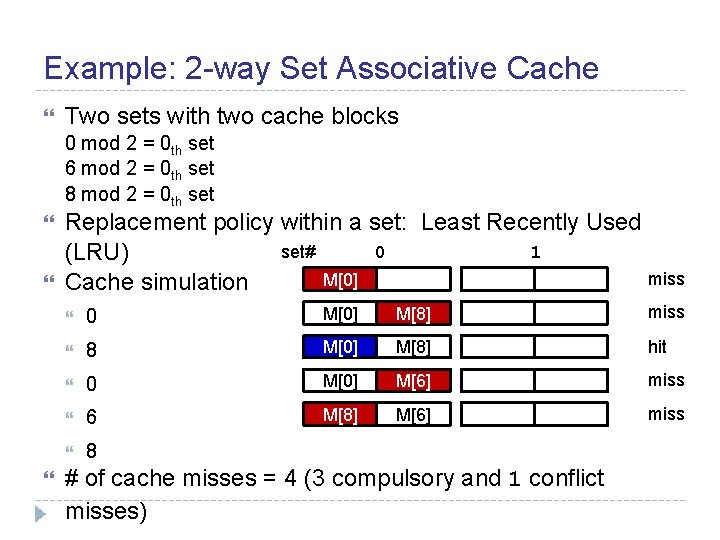

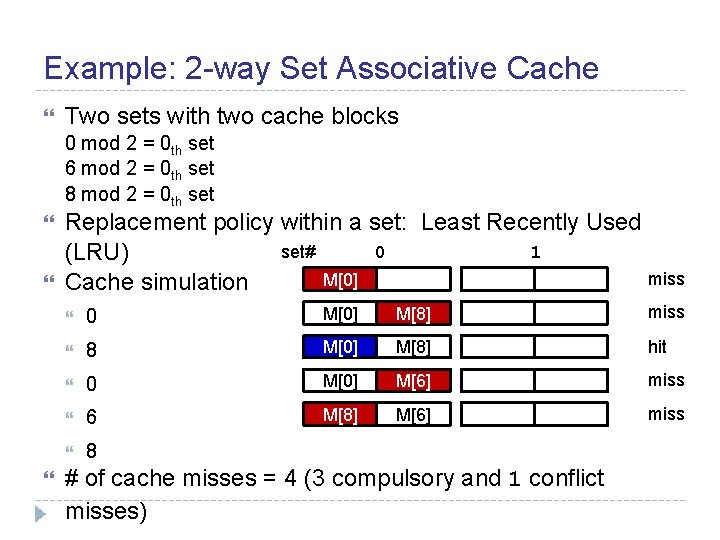

Example: 2-way Set Associative Cache

- Two sets with two cache blocks
  - 0 mod 2 = 0th set
  - 6 mod 2 = 0th set
  - 8 mod 2 = 0th set
- Replacement policy within a set: Least Recently Used (LRU)
- Cache simulation

| Access | Hit/miss | set# 0 | set# 1 |
|---|---|---|---|
| 0 | miss | M[0] | |
| 8 | miss | M[0], M[8] | |
| 0 | hit | M[0], M[8] | |
| 6 | miss | M[0], M[6] | |
| 8 | miss | M[8], M[6] | |

- # of cache misses = 4 (3 compulsory and 1 conflict miss)

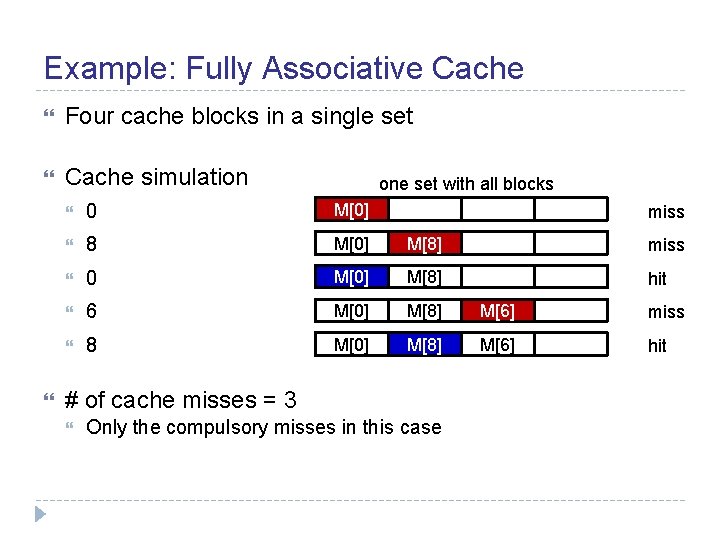

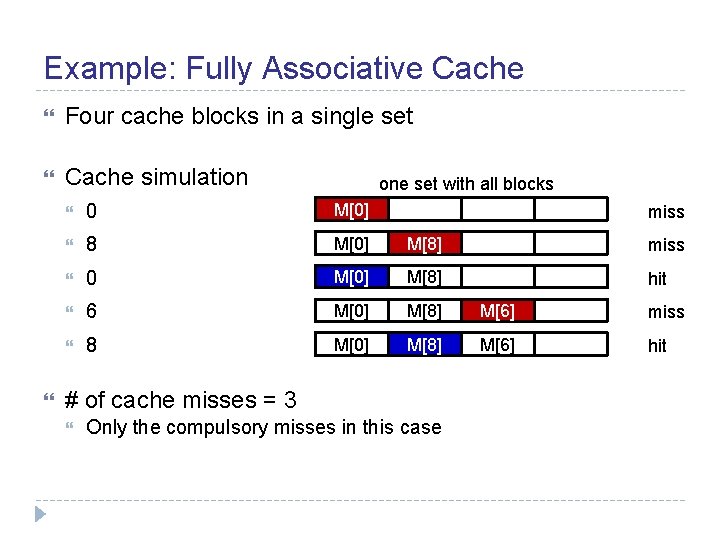

Example: Fully Associative Cache

- Four cache blocks in a single set
- Cache simulation

| Access | Hit/miss | One set with all blocks |
|---|---|---|
| 0 | miss | M[0] |
| 8 | miss | M[0], M[8] |
| 0 | hit | M[0], M[8] |
| 6 | miss | M[0], M[8], M[6] |
| 8 | hit | M[0], M[8], M[6] |

- # of cache misses = 3
  - Only the compulsory misses in this case
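The three hand simulations can be replayed with one small routine by varying only the associativity. This is a sketch assuming LRU replacement in every set and the four-block cache of the example; the `simulate` function is hypothetical.

```python
def simulate(trace, num_blocks, ways):
    """Replay block addresses through an LRU cache; return (outcomes, misses)."""
    num_sets = num_blocks // ways
    sets = [[] for _ in range(num_sets)]   # each list is ordered LRU -> MRU
    outcomes, misses = [], 0
    for block in trace:
        resident = sets[block % num_sets]
        if block in resident:
            outcomes.append("hit")
            resident.remove(block)         # re-appended below as most recent
        else:
            outcomes.append("miss")
            misses += 1
            if len(resident) == ways:
                resident.pop(0)            # evict the least recently used block
        resident.append(block)
    return outcomes, misses


trace = [0, 8, 0, 6, 8]
for name, ways in [("direct mapped", 1), ("2-way set associative", 2),
                   ("fully associative", 4)]:
    outcomes, misses = simulate(trace, num_blocks=4, ways=ways)
    print(f"{name}: {outcomes} -> {misses} misses")
```

The miss counts come out as 5, 4, and 3, matching the three examples.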

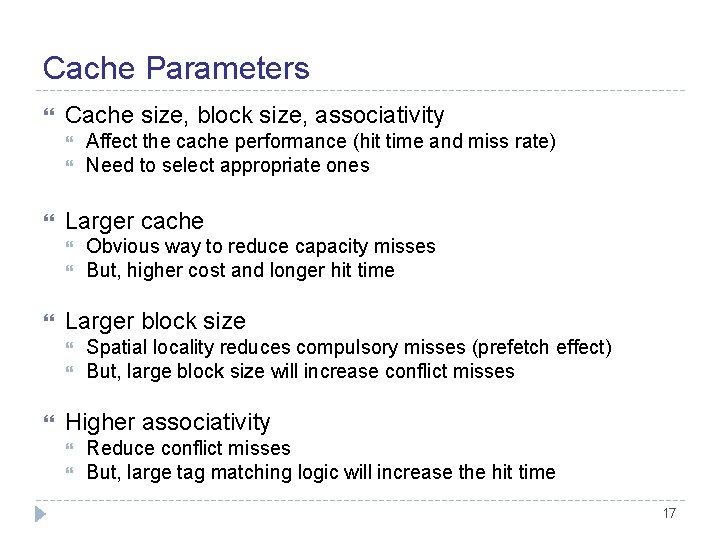

Cache Parameters

- Cache size, block size, associativity
  - Affect the cache performance (hit time and miss rate)
  - Need to select appropriate ones
- Larger cache
  - Obvious way to reduce capacity misses
  - But, higher cost and longer hit time
- Larger block size
  - Spatial locality reduces compulsory misses (prefetch effect)
  - But, large block size will increase conflict misses
- Higher associativity
  - Reduce conflict misses
  - But, large tag matching logic will increase the hit time

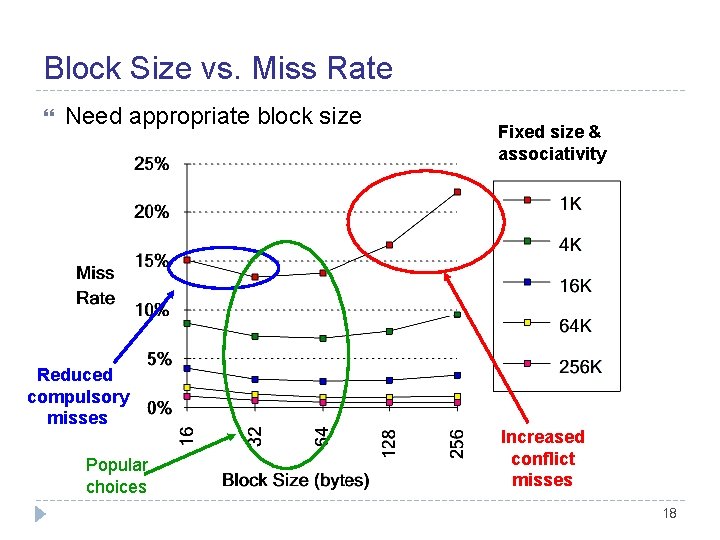

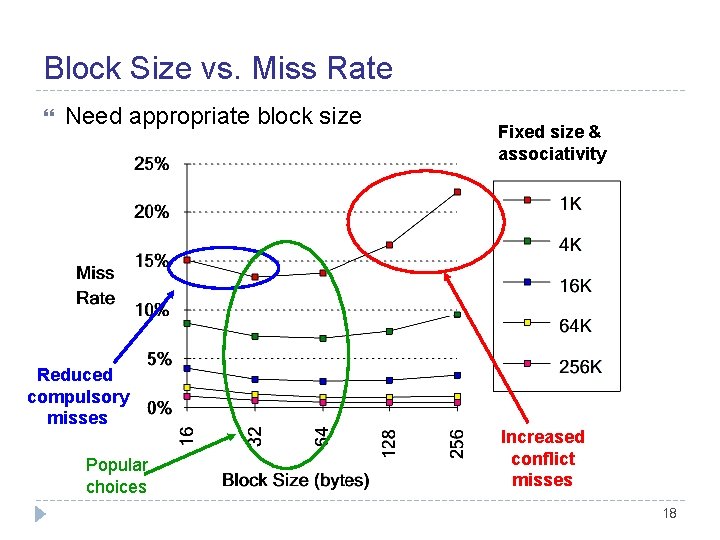

Block Size vs. Miss Rate

- Need appropriate block size
- Figure annotations (fixed size & associativity): reduced compulsory misses, increased conflict misses, popular choices

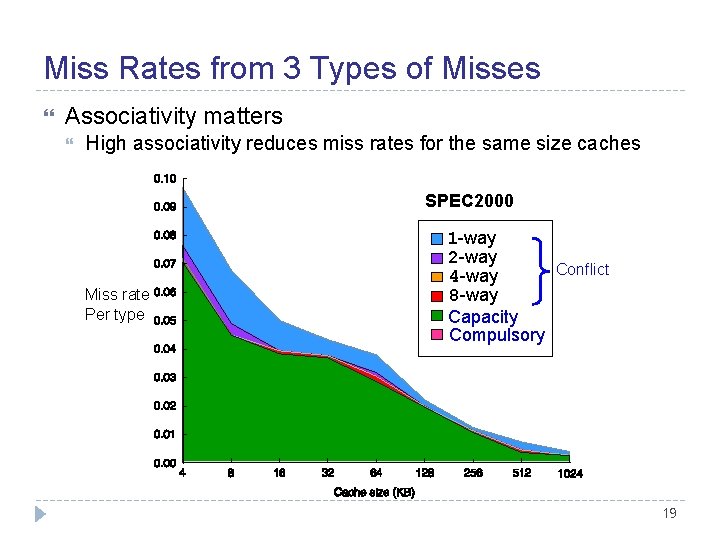

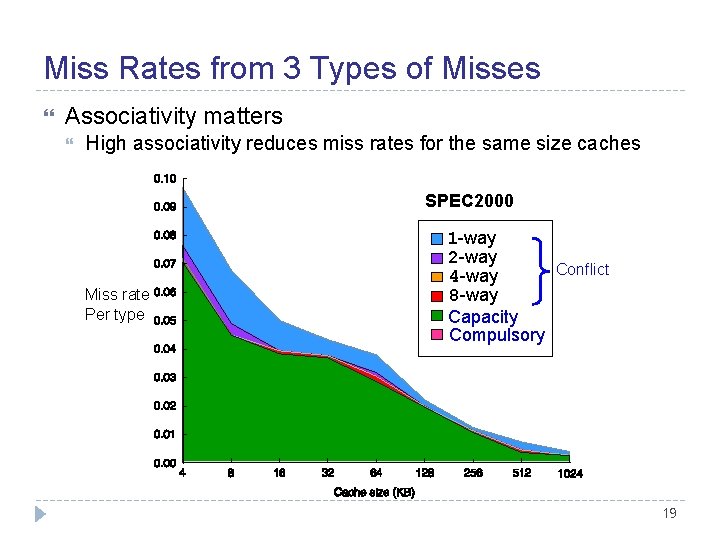

Miss Rates from 3 Types of Misses

- Associativity matters
  - High associativity reduces miss rates for the same size caches
- Figure (SPEC 2000): miss rate per type (compulsory, capacity, conflict) for 1-way, 2-way, 4-way, and 8-way caches

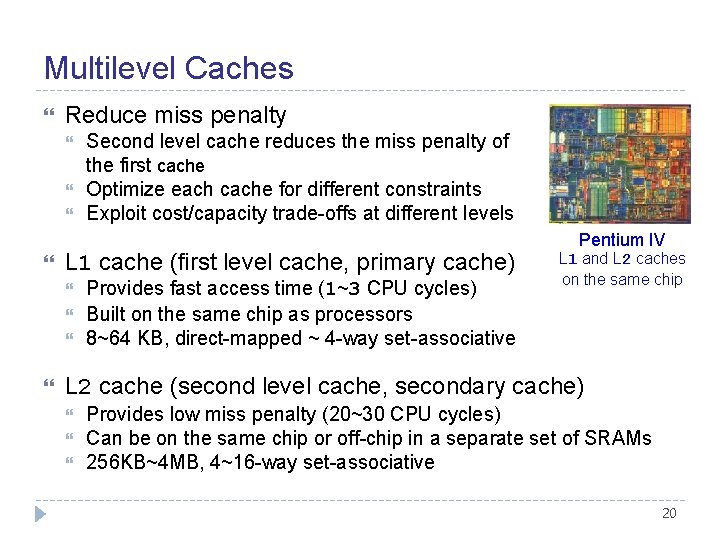

Multilevel Caches

- Reduce miss penalty
  - Second level cache reduces the miss penalty of the first cache
  - Optimize each cache for different constraints
  - Exploit cost/capacity trade-offs at different levels
- L1 cache (first level cache, primary cache)
  - Provides fast access time (1~3 CPU cycles)
  - Built on the same chip as processors
  - 8~64 KB, direct-mapped ~ 4-way set-associative
- L2 cache (second level cache, secondary cache)
  - Provides low miss penalty (20~30 CPU cycles)
  - Can be on the same chip or off-chip in a separate set of SRAMs
  - 256 KB~4 MB, 4~16-way set-associative
- Pentium IV: L1 and L2 caches on the same chip
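The effect of a second-level cache on the average access time can be sketched by treating the L1 miss penalty as the L2 access time plus the L2 misses that go to main memory. This two-level form is a standard extension of the single-level formula, not something stated on the slide. The miss rates and the 100-cycle memory penalty are assumptions; the 1-cycle L1 hit and 20-cycle L2 access fall within the ranges given above.

```python
def amat_two_level(l1_hit_time, l1_miss_rate, l2_hit_time,
                   l2_local_miss_rate, memory_penalty):
    """Average access time (cycles) with an L2 cache behind L1."""
    # An L1 miss costs an L2 access, plus a memory access if L2 also misses.
    l1_miss_penalty = l2_hit_time + l2_local_miss_rate * memory_penalty
    return l1_hit_time + l1_miss_rate * l1_miss_penalty


# Hypothetical parameters: 4% L1 miss rate, 25% of L1 misses also miss in L2.
without_l2 = 1 + 0.04 * 100
with_l2 = amat_two_level(l1_hit_time=1, l1_miss_rate=0.04, l2_hit_time=20,
                         l2_local_miss_rate=0.25, memory_penalty=100)

print(f"without L2: {without_l2:.1f} cycles")
print(f"with L2:    {with_l2:.1f} cycles")
```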

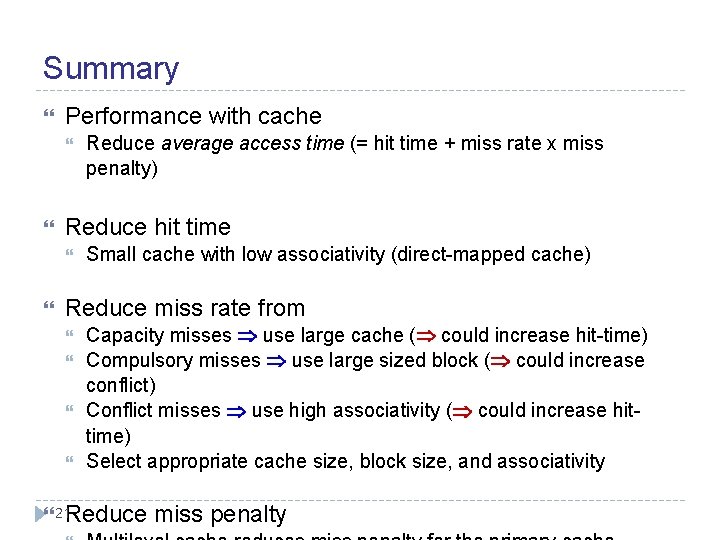

Summary

- Performance with cache
  - Reduce average access time (= hit time + miss rate x miss penalty)
- Reduce hit time
  - Small cache with low associativity (direct-mapped cache)
- Reduce miss rate from
  - Capacity misses: use a large cache (could increase hit time)
  - Compulsory misses: use a large block size (could increase conflict misses)
  - Conflict misses: use high associativity (could increase hit time)
  - Select appropriate cache size, block size, and associativity
- Reduce miss penalty