Care and feeding of a gatekeeper

- Slides: 20

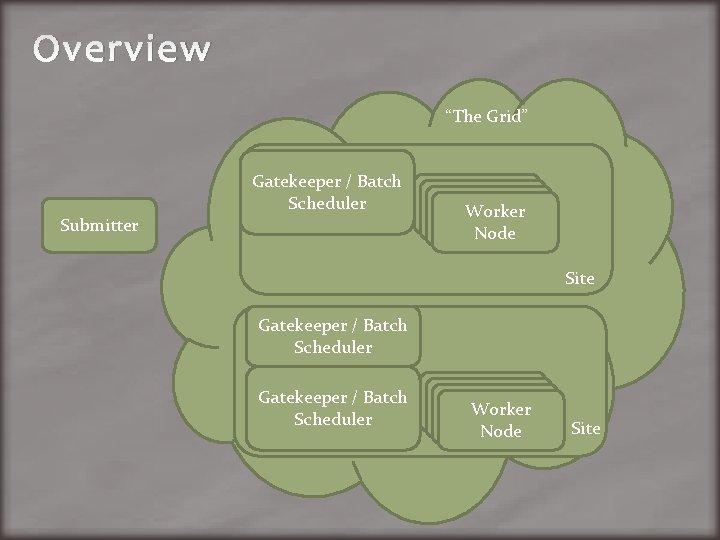

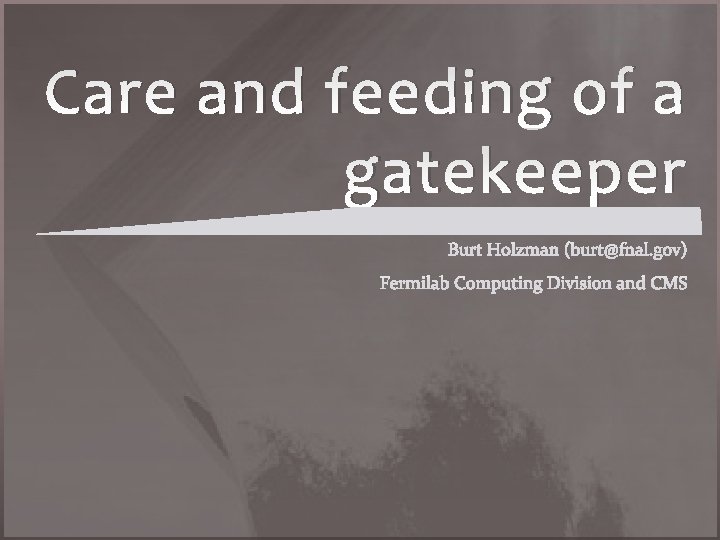

Overview: “The Grid” (diagram: a submitter sends work to several sites; at each site a gatekeeper / batch scheduler sits in front of the worker nodes)

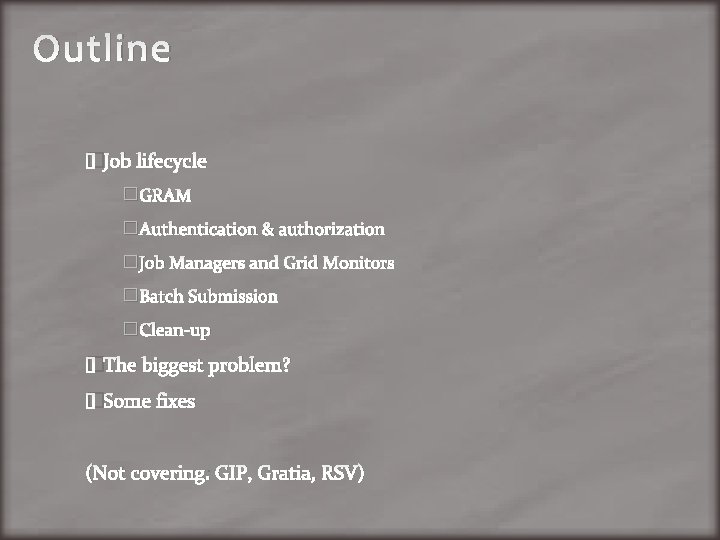

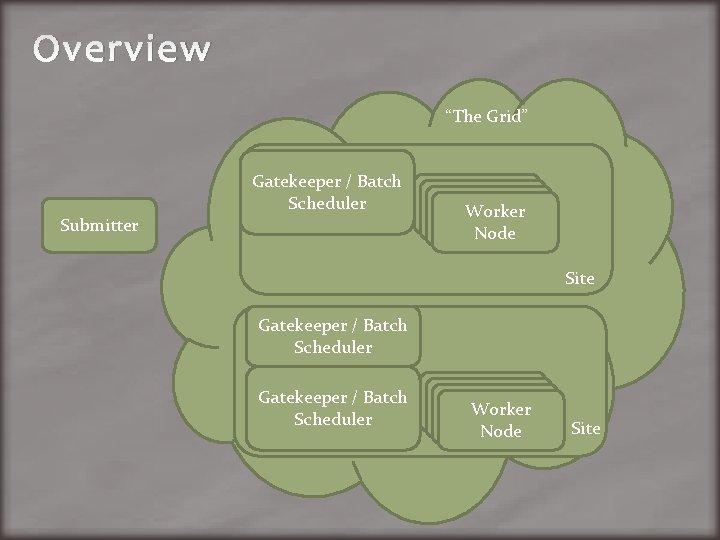

Outline

- Job lifecycle
- GRAM
- Authentication & authorization
- Job Managers and Grid Monitors
- Batch Submission
- Clean-up
- The biggest problem?
- Some fixes

(Not covering: GIP, Gratia, RSV)

GRAM

- Globus Resource Allocation Manager
- GRAM listens on your gatekeeper
- Speaks Globus RSL (Resource Specification Language)
- Comes in three flavors:
  - GRAM 2 (a.k.a. “pre-WS GRAM”)
    - I’ll talk about this quite a bit
  - GRAM 4 (a.k.a. “WS GRAM”)
    - Big design changes; never widely adopted
    - Deprecated; mostly in use by TeraGrid
  - GRAM 5
    - Built on GRAM 2

A note on plumbing

"No, no. Lemme think," Harry interrupted himself. "It's more like you're hired as a plumber to work in an old house full of ancient, leaky pipes laid out by some long-gone plumbers who were even weirder than you are. Most of the time you spend scratching your head and thinking: Why the !@#$ did they do that?"

"Why the !@#$ did they?" Ethan said. Which appeared to amuse Harry to no end.

"Oh, you know," he went on, laughing hoarsely, "they didn't understand whatever the !@#$ had come before them, and they just had to get something working in some ridiculous time. Hey, software is just a !@#$load of pipe fitting you do to get something the hell working. Me," he said, holding up his chewed, nail-torn hands as if for evidence, "I'm just a plumber."

Ellen Ullman, “The Bug”

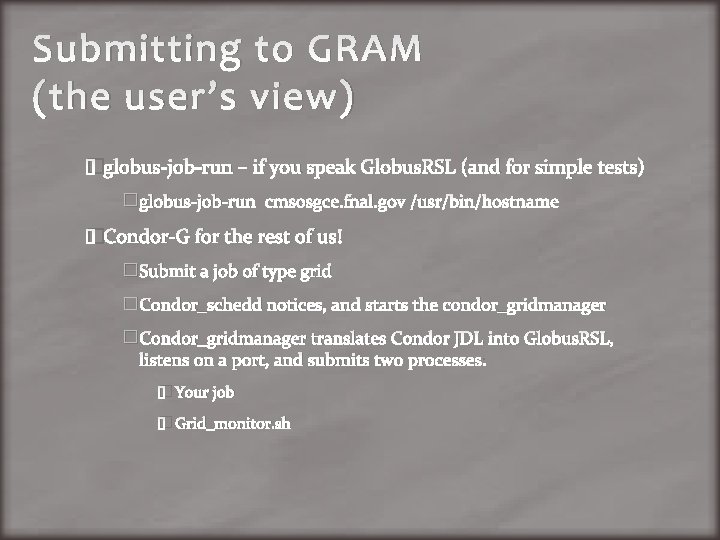

Submitting to GRAM (the user’s view)

- globus-job-run, if you speak Globus RSL (and for simple tests):
  - `globus-job-run cmsosgce.fnal.gov /usr/bin/hostname`
- Condor-G for the rest of us!
  - Submit a job of type grid
  - The condor_schedd notices, and starts the condor_gridmanager
  - The condor_gridmanager translates the Condor JDL into Globus RSL, listens on a port, and submits two processes:
    - Your job
    - `grid_monitor.sh`
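For the Condor-G route, a grid-universe submit description might look like the sketch below. The helper name `write_gram2_submit` and the output file names are hypothetical; `universe`, `grid_resource` (with the `gt2` type for GRAM 2), `transfer_executable`, `output`, `error`, `log` and `queue` are standard Condor submit commands, and the `jobmanager-condor` contact assumes the site's local batch system is Condor.

```sh
#!/bin/sh
# Hypothetical helper: write a minimal Condor-G submit description that
# targets a GRAM 2 gatekeeper. Assumes the site's batch system is Condor
# (jobmanager-condor) and that the executable already exists on the workers.
write_gram2_submit() (
    gatekeeper=$1   # e.g. cmsosgce.fnal.gov
    executable=$2   # path to the program on the worker node
    out=$3          # submit file to create
    cat > "$out" <<EOF
universe            = grid
grid_resource       = gt2 ${gatekeeper}/jobmanager-condor
executable          = ${executable}
transfer_executable = false
output              = job.out
error               = job.err
log                 = job.log
queue
EOF
)
```

Usage: `write_gram2_submit cmsosgce.fnal.gov /usr/bin/hostname hostname.sub`, then `condor_submit hostname.sub`; from there the condor_schedd and condor_gridmanager take over as described above.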

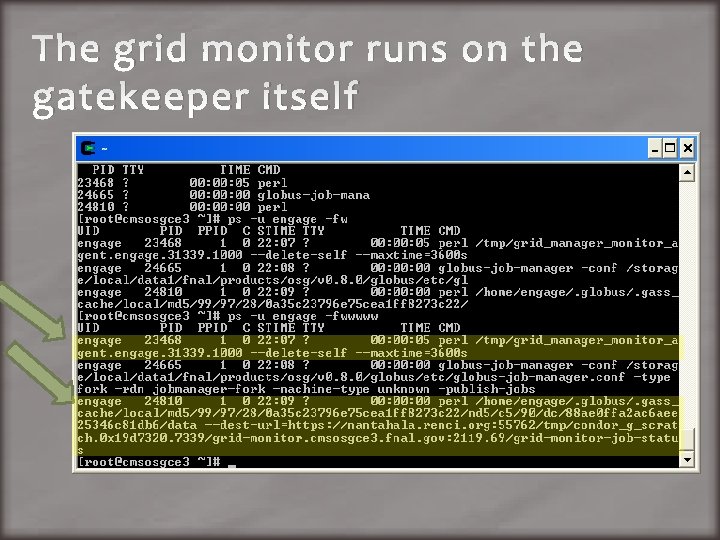

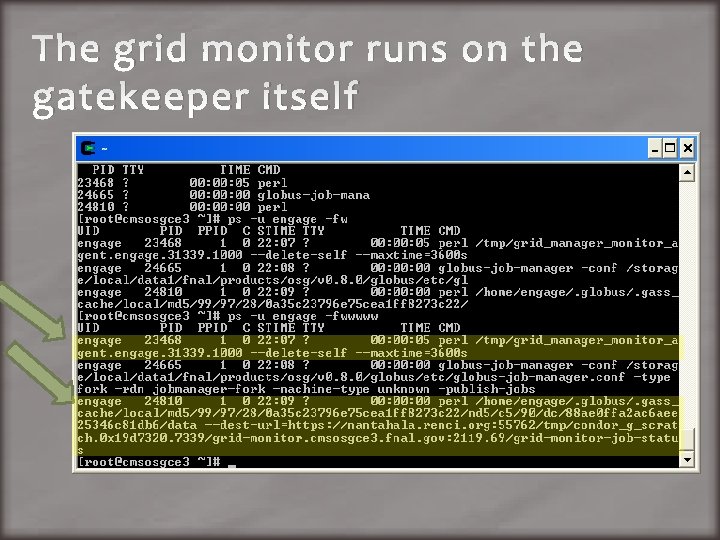

The grid monitor runs on the gatekeeper itself

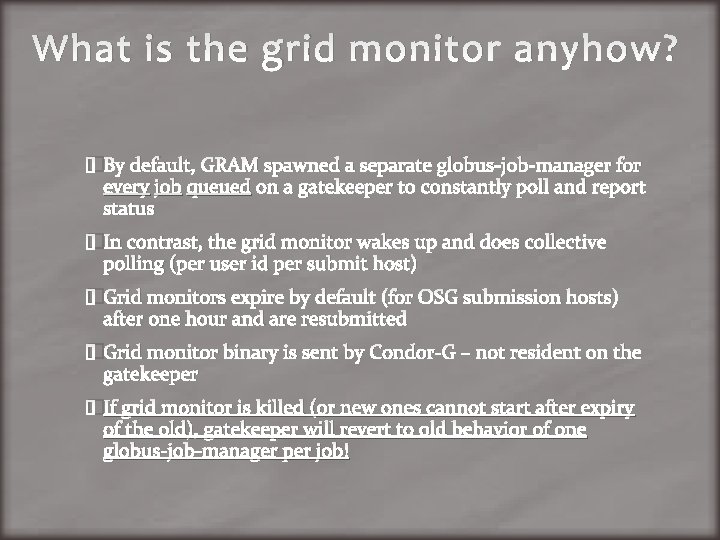

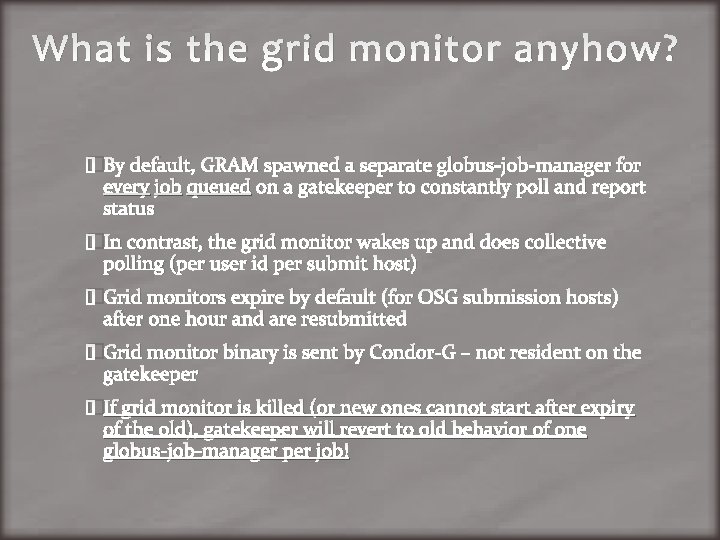

What is the grid monitor anyhow?

- By default, GRAM spawns a separate globus-job-manager for every job queued on a gatekeeper, which constantly polls and reports status
- In contrast, the grid monitor wakes up and polls collectively (per user ID per submit host)
- Grid monitors expire by default (for OSG submission hosts) after one hour and are resubmitted
- The grid monitor binary is sent by Condor-G; it is not resident on the gatekeeper
- If the grid monitor is killed (or new ones cannot start after the old one expires), the gatekeeper reverts to the old behavior of one globus-job-manager per job!
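To spot a gatekeeper that has fallen back to one job manager per job, it can help to count both kinds of process per user. The helper below is a hypothetical sketch (`summarize_gram_procs` is not part of VDT or Condor) that reads `ps -eo user=,args=` output; matching on the strings `globus-job-manager` and `grid_monitor` is an assumption about how those processes appear in the process table on your gatekeeper.

```sh
#!/bin/sh
# Hypothetical helper: per user, count globus-job-manager and grid_monitor
# processes. Reads "USER ARGS..." lines on stdin, e.g. from: ps -eo user=,args=
summarize_gram_procs() {
    awk '
        $2 ~ /awk$/          { next }   # skip this awk process itself
        /globus-job-manager/ { jm[$1]++; seen[$1] = 1 }
        /grid_monitor/       { gm[$1]++; seen[$1] = 1 }
        END {
            for (u in seen)
                printf "%s jobmanagers=%d grid_monitors=%d\n", u, jm[u], gm[u]
        }
    ' | LC_ALL=C sort
}
```

Usage: `ps -eo user=,args= | summarize_gram_procs`. A user with many job managers and zero grid monitors matches the fallback behavior described above.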

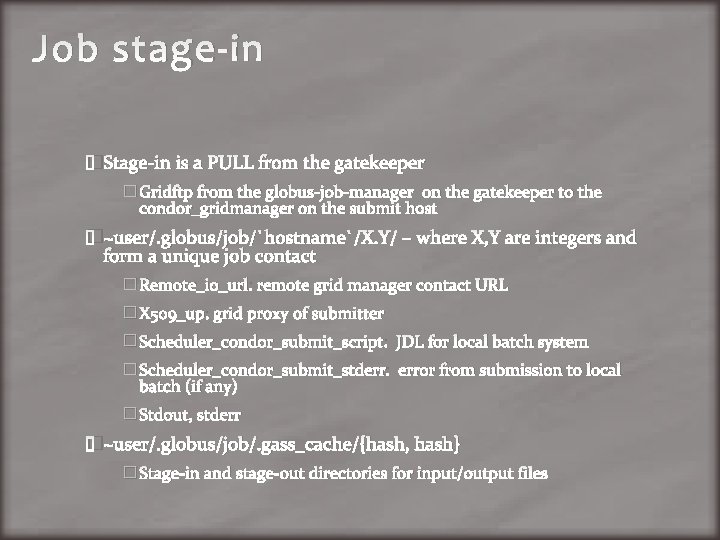

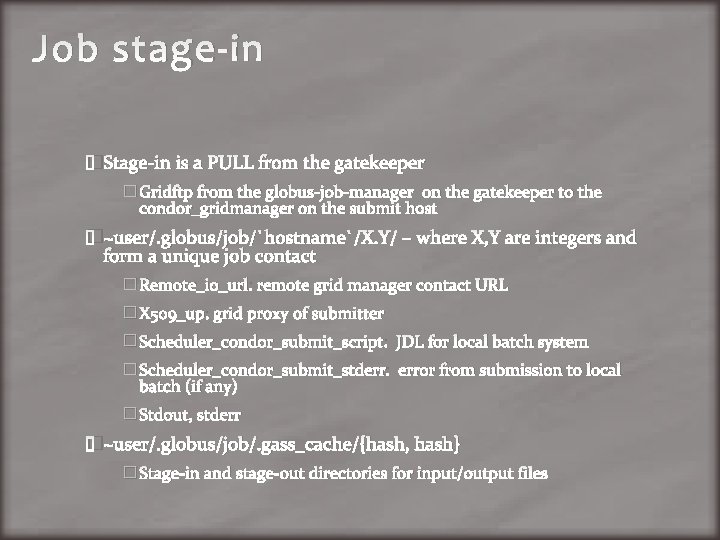

Job stage-in

- Stage-in is a PULL from the gatekeeper
  - GridFTP from the globus-job-manager on the gatekeeper to the condor_gridmanager on the submit host
- ~user/.globus/job/`hostname`/X.Y/, where X and Y are integers that together form a unique job contact:
  - `remote_io_url`: remote grid manager contact URL
  - `x509_up`: grid proxy of the submitter
  - `scheduler_condor_submit_script`: JDL for the local batch system
  - `scheduler_condor_submit_stderr`: errors from submission to the local batch system (if any)
  - `stdout`, `stderr`
- `~user/.globus/job/.gass_cache/{hash, hash}`
  - Stage-in and stage-out directories for input/output files
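When debugging a stuck job, listing the per-job directories under ~user/.globus/job/<hostname>/ is often the first step. The sketch below does that; `list_gram_job_dirs` is a hypothetical name, and it assumes the job contact directories are named X.Y with integer X and Y, as described on the slide.

```sh
#!/bin/sh
# Hypothetical helper: list GRAM job contact directories (X.Y) for one user
# on this gatekeeper, with the files each one holds.
# Arguments: home directory, optional host name (defaults to `hostname`).
list_gram_job_dirs() (
    home=$1
    host=${2:-$(hostname)}
    base="$home/.globus/job/$host"
    [ -d "$base" ] || exit 0
    for dir in "$base"/*.*; do
        [ -d "$dir" ] || continue
        # Keep only names of the form <integer>.<integer>.
        case ${dir##*/} in
            *[!0-9.]*|.*|*.|*.*.*) continue ;;
        esac
        printf '%s:' "${dir##*/}"
        for f in "$dir"/*; do
            [ -e "$f" ] && printf ' %s' "${f##*/}"
        done
        printf '\n'
    done
)
```

Usage: `list_gram_job_dirs ~uscms01` prints one line per job, for example the contact followed by `stdout` and `x509_up`.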

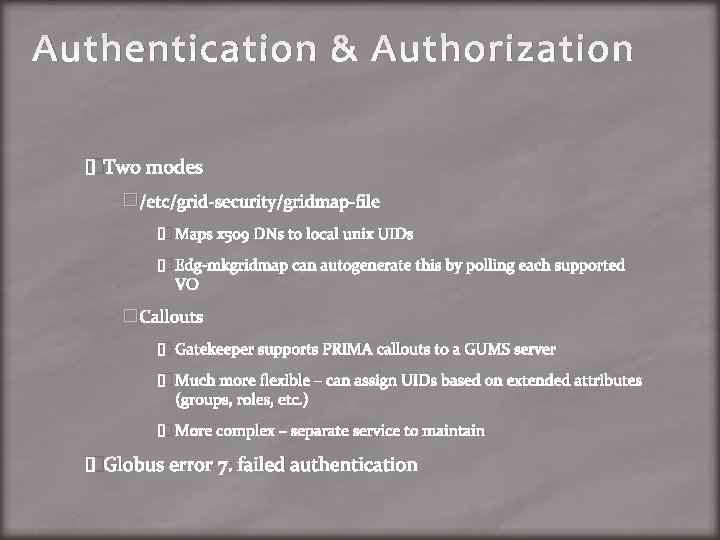

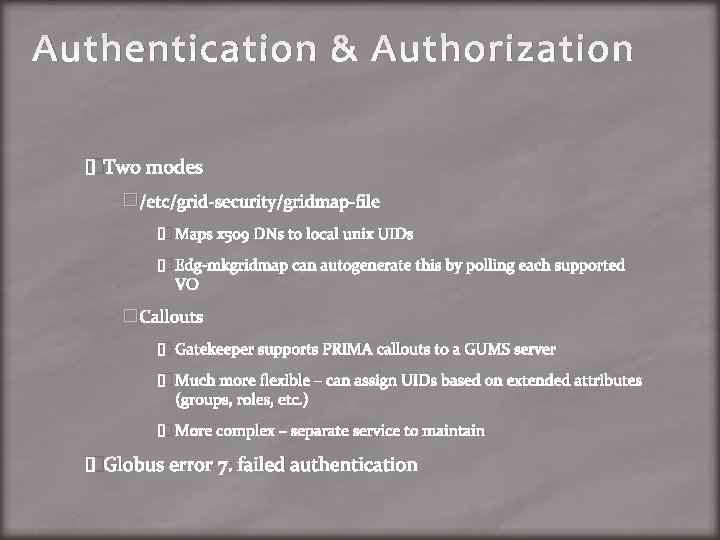

Authentication & Authorization

- Two modes:
  - `/etc/grid-security/grid-mapfile`
    - Maps X.509 DNs to local Unix UIDs
    - edg-mkgridmap can autogenerate this by polling each supported VO
  - Callouts
    - The gatekeeper supports PRIMA callouts to a GUMS server
    - Much more flexible: can assign UIDs based on extended attributes (groups, roles, etc.)
    - More complex: a separate service to maintain
- Globus error 7: failed authentication
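In the gridmap-file mode, checking which account a given DN maps to is a few lines of awk. A minimal sketch, assuming the usual grid-mapfile line format of a double-quoted DN followed by the local account (Globus's default location is /etc/grid-security/grid-mapfile); `gridmap_lookup` is a hypothetical helper and does not cover the GUMS/PRIMA callout mode.

```sh
#!/bin/sh
# Hypothetical helper: print the local account mapped to an X.509 DN.
# Each gridmap line looks like:  "<DN>" <account>
# Returns 1 if the DN is not present.
gridmap_lookup() {
    awk -F'"' -v dn="$2" '
        $2 == dn {
            acct = $3
            sub(/^[ \t]+/, "", acct)   # strip leading whitespace
            sub(/[ \t]+$/, "", acct)   # and trailing whitespace
            print acct
            found = 1
            exit
        }
        END { exit(found ? 0 : 1) }
    ' "$1"
}
```

Usage: `gridmap_lookup /etc/grid-security/grid-mapfile "/DC=org/DC=example/CN=Jane Doe"`. An empty result with exit status 1 is a likely cause of Globus error 7.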

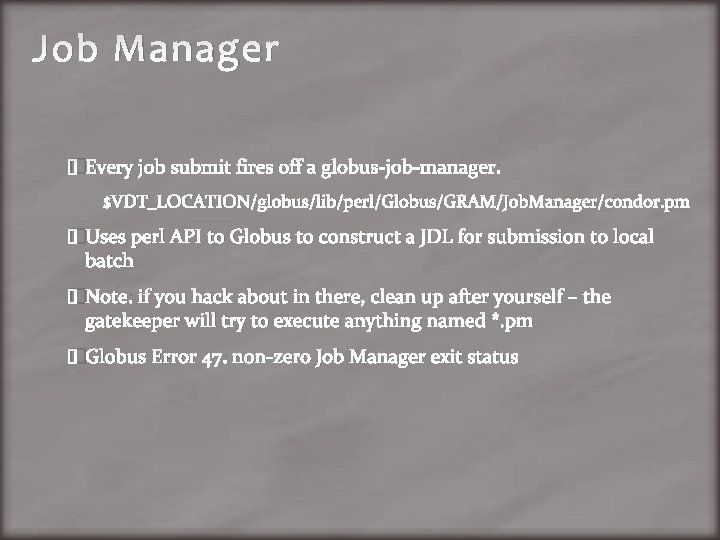

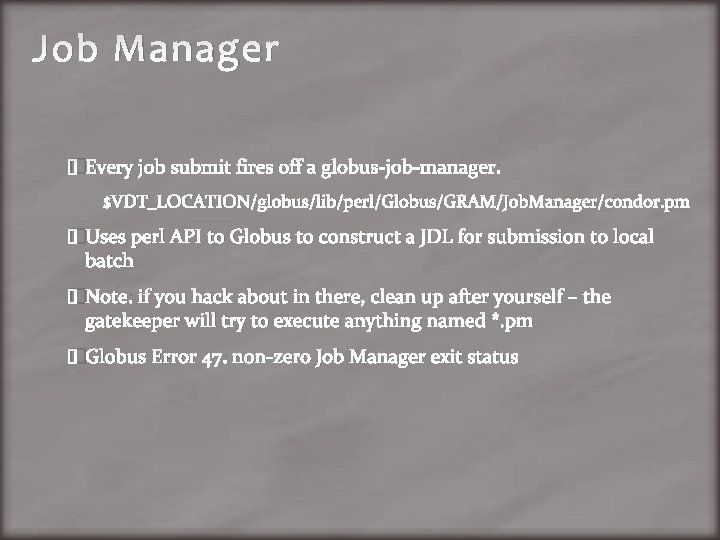

Job Manager

- Every job submission fires off a globus-job-manager: `$VDT_LOCATION/globus/lib/perl/Globus/GRAM/JobManager/condor.pm`
  - Uses the Perl API to Globus to construct a JDL for submission to the local batch system
  - Note: if you hack about in there, clean up after yourself; the gatekeeper will try to execute anything named `*.pm`
- Globus Error 47: non-zero Job Manager exit status
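Because stray `.pm` files in the job manager directory can be picked up, a quick audit against the modules you actually expect is cheap insurance. A sketch: `check_jobmanager_modules` and the allow-list in the usage line are hypothetical, so list whichever job managers your CE really provides.

```sh
#!/bin/sh
# Hypothetical helper: report every *.pm file in DIR that is not named in the
# allow-list given as the remaining arguments. Exits 1 if any are found.
check_jobmanager_modules() (
    dir=$1; shift
    status=0
    for f in "$dir"/*.pm; do
        [ -e "$f" ] || continue
        name=${f##*/}
        known=no
        for ok in "$@"; do
            [ "$name" = "$ok" ] && known=yes
        done
        if [ "$known" = no ]; then
            echo "unexpected module: $f"
            status=1
        fi
    done
    exit "$status"
)
```

Usage: `check_jobmanager_modules "$VDT_LOCATION/globus/lib/perl/Globus/GRAM/JobManager" condor.pm fork.pm`. Backups renamed to something like `condor.pm.orig` do not match `*.pm` and are not reported.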

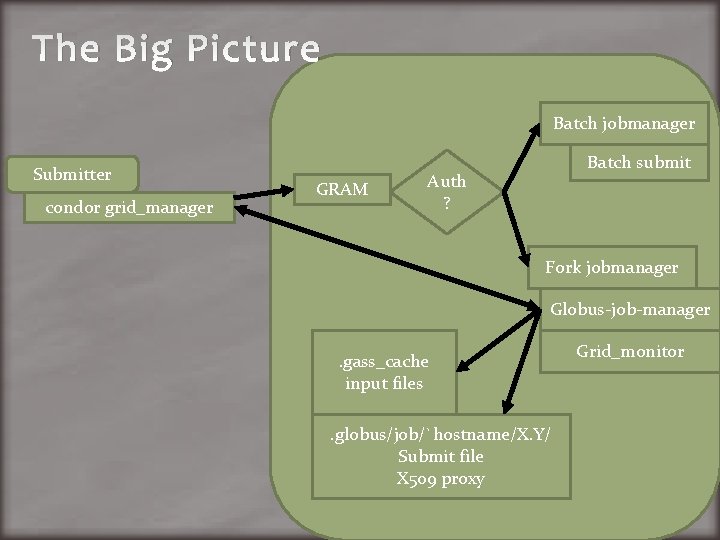

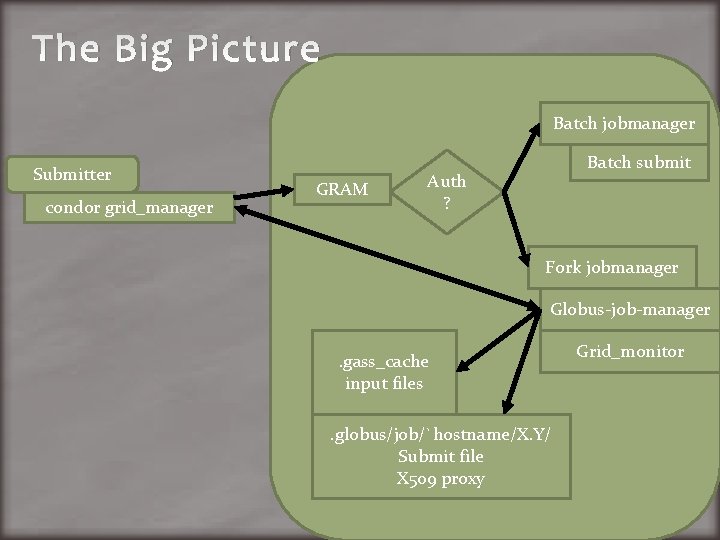

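The Big Picture (diagram; components shown: the submitter's condor grid_manager; GRAM on the gatekeeper, with an authorization step ("Auth?"); the fork jobmanager running the Grid_monitor; the batch jobmanager doing the batch submit; the globus-job-manager; input files in .gass_cache; and .globus/job/`hostname`/X.Y/ holding the submit file and X509 proxy)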

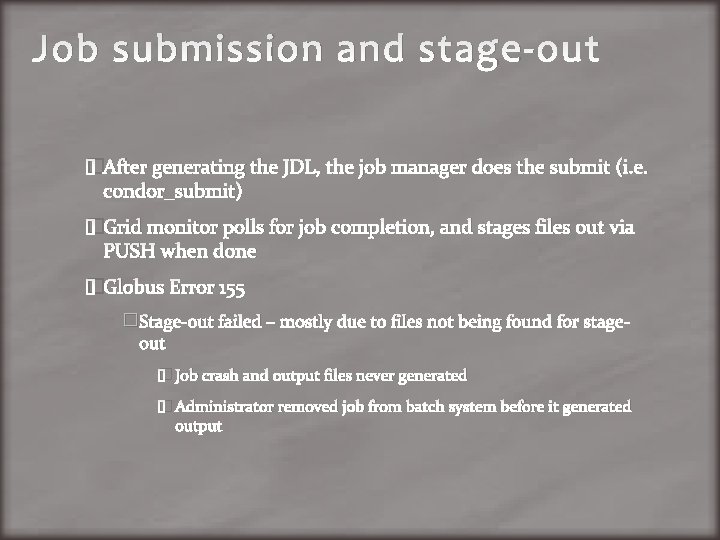

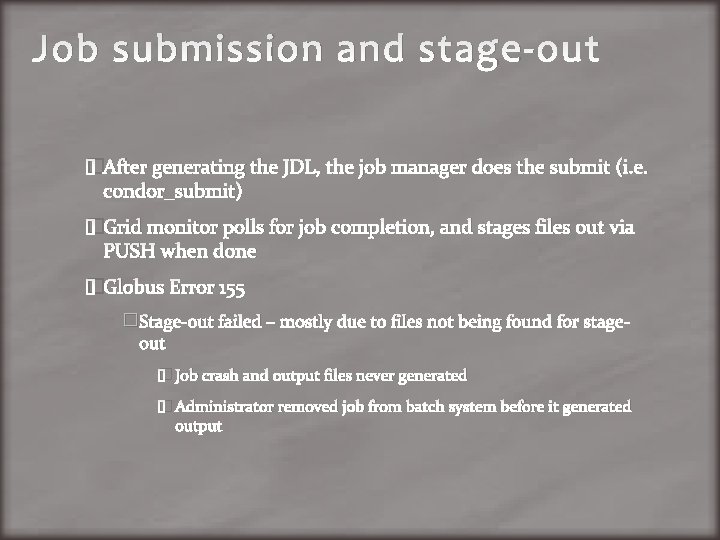

Job submission and stage-out

- After generating the JDL, the job manager does the submit (i.e. condor_submit)
- The grid monitor polls for job completion, and stages files out via PUSH when done
- Globus Error 155: stage-out failed, mostly due to files not being found for stage-out
  - The job crashed and its output files were never generated
  - An administrator removed the job from the batch system before it generated output

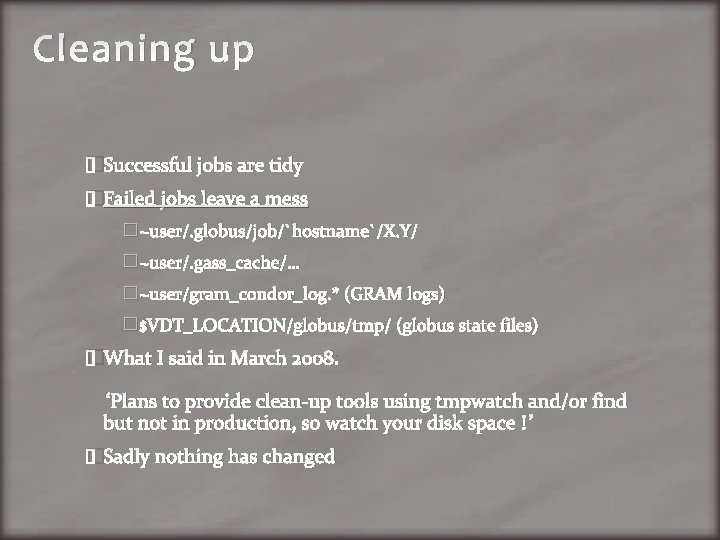

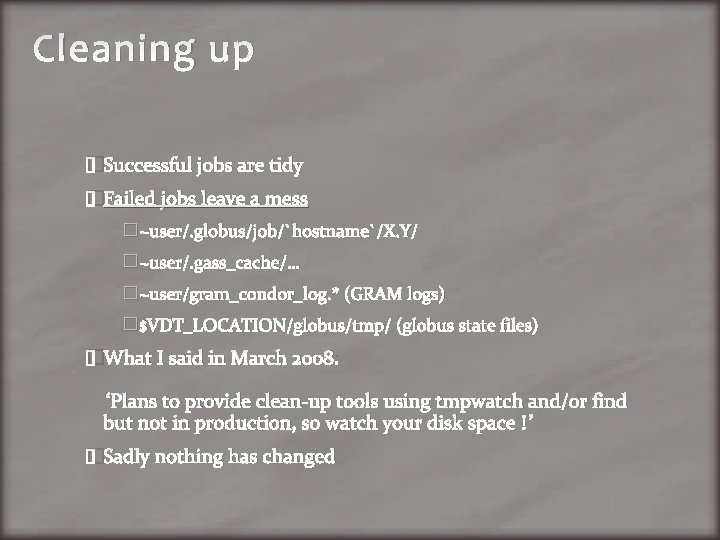

Cleaning up

- Successful jobs are tidy
- Failed jobs leave a mess:
  - ~user/.globus/job/`hostname`/X.Y/
  - `~user/.gass_cache/…`
  - `~user/gram_condor_log.*` (GRAM logs)
  - `$VDT_LOCATION/globus/tmp/` (Globus state files)
- What I said in March 2008: “Plans to provide clean-up tools using tmpwatch and/or find but not in production, so watch your disk space!”
- Sadly, nothing has changed
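Before deleting anything, a dry run that only lists old leftovers shows how much cruft has piled up. A sketch, assuming the per-user locations listed above and an age threshold in days chosen by the admin; `report_gram_cruft` is hypothetical, and `-maxdepth` is a GNU/BSD find extension rather than POSIX.

```sh
#!/bin/sh
# Hypothetical dry-run helper: print (never delete) GRAM leftovers older than
# DAYS days in one user's home directory.
report_gram_cruft() (
    home=$1
    days=$2
    # Per-job state directories and the GASS cache.
    for path in "$home/.globus/job" "$home/.gass_cache"; do
        [ -d "$path" ] && find "$path" -type f -mtime +"$days" -print
    done
    # GRAM logs left at the top of the home directory.
    find "$home" -maxdepth 1 -type f -name 'gram_condor_log.*' -mtime +"$days" -print
)
```

Usage: `report_gram_cruft ~uscms01 30 | wc -l` gives a rough count per user; once the list looks right, the same paths can be fed to a tmpwatch or `find -delete` job.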

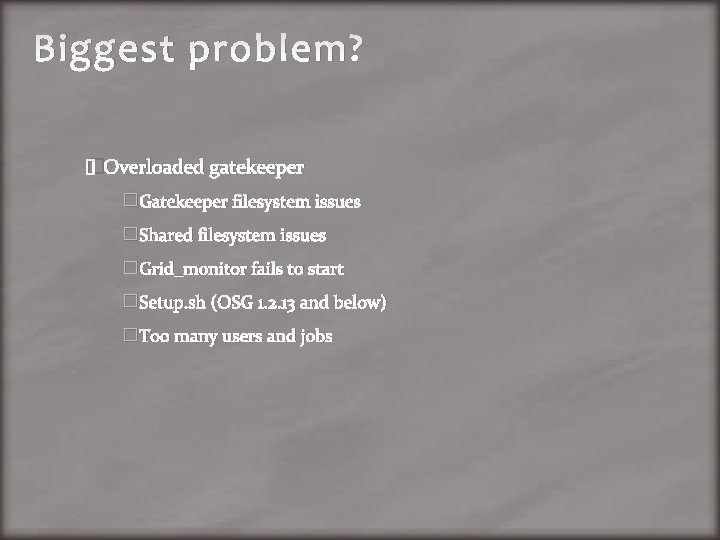

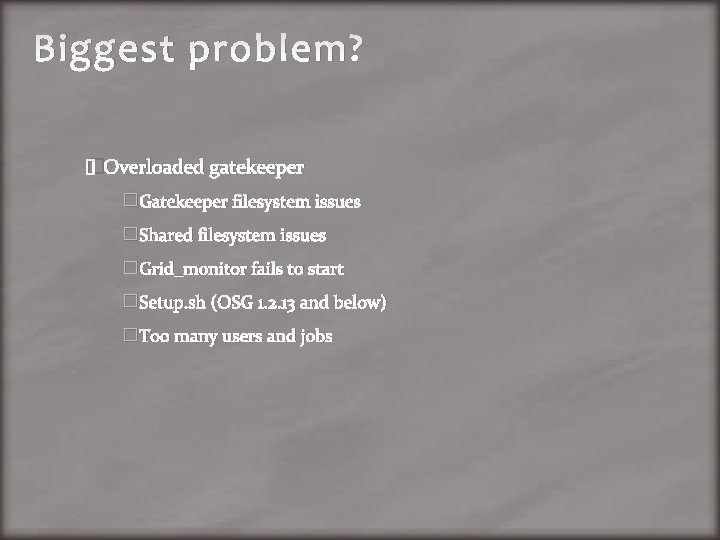

Biggest problem?

- Overloaded gatekeeper
  - Gatekeeper filesystem issues
  - Shared filesystem issues
  - Grid_monitor fails to start
  - setup.sh (OSG 1.2.13 and below)
  - Too many users and jobs

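Gatekeeper filesystem issues

- Massive cruft accumulation
- Only failed jobs leave cruft, but it sticks around forever
- Write your own tmpwatch script:

```sh
#!/bin/sh
# Remove files (and empty directories) not accessed for 120 hours from every
# home directory under /home. Note: this removes any stale file there, not
# only GRAM leftovers.
for i in $(awk -F: '$6 ~ /^\/home\// { print $6 }' /etc/passwd); do
    /usr/bin/tmpwatch 120 "$i"
done
# Clean the Globus state files too; -d leaves directories in place.
/usr/bin/tmpwatch -d 120 /opt/osg/globus/tmp
```

- VDT should provide a standard one!
- Slow disk
  - Move colocated services to other machines
  - Faster spindles (SSDs?)
  - Ramdisks for some services (condor spool?)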

Shared filesystem issues

- NFS is notoriously inefficient at small writes
- Grid gatekeepers love small writes!
- If you think your NFS solution is rock-solid, you don’t have enough users yet (q.v. James Letts’ talk, Doug Johnson’s talk)
- Not all batch systems require sharing user home directories between gatekeeper and workers
  - Condor_nfslite uses Condor file transfer mechanisms to move the files to the worker (and the admin can use Condor configuration options to limit the scale of the transfers)
  - In principle this is possible with PBS and LSF
  - Maybe not SGE
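A crude way to see the small-write penalty for yourself is to create many tiny files on local disk and on the NFS mount and compare wall-clock times. This is a rough sketch, not a benchmark: `small_write_test` is a hypothetical helper, the default of 1000 files is arbitrary, and the timing has one-second resolution.

```sh
#!/bin/sh
# Hypothetical helper: time COUNT tiny file creations under DIR, roughly
# mimicking the many small state-file writes a gatekeeper makes.
small_write_test() (
    dir=$1
    count=${2:-1000}
    work=$(mktemp -d "$dir/smallwrite.XXXXXX") || exit 1
    start=$(date +%s)
    i=0
    while [ "$i" -lt "$count" ]; do
        printf 'state %d\n' "$i" > "$work/f.$i"   # one small write per file
        i=$((i + 1))
    done
    sync                                          # flush before stopping the clock
    end=$(date +%s)
    rm -rf "$work"                                # leave DIR as we found it
    echo "$count small files in $((end - start)) s under $dir"
)
```

Usage: run `small_write_test /tmp 5000` and then `small_write_test /home/uscms01 5000` (or wherever the NFS-mounted homes live) and compare the two timings.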

Grid monitor problems

- Load abnormally high; ps shows no grid_monitor processes
- Paging Capt. Yossarian: new grid monitors may not be able to start because of the increased load created by the missing grid monitors!
- Check the StarterLog for the managedfork jobmanager: if managedfork is failing, new grid monitors can’t take the place of the expired ones
  - Counterintuitive band-aid: shut off managedfork (revert to the non-managed fork jobmanager)
- Resource contention between the grid monitor and the batch scheduler (or other services)
  - Renice globus-gatekeeper by editing `/etc/xinetd.d/globus-gatekeeper` and adding `nice = 20`
  - VDT should do this automatically!
- Increase the polling interval? (from Brian)
  - Add `sleep(10 + rand(10))` to the poll subroutine in `fork.pm`
  - VDT should do this automatically!
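The renice fix can be scripted so it is easy to reapply after an upgrade. A sketch, assuming the service file contains a single service block closed by a `}` at the start of a line; `add_xinetd_nice` is hypothetical. Reload xinetd afterwards (for example with `service xinetd reload` on Red Hat style systems) for the change to take effect.

```sh
#!/bin/sh
# Hypothetical helper: add "nice = VALUE" (default 20) to an xinetd service
# file unless a nice attribute is already present.
add_xinetd_nice() (
    file=$1
    value=${2:-20}
    if grep -q '^[[:space:]]*nice[[:space:]]*=' "$file"; then
        echo "nice already set in $file"
        exit 0
    fi
    tmp=$(mktemp) || exit 1
    # Insert the attribute just before the first closing brace.
    awk -v v="$value" '
        !done && /^[ \t]*[}]/ { print "\tnice\t\t= " v; done = 1 }
        { print }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the original permissions
    status=$?
    rm -f "$tmp"
    exit "$status"
)
```

Usage: `add_xinetd_nice /etc/xinetd.d/globus-gatekeeper`. Running it twice is harmless; the second run only reports that the attribute is already set.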

setup.sh execution

- In OSG 1.2.13 and below, job managers executed `$VDT_LOCATION/setup.sh`, which in turn executes a number of other setup scripts
- On busy systems, the sheer number of fork/exec calls this triggered created a high amount of load, classified as “system load”
- As of 1.2.14, it is no longer executed, so upgrade!
- If you can’t upgrade, you can replace setup.sh: take a snapshot of your environment before and after running it, and replace the file with lines of static exports!
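The snapshot-and-replace trick can be partly automated: source the script in a subshell, diff `env` before and after, and print the differences as `export` lines. A sketch under these assumptions: `static_exports` is a hypothetical helper, values containing newlines are not handled, variables the script unsets are ignored, and the output should be reviewed before it replaces setup.sh.

```sh
#!/bin/sh
# Hypothetical helper: source SCRIPT in a subshell and print every variable it
# adds or changes as a static, single-quoted export line.
static_exports() (
    script=$1
    before=$(mktemp) || exit 1
    after=$(mktemp) || { rm -f "$before"; exit 1; }
    env | LC_ALL=C sort > "$before"
    ( . "$script" > /dev/null 2>&1; env | LC_ALL=C sort ) > "$after"
    # Lines only in the "after" snapshot are new or changed variables.
    LC_ALL=C comm -13 "$before" "$after" |
        awk -v q="'" '{
            eq = index($0, "=")
            name = substr($0, 1, eq - 1)
            value = substr($0, eq + 1)
            gsub(q, q "\\" q q, value)   # escape embedded single quotes
            print "export " name "=" q value q
        }'
    rm -f "$before" "$after"
)
```

Usage: `static_exports "$VDT_LOCATION/setup.sh" > setup-static.sh`, then inspect setup-static.sh before swapping it in for the original file.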

Still overloaded?

- Add a gatekeeper!
- Multi-gatekeeper CEs are widely supported in OSG
- Tier 1 and many Tier 2s are using them (FNAL, CIT, UW, MIT, UCSD, Vanderbilt …)
- Tony Tiradani @ CMS T1 has written an automated install/update script; many things are hard-coded for the T1, but if you’re interested, it may be useful
- If all else fails, ask for help from Rob, Doug, and osg-sites!
