Automatic Identification of Cognates, False Friends, and Partial Cognates

- Slides: 23

Automatic Identification of Cognates, False Friends, and Partial Cognates

University of Ottawa, Canada

Outline

- Overview of the Thesis
- Research Contribution
- Cognate and False Friend Identification
- Partial Cognate Disambiguation
- CLPA - Cognate and False Friend Annotator
- Conclusions and Future Work

Overview of the Thesis

- Tasks
  - Automatic Identification of Cognates and False Friends
  - Automatic Disambiguation of Partial Cognates
- Areas of Application
  - CALL, MT, Word Alignment, Cross-Language Information Retrieval
- CALL Tool - CLPA

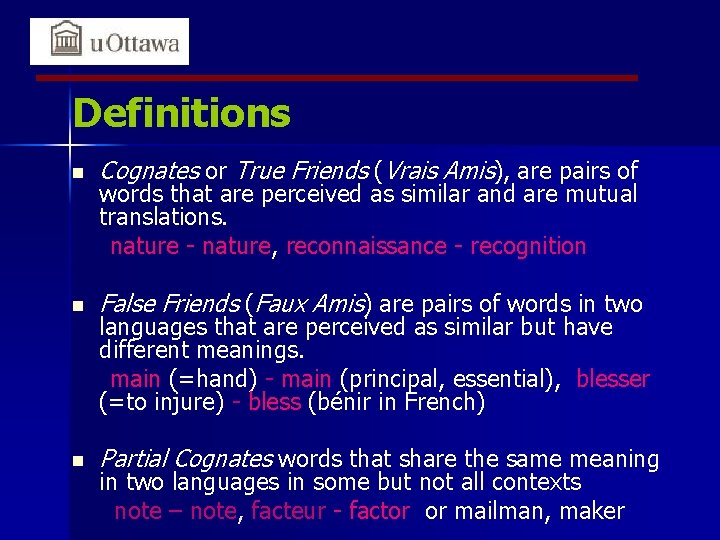

Definitions

- Cognates, or True Friends (Vrais Amis), are pairs of words that are perceived as similar and are mutual translations.
  - nature - nature, reconnaissance - recognition
- False Friends (Faux Amis) are pairs of words in two languages that are perceived as similar but have different meanings.
  - main (=hand) - main (principal, essential), blesser (=to injure) - bless (bénir in French)
- Partial Cognates are words that share the same meaning in two languages in some but not all contexts.
  - note - note, facteur - factor or mailman, maker

Research Contribution

- A novel method based on ML algorithms to identify Cognates and False Friends
- A method to create complete lists of Cognates and False Friends
- Define a novel task, Partial Cognate Disambiguation, and solve it using a supervised and a semi-supervised method
  - Combine and use corpora from different domains
- Implement a CALL tool, CLPA, to annotate Cognates and False Friends

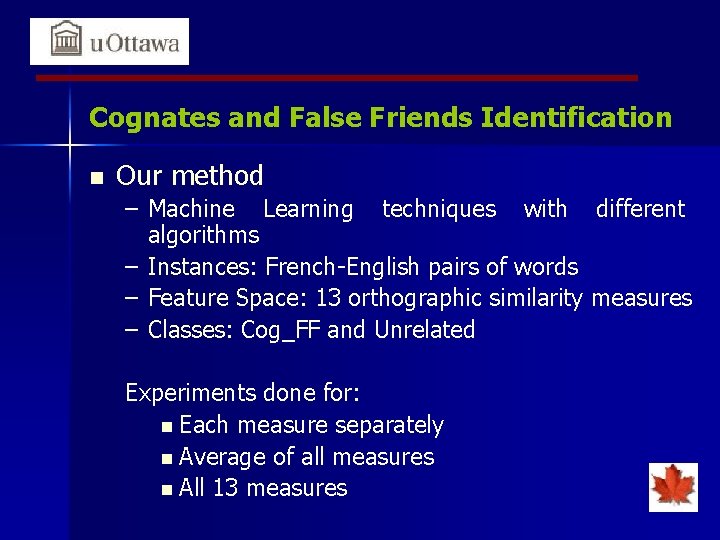

Cognates and False Friends Identification

- Our method
  - Machine Learning techniques with different algorithms
  - Instances: French-English pairs of words
  - Feature Space: 13 orthographic similarity measures
  - Classes: Cog_FF and Unrelated
- Experiments done for:
  - Each measure separately
  - Average of all measures
  - All 13 measures
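To make the feature space concrete, here is a minimal sketch of two of the measures named in the results, DICE and LCSR, using their common textbook definitions (shared character bigrams, and longest common subsequence length over the longer word). The exact variants used in the thesis may differ, and the function names and the `classify` helper are illustrative assumptions. The 0.458 threshold in the test comes from the LCSR row of the results; treating "score at or above threshold" as Cog_FF is also an assumption.

```python
def bigrams(word):
    """Return the list of adjacent character bigrams of a word."""
    return [word[i:i + 2] for i in range(len(word) - 1)]


def dice(x, y):
    """Bigram DICE: 2 * (shared bigrams) / (bigrams in x + bigrams in y)."""
    bx, by = bigrams(x), bigrams(y)
    if not bx or not by:
        return 0.0
    remaining = list(by)  # multiset of bigrams not yet matched
    shared = 0
    for b in bx:
        if b in remaining:
            remaining.remove(b)
            shared += 1
    return 2.0 * shared / (len(bx) + len(by))


def lcs_length(x, y):
    """Length of the longest common subsequence (dynamic programming)."""
    prev = [0] * (len(y) + 1)
    for cx in x:
        curr = [0]
        for j, cy in enumerate(y, start=1):
            if cx == cy:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def lcsr(x, y):
    """LCSR: LCS length divided by the length of the longer word."""
    if not x or not y:
        return 0.0
    return lcs_length(x, y) / max(len(x), len(y))


def classify(score, threshold):
    """Assumed decision rule: similar enough means Cog_FF, else Unrelated."""
    return "Cog_FF" if score >= threshold else "Unrelated"
```

For example, `lcsr("blesser", "bless")` is 5/7, which such a rule would label Cog_FF at the LCSR threshold; deciding between Cognate and False Friend needs meaning information that orthography alone does not provide.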

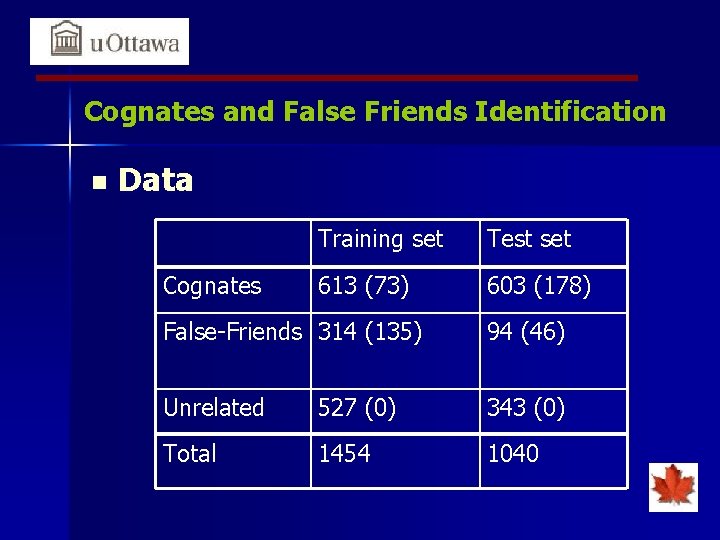

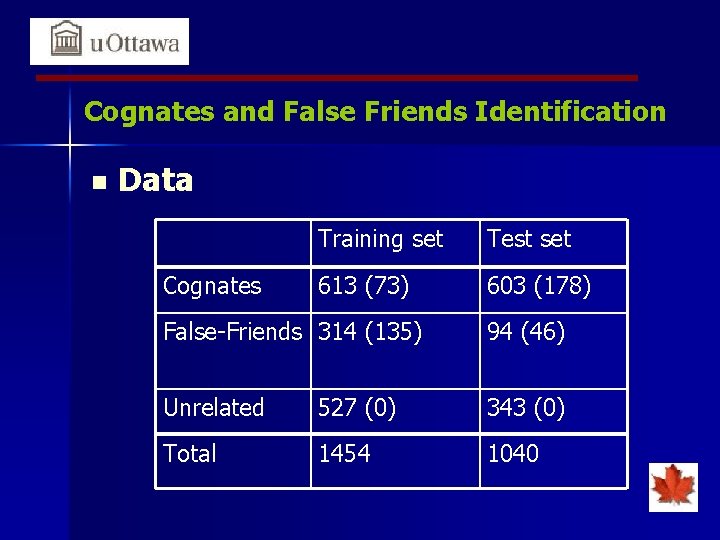

Cognates and False Friends Identification

- Data

| | Training set | Test set |
|---|---|---|
| Cognates | 613 (73) | 603 (178) |
| False-Friends | 314 (135) | 94 (46) |
| Unrelated | 527 (0) | 343 (0) |
| Total | 1454 | 1040 |

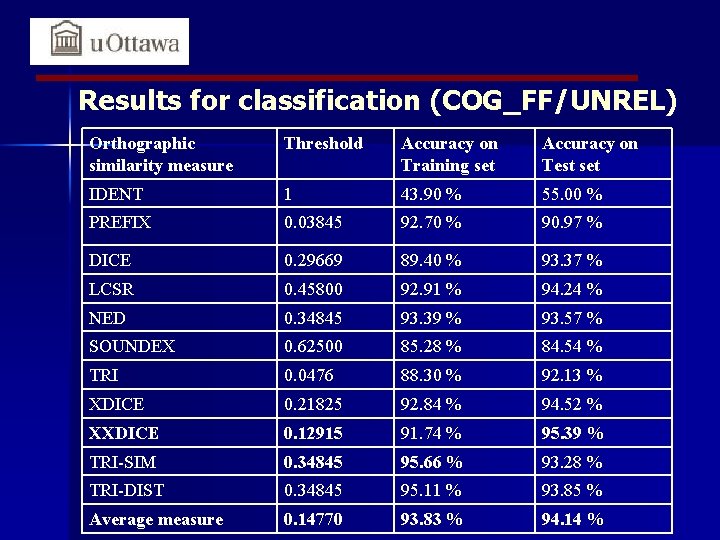

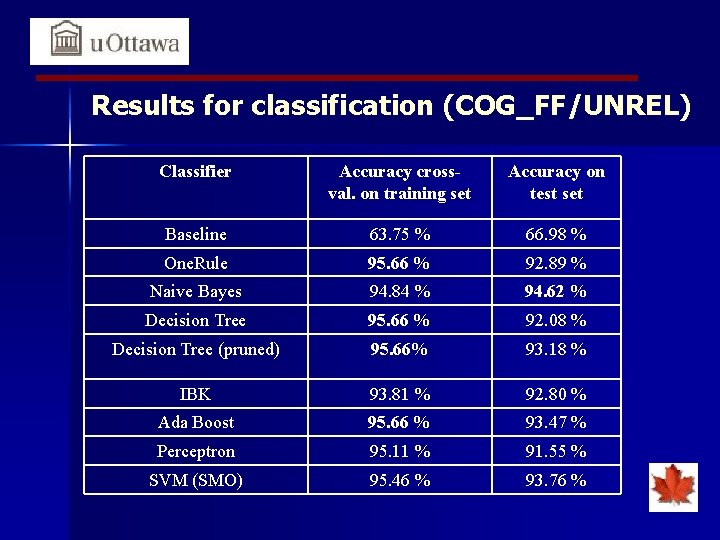

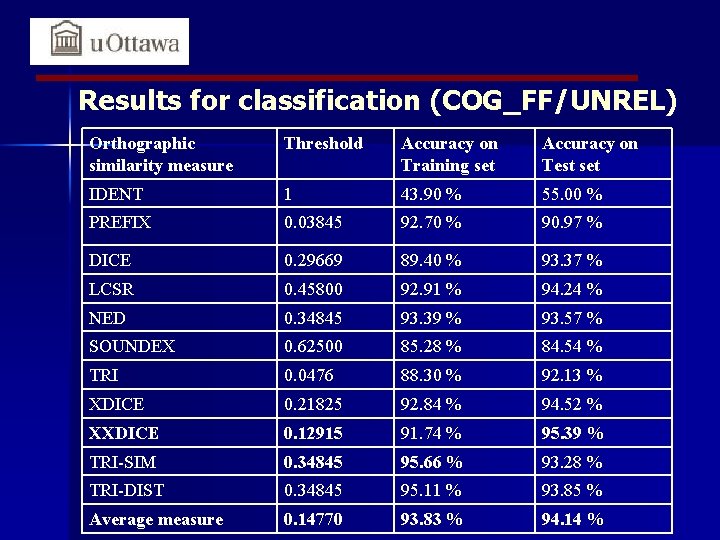

Results for classification (COG_FF/UNREL)

| Orthographic similarity measure | Threshold | Accuracy on training set | Accuracy on test set |
|---|---|---|---|
| IDENT | 1 | 43.90% | 55.00% |
| PREFIX | 0.03845 | 92.70% | 90.97% |
| DICE | 0.29669 | 89.40% | 93.37% |
| LCSR | 0.45800 | 92.91% | 94.24% |
| NED | 0.34845 | 93.39% | 93.57% |
| SOUNDEX | 0.62500 | 85.28% | 84.54% |
| TRI | 0.0476 | 88.30% | 92.13% |
| XDICE | 0.21825 | 92.84% | 94.52% |
| XXDICE | 0.12915 | 91.74% | 95.39% |
| TRI-SIM | 0.34845 | 95.66% | 93.28% |
| TRI-DIST | 0.34845 | 95.11% | 93.85% |
| Average measure | 0.14770 | 93.83% | 94.14% |

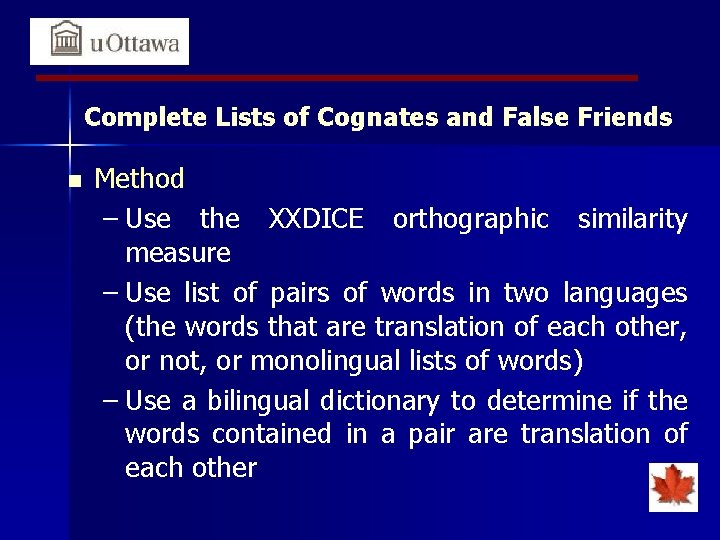

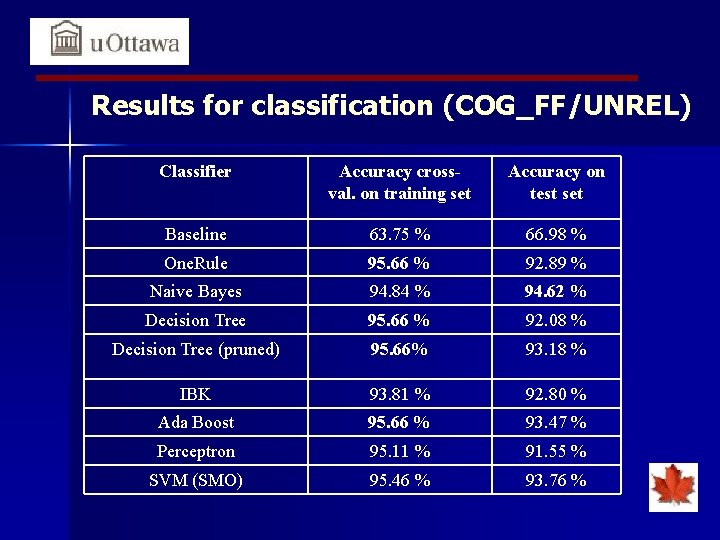

Results for classification (COG_FF/UNREL)

| Classifier | Accuracy (cross-validation on training set) | Accuracy on test set |
|---|---|---|
| Baseline | 63.75% | 66.98% |
| OneRule | 95.66% | 92.89% |
| Naive Bayes | 94.84% | 94.62% |
| Decision Tree | 95.66% | 92.08% |
| Decision Tree (pruned) | 95.66% | 93.18% |
| IBK | 93.81% | 92.80% |
| Ada Boost | 95.66% | 93.47% |
| Perceptron | 95.11% | 91.55% |
| SVM (SMO) | 95.46% | 93.76% |

Complete Lists of Cognates and False Friends

- Method
  - Use the XXDICE orthographic similarity measure
  - Use a list of pairs of words in two languages (words that are translations of each other, or not, or monolingual lists of words)
  - Use a bilingual dictionary to determine whether the words in a pair are translations of each other
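The method above can be sketched as a two-step decision: an orthographic similarity test, then a dictionary lookup. This is only an illustration under stated assumptions: `difflib.SequenceMatcher` stands in for XXDICE (which is not implemented here), the 0.6 threshold is arbitrary rather than the XXDICE threshold from the results, and `label_pair`, `stand_in_similarity`, and the toy dictionary are hypothetical names and data.

```python
from difflib import SequenceMatcher


def stand_in_similarity(fr_word, en_word):
    """Stand-in for XXDICE: difflib's similarity ratio in [0, 1]."""
    return SequenceMatcher(None, fr_word, en_word).ratio()


def label_pair(fr_word, en_word, dictionary, threshold=0.6,
               similarity=stand_in_similarity):
    """Label a French-English pair as Cognate, False Friend, or Unrelated.

    dictionary maps a French word to the set of its English translations
    (a toy substitute for a real bilingual dictionary).
    """
    if similarity(fr_word, en_word) < threshold:
        return "Unrelated"  # not orthographically similar
    if en_word in dictionary.get(fr_word, set()):
        return "Cognate"  # similar and mutual translations
    return "False Friend"  # similar but not translations


# Toy dictionary, for illustration only.
toy_dictionary = {
    "nature": {"nature"},
    "main": {"hand"},
    "blesser": {"injure", "hurt"},
    "chien": {"dog"},
}
```

With this toy data, `label_pair("main", "main", toy_dictionary)` yields a False Friend, because the words are identical in form but the dictionary lists only "hand" as a translation.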

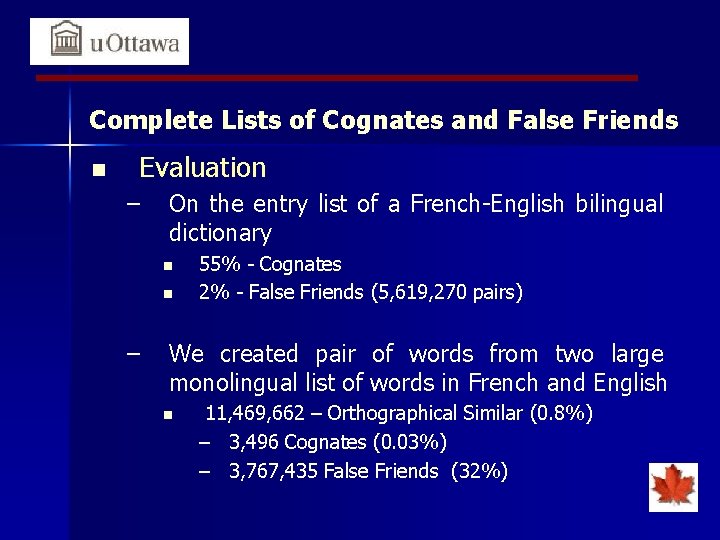

Complete Lists of Cognates and False Friends

- Evaluation
  - On the entry list of a French-English bilingual dictionary
    - 55% - Cognates
    - 2% - False Friends (5,619,270 pairs)
  - We created pairs of words from two large monolingual lists of words in French and English
    - 11,469,662
      - Orthographically Similar (0.8%)
      - 3,496 Cognates (0.03%)
      - 3,767,435 False Friends (32%)

Cognates and False Friends Identification Conclusion

- We tested a number of orthographic similarity measures individually, and also combined them using different Machine Learning algorithms
- We evaluated the methods on a training set using 10-fold cross-validation, and on a test set
- We proposed an extension of the method to create complete lists of Cognates and False Friends
- The results show that, for French and English, it is possible to achieve very good accuracy based on orthographic measures of word similarity

Partial Cognate Disambiguation

- Task
  - To determine the sense/meaning (Cognate or False Friend with the equivalent English word) of a Partial Cognate in a French context

Note

- Cog: Le comité prend note de cette information. / The Committee takes note of this reply.
- FF: Mais qui a dû payer la note? / So who got left holding the bill?

Data

- Use a set of 10 Partial Cognates
  - Parallel sentences that have on the French side the French Partial Cognate and on the English side the English Cognate (English False Friend), labeled as COG (FF)
- Collected from EuroParl and Hansard
  - ~115 sentences per class for training
  - ~60 sentences per class for testing

Supervised Method

- Traditional ML algorithms
- Features: the bag-of-words (BOW) approach to modeling context, with binary feature values
  - Context words from the training corpus that appeared at least 3 times in the training sentences
- Classes: COG and FF
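A minimal sketch of the binary bag-of-words representation described above. It assumes lowercased whitespace tokenization and counts total token occurrences across the training sentences; the original preprocessing is not specified on the slide, and the function names are illustrative.

```python
from collections import Counter


def build_vocabulary(training_sentences, min_count=3):
    """Keep context words seen at least min_count times in the training sentences."""
    counts = Counter(
        token
        for sentence in training_sentences
        for token in sentence.lower().split()
    )
    return sorted(word for word, count in counts.items() if count >= min_count)


def binary_features(sentence, vocabulary):
    """Return one 0/1 value per vocabulary word: 1 if the word occurs in the sentence."""
    tokens = set(sentence.lower().split())
    return [1 if word in tokens else 0 for word in vocabulary]
```

Each sentence then becomes a fixed-length 0/1 vector that any of the traditional classifiers can consume, with COG or FF as the class label.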

Monolingual Bootstrapping

For each pair of partial cognates (PC)

1. Train a classifier on the training seeds, using the BOW approach and an NB-K classifier with attribute selection on the features
2. Apply the classifier to unlabeled data: sentences that contain the PC word, extracted from LeMonde (MB-F) or from the BNC (MB-E)
3. Take the first k newly classified sentences from both the COG and FF classes and add them to the training seeds (the most confident ones: prediction accuracy greater than or equal to a threshold of 0.85)
4. Rerun the experiments, training on the new training set
5. Repeat steps 2 and 3 t times

end For
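The loop above can be sketched as a simple self-training procedure. This is an illustration under assumptions, not the thesis implementation: a small multinomial Naive Bayes with add-one smoothing stands in for the NB-K classifier with attribute selection, "first k" is read as the k most confident sentences per class, and all function names, parameter defaults other than the 0.85 threshold, and the toy sentences in the test are hypothetical.

```python
import math
from collections import Counter

LABELS = ("COG", "FF")


def train_nb(labeled):
    """Train a tiny multinomial Naive Bayes on (sentence, label) pairs."""
    word_counts = {label: Counter() for label in LABELS}
    class_counts = Counter()
    for sentence, label in labeled:
        class_counts[label] += 1
        word_counts[label].update(sentence.lower().split())
    vocab = set().union(*word_counts.values())
    return word_counts, class_counts, vocab


def predict(model, sentence):
    """Return (label, confidence); confidence is the winner's posterior."""
    word_counts, class_counts, vocab = model
    total = sum(class_counts.values())
    log_scores = {}
    for label in LABELS:
        n_tokens = sum(word_counts[label].values())
        score = math.log((class_counts[label] + 1) / (total + len(LABELS)))
        for token in sentence.lower().split():
            if token in vocab:  # ignore words never seen in training
                score += math.log(
                    (word_counts[label][token] + 1) / (n_tokens + len(vocab))
                )
        log_scores[label] = score
    best = max(log_scores, key=log_scores.get)
    top = log_scores[best]
    norm = sum(math.exp(s - top) for s in log_scores.values())
    return best, 1.0 / norm


def bootstrap(seeds, unlabeled, k=2, threshold=0.85, iterations=3):
    """Self-training: repeatedly add the most confident new sentences."""
    labeled = list(seeds)
    pool = list(unlabeled)
    for _ in range(iterations):
        model = train_nb(labeled)                        # step 1 / step 4
        scored = [(s, *predict(model, s)) for s in pool]  # step 2
        added = []
        for label in LABELS:                             # step 3
            confident = [x for x in scored
                         if x[1] == label and x[2] >= threshold]
            confident.sort(key=lambda x: -x[2])
            added.extend(confident[:k])
        if not added:
            break  # nothing confident enough is left
        labeled.extend((s, label) for s, label, _ in added)
        chosen = {s for s, _, _ in added}
        pool = [s for s in pool if s not in chosen]
    return train_nb(labeled), labeled
```

Stopping early when no sentence clears the threshold is a design choice of this sketch; the slide only specifies a fixed number of repetitions.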

Bilingual Bootstrapping

1. Translate the English sentences that were collected in the MB-E step into French using an online MT tool and add them to the French seed training data.
2. Repeat the MB-F and MB-E steps T times.

Additional Data

- LeMonde
  - An average of 250 sentences for each class
- BNC
  - An average of 200 sentences for each class
- Multi-Domain corpus
  - An average of 80 sentences for each class

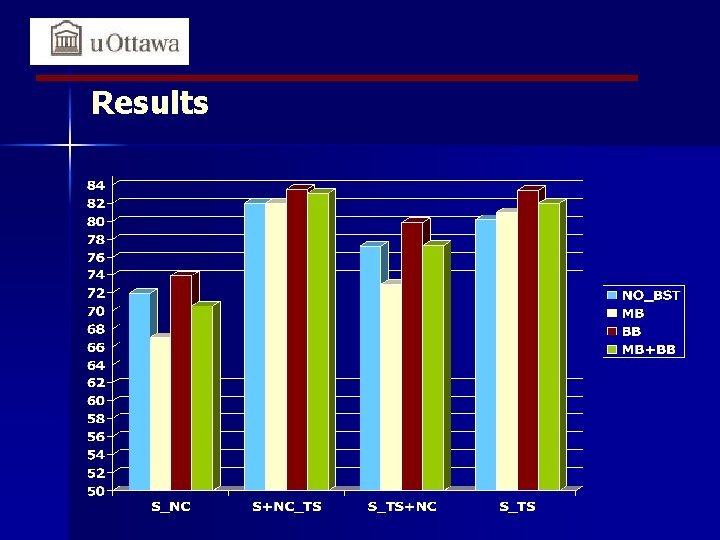

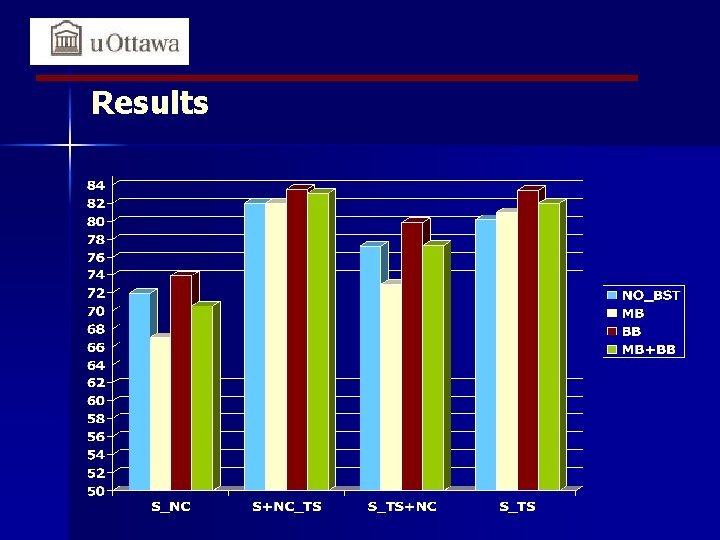

Results

Partial Cognate Disambiguation Conclusions

- Simple methods and available tools are used successfully for a task that is hard to solve even for humans
- Additional use of unlabeled data improves the learning process for the Partial Cognates Disambiguation task
- Semi-Supervised Learning proves to be “as good as” Supervised Learning

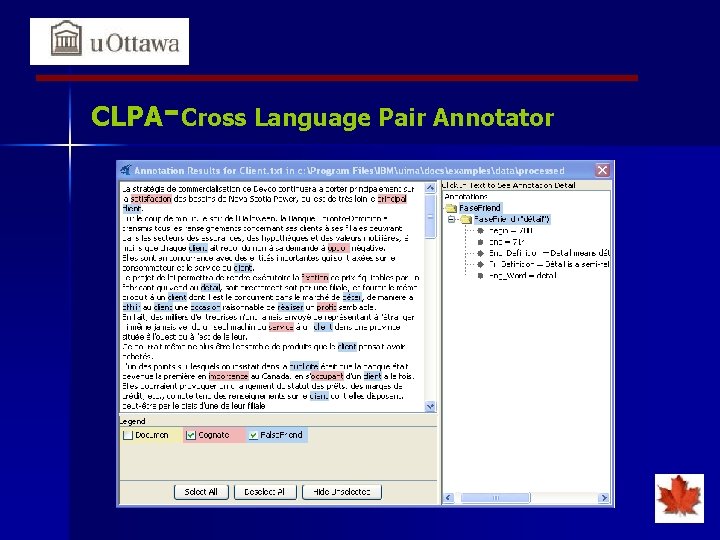

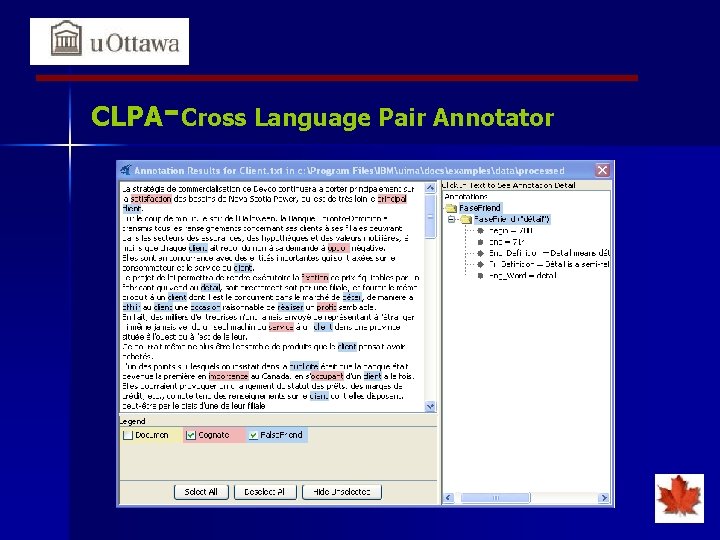

CLPA - Cross Language Pair Annotator

Future Work

- Apply the Cognate and False Friend Identification method and create complete lists for other pairs of languages
- Increase the accuracy of the results for the Partial Cognate Disambiguation task
- Use lemmatization for French texts and human evaluation for CLPA

Thank you!