2001 Summer Student Lectures Computing at CERN Lecture

- Slides: 34

2001 Summer Student Lectures, Computing at CERN, Lecture 2: Looking at Data
Tony Cass, Tony.Cass@cern.ch

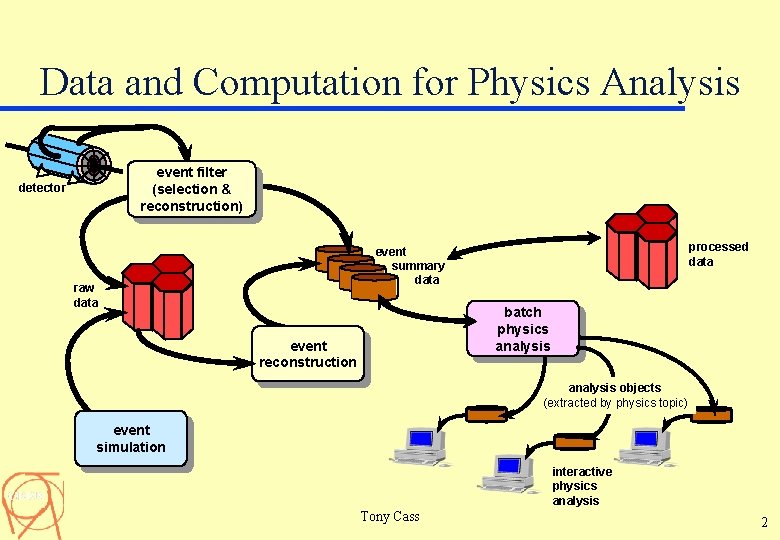

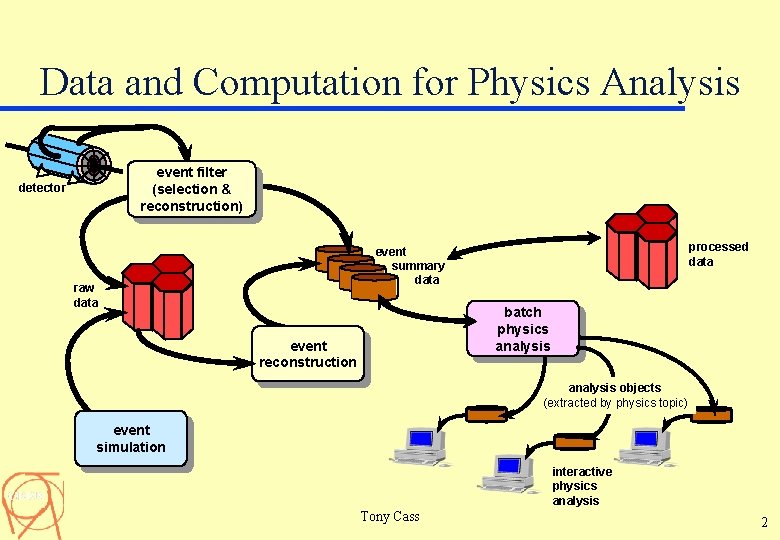

Data and Computation for Physics Analysis

Diagram labels: detector; event filter (selection & reconstruction); raw data; event reconstruction; event simulation; processed data; event summary data; batch physics analysis; analysis objects (extracted by physics topic); interactive physics analysis.

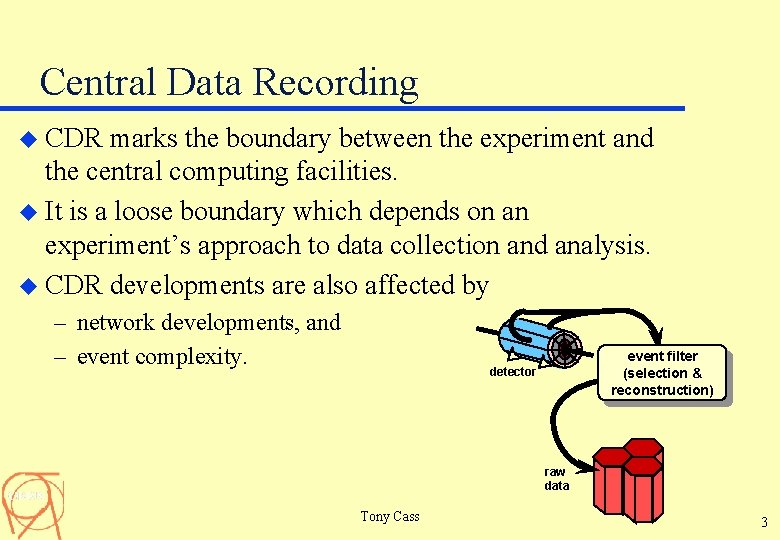

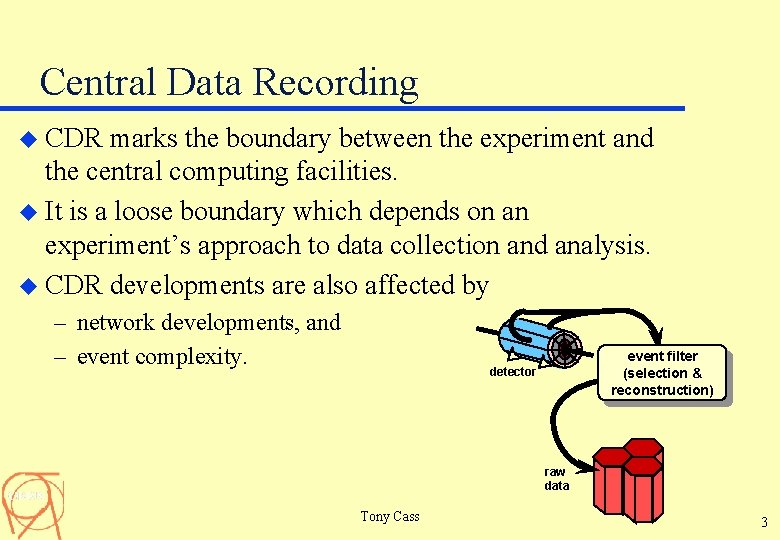

Central Data Recording

- CDR marks the boundary between the experiment and the central computing facilities.
- It is a loose boundary which depends on an experiment’s approach to data collection and analysis.
- CDR developments are also affected by
  - network developments, and
  - event complexity.

Diagram labels: detector; event filter (selection & reconstruction); raw data.

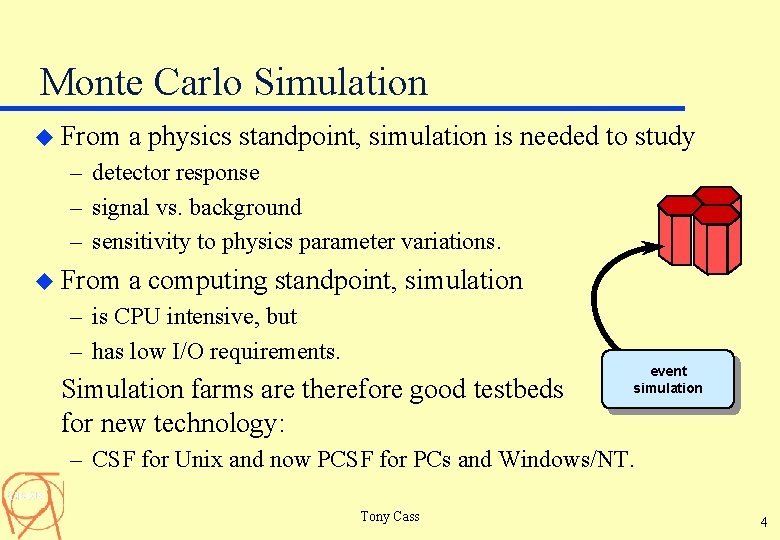

Monte Carlo Simulation

- From a physics standpoint, simulation is needed to study
  - detector response
  - signal vs. background
  - sensitivity to physics parameter variations.
- From a computing standpoint, simulation
  - is CPU intensive, but
  - has low I/O requirements.

Simulation farms are therefore good testbeds for new technology:

- CSF for Unix and now PCSF for PCs and Windows/NT.

Diagram label: event simulation.
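The computing profile of simulation (CPU intensive, low I/O) can be illustrated with a toy Monte Carlo. This is only a sketch under stated assumptions: the detector geometry (acceptance for |cos θ| < 0.9), the function name `estimate_acceptance` and the sample size are illustrative choices, not taken from the lecture or from any CERN simulation package.

```python
import random


def estimate_acceptance(n_events, cos_theta_max=0.9, seed=12345):
    """Estimate the fraction of isotropically emitted particles that fall
    inside a hypothetical detector covering |cos(theta)| < cos_theta_max."""
    rng = random.Random(seed)  # private generator, so runs are reproducible
    accepted = 0
    for _ in range(n_events):
        # Isotropic emission is flat in cos(theta).
        cos_theta = rng.uniform(-1.0, 1.0)
        if abs(cos_theta) < cos_theta_max:
            accepted += 1
    return accepted / n_events


if __name__ == "__main__":
    # All the work is arithmetic inside the loop; the only output is one number.
    print(estimate_acceptance(100_000))
```

The loop consumes CPU time in proportion to the number of events but reads no input and writes a single number, which is the property that makes such jobs easy to move onto new hardware.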

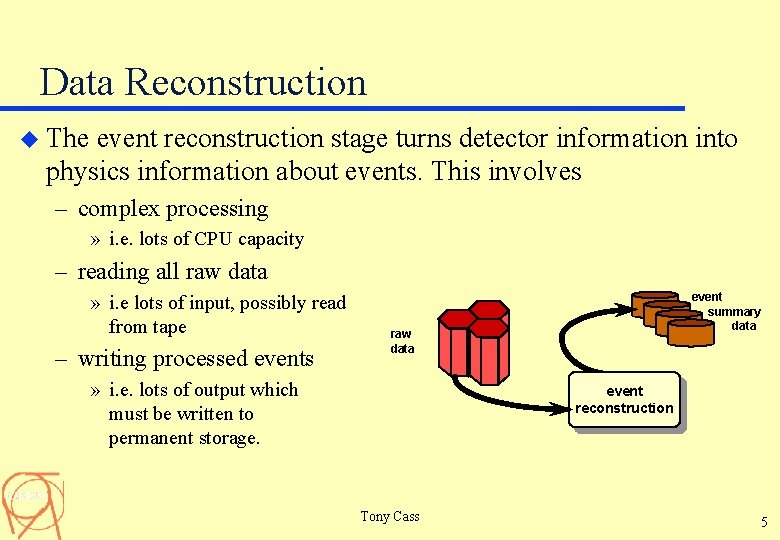

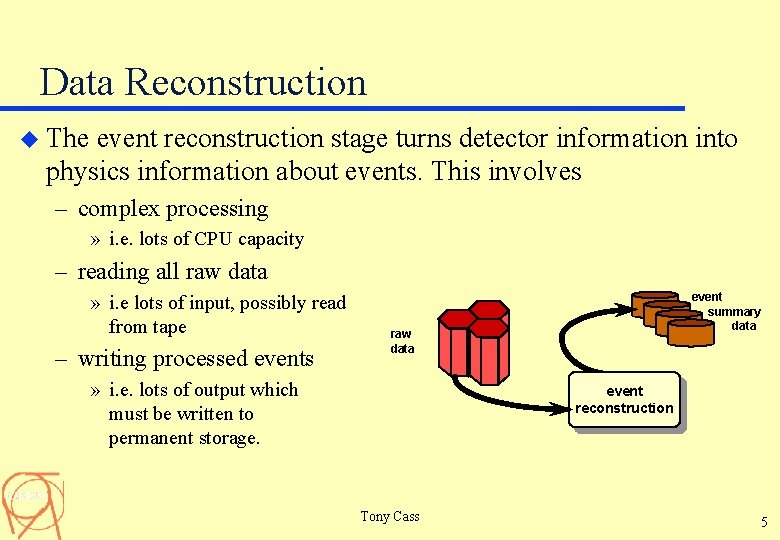

Data Reconstruction

- The event reconstruction stage turns detector information into physics information about events. This involves
  - complex processing
    - i.e. lots of CPU capacity
  - reading all raw data
    - i.e. lots of input, possibly read from tape
  - writing processed events
    - i.e. lots of output which must be written to permanent storage.

Diagram labels: raw data; event reconstruction; event summary data.

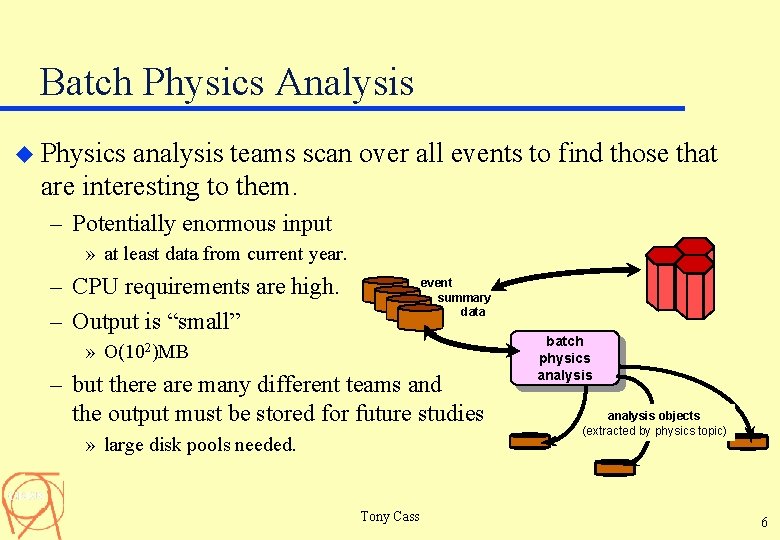

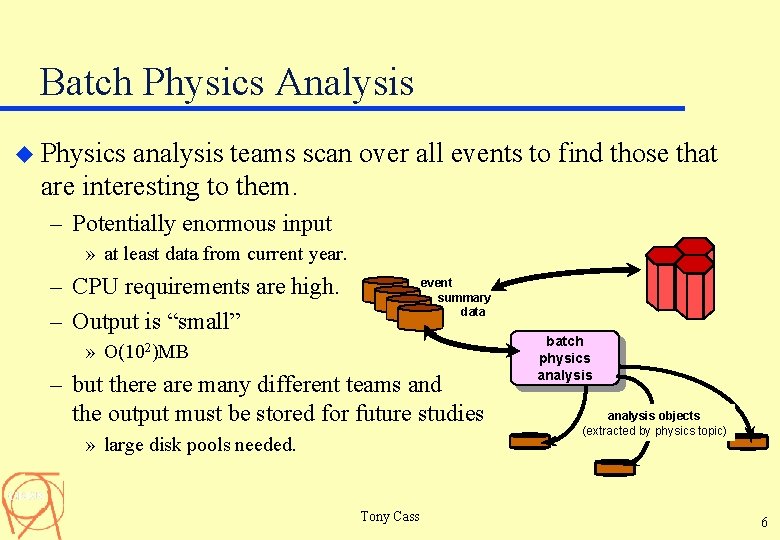

Batch Physics Analysis

- Physics analysis teams scan over all events to find those that are interesting to them.
  - Potentially enormous input
    - at least data from current year.
  - CPU requirements are high.
  - Output is “small”
    - O(10^2) MB
  - but there are many different teams and the output must be stored for future studies
    - large disk pools needed.

Diagram labels: event summary data; batch physics analysis; analysis objects (extracted by physics topic).
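The scan-and-select pattern described for batch analysis can be sketched as a single pass over event summary records that keeps only the events passing a team's cuts. The record layout (`EventSummary`), its field names and the cut values below are hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class EventSummary:
    """Hypothetical event summary record; field names are illustrative."""
    run: int
    event: int
    n_tracks: int
    total_energy_gev: float


def select_events(events: Iterable[EventSummary],
                  min_tracks: int = 4,
                  min_energy_gev: float = 50.0) -> Iterator[EventSummary]:
    """Scan every event once and yield only those passing both cuts."""
    for ev in events:
        if ev.n_tracks >= min_tracks and ev.total_energy_gev >= min_energy_gev:
            yield ev


if __name__ == "__main__":
    sample = [
        EventSummary(run=1, event=1, n_tracks=2, total_energy_gev=91.0),
        EventSummary(run=1, event=2, n_tracks=6, total_energy_gev=88.5),
        EventSummary(run=1, event=3, n_tracks=8, total_energy_gev=12.0),
    ]
    for ev in select_events(sample):
        print(ev.run, ev.event)
```

Because the function is a generator, the full input never has to fit in memory: the input can be very large while the selected output stays small.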

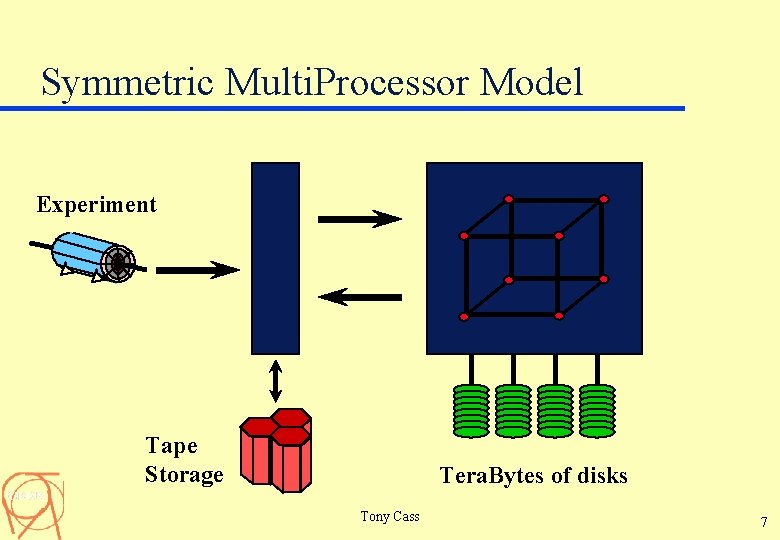

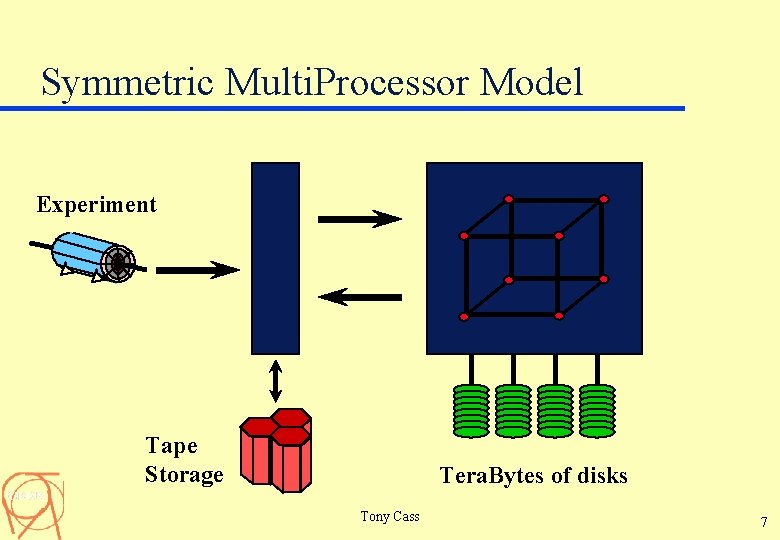

Symmetric MultiProcessor Model

Diagram labels: Experiment; Tape Storage; TeraBytes of disks.

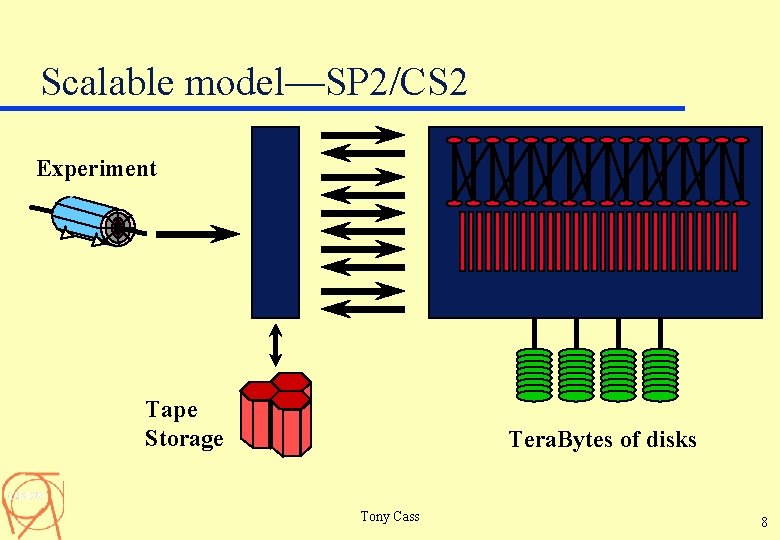

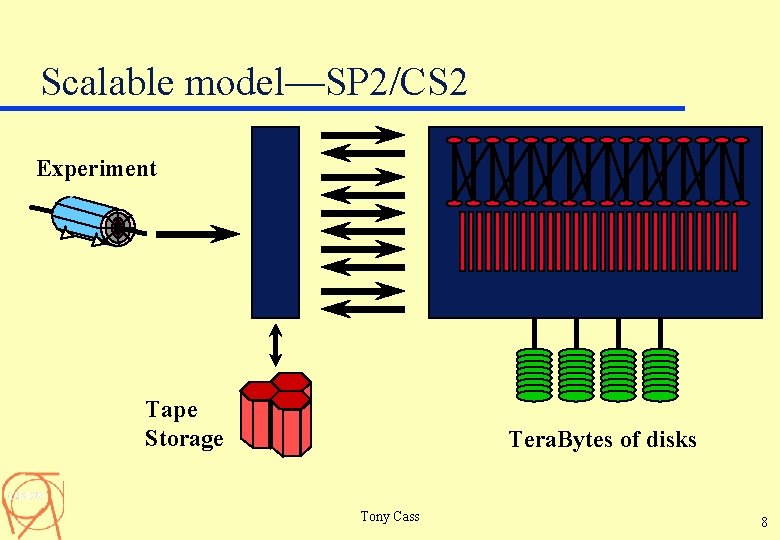

Scalable model: SP2/CS2

Diagram labels: Experiment; Tape Storage; TeraBytes of disks.

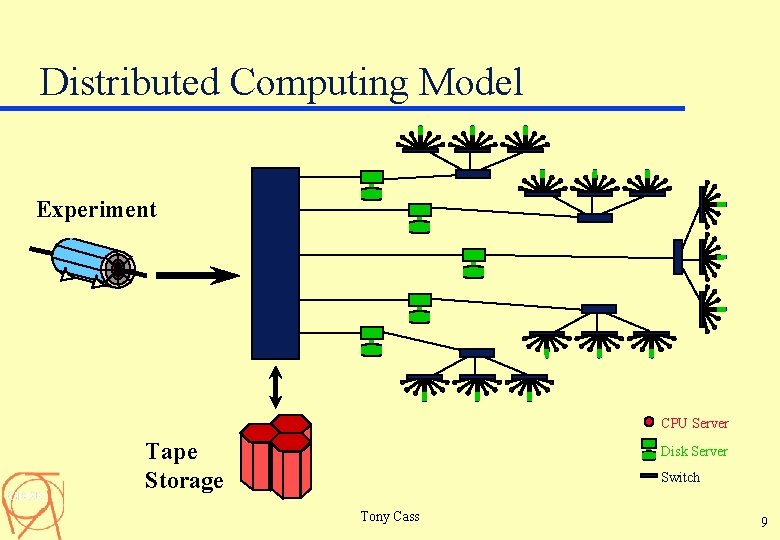

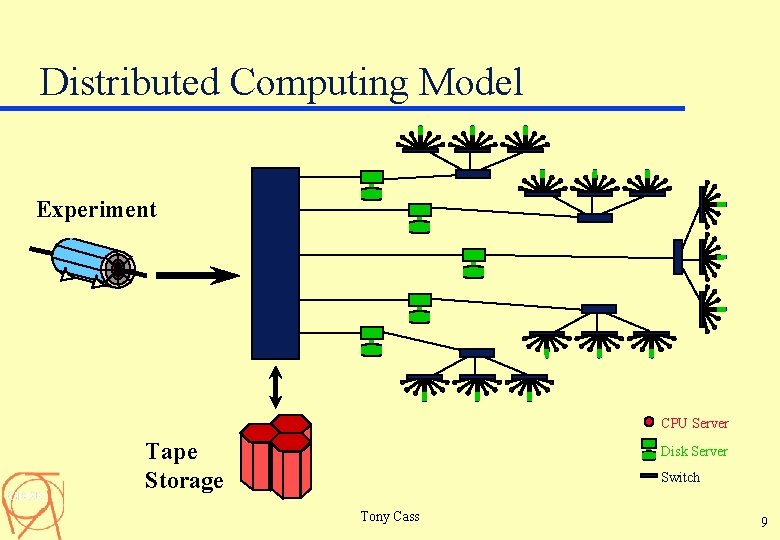

Distributed Computing Model

Diagram labels: Experiment; CPU Server; Tape Storage; Disk Server; Switch.

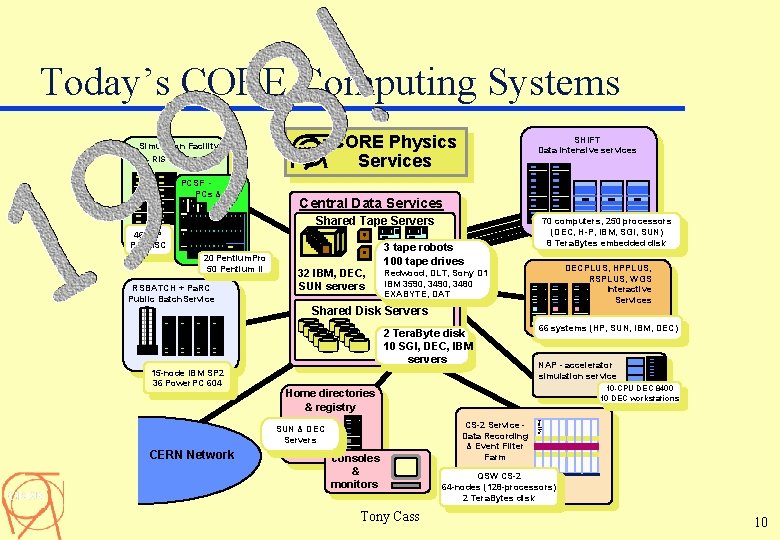

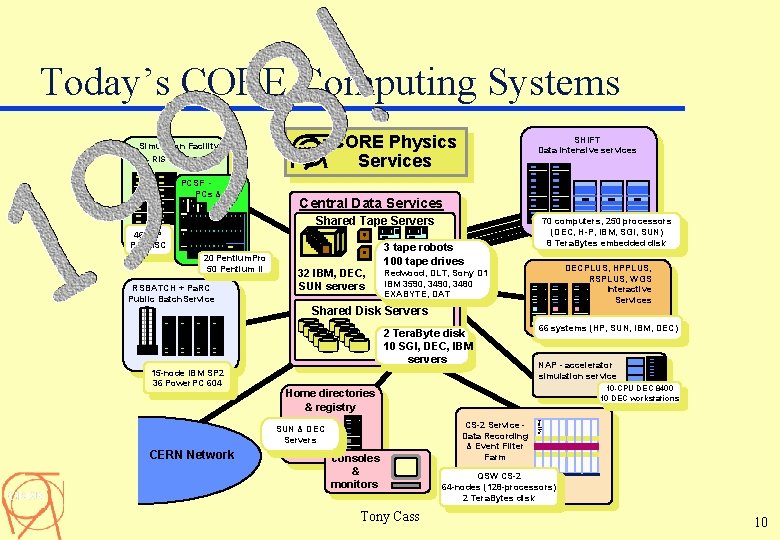

Today’s CORE Computing Systems

Diagram labels: Simulation Facility; CORE Physics Services; CERN; CSF - RISC servers; PCSF - PCs & NT; SHIFT - Data intensive services; Central Data Services; Shared Tape Servers; 46 H-P PA-RISC; 20 Pentium Pro; 50 Pentium II; RSBATCH + PaRC - Public Batch Service; 32 IBM, DEC, SUN servers; 3 tape robots; 100 tape drives; Shared Disk Servers; 66 systems (HP, SUN, IBM, DEC); NAP - accelerator simulation service; 10-CPU DEC 8400; 10 DEC workstations; Home directories & registry; consoles & monitors; CS-2 Service - Data Recording & Event Filter Farm; Meiko; SUN & DEC Servers; CERN Network; DECPLUS, HPPLUS, RSPLUS, WGS - Interactive Services; Redwood, DLT, Sony D1, IBM 3590, 3480, EXABYTE, DAT; 2 TeraByte disk; 10 SGI, DEC, IBM servers; 15-node IBM SP2; 36 PowerPC 604; 70 computers, 250 processors (DEC, H-P, IBM, SGI, SUN); 8 TeraBytes embedded disk; QSW CS-2 64-nodes (128-processors); 2 TeraBytes disk.

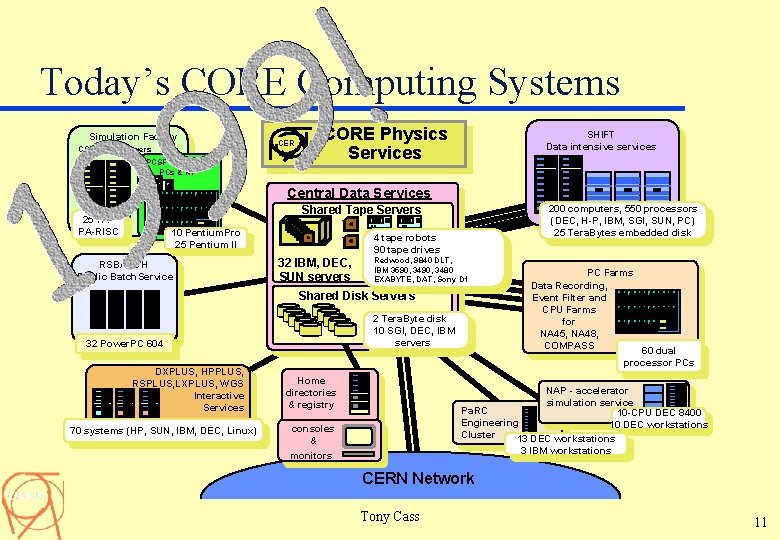

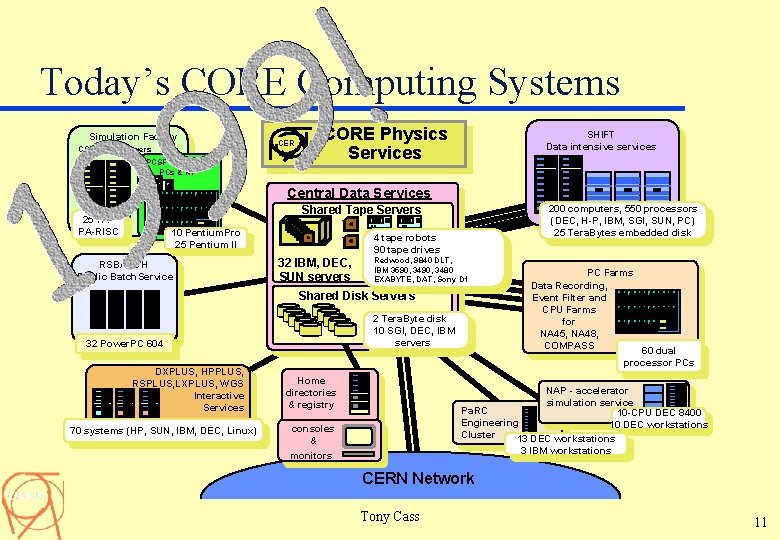

Today’s CORE Computing Systems

Diagram labels: Simulation Facility; CSF - RISC servers; PCSF - PCs & NT; CERN; CORE Physics Services; SHIFT - Data intensive services; Central Data Services; Shared Tape Servers; 25 H-P PA-RISC; 10 Pentium Pro; 25 Pentium II; RSBATCH - Public Batch Service; 200 computers, 550 processors (DEC, H-P, IBM, SGI, SUN, PC); 25 TeraBytes embedded disk; 4 tape robots; 90 tape drives; 32 IBM, DEC, SUN servers; Redwood, 9840, DLT, IBM 3590, 3480, EXABYTE, DAT, Sony D1; Shared Disk Servers; 2 TeraByte disk; 10 SGI, DEC, IBM servers; 32 PowerPC 604; DXPLUS, HPPLUS, RSPLUS, LXPLUS, WGS - Interactive Services; 70 systems (HP, SUN, IBM, DEC, Linux); Home directories & registry; PC Farms - Data Recording, Event Filter and CPU Farms for NA45, NA48, COMPASS; 60 dual processor PCs; NAP - accelerator simulation service; 10-CPU DEC 8400; 10 DEC workstations; PaRC Engineering Cluster; 13 DEC workstations; 3 IBM workstations; consoles & monitors; CERN Network.

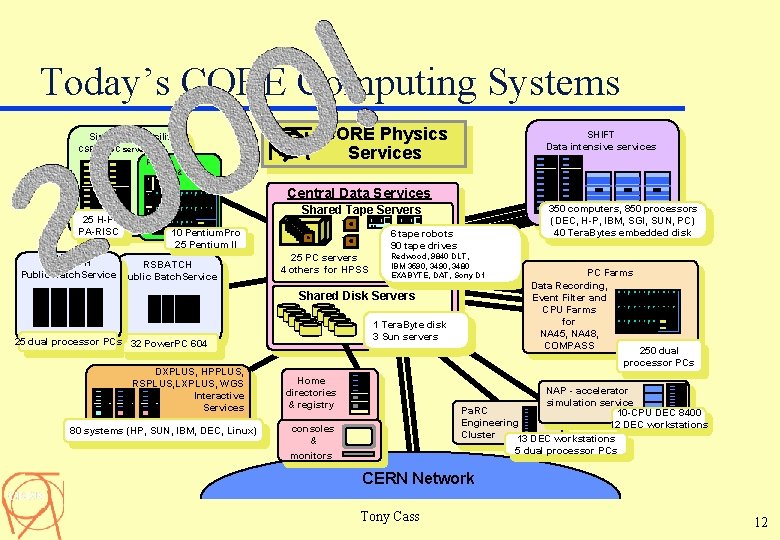

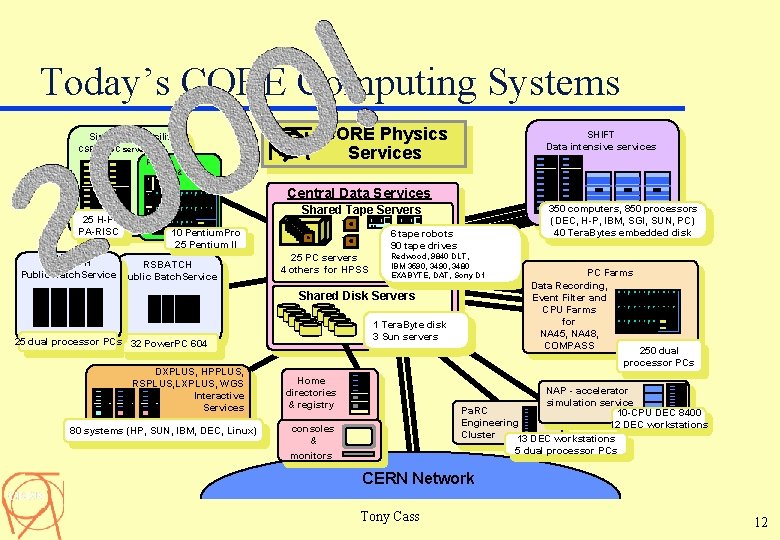

Today’s CORE Computing Systems

Diagram labels: Simulation Facility; CSF - RISC servers; PCSF - PCs & NT; CERN; CORE Physics Services; SHIFT - Data intensive services; Central Data Services; 25 H-P PA-RISC; Shared Tape Servers; 10 Pentium Pro; 25 Pentium II; LXBATCH, RSBATCH - Public Batch Service; 350 computers, 850 processors (DEC, H-P, IBM, SGI, SUN, PC); 40 TeraBytes embedded disk; 6 tape robots; 90 tape drives; 25 PC servers; 4 others for HPSS; Redwood, 9840, DLT, IBM 3590, 3480, EXABYTE, DAT, Sony D1; Shared Disk Servers; 1 TeraByte disk; 3 Sun servers; 25 dual processor PCs; 32 PowerPC 604; DXPLUS, HPPLUS, RSPLUS, LXPLUS, WGS - Interactive Services; 80 systems (HP, SUN, IBM, DEC, Linux); PC Farms - Data Recording, Event Filter and CPU Farms for NA45, NA48, COMPASS; 250 dual processor PCs; Home directories & registry; NAP - accelerator simulation service; 10-CPU DEC 8400; 12 DEC workstations; PaRC Engineering Cluster; 13 DEC workstations; 5 dual processor PCs; consoles & monitors; CERN Network.

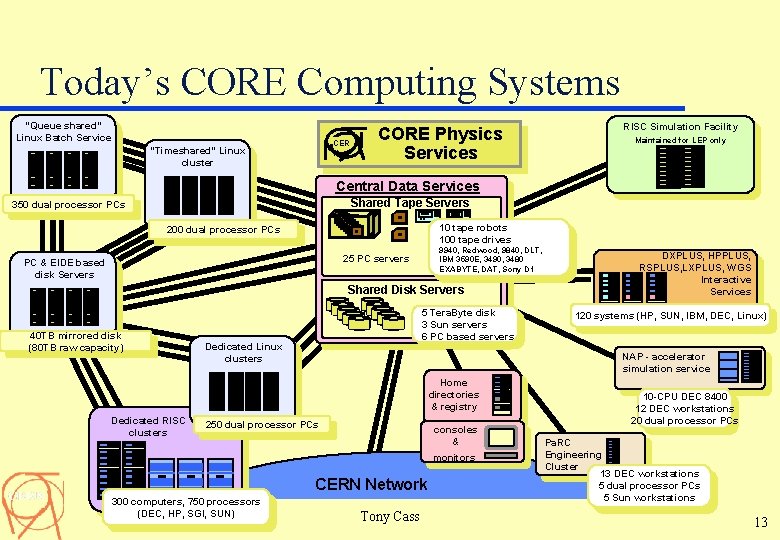

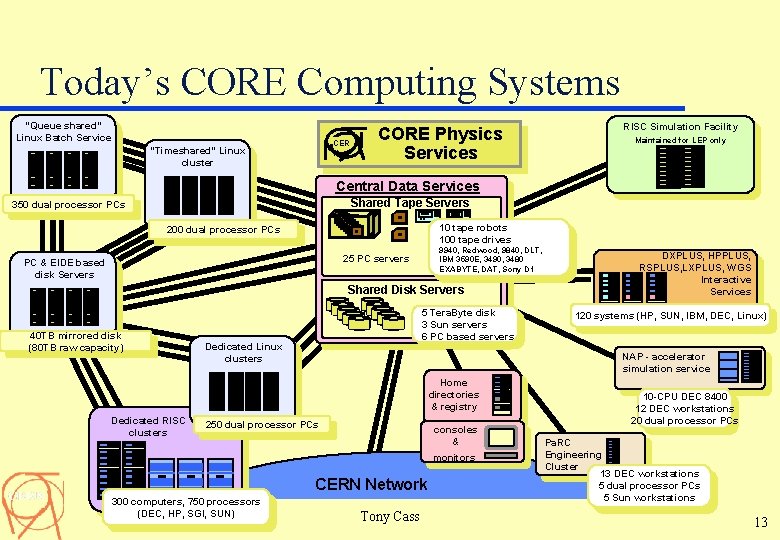

Today’s CORE Computing Systems

Diagram labels: “Queue shared” Linux Batch Service; CERN; “Timeshared” Linux cluster; CORE Physics Services; RISC Simulation Facility - Maintained for LEP only; Central Data Services; Shared Tape Servers; 350 dual processor PCs; 10 tape robots; 100 tape drives; 200 dual processor PCs; 9940, Redwood, 9840, DLT, IBM 3590E, 3490, 3480, EXABYTE, DAT, Sony D1; 25 PC servers; PC & EIDE based disk Servers; Shared Disk Servers; 40 TB mirrored disk (80 TB raw capacity); 5 TeraByte disk; 3 Sun servers; 6 PC based servers; Dedicated Linux clusters; 250 dual processor PCs; consoles & monitors; CERN Network; 300 computers, 750 processors (DEC, HP, SGI, SUN); 120 systems (HP, SUN, IBM, DEC, Linux); NAP - accelerator simulation service; Home directories & registry; Dedicated RISC clusters; DXPLUS, HPPLUS, RSPLUS, LXPLUS, WGS - Interactive Services; 10-CPU DEC 8400; 12 DEC workstations; 20 dual processor PCs; PaRC Engineering Cluster; 13 DEC workstations; 5 dual processor PCs; 5 Sun workstations.

Hardware Evolution at CERN, 1989-2001

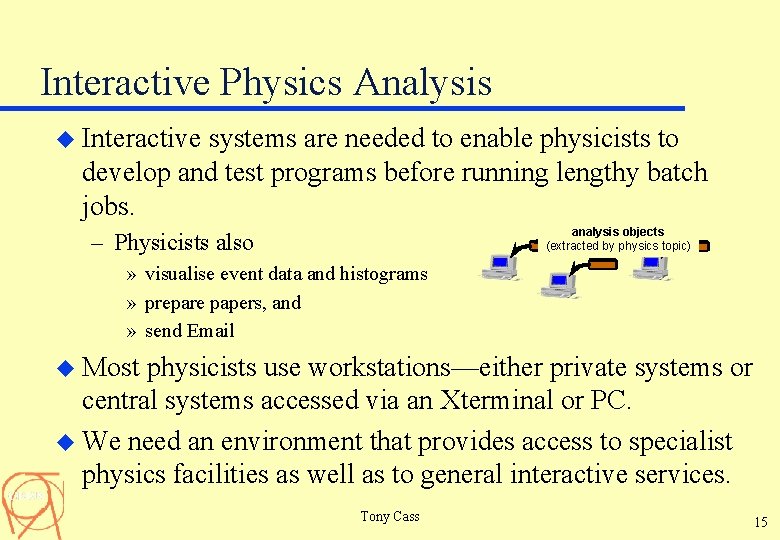

Interactive Physics Analysis

- Interactive systems are needed to enable physicists to develop and test programs before running lengthy batch jobs.
  - Physicists also
    - visualise event data and histograms
    - prepare papers, and
    - send Email
- Most physicists use workstations, either private systems or central systems accessed via an Xterminal or PC.
- We need an environment that provides access to specialist physics facilities as well as to general interactive services.

Diagram label: analysis objects (extracted by physics topic).

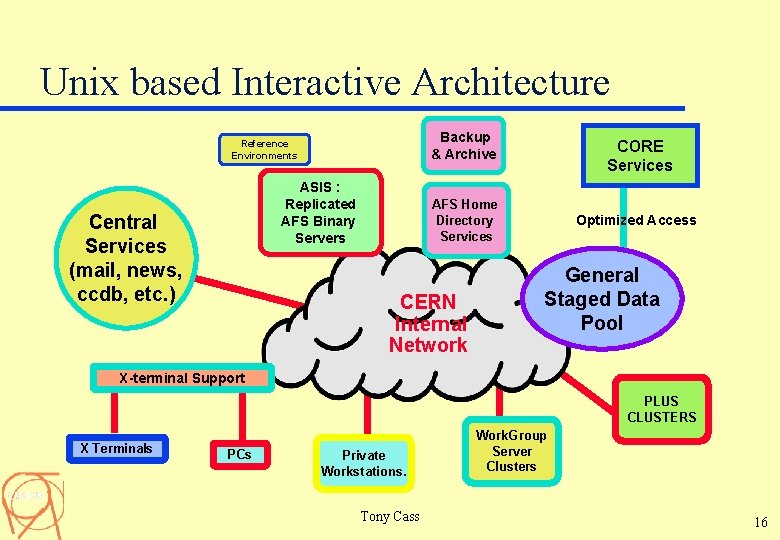

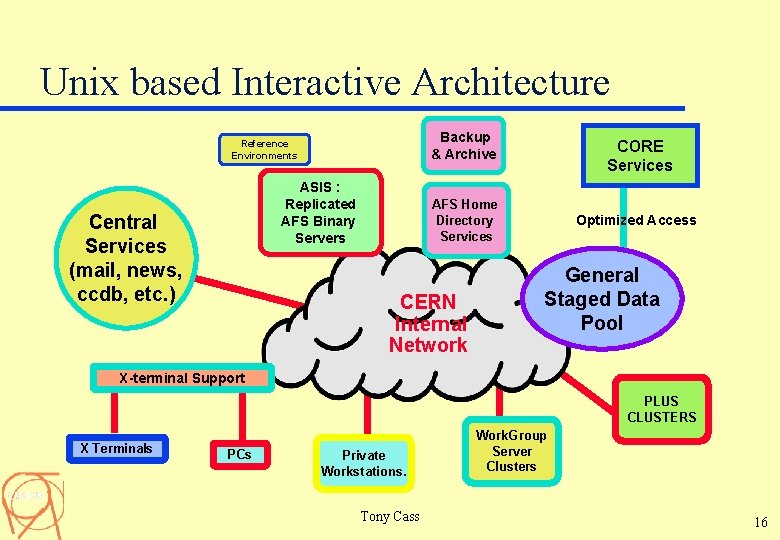

Unix based Interactive Architecture

Diagram labels: Backup & Archive; Reference Environments; ASIS: Replicated AFS Binary Servers; Central Services (mail, news, ccdb, etc.); CORE Services; AFS Home Directory Services; CERN Internal Network; Optimized Access; General Staged Data Pool; X-terminal Support; PLUS CLUSTERS; WorkGroup Server Clusters; X Terminals; PCs; Private Workstations.

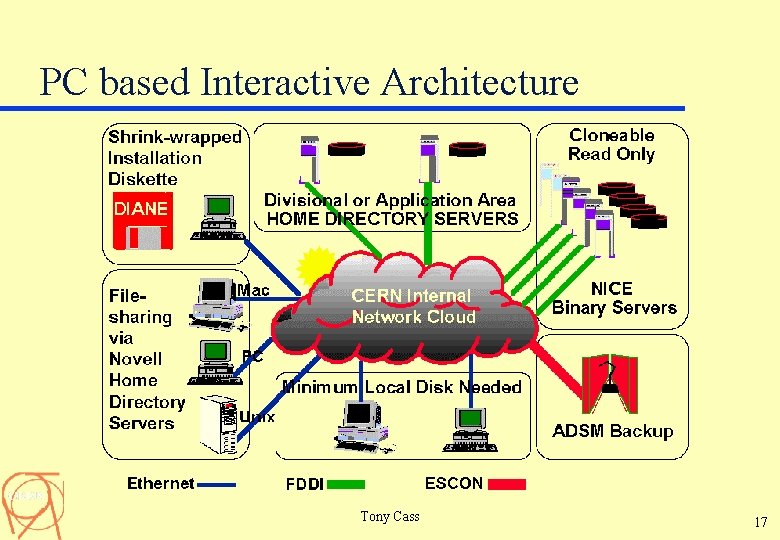

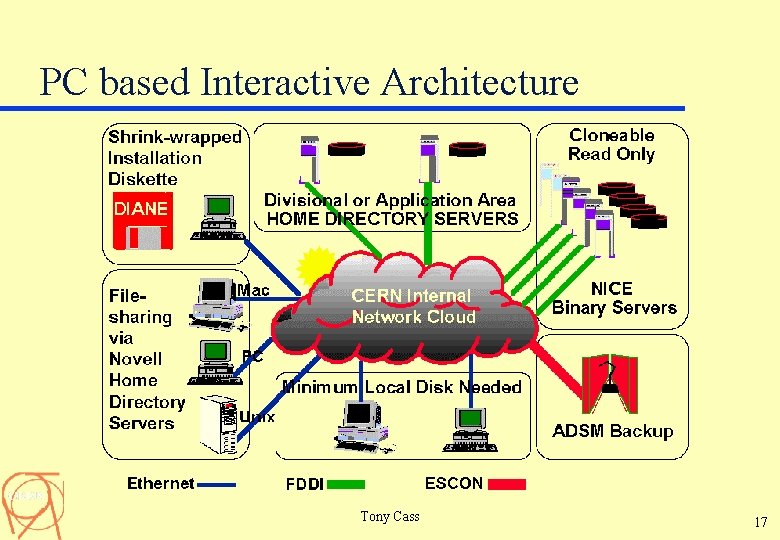

PC based Interactive Architecture

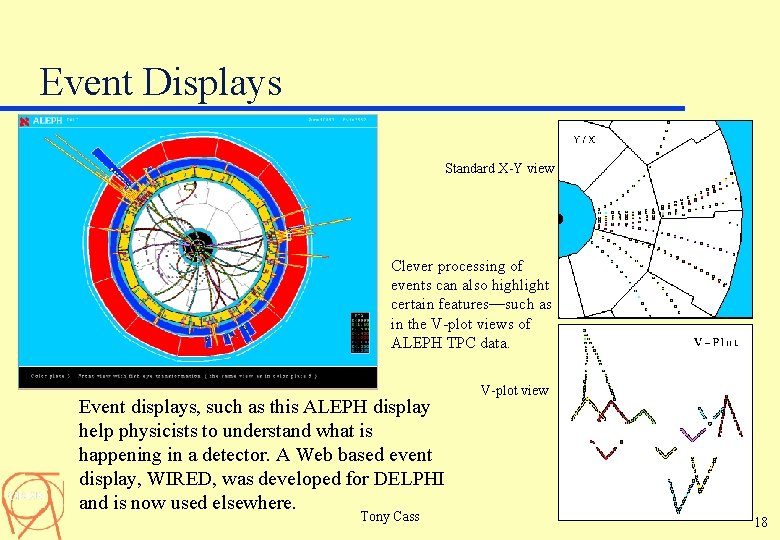

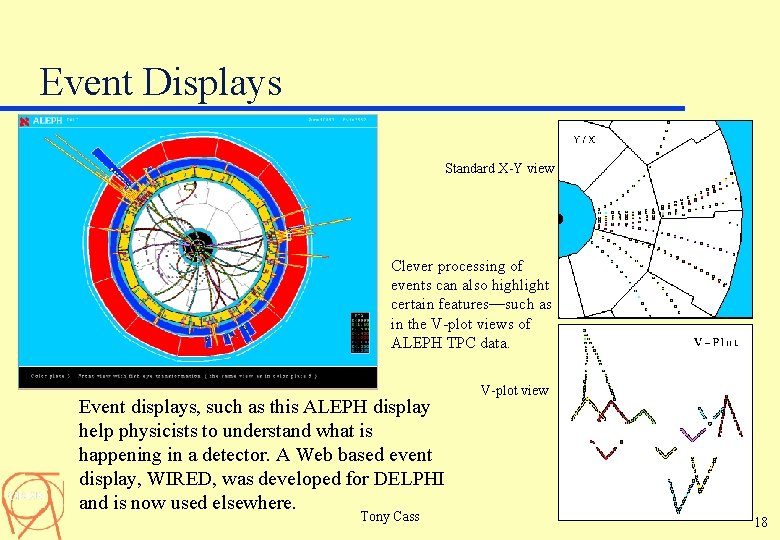

Event Displays

Event displays, such as this ALEPH display, help physicists to understand what is happening in a detector.

Clever processing of events can also highlight certain features, such as in the V-plot views of ALEPH TPC data.

A Web based event display, WIRED, was developed for DELPHI and is now used elsewhere.

Figure labels: Standard X-Y view; V-plot view.

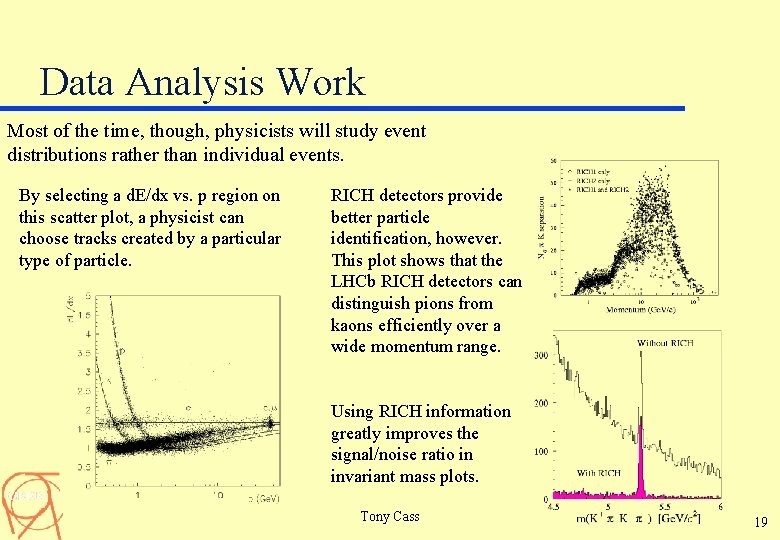

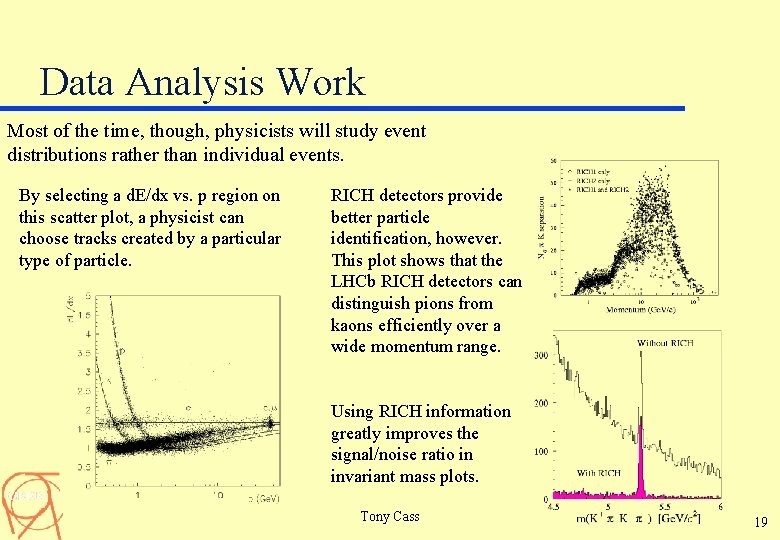

Data Analysis Work

Most of the time, though, physicists will study event distributions rather than individual events.

By selecting a dE/dx vs. p region on this scatter plot, a physicist can choose tracks created by a particular type of particle.

RICH detectors provide better particle identification, however. This plot shows that the LHCb RICH detectors can distinguish pions from kaons efficiently over a wide momentum range.

Using RICH information greatly improves the signal/noise ratio in invariant mass plots.
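To see why particle identification matters for invariant mass plots, recall that the invariant mass of a track pair depends on the mass assigned to each track: m² = (E₁ + E₂)² − |p₁ + p₂|², with E = √(|p|² + m²) in natural units. The sketch below is a minimal illustration under that standard formula; the function names and the example momenta are assumptions for illustration, not code from the lecture.

```python
import math

# Charged pion and kaon masses in GeV/c^2 (rounded).
PION_MASS_GEV = 0.13957
KAON_MASS_GEV = 0.49368


def energy(p, mass):
    """Energy of a particle with 3-momentum p (GeV/c) under a mass hypothesis."""
    px, py, pz = p
    return math.sqrt(px * px + py * py + pz * pz + mass * mass)


def invariant_mass(p1, m1, p2, m2):
    """Invariant mass (GeV/c^2) of two tracks, given a mass hypothesis for each."""
    e_sum = energy(p1, m1) + energy(p2, m2)
    px = p1[0] + p2[0]
    py = p1[1] + p2[1]
    pz = p1[2] + p2[2]
    mass_squared = e_sum * e_sum - (px * px + py * py + pz * pz)
    return math.sqrt(max(mass_squared, 0.0))  # guard against rounding below zero


if __name__ == "__main__":
    track_a = (1.0, 0.0, 0.0)   # made-up momenta in GeV/c
    track_b = (-1.0, 0.0, 0.0)
    print(invariant_mass(track_a, PION_MASS_GEV, track_b, PION_MASS_GEV))
    print(invariant_mass(track_a, KAON_MASS_GEV, track_b, PION_MASS_GEV))
```

The same pair of measured momenta gives different invariant masses under the pion-pion and kaon-pion hypotheses, so a wrong identification moves the pair away from the true mass peak and adds to the background.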

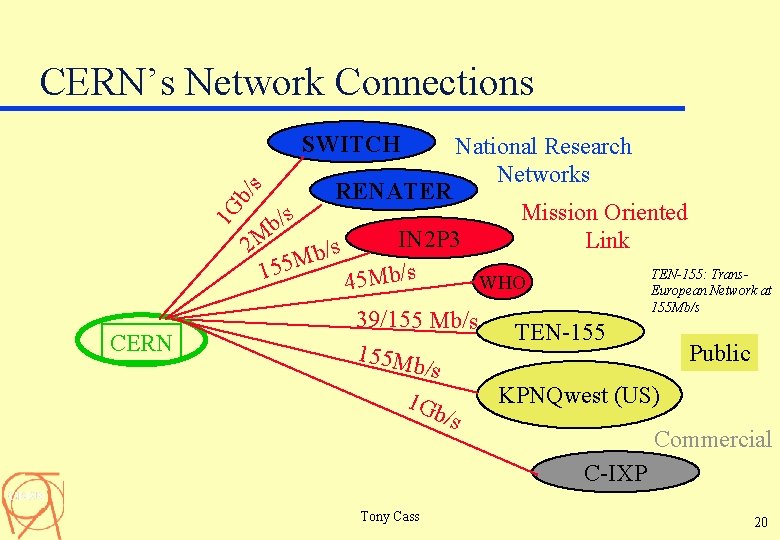

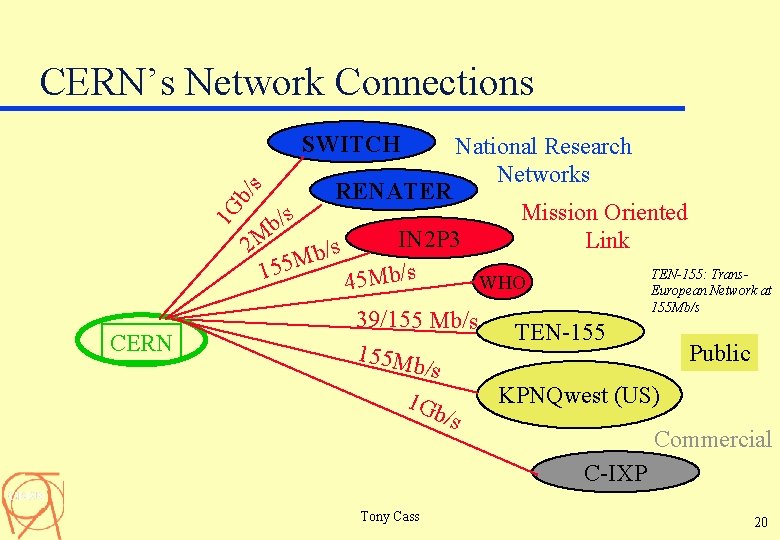

CERN’s Network Connections

Diagram labels: CERN; National Research Networks; SWITCH; RENATER; Mission Oriented; IN2P3 Link; WHO; Public; TEN-155: Trans European Network at 155 Mb/s; KPNQwest (US); Commercial; C-IXP.

Link speeds shown include 1 Gb/s, 155 Mb/s, 45 Mb/s and 39/155 Mb/s.

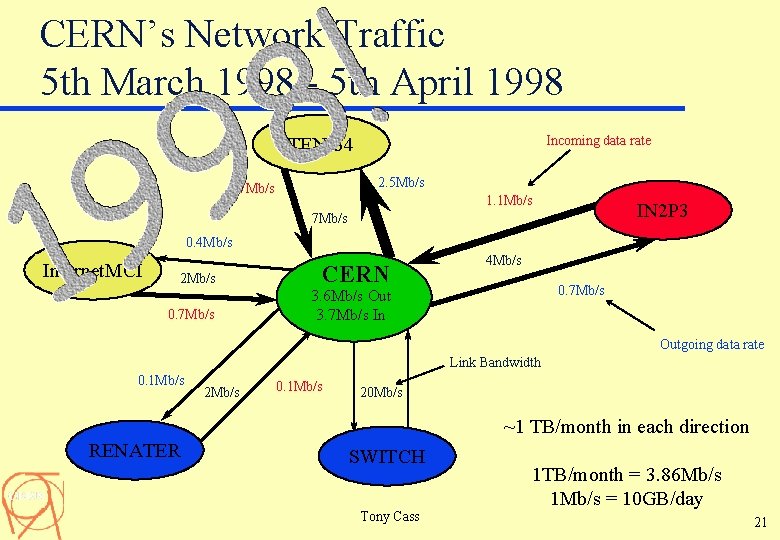

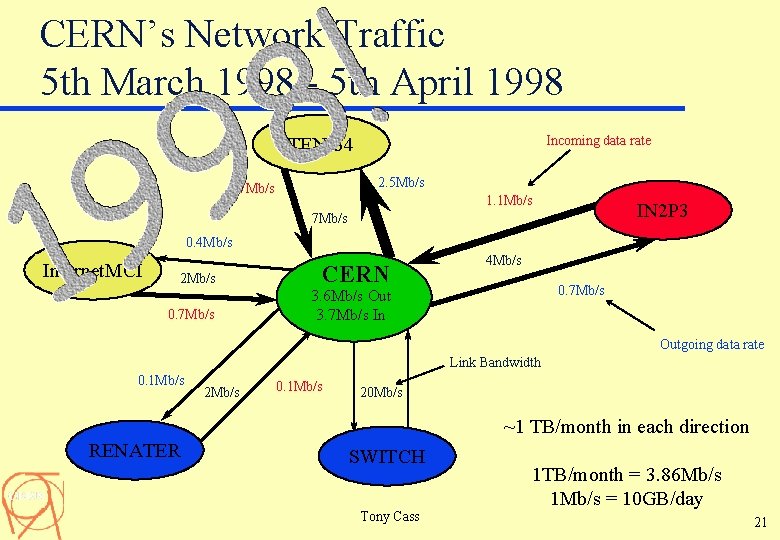

CERN’s Network Traffic, 5th March 1998 - 5th April 1998

Diagram legend: Incoming data rate; Outgoing data rate; Link Bandwidth.

Diagram labels and values: TEN-34; 2.5 Mb/s; 1.7 Mb/s; 1.1 Mb/s; IN2P3; 7 Mb/s; 0.4 Mb/s; InternetMCI; 2 Mb/s; 0.7 Mb/s; CERN; 4 Mb/s; 0.7 Mb/s; 0.1 Mb/s; 2 Mb/s; 0.1 Mb/s; 20 Mb/s; RENATER; SWITCH.

3.6 Mb/s Out, 3.7 Mb/s In; ~1 TB/month in each direction.

1 TB/month = 3.86 Mb/s; 1 Mb/s = 10 GB/day.
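The two rules of thumb quoted on this slide (1 TB/month = 3.86 Mb/s and 1 Mb/s = 10 GB/day) can be checked with a short calculation. The sketch below assumes decimal units (1 TB = 10^12 bytes, 1 Mb = 10^6 bits) and a 30-day month; the function names and the `bits_per_byte` parameter are illustrative. With 8 bits per byte the first conversion gives about 3.09 Mb/s; the slide's 3.86 Mb/s is reproduced if one counts 10 bits on the wire per byte, which is a guess at the convention used rather than something the slide states.

```python
SECONDS_PER_DAY = 86_400


def tb_per_month_to_mbps(tb_per_month, bits_per_byte=8, days_per_month=30):
    """Convert a monthly volume in TB (10^12 bytes) to an average rate in Mb/s."""
    bits = tb_per_month * 1e12 * bits_per_byte
    seconds = days_per_month * SECONDS_PER_DAY
    return bits / seconds / 1e6


def mbps_to_gb_per_day(mbps, bits_per_byte=8):
    """Convert an average rate in Mb/s to a daily volume in GB (10^9 bytes)."""
    bytes_per_second = mbps * 1e6 / bits_per_byte
    return bytes_per_second * SECONDS_PER_DAY / 1e9


if __name__ == "__main__":
    print(round(tb_per_month_to_mbps(1), 2))                    # 8 bits per byte
    print(round(tb_per_month_to_mbps(1, bits_per_byte=10), 2))  # assumed 10 bits per byte
    print(round(mbps_to_gb_per_day(1), 1))
```

Either way, the practical message is the same: a sustained rate of a few Mb/s corresponds to roughly a terabyte per month.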

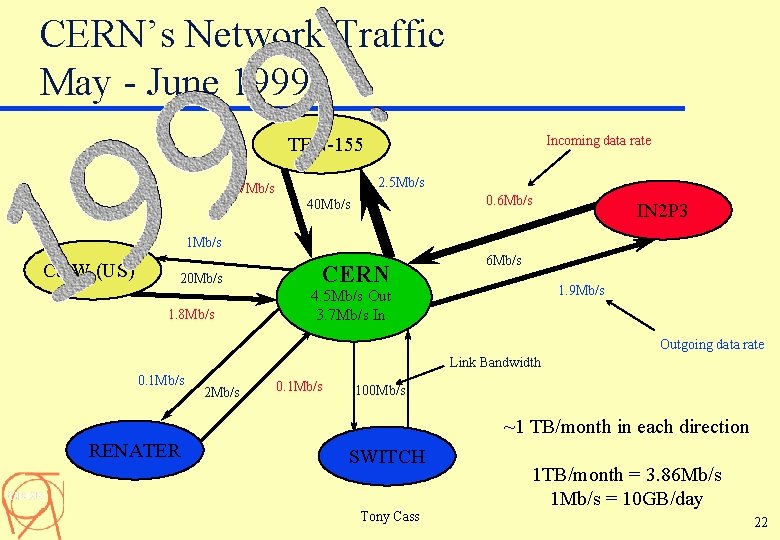

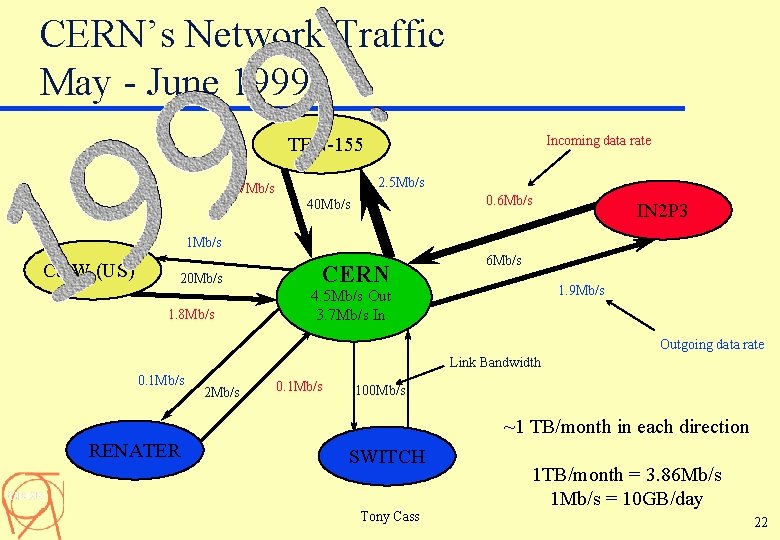

CERN’s Network Traffic, May - June 1999

Diagram legend: Incoming data rate; Outgoing data rate; Link Bandwidth.

Diagram labels and values: TEN-155; 2.5 Mb/s; 1.7 Mb/s; 0.6 Mb/s; 40 Mb/s; IN2P3; 1 Mb/s; C&W (US); 20 Mb/s; 1.8 Mb/s; CERN; 6 Mb/s; 1.9 Mb/s; 0.1 Mb/s; 2 Mb/s; 0.1 Mb/s; 100 Mb/s; RENATER; SWITCH.

4.5 Mb/s Out, 3.7 Mb/s In; ~1 TB/month in each direction.

1 TB/month = 3.86 Mb/s; 1 Mb/s = 10 GB/day.

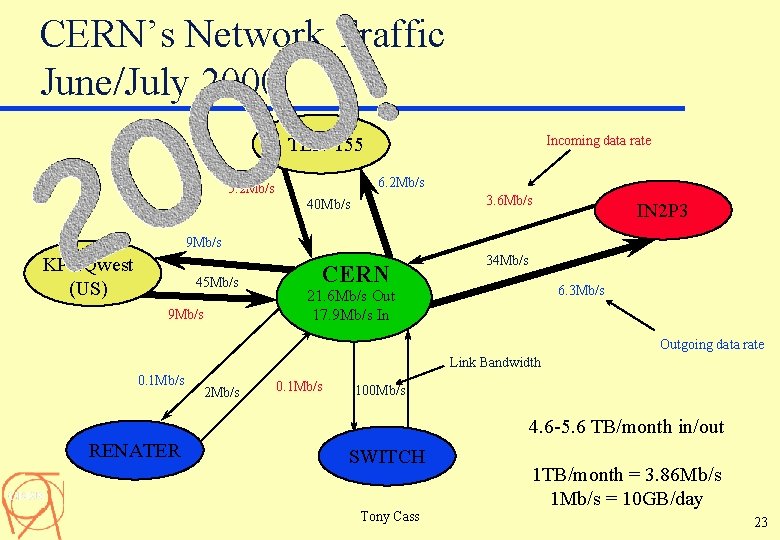

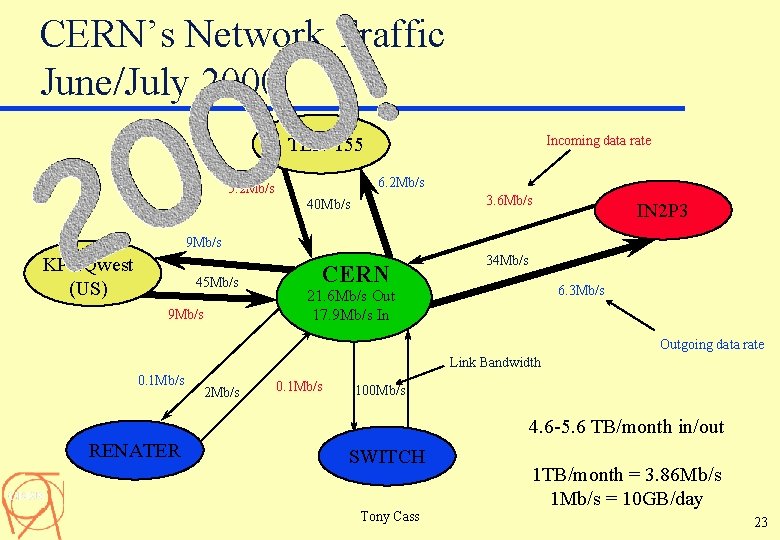

CERN’s Network Traffic, June/July 2000

Diagram legend: Incoming data rate; Outgoing data rate; Link Bandwidth.

Diagram labels and values: TEN-155; 6.2 Mb/s; 5.2 Mb/s; 3.6 Mb/s; 40 Mb/s; IN2P3; 9 Mb/s; KPNQwest (US); 45 Mb/s; 9 Mb/s; CERN; 34 Mb/s; 6.3 Mb/s; 0.1 Mb/s; 2 Mb/s; 0.1 Mb/s; 100 Mb/s; RENATER; SWITCH.

21.6 Mb/s Out, 17.9 Mb/s In; 4.6-5.6 TB/month in/out.

1 TB/month = 3.86 Mb/s; 1 Mb/s = 10 GB/day.

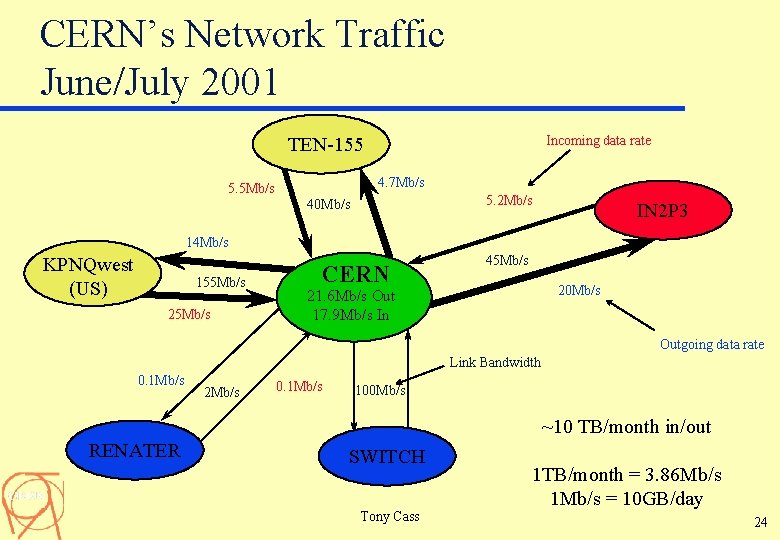

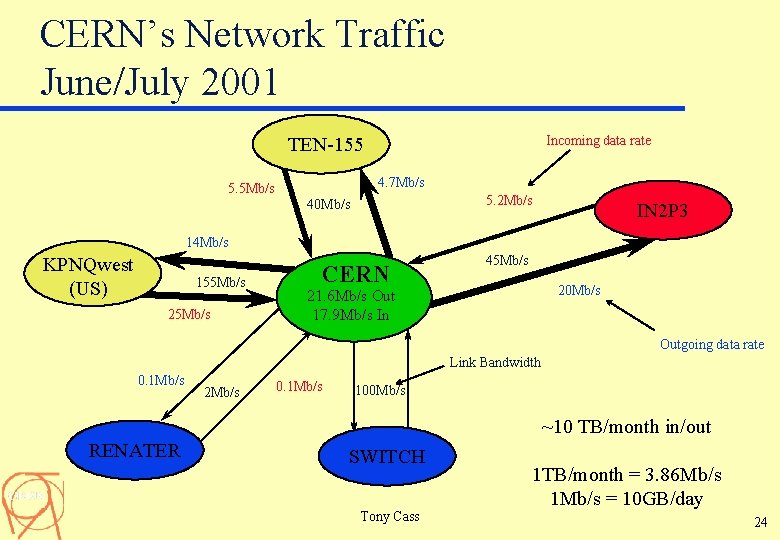

CERN’s Network Traffic, June/July 2001

Diagram legend: Incoming data rate; Outgoing data rate; Link Bandwidth.

Diagram labels and values: TEN-155; 4.7 Mb/s; 5.5 Mb/s; 5.2 Mb/s; 40 Mb/s; IN2P3; 14 Mb/s; KPNQwest (US); 155 Mb/s; 25 Mb/s; CERN; 45 Mb/s; 20 Mb/s; 0.1 Mb/s; 2 Mb/s; 0.1 Mb/s; 100 Mb/s; RENATER; SWITCH.

21.6 Mb/s Out, 17.9 Mb/s In; ~10 TB/month in/out.

1 TB/month = 3.86 Mb/s; 1 Mb/s = 10 GB/day.

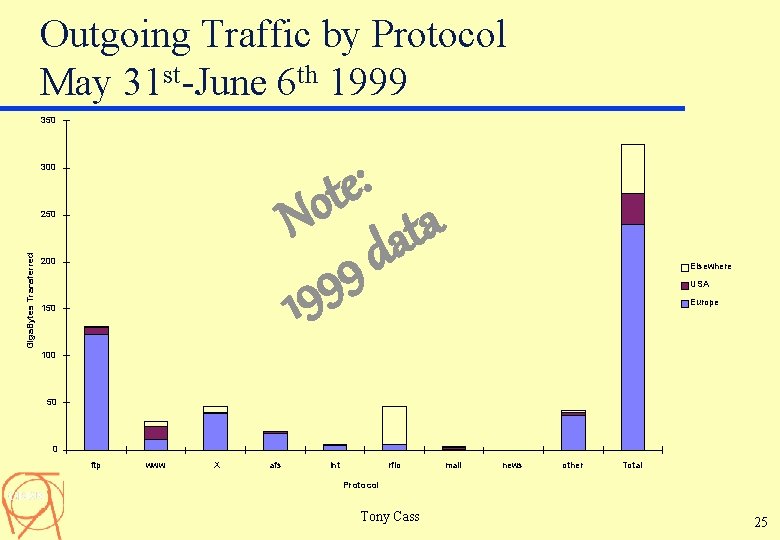

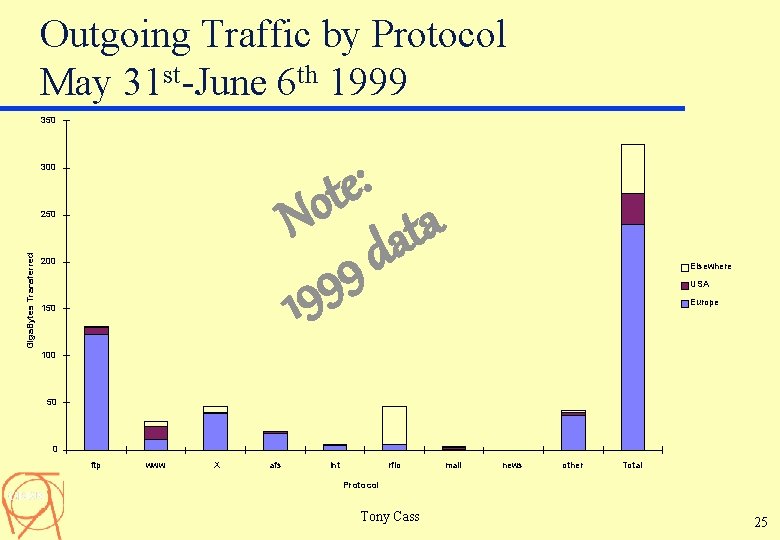

Outgoing Traffic by Protocol, May 31st - June 6th 1999

Bar chart (note: 1999 data): GigaBytes transferred per protocol (ftp, www, X, afs, int, rfio, mail, news, other, Total), split by region (Europe, USA, Elsewhere).

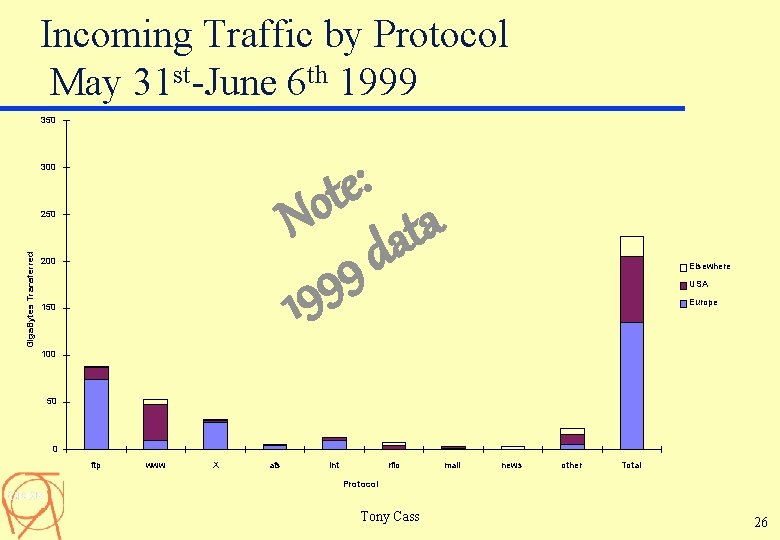

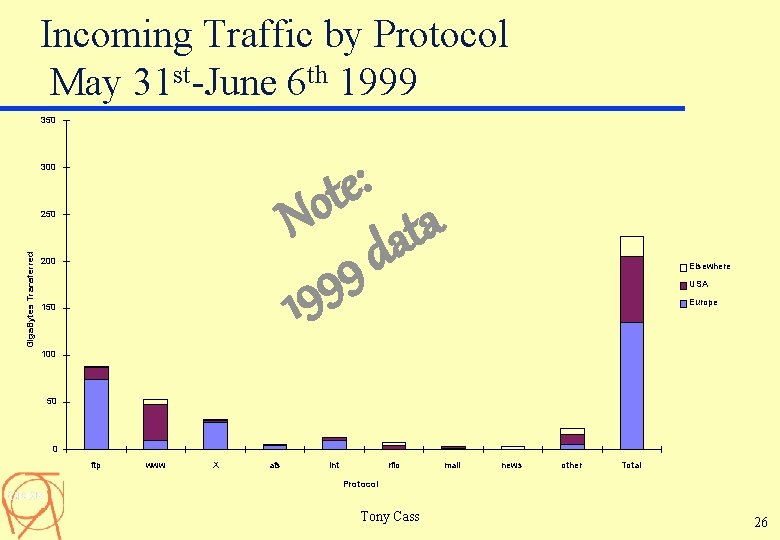

Incoming Traffic by Protocol, May 31st - June 6th 1999

Bar chart (note: 1999 data): GigaBytes transferred per protocol (ftp, www, X, afs, int, rfio, mail, news, other, Total), split by region (Europe, USA, Elsewhere).

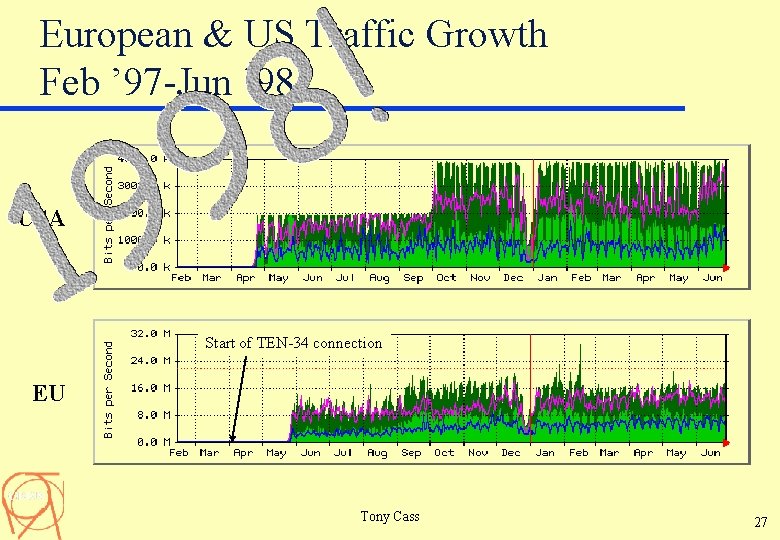

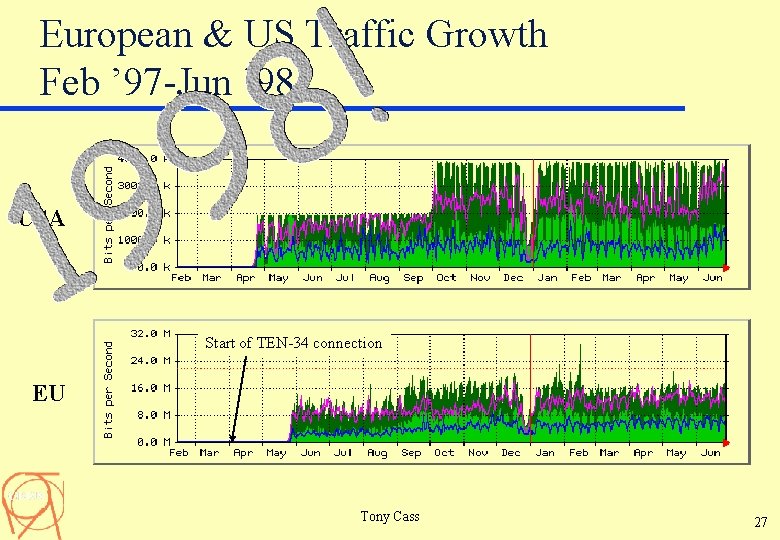

European & US Traffic Growth, Feb ’97 - Jun ’98

Chart series: USA; EU. Annotation: Start of TEN-34 connection.

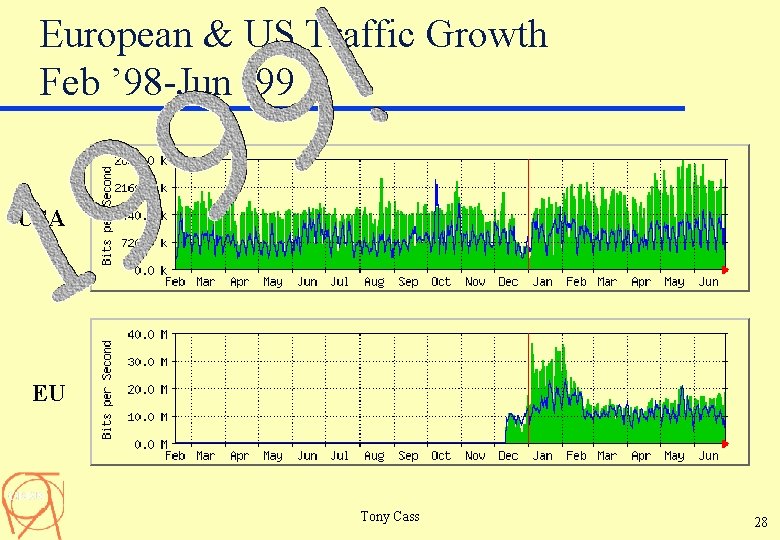

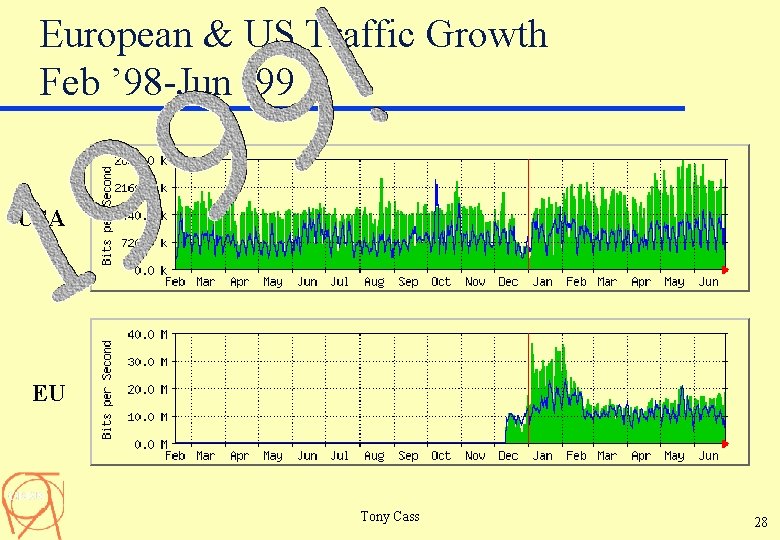

European & US Traffic Growth, Feb ’98 - Jun ’99

Chart series: USA; EU.

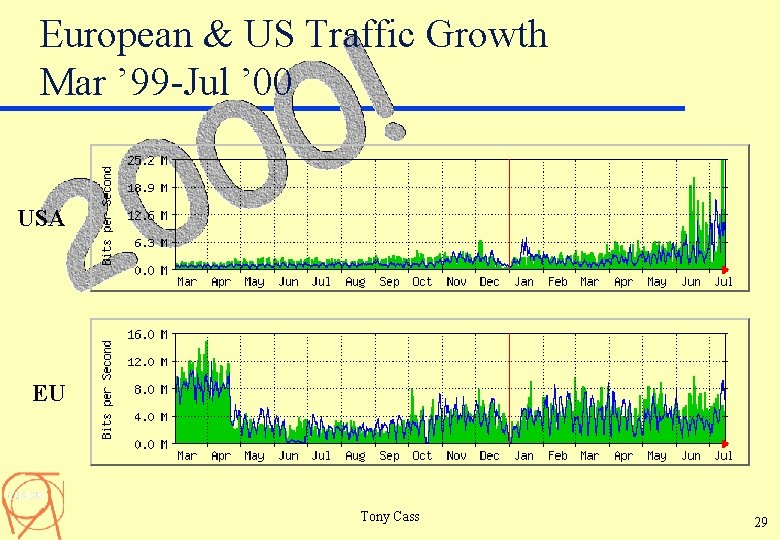

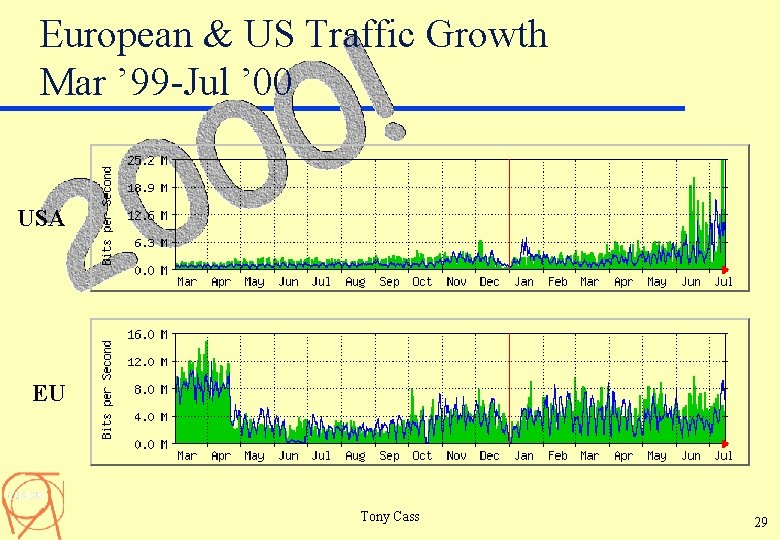

European & US Traffic Growth, Mar ’99 - Jul ’00

Chart series: USA; EU.

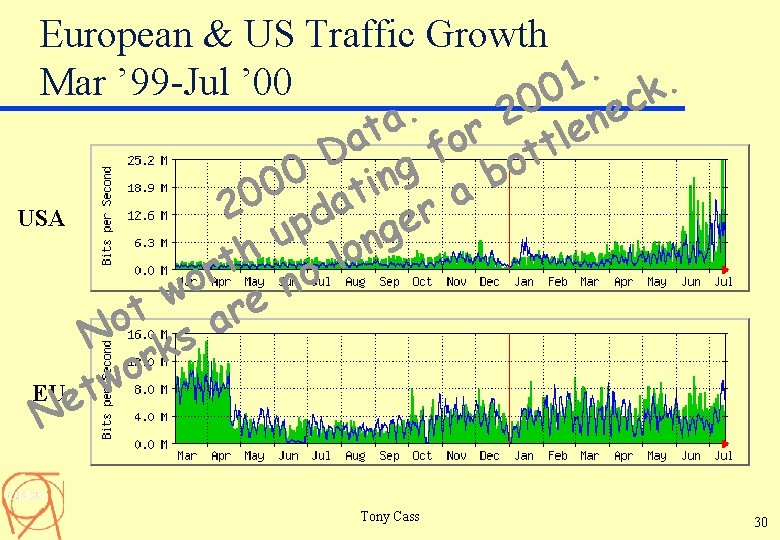

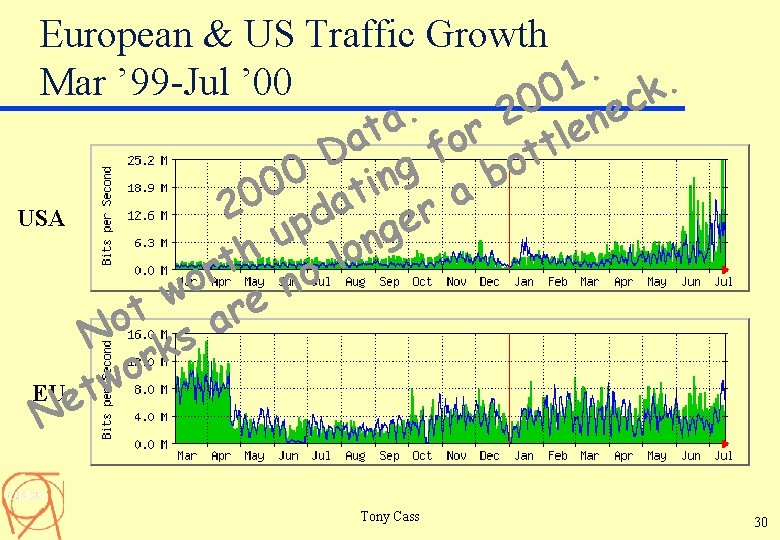

European & US Traffic Growth, Mar ’99 - Jul ’00

Chart series: USA; EU.

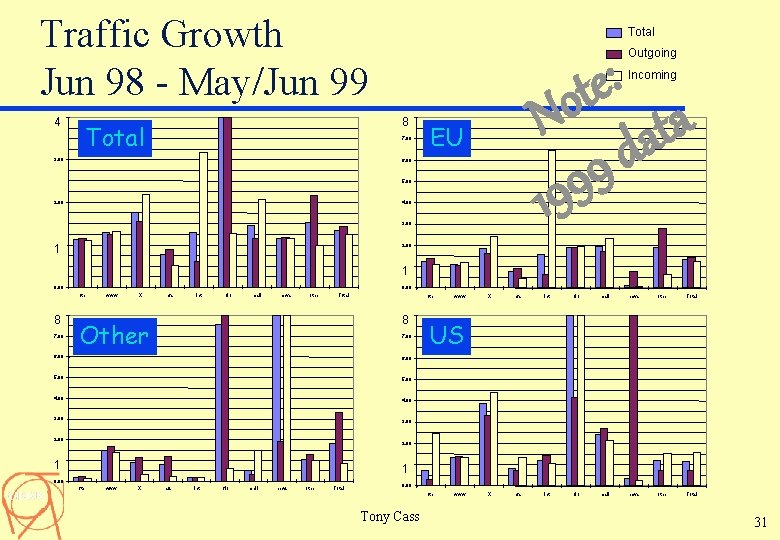

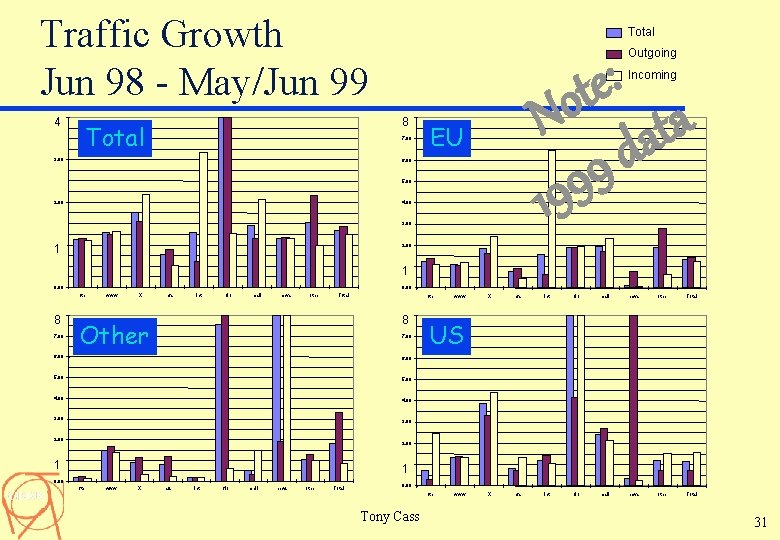

Traffic Growth, Jun 98 - May/Jun 99

Four bar-chart panels (Total, EU, US, Other; note: 1999 data) showing incoming and outgoing traffic per protocol (ftp, www, X, afs, int, rfio, mail, news, other, Total).

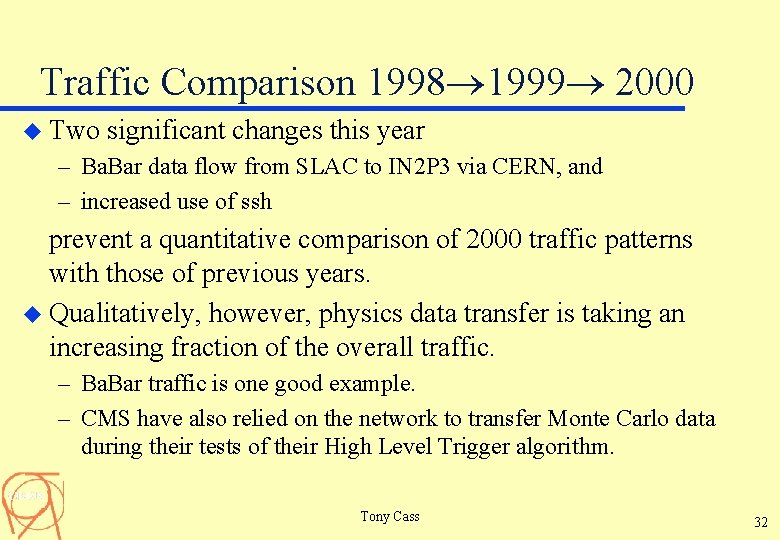

Traffic Comparison 1998 1999 2000

- Two significant changes this year
  - BaBar data flow from SLAC to IN2P3 via CERN, and
  - increased use of ssh

  prevent a quantitative comparison of 2000 traffic patterns with those of previous years.
- Qualitatively, however, physics data transfer is taking an increasing fraction of the overall traffic.
  - BaBar traffic is one good example.
  - CMS have also relied on the network to transfer Monte Carlo data during their tests of their High Level Trigger algorithm.

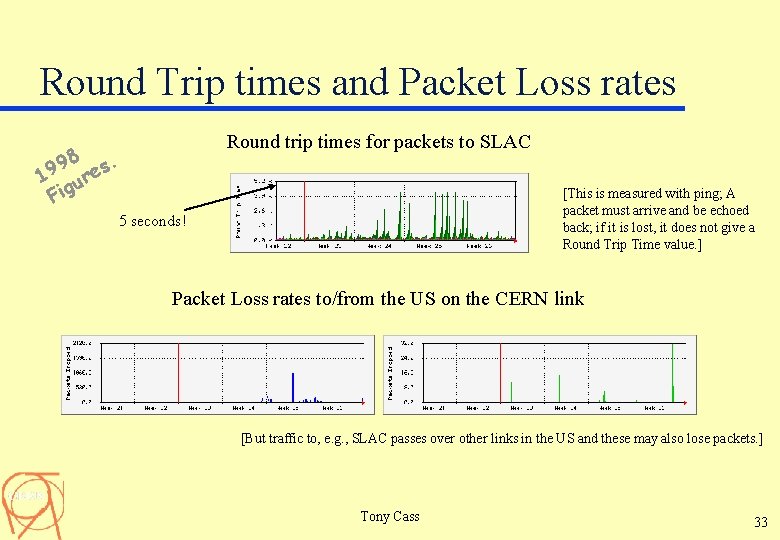

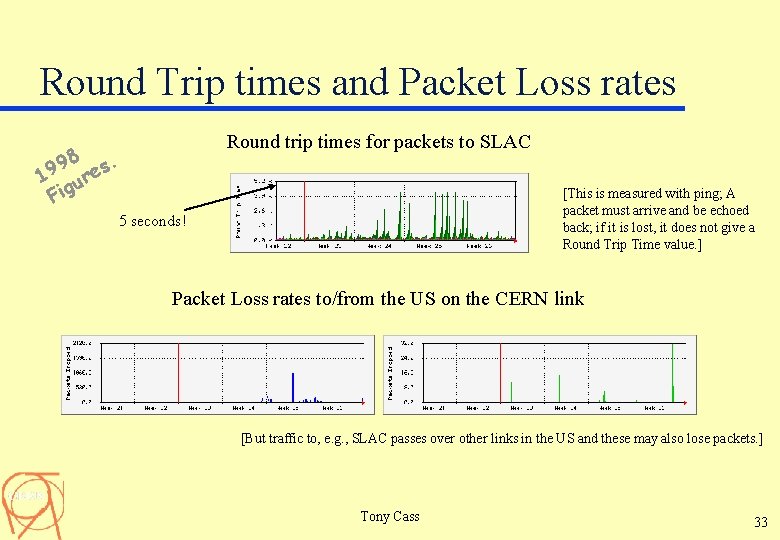

Round Trip times and Packet Loss rates

Note: 1998 figures.

Round trip times for packets to SLAC. [This is measured with ping. A packet must arrive and be echoed back; if it is lost, it does not give a Round Trip Time value.] Chart annotation: 5 seconds!

Packet Loss rates to/from the US on the CERN link. [But traffic to, e.g., SLAC passes over other links in the US and these may also lose packets.]
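The point about lost packets can be made concrete: a lost probe contributes to the loss rate but yields no round trip time, so the two quantities are computed over different sets of probes. The sketch below is a minimal illustration; the function name `summarise_pings` and the sample values are made up, and a lost probe is represented by `None`.

```python
from statistics import mean
from typing import List, Optional, Tuple


def summarise_pings(rtts_ms: List[Optional[float]]) -> Tuple[Optional[float], float]:
    """Return (mean RTT in ms over answered probes, packet loss fraction).

    Each entry is the round trip time of one probe, or None if no echo
    came back. Lost probes count towards the loss fraction only.
    """
    if not rtts_ms:
        raise ValueError("no probes sent")
    answered = [r for r in rtts_ms if r is not None]
    loss_fraction = 1.0 - len(answered) / len(rtts_ms)
    mean_rtt = mean(answered) if answered else None
    return mean_rtt, loss_fraction


if __name__ == "__main__":
    # Made-up sample: four probes, one of them lost.
    print(summarise_pings([180.0, None, 220.0, 200.0]))
```

One consequence is that a heavily congested link can show a deceptively modest mean round trip time, because the worst-affected packets never return to be measured.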

Looking at Data: Summary

- Physics experiments generate data!
  - and physicists need to simulate real data to model physics processes and to understand their detectors.
- Physics data must be processed, stored and manipulated.
- [Central] computing facilities for physicists must be designed to take into account the needs of the data processing stages
  - from generation through reconstruction to analysis.
- Physicists also need to
  - communicate with outside laboratories and institutes, and to
  - have access to general interactive services.