FUL: Incorporating phonological theory into ASR


1 FUL: Incorporating phonological theory into ASR. Aditi Lahiri (Prof in Oxford) and Henning Reetz (Prof in Frankfurt), presented by Jacques Koreman (ISK). Presentation, speech group, IET at NTNU

2 Acknowledgment and responsibilities • Some of the slides (in Times New Roman) were made available by Henning Reetz • The ideas are all Aditi’s and Henning’s • Their (mis)representation is mine…

3 What is FUL, and why is it interesting? FUL stands for featurally underspecified lexicon. This presentation addresses its main characteristics: • Underspecified features are omitted from the underlying representation • Non-stochastic approach, in contrast to any current techniques in ASR • Psychological reality proven by psycholinguistic and other evidence

4 An example of underspecification Underspecification can help to deal with assimilation, as for instance in spontaneous speech: "green bag" and "green grass" are often realised as "greem bag" and "greeng grass", while "lame dog" and "long day" are never realised as "lane dog" and "lon day". Why? Because /n/ is underspecified for place and can therefore borrow a place feature from its neighbour, while /m/ is [LABIAL]

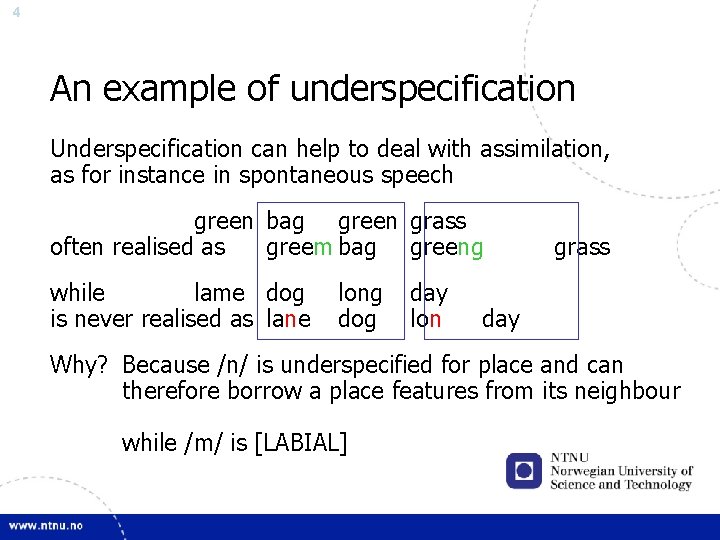

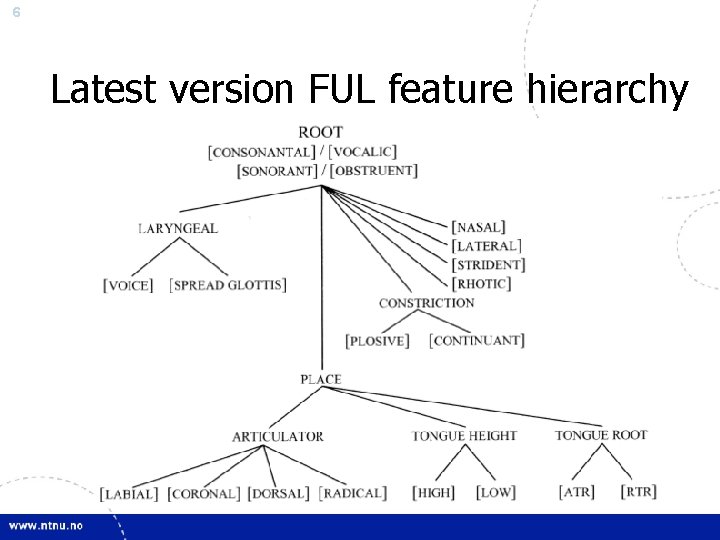

5 FUL featural specification The specification of features is constrained by universal properties and language-specific requirements: for German, [ABRUPT] and [CORONAL] (cf. "green") are not specified in the lexicon. • FUL uses monovalent, not binary features • V and C share the same place features The types of features are very much under debate: binary or monovalent, fully specified or underspecified, V and C features together or separate, feature names? On the next slide, the latest version of the feature hierarchy in FUL is shown.

6 Latest version FUL feature hierarchy

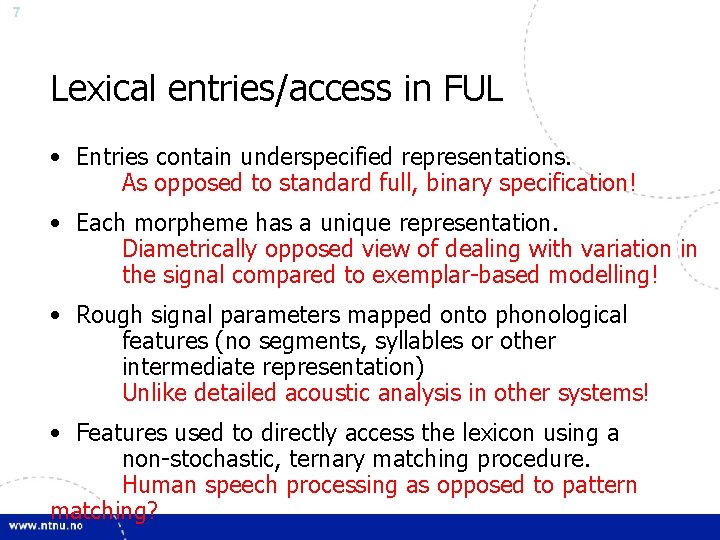

7 Lexical entries/access in FUL • Entries contain underspecified representations, as opposed to standard full, binary specification. • Each morpheme has a unique representation: a view of variation in the signal that is diametrically opposed to exemplar-based modelling. • Rough signal parameters are mapped onto phonological features (no segments, syllables or other intermediate representation), unlike the detailed acoustic analysis in other systems. • Features are used to directly access the lexicon using a non-stochastic, ternary matching procedure. Human speech processing as opposed to pattern matching?

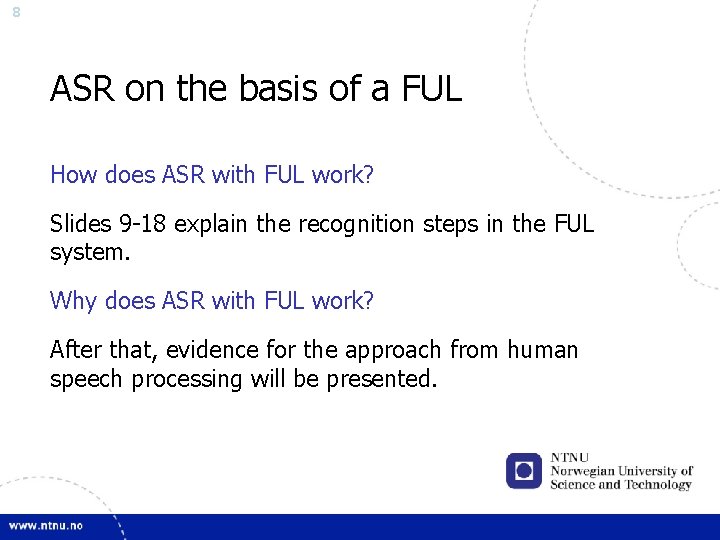

8 ASR on the basis of a FUL How does ASR with FUL work? Slides 9-18 explain the recognition steps in the FUL system. Why does ASR with FUL work? After that, evidence for the approach from human speech processing will be presented.

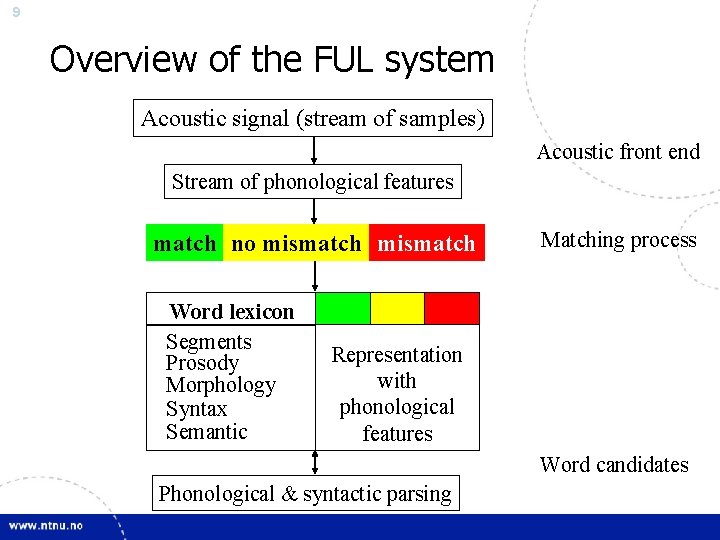

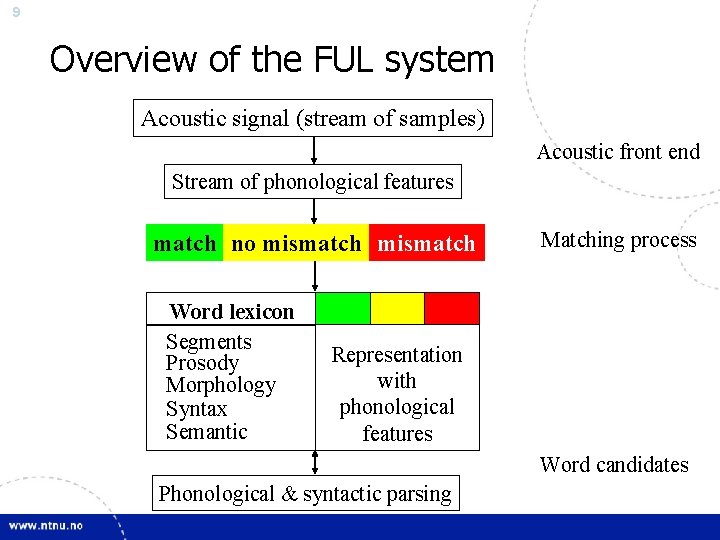

9 Overview of the FUL system: Acoustic signal (stream of samples) → Acoustic front end → Stream of phonological features → Matching process (match / no mismatch) against the word lexicon (representation with phonological features) → Word candidates → Phonological & syntactic parsing. Also shown in the diagram: Segments, Prosody, Morphology, Syntax, Semantic.

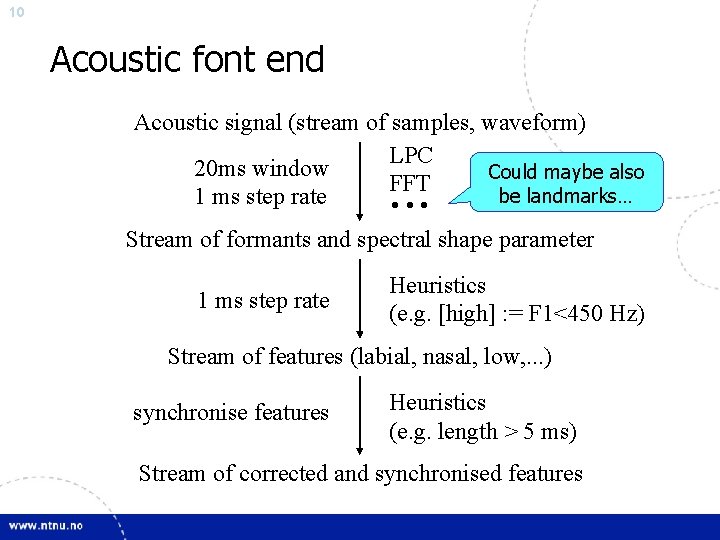

10 Acoustic front end: Acoustic signal (stream of samples, waveform) → LPC (20 ms window, 1 ms step rate; could maybe also be FFT, landmarks…) → Stream of formants and spectral shape parameters (1 ms step rate) → Heuristics (e.g. [high] := F1 < 450 Hz) → Stream of features (labial, nasal, low, ...) → Synchronise features, with heuristics (e.g. length > 5 ms) → Stream of corrected and synchronised features

11 Acoustic front end... parameter extraction: speech signal → formants → heuristics, e.g. [high] := F1 < 450 Hz
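The threshold heuristic on this slide can be sketched in a few lines. This is only an illustration, not the FUL implementation: the `[high]` rule (F1 < 450 Hz) is taken from the slide, while the `[low]` threshold, the function names, and the dictionary layout of a formant frame are assumptions made for the example.

```python
from typing import Dict, List, Set

HIGH_F1_MAX_HZ = 450.0  # from the slide: [high] := F1 < 450 Hz
LOW_F1_MIN_HZ = 650.0   # ASSUMPTION: illustrative value, not given in the slides


def frame_to_features(formants: Dict[str, float]) -> Set[str]:
    """Map one frame of formant values onto monovalent features."""
    features: Set[str] = set()
    f1 = formants["F1"]
    if f1 < HIGH_F1_MAX_HZ:
        features.add("high")
    elif f1 > LOW_F1_MIN_HZ:
        features.add("low")
    # A frame between the two thresholds simply carries neither feature.
    return features


def stream_to_features(frames: List[Dict[str, float]]) -> List[Set[str]]:
    """Convert a stream of formant frames (e.g. one per 1 ms step) to feature sets."""
    return [frame_to_features(frame) for frame in frames]
```

Because the features are monovalent, a frame is represented by the set of features that are present; absence of a feature is not recorded as a negative value.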

![Slide 12: Acoustic front end, phonological features (son, high, low)](https://slidetodoc.com/presentation_image_h/05263086662c78022aab57c49f79a574/image-12.jpg)

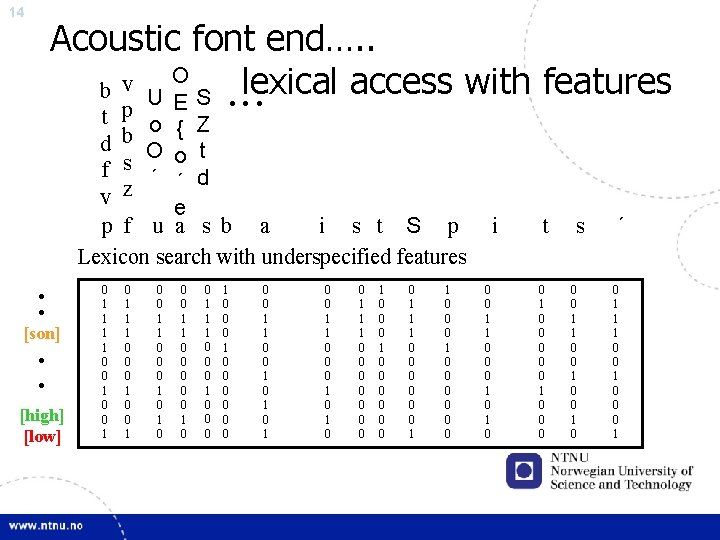

12 Acoustic front end... to features. Phonological features: [son], [high], [low], ...

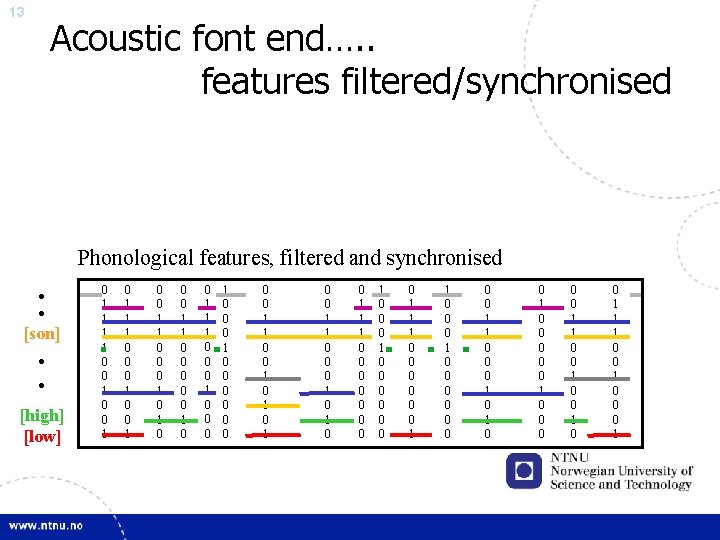

13 Acoustic front end... features filtered/synchronised. Phonological features, filtered and synchronised: [son], [high], [low], ... (figure: binary feature-by-time matrix, one row per feature)
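One way to picture the filtering step is as run-length cleaning of each binary feature track. The sketch below assumes one value per 1 ms frame and applies the "length > 5 ms" heuristic mentioned for the front end; the function name and the choice to zero out short runs are assumptions, and the actual FUL front end may correct and synchronise features differently.

```python
from typing import List


def filter_short_runs(track: List[int], min_len: int = 5) -> List[int]:
    """Zero out runs of 1s that are not longer than min_len frames.

    With a 1 ms step rate, min_len=5 keeps only feature activations
    lasting more than 5 ms.
    """
    out = list(track)
    i = 0
    n = len(track)
    while i < n:
        if track[i] == 1:
            # Find the end of the current run of 1s.
            j = i
            while j < n and track[j] == 1:
                j += 1
            if (j - i) <= min_len:
                for k in range(i, j):
                    out[k] = 0
            i = j
        else:
            i += 1
    return out
```

For example, a 2-frame blip of `[high]` would be removed, while a 6-frame stretch would survive unchanged.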

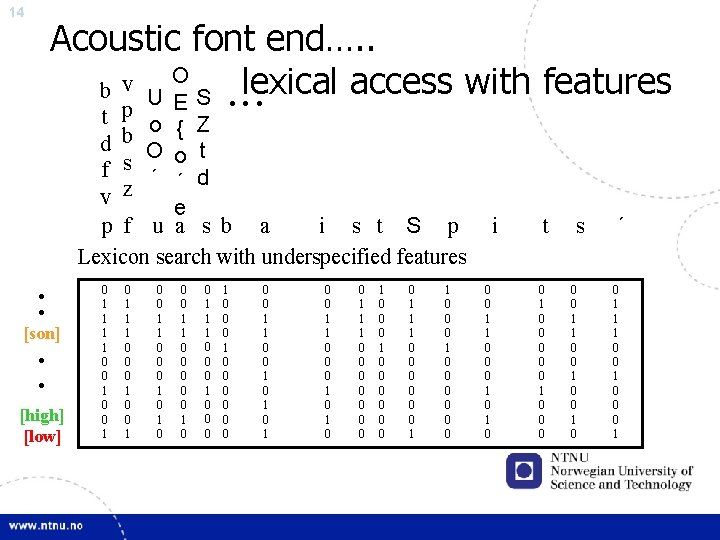

14 Acoustic front end... lexical access with features. Lexicon search with underspecified features: [son], [high], [low], ... (figure: filtered binary feature matrix shown alongside candidate segments from the lexicon)

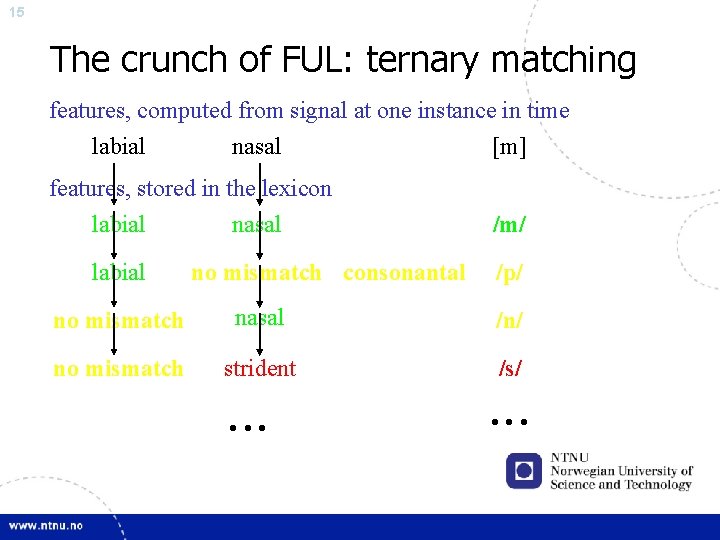

15 The crunch of FUL: ternary matching. Features computed from the signal at one instance in time: [m] = labial, nasal. Features stored in the lexicon: /m/ = labial, nasal; /p/ = labial, consonantal; /n/ = nasal; /s/ = strident; ... (diagram: the signal features are compared with each lexical entry; several comparisons are labelled "no mismatch")

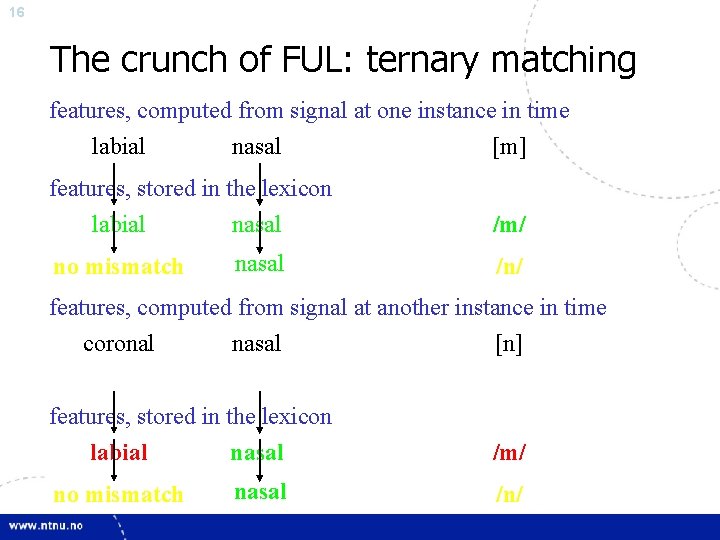

16 The crunch of FUL: ternary matching. Features computed from the signal at one instance in time: [m] = labial, nasal. Features stored in the lexicon: /m/ = labial, nasal; /n/ = nasal. Result for /n/: no mismatch. Features computed from the signal at another instance in time: [n] = coronal, nasal. Features stored in the lexicon: /m/ = labial, nasal; /n/ = nasal. Result for /n/: again no mismatch, because /n/ has no place feature in the lexicon.
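The three-way comparison can be sketched per feature as follows. This is a minimal illustration under stated assumptions: the conflict table below is a hypothetical, place-only fragment chosen to reproduce the [m]/[n] examples on the slides, and the names `Outcome`, `CONFLICTS`, `compare_feature` and `is_rejected` are invented for the sketch.

```python
from enum import Enum
from typing import Dict, Set


class Outcome(Enum):
    MATCH = "match"
    NO_MISMATCH = "no mismatch"
    MISMATCH = "mismatch"


# ASSUMPTION: illustrative conflict table (signal feature -> conflicting
# lexical features). Coronal is never stored in the lexicon, so nothing
# extracted from the signal can conflict with it.
CONFLICTS: Dict[str, Set[str]] = {
    "coronal": {"labial", "dorsal"},
    "labial": {"dorsal"},
    "dorsal": {"labial"},
}


def compare_feature(signal_feature: str, lexical_features: Set[str]) -> Outcome:
    """Ternary comparison of one signal feature with a lexical entry."""
    if signal_feature in lexical_features:
        return Outcome.MATCH
    if CONFLICTS.get(signal_feature, set()) & lexical_features:
        return Outcome.MISMATCH
    return Outcome.NO_MISMATCH


def is_rejected(signal_features: Set[str], lexical_features: Set[str]) -> bool:
    """A lexical entry is dropped as soon as any signal feature mismatches."""
    return any(
        compare_feature(f, lexical_features) is Outcome.MISMATCH
        for f in signal_features
    )
```

With this table, a signal [m] (labial, nasal) does not reject lexical /n/ (nasal), whereas a signal [n] (coronal, nasal) rejects lexical /m/ (labial, nasal). That asymmetry is what lets "green bag" surface as "greem bag" without losing access to "green".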

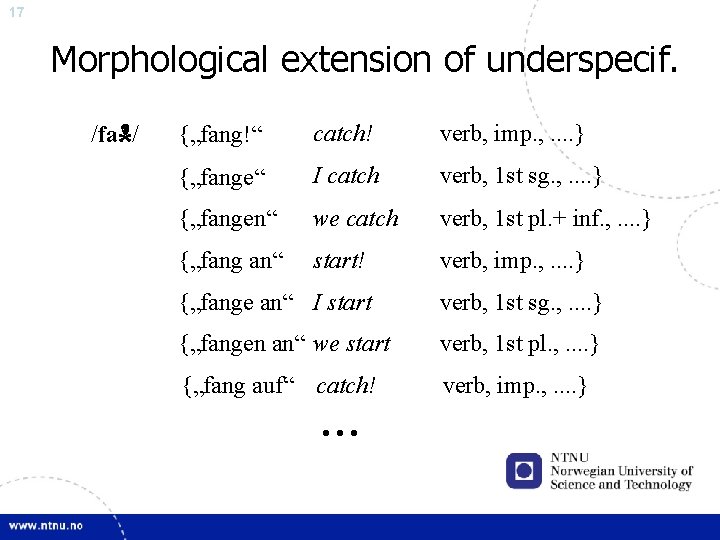

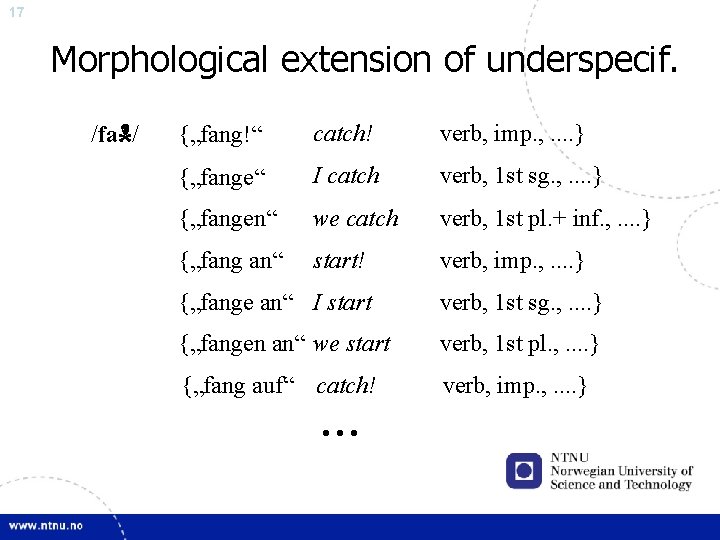

17 Morphological extension of underspecification: /faŋ/ → {„fang!“ catch! verb, imp., ...} {„fange“ I catch, verb, 1st sg., ...} {„fangen“ we catch, verb, 1st pl. + inf., ...} {„fang an“ start! verb, imp., ...} {„fange an“ I start, verb, 1st sg., ...} {„fangen an“ we start, verb, 1st pl., ...} {„fang auf“ catch! verb, imp., ...} • • •

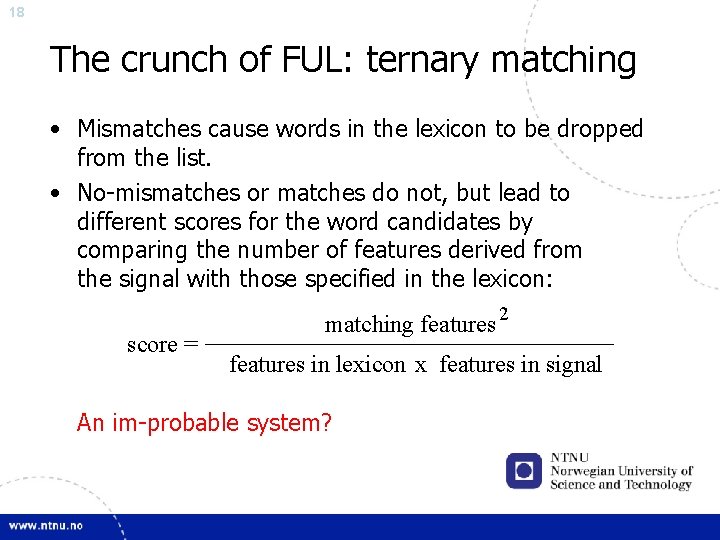

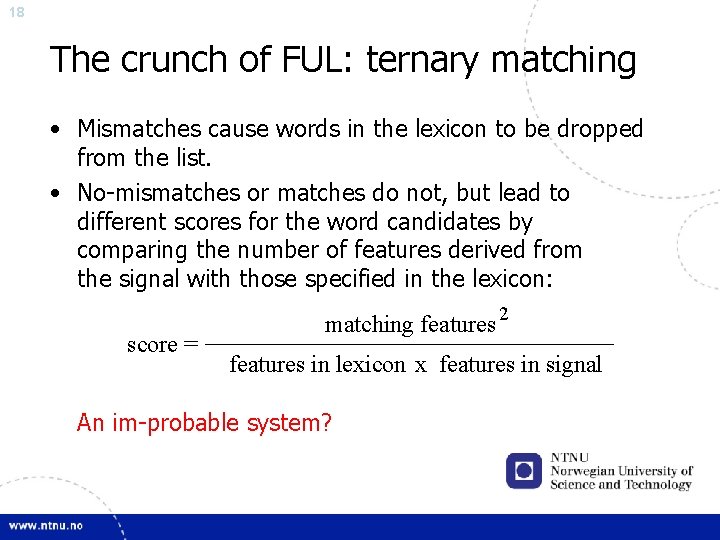

18 The crunch of FUL: ternary matching • Mismatches cause words in the lexicon to be dropped from the list. • No-mismatches or matches do not, but lead to different scores for the word candidates by comparing the number of features derived from the signal with those specified in the lexicon: $\text{score} = \dfrac{(\text{matching features})^2}{(\text{features in lexicon}) \times (\text{features in signal})}$
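The scoring rule (squared number of matching features, divided by the product of the number of lexical features and the number of signal features) can be tried out with a short sketch. The function names, the toy lexicon, and the treatment of an empty feature set (score 0.0) are assumptions for illustration only; the sketch scores single feature sets, not whole words, and does not perform the mismatch pruning described in the first bullet.

```python
from typing import Dict, List, Set, Tuple


def ful_score(signal_features: Set[str], lexical_features: Set[str]) -> float:
    """score = (matching features)^2 / (features in lexicon * features in signal)."""
    denominator = len(lexical_features) * len(signal_features)
    if denominator == 0:
        # ASSUMPTION: an empty feature set on either side scores 0.0.
        return 0.0
    n_matching = len(signal_features & lexical_features)
    return n_matching ** 2 / denominator


def rank_candidates(
    signal_features: Set[str], lexicon: Dict[str, Set[str]]
) -> List[Tuple[str, float]]:
    """Rank lexical entries by descending score (ties broken by name)."""
    scored = [(name, ful_score(signal_features, feats)) for name, feats in lexicon.items()]
    return sorted(scored, key=lambda item: (-item[1], item[0]))


# Hypothetical toy lexicon based on the segments used on the slides.
toy_lexicon = {"/m/": {"labial", "nasal"}, "/n/": {"nasal"}}
ranking = rank_candidates({"labial", "nasal"}, toy_lexicon)
```

For a signal [m] (labial, nasal), /m/ scores 2²/(2×2) = 1.0 and /n/ scores 1²/(1×2) = 0.5, so both candidates stay in the list but the fully matching one ranks higher.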

19 An im-probable system? Evidence. FUL stands for featurally underspecified lexicon. • Underspecified features are omitted from the underlying representation • Non-stochastic approach, in contrast to any current techniques in ASR • Psychological reality proven by psycholinguistic and other evidence

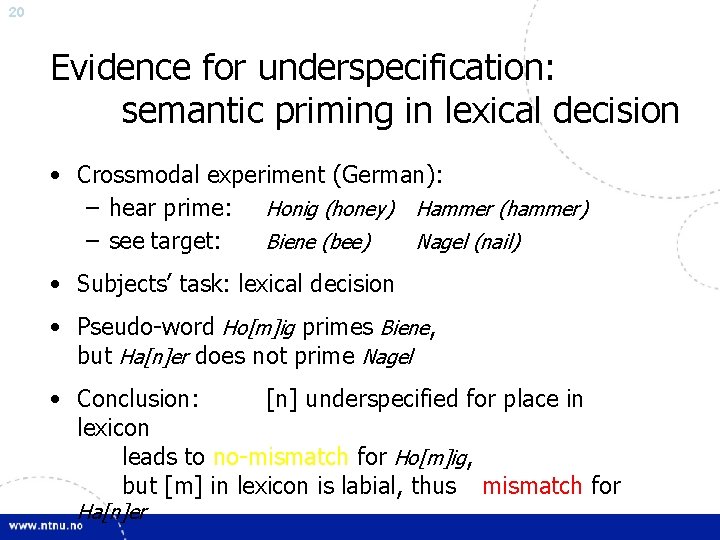

20 Evidence for underspecification: semantic priming in lexical decision • Crossmodal experiment (German): – hear prime: Honig (honey) Hammer (hammer) – see target: Biene (bee) Nagel (nail) • Subjects’ task: lexical decision • Pseudo-word Ho[m]ig primes Biene, but Ha[n]er does not prime Nagel • Conclusion: [n] underspecified for place in lexicon leads to no-mismatch for Ho[m]ig, but [m] in lexicon is labial, thus mismatch for Ha[n]er

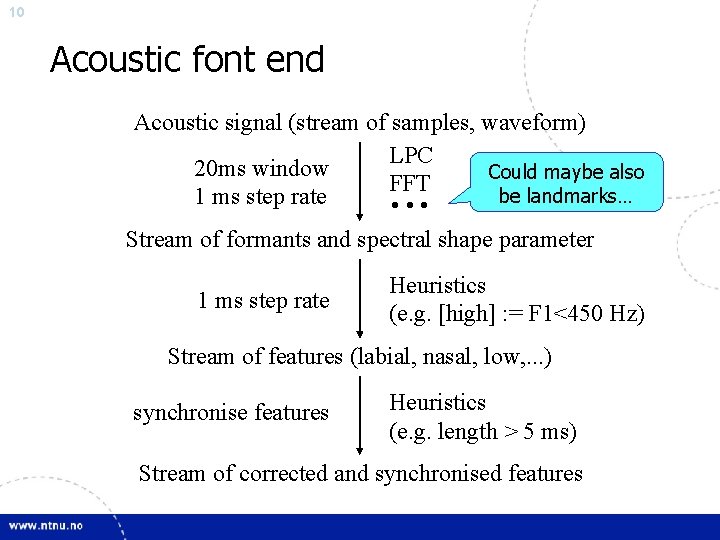

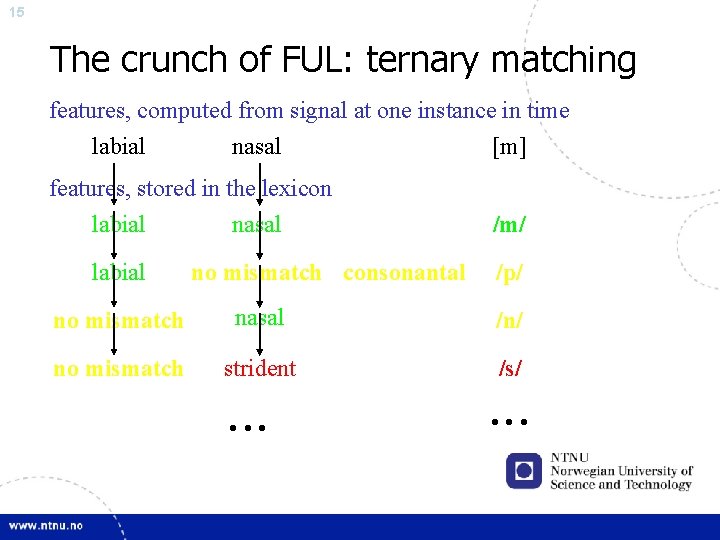

21 Evidence for underspecification: semantic priming in EEG • The N400 is an event-related potential (ERP) component typically elicited by unexpected linguistic stimuli. • It is characterized as a negative deflection peaking ca. 400 ms after stimulus presentation. • In models of speech comprehension, the N400 is often associated with the semantic integration of words in sentence context; its finding is interpreted as pointing to the activation of a process working on semantics in the general time frame.

![Slide 22: Evidence for underspecification, semantic priming in EEG](https://slidetodoc.com/presentation_image_h/05263086662c78022aab57c49f79a574/image-22.jpg)

22 Evidence for underspecification: semantic priming in EEG • word target: Hor[d]e (horde), Pro[b]e (test); pseudo-word target: Hor[b]e (horde), Pro[d]e (test) • Subjects’ task: speeded lexical decision • Similar RTs for words and pseudo-words, but more errors in lexical decision for Hor[b]e (no-mismatch for Hor[d]e) than for Pro[d]e (mismatch on Pro[b]e). Also a large negative peak for Pro[d]e but not for Hor[b]e (which behaved similarly to real words). • Conclusion: [d] underspecified for place in lexicon, but [b] specified as [LABIAL]

![Slide 23: Evidence for underspecification, vowel listening in MEG experiment](https://slidetodoc.com/presentation_image_h/05263086662c78022aab57c49f79a574/image-23.jpg)

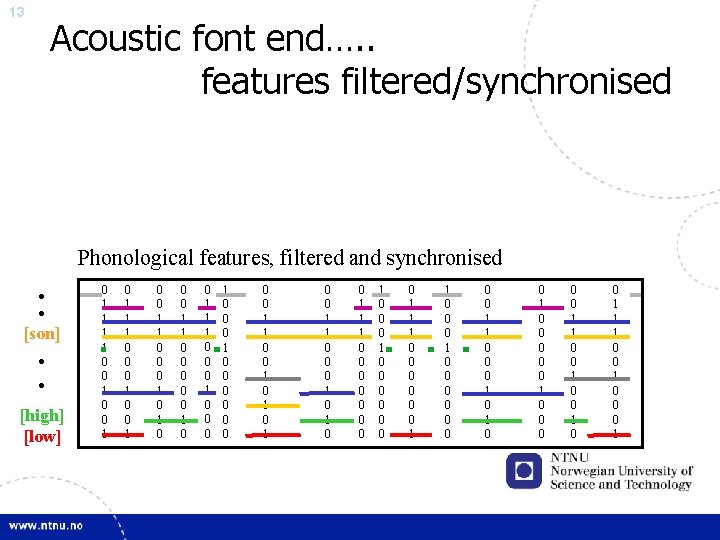

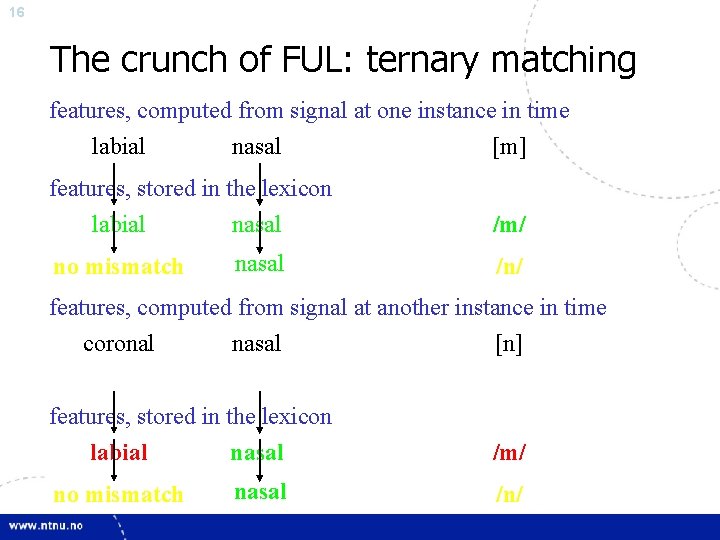

23 Evidence for underspecification: vowel listening in MEG experiment • standard (continuous): [o:], deviant (played once): [ø:] • Subjects’ task: just listen... • Asymmetrical MisMatch Negativity (MMN) effect (perception of change): greater for [o:]-[ø:] than for [ø:]-[o:], with a higher amplitude difference ca. 180 ms from onset of deviant and an earlier effect. Similar effects for other pairs. • Conclusion: Results fit with underspecification

24 Evidence for underspecification And there is more evidence • from CVC gating experiments in English and Bengali, where a non-nasalised oral vowel could lead to both oral and nasal responses when the CV is heard (Lahiri & Marslen-Wilson, 1991, 1992) • from priming experiments, suggesting there are two kinds of [o:] in German, one which is specified for [labial, dorsal] (Boote-Bötchen as primes for Boot), the other specified only for [labial] (Söhne-Söhnchen as primes for Sohn) • from language change in Miogliola (Northern Italian), where two types of [n] were shown to exist, one [coronal], the other unspecified for place (Ghini, 2001). ... and more

25 Conclusions • FUL is an implementation of phonological theory in ASR. • FUL is firmly grounded in psycholinguistic experiments and observations on language change. • FUL recognition is robust against variation in speech, but does not contain mechanisms to normalize for variation not directly related to the linguistic content (as we possibly do when we begin to understand a speaker better when we first meet him/talk to him on the phone), nor to use this information.

26 References This presentation was mainly based on • (a draft version of) Lahiri, A. & Reetz, H. (2002). "Underspecified recognition", in C. Gussenhoven & N. Warner (eds.) Laboratory Phonology 7. Berlin: Mouton, 637-675. • Lahiri, A. & Reetz, H. (submitted to J. Phon.). "Distinctive features: phonological underspecification in processing". • See also: http://ling.uni-konstanz.de/pages/proj/sfb471/publ/d-3.html
