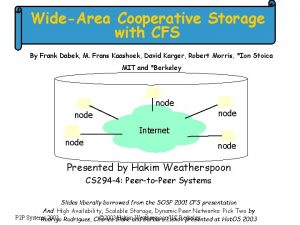

Widearea cooperative storage with CFS Overview CFS Cooperative

- Slides: 26

Wide-area cooperative storage with CFS

Overview • • CFS = Cooperative File System Peer-to-peer read-only file system Distributed hash table for block storage Lookup performed by Chord

Design Overview • CFS clients contain 3 layers: – A file system client – Dhash storage layer – Chord lookup layer • CFS servers contain 2 layers: – Dhash storage layer – Chord lookup layer

Overview con’t • Disk blocks: DHash blocks: : Disk Addresses: Block Identifiers • CFS file systems are read-only as far as clients are concerned – can be modified by its publisher

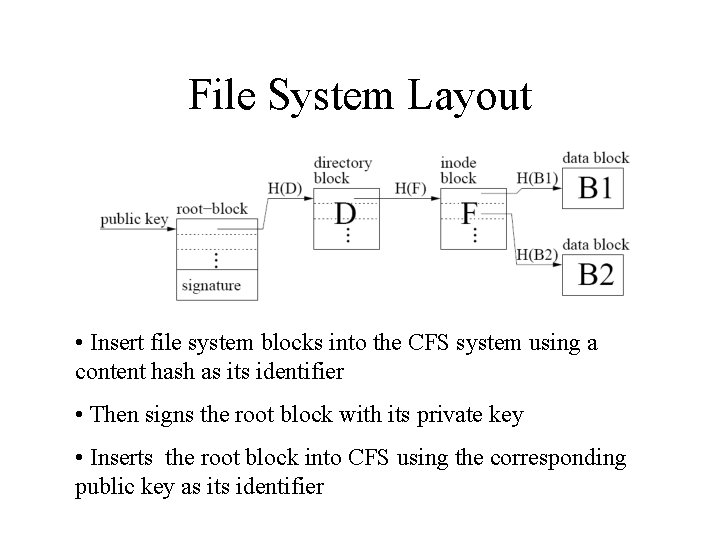

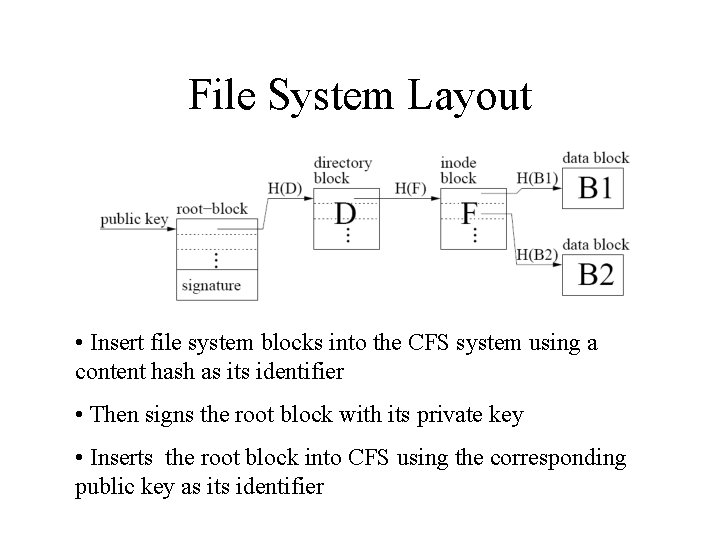

File System Layout • Insert file system blocks into the CFS system using a content hash as its identifier • Then signs the root block with its private key • Inserts the root block into CFS using the corresponding public key as its identifier

Publisher Updates • Updating the file system’s root block to point to the new data • Authentication by checking to make sure that the same key signed both old and new block – Timestamps prevent replays of old data – File systems are updated without changing the root blocks identifier

CFS properties • • Decentralized control Scalability Availability Load balance Persistence Quotas Efficiency

Chord Layer • Same Chord protocol as mentioned earlier with a few modifications – Server Selection – Node ID authentication

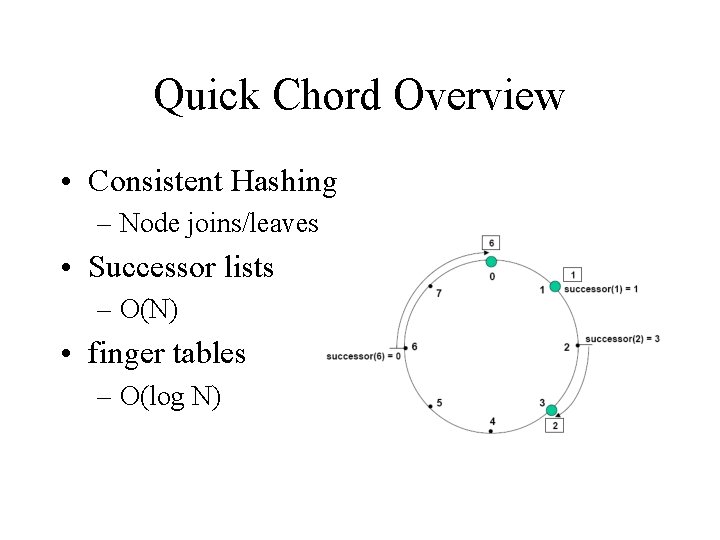

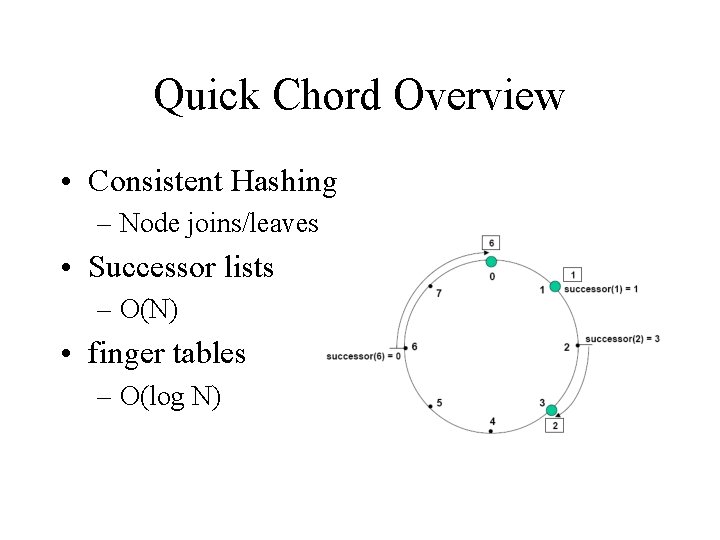

Quick Chord Overview • Consistent Hashing – Node joins/leaves • Successor lists – O(N) • finger tables – O(log N)

Server Selection • Chooses next node to contact from finger table – Gets the closest node to the destination – What about network latency? • Introduced measuring and storing latency in the finger tables – Calculated when acquiring finger table entries – Reasoning: RPCs to different nodes will incur varying latency so you want to choose on that minimizes this

Node ID authentication • Idea: network security • All chord IDs must be in the form h(x) – H = SHA-1 has function – x = the nodes IP + virtual node index • When a new node joins the system – Existing node will send a message to the new node – The ID must match the claimed IP + virtual node index hash to be accepted

DHash Layer • Handles – – Storing and retrieving blocks Distribution Replication Caching of blocks • Uses Chord to locate blocks • Key CFS design: split each file system into blocks and distribute those across many servers

Replication • DHash replicates each block on k servers immediately after the blocks sucessor – Why? …even if the block’s successor fails, the block is still available – Server independence guaranteed because location on the ring is determined by hash of IP not by physical location

Replication con’t • Could save space by storing coded pieces of blocks but…. storage space is not expected to be a highly-constrained resource • Placement of replicas allows a client to select the replica with the fastest download – Result from Chord lookup will be the immediate predecessor to the node with X – This node’s successor table contains entries for the latencies of the nearest Y nodes

Caching • Caching blocks prevents overloading servers with popular data • Using Chord… – Clients contact server closer and closer to the desired location – Once source is found or an intermediate cached copy is found all servers just contacted receive file to cache • Replaces cached blocks in least-recently-used order

Load Balance • Virtual servers… 1 real server acting as several “virtual” servers – Administrator can configure the number of based on server’s storage and network capabilities • Possible Side-effect: creating more hops in Chord algorithm – More nodes = more hops – Solution: allow virtual server’s to look at each other’s tables

Quotas • Control amount of data a publisher can inject – Based on reliable ID of publishers won’t work because it requires centralized administration – CFS uses quotas based on IP address of publishers – Each server imposes a 0. 1% limit…so as the capacity grows the total data amount grows • Not easy to subvert this system because publishers must respond to initial confirmation requests

Updates and Deletion • Only allows the publisher to modify data – 2 conditions for acceptance • Marked as a content-hash block supplied key = SHA-1 hash of the blocks content • Marked as a signed block signed by public key = SHA-1 hash is the block’s CFS key • No explicit delete – Publishers must refresh blocks if they want them stored – CFS deletes blocks that have not been refreshed recently

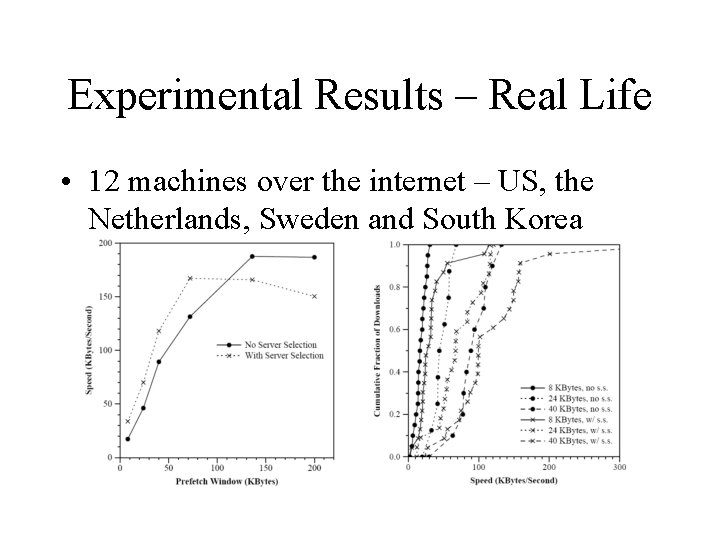

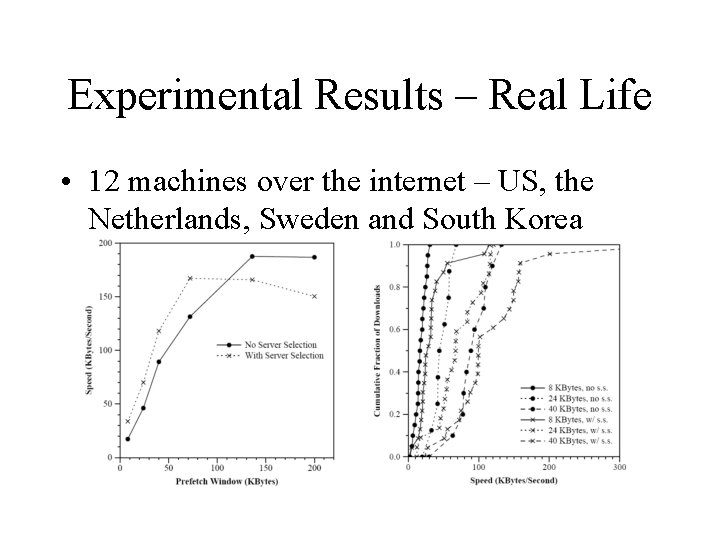

Experimental Results – Real Life • 12 machines over the internet – US, the Netherlands, Sweden and South Korea

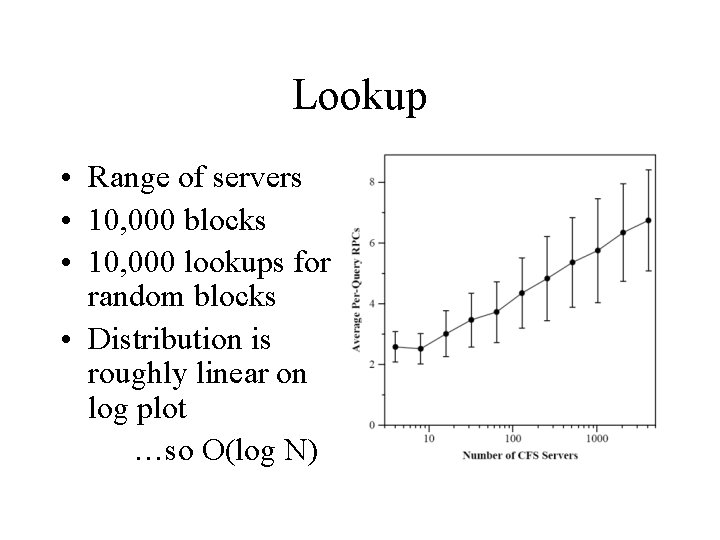

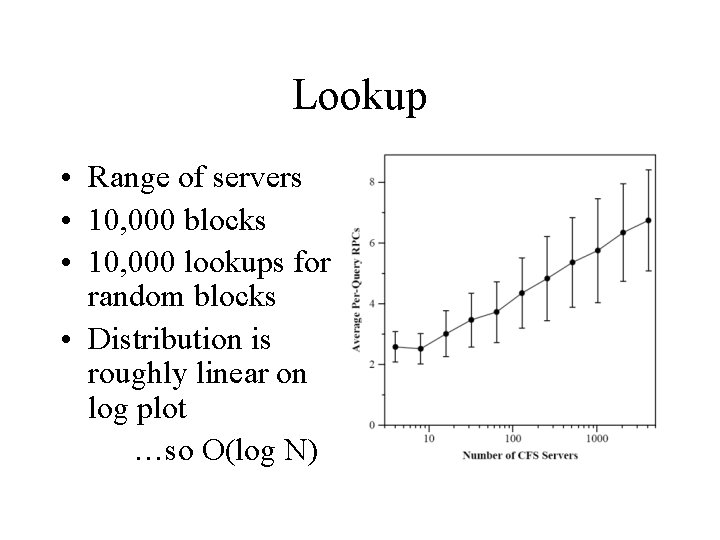

Lookup • Range of servers • 10, 000 blocks • 10, 000 lookups for random blocks • Distribution is roughly linear on log plot …so O(log N)

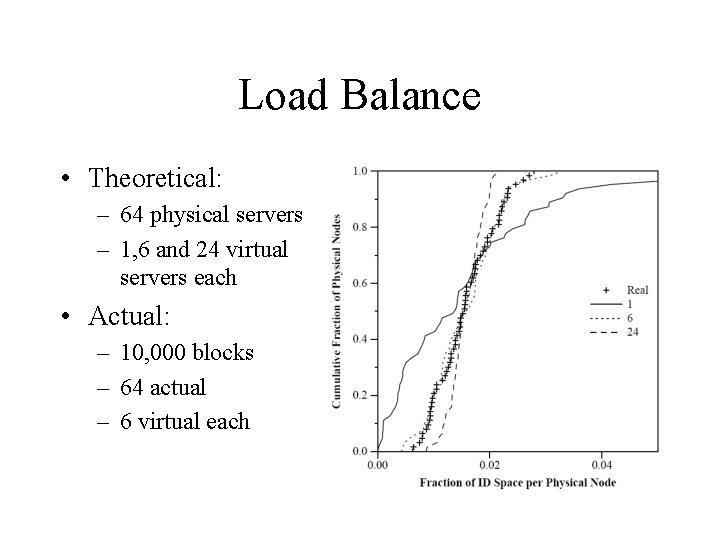

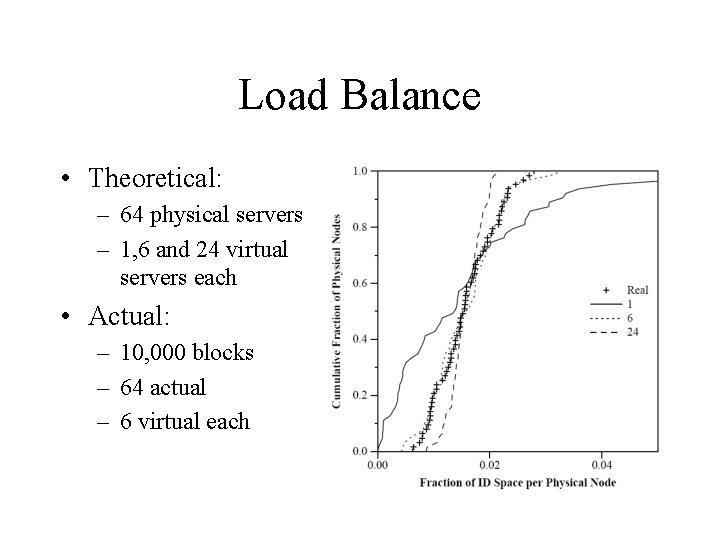

Load Balance • Theoretical: – 64 physical servers – 1, 6 and 24 virtual servers each • Actual: – 10, 000 blocks – 64 actual – 6 virtual each

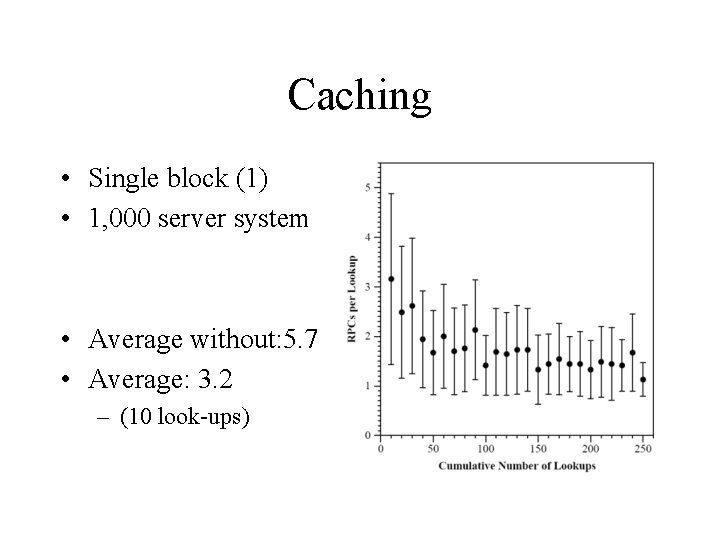

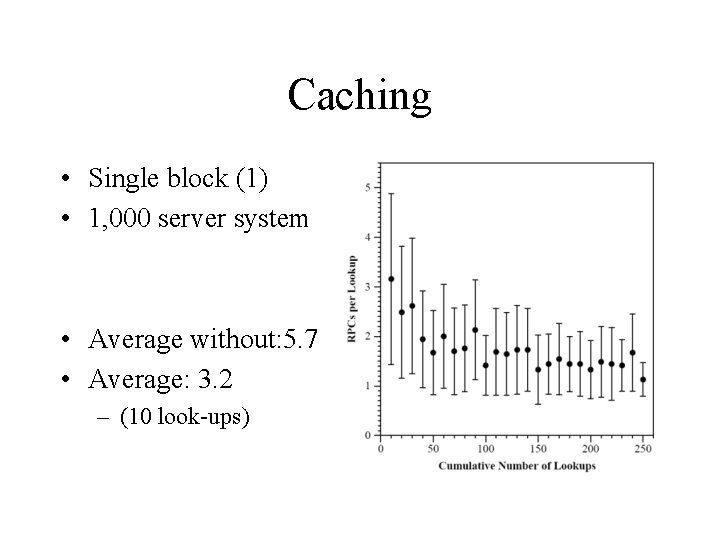

Caching • Single block (1) • 1, 000 server system • Average without: 5. 7 • Average: 3. 2 – (10 look-ups)

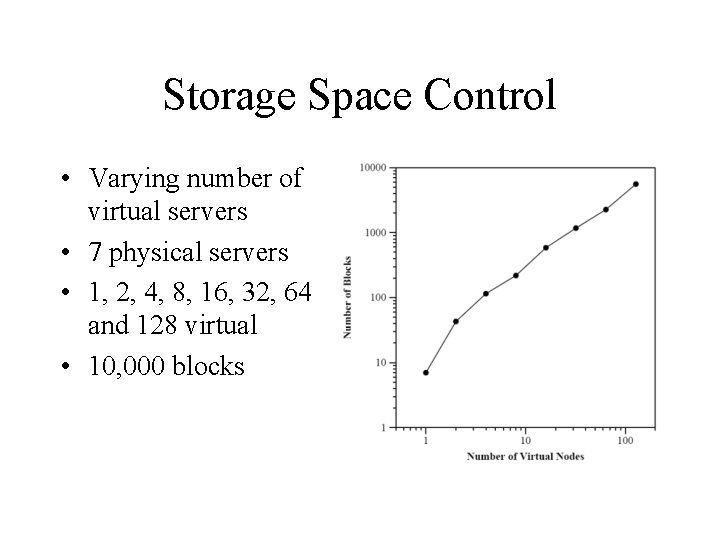

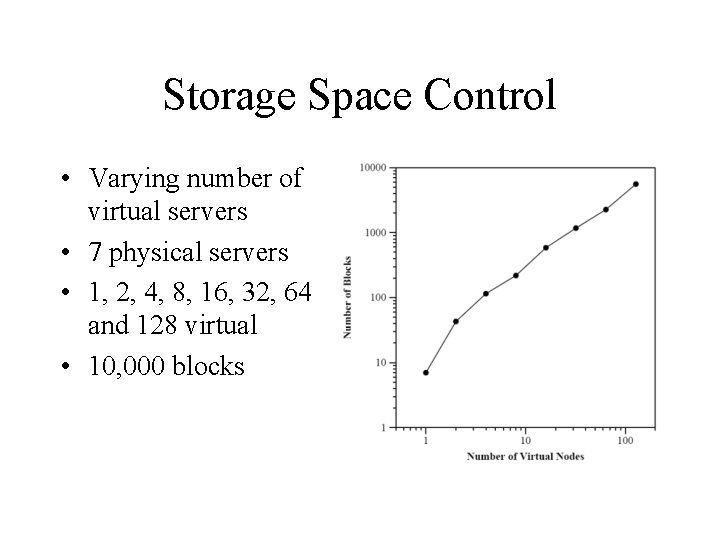

Storage Space Control • Varying number of virtual servers • 7 physical servers • 1, 2, 4, 8, 16, 32, 64 and 128 virtual • 10, 000 blocks

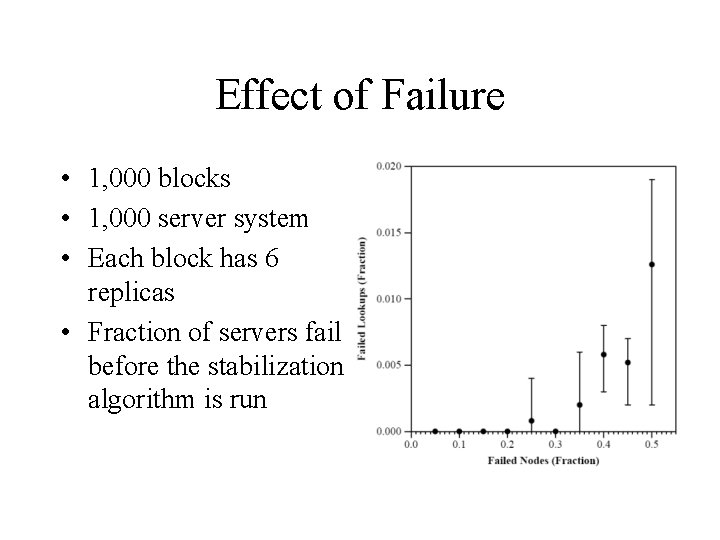

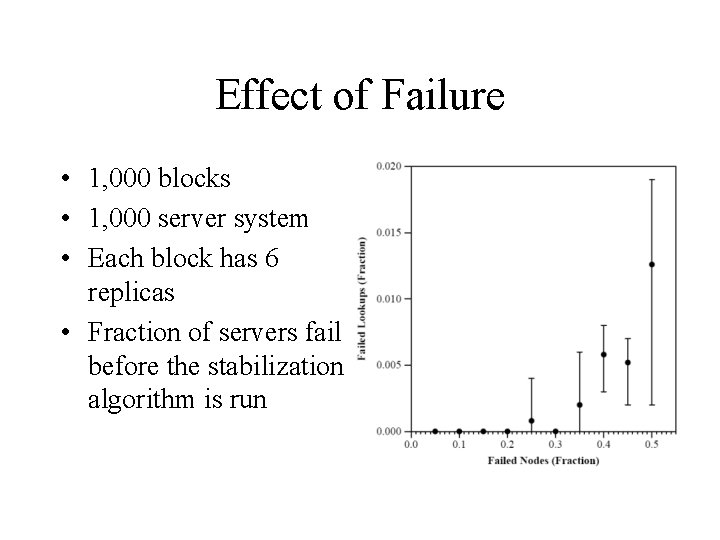

Effect of Failure • 1, 000 blocks • 1, 000 server system • Each block has 6 replicas • Fraction of servers fail before the stabilization algorithm is run

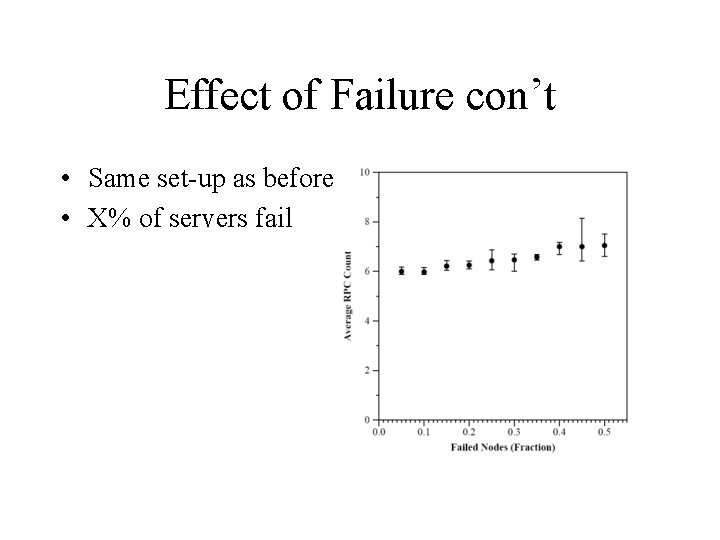

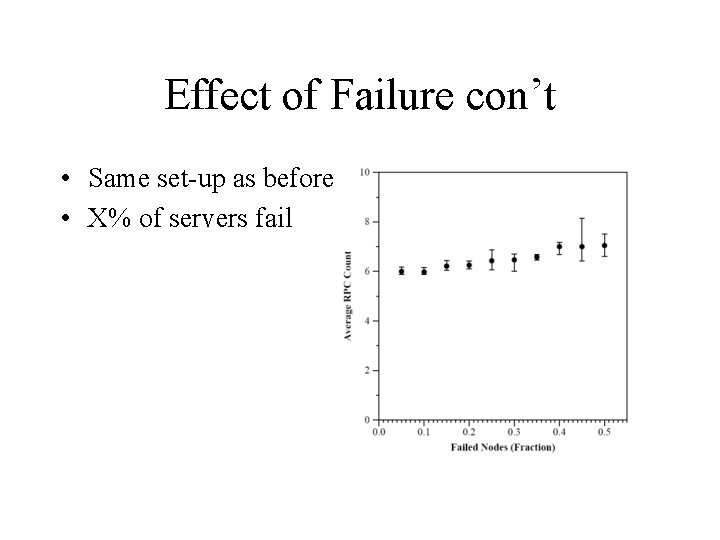

Effect of Failure con’t • Same set-up as before • X% of servers fail

Conclusion • Highly scalable, available, secure read-only file system • Uses peer-to-peer Chord protocol for lookup • Uses replication and caching to achieve availability and load balance • Simple but effective protection against inserting large amount of malicious data

Physical media storage

Physical media storage Overview of storage and indexing

Overview of storage and indexing Secondary storage vs primary storage

Secondary storage vs primary storage Primary storage and secondary storage

Primary storage and secondary storage Secondary storage provides temporary or volatile storage

Secondary storage provides temporary or volatile storage Object based and unified storage

Object based and unified storage Aznm

Aznm Mutaflor cfs

Mutaflor cfs Cfs firstnet

Cfs firstnet Pinnacle cfs framing

Pinnacle cfs framing Cpt iri

Cpt iri Prokarium

Prokarium Cfs nice guidelines

Cfs nice guidelines Cfs jebel ali

Cfs jebel ali Cfs

Cfs Cfs version 2

Cfs version 2 Tcvitals

Tcvitals Reforecast past tense

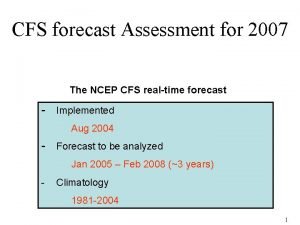

Reforecast past tense Cfs forecast

Cfs forecast Cfs

Cfs Sai gon new port

Sai gon new port Southeast cfs

Southeast cfs Cfs

Cfs Cfs food service

Cfs food service What is conventional implicature

What is conventional implicature Cooperative individualism

Cooperative individualism Cooperative capitalism

Cooperative capitalism