Vilalta&Eick: Informed Search and Exploration

- Slides: 30

Outline

- Search Strategies
- Heuristic Functions
- Local Search Algorithms

Introduction

Informed search strategies:
- Use problem-specific knowledge
- Can find solutions more efficiently than search strategies that do not use domain-specific knowledge

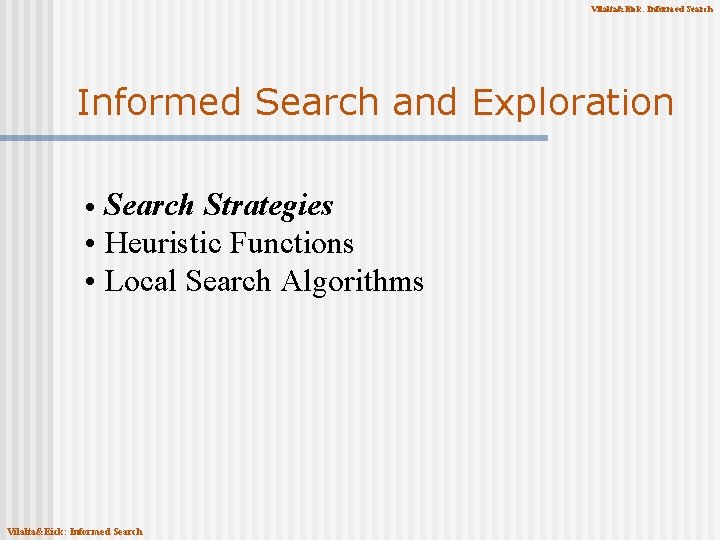

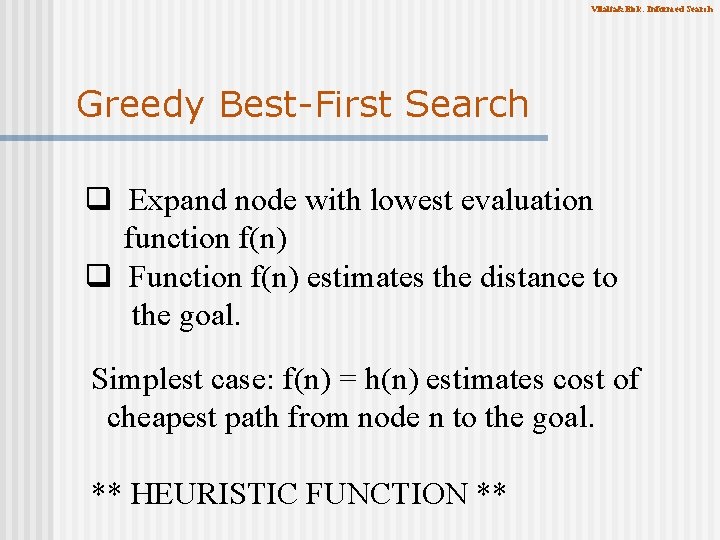

Greedy Best-First Search

- Expand the node with the lowest value of the evaluation function $f(n)$.
- $f(n)$ estimates the distance to the goal. Simplest case: $f(n) = h(n)$, where $h(n)$ estimates the cost of the cheapest path from node $n$ to the goal; $h(n)$ is the **heuristic function**.

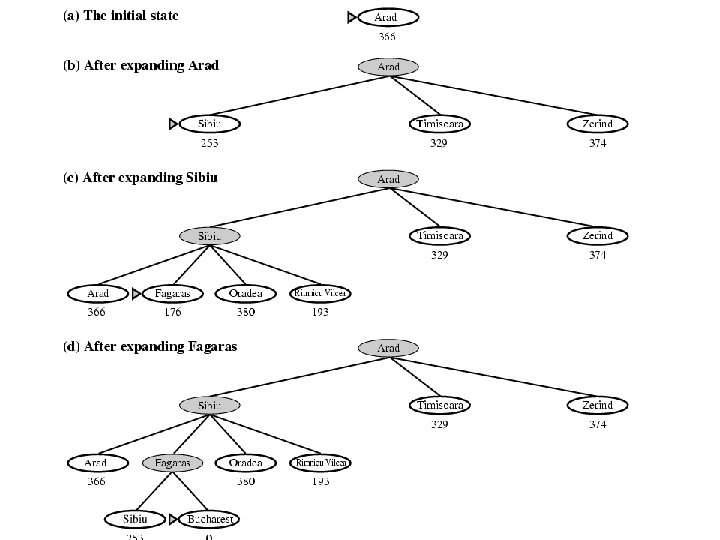

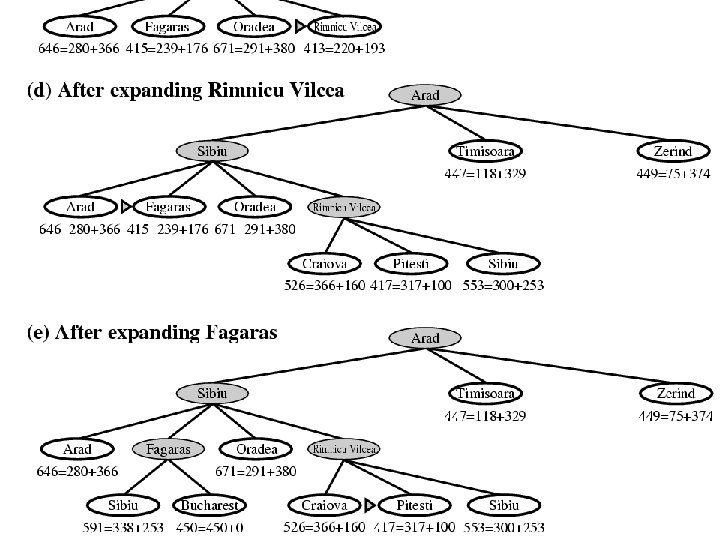

Figure 4.2

Greedy Best-First Search: Properties

- Resembles depth-first search: it follows the single most promising path
- Not optimal
- Incomplete (like depth-first search, the tree-search version can loop forever)
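The following is a minimal Python sketch of greedy best-first search. It assumes a small subset of the textbook's Romania road map, with road distances as edge costs and straight-line distances to Bucharest as $h(n)$; the numbers are illustrative. The sketch keeps a set of expanded states, so unlike the tree-search version it cannot loop on this finite graph. It still returns a non-optimal route.

```python
import heapq

# Assumed subset of the Romania road map: (city, city, road distance).
EDGES = [("Arad", "Zerind", 75), ("Arad", "Sibiu", 140), ("Arad", "Timisoara", 118),
         ("Sibiu", "Fagaras", 99), ("Sibiu", "Rimnicu Vilcea", 80),
         ("Rimnicu Vilcea", "Pitesti", 97), ("Fagaras", "Bucharest", 211),
         ("Pitesti", "Bucharest", 101)]
ROADS = {}
for a, b, d in EDGES:  # roads are two-way
    ROADS.setdefault(a, {})[b] = d
    ROADS.setdefault(b, {})[a] = d

# h(n): assumed straight-line distance from each city to Bucharest.
H_SLD = {"Arad": 366, "Zerind": 374, "Timisoara": 329, "Sibiu": 253, "Fagaras": 176,
         "Rimnicu Vilcea": 193, "Pitesti": 100, "Bucharest": 0}

def greedy_best_first(start, goal, graph, h):
    """Always expand the open node with the lowest f(n) = h(n)."""
    counter = 0  # tie-breaker so heapq never has to compare paths
    open_list = [(h[start], counter, start, [start])]
    expanded = set()
    while open_list:
        _, _, node, path = heapq.heappop(open_list)
        if node == goal:
            return path
        if node in expanded:
            continue
        expanded.add(node)
        for nbr in graph[node]:
            if nbr not in expanded:
                counter += 1
                heapq.heappush(open_list, (h[nbr], counter, nbr, path + [nbr]))
    return None

def path_cost(path, graph):
    """Sum of the road distances along a path."""
    return sum(graph[a][b] for a, b in zip(path, path[1:]))

route = greedy_best_first("Arad", "Bucharest", ROADS, H_SLD)
print(route, path_cost(route, ROADS))  # ['Arad', 'Sibiu', 'Fagaras', 'Bucharest'] 450
```

The route through Rimnicu Vilcea and Pitesti costs 140 + 80 + 97 + 101 = 418. Greedy's 450 is therefore not optimal: it commits to Fagaras because that city looks closest to the goal.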

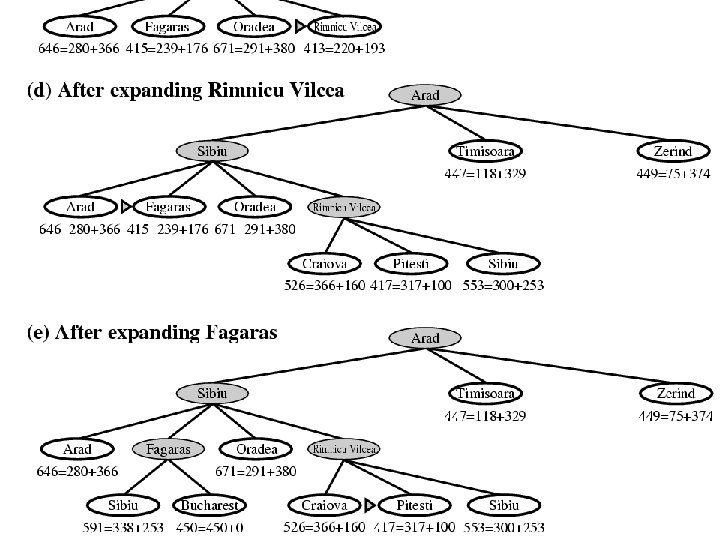

A* Search

Evaluation function: $f(n) = g(n) + h(n)$, where
- $g(n)$ is the path cost from the root to node $n$
- $h(n)$ is the estimated cost of the cheapest path from node $n$ to the goal
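A minimal A* sketch in Python follows. It orders the open list by $f(n) = g(n) + h(n)$ and also records the $f$-value of every expanded node. The graph is an assumed subset of the textbook's Romania road map, and the numbers are illustrative.

```python
import heapq

# Assumed subset of the Romania road map: (city, city, road distance).
EDGES = [("Arad", "Zerind", 75), ("Arad", "Sibiu", 140), ("Arad", "Timisoara", 118),
         ("Sibiu", "Fagaras", 99), ("Sibiu", "Rimnicu Vilcea", 80),
         ("Rimnicu Vilcea", "Pitesti", 97), ("Fagaras", "Bucharest", 211),
         ("Pitesti", "Bucharest", 101)]
ROADS = {}
for a, b, d in EDGES:  # roads are two-way
    ROADS.setdefault(a, {})[b] = d
    ROADS.setdefault(b, {})[a] = d

# h(n): assumed straight-line distances to Bucharest.
H_SLD = {"Arad": 366, "Zerind": 374, "Timisoara": 329, "Sibiu": 253, "Fagaras": 176,
         "Rimnicu Vilcea": 193, "Pitesti": 100, "Bucharest": 0}

def a_star(start, goal, graph, h):
    """Expand the open node with the lowest f(n) = g(n) + h(n).

    Returns (path, cost, f-values of the expanded nodes in expansion order)."""
    open_list = [(h[start], 0, start, [start])]  # entries: (f, g, state, path)
    best_g = {start: 0}
    expanded_f = []
    while open_list:
        f, g, state, path = heapq.heappop(open_list)
        if g > best_g[state]:
            continue  # stale entry: a cheaper path to this state was found later
        if state == goal:
            return path, g, expanded_f
        expanded_f.append(f)
        for nbr, step in graph[state].items():
            g2 = g + step
            if g2 < best_g.get(nbr, float("inf")):
                best_g[nbr] = g2
                heapq.heappush(open_list, (g2 + h[nbr], g2, nbr, path + [nbr]))
    return None

path, cost, fs = a_star("Arad", "Bucharest", ROADS, H_SLD)
print(path, cost)  # ['Arad', 'Sibiu', 'Rimnicu Vilcea', 'Pitesti', 'Bucharest'] 418
print(fs)          # [366, 393, 413, 415, 417]
```

The $f$-values of the expanded nodes never decrease, which matches the "bands of increasing f(n)" picture described for A*. The goal is reached at $f = 418$, the optimal cost.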

Figure 4.3

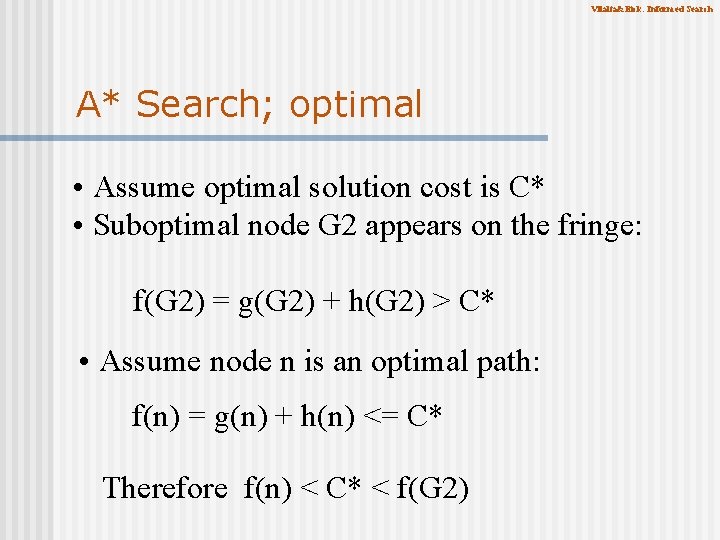

A* Search: Optimality

- A* is optimal if $h(n)$ is admissible.
- Admissible: $h(n)$ never overestimates the cost to reach a goal from $n$.
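On a small explicit graph, admissibility can be checked directly. Run Dijkstra's algorithm from the goal to get the true cost-to-goal $h^*(n)$ of every node, then verify $h(n) \le h^*(n)$. The sketch below assumes an illustrative subset of the textbook's Romania map; `true_costs_to` and `is_admissible` are hypothetical helper names.

```python
import heapq

# Assumed subset of the Romania road map: (city, city, road distance).
EDGES = [("Arad", "Zerind", 75), ("Arad", "Sibiu", 140), ("Arad", "Timisoara", 118),
         ("Sibiu", "Fagaras", 99), ("Sibiu", "Rimnicu Vilcea", 80),
         ("Rimnicu Vilcea", "Pitesti", 97), ("Fagaras", "Bucharest", 211),
         ("Pitesti", "Bucharest", 101)]
ROADS = {}
for a, b, d in EDGES:  # roads are two-way
    ROADS.setdefault(a, {})[b] = d
    ROADS.setdefault(b, {})[a] = d
H_SLD = {"Arad": 366, "Zerind": 374, "Timisoara": 329, "Sibiu": 253, "Fagaras": 176,
         "Rimnicu Vilcea": 193, "Pitesti": 100, "Bucharest": 0}

def true_costs_to(goal, graph):
    """Dijkstra from the goal; since roads are two-way this yields h*(n) for every n."""
    dist = {goal: 0}
    queue = [(0, goal)]
    while queue:
        d, u = heapq.heappop(queue)
        if d > dist[u]:
            continue  # stale queue entry
        for v, w in graph[u].items():
            if d + w < dist.get(v, float("inf")):
                dist[v] = d + w
                heapq.heappush(queue, (d + w, v))
    return dist

def is_admissible(h, goal, graph):
    """True if h(n) <= h*(n) for every node that can reach the goal."""
    h_star = true_costs_to(goal, graph)
    return all(h[n] <= h_star[n] for n in h_star)

inflated = {n: 1.2 * v for n, v in H_SLD.items()}
print(is_admissible(H_SLD, "Bucharest", ROADS))     # True
print(is_admissible(inflated, "Bucharest", ROADS))  # False
```

The inflated heuristic fails because, for example, it estimates 120 at Pitesti while the true remaining cost is 101.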

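A* Search: Optimality (Proof Sketch)

- Assume the optimal solution cost is $C^*$.
- Suppose a suboptimal goal node $G_2$ appears on the fringe. Since $h(G_2) = 0$, we have $f(G_2) = g(G_2) + h(G_2) = g(G_2) > C^*$.
- Let $n$ be a fringe node on an optimal path. Because $h$ is admissible, $f(n) = g(n) + h(n) \le C^*$.
- Therefore $f(n) \le C^* < f(G_2)$, so $n$ is expanded before $G_2$ and A* never returns the suboptimal goal $G_2$.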

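A* Search: Completeness

- A* is complete (provided there are finitely many nodes with $f(n) \le C^*$).
- A* builds search "bands" (contours) of increasing $f(n)$. Every node with $f(n) < C^*$ is expanded, and eventually the search reaches the "goal contour" $f(n) = C^*$.
- A* is optimally efficient: for a given heuristic, no other optimal algorithm is guaranteed to expand fewer nodes.
- In most cases, however, the number of nodes inside the goal contour still grows exponentially.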

Contours of equal f-cost (figure: contours labeled 400 and 420)

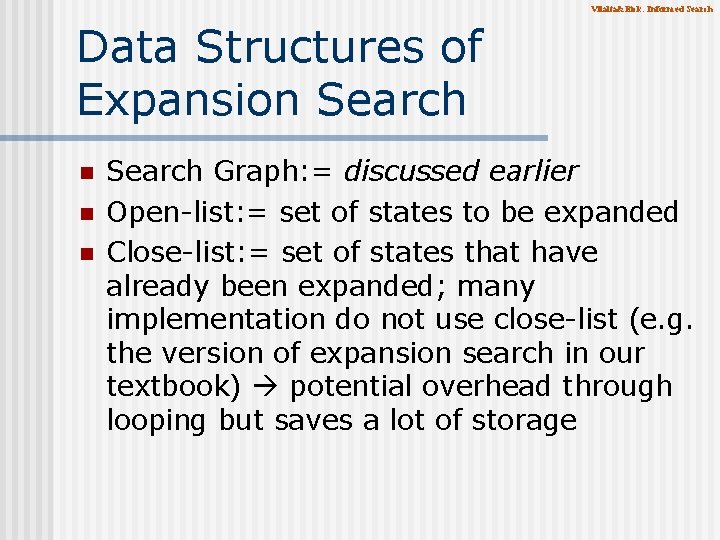

Data Structures of Expansion Search

- Search graph: discussed earlier
- Open list: the set of states still to be expanded
- Closed list: the set of states that have already been expanded. Many implementations do not use a closed list (e.g. the version of expansion search in our textbook). This risks overhead through looping, but saves a lot of storage.
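The sketch below shows the trade-off in Python. It runs expansion search with $h(n) = 0$ (uniform-cost search, a special case of A*), once with and once without a closed list. The graph and the helper `expansion_search` are hypothetical. Both runs find the same cost, but without a closed list some states are expanded repeatedly and the open list grows larger.

```python
import heapq

# Hypothetical graph with cycles; all edges are two-way except the ones into goal G.
GRAPH = {"S": {"A": 1, "B": 4},
         "A": {"S": 1, "B": 1, "G": 5},
         "B": {"S": 4, "A": 1, "G": 1},
         "G": {}}

def expansion_search(start, goal, graph, use_closed):
    """Return (cost, number of expansions, peak open-list size); h(n) = 0."""
    open_list = [(0, start)]  # entries: (g, state); with h = 0, f(n) = g(n)
    closed = set()
    expansions = peak = 0
    while open_list:
        peak = max(peak, len(open_list))
        g, state = heapq.heappop(open_list)
        if state == goal:
            return g, expansions, peak
        if use_closed:
            if state in closed:
                continue  # already expanded: skip the duplicate
            closed.add(state)
        expansions += 1
        for nbr, step in graph[state].items():
            if not (use_closed and nbr in closed):
                heapq.heappush(open_list, (g + step, nbr))
    return None

print(expansion_search("S", "G", GRAPH, use_closed=True))   # (3, 3, 3)
print(expansion_search("S", "G", GRAPH, use_closed=False))  # (3, 6, 11)
```

The closed list itself costs memory proportional to the number of expanded states. That is the storage the textbook version saves by omitting it.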

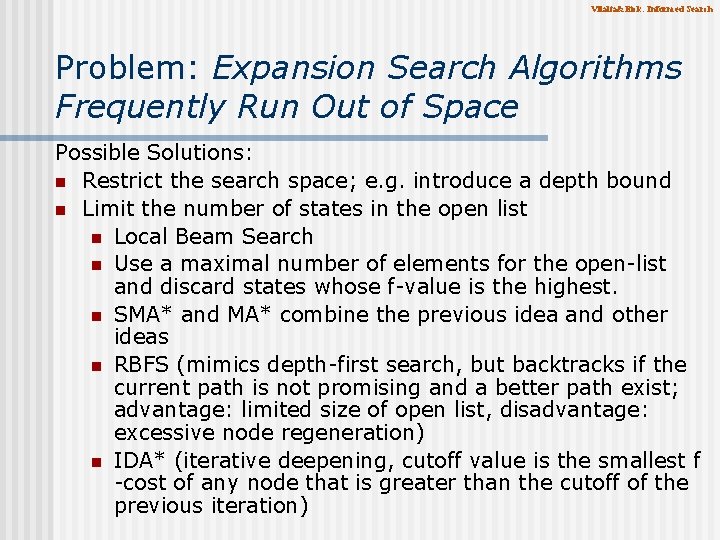

Problem: Expansion Search Algorithms Frequently Run Out of Space

Possible solutions:
- Restrict the search space, e.g. introduce a depth bound
- Limit the number of states in the open list
- Local beam search
- Use a maximal number of elements for the open list and discard the states whose f-value is highest
- SMA* and MA* combine the previous idea with other ideas
- RBFS (mimics depth-first search, but backtracks if the current path is not promising and a better path exists; advantage: limited size of the open list; disadvantage: excessive node regeneration)
- IDA* (iterative deepening; the cutoff value is the smallest f-cost of any node that is greater than the cutoff of the previous iteration)
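Below is a minimal IDA* sketch in Python. It assumes an illustrative subset of the textbook's Romania road map. Each iteration is a depth-first search that prunes any node whose $f(n)$ exceeds the current cutoff. The next cutoff is the smallest $f$-cost that exceeded the previous one. Only the current path is stored, which is where the memory savings come from.

```python
import math

# Assumed subset of the Romania road map: (city, city, road distance).
EDGES = [("Arad", "Zerind", 75), ("Arad", "Sibiu", 140), ("Arad", "Timisoara", 118),
         ("Sibiu", "Fagaras", 99), ("Sibiu", "Rimnicu Vilcea", 80),
         ("Rimnicu Vilcea", "Pitesti", 97), ("Fagaras", "Bucharest", 211),
         ("Pitesti", "Bucharest", 101)]
ROADS = {}
for a, b, d in EDGES:  # roads are two-way
    ROADS.setdefault(a, {})[b] = d
    ROADS.setdefault(b, {})[a] = d
H_SLD = {"Arad": 366, "Zerind": 374, "Timisoara": 329, "Sibiu": 253, "Fagaras": 176,
         "Rimnicu Vilcea": 193, "Pitesti": 100, "Bucharest": 0}

def ida_star(start, goal, graph, h):
    """Return (path, cost, list of cutoffs tried)."""
    path = [start]
    cutoffs = []

    def search(g, bound):
        node = path[-1]
        f = g + h[node]
        if f > bound:
            return f, None  # prune, reporting the f-value that exceeded the cutoff
        if node == goal:
            return f, list(path)
        smallest = math.inf
        for nbr, step in graph[node].items():
            if nbr in path:  # avoid cycles along the current path
                continue
            path.append(nbr)
            t, found = search(g + step, bound)
            path.pop()
            if found is not None:
                return t, found
            smallest = min(smallest, t)
        return smallest, None

    bound = h[start]
    while True:
        cutoffs.append(bound)
        t, found = search(0, bound)
        if found is not None:
            return found, t, cutoffs
        if t == math.inf:
            return None, math.inf, cutoffs  # no solution exists
        bound = t  # smallest f-cost greater than the previous cutoff

route, cost, cutoffs = ida_star("Arad", "Bucharest", ROADS, H_SLD)
print(route, cost)  # ['Arad', 'Sibiu', 'Rimnicu Vilcea', 'Pitesti', 'Bucharest'] 418
print(cutoffs)      # [366, 393, 413, 415, 417, 418]
```

Each cutoff is the smallest $f$-value that was pruned in the previous iteration. The price is regeneration: nodes near the root are revisited in every iteration.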

Local Beam Search

i. Keep track of the best k states.
ii. Generate all successors of these k states.
iii. If a goal is reached, stop.
iv. If not, select the best k successors and repeat.
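The four steps can be sketched in Python on a toy problem that is not taken from the slides. States are bit strings, a successor flips one bit, the score is the number of 1s, and the goal is the all-ones string. `local_beam_search` and its parameters are hypothetical.

```python
import random

def local_beam_search(k, n_bits, score, is_goal, max_iters=1000, seed=0):
    rng = random.Random(seed)
    # i. Start by tracking k randomly generated states.
    beam = [tuple(rng.randint(0, 1) for _ in range(n_bits)) for _ in range(k)]
    for _ in range(max_iters):
        # ii. Generate all successors of the k states (flip any single bit).
        successors = {s[:i] + (1 - s[i],) + s[i + 1:] for s in beam for i in range(n_bits)}
        # iii. Stop if a goal is among them.
        for s in successors:
            if is_goal(s):
                return s
        # iv. Otherwise keep the best k successors and repeat.
        beam = sorted(successors, key=score, reverse=True)[:k]
    return max(beam, key=score)  # best state found if no goal was reached

best = local_beam_search(k=3, n_bits=12, score=sum, is_goal=all)
print(best)  # twelve 1s
```

Unlike k independent hill climbs, the k states share one selection step, so successors of a good state can crowd out the others.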

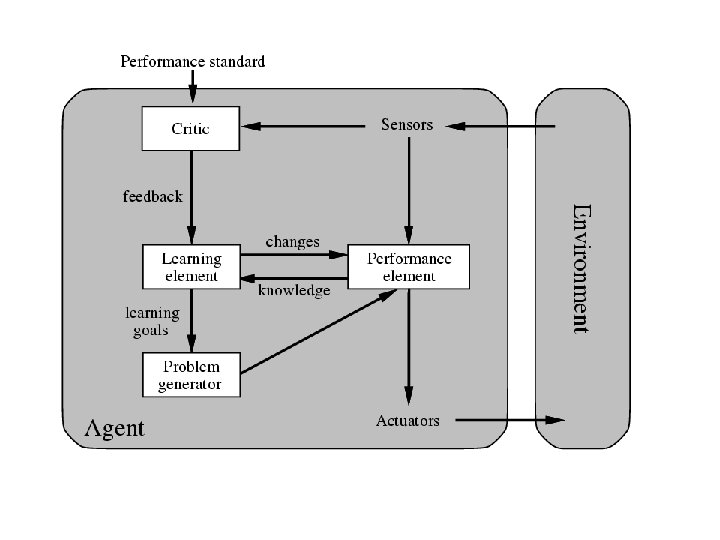

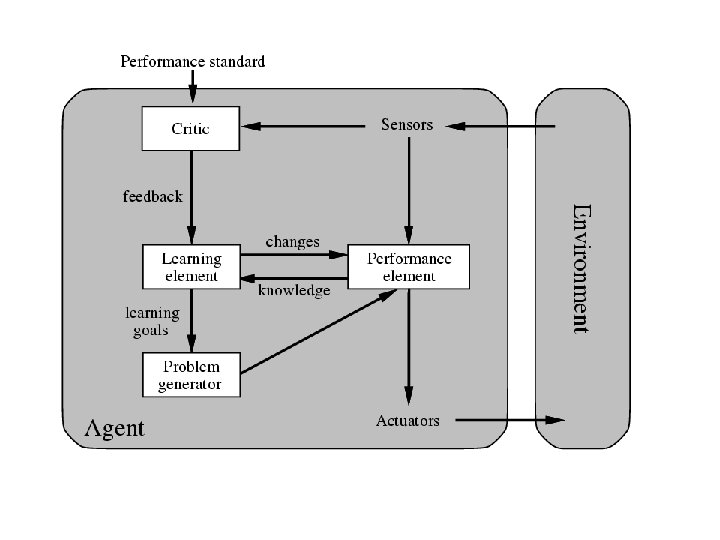

Learning to Search

The idea is to search in a meta-level state space, where each state is a search tree. The goal is to learn from different search strategies how to avoid exploring useless parts of the tree.

Figure 2.15


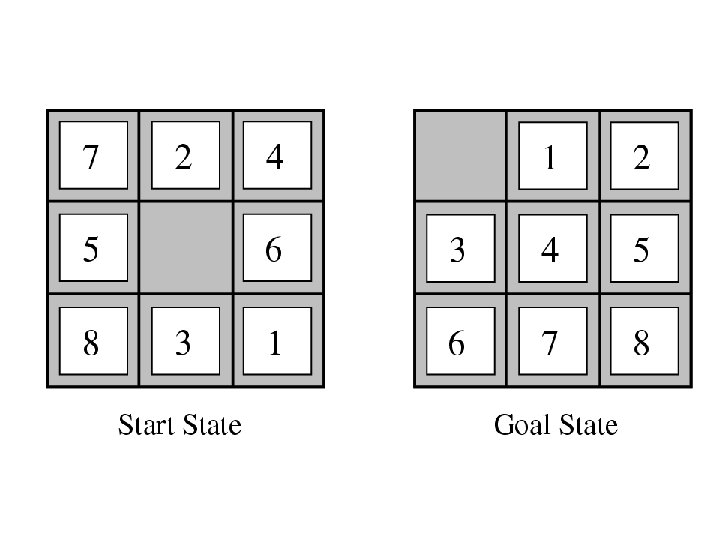

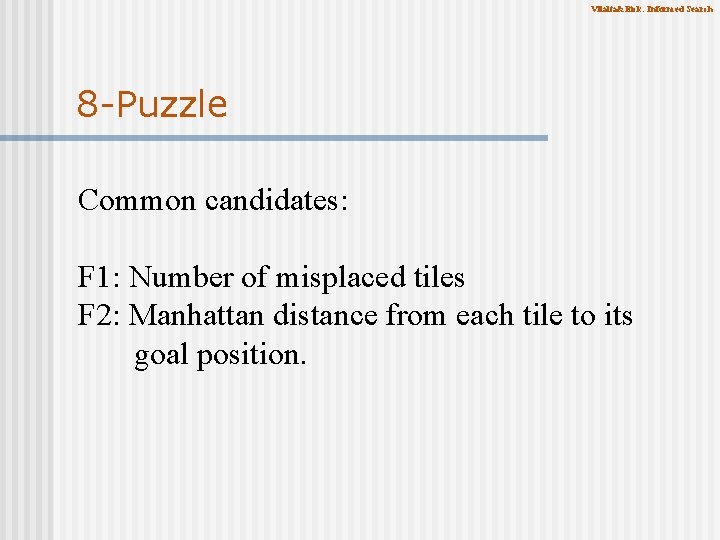

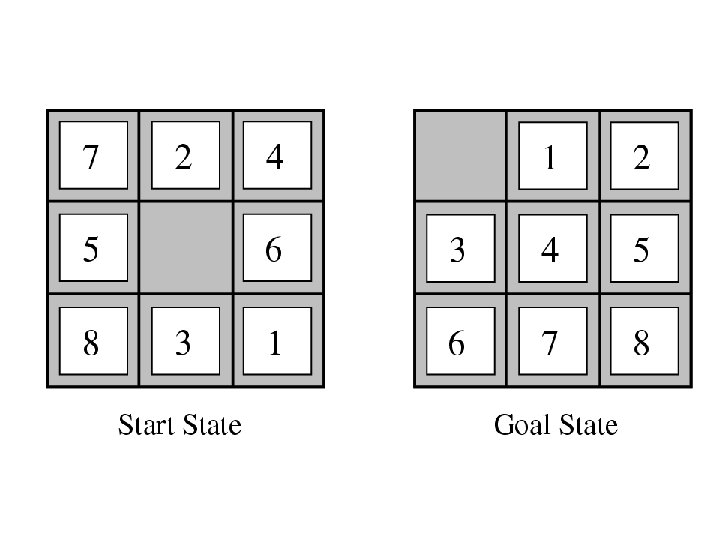

8-Puzzle

Common heuristic candidates:
- F1: number of misplaced tiles
- F2: sum of the Manhattan distances from each tile to its goal position
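Both heuristics are cheap to compute. The Python sketch below assumes states are 9-tuples in row-major order, with 0 for the blank and goal state (0, 1, ..., 8). The example start state is assumed to be the textbook's; treat it as illustrative.

```python
GOAL = (0, 1, 2, 3, 4, 5, 6, 7, 8)  # assumed goal layout, 0 = blank

def misplaced_tiles(state, goal=GOAL):
    """F1: number of tiles (blank excluded) that are not in their goal position."""
    return sum(1 for i, tile in enumerate(state) if tile != 0 and tile != goal[i])

def manhattan(state, goal=GOAL):
    """F2: sum over tiles of |row - goal_row| + |col - goal_col|."""
    where = {tile: divmod(i, 3) for i, tile in enumerate(goal)}
    total = 0
    for i, tile in enumerate(state):
        if tile == 0:
            continue  # the blank is not a tile
        r, c = divmod(i, 3)
        gr, gc = where[tile]
        total += abs(r - gr) + abs(c - gc)
    return total

start = (7, 2, 4,
         5, 0, 6,
         8, 3, 1)
print(misplaced_tiles(start), manhattan(start))  # 8 18
```

Both heuristics are admissible. Every misplaced tile contributes at least 1 to the Manhattan sum, so F2 >= F1 in every state and F2 is the more informed of the two.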

Figure

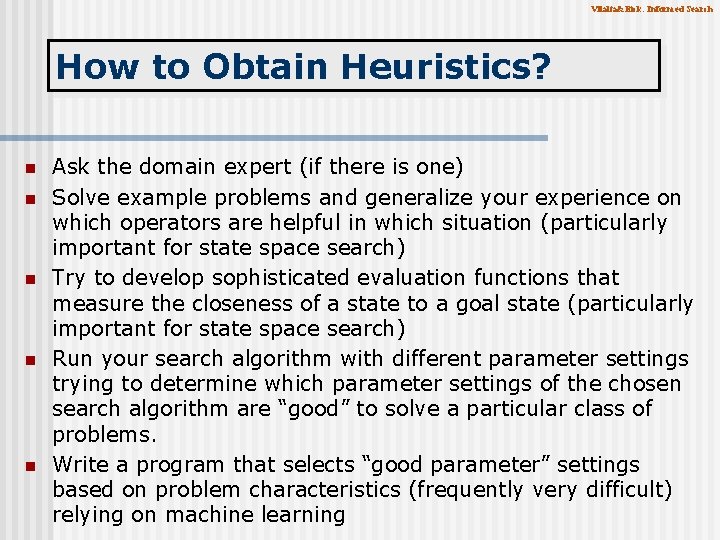

How to Obtain Heuristics?

- Ask the domain expert (if there is one).
- Solve example problems and generalize your experience about which operators are helpful in which situations (particularly important for state-space search).
- Try to develop sophisticated evaluation functions that measure the closeness of a state to a goal state (particularly important for state-space search).
- Run your search algorithm with different parameter settings to determine which settings of the chosen algorithm are "good" for solving a particular class of problems.
- Write a program that selects "good" parameter settings based on problem characteristics, relying on machine learning (frequently very difficult).


Local Search Algorithms

- If the path to the goal does not matter, we can use local search.
- Local search keeps a single current state and moves only to neighbors of that state.

Advantages:
- Uses very little memory
- Often finds reasonable solutions

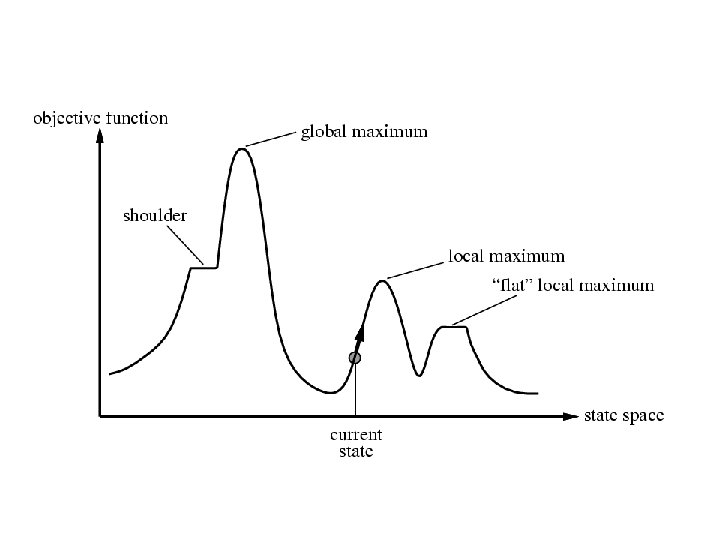

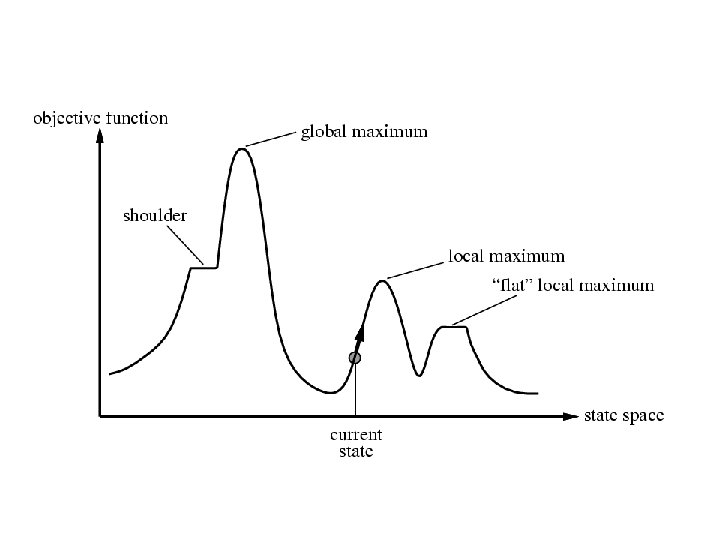

Local Search Algorithms

- It helps to picture the state-space landscape.
- Aim: find the global maximum or minimum.
- Complete search: always finds a goal (if one exists).
- Optimal search: finds the global maximum or minimum.

Figure 4.10

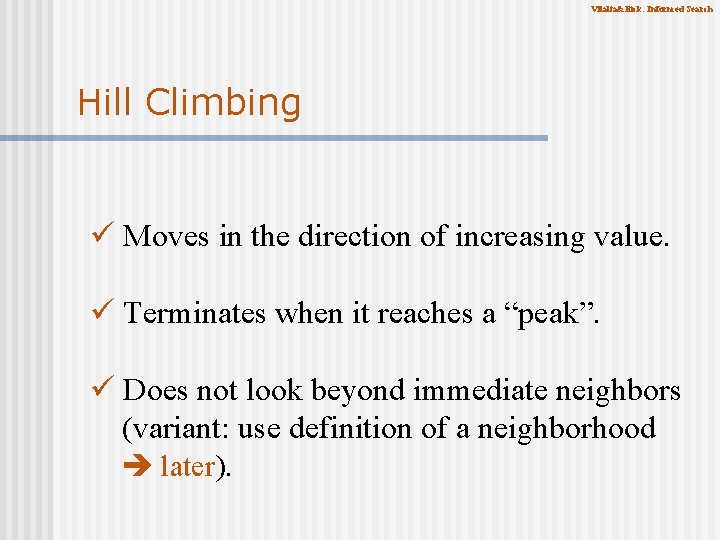

Hill Climbing

- Moves in the direction of increasing value.
- Terminates when it reaches a "peak".
- Does not look beyond immediate neighbors (a variant that uses a more general definition of neighborhood comes later).

Hill Climbing

Can get stuck for several reasons:
a. Local maxima
b. Ridges
c. Plateaus

Variants (more details later):
- Stochastic hill climbing: random uphill move
- Generate successors randomly until one is better than the current state
- Run hill climbing multiple times from different initial states (random restart)
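A minimal Python sketch of steepest-ascent hill climbing with random restarts follows. It uses a hypothetical one-dimensional landscape whose values in `LANDSCAPE` are made up. Started at state 0, hill climbing stops on the local maximum at state 2. Random restarts that begin in states 4 to 7 climb to the global maximum at state 6.

```python
import random

# Hypothetical 1-D landscape: LANDSCAPE[i] is the value of state i.
LANDSCAPE = [1, 3, 5, 4, 2, 6, 9, 7]

def neighbors(i):
    """Immediate neighbors only: one step left or right."""
    return [j for j in (i - 1, i + 1) if 0 <= j < len(LANDSCAPE)]

def hill_climb(start):
    """Steepest ascent: move to the best neighbor until no neighbor is better."""
    current = start
    while True:
        best = max(neighbors(current), key=lambda j: LANDSCAPE[j])
        if LANDSCAPE[best] <= LANDSCAPE[current]:
            return current  # a peak, possibly only a local maximum
        current = best

def random_restart_hill_climb(restarts, seed=0):
    """Run hill climbing from random initial states and keep the best peak."""
    rng = random.Random(seed)
    peaks = [hill_climb(rng.randrange(len(LANDSCAPE))) for _ in range(restarts)]
    return max(peaks, key=lambda j: LANDSCAPE[j])

print(hill_climb(0))  # 2 (local maximum, value 5)
print(random_restart_hill_climb(100))
```

With 100 restarts, the chance that every start falls in the basin of the local maximum is $2^{-100}$. The restart version therefore returns state 6 in practice.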

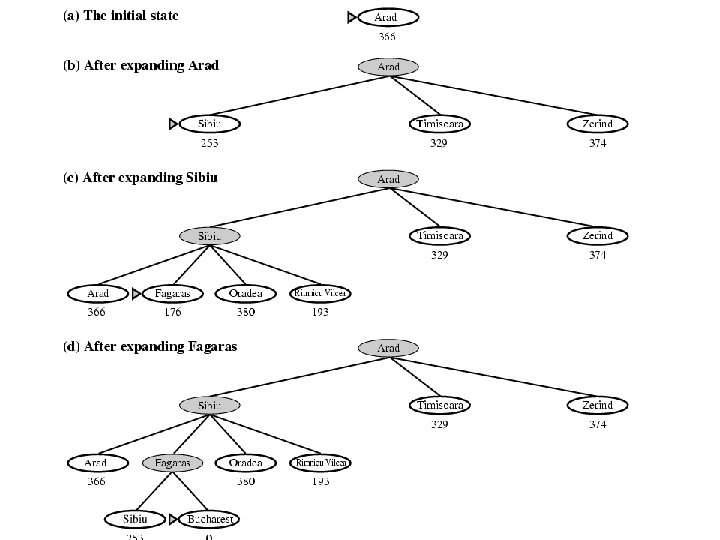

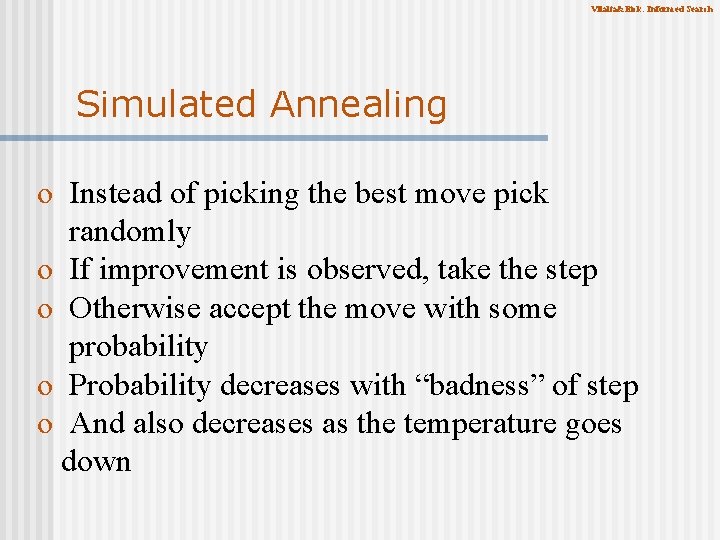

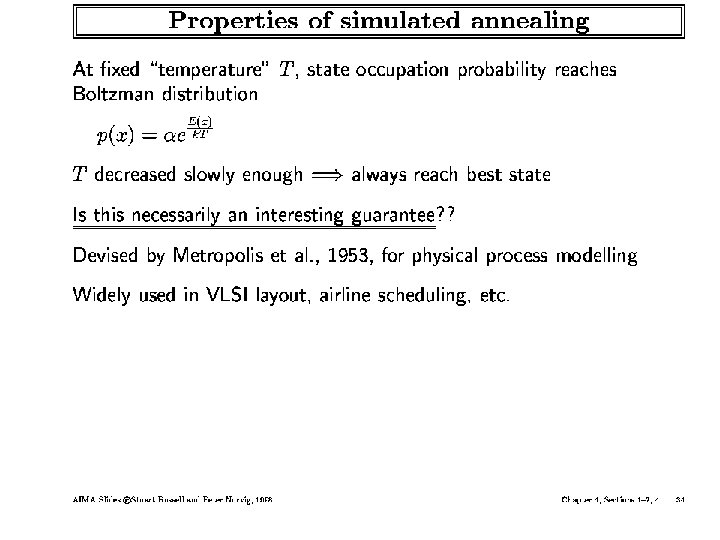

Simulated Annealing

- Instead of picking the best move, pick a move at random.
- If it improves the state, take the step.
- Otherwise, accept the move with some probability.
- The probability decreases with the "badness" of the step and as the temperature goes down.
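Here is a minimal simulated-annealing sketch in Python on a hypothetical one-dimensional landscape (made-up values, maximized). It picks a random neighbor and always takes improvements. A worse move is accepted with probability $e^{\Delta E / T}$, which shrinks as $\Delta E$ becomes more negative and as $T$ falls. The cooling schedule is passed in as a function; the usage line plugs in the linear schedule from the schedule example slide.

```python
import math
import random

LANDSCAPE = [1, 3, 5, 4, 2, 6, 9, 7]  # hypothetical values to maximize

def simulated_annealing(start, schedule, steps, seed=0):
    rng = random.Random(seed)
    current = start
    for t in range(steps):
        T = schedule(t)
        if T <= 0:
            break  # temperature reached zero: stop
        candidates = [j for j in (current - 1, current + 1) if 0 <= j < len(LANDSCAPE)]
        nxt = rng.choice(candidates)  # a random move, not the best one
        delta_e = LANDSCAPE[nxt] - LANDSCAPE[current]
        # Improvements are always taken; otherwise accept with probability
        # e^(delta_e / T), which equals 1 when delta_e == 0.
        if delta_e > 0 or rng.random() < math.exp(delta_e / T):
            current = nxt
    return current

final = simulated_annealing(0, schedule=lambda t: (2000 - t) / 2000, steps=2000)
print(final, LANDSCAPE[final])
```

Early on, while $T$ is near 1, the walk can cross the dip between the two peaks. As $T$ approaches 0, it behaves more and more like hill climbing.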


Example of a Schedule for Simulated Annealing

$T = f(t) = (2000 - t)/2000$; the search runs for 2000 iterations. A downward move with $\Delta E < 0$ is accepted with probability $e^{\Delta E / T}$. Assume $\Delta E = -1$; then a downward move is accepted with probability:
- $t = 0$: $e^{-1}$
- $t = 1000$: $e^{-2}$
- $t = 1500$: $e^{-4}$
- $t = 1999$: $e^{-2000}$

Remark: if $\Delta E = -2$, downward moves are less likely to be accepted than when $\Delta E = -1$ (e.g. for $t = 1000$ a downward move would be accepted with probability $e^{-4}$).
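The numbers above follow from $e^{\Delta E / T}$ with $T = (2000 - t)/2000$. A small Python check, with hypothetical function names:

```python
import math

def temperature(t, horizon=2000):
    """Linear schedule from the slide: T = (2000 - t) / 2000."""
    return (horizon - t) / horizon

def accept_probability(delta_e, T):
    """Probability of accepting a downward move (delta_e < 0) at temperature T."""
    return math.exp(delta_e / T)

for t in (0, 1000, 1500, 1999):
    # Exponents -1, -2, -4 and about -2000; e^-2000 underflows to 0.0 in floating point.
    print(t, temperature(t), accept_probability(-1, temperature(t)))

print(accept_probability(-2, temperature(1000)))  # e^-4, about 0.0183
```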


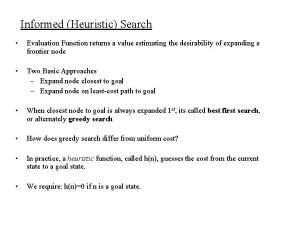

Informed (heuristic) search strategies

Informed (heuristic) search strategies Informed search and uninformed search in ai

Informed search and uninformed search in ai Best first search is a type of informed search which uses

Best first search is a type of informed search which uses Blind search dan heuristic search

Blind search dan heuristic search Difference between informed and uninformed search

Difference between informed and uninformed search Informed and uninformed search

Informed and uninformed search Image search

Image search Informed search example

Informed search example Advantages of informed search

Advantages of informed search Informed search

Informed search Greedy search

Greedy search Heuristic search adalah

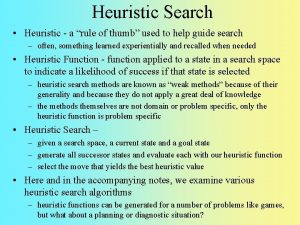

Heuristic search adalah Heuristic search methods

Heuristic search methods Heuristic search

Heuristic search Heuristic search

Heuristic search 22power dot com

22power dot com Cognitive walkthrough and heuristic evaluation

Cognitive walkthrough and heuristic evaluation Admissible and consistent heuristic example

Admissible and consistent heuristic example Successful job search strategies

Successful job search strategies Comparison of uninformed search strategies

Comparison of uninformed search strategies Internal information search

Internal information search Taking informed action

Taking informed action 4 r's trauma informed care

4 r's trauma informed care Trauma informed care lgbtq

Trauma informed care lgbtq Dr anita ravi

Dr anita ravi Pillars of trauma informed care

Pillars of trauma informed care Parents promoters apathetics defenders

Parents promoters apathetics defenders Solace american pie

Solace american pie Power interest grid

Power interest grid Trauma informed icebreakers

Trauma informed icebreakers Trauma-informed workplace checklist

Trauma-informed workplace checklist