Using Clustering to Enhance Classifiers
Christoph F. Eick


Organization of the Talk
1. Brief Introduction to KDD
2. Using Clustering
   a. for Nearest Neighbour Editing
   b. for Distance Function Learning
   c. for Class Decomposition
3. Representative-Based Supervised Clustering Algorithms
4. Summary and Conclusion

Objectives of Today’s Presentation
• Goal: To give you a flavor of the kinds of questions and techniques investigated in my/our current research
• Brief introduction to KDD
• Not discussed:
– Why is KDD/classification/clustering important?
– Example applications for KDD/classification/clustering
– Evaluation of the presented techniques (if you are interested in how the techniques presented here compare with other approaches, you can read [VAE 03], [EZZ 04], [ERBV 04], [EZV 04], [RE 05])
– Literature survey

![1 Knowledge Discovery in Data and Data Mining KDD Let us find something interesting 1. Knowledge Discovery in Data [and Data Mining] (KDD) Let us find something interesting!](https://slidetodoc.com/presentation_image_h2/776c2b766cc54a0ed0127a4e4955c84d/image-3.jpg)

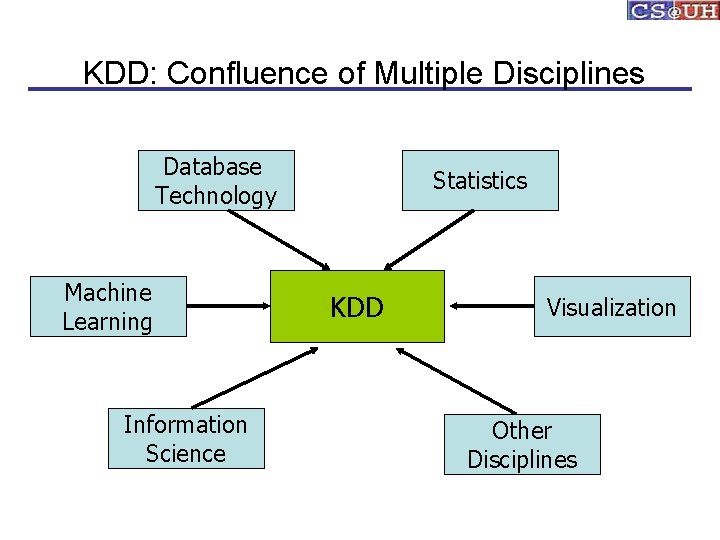

1. Knowledge Discovery in Data [and Data Mining] (KDD)
Let us find something interesting!
• Definition: “KDD is the non-trivial process of identifying valid, novel, potentially useful, and ultimately understandable patterns in data” (Fayyad)
• Frequently, the term data mining is used to refer to KDD.
• Many commercial and experimental tools and tool suites are available (see http://www.kdnuggets.com/siftware.html)

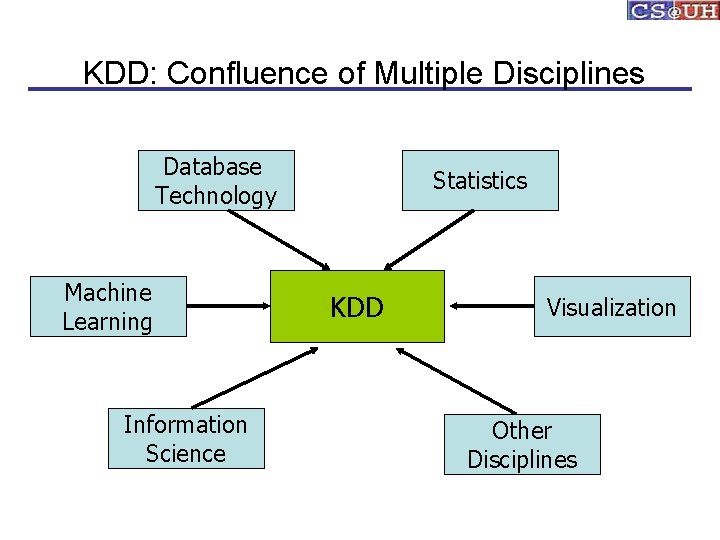

KDD: Confluence of Multiple Disciplines
[Figure: KDD at the center, surrounded by Database Technology, Machine Learning, Information Science, Statistics, Visualization, and Other Disciplines]

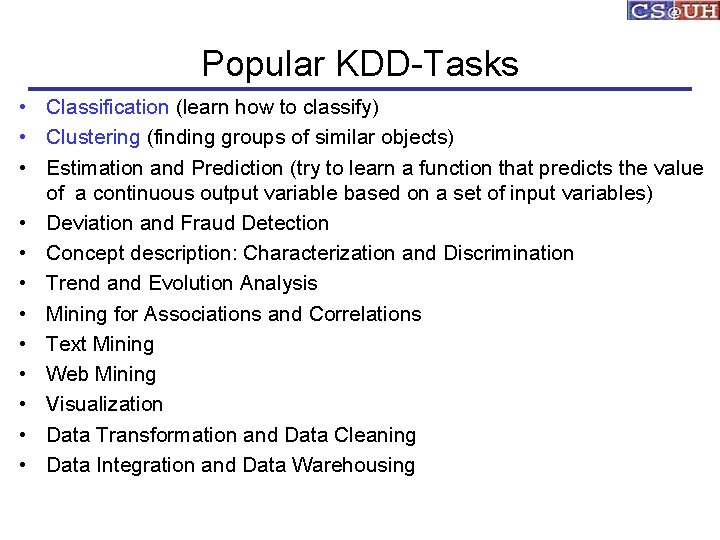

Popular KDD-Tasks
• Classification (learn how to classify)
• Clustering (finding groups of similar objects)
• Estimation and Prediction (try to learn a function that predicts the value of a continuous output variable based on a set of input variables)
• Deviation and Fraud Detection
• Concept description: Characterization and Discrimination
• Trend and Evolution Analysis
• Mining for Associations and Correlations
• Text Mining
• Web Mining
• Visualization
• Data Transformation and Data Cleaning
• Data Integration and Data Warehousing

Important KDD Conferences
• KDD (has 500-900 participants, strong industrial presence, KDD-Cup, controlled by ACM)
• ICDM (receives approx. 500 papers each year, controlled by IEEE)
• PKDD (European KDD Conference)

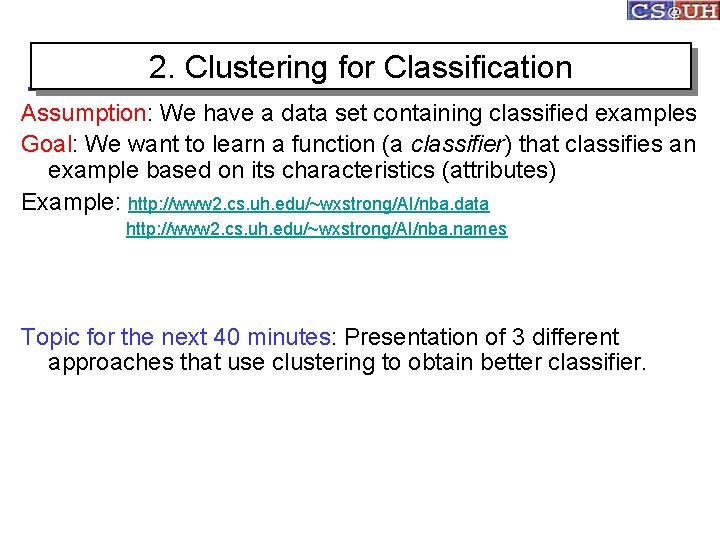

2. Clustering for Classification
Assumption: We have a data set containing classified examples.
Goal: We want to learn a function (a classifier) that classifies an example based on its characteristics (attributes).
Example:
http://www2.cs.uh.edu/~wxstrong/AI/nba.data
http://www2.cs.uh.edu/~wxstrong/AI/nba.names
Topic for the next 40 minutes: Presentation of 3 different approaches that use clustering to obtain better classifiers.

List of Persons that Contributed to the Work Presented in Today’s Presentation
• Tae-Wan Ryu
• Ricardo Vilalta
• Murali Achari
• Alain Rouhana
• Abraham Bagherjeiran
• Chunshen Chen
• Nidal Zeidat
• Zhenghong Zhao

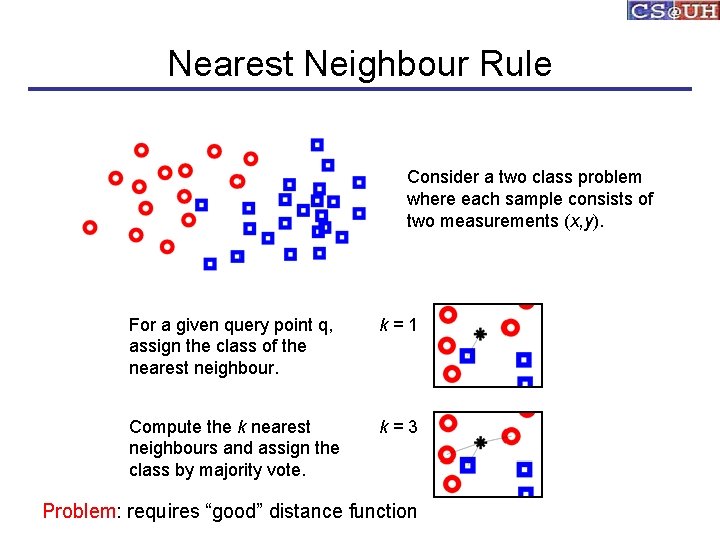

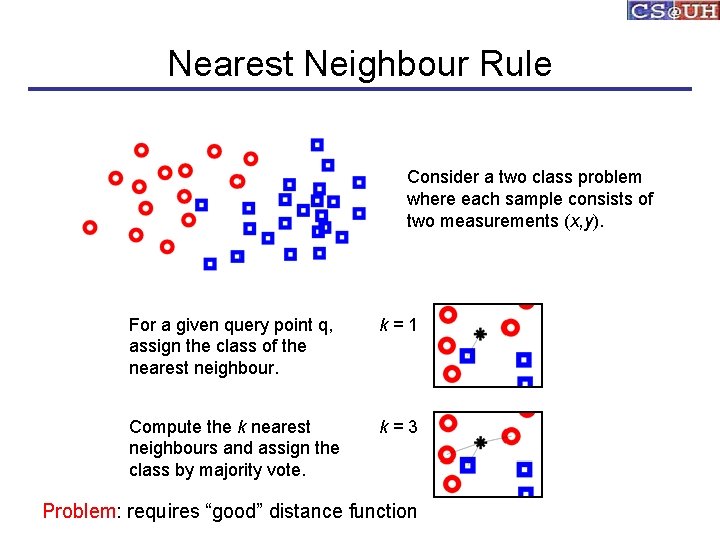

Nearest Neighbour Rule
Consider a two-class problem where each sample consists of two measurements (x, y).
k=1: For a given query point q, assign the class of the nearest neighbour.
k=3: Compute the k nearest neighbours and assign the class by majority vote.
Problem: requires a “good” distance function
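The rule above can be sketched in a few lines of Python. This is a minimal illustration, assuming Euclidean distance as the distance function; the function name `knn_classify` and the toy data are hypothetical and not taken from the talk.

```python
import math
from collections import Counter

def knn_classify(train, query, k=1):
    """Classify `query` by majority vote among its k nearest training samples.

    train: list of ((x, y), label) pairs; query: an (x, y) tuple.
    Euclidean distance is an assumption made for this sketch.
    """
    # Keep the k training samples closest to the query point.
    neighbours = sorted(train, key=lambda s: math.dist(s[0], query))[:k]
    # Majority vote over the labels of those neighbours.
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

# Hypothetical two-class toy data.
train = [((1.0, 1.0), "a"), ((1.2, 0.8), "a"), ((2.0, 2.0), "b"),
         ((3.0, 3.0), "b"), ((3.2, 2.9), "b")]

label_k1 = knn_classify(train, (1.9, 1.9), k=1)
label_k3 = knn_classify(train, (1.9, 1.9), k=3)
```

For the query point (1.9, 1.9), the single nearest neighbour belongs to class "b", while two of the three nearest neighbours belong to class "a", so the choice of k changes the decision.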

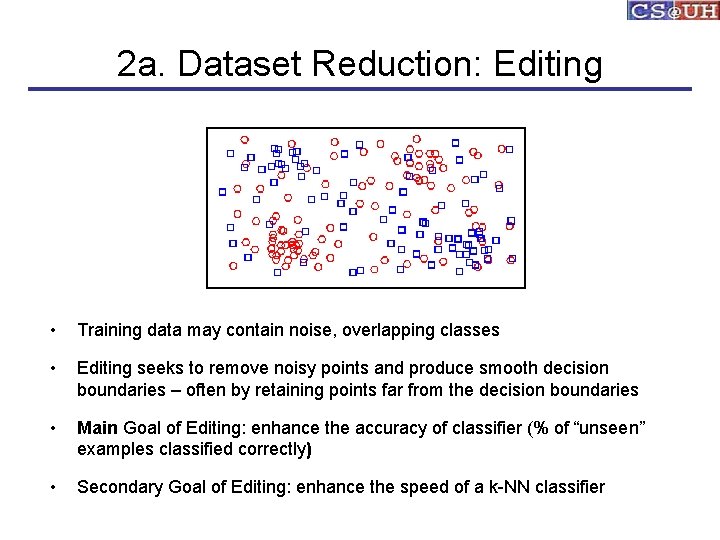

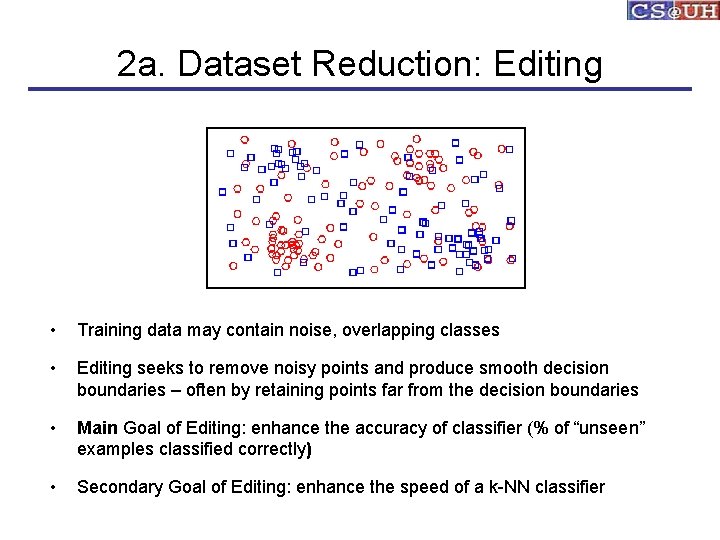

2a. Dataset Reduction: Editing
• Training data may contain noise, overlapping classes
• Editing seeks to remove noisy points and produce smooth decision boundaries, often by retaining points far from the decision boundaries
• Main Goal of Editing: enhance the accuracy of the classifier (% of “unseen” examples classified correctly)
• Secondary Goal of Editing: enhance the speed of a k-NN classifier

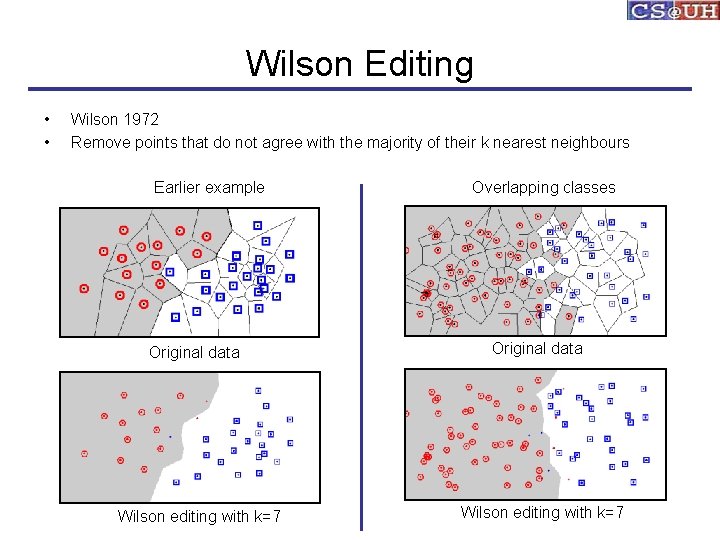

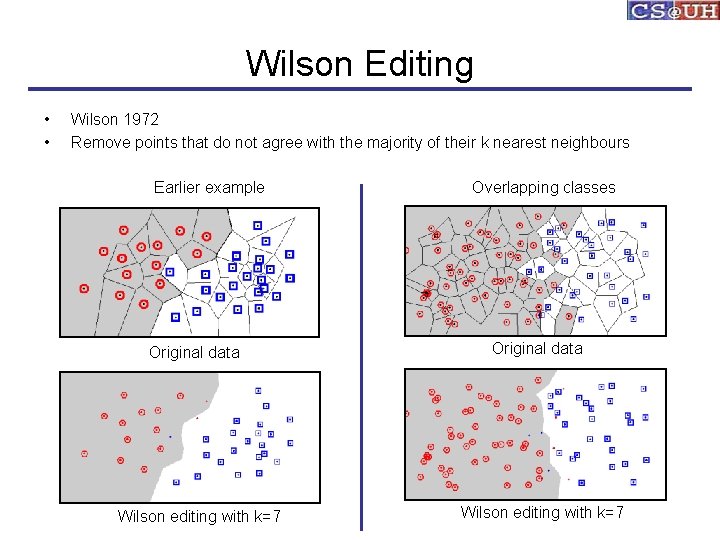

Wilson Editing
• Wilson 1972
• Remove points that do not agree with the majority of their k nearest neighbours
[Figure: earlier example, original data vs. Wilson editing with k=7]
[Figure: overlapping classes, original data vs. Wilson editing with k=7]
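A minimal sketch of the editing rule above, assuming Euclidean distance and a small hypothetical data set; `wilson_edit` is an illustrative name, and every keep/remove decision is made against the original, unedited data.

```python
import math
from collections import Counter

def wilson_edit(data, k=3):
    """Keep only samples whose label agrees with the majority of their k nearest neighbours.

    data: list of (point, label) pairs. Euclidean distance is assumed.
    """
    kept = []
    for i, (point, label) in enumerate(data):
        # Neighbours are taken from all other samples of the original data set.
        others = [s for j, s in enumerate(data) if j != i]
        neighbours = sorted(others, key=lambda s: math.dist(s[0], point))[:k]
        majority = Counter(l for _, l in neighbours).most_common(1)[0][0]
        if majority == label:
            kept.append((point, label))
    return kept

# Hypothetical data: two well-separated groups plus one mislabeled point.
data = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"), ((1, 1), "a"),
        ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b"), ((6, 6), "b"),
        ((0.5, 0.5), "b")]  # noisy point inside the "a" group

edited = wilson_edit(data, k=3)
```

With k=3, the mislabeled point at (0.5, 0.5) is removed because its three nearest neighbours all belong to class "a"; the other eight points are kept.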

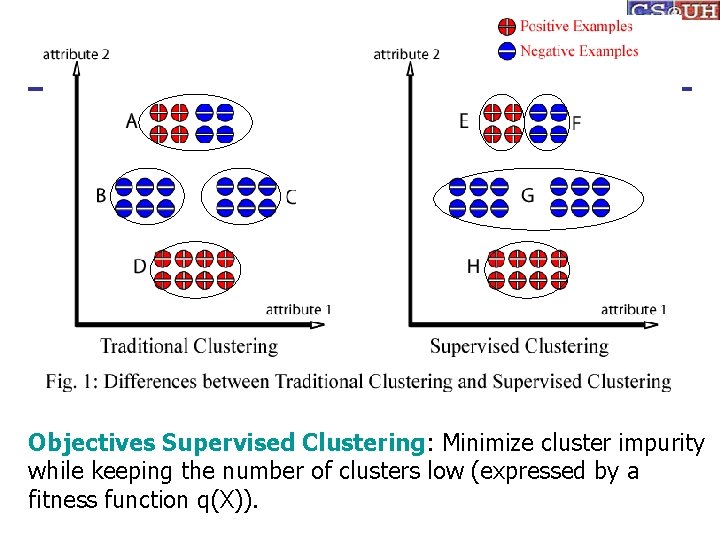

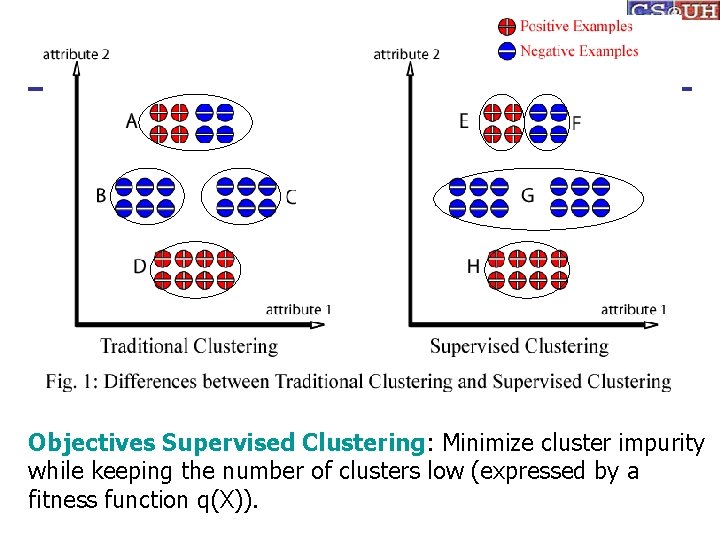

Traditional Clustering
• Partition a set of objects into groups of similar objects. Each group is called a cluster.
• Clustering is used to “detect classes” in a data set (“unsupervised learning”).
• Clustering is based on a fitness function that relies on a distance measure and usually tries to create “tight” clusters.

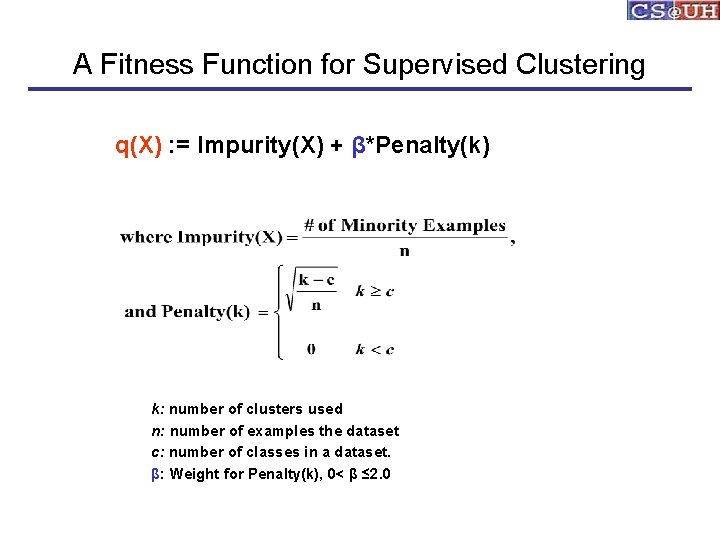

Objective of Supervised Clustering: Minimize cluster impurity while keeping the number of clusters low (expressed by a fitness function q(X)).

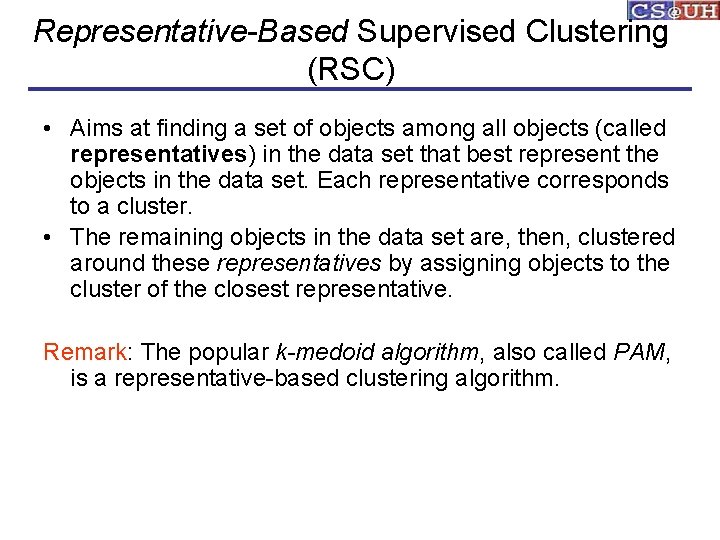

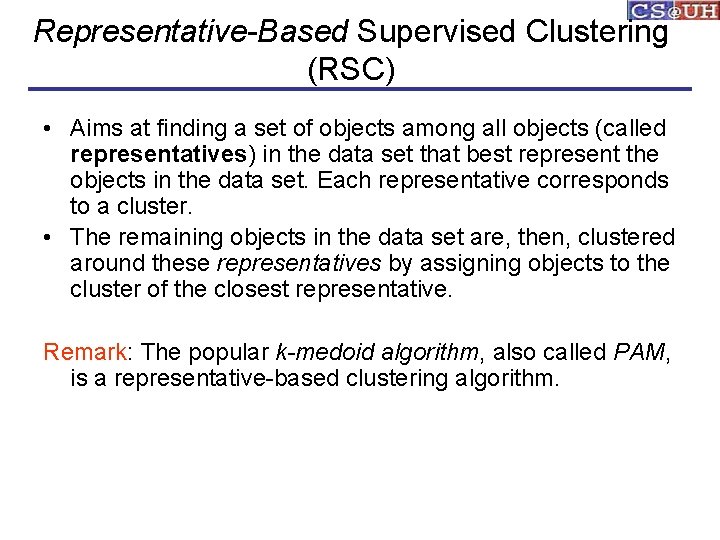

Representative-Based Supervised Clustering (RSC)
• Aims at finding a set of objects among all objects in the data set (called representatives) that best represent the objects in the data set. Each representative corresponds to a cluster.
• The remaining objects in the data set are then clustered around these representatives by assigning each object to the cluster of the closest representative.
Remark: The popular k-medoid algorithm, also called PAM, is a representative-based clustering algorithm.
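The assignment step described above can be sketched as follows. This only shows how clusters are formed once the representatives are chosen, not how they are searched for; Euclidean distance, the name `assign_to_representatives`, and the sample objects are assumptions made for illustration.

```python
import math

def assign_to_representatives(objects, rep_indices):
    """Map each representative's index to the indices of the objects in its cluster.

    objects: list of numeric tuples; rep_indices: indices of the chosen representatives.
    Each object joins the cluster of its closest representative (Euclidean distance assumed).
    """
    clusters = {r: [] for r in rep_indices}
    for i, obj in enumerate(objects):
        closest = min(rep_indices, key=lambda r: math.dist(obj, objects[r]))
        clusters[closest].append(i)
    return clusters

# Hypothetical objects; objects 0 and 3 act as representatives.
objects = [(0.0, 0.0), (0.5, 0.2), (0.1, 0.6),
           (4.0, 4.0), (4.3, 3.8), (3.9, 4.4)]
clusters = assign_to_representatives(objects, rep_indices=[0, 3])
```

Here `clusters` maps representative 0 to objects 0, 1, 2 and representative 3 to objects 3, 4, 5; a representative always lands in its own cluster because its distance to itself is zero.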

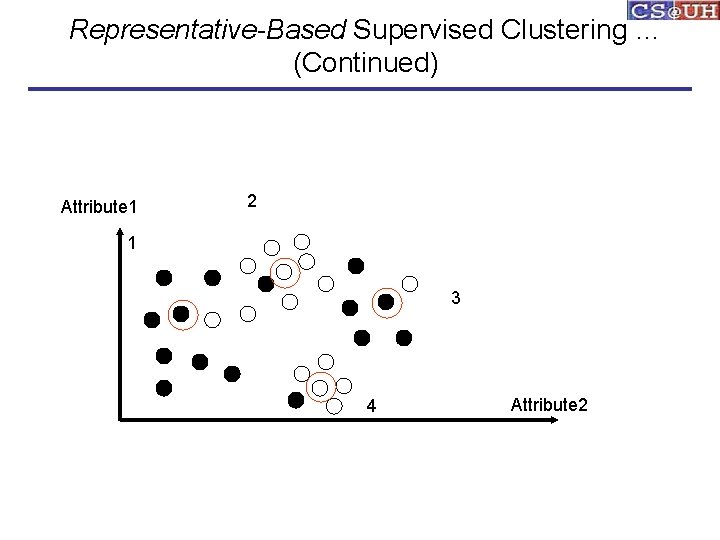

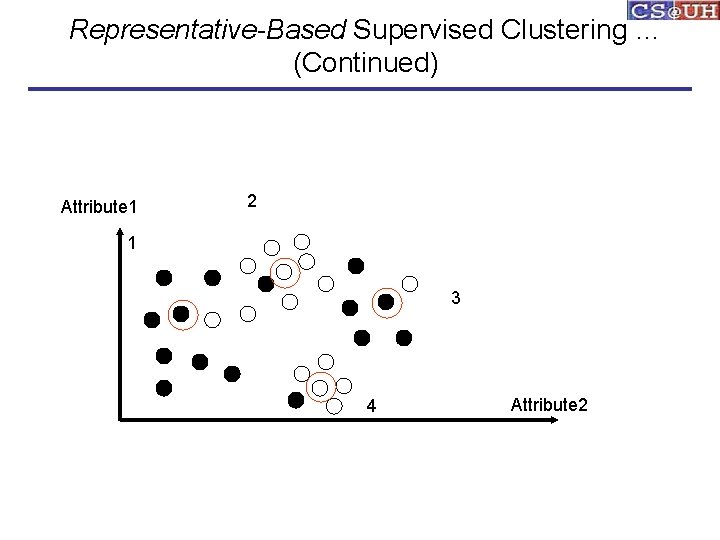

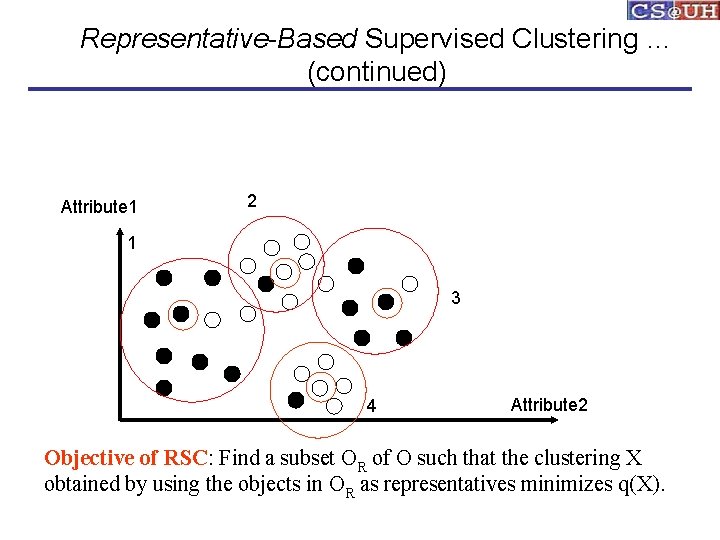

Representative-Based Supervised Clustering (continued)
[Figure: clusters labeled 1 to 4; axes: Attribute 1, Attribute 2]

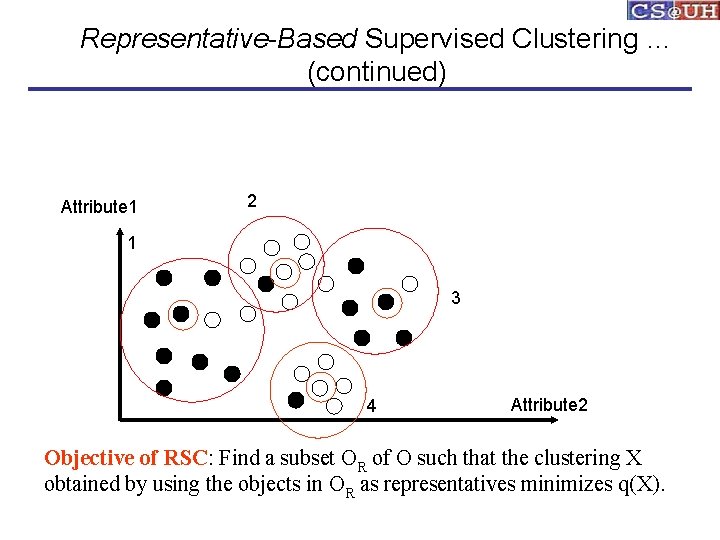

Representative-Based Supervised Clustering (continued)
[Figure: clusters labeled 1 to 4; axes: Attribute 1, Attribute 2]
Objective of RSC: Find a subset O_R of O such that the clustering X obtained by using the objects in O_R as representatives minimizes q(X).

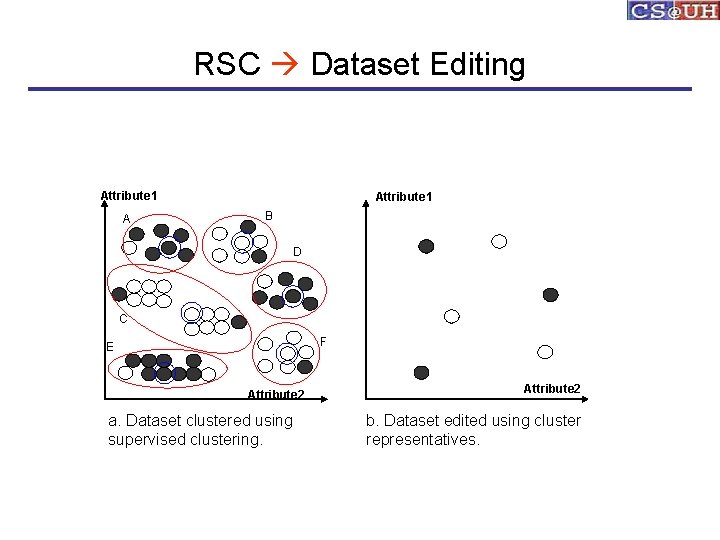

RSC Dataset Editing
[Figure, two panels with axes Attribute 1 and Attribute 2; regions labeled A to F]
a. Dataset clustered using supervised clustering.
b. Dataset edited using cluster representatives.

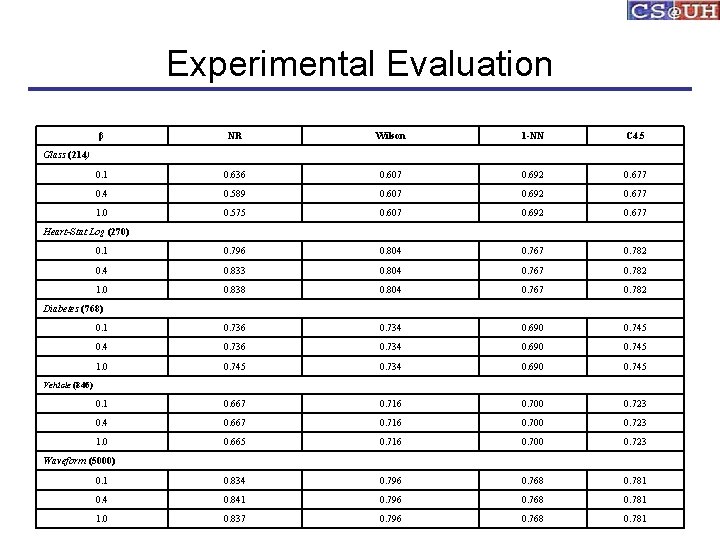

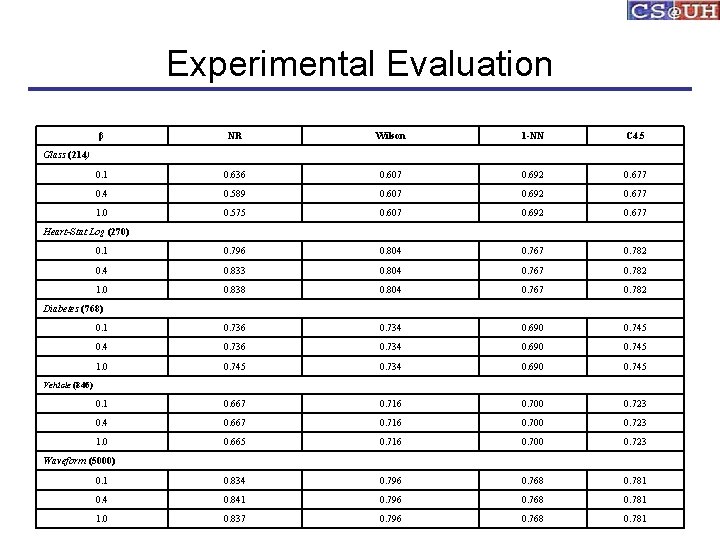

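Experimental Evaluation

| Dataset (size) | β | NR | Wilson | 1-NN | C4.5 |
|---|---|---|---|---|---|
| Glass (214) | 0.1 | 0.636 | 0.607 | 0.692 | 0.677 |
| Glass (214) | 0.4 | 0.589 | 0.607 | 0.692 | 0.677 |
| Glass (214) | 1.0 | 0.575 | 0.607 | 0.692 | 0.677 |
| Heart-Stat Log (270) | 0.1 | 0.796 | 0.804 | 0.767 | 0.782 |
| Heart-Stat Log (270) | 0.4 | 0.833 | 0.804 | 0.767 | 0.782 |
| Heart-Stat Log (270) | 1.0 | 0.838 | 0.804 | 0.767 | 0.782 |
| Diabetes (768) | 0.1 | 0.736 | 0.734 | 0.690 | 0.745 |
| Diabetes (768) | 0.4 | 0.736 | 0.734 | 0.690 | 0.745 |
| Diabetes (768) | 1.0 | 0.745 | 0.734 | 0.690 | 0.745 |
| Vehicle (846) | 0.1 | 0.667 | 0.716 | 0.700 | 0.723 |
| Vehicle (846) | 0.4 | 0.667 | 0.716 | 0.700 | 0.723 |
| Vehicle (846) | 1.0 | 0.665 | 0.716 | 0.700 | 0.723 |
| Waveform (5000) | 0.1 | 0.834 | 0.796 | 0.768 | 0.781 |
| Waveform (5000) | 0.4 | 0.841 | 0.796 | 0.768 | 0.781 |
| Waveform (5000) | 1.0 | 0.837 | 0.796 | 0.768 | 0.781 |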

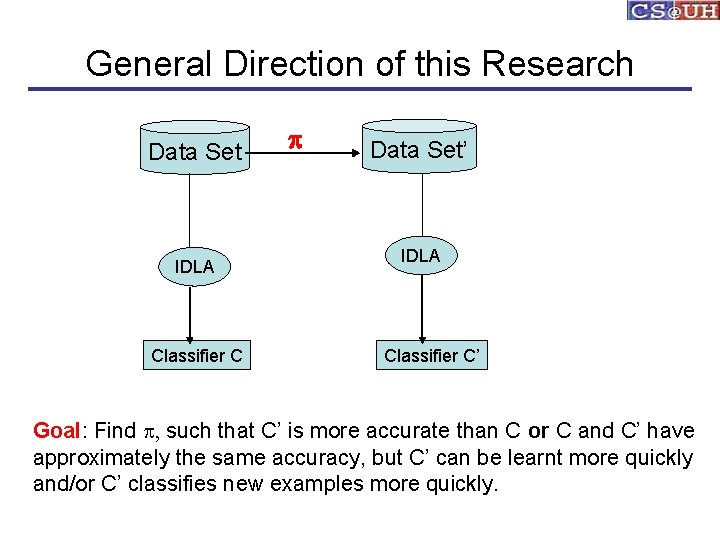

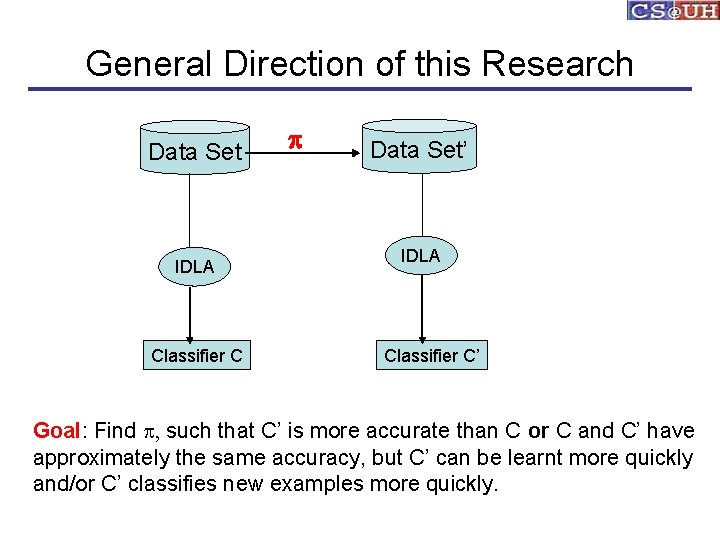

General Direction of this Research
[Figure: Data Set → IDLA → Classifier C; Data Set → p → Data Set’ → IDLA → Classifier C’]
Goal: Find p such that C’ is more accurate than C, or C and C’ have approximately the same accuracy but C’ can be learnt more quickly and/or C’ classifies new examples more quickly.

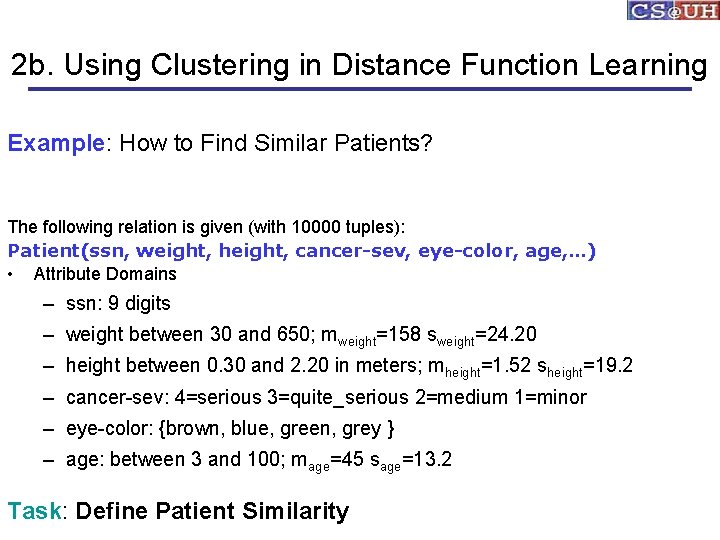

2b. Using Clustering in Distance Function Learning
Example: How to Find Similar Patients?
The following relation is given (with 10000 tuples):
Patient(ssn, weight, height, cancer-sev, eye-color, age, …)
• Attribute Domains
– ssn: 9 digits
– weight between 30 and 650; mweight=158 sweight=24.20
– height between 0.30 and 2.20 in meters; mheight=1.52 sheight=19.2
– cancer-sev: 4=serious 3=quite_serious 2=medium 1=minor
– eye-color: {brown, blue, green, grey}
– age: between 3 and 100; mage=45 sage=13.2
Task: Define Patient Similarity
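One possible starting point for this task, offered as a sketch rather than the approach used in the talk: scale each numeric or ordinal attribute by the width of its domain, treat eye-color as a nominal attribute (distance 0 if equal, 1 otherwise), and combine the per-attribute distances with weights. The function `patient_distance`, the dictionary keys, the choice to leave out ssn (an identifier), and the equal weights are all assumptions made for illustration; only the attribute ranges come from the slide.

```python
# Attribute ranges taken from the slide; range-based scaling is an assumption.
RANGES = {
    "weight": (30.0, 650.0),
    "height": (0.30, 2.20),
    "age": (3.0, 100.0),
    "cancer_sev": (1.0, 4.0),   # ordinal codes 1..4 treated as numeric
}

def patient_distance(p, q, weights):
    """Weighted average of per-attribute distances, each scaled to [0, 1]."""
    total = 0.0
    weight_sum = 0.0
    for attr, w in weights.items():
        if attr in RANGES:
            lo, hi = RANGES[attr]
            d = abs(p[attr] - q[attr]) / (hi - lo)
        else:
            # Nominal attribute (e.g. eye_color): 0 if equal, 1 otherwise.
            d = 0.0 if p[attr] == q[attr] else 1.0
        total += w * d
        weight_sum += w
    return total / weight_sum

# Hypothetical patients and equal weights.
weights = {"weight": 1.0, "height": 1.0, "age": 1.0, "cancer_sev": 1.0, "eye_color": 1.0}
p1 = {"weight": 158.0, "height": 1.52, "age": 45.0, "cancer_sev": 2, "eye_color": "brown"}
p2 = {"weight": 170.0, "height": 1.60, "age": 50.0, "cancer_sev": 2, "eye_color": "blue"}
d12 = patient_distance(p1, p2, weights)
```

The weights are exactly the part that a hand-written definition leaves open, which is why learning them from data is attractive.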

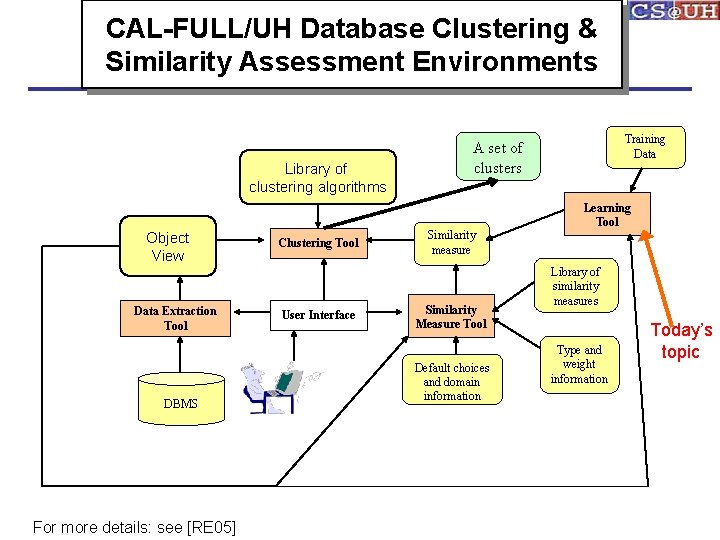

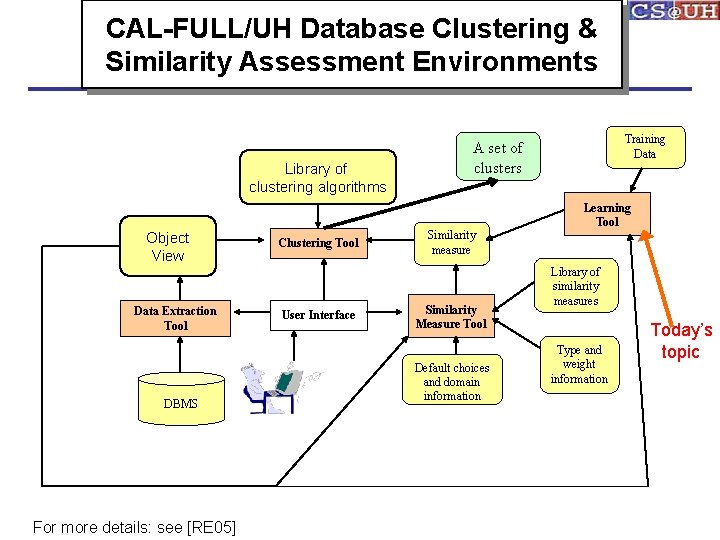

CAL-FULL/UH Database Clustering & Similarity Assessment Environments
[Figure: system architecture. Components: DBMS, Data Extraction Tool, Object View, Clustering Tool, Library of clustering algorithms, Similarity Measure Tool, Library of similarity measures, Learning Tool, User Interface. Labeled flows: Training Data, A set of clusters, Similarity measure, Type and weight information, Default choices and domain information. One part is annotated “Today’s topic”.]
For more details: see [RE 05]

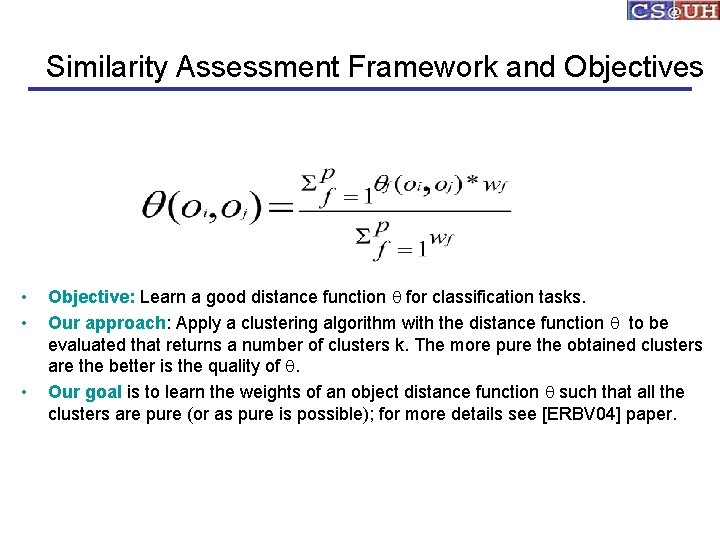

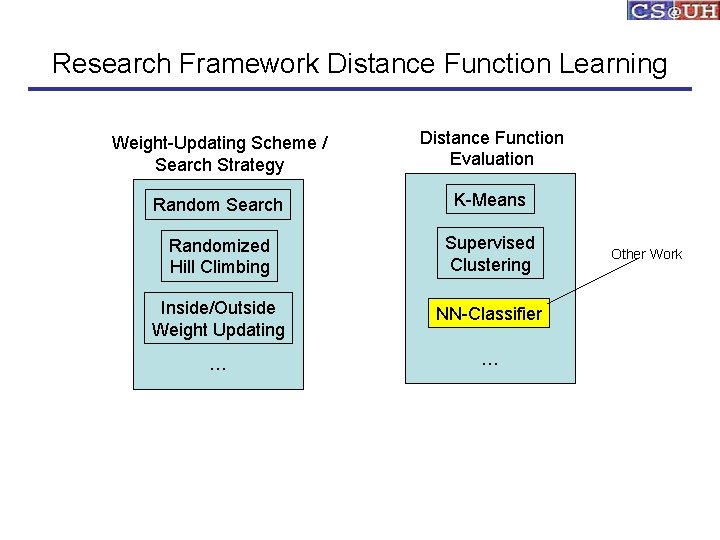

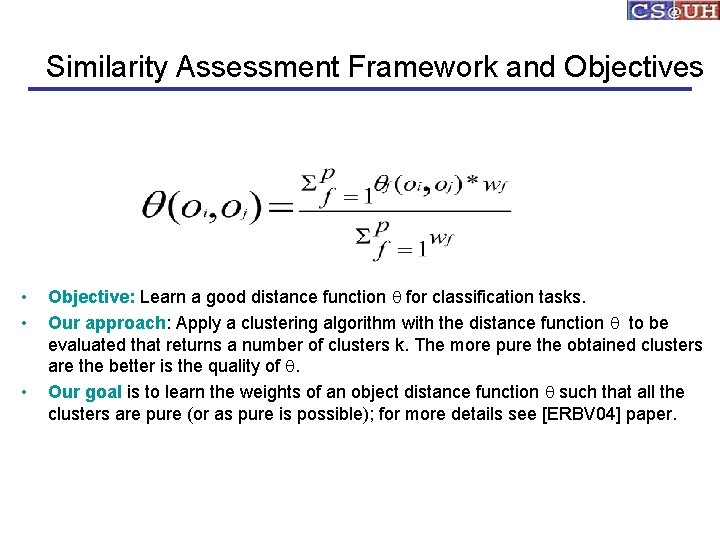

Similarity Assessment Framework and Objectives
• Objective: Learn a good distance function q for classification tasks.
• Our approach: Apply a clustering algorithm that uses the distance function q to be evaluated and returns a number of clusters k. The purer the obtained clusters, the better the quality of q.
• Our goal is to learn the weights of an object distance function q such that all the clusters are pure (or as pure as possible); for more details see the [ERBV 04] paper.
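The evaluation idea above can be illustrated with a small sketch: cluster the data with a candidate weighted distance function and measure how impure the resulting clusters are. Assignment to the nearest of a fixed set of representatives is used here only as a simple stand-in for "a clustering algorithm"; the weighted Manhattan distance, the function names, and the data are likewise assumptions.

```python
from collections import Counter

def weighted_distance(a, b, weights):
    """Weighted Manhattan distance (an assumed form of the object distance function)."""
    return sum(w * abs(x - y) for w, x, y in zip(weights, a, b))

def impurity_for_weights(points, labels, rep_indices, weights):
    """Cluster around fixed representatives, then return the fraction of minority examples."""
    clusters = {r: [] for r in rep_indices}
    for i, p in enumerate(points):
        closest = min(rep_indices,
                      key=lambda r: weighted_distance(p, points[r], weights))
        clusters[closest].append(labels[i])
    # Minority examples: cluster members that do not belong to the cluster's majority class.
    minority = sum(len(m) - Counter(m).most_common(1)[0][1]
                   for m in clusters.values() if m)
    return minority / len(points)

# Hypothetical data: attribute 0 separates the classes, attribute 1 does not.
points = [(0.0, 0.0), (0.1, 5.0), (0.2, 9.0), (1.0, 0.5), (1.1, 5.5), (0.9, 9.5)]
labels = ["o", "o", "o", "x", "x", "x"]
reps = [0, 3]

good = impurity_for_weights(points, labels, reps, weights=(1.0, 0.0))
bad = impurity_for_weights(points, labels, reps, weights=(0.0, 1.0))
```

Putting all weight on the informative attribute yields pure clusters, while putting it on the uninformative attribute mixes the classes, so `good` is lower than `bad`.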

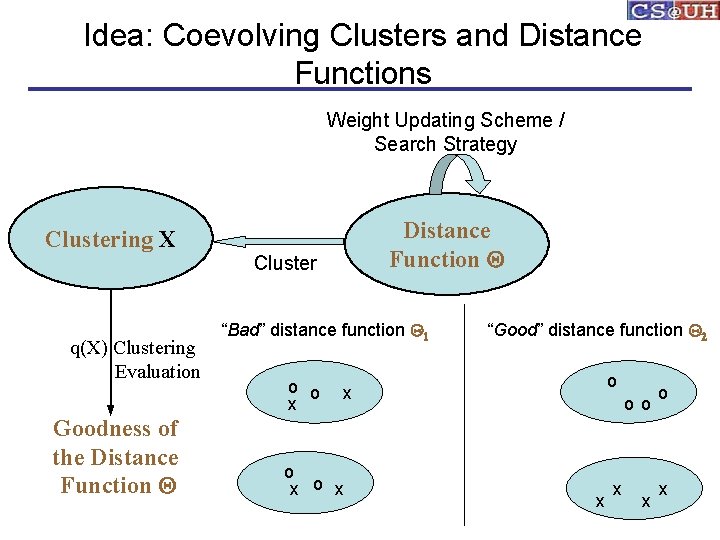

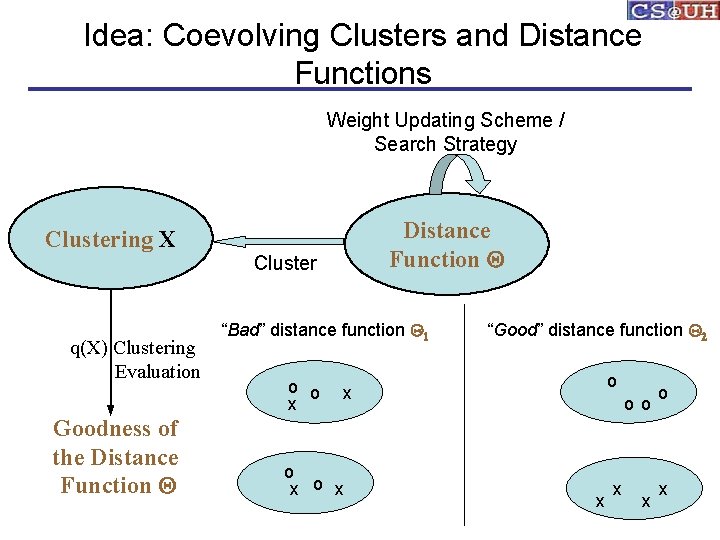

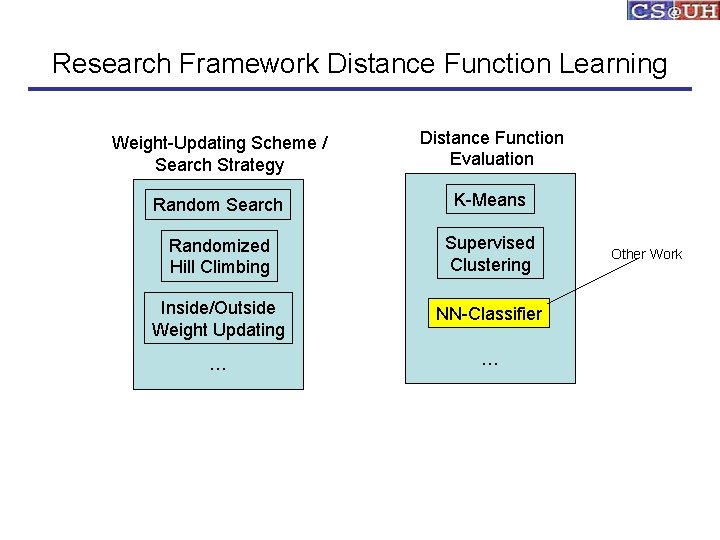

Idea: Coevolving Clusters and Distance Functions
[Figure: loop connecting Weight Updating Scheme / Search Strategy, Distance Function Q, Clustering X, and Clustering Evaluation q(X), which yields the Goodness of the Distance Function Q. Two example clusterings of o and x examples are shown, one for a “bad” distance function Q1 and one for a “good” distance function Q2.]

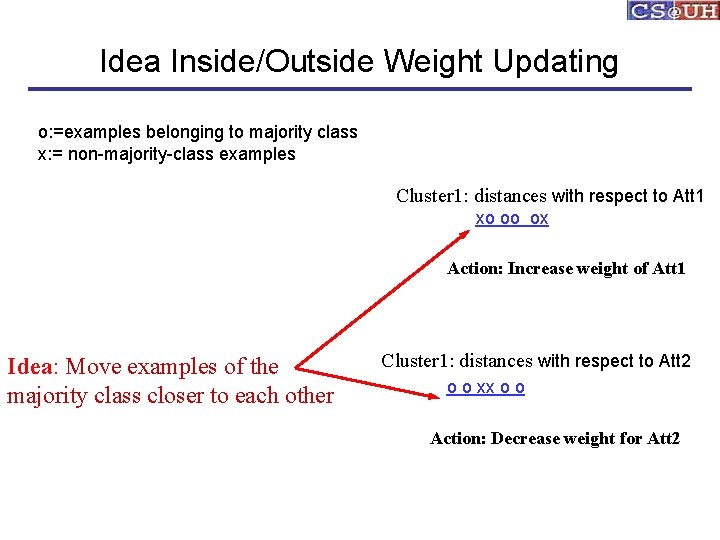

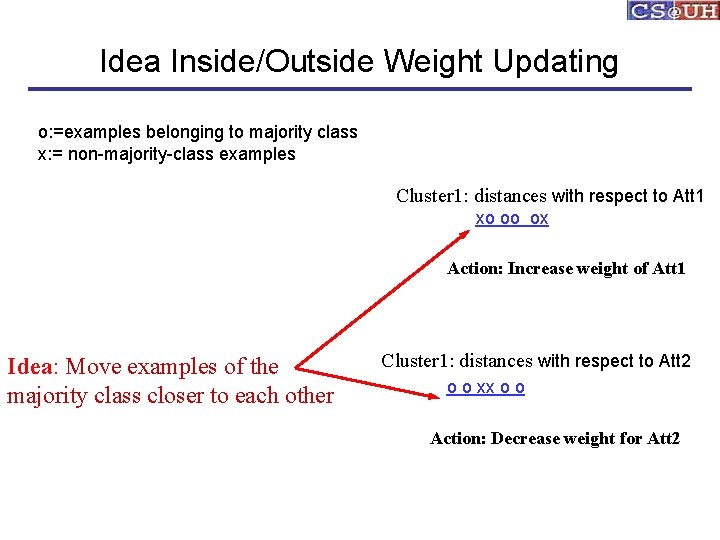

Idea: Inside/Outside Weight Updating
o := examples belonging to the majority class
x := non-majority-class examples
Cluster 1, distances with respect to Att 1: xo oo ox
Action: Increase weight of Att 1
Idea: Move examples of the majority class closer to each other
Cluster 1, distances with respect to Att 2: o o xx o o
Action: Decrease weight for Att 2

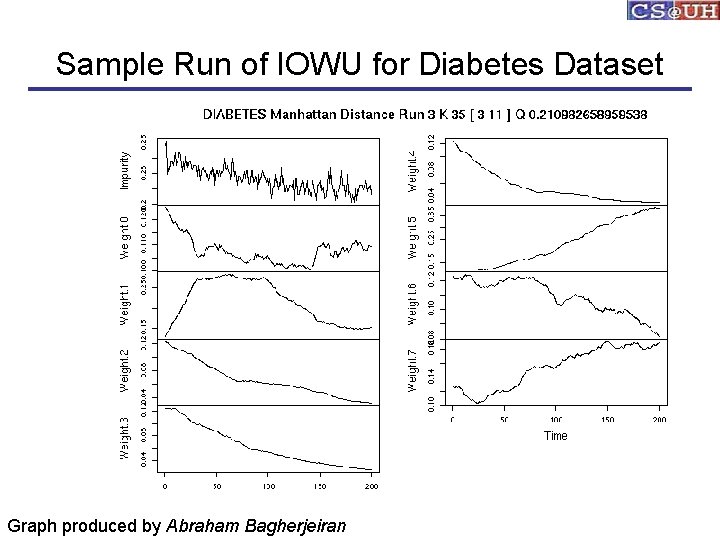

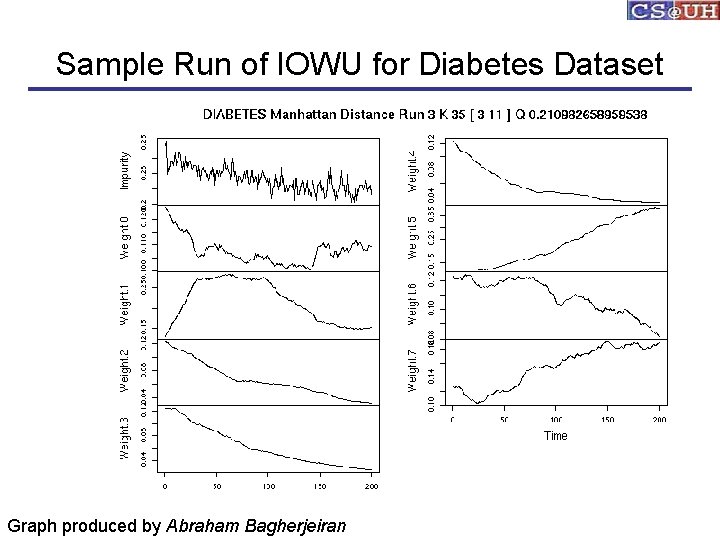

Sample Run of IOWU for Diabetes Dataset Graph produced by Abraham Bagherjeiran

Research Framework Distance Function Learning
• Weight-Updating Scheme / Search Strategy: Random Search, Randomized Hill Climbing, Inside/Outside Weight Updating, …
• Distance Function Evaluation: K-Means, Supervised Clustering, NN-Classifier, …
• Other Work

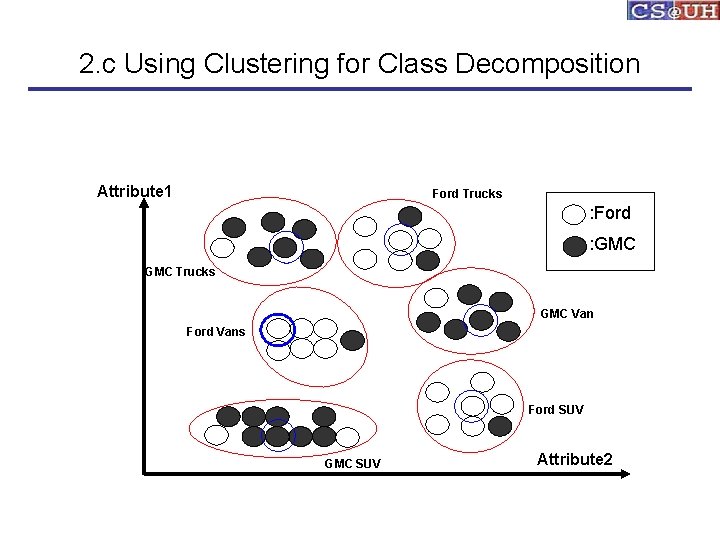

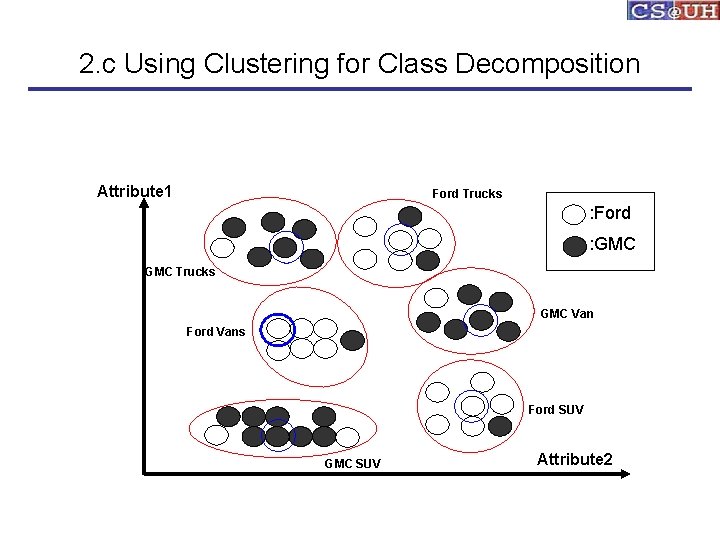

2c. Using Clustering for Class Decomposition
[Figure: scatter plot over Attribute 1 and Attribute 2 with two classes, Ford and GMC, decomposed into the clusters Ford Trucks, Ford Vans, Ford SUV, GMC Trucks, GMC Van, GMC SUV]

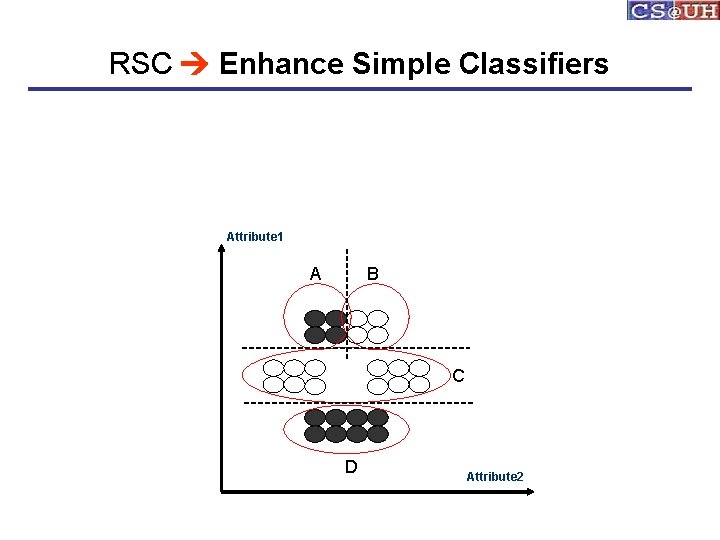

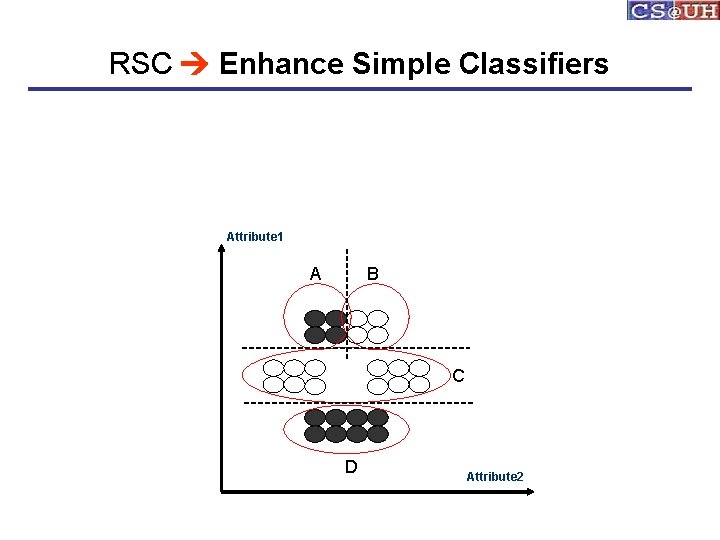

RSC Enhance Simple Classifiers
[Figure: clusters labeled A to D; axes: Attribute 1, Attribute 2]

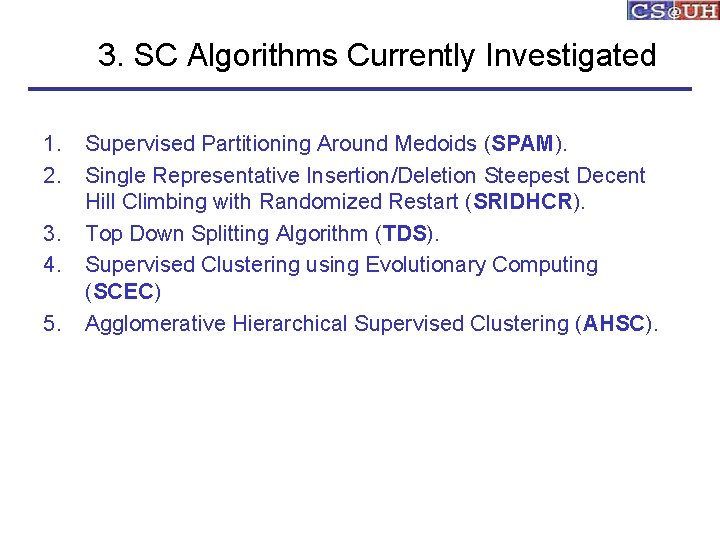

3. SC Algorithms Currently Investigated
1. Supervised Partitioning Around Medoids (SPAM).
2. Single Representative Insertion/Deletion Steepest Descent Hill Climbing with Randomized Restart (SRIDHCR).
3. Top Down Splitting Algorithm (TDS).
4. Supervised Clustering using Evolutionary Computing (SCEC).
5. Agglomerative Hierarchical Supervised Clustering (AHSC).

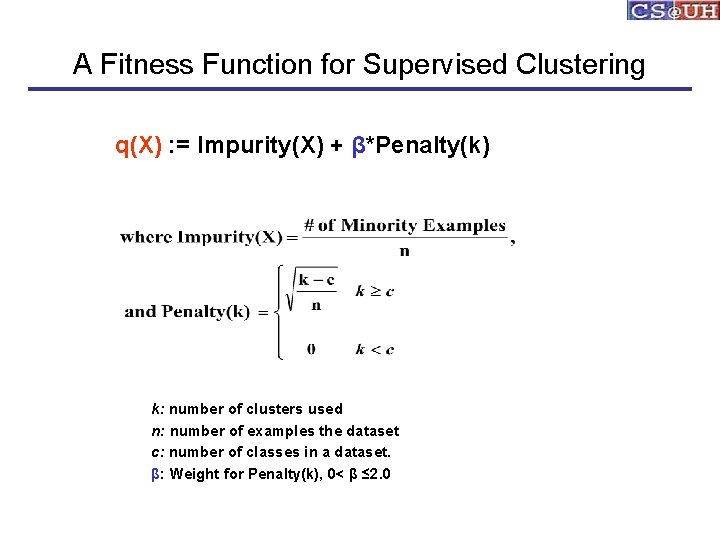

A Fitness Function for Supervised Clustering
q(X) := Impurity(X) + β*Penalty(k)
k: number of clusters used
n: number of examples in the dataset
c: number of classes in the dataset
β: weight for Penalty(k), 0 < β ≤ 2.0
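A minimal sketch of how such a fitness function could be computed. The slide's definitions of Impurity(X) and Penalty(k) did not survive extraction, so the forms used here are assumptions: impurity as the fraction of minority examples over all clusters, and Penalty(k) = sqrt((k − c)/n) for k ≥ c and 0 otherwise. See [EZZ 04] for the authors' exact definitions.

```python
import math
from collections import Counter

def impurity(clusters):
    """Fraction of examples that are not in the majority class of their cluster (assumed form)."""
    n = sum(len(c) for c in clusters)
    minority = sum(len(c) - Counter(c).most_common(1)[0][1] for c in clusters if c)
    return minority / n

def penalty(k, c, n):
    """Assumed penalty: grows once the number of clusters k exceeds the number of classes c."""
    return math.sqrt((k - c) / n) if k >= c else 0.0

def q(clusters, num_classes, beta=0.1):
    """q(X) = Impurity(X) + beta * Penalty(k); lower values are better."""
    n = sum(len(c) for c in clusters)
    k = len(clusters)
    return impurity(clusters) + beta * penalty(k, num_classes, n)

# Hypothetical clustering X, given as the class labels in each cluster.
X = [["a", "a", "b"], ["b", "b"], ["a"]]
fitness = q(X, num_classes=2, beta=0.1)
```

With this form, a larger β pushes the search toward fewer clusters, while a small β tolerates more clusters in exchange for higher purity.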

Applications of Supervised Clustering
• Enhance classification algorithms.
– Use SC for Dataset Editing to enhance NN-classifiers [ICDM 04]
– Improve Simple Classifiers [ICDM 03]
• Learning Sub-classes
• Distance Function Learning [ERBV 04]
• Dataset Compression/Reduction
• Redistricting
• Meta Learning / Creating Signatures for Datasets

4. Summary
• We gave a brief introduction to KDD.
• We demonstrated how clustering can be used to obtain “better” classifiers.
• We introduced a new form of clustering, called supervised clustering, for this purpose.

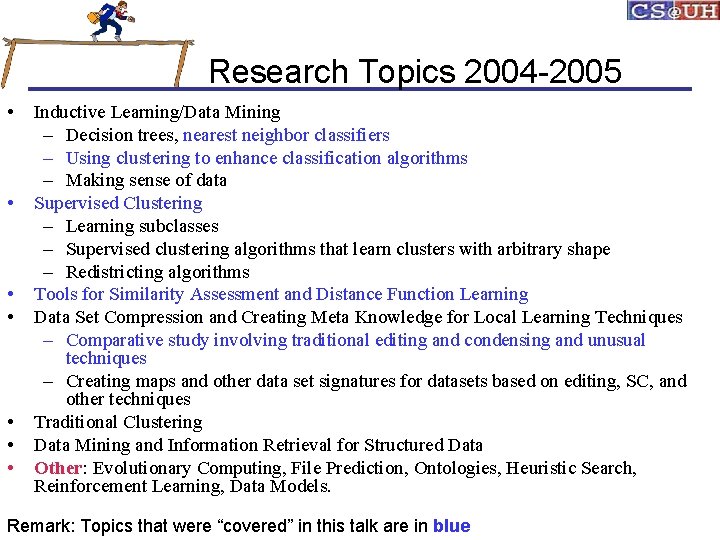

Research Topics 2004-2005
• Inductive Learning/Data Mining
– Decision trees, nearest neighbor classifiers
– Using clustering to enhance classification algorithms
– Making sense of data
• Supervised Clustering
– Learning subclasses
– Supervised clustering algorithms that learn clusters with arbitrary shape
– Redistricting algorithms
• Tools for Similarity Assessment and Distance Function Learning
• Data Set Compression and Creating Meta Knowledge for Local Learning Techniques
– Comparative study involving traditional editing and condensing and unusual techniques
– Creating maps and other data set signatures for datasets based on editing, SC, and other techniques
• Traditional Clustering
• Data Mining and Information Retrieval for Structured Data
• Other: Evolutionary Computing, File Prediction, Ontologies, Heuristic Search, Reinforcement Learning, Data Models.
Remark: Topics that were “covered” in this talk are in blue

Where to Find References?
• Data mining and KDD (SIGKDD member CDROM):
– Conference proceedings: KDD, ICDM, PKDD, etc.
– Journal: Data Mining and Knowledge Discovery
• Database field (SIGMOD member CD ROM):
– Conference proceedings: ACM-SIGMOD, VLDB, ICDE, EDBT, DASFAA
– Journals: ACM-TODS, J. ACM, IEEE-TKDE, JIIS, etc.
• AI and Machine Learning:
– Conference proceedings: ICML, AAAI, IJCAI, etc.
– Journals: Machine Learning, Artificial Intelligence, etc.
• Statistics:
– Conference proceedings: Joint Stat. Meeting, etc.
– Journals: Annals of Statistics, etc.
• Visualization:
– Conference proceedings: CHI, etc.
– Journals: IEEE Trans. Visualization and Computer Graphics, etc.

![Links to 5 Papers VAE 03 R Vilalta M Achari C Eick Class Decomposition Links to 5 Papers [VAE 03] R. Vilalta, M. Achari, C. Eick, Class Decomposition](https://slidetodoc.com/presentation_image_h2/776c2b766cc54a0ed0127a4e4955c84d/image-35.jpg)

Links to 5 Papers
[VAE 03] R. Vilalta, M. Achari, C. Eick, Class Decomposition via Clustering: A New Framework for Low-Variance Classifiers, in Proc. IEEE International Conference on Data Mining (ICDM), Melbourne, Florida, November 2003. http://www.cs.uh.edu/~ceick/kdd/VAE03.pdf
[EZZ 04] C. Eick, N. Zeidat, Z. Zhao, Supervised Clustering --- Algorithms and Benefits, short version of this paper to appear in Proc. International Conference on Tools with AI (ICTAI), Boca Raton, Florida, November 2004. http://www.cs.uh.edu/~ceick/kdd/EZZ04.pdf
[ERBV 04] C. Eick, A. Rouhana, A. Bagherjeiran, R. Vilalta, Using Clustering to Learn Distance Functions for Supervised Similarity Assessment, in revision, to be submitted to MLDM'05, Leipzig, Germany, July 2005. http://www.cs.uh.edu/~ceick/kdd/ERBV04.pdf
[EZV 04] C. Eick, N. Zeidat, R. Vilalta, Using Representative-Based Clustering for Nearest Neighbor Dataset Editing, to appear in Proc. IEEE International Conference on Data Mining (ICDM), Brighton, England, November 2004. http://www.cs.uh.edu/~ceick/kdd/EZV04.pdf
[RE 05] T. Ryu, C. Eick, A Database Clustering Methodology and Tool, to appear in Information Science, Spring 2005. http://www.cs.uh.edu/~ceick/kdd/RE05.doc

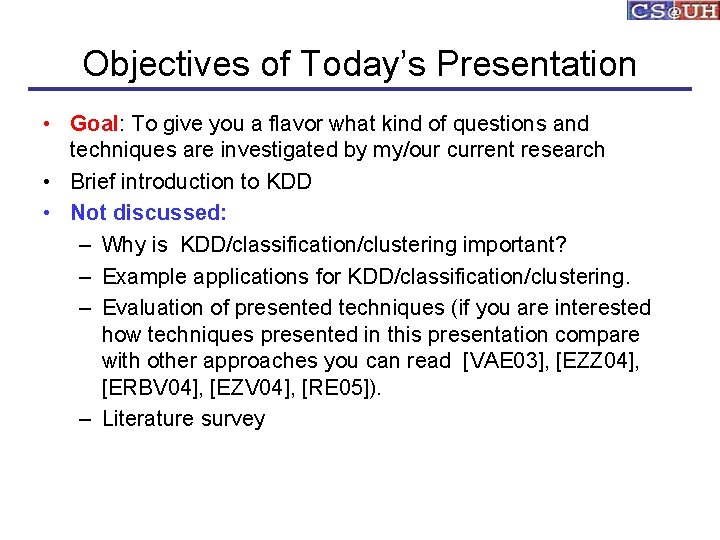

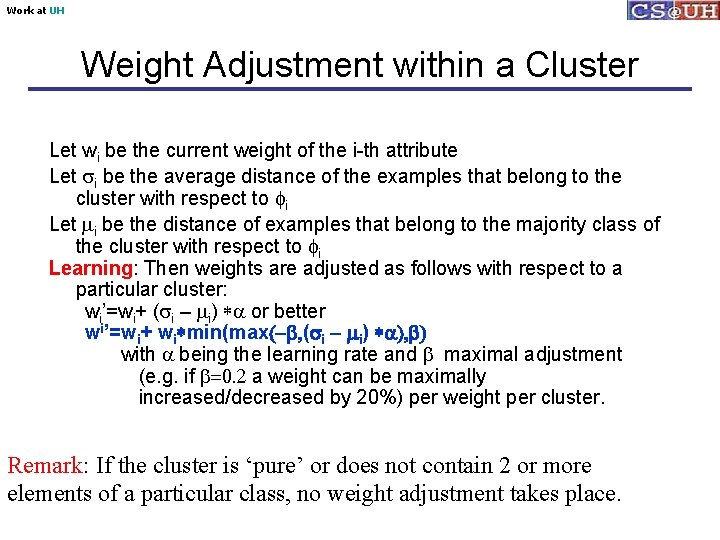

Work at UH: Weight Adjustment within a Cluster
Let $w_i$ be the current weight of the i-th attribute $f_i$.
Let $s_i$ be the average distance of the examples that belong to the cluster with respect to $f_i$.
Let $m_i$ be the average distance of the examples that belong to the majority class of the cluster with respect to $f_i$.
Learning: The weights are then adjusted as follows with respect to a particular cluster:
$$w_i' = w_i + a\,(s_i - m_i)$$
or better
$$w_i' = w_i + w_i \cdot \min\bigl(\max(-b,\; a\,(s_i - m_i)),\; b\bigr)$$
with $a$ being the learning rate and $b$ the maximal adjustment per weight per cluster (e.g., if $b=0.2$, a weight can be increased or decreased by at most 20%).
Remark: If the cluster is ‘pure’ or does not contain 2 or more elements of a particular class, no weight adjustment takes place.
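The second, clipped update rule above translates directly into code. In this sketch the function name `update_weights`, the learning rate a=0.5, and the sample distances are hypothetical; b=0.2 follows the example given in the text.

```python
def update_weights(weights, s, m, a=0.5, b=0.2):
    """Apply w_i' = w_i + w_i * min(max(-b, (s_i - m_i) * a), b) for one cluster.

    weights: current attribute weights w_i
    s: average distance of all cluster members with respect to each attribute
    m: average distance of the majority-class members with respect to each attribute
    The caller is expected to skip pure clusters, as stated in the remark above.
    """
    new_weights = []
    for w, s_i, m_i in zip(weights, s, m):
        # Relative change, clipped to the interval [-b, b].
        change = min(max(-b, (s_i - m_i) * a), b)
        new_weights.append(w + w * change)
    return new_weights

# Hypothetical cluster statistics for two attributes.
# Attribute 0: majority examples are much closer together than average, so the weight increases.
# Attribute 1: majority examples are farther apart than average, so the weight decreases.
updated = update_weights([1.0, 1.0], s=[0.9, 0.3], m=[0.2, 0.5], a=0.5, b=0.2)
```

For attribute 0 the raw change of 0.35 is clipped to b, giving a weight of about 1.2; for attribute 1 the change of -0.1 stays within the bound, giving about 0.9.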