Theory of Information Lecture 9 Entropy Section 3

- Slides: 9

Theory of Information, Lecture 9: Entropy (Section 3.1, a bit of 3.2)

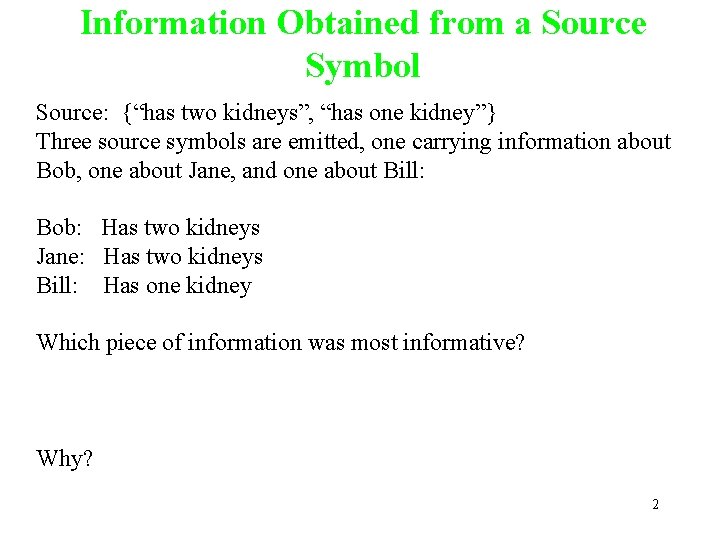

Information Obtained from a Source Symbol

Source: {“has two kidneys”, “has one kidney”}. Three source symbols are emitted, one carrying information about Bob, one about Jane, and one about Bill:

- Bob: has two kidneys
- Jane: has two kidneys
- Bill: has one kidney

Which piece of information was the most informative? Why?

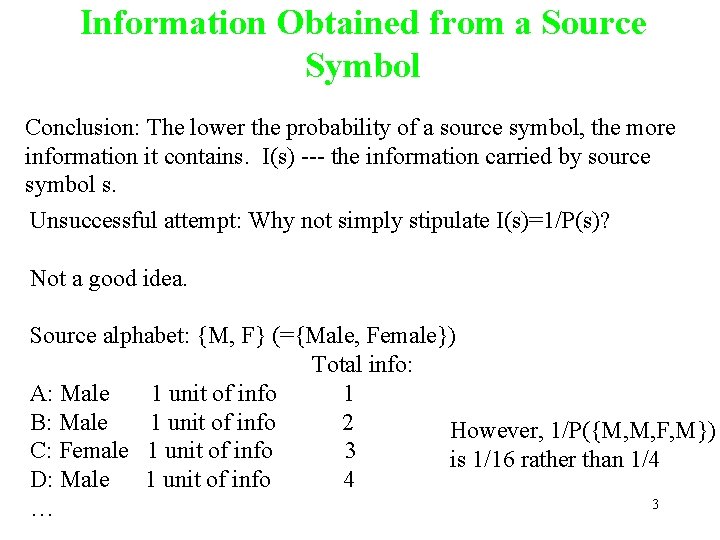

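Information Obtained from a Source Symbol

Conclusion: the lower the probability of a source symbol, the more information it contains. Let $I(s)$ denote the information carried by source symbol $s$.

Unsuccessful attempt: why not simply stipulate $I(s) = 1/P(s)$? This is not a good idea. Take the source alphabet {M, F} (= {Male, Female}) with equally likely symbols. Each symbol should then carry the same amount of information, say 1 unit, so the running total should grow by 1 with each symbol:

- A: Male (total: 1 unit)
- B: Male (total: 2 units)
- C: Female (total: 3 units)
- D: Male (total: 4 units)
- …

However, $P(\{M, M, F, M\}) = 1/16$, so $1/P(\{M, M, F, M\}) = 16$ rather than 4. Probabilities of independent symbols multiply, so $1/P$ multiplies as well instead of adding.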

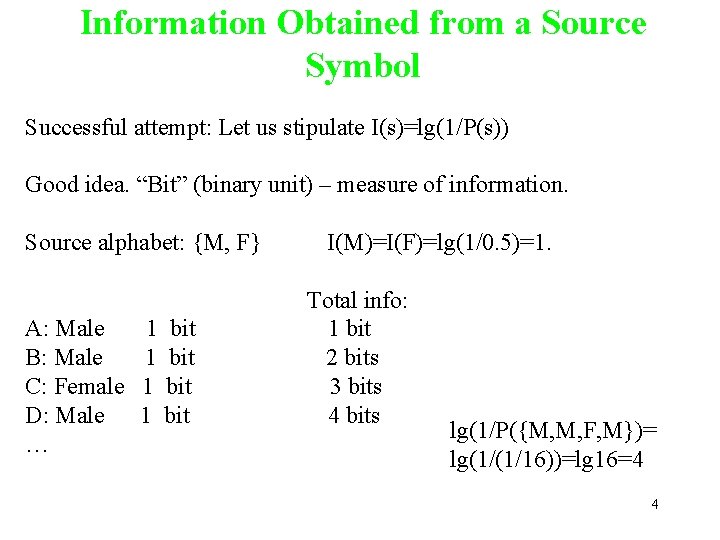

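Information Obtained from a Source Symbol

Successful attempt: let us stipulate $I(s) = \lg(1/P(s))$, where lg is the base-2 logarithm. This is a good idea. The resulting unit of information is the bit (binary unit).

With the source alphabet {M, F} and $P(M) = P(F) = 0.5$, we get $I(M) = I(F) = \lg(1/0.5) = 1$ bit, so the totals now add up as expected:

- A: Male (total: 1 bit)
- B: Male (total: 2 bits)
- C: Female (total: 3 bits)
- D: Male (total: 4 bits)
- …

This agrees with the information carried by the whole sequence: $\lg(1/P(\{M, M, F, M\})) = \lg(1/(1/16)) = \lg 16 = 4$ bits.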
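
The contrast between the two attempts can be checked numerically. The sketch below is a minimal illustration, assuming the four symbols are independent and that P(M) = P(F) = 1/2; the variable names are hypothetical. Note that under the reciprocal measure each fair symbol actually contributes 1/P = 2 units, so the per-symbol sum is 8, which still differs from the joint value of 16.

```python
import math
from fractions import Fraction

# Assumption (for illustration): symbols are independent and P(M) = P(F) = 1/2.
P = {"M": Fraction(1, 2), "F": Fraction(1, 2)}
sequence = ["M", "M", "F", "M"]

# Probability of the whole sequence: independent probabilities multiply.
p_joint = math.prod(P[s] for s in sequence)  # Fraction(1, 16)

# Attempt 1: I(s) = 1/P(s). Summing per-symbol values does not match 1/P(joint).
sum_reciprocal = sum(1 / P[s] for s in sequence)  # 2 + 2 + 2 + 2
joint_reciprocal = 1 / p_joint                     # 16

# Attempt 2: I(s) = lg(1/P(s)). Per-symbol values add up to the joint value.
sum_bits = sum(math.log2(1 / P[s]) for s in sequence)  # 1 + 1 + 1 + 1
joint_bits = math.log2(1 / p_joint)                   # lg 16

print(sum_reciprocal, joint_reciprocal)  # 8 16
print(sum_bits, joint_bits)              # 4.0 4.0
```

The logarithm turns the product of probabilities into a sum of information values, which is exactly the additivity the first attempt lacked.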

Information Obtained from a Source Symbol

DEFINITION. The information $I(p)$ (or $I(s)$) obtained from a source symbol $s$ with probability of occurrence $p > 0$ is given by

$$I(p) = I(s) = \lg\frac{1}{p}.$$

Example: source alphabet {a, b, c} with $P(a) = 1/8$, $P(b) = 1/32$, $P(c) = 27/32$. What are $I(a)$, $I(b)$, and $I(c)$?
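
The definition translates directly into code. This is a minimal sketch; the function name `information` is hypothetical, and exact fractions are used only so that the probabilities can be checked to sum to 1.

```python
import math
from fractions import Fraction

def information(p):
    """I(p) = lg(1/p) in bits, for a probability 0 < p <= 1."""
    if not 0 < p <= 1:
        raise ValueError("p must satisfy 0 < p <= 1")
    return math.log2(1 / p)

P = {"a": Fraction(1, 8), "b": Fraction(1, 32), "c": Fraction(27, 32)}
assert sum(P.values()) == 1  # sanity check: a valid probability distribution

for symbol, p in P.items():
    print(f"I({symbol}) = {information(p):.4f} bits")
```

For this source the sketch prints 3, 5, and about 0.2451 bits: the rarest symbol, b, carries the most information, and the likely symbol c carries very little.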

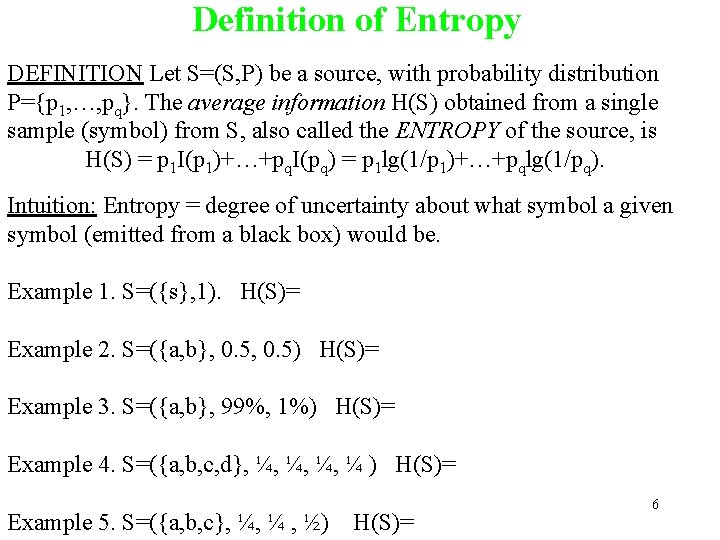

Definition of Entropy

DEFINITION. Let $S = (S, P)$ be a source with probability distribution $P = \{p_1, \ldots, p_q\}$. The average information $H(S)$ obtained from a single sample (symbol) from $S$, also called the ENTROPY of the source, is

$$H(S) = p_1 I(p_1) + \cdots + p_q I(p_q) = p_1 \lg\frac{1}{p_1} + \cdots + p_q \lg\frac{1}{p_q}.$$

Intuition: entropy is the degree of uncertainty about what a symbol emitted from a black box will be.

- Example 1. S = ({s}, 1). H(S) = ?
- Example 2. S = ({a, b}, 0.5, 0.5). H(S) = ?
- Example 3. S = ({a, b}, 99%, 1%). H(S) = ?
- Example 4. S = ({a, b, c, d}, ¼, ¼, ¼, ¼). H(S) = ?
- Example 5. S = ({a, b, c}, ¼, ¼, ½). H(S) = ?
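
A minimal sketch of the entropy formula, applied to the five examples. The function name `entropy` is hypothetical, and two assumptions are made: Example 4 is read as the uniform distribution (¼ for each of the four symbols), and zero-probability terms are skipped, following the usual convention that they contribute 0 (the definition of I(p) above only covers p > 0).

```python
import math

def entropy(probs):
    """H(S) = sum of p * lg(1/p) over the distribution, in bits.

    Terms with p == 0 are skipped (convention: they contribute 0).
    """
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

examples = {
    "Example 1": [1.0],
    "Example 2": [0.5, 0.5],
    "Example 3": [0.99, 0.01],
    "Example 4": [0.25, 0.25, 0.25, 0.25],
    "Example 5": [0.25, 0.25, 0.5],
}

for name, probs in examples.items():
    print(f"{name}: H(S) = {entropy(probs):.4f} bits")
```

This gives 0, 1, about 0.0808, 2, and 1.5 bits respectively. The nearly certain source in Example 3 has very low entropy, while the uniform source in Example 4 has the highest entropy of the group.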

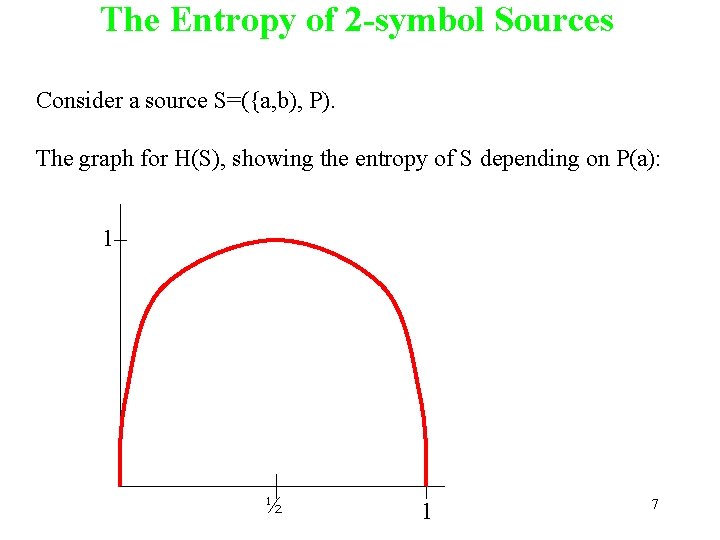

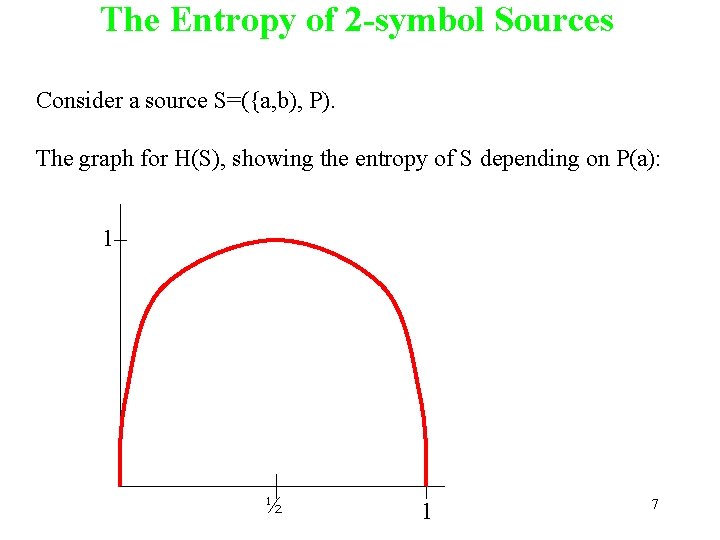

The Entropy of 2-Symbol Sources

Consider a source S = ({a, b}, P). The graph shows the entropy H(S) as a function of P(a).

[Graph: H(S) (vertical axis, up to 1) against P(a) (horizontal axis, from 0 to 1, marked at ½).]
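
The curve in the graph can be tabulated with a short sketch. The function name `binary_entropy` is hypothetical; it sets P(b) = 1 - P(a) and, as an assumed convention, treats zero-probability terms as contributing 0 so that the endpoints P(a) = 0 and P(a) = 1 are defined.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a 2-symbol source with P(a) = p and P(b) = 1 - p."""
    if not 0 <= p <= 1:
        raise ValueError("p must lie in [0, 1]")
    # Zero-probability terms contribute 0 by convention.
    return sum(x * math.log2(1 / x) for x in (p, 1 - p) if x > 0)

# Tabulate a few points of the curve; it is symmetric about P(a) = 1/2.
for p in [0.0, 0.1, 0.25, 0.5, 0.75, 0.9, 1.0]:
    print(f"P(a) = {p:.2f}  H(S) = {binary_entropy(p):.4f}")
```

The values are 0 at both endpoints, rise symmetrically, and reach their maximum of 1 bit at P(a) = ½, matching the shape of the graph.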

An Important Property of Entropy

THEOREM 3.2.3. For a source S of size q, the entropy H(S) satisfies $0 \le H(S) \le \lg q$. Furthermore, $H(S) = \lg q$ (its largest possible value) if and only if all source symbols are equally likely to occur, and $H(S) = 0$ if and only if one of the symbols has probability 1 of occurring.
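
The bounds can be sanity-checked numerically. This is an illustration, not a proof: the sketch samples random distributions (an arbitrary, hypothetical choice of test data via the helper `random_distribution`) for sources of size 1 through 8 and checks that every entropy lies between 0 and lg q, then checks the two equality cases named in the theorem.

```python
import math
import random

def entropy(probs):
    """H(S) in bits; zero-probability terms contribute 0 by convention."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

def random_distribution(q, rng):
    """A random probability distribution over q symbols (illustrative only)."""
    weights = [rng.random() for _ in range(q)]
    total = sum(weights)
    return [w / total for w in weights]

rng = random.Random(0)  # fixed seed so the check is reproducible
for q in range(1, 9):
    bound = math.log2(q)
    # Random distributions stay within 0 <= H(S) <= lg q (small float tolerance).
    for _ in range(1000):
        h = entropy(random_distribution(q, rng))
        assert -1e-12 <= h <= bound + 1e-12
    # Equality cases from the theorem.
    assert math.isclose(entropy([1 / q] * q), bound, abs_tol=1e-12)  # uniform
    assert entropy([1.0] + [0.0] * (q - 1)) == 0.0                   # one certain symbol
print("bounds hold on all sampled distributions")
```

A small floating-point tolerance is used because the uniform case hits the upper bound exactly in theory but only approximately in floating-point arithmetic.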

Homework

- 9.1. Exercises 1, 2, 5, 6 of Section 3.1.
- 9.2. The definitions of I(s) (the information obtained from a source symbol) and H(S) (entropy).
- 9.3. Give a probability distribution for the source alphabet {a, b, c, d, e, f, g, h} that yields:
  - a) the lowest possible entropy. What does that entropy equal?
  - b) the highest possible entropy. What does that entropy equal?

Entropy information theory

Entropy information theory 01:640:244 lecture notes - lecture 15: plat, idah, farad

01:640:244 lecture notes - lecture 15: plat, idah, farad Healthmis.ng

Healthmis.ng Natural language processing nlp - theory lecture

Natural language processing nlp - theory lecture Decision theory lecture notes

Decision theory lecture notes Sargur srihari

Sargur srihari Natural language processing nlp - theory lecture

Natural language processing nlp - theory lecture Entropy equation temperature

Entropy equation temperature Enthalpy vs entropy

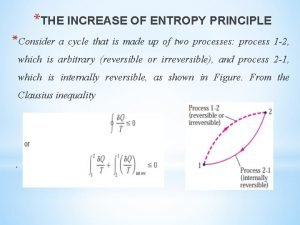

Enthalpy vs entropy Increase in entropy principle

Increase in entropy principle